Vision-Guided Hand–Eye Coordination for Robotic Grasping and Its Application in Tangram Puzzles

Abstract

1. Introduction

2. Related Work

2.1. Hand–Eye Coordination

2.2. Robotic Grasping

2.3. Visual Feedback

3. Vision-Guided Hand–Eye Coordination for Robotic Grasping

3.1. System Structure

3.2. Problem Solving

| Algorithm 1 Servoing |
|---|
| 1: Given current image X and task precision |
| 2: Get state s from the visual processing module |
| 3: Calculate p from image X and the robot state |
| 4: Get subtask t_i from the problem-solving module using s |
| 5: |
| 6: for 1…n do |
| 7: Execute t_i with the motion planning module |
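Algorithm 1 states the servoing procedure at a high level. The sketch below illustrates the closed-loop idea behind it for a single subtask: observe the gripper in the image, compare it with the target, issue a correction, and repeat until the error is within the task precision. The simulated arm, its 80% "efficiency", the proportional correction, and the names `SimulatedArm`, `observe`, and `servo_to_target` are all hypothetical assumptions for illustration, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SimulatedArm:
    """Hypothetical stand-in for the robot. Each commanded move is only
    partly realized, mimicking calibration and actuation error."""
    x: float
    y: float
    efficiency: float = 0.8  # assumed fraction of each command actually executed

    def move_by(self, dx: float, dy: float) -> None:
        self.x += self.efficiency * dx
        self.y += self.efficiency * dy


def observe(arm: SimulatedArm) -> Tuple[float, float]:
    """Hypothetical visual-processing step: the gripper position as measured
    in the image (noise-free in this sketch)."""
    return arm.x, arm.y


def servo_to_target(arm: SimulatedArm, target: Tuple[float, float],
                    precision: float, gain: float = 1.0,
                    max_iters: int = 50) -> int:
    """Observe, compute the error to `target`, and issue a corrective move
    until the error is within `precision`. Returns the number of moves used."""
    for moves in range(max_iters):
        x, y = observe(arm)
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) <= precision:
            return moves
        arm.move_by(gain * ex, gain * ey)
    raise RuntimeError("target not reached within max_iters")
```

With the assumed efficiency of 0.8, each move removes 80% of the remaining error, so moving from (0, 0) to (100, 50) with a precision of 0.5 takes four corrective moves. A single open-loop move would stop about 22 units short, which is the gap that visual feedback closes.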

4. Application to Completing Tangram Puzzles

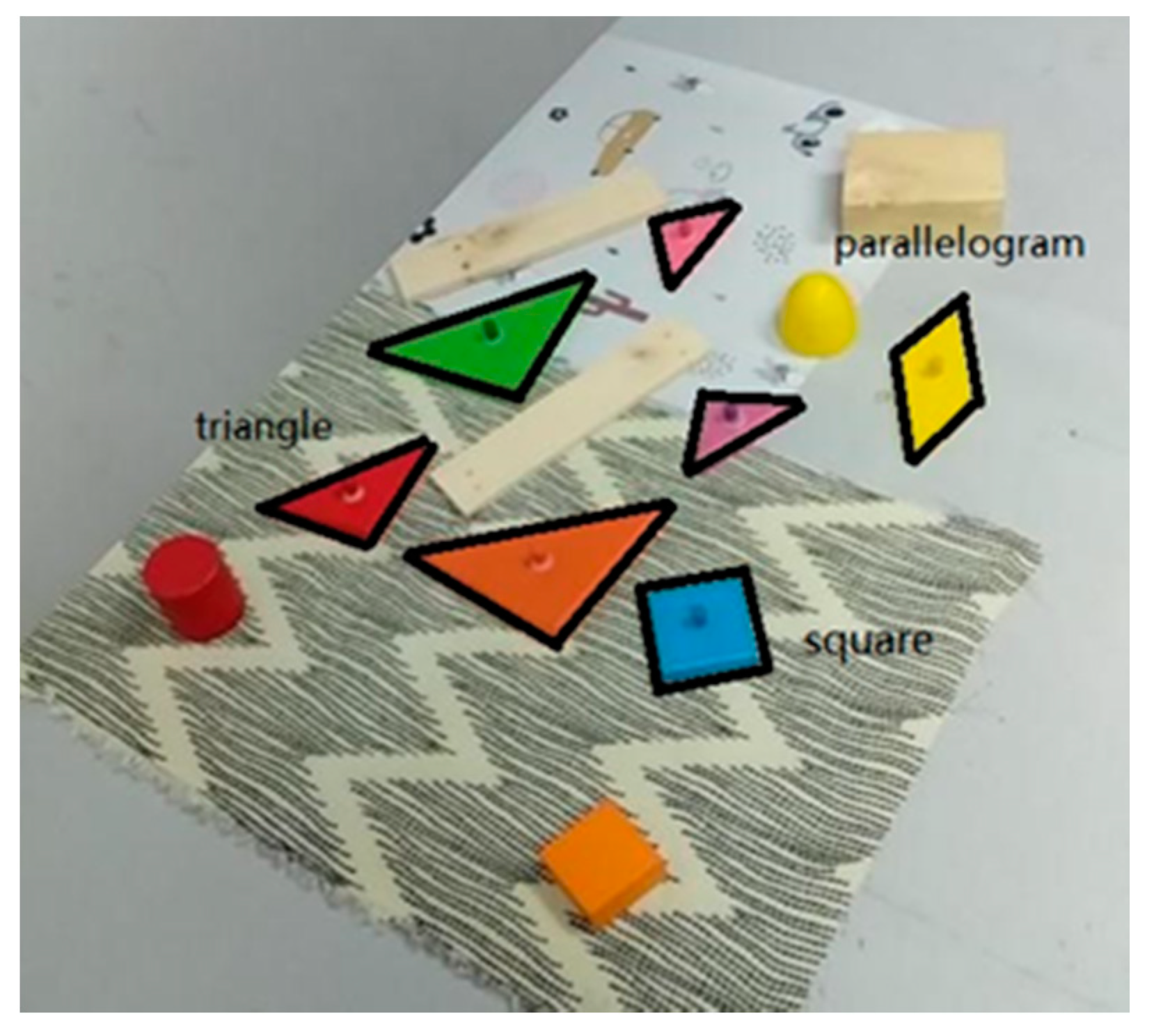

4.1. Task Description

4.2. Vision-Guided Hand–Eye Coordination for Tangram Task

4.3. Visual Processing of the Tangram Puzzle

4.3.1. Shape Recognition

4.3.2. Rotation Computation

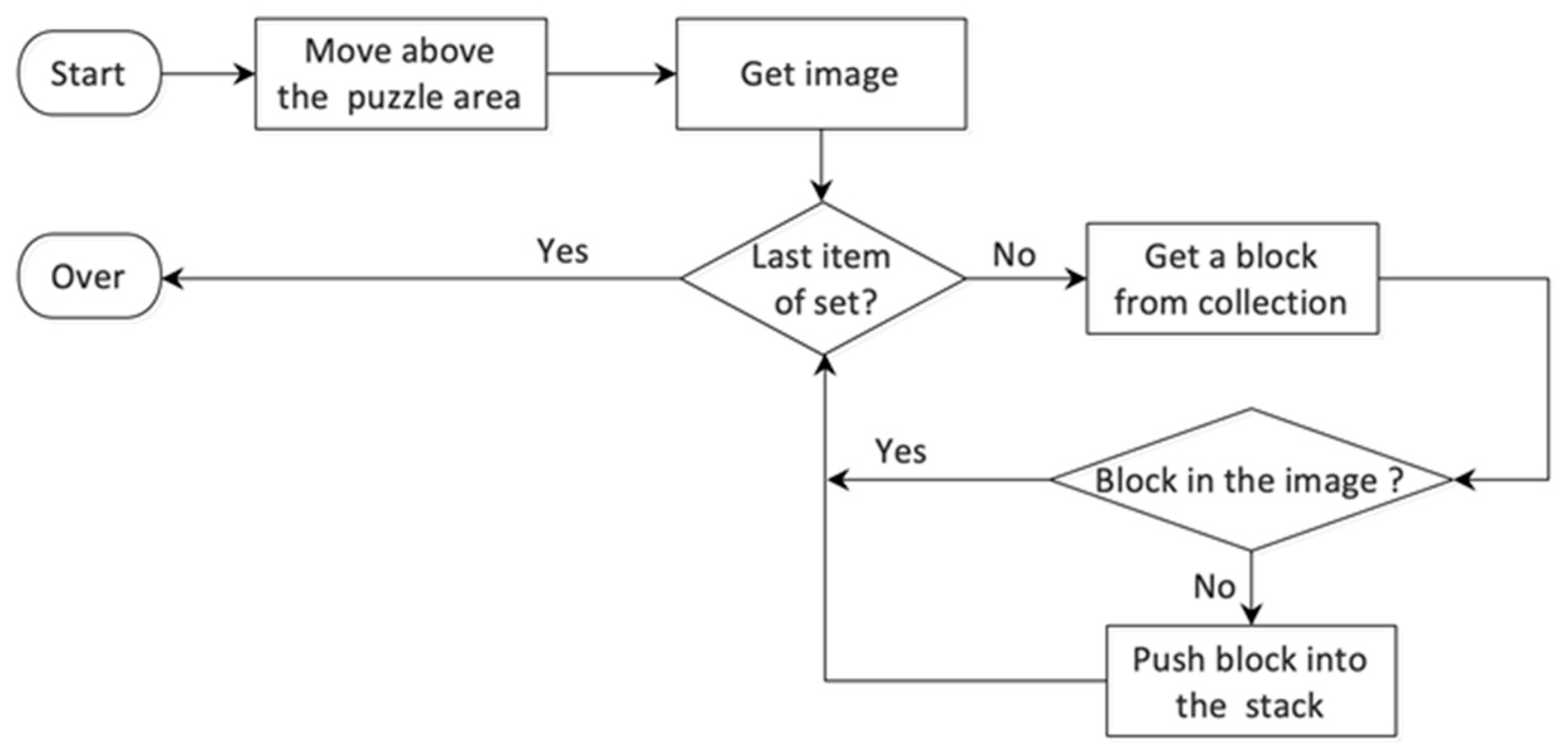

4.4. Tangram Problem Solving

4.5. Motion Planning

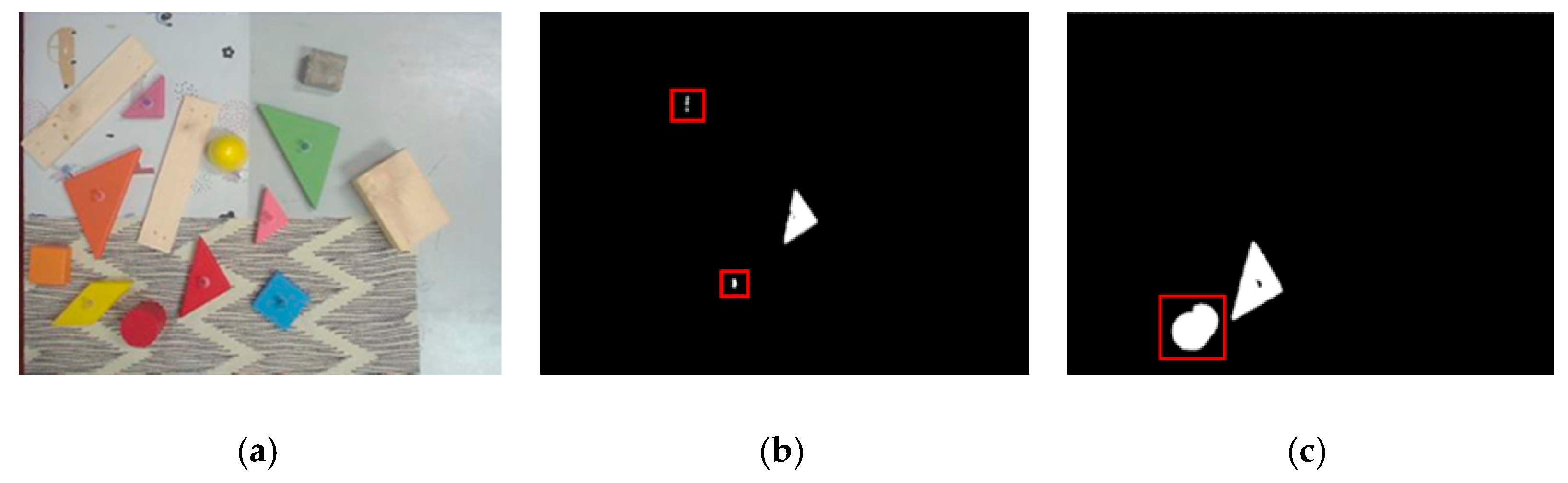

4.5.1. Locating the Tangram Blocks

4.5.2. Picking up Tangram Blocks

4.5.3. Rotating Tangram Blocks

4.5.4. Putting down Tangram Blocks

4.5.5. Evaluating Tangram Blocks

5. Experiments

5.1. Visual Feedback Statistical Experiment

5.1.1. Visual Feedback Indicators

5.1.2. Visual Feedback Statistical Results

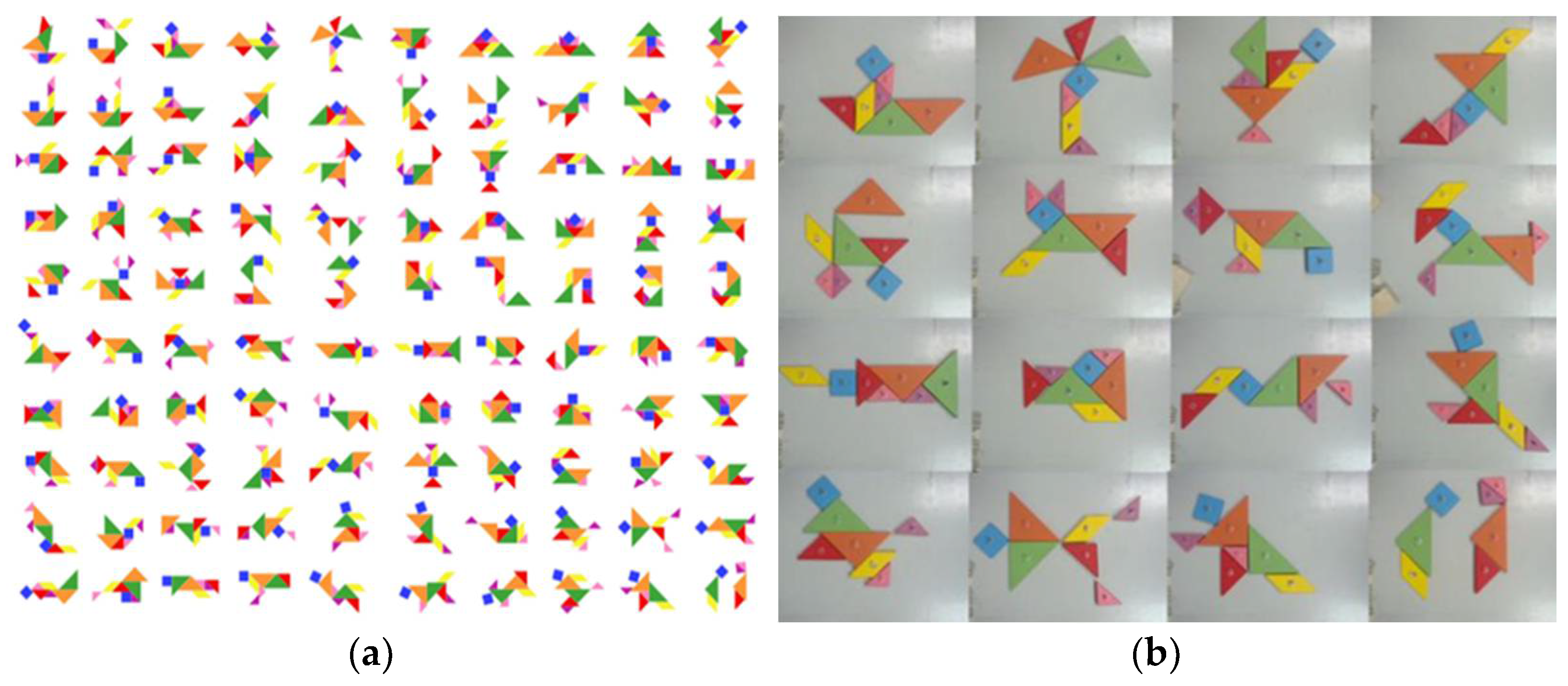

5.2. Tangram Experiment

5.2.1. Tangram Indicators

5.2.2. Dog Pattern Experiment

5.2.3. Statistical Experiment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Robot Hardware

Appendix A.2. Kinematic Modeling

| i | | | | |
|---|---|---|---|---|
| 1 | 0 | 0 | base | 0 |
| 2 | 0 | −π/2 | 0 | −π/2 |
| 3 | 0 | 0 | 0 | |
| 4 | 0 | −π/2 | 0 | |
| 5 | 0 | π/2 | 0 | 0 |
| 6 | 0 | −π/2 | 0 | |
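The table does not name its parameter columns. The sketch below assumes the modified (Craig) Denavit–Hartenberg convention, with each row read as (a_{i−1}, α_{i−1}, d_i, θ_i); the text does not confirm this. It shows how per-link transforms are built and chained into the base-to-tool pose. Because the link dimensions are not given here, the usage check uses a made-up planar two-link arm rather than the robot described above.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def mdh_transform(a: float, alpha: float, d: float, theta: float) -> Matrix:
    """Transform from frame i-1 to frame i under the modified (Craig) DH
    convention (an assumption): RotX(alpha) * TransX(a) * RotZ(theta) * TransZ(d)."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st, 0.0, a],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A: Matrix, B: Matrix) -> Matrix:
    """Product of two 4x4 homogeneous matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(rows: Sequence[Tuple[float, float, float, float]]) -> Matrix:
    """Chain the per-link transforms; each row is (a_{i-1}, alpha_{i-1}, d_i, theta_i)."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for a, alpha, d, theta in rows:
        T = matmul4(T, mdh_transform(a, alpha, d, theta))
    return T
```

For a planar arm with two unit links at joint angles π/2 and −π/2, `forward_kinematics([(0, 0, 0, math.pi/2), (1, 0, 0, -math.pi/2), (1, 0, 0, 0)])` places the tool frame at approximately (1, 1, 0), matching the textbook result (cos θ1 + cos(θ1 + θ2), sin θ1 + sin(θ1 + θ2)).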

Appendix A.3. Sequential Instruction Communication Protocol

- When the CodeSys program detects Send = F and Finish = F, it sends “ready” to the Python program.

- When the Python program receives the ready signal, it sends data to CodeSys.

- CodeSys receives the data from the Python program, parses it, and passes it to the robot controller program. It then sets Send to T and writes it to port 4.

- When the robot controller detects Send = T and Finish = F, it executes the movement command, then sets Finish to T and writes it to port 5.

- When CodeSys detects Send = T and Finish = T, it sets Send to F and writes it to port 4.

- When the robot controller detects Send = F and Finish = T, it sets Finish to F and writes it to port 5.
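The handshake above can be simulated in memory to see why each command is executed exactly once. In this minimal sketch, ports 4 and 5 are replaced by shared attributes, the “ready” message and the data transfer are modeled as one-slot mailboxes, and the three parties are stepped round-robin. The class and function names are hypothetical, and the sketch does not model the real transport between CodeSys and Python.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SharedFlags:
    """In-memory stand-in for the protocol signals (assumed one-slot mailboxes)."""
    send: bool = False             # Send, written to port 4 in the described system
    finish: bool = False           # Finish, written to port 5 in the described system
    ready: bool = False            # "ready" message from CodeSys to Python
    data: Optional[str] = None     # data sent from Python to CodeSys
    command: Optional[str] = None  # parsed command handed to the controller


class CodeSysSide:
    def __init__(self) -> None:
        self.awaiting_data = False  # True after "ready" is sent, until data arrives

    def step(self, bus: SharedFlags) -> None:
        if not bus.send and not bus.finish and not self.awaiting_data:
            bus.ready = True                        # Send = F, Finish = F: announce readiness
            self.awaiting_data = True
        elif self.awaiting_data and bus.data is not None:
            bus.command, bus.data = bus.data, None  # "parse" and pass to the controller
            bus.send = True                         # set Send = T
            self.awaiting_data = False
        elif bus.send and bus.finish:
            bus.send = False                        # Send = T, Finish = T: reset Send


class PythonSide:
    def __init__(self, commands: List[str]) -> None:
        self.pending = list(commands)

    def step(self, bus: SharedFlags) -> None:
        if bus.ready and self.pending:
            bus.ready = False
            bus.data = self.pending.pop(0)


class ControllerSide:
    def __init__(self) -> None:
        self.executed: List[str] = []

    def step(self, bus: SharedFlags) -> None:
        if bus.send and not bus.finish:
            self.executed.append(bus.command)  # stands in for executing the motion
            bus.finish = True                  # set Finish = T
        elif not bus.send and bus.finish:
            bus.finish = False                 # Send = F, Finish = T: reset Finish


def run_protocol(commands: List[str], max_ticks: int = 1000) -> List[str]:
    """Step the three parties until every command has been executed and
    both flags are back at F."""
    bus = SharedFlags()
    codesys, py_side, controller = CodeSysSide(), PythonSide(commands), ControllerSide()
    for _ in range(max_ticks):
        for party in (codesys, py_side, controller):
            party.step(bus)
        idle = not bus.send and not bus.finish and bus.data is None
        if idle and not py_side.pending and bus.ready:
            return controller.executed
    raise RuntimeError("protocol did not settle within max_ticks")
```

`run_protocol(["move A", "move B"])` returns `["move A", "move B"]`. The controller acts only when it sees Send = T with Finish = F, and CodeSys does not send a new “ready” until both flags have returned to F, so no command is skipped or repeated.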


| Gap Error (mm) | Target State 1 | Target State 2 | Target State 3 |
|---|---|---|---|
| pink–red | 1.03 | 0.94 | 0.92 |
| pink–orange | 0.42 | 0.43 | 1.04 |
| pink–yellow | 0.73 | 0.09 | 0.26 |
| pink–green | 0.81 | 0.06 | 0.06 |
| pink–blue | 0.43 | 1.32 | 0.68 |
| pink–purple | 1.45 | 1.04 | 0.08 |
| red–orange | 0.38 | 0.36 | 0.58 |
| red–yellow | 1.06 | 0.88 | 1.14 |
| red–green | 1.28 | 0.13 | 1.23 |
| red–blue | 0.60 | 0.35 | 0.27 |
| red–purple | 2.74 | 0.11 | 0.30 |
| orange–yellow | 1.04 | 0.13 | 1.04 |
| orange–green | 0.94 | 0.60 | 1.57 |
| orange–blue | 0.78 | 0.70 | 1.17 |
| orange–purple | 1.78 | 0.39 | 0.02 |
| yellow–green | 0.47 | 0.23 | 0.05 |
| yellow–blue | 0.31 | 1.31 | 0.39 |
| yellow–purple | 2.03 | 0.59 | 0.26 |
| green–blue | 0.80 | 0.53 | 0.45 |
| green–purple | 2.02 | 0.04 | 0.81 |
| blue–purple | 1.77 | 0.48 | 0.46 |
| Average | 1.09 | 0.51 | 0.61 |
| Standard deviation | 0.64 | 0.39 | 0.45 |
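The Average and Standard deviation rows can be reproduced from the 21 pairwise gaps. Recomputing them indicates that the reported standard deviation is the population form (dividing by n = 21); the sample form (dividing by n − 1 = 20) would give about 0.66 mm for the first column. A short check, with the three target-state columns labeled `state_1` to `state_3` for convenience:

```python
from statistics import mean, pstdev

# Gap errors (mm) for the 21 block pairs, one list per target-state column,
# in the table's row order (pink–red ... blue–purple).
gap_errors = {
    "state_1": [1.03, 0.42, 0.73, 0.81, 0.43, 1.45, 0.38, 1.06, 1.28, 0.60, 2.74,
                1.04, 0.94, 0.78, 1.78, 0.47, 0.31, 2.03, 0.80, 2.02, 1.77],
    "state_2": [0.94, 0.43, 0.09, 0.06, 1.32, 1.04, 0.36, 0.88, 0.13, 0.35, 0.11,
                0.13, 0.60, 0.70, 0.39, 0.23, 1.31, 0.59, 0.53, 0.04, 0.48],
    "state_3": [0.92, 1.04, 0.26, 0.06, 0.68, 0.08, 0.58, 1.14, 1.23, 0.27, 0.30,
                1.04, 1.57, 1.17, 0.02, 0.05, 0.39, 0.26, 0.45, 0.81, 0.46],
}

# Mean and population standard deviation, rounded as in the table.
summary = [(round(mean(v), 2), round(pstdev(v), 2)) for v in gap_errors.values()]

for name, (avg, sd) in zip(gap_errors, summary):
    print(f"{name}: average {avg:.2f} mm, standard deviation {sd:.2f} mm")
```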

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, H.; Pan, S.; Ma, G.; Duan, X. Vision-Guided Hand–Eye Coordination for Robotic Grasping and Its Application in Tangram Puzzles. AI 2021, 2, 209-228. https://doi.org/10.3390/ai2020013
