1. Introduction

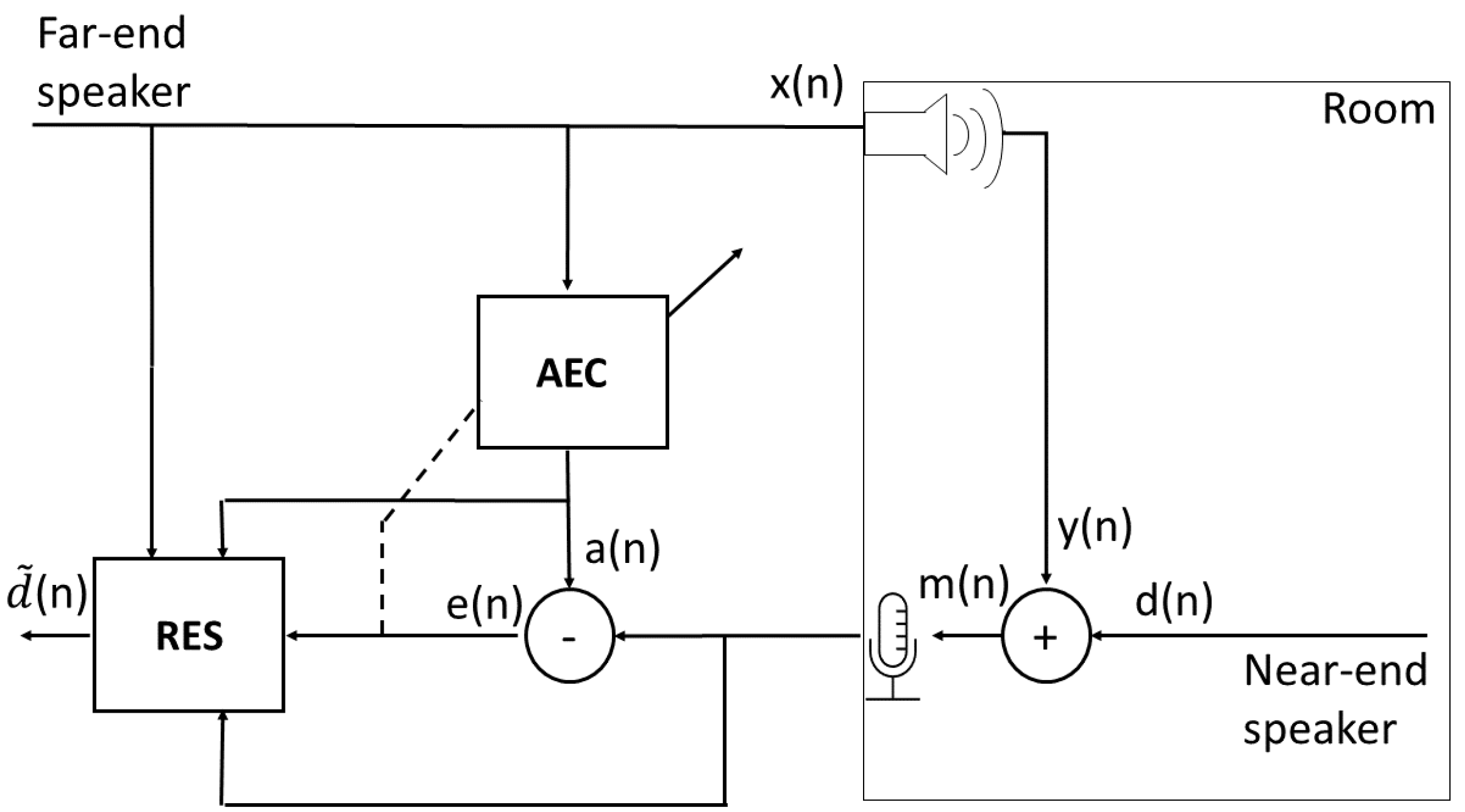

Modern telecommunication systems often suffer from speech intelligibility degradation caused by acoustic echo. A typical scenario involves two speakers communicating between a far-end and a near-end point. At the near-end point, a microphone captures both the near-end speaker’s signal and the acoustic echo of a loudspeaker playing the far-end signal [1]. When the far-end speaker talks, they hear an echo of their own voice, which reduces the quality of the conversation. Therefore, canceling the acoustic echo while preserving near-end speech quality is desirable in any full-duplex communication system. Linear acoustic echo cancellers (AECs) are commonly employed to cancel the echo component of the microphone signal and are traditionally based on linear adaptive filters [2]. Linear AECs estimate the acoustic path from the loudspeaker to the microphone. The estimated filters are applied to the far-end reference signal, resulting in an estimate of the echo signal as received by the microphone. The near-end signal is then estimated by subtracting the estimated echo from the microphone signal. However, due to their linear nature, linear AECs leave non-linear components of the echo at their output. In most cases, this residual echo still interferes with and degrades the near-end speech quality.
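For illustration, the linear AEC described above can be sketched with a normalized least-mean-squares (NLMS) adaptive filter, a classic choice for this role. This is an assumption: the text does not state which adaptive algorithm the linear AEC uses, and the function name `nlms_aec`, its defaults, and the synthetic echo path are hypothetical. The filter estimates the loudspeaker-to-microphone path from the far-end reference, and the error signal serves as the near-end estimate.

```python
import numpy as np

def nlms_aec(far_end, mic, filter_len=128, step=0.5, eps=1e-6):
    """Minimal NLMS linear AEC (illustrative). Returns (echo estimate, error signal)."""
    w = np.zeros(filter_len)        # estimated loudspeaker-to-microphone impulse response
    x_buf = np.zeros(filter_len)    # most recent far-end samples, newest first
    echo_hat = np.zeros(len(mic))
    err = np.zeros(len(mic))
    for n in range(len(mic)):
        x_buf = np.roll(x_buf, 1)
        x_buf[0] = far_end[n]
        echo_hat[n] = w @ x_buf                             # linear echo estimate
        err[n] = mic[n] - echo_hat[n]                       # error = estimated near-end signal
        w += step * err[n] * x_buf / (x_buf @ x_buf + eps)  # normalized LMS update
    return echo_hat, err

# Toy check: purely linear synthetic echo path, no near-end speech
rng = np.random.default_rng(0)
far = rng.standard_normal(8000)
h = rng.standard_normal(64) * np.exp(-np.arange(64) / 10)  # hypothetical decaying echo path
mic = np.convolve(far, h)[: len(far)]
echo_hat, err = nlms_aec(far, mic)
tail_erle_db = 10 * np.log10(np.sum(mic[-2000:] ** 2) / np.sum(err[-2000:] ** 2))
```

In this noise-free, purely linear toy setup the error converges toward zero. With a real loudspeaker, the non-linear echo components would remain in `err`; that remainder is the residual echo a RES targets.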

In recent years, deep neural networks (DNNs) have achieved unprecedented performance in many fields, e.g., computer vision, natural language processing, and audio and speech processing. With their strong non-linear modeling capabilities, DNNs became a natural choice for acoustic echo cancellation. Zhang and Wang [3] employ a bi-directional long short-term memory (BLSTM) [4] recurrent neural network (RNN) operating in the time-frequency (T-F) domain to capture dependencies between time frames. The model predicts an ideal ratio mask (IRM) [5], which is applied to the spectrogram magnitudes of the microphone signal to estimate the spectrogram magnitudes of the near-end signal. Kim and Chang [6] propose a time-domain U-Net [7] architecture with an additional encoder that learns features from the far-end reference signal. An attention mechanism [8] accentuates the meaningful far-end features for the U-Net’s encoder. Westhausen and Meyer [9] combine T-F and time-domain processing by adapting the dual-signal transformation LSTM network (DTLN) [10] to the task of acoustic echo cancellation. Although DNN AECs outperform linear AECs and allow for end-to-end training and inference, they are prone to introducing distortion to the estimated near-end signal, especially when the signal-to-echo ratio (SER) is low.
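As a sketch of the masking idea, the snippet below computes an IRM training target from near-end and echo spectrogram magnitudes using the common form (|S|^2 / (|S|^2 + |Y|^2))^beta with beta = 0.5. The exponent, the toy arrays, and the function names are assumptions for illustration, not necessarily the exact variant used in [3]. At inference time a DNN predicts the mask, which then multiplies the microphone magnitudes.

```python
import numpy as np

def ideal_ratio_mask(near_mag, echo_mag, beta=0.5, eps=1e-12):
    """IRM target per T-F bin: (|S|^2 / (|S|^2 + |Y|^2)) ** beta."""
    near_pow = near_mag ** 2
    return (near_pow / (near_pow + echo_mag ** 2 + eps)) ** beta

def apply_mask(mic_mag, mask):
    """Estimate near-end magnitudes by element-wise masking of microphone magnitudes."""
    return mask * mic_mag

# Toy 2x2 magnitude "spectrograms" (frequency x time)
near_mag = np.array([[1.0, 0.0], [3.0, 2.0]])
echo_mag = np.array([[0.0, 2.0], [4.0, 0.0]])
mask = ideal_ratio_mask(near_mag, echo_mag)  # near-end-only bins -> 1, echo-only bins -> 0
```

Because the mask lies in [0, 1], it can only attenuate bins; it cannot restore near-end energy that the microphone magnitudes do not contain.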

An alternative to end-to-end DNN acoustic echo cancellation is residual echo suppression. In a typical residual echo suppression setting, a linear AEC is followed by a DNN aimed at suppressing the residual echo at the output of the linear AEC. Linear AECs introduce little distortion to the near-end signal, and their estimates of the echo and near-end signals provide the residual echo suppressor (RES) with better features, allowing for better near-end estimation with smaller models. Carbajal et al. [11] propose a simple fully-connected architecture that receives the spectrogram magnitudes of the far-end reference signal and of the linear AEC’s outputs and predicts a phase-sensitive filter (PSF) [12] to recover the near-end signal from the linear AEC’s error signal. Pfeifenberger and Pernkopf [13] suggest using an LSTM to predict a T-F gain mask from the log differences between the powers of the microphone signal and the AEC’s echo estimate. Chen et al. [14] propose a time-domain RES based on the well-known Conv-TasNet architecture [15]. They employ a multi-stream modification of the original architecture, where the outputs of the linear AEC are encoded separately before being fed to the main Conv-TasNet. Fazel et al. [16] propose context-aware deep acoustic echo cancellation (CAD-AEC), which incorporates a contextual attention module to predict the near-end signal’s spectrogram magnitudes from the microphone and linear AEC output signals. Halimeh et al. [17] employ a complex-valued convolutional recurrent network (CRN) to estimate a complex T-F mask, which is applied to the complex spectrogram of the AEC’s error signal to recover the near-end signal’s spectrogram. Ivry et al. [18] employ a 2-D U-Net operating on the spectrogram magnitudes of the linear AEC’s outputs. A custom loss function with a tunable parameter allows a dynamic tradeoff between the level of echo suppression and the distortion of the estimated signal. Franzen and Fingscheidt [19] propose a 1-D fully convolutional recurrent network (FCRN) operating on discrete Fourier transform (DFT) inputs and perform an ablation study on the effect of different combinations of input signals on the joint task of residual echo suppression and noise reduction. Although these studies achieve state-of-the-art residual echo suppression performance, none of them focuses on the challenging scenario of extremely low SER. Low SER may occur in typical real-life situations, such as a conversation over a mobile phone where the loudspeaker plays the far-end signal at a high volume.
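The two mask types mentioned above, the PSF and the complex T-F mask, can be related with a short sketch. Assuming S and E denote the complex STFT bins of the near-end and error signals (notation introduced here for illustration), the ideal complex mask is S/E, while the PSF is (|S|/|E|) cos(angle(S) - angle(E)), which equals the real part of the complex mask. The function names and toy values are hypothetical; in practice the PSF target is often clipped to a bounded range, which is omitted here.

```python
import numpy as np

def ideal_complex_mask(near_stft, err_stft, eps=1e-12):
    """Complex mask M with M * E ~= S, computed as S * conj(E) / |E|^2 for numerical safety."""
    return near_stft * np.conj(err_stft) / (np.abs(err_stft) ** 2 + eps)

def phase_sensitive_filter(near_stft, err_stft, eps=1e-12):
    """Real-valued PSF: |S| / |E| * cos(angle(S) - angle(E))."""
    ratio = np.abs(near_stft) / (np.abs(err_stft) + eps)
    return ratio * np.cos(np.angle(near_stft) - np.angle(err_stft))

# Toy T-F bins of the near-end (S) and error (E) spectrograms
S = np.array([1 + 1j, 2 + 0j, 0.5j])
E = np.array([1j, 1 + 1j, -1 + 0j])
cmask = ideal_complex_mask(S, E)
psf = phase_sensitive_filter(S, E)
```

The PSF is applied to the error magnitudes and keeps the error phase, so it only accounts for phase through the cosine term, whereas the complex mask can also correct the phase.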

In a typical residual echo suppression scenario, one of four situations may occur at each time point: both speakers are silent, only the far-end speaker speaks, only the near-end speaker speaks, or both speakers speak at the same time (double-talk). When only the near-end speaker speaks, the microphone signal should remain unchanged to keep the near-end speech distortionless. When only far-end speech is present, the microphone signal should ideally be canceled entirely to remove any echo component. The challenging situation is double-talk, where it is desired to cancel the echo of the far-end speech while keeping the near-end speech distortion to a minimum. Therefore, it is natural to integrate a double-talk detector (DTD) into the system. Linear AECs typically employ a DTD to prevent the cancellation of the near-end speech in double-talk situations [20,21]. Several studies also integrate double-talk detection into deep-learning acoustic echo cancellation or residual echo suppression models. Zhang et al. [22] employ an LSTM that operates on the spectrogram magnitudes of the microphone and far-end reference signals and predicts near-end speech presence via a binary mask, which is applied to the output of the DNN AEC. Zhou and Leng [23] formulate the problem as a multi-task learning problem, where a single DNN learns to perform residual echo suppression and double-talk detection in tandem. The model consists of two output branches: the first branch predicts a PSF and acts as a RES, and the second branch detects double-talk. The RES is conditioned on the DTD’s predictions by being supplied with the DTD branch’s features before the classification. Ma et al. [24] propose to perform double-talk detection with two voice activity detectors (VADs), one for detecting near-end speech and the other for detecting far-end speech. Features from several layers of the VADs are fed to a gated recurrent unit (GRU) [25] RNN that performs residual echo suppression. Ma et al. [26] propose a multi-class classifier that receives the encoded features of the time-domain microphone and far-end signals and classifies each time frame independently of the AEC’s predictions. Zhang et al. [27] also incorporate a VAD as an independent output branch in a residual echo suppression model. While their model exhibits high residual echo suppression performance, their results show that adding the VAD does not improve the objective metrics. The other works mentioned above do not study the effect of the DTD/VAD on the RES’s performance. Therefore, it is worth studying how the configuration in which the DTD and RES are integrated affects the system’s performance, especially in the low SER setting, where the echo may entirely mask the near-end speech.
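The four situations above can be derived per frame from two binary activity decisions, one per speaker. The sketch below uses a simple energy threshold on the clean near-end and far-end signals, which are available when mixtures are simulated; the threshold, frame length, and function names are illustrative assumptions, not the labeling procedure of any cited work.

```python
from enum import Enum
import numpy as np

class TalkState(Enum):
    SILENCE = 0
    FAR_END_ONLY = 1
    NEAR_END_ONLY = 2
    DOUBLE_TALK = 3

def frame_activity(signal, frame_len, threshold_db=-40.0):
    """Energy-based activity decision per non-overlapping frame (illustrative threshold)."""
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    energy_db = 10 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)
    return energy_db > threshold_db

def talk_states(near_active, far_active):
    """Combine the two binary decisions into one of the four situations."""
    return [TalkState(int(far) + 2 * int(near)) for near, far in zip(near_active, far_active)]

# Toy clean signals: 4 frames of 4 samples each
near = np.array([0.0] * 8 + [0.5] * 8)
far = np.array([0.5] * 4 + [0.0] * 4 + [0.5] * 4 + [0.0] * 4)
states = talk_states(frame_activity(near, 4), frame_activity(far, 4))
```

A multi-label detector would predict the two activity decisions separately and derive double-talk from both, whereas a multi-class detector would predict the `TalkState` directly.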

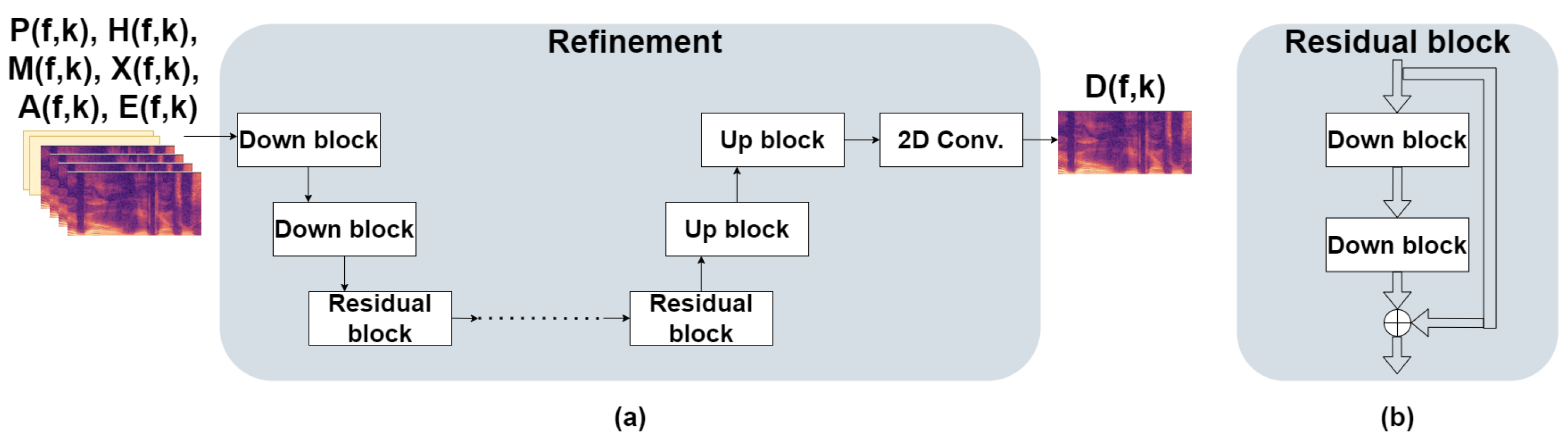

This study proposes a two-stage deep-learning residual echo suppression system focused on the challenging low SER scenario. Our approach is inspired by [28], where a two-stage spectrogram masking and inpainting approach is taken to tackle speech enhancement at low signal-to-noise ratio (SNR). We adapt this approach to the residual echo suppression setting while introducing changes and improvements. The first stage in our system is double-talk detection and spectrogram masking. We propose an architecture with fewer parameters and a faster inference time than the masking stage in [28]. Furthermore, we study several ways of integrating double-talk detection into our model based on previous studies and show that the proposed configuration yields the largest improvement in residual echo suppression performance. The second stage in our system is spectrogram refinement. Unlike [28], where the second stage consists of creating holes in the spectrogram and applying spectrogram inpainting to reconstruct the desired signal, we instead perform spectrogram refinement. We optimize the model to maximize the desired speech quality, as measured by the perceptual evaluation of speech quality (PESQ) [29] score, by minimizing the perceptual metric for speech quality evaluation (PMSQE) loss function [30]. We perform an ablation study to show the effectiveness of every component of the proposed system. Furthermore, we train and evaluate the proposed system at several SER levels and show that it is most effective in the extremely low SER setting. Finally, we compare the proposed system to several other residual echo suppression systems and show that it outperforms them in several residual echo suppression and speech quality measures.

The outline of this paper is as follows. In Section 2, we formulate the residual echo suppression problem, present the proposed system’s components, and provide details regarding the data and model training procedure. Results and discussion are provided in Section 3. Section 4 concludes the paper.

3. Results

This section presents the performance measures used to evaluate the different systems, shows the ablation study results, and compares the proposed system with two other systems. Audio examples of the proposed system’s output, along with the microphone, error, and clean near-end speech signals, can be found in [39].

3.1. Performance Measures

To evaluate the performance of the proposed and compared systems, two scenarios are considered: far-end only and double-talk. Near-end only periods are not considered for performance evaluation, since all systems introduce little distortion to the input signal when no echo is present. Furthermore, since determining that the far-end speaker is silent is a trivial task, the microphone signal can be passed directly to the system’s output during these periods, so no distortion is applied to it.

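During far-end only periods, we expect the enhanced signal to have as little energy as possible (ideally, it is completely silent). Therefore, performance during these periods is evaluated using the echo return loss enhancement (ERLE), which measures the echo reduction between the microphone signal and the enhanced signal. ERLE is measured in dB and is defined as

$$\mathrm{ERLE} = 10\log_{10}\frac{\sum_{t} m^{2}(t)}{\sum_{t} \hat{s}^{2}(t)},$$

where $m(t)$ and $\hat{s}(t)$ denote the microphone and enhanced signals, respectively, and the sums run over the far-end only periods.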
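A minimal sketch of the ERLE computation, restricted to far-end only samples by a boolean mask, is given here. The region mask and the function name are assumptions for illustration; in a simulation, such a mask would typically be derived from the clean near-end and far-end signals.

```python
import numpy as np

def erle_db(mic, enhanced, region=None, eps=1e-12):
    """ERLE in dB: microphone energy relative to enhanced-signal energy.

    `region` is an optional boolean mask selecting the far-end only samples.
    """
    if region is not None:
        mic, enhanced = mic[region], enhanced[region]
    return 10 * np.log10((np.sum(mic ** 2) + eps) / (np.sum(enhanced ** 2) + eps))

# Toy signals: echo attenuated by 20 dB in the first half, untouched in the second half
mic = np.ones(200)
enhanced = np.concatenate([0.1 * np.ones(100), np.ones(100)])
far_end_only = np.arange(200) < 100   # hypothetical far-end only region
erle = erle_db(mic, enhanced, region=far_end_only)
```

Computing ERLE over the whole signal would dilute the measure with periods in which the output legitimately retains energy, which is why the evaluation restricts it to far-end only periods.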

ERLE may not always correlate well with human subjective ratings [43]. The AEC mean opinion score (AECMOS) [44] is a speech quality assessment metric for evaluating echo impairment that overcomes the drawbacks of conventional methods. AECMOS is a DNN trained to directly predict subjective ratings of echo impairment using ground-truth human ratings of more than 148 h of data. The model predicts two scores, one for echo impairment (AECMOS-echo) and the other for other degradations (AECMOS-degradations). The model distinguishes between three scenarios: near-end single-talk, far-end single-talk, and double-talk. In the far-end single-talk case, only AECMOS-echo is considered.

We aim to suppress the residual echo during double-talk periods while maintaining the near-end speech’s quality. During these periods, performance is evaluated using two measures. The first is PESQ [29], an intrusive speech quality metric based on an algorithm designed to approximate a subjective evaluation of a degraded audio sample; a higher PESQ score indicates better speech quality. However, like ERLE, PESQ does not always correlate well with subjective human ratings. Therefore, the second performance measure is AECMOS-echo, which measures the echo reduction during double-talk periods. We do not use AECMOS-degradations for performance evaluation, for two reasons. First, we focus on the low SER scenario without intense noise or other distortions that would cause additional degradation. Second, as we show in the results, AECMOS-degradations fails to capture the true residual echo suppression performance in the low SER case.

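Finally, although it is not a performance measure, we formally define the SER, which is measured in double-talk periods and quantifies the near-end signal’s energy relative to the echo signal’s energy. SER is expressed in dB as

$$\mathrm{SER} = 10\log_{10}\frac{\sum_{t} s^{2}(t)}{\sum_{t} y^{2}(t)},$$

where $s(t)$ and $y(t)$ denote the near-end speech and echo signals, respectively, and the sums run over the double-talk periods.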
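To make the SER concrete, the sketch below computes the SER of a near-end/echo pair and rescales an echo to reach a target SER, which is one way low-SER test mixtures could be produced. The function names are hypothetical, the inputs are assumed to be aligned segments restricted to double-talk periods, and white noise stands in for speech; the source's actual data generation procedure is not reproduced here.

```python
import numpy as np

def ser_db(near, echo, eps=1e-12):
    """Signal-to-echo ratio in dB: near-end energy relative to echo energy."""
    return 10 * np.log10((np.sum(near ** 2) + eps) / (np.sum(echo ** 2) + eps))

def scale_echo_to_ser(near, echo, target_ser_db):
    """Return a scaled copy of `echo` so that ser_db(near, scaled) ~= target_ser_db."""
    gain = np.sqrt(np.sum(near ** 2) / (np.sum(echo ** 2) * 10 ** (target_ser_db / 10)))
    return gain * echo

rng = np.random.default_rng(0)
near = rng.standard_normal(16000)   # stand-in for near-end speech
echo = rng.standard_normal(16000)   # stand-in for the echo at the microphone
loud_echo = scale_echo_to_ser(near, echo, target_ser_db=-20.0)
mic = near + loud_echo              # at -20 dB SER the echo carries 100x the near-end energy
```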

3.2. Ablation Study

First, we present the ablation study’s results, showing how each part of the proposed system contributes to the performance.

Table 1 shows the performance of the AEC alone; of the AEC followed by the masking stage with and without double-talk detection (AEC+M+D and AEC+M, respectively); of the AEC followed by the refinement stage without the masking stage’s outputs (AEC+R, using only the input signals); and of the entire system, i.e., the AEC followed by masking and double-talk detection and then by the refinement model (AEC+M+D+R).

From the table, combining the DTD with the masking model improves ERLE by almost 2 dB while achieving on-par far-end only AECMOS, which indicates better echo suppression when there is no near-end speech. During double-talk, there is a notable increase in the PESQ score and a minor increase in AECMOS. These results indicate that combining the DTD with the masking model also improves performance during double-talk periods. Adding the refinement stage after the masking+DTD stage further improves all measures; most notably, both ERLE and PESQ increase further. Far-end and double-talk AECMOS are also improved, albeit by a negligible amount. It can also be observed that, without first employing the masking stage, the refinement stage on its own performs on par with the masking model without the DTD. This further supports the efficacy of the proposed system: the masking stage, aided by the DTD, performs the initial residual echo suppression, and the refinement stage, which relies on the features provided by the masking stage, further improves performance. We conclude from the ablation study that the proposed DTD configuration aids the masking model’s performance and that the refinement stage indeed refines the outputs of the first stage, since its stand-alone performance is inferior.

Figure 4 shows examples of spectrograms from different stages of the system. It can be observed from the figure that the masking model suppresses the majority of the residual echo, most evidently in the later part of the segment and above 4000 Hz. However, the finer details of the near-end speech are blurred compared to the near-end spectrogram. The refinement model refines the output of the masking model, resulting in a more finely detailed spectrogram that closely resembles the near-end spectrogram.

Next, we study different ways to combine the DTD with the masking model. We compare five different configurations:

Table 2 shows the performance of the masking model combined with the DTD in each of the above configurations. The proposed configuration achieves the best residual echo suppression performance during far-end only periods, as indicated by ERLE and AECMOS. Its ERLE is more than 1 dB greater than the second-best ERLE (Conf. 3), and its AECMOS equals the no-DTD baseline AECMOS, whereas all other configurations show a minor degradation. In the double-talk scenario, the proposed configuration’s PESQ score is notably greater than the second-best PESQ (Conf. 2), which itself is only marginally greater than the no-DTD baseline PESQ. The proposed configuration’s AECMOS is also the highest among all compared configurations. Overall, the results show that the proposed configuration of the DTD combined with the masking model achieves a notable performance improvement compared to not combining a DTD, whereas all other configurations have little to no effect on performance. We conclude that combining a DTD with the masking model is beneficial when double-talk detection is performed before masking, and that it is necessary to learn a feature representation from the DTD’s predictions so that the masking model can use these predictions effectively.

For completeness, Table 3 provides the DTD’s performance. Since the proposed DTD operates as a multi-label classifier whose labels are the presence of near-end speech and the presence of far-end speech, double-talk is not an actual class of the classifier. Instead, double-talk is determined for time frames containing both near-end and far-end speech. The near-end and far-end results include time frames where both are present (double-talk). Multi-class classification results are also provided for comparison. The table shows that both near-end and far-end performance are high and that precision and recall are balanced. The far-end performance is slightly better than the near-end performance. This small gap is expected in the low SER setting, since the near-end speech may be almost indistinguishable during double-talk periods. This is also reflected in the double-talk results, which are notably degraded. During these periods, the DTD may predict that a time frame contains far-end speech but no near-end speech. If the DTD’s predictions were fed directly as inputs to the subsequent masking model, the model might cancel these time frames, since it learns to do so from the actual far-end only time frames. Learning a representation from the DTD’s predictions helps overcome this issue. The table also shows that the proposed multi-label classifier outperforms the multi-class classifier: while near-end performance is on par, the far-end performance and overall accuracy of the multi-label classifier are superior. In the double-talk scenario, the multi-label classifier achieves higher precision but lower recall, and its overall accuracy is notably higher than that of the multi-class classifier.
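How double-talk decisions follow from multi-label outputs, and why missed near-end speech lowers double-talk recall, can be illustrated with toy numbers. The sketch assumes the DTD emits two per-frame probabilities (near-end and far-end speech presence) thresholded at 0.5, with double-talk derived as their logical AND. The probabilities are made-up values, and the helper name is hypothetical.

```python
import numpy as np

def precision_recall(pred, target):
    """Binary precision and recall for per-frame boolean decisions."""
    pred, target = np.asarray(pred, dtype=bool), np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)
    precision = tp / max(int(np.sum(pred)), 1)
    recall = tp / max(int(np.sum(target)), 1)
    return float(precision), float(recall)

# Toy multi-label DTD outputs (one value per frame) and ground-truth labels.
# Frame 2 is double-talk, but the quiet near-end speech is missed (0.3 < 0.5),
# so the frame looks like far-end only speech.
near_prob = np.array([0.9, 0.2, 0.3, 0.1])
far_prob = np.array([0.8, 0.9, 0.9, 0.6])
near_true = np.array([True, False, True, True])
far_true = np.array([True, True, True, False])

near_pred, far_pred = near_prob > 0.5, far_prob > 0.5
dt_pred = near_pred & far_pred   # double-talk is derived, not a separate class
dt_true = near_true & far_true
dt_precision, dt_recall = precision_recall(dt_pred, dt_true)
```

Here the single missed near-end frame leaves double-talk precision intact but halves recall, mirroring the failure mode described above.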

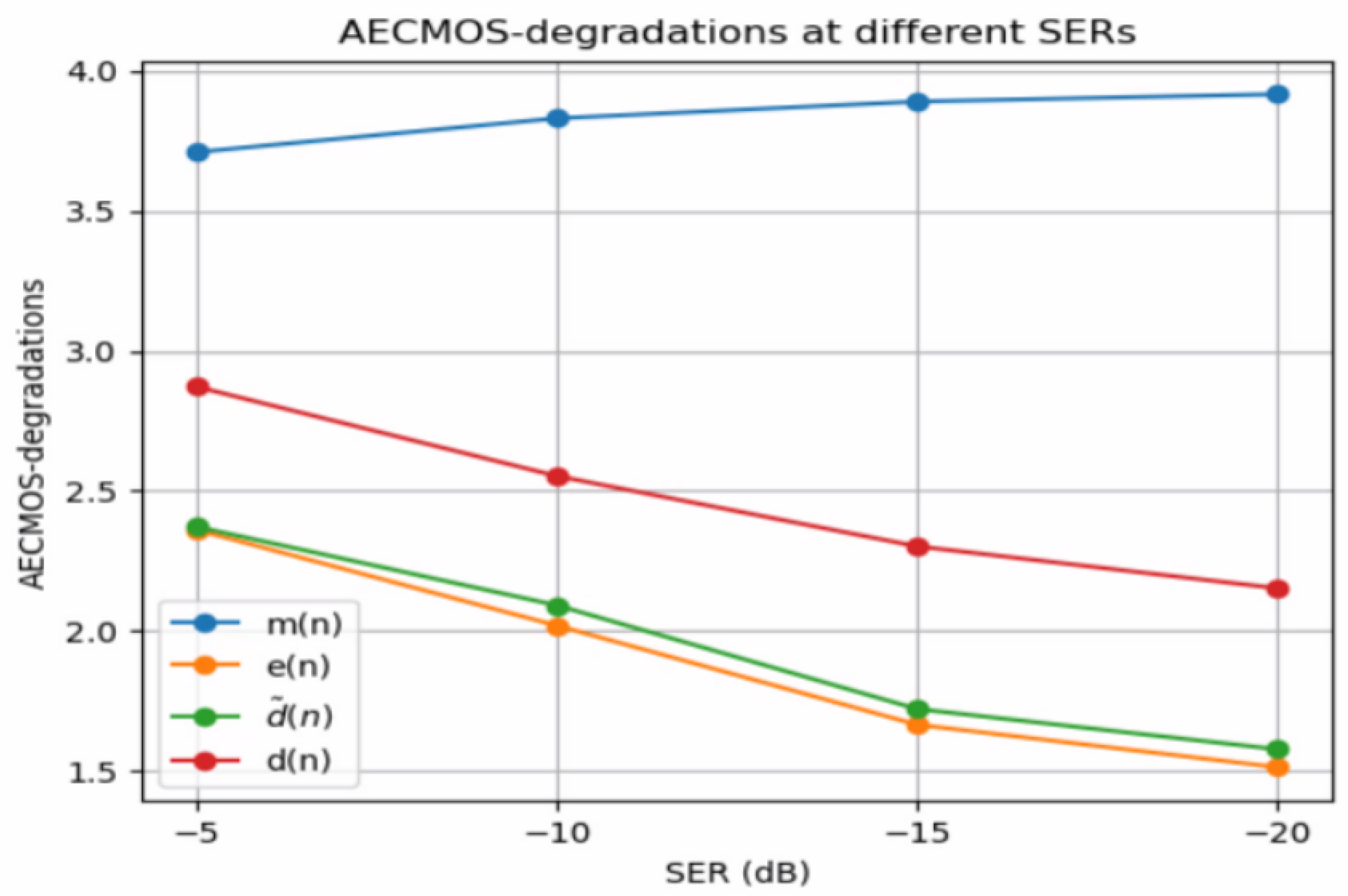

Finally, we address an issue with double-talk AECMOS-degradations in the low SER scenario. Figure 5 shows double-talk AECMOS-degradations at different SERs, where the ‘degraded’ signals used to obtain the scores are the microphone signal, the clean near-end speech, the error signal, and the enhanced signal. The graphs show that the microphone signal’s AECMOS is substantially higher than that of the clean near-end speech, and that the gap between the two grows as the SER decreases. When the SER is low, the far-end speech is loud (and of high quality, since we do not consider noise or additional distortions in our data), while the near-end speech is nearly indistinguishable. Thus, the microphone signal’s AECMOS-degradations score is high, even though the signal mainly contains undesired echo. In contrast, the clean near-end speech signal’s AECMOS-degradations score is considerably lower and degrades further as the SER is lowered. This may indicate that the AECMOS model was not trained on such extreme cases, since we would expect this score to be high regardless of the SER, as the clean signal contains no noise or distortions. Nevertheless, at all SERs, the enhanced signal obtains a slightly better AECMOS-degradations score than the error signal, indicating that the proposed model improves AECMOS-degradations relative to its input.

3.3. Comparative Results

We compare the proposed system to two recent RES systems: Regression-U-Net [18] and Complex-Masking [17]. Both systems operate in the T-F domain. Regression-U-Net takes the spectrogram magnitudes of two input signals and predicts the spectrogram magnitudes of the near-end signal. Since we optimize our refinement model to increase the PESQ score, we set the tunable tradeoff parameter in the Regression-U-Net implementation to the value that yields the best PESQ [18]. Complex-Masking’s model consists of a convolutional encoder and decoder with a GRU between them. All layers in the model are complex-valued, which allows the model to learn a phase-aware mask while using the complete information in the input signals. The model’s inputs are the complex spectrograms of two signals, and its output is a complex mask applied to the spectrogram of the linear AEC’s error signal. Note the differences between the two systems: Regression-U-Net is real-valued and performs regression (it outputs the desired signal directly), whereas Complex-Masking is complex-valued and performs masking. Both systems were trained using the original code provided by the authors and the same training data used to train the proposed system, and both were evaluated on the same test data. Since our work focuses on the RES, all systems used the same preceding linear AEC.

Table 4 shows the performance of the different systems, their number of parameters and memory consumption, and their real-time factor (RTF), defined as

$$\mathrm{RTF} = \frac{T_{\mathrm{proc}}}{T_{\mathrm{in}}},$$

where $T_{\mathrm{proc}}$ is the time it takes the model to infer an output for an input of duration $T_{\mathrm{in}}$. All systems’ RTFs are measured on a standard Intel Core i7-11700K CPU.
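The RTF can be measured with a simple wall-clock timing loop. The sketch below is an assumption about the procedure, since the text does not describe its timing setup: `infer` stands for any model's forward pass, the 16 kHz sample rate is assumed, and the warm-up call and averaging over repeats are illustrative design choices rather than the authors' protocol.

```python
import time
import numpy as np

def real_time_factor(infer, audio, sample_rate, repeats=5):
    """RTF = (time to process the input) / (duration of the input)."""
    infer(audio)  # warm-up call, excluded from timing (caches, lazy initialization)
    start = time.perf_counter()
    for _ in range(repeats):
        infer(audio)
    proc_time = (time.perf_counter() - start) / repeats
    input_duration = len(audio) / sample_rate
    return proc_time / input_duration

# Hypothetical stand-in for a model: a fixed gain applied to a 2 s input at 16 kHz
audio = np.zeros(2 * 16000)
rtf = real_time_factor(lambda x: 0.5 * x, audio, sample_rate=16000)
```

An RTF below 1 means the model processes audio faster than real time. Because fixed per-call overheads are amortized over the input duration, the same model can show a different RTF for different segment lengths.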

Results show that the proposed and Complex-Masking systems achieve on-par performance during far-end only periods: Complex-Masking achieves negligibly better ERLE, and the proposed system achieves negligibly better AECMOS-echo. Regression-U-Net’s performance is inferior to that of the other two systems; most notably, its ERLE is considerably lower than that of the proposed system. Regression-U-Net is also inferior to the other systems during double-talk periods. This performance gap may be due to the model’s low complexity, as it has considerably fewer parameters than Complex-Masking, which may make it hard for the model to properly learn the input-output relations under such extreme conditions. In contrast to far-end only periods, during double-talk the proposed system’s performance is notably superior to that of Complex-Masking, with both higher PESQ and higher AECMOS. Although the proposed system has about three times as many parameters as Complex-Masking, its RTF is significantly lower. Thus, when inference time is a more critical constraint than memory consumption, the proposed system is preferable to Complex-Masking. It is worth noting that the proposed system’s RTF is only slightly larger than Regression-U-Net’s, despite its significantly larger number of parameters and higher memory consumption. This is due to the difference in the systems’ input sizes: the proposed model was trained on 2 s-long segments, whereas Regression-U-Net was trained on shorter segments. Although the proposed system’s architecture allows for variable-size input, it provides the best performance for 2 s-long inputs. Thus, in cases where low memory consumption and short algorithmic delay are high priorities and performance is secondary, Regression-U-Net might be preferable. We also note Complex-Masking’s high RTF despite its relatively small number of parameters, which is due to its complex-valued operations being more time-consuming.

Next, we study the different systems’ performance in different SERs. We focus on far-end only ERLE and double-talk PESQ.

Figure 6a shows the ERLE difference between each system’s output signal and the error signal. Similarly, Figure 6b shows the PESQ difference.

The proposed system’s graphs show its efficiency at lower SERs: both the ERLE difference and the PESQ difference increase as the SER is lowered, and the rate of increase itself grows (the graphs’ slopes are steeper at lower SERs). In other words, the proposed system is more effective at lower SERs. A similar trend can be seen in Regression-U-Net’s performance, although its rate of increase is lower. Complex-Masking is more comparable to the proposed system: although its ERLE is consistently higher than the proposed system’s ERLE, its ERLE difference increases at a lower rate, and at low SERs the gap between the two graphs becomes negligible. The rate of increase of Complex-Masking’s ERLE difference decreases at lower SERs, while for the proposed system it grows, i.e., the proposed system is more effective at lower SERs than Complex-Masking.

Finally, we compare the performance of the proposed masking architecture (AEC+M, without the DTD) with that of the masking architecture proposed in [28] (Masking-inpainting). Table 5 shows the different performance measures, the number of parameters, the memory consumption, and the RTF of the models.

The results show that the two models perform on par, with negligible differences. However, the proposed model is preferable to Masking-inpainting’s model in terms of memory and running time: Masking-inpainting’s number of parameters and memory consumption are considerably larger than those of AEC+M, and its RTF is an order of magnitude greater than that of AEC+M. This motivates the choice of the proposed masking architecture over the one proposed in [28].