Scene Wireframes Sketching for Drones

Abstract

1. Introduction

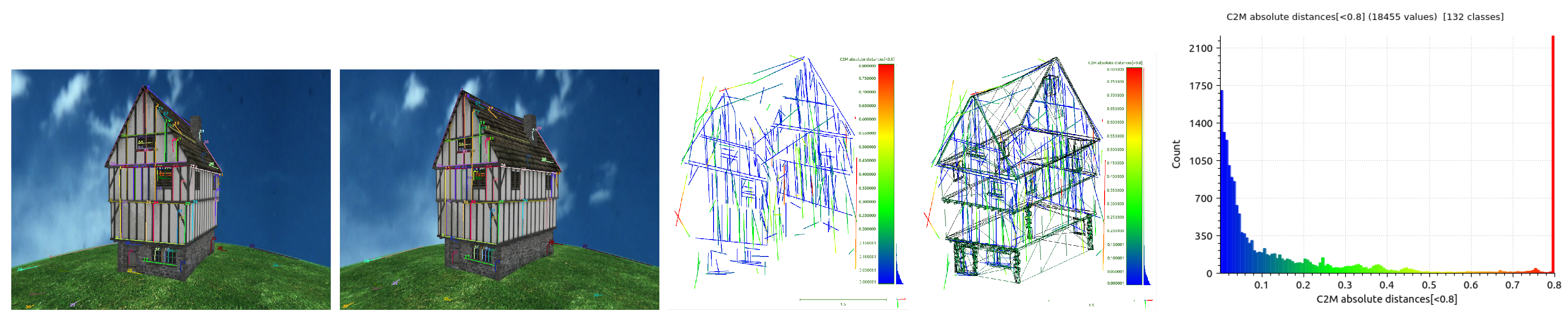

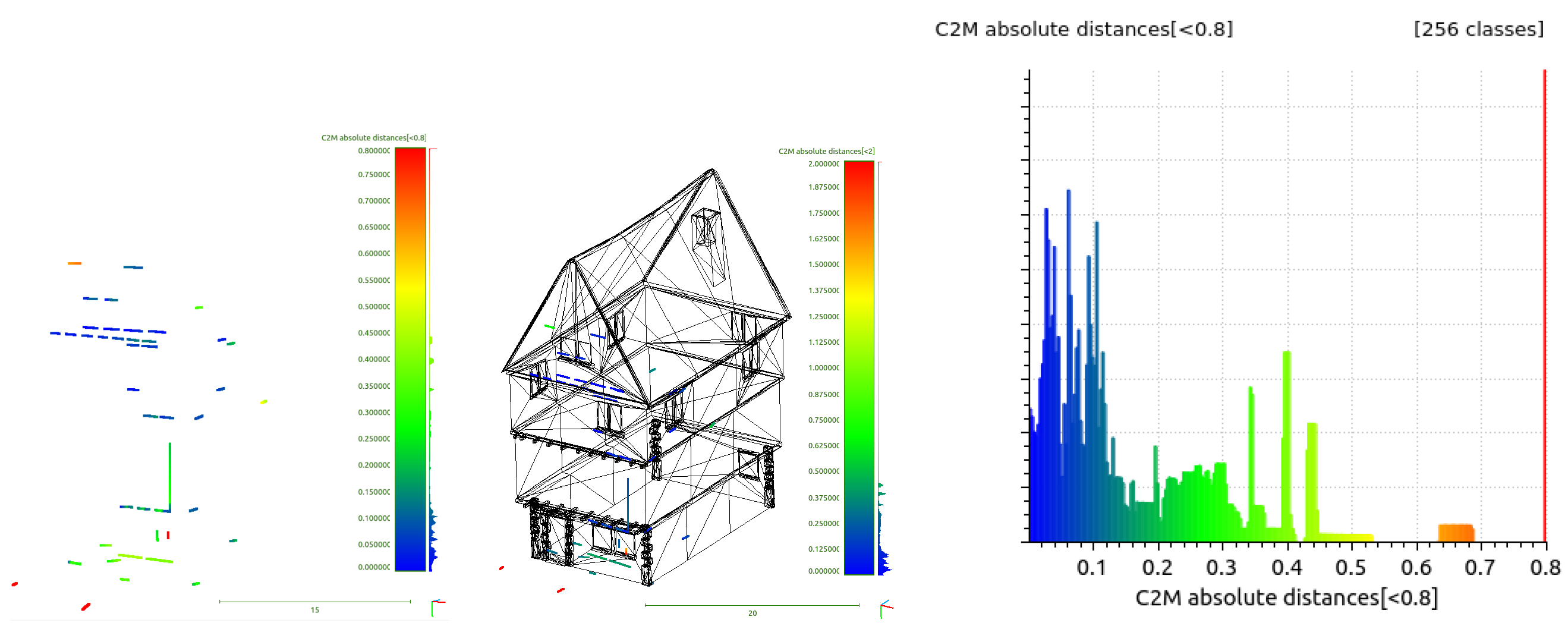

2. Materials and Methods

3. Results

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| SfM | Structure-from-Motion |



© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Santos, R.; Pardo, X.M.; Fdez-Vidal, X.R. Scene Wireframes Sketching for Drones. Proceedings 2018, 2, 1193. https://doi.org/10.3390/proceedings2181193
