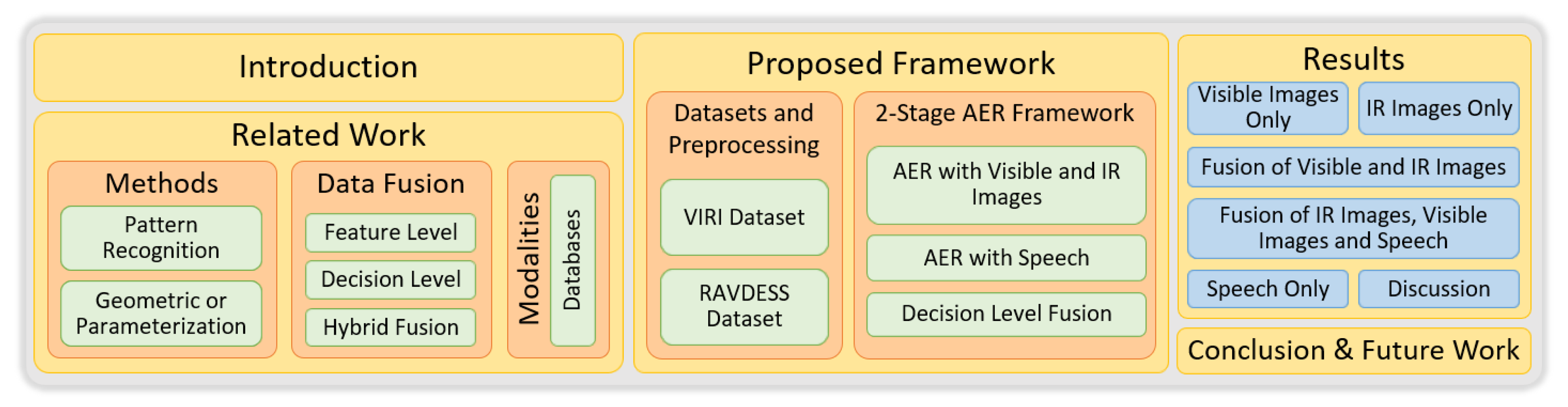

A Multimodal Facial Emotion Recognition Framework through the Fusion of Speech with Visible and Infrared Images

Abstract

1. Introduction

2. Related Work

2.1. Use of Speech, Visible, and Infrared Images

2.2. Emotion Identification

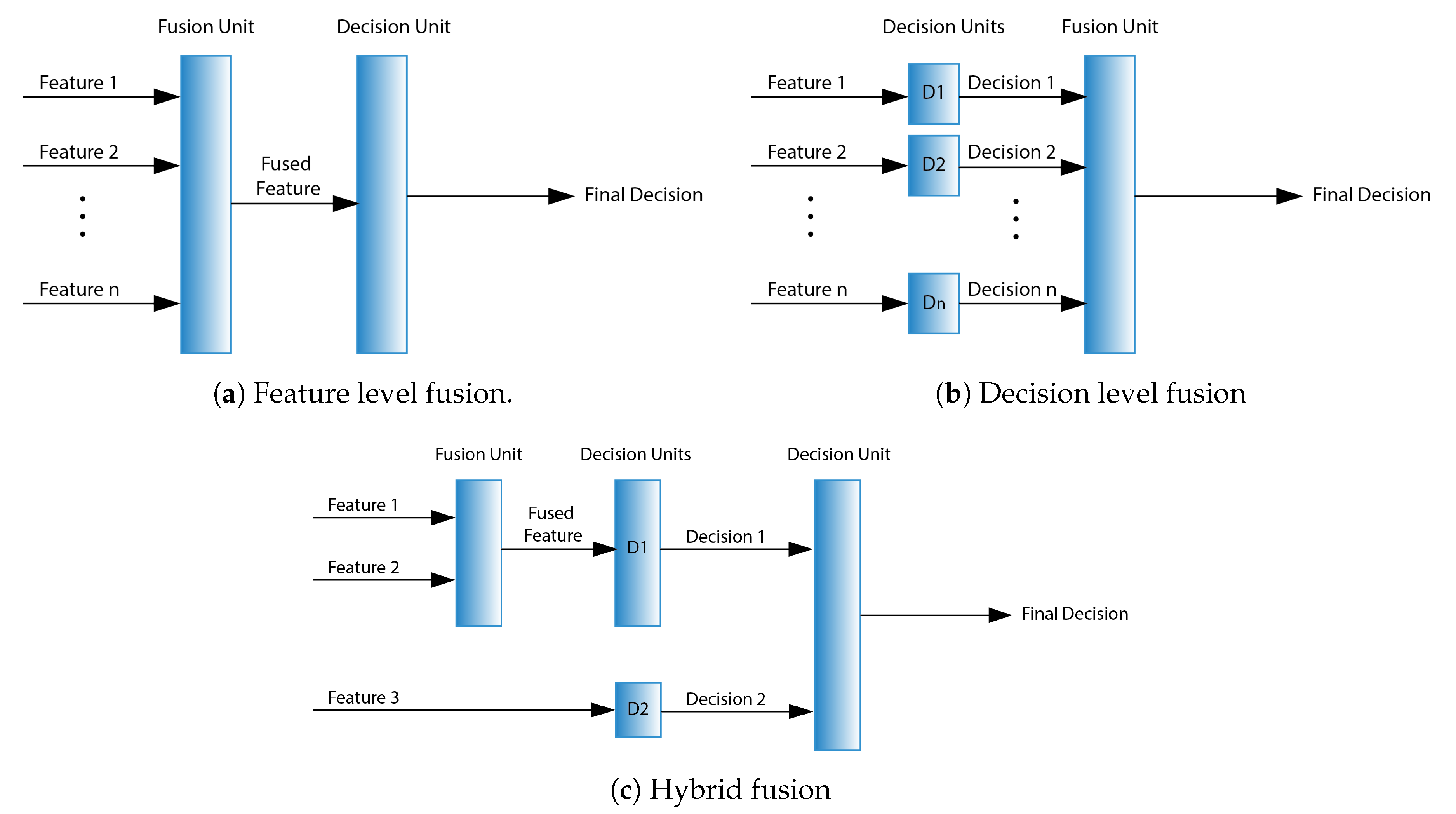

2.3. Data Fusion

2.3.1. Feature Level

2.3.2. Decision Level

2.3.3. Hybrid Fusion

3. Proposed Framework

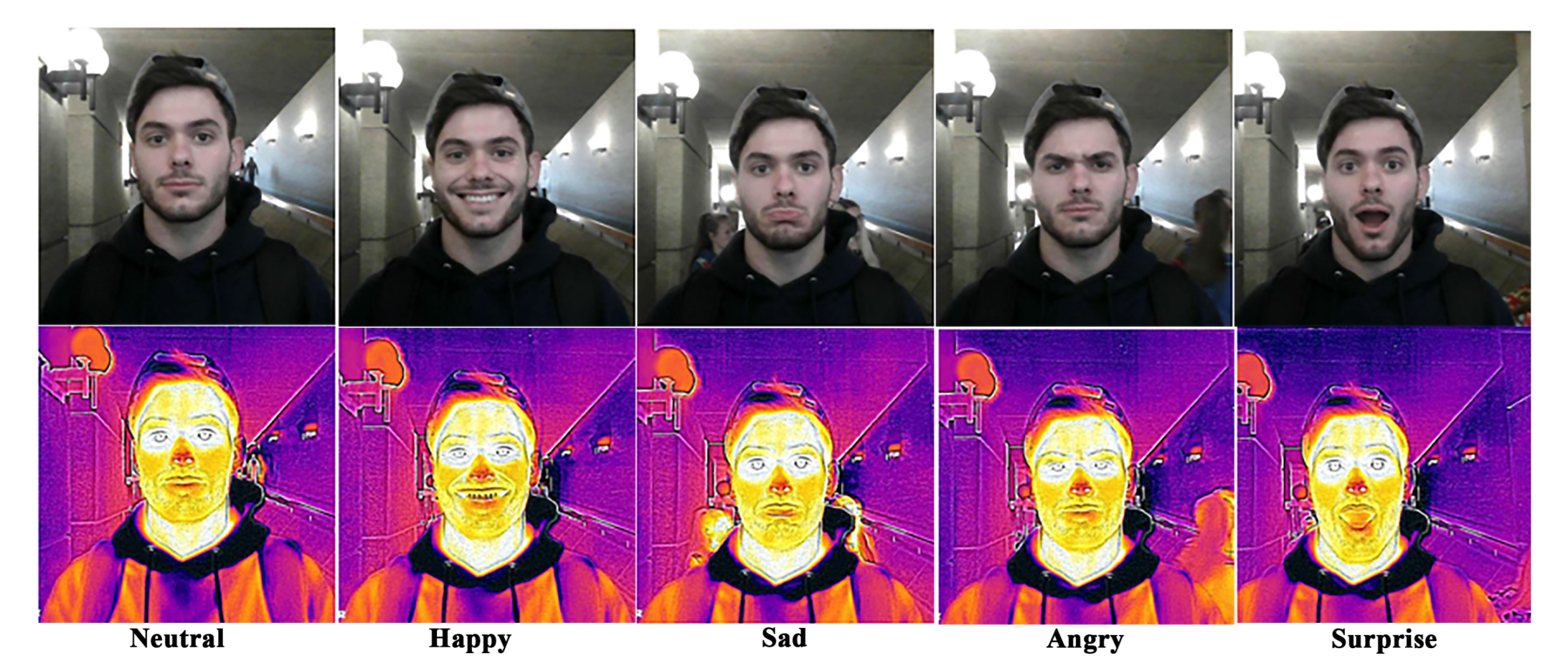

3.1. Datasets and Pre-Processing

3.1.1. VIRI Dataset

3.1.2. RAVDESS Dataset

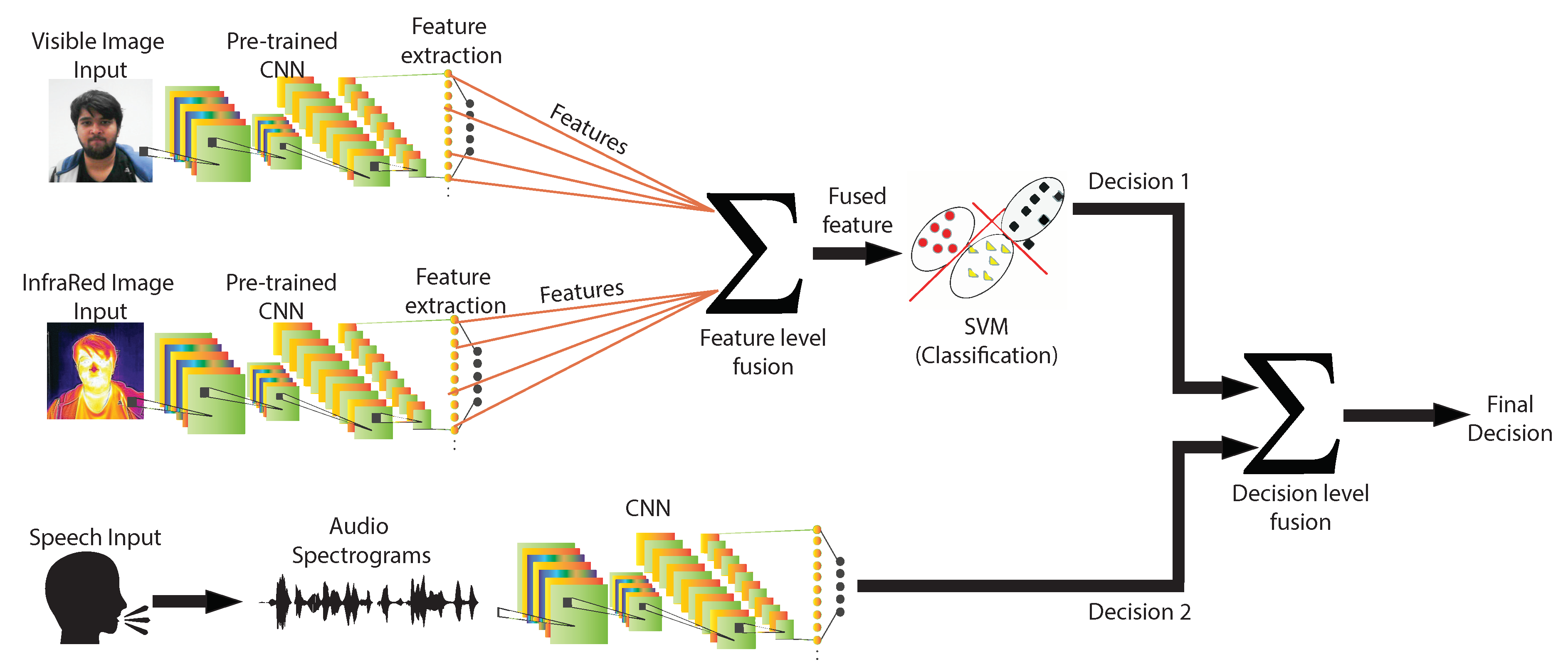

3.2. Two-Stage AER Framework

3.2.1. Stage Ia: AER with Visible and IR Images

- Choose a pre-trained network.

- Replace the final layers to adapt to the new dataset.

- Tweak the values of hyperparameters to achieve higher accuracy and train.
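The three steps above can be sketched with a framework-free toy. This is only an illustration under stated assumptions, not the network or settings used in this work: `frozen_backbone` is a hypothetical stand-in for a pre-trained network whose weights stay fixed, `new_head` plays the role of the replaced final layer, and `lr` and `epochs` are the hyperparameters being tuned. All names and the toy data are invented for the example.

```python
import math
import random


def frozen_backbone(x):
    # Step 1: stand-in for a pre-trained network (hypothetical toy feature
    # map). Nothing in this function is ever updated during training.
    a, b = x
    return [math.tanh(a), math.tanh(b), math.tanh(a + b), 1.0]


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def new_head(num_features, num_classes, rng):
    # Step 2: a fresh final layer sized for the classes of the new dataset.
    return [[rng.uniform(-0.01, 0.01) for _ in range(num_features)]
            for _ in range(num_classes)]


def predict_proba(head, x):
    f = frozen_backbone(x)
    return softmax([sum(w * v for w, v in zip(row, f)) for row in head])


def predict(head, x):
    p = predict_proba(head, x)
    return max(range(len(p)), key=p.__getitem__)


def train_head(head, data, lr, epochs):
    # Step 3: train only the new head by stochastic gradient descent on the
    # cross-entropy loss; lr and epochs are the hyperparameters to tune.
    for _ in range(epochs):
        for x, y in data:
            f = frozen_backbone(x)
            p = softmax([sum(w * v for w, v in zip(row, f)) for row in head])
            for c, row in enumerate(head):
                g = p[c] - (1.0 if c == y else 0.0)  # dLoss/dlogit_c
                for j in range(len(row)):
                    row[j] -= lr * g * f[j]
    return head


def accuracy(head, data):
    return sum(1 for x, y in data if predict(head, x) == y) / len(data)


def make_toy_data(rng, per_class=20):
    # Three well-separated 2-D clusters standing in for emotion classes.
    centers = [(-2.0, -2.0), (2.0, -2.0), (0.0, 2.0)]
    data = []
    for label, (cx, cy) in enumerate(centers):
        for _ in range(per_class):
            data.append(((rng.gauss(cx, 0.3), rng.gauss(cy, 0.3)), label))
    rng.shuffle(data)
    return data
```

A typical call would be `head = train_head(new_head(4, 3, rng), make_toy_data(rng), lr=0.5, epochs=30)` with `rng = random.Random(0)`. In practice the backbone would be a real pre-trained CNN loaded through a deep-learning framework, and some of its later layers might also be fine-tuned rather than frozen.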

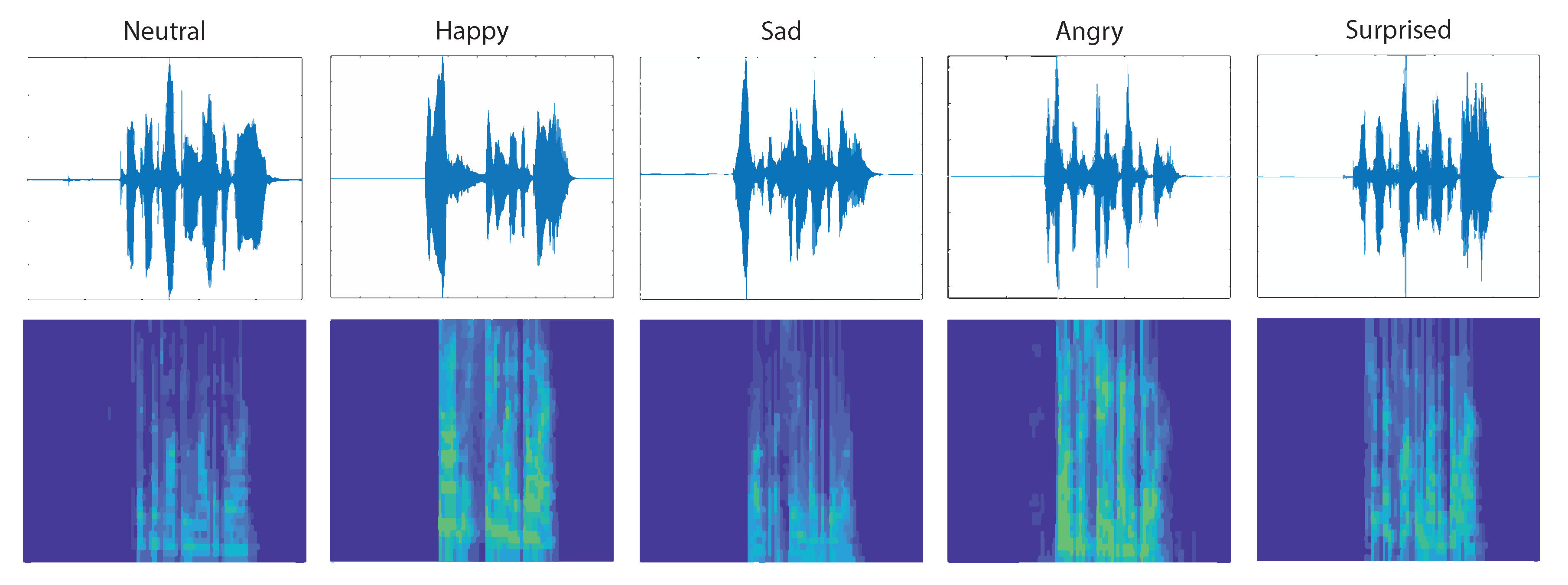

3.2.2. Stage Ib: AER with Speech

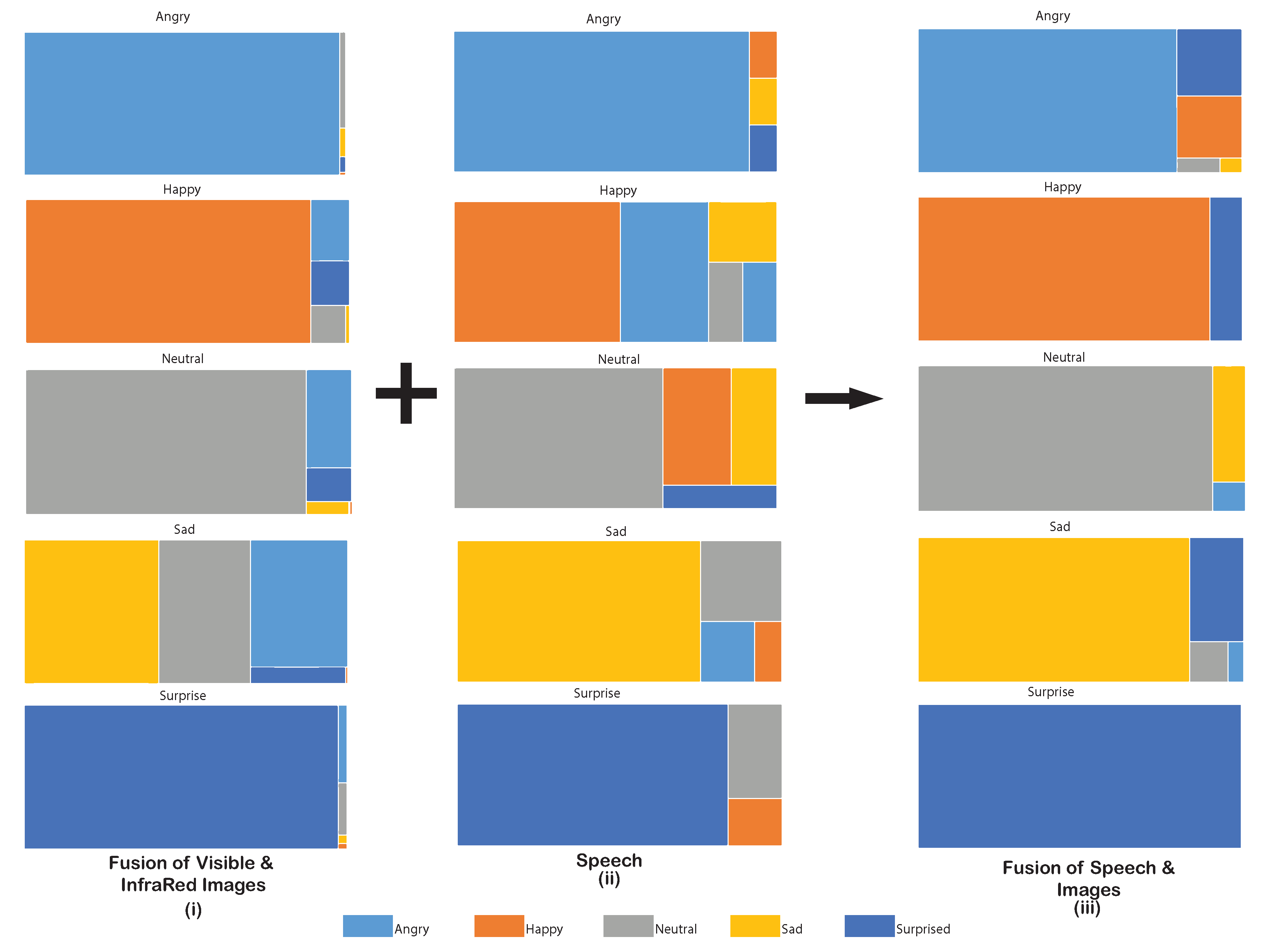

3.2.3. Stage II: Decision Level Fusion

4. Results

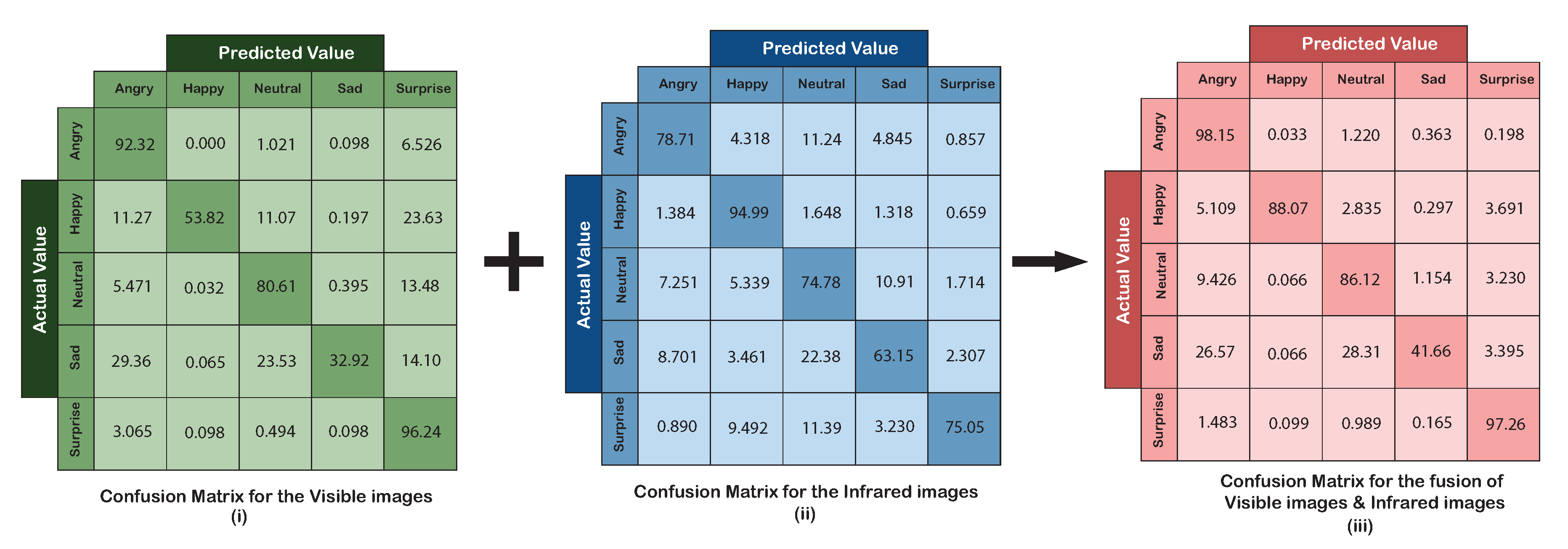

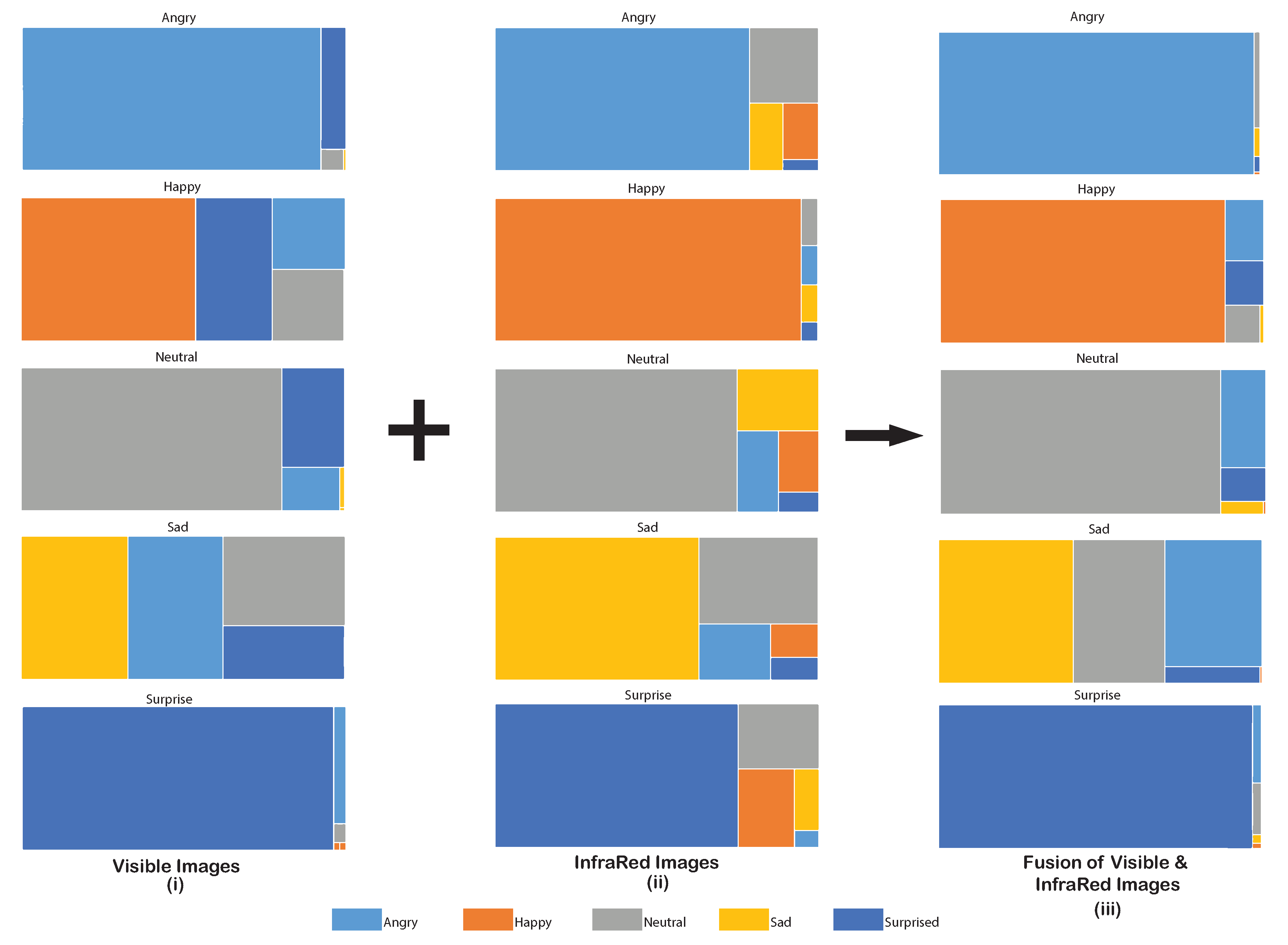

4.1. Visible Images Only

4.2. IR Images Only

4.3. Fusion of IR and Visible Images

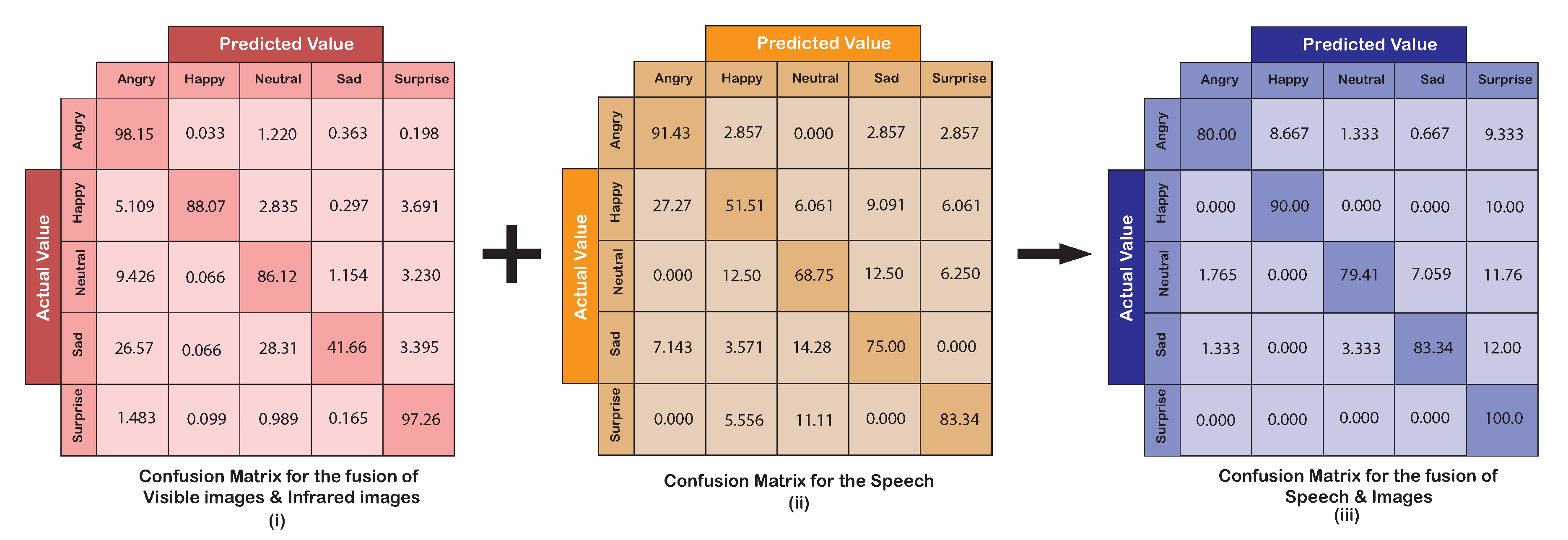

4.4. Speech Only

4.5. Fusion of IR Images, Visible Images, and Speech

4.6. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References and Notes

- Ekman, P.; Friesen, W.V. Facial Action Coding System; Consulting Psychologists Press, Stanford University: Palo Alto, Santa Clara County, CA, USA, 1977.

- Ekman, P.; Friesen, W.; Hager, J. Facial Action Coding System: The Manual on CD ROM. In A Human Face; Network Information Research Co.: Salt Lake City, UT, USA, 2002.

- Ekman, P.; Friesen, W.V.; Hager, J.C. FACS investigator’s guide. In A Human Face; Network Information Research Co.: Salt Lake City, UT, USA, 2002; p. 96.

- Kahou, S.E.; Bouthillier, X.; Lamblin, P.; Gulcehre, C.; Michalski, V.; Konda, K.; Jean, S.; Froumenty, P.; Dauphin, Y.; Boulanger-Lewandowski, N.; et al. Emonets: Multimodal deep learning approaches for emotion recognition in video. J. Multimodal User Interfaces 2016, 10, 99–111.

- Kim, B.K.; Dong, S.Y.; Roh, J.; Kim, G.; Lee, S.Y. Fusing Aligned and Non-Aligned Face Information for Automatic Affect Recognition in the Wild: A Deep Learning Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 48–57.

- Sun, B.; Li, L.; Wu, X.; Zuo, T.; Chen, Y.; Zhou, G.; He, J.; Zhu, X. Combining feature-level and decision-level fusion in a hierarchical classifier for emotion recognition in the wild. J. Multimodal User Interfaces 2016, 10, 125–137.

- Bahreini, K.; Nadolski, R.; Westera, W. Data Fusion for Real-time Multimodal Emotion Recognition through Webcams and Microphones in E-Learning. Int. J. Hum.-Comput. Interact. 2016, 32, 415–430.

- Xu, C.; Cao, T.; Feng, Z.; Dong, C. Multi-Modal Fusion Emotion Recognition Based on HMM and ANN. In Proceedings of the Contemporary Research on E-business Technology and Strategy, Tianjin, China, 29–31 August 2012; pp. 541–550.

- Alonso-Martín, F.; Malfaz, M.; Sequeira, J.; Gorostiza, J.F.; Salichs, M.A. A multimodal emotion detection system during human–robot interaction. Sensors 2013, 13, 15549–15581.

- Chen, J.; Chen, Z.; Chi, Z.; Fu, H. Emotion recognition in the wild with feature fusion and multiple kernel learning. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; ACM: New York, NY, USA, 2014; pp. 508–513.

- Tzirakis, P.; Trigeorgis, G.; Nicolaou, M.A.; Schuller, B.; Zafeiriou, S. End-to-End Multimodal Emotion Recognition using Deep Neural Networks. arXiv 2017, arXiv:1704.08619.

- Torres, J.M.M.; Stepanov, E.A. Enhanced face/audio emotion recognition: Video and instance level classification using ConvNets and restricted Boltzmann Machines. In Proceedings of the International Conference on Web Intelligence, Leipzig, Germany, 23–27 August 2017; ACM: New York, NY, USA, 2017; pp. 939–946.

- Dobrišek, S.; Gajšek, R.; Mihelič, F.; Pavešić, N.; Štruc, V. Towards efficient multi-modal emotion recognition. Int. J. Adv. Robot. Syst. 2013, 10, 53.

- Kim, Y.; Lee, H.; Provost, E.M. Deep learning for robust feature generation in audiovisual emotion recognition. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 3687–3691.

- Hossain, M.S.; Muhammad, G.; Alhamid, M.F.; Song, B.; Al-Mutib, K. Audio-visual emotion recognition using big data towards 5G. Mob. Netw. Appl. 2016, 21, 753–763.

- Hossain, M.S.; Muhammad, G. Audio-visual emotion recognition using multi-directional regression and Ridgelet transform. J. Multimodal User Interfaces 2016, 10, 325–333.

- Noroozi, F.; Marjanovic, M.; Njegus, A.; Escalera, S.; Anbarjafari, G. Fusion of classifier predictions for audio-visual emotion recognition. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 61–66.

- Chen, J.; Chen, Z.; Chi, Z.; Fu, H. Facial expression recognition in video with multiple feature fusion. IEEE Trans. Affect. Comput. 2016, 9, 38–50.

- Yan, J.; Zheng, W.; Xu, Q.; Lu, G.; Li, H.; Wang, B. Sparse Kernel Reduced-Rank Regression for Bimodal Emotion Recognition From Facial Expression and Speech. IEEE Trans. Multimed. 2016, 18, 1319–1329.

- Kim, Y. Exploring sources of variation in human behavioral data: Towards automatic audio-visual emotion recognition. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 748–753.

- Pei, E.; Yang, L.; Jiang, D.; Sahli, H. Multimodal dimensional affect recognition using deep bidirectional long short-term memory recurrent neural networks. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 208–214.

- Nguyen, D.; Nguyen, K.; Sridharan, S.; Ghasemi, A.; Dean, D.; Fookes, C. Deep spatio-temporal features for multimodal emotion recognition. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 1215–1223.

- Fu, J.; Mao, Q.; Tu, J.; Zhan, Y. Multimodal shared features learning for emotion recognition by enhanced sparse local discriminative canonical correlation analysis. Multimed. Syst. 2019, 25, 451–461.

- Zhang, S.; Zhang, S.; Huang, T.; Gao, W.; Tian, Q. Learning Affective Features with a Hybrid Deep Model for Audio-Visual Emotion Recognition. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 3030–3043.

- Cid, F.; Manso, L.J.; Núnez, P. A Novel Multimodal Emotion Recognition Approach for Affective Human Robot Interaction. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1–9.

- Haq, S.; Jan, T.; Jehangir, A.; Asif, M.; Ali, A.; Ahmad, N. Bimodal Human Emotion Classification in the Speaker-Dependent Scenario; Pakistan Academy of Sciences: Islamabad, Pakistan, 2015; p. 27.

- Gideon, J.; Zhang, B.; Aldeneh, Z.; Kim, Y.; Khorram, S.; Le, D.; Provost, E.M. Wild wild emotion: A multimodal ensemble approach. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; ACM: New York, NY, USA, 2016; pp. 501–505.

- Wang, Y.; Guan, L.; Venetsanopoulos, A.N. Kernel cross-modal factor analysis for information fusion with application to bimodal emotion recognition. IEEE Trans. Multimed. 2012, 14, 597–607.

- Noroozi, F.; Marjanovic, M.; Njegus, A.; Escalera, S.; Anbarjafari, G. Audio-visual emotion recognition in video clips. IEEE Trans. Affect. Comput. 2017, 10, 60–75.

- Afouras, T.; Chung, J.S.; Senior, A.; Vinyals, O.; Zisserman, A. Deep audio-visual speech recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018.

- Albanie, S.; Nagrani, A.; Vedaldi, A.; Zisserman, A. Emotion recognition in speech using cross-modal transfer in the wild. arXiv 2018, arXiv:1808.05561.

- Yan, J.; Zheng, W.; Cui, Z.; Tang, C.; Zhang, T.; Zong, Y. Multi-cue fusion for emotion recognition in the wild. Neurocomputing 2018, 309, 27–35.

- Seng, K.P.; Ang, L.M.; Ooi, C.S. A Combined Rule-Based & Machine Learning Audio-Visual Emotion Recognition Approach. IEEE Trans. Affect. Comput. 2018, 9, 3–13.

- Dhall, A.; Kaur, A.; Goecke, R.; Gedeon, T. Emotiw 2018: Audio-video, student engagement and group-level affect prediction. In Proceedings of the 2018 on International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; ACM: New York, NY, USA, 2018; pp. 653–656.

- Avots, E.; Sapiński, T.; Bachmann, M.; Kamińska, D. Audiovisual emotion recognition in wild. Mach. Vis. Appl. 2018, 30, 975–985.

- Wagner, J.; Andre, E.; Lingenfelser, F.; Kim, J. Exploring fusion methods for multimodal emotion recognition with missing data. IEEE Trans. Affect. Comput. 2011, 2, 206–218.

- Kessous, L.; Castellano, G.; Caridakis, G. Multimodal emotion recognition in speech-based interaction using facial expression, body gesture and acoustic analysis. J. Multimodal User Interfaces 2010, 3, 33–48.

- Ranganathan, H.; Chakraborty, S.; Panchanathan, S. Multimodal emotion recognition using deep learning architectures. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

- Caridakis, G.; Castellano, G.; Kessous, L.; Raouzaiou, A.; Malatesta, L.; Asteriadis, S.; Karpouzis, K. Multimodal emotion recognition from expressive faces, body gestures and speech. In Artificial Intelligence and Innovations 2007: From Theory to Applications; Springer: Boston, MA, USA, 2007; Volume 247, pp. 375–388.

- Ghayoumi, M.; Bansal, A.K. Multimodal architecture for emotion in robots using deep learning. In Proceedings of the Future Technologies Conference (FTC), San Francisco, CA, USA, 6–7 December 2016; pp. 901–907.

- Ghayoumi, M.; Thafar, M.; Bansal, A.K. Towards Formal Multimodal Analysis of Emotions for Affective Computing. In Proceedings of the 22nd International Conference on Distributed Multimedia Systems, Salerno, Italy, 25–26 November 2016; pp. 48–54.

- Filntisis, P.P.; Efthymiou, N.; Koutras, P.; Potamianos, G.; Maragos, P. Fusing Body Posture with Facial Expressions for Joint Recognition of Affect in Child-Robot Interaction. arXiv 2019, arXiv:1901.01805.

- Wang, S.; He, M.; Gao, Z.; He, S.; Ji, Q. Emotion recognition from thermal infrared images using deep Boltzmann machine. Front. Comput. Sci. 2014, 8, 609–618.

- Wang, S.; Liu, Z.; Lv, S.; Lv, Y.; Wu, G.; Peng, P.; Chen, F.; Wang, X. A natural visible and infrared facial expression database for expression recognition and emotion inference. IEEE Trans. Multimed. 2010, 12, 682–691.

- Abidi, B. Dataset 02: IRIS Thermal/Visible Face Database. DOE University Research Program in Robotics under grant DOE-DE-FG02-86NE37968. Available online: http://vcipl-okstate.org/pbvs/bench/ (accessed on 6 August 2020).

- Elbarawy, Y.M.; El-Sayed, R.S.; Ghali, N.I. Local Entropy and Standard Deviation for Facial Expressions Recognition in Thermal Imaging. Bull. Electr. Eng. Inform. 2018, 7, 580–586.

- He, S.; Wang, S.; Lan, W.; Fu, H.; Ji, Q. Facial expression recognition using deep Boltzmann machine from thermal infrared images. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII), Geneva, Switzerland, 2–5 September 2013; pp. 239–244.

- Basu, A.; Routray, A.; Shit, S.; Deb, A.K. Human emotion recognition from facial thermal image based on fused statistical feature and multi-class SVM. In Proceedings of the 2015 Annual IEEE India Conference (INDICON), New Delhi, India, 17–20 December 2015; pp. 1–5.

- Cruz-Albarran, I.A.; Benitez-Rangel, J.P.; Osornio-Rios, R.A.; Morales-Hernandez, L.A. Human emotions detection based on a smart-thermal system of thermographic images. Infrared Phys. Technol. 2017, 81, 250–261.

- Yoshitomi, Y.; Kim, S.I.; Kawano, T.; Kitazoe, T. Effect of sensor fusion for recognition of emotional states using voice, face image and thermal image of face. In Proceedings of the 9th IEEE International Workshop on Robot and Human Interactive Communication, RO-MAN 2000, Osaka, Japan, 27–29 September 2000; pp. 178–183.

- Kitazoe, T.; Kim, S.I.; Yoshitomi, Y.; Ikeda, T. Recognition of emotional states using voice, face image and thermal image of face. In Proceedings of the Sixth International Conference on Spoken Language Processing, Beijing, China, 16–20 October 2000.

- Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A multimodal database for affect recognition and implicit tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

- Caridakis, G.; Wagner, J.; Raouzaiou, A.; Curto, Z.; Andre, E.; Karpouzis, K. A multimodal corpus for gesture expressivity analysis. In Multimodal Corpora: Advances in Capturing, Coding and Analyzing Multimodality; LREC: Valletta, Malta, 17–23 May 2010; pp. 80–85.

- Caridakis, G.; Wagner, J.; Raouzaiou, A.; Lingenfelser, F.; Karpouzis, K.; Andre, E. A cross-cultural, multimodal, affective corpus for gesture expressivity analysis. J. Multimodal User Interfaces 2013, 7, 121–134.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. Deap: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Ringeval, F.; Sonderegger, A.; Sauer, J.; Lalanne, D. Introducing the RECOLA multimodal corpus of remote collaborative and affective interactions. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–8.

- O’Reilly, H.; Pigat, D.; Fridenson, S.; Berggren, S.; Tal, S.; Golan, O.; Bölte, S.; Baron-Cohen, S.; Lundqvist, D. The EU-emotion stimulus set: A validation study. Behav. Res. Methods 2016, 48, 567–576.

- Martin, O.; Kotsia, I.; Macq, B.; Pitas, I. The eNTERFACE’05 audio-visual emotion database. In Proceedings of the 22nd International Conference on Data Engineering Workshops, Atlanta, GA, USA, 3–7 April 2006; p. 8.

- Wang, Y.; Guan, L. Recognizing human emotional state from audiovisual signals. IEEE Trans. Multimed. 2008, 10, 936–946.

- Valstar, M.F.; Jiang, B.; Mehu, M.; Pantic, M.; Scherer, K. The first facial expression recognition and analysis challenge. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition and Workshops (FG 2011), Santa Barbara, CA, USA, 21–25 March 2011; pp. 921–926.

- Bänziger, T.; Scherer, K.R. Introducing the geneva multimodal emotion portrayal (gemep) corpus. In Blueprint for Affective Computing: A sourcebook; Oxford University Press: Oxford, UK, 2010; pp. 271–294.

- Haq, S.; Jackson, P.J. Multimodal emotion recognition. In Machine Audition: Principles, Algorithms and Systems; University of Surrey: Guildford, UK, 2010; pp. 398–423.

- Valstar, M.; Schuller, B.; Smith, K.; Eyben, F.; Jiang, B.; Bilakhia, S.; Schnieder, S.; Cowie, R.; Pantic, M. AVEC 2013: The continuous audio/visual emotion and depression recognition challenge. In Proceedings of the 3rd ACM International Workshop on Audio/Visual Emotion Challenge, Barcelona, Spain, 21 October 2013; ACM: New York, NY, USA, 2013; pp. 3–10.

- Valstar, M.; Schuller, B.; Smith, K.; Almaev, T.; Eyben, F.; Krajewski, J.; Cowie, R.; Pantic, M. Avec 2014: 3d dimensional affect and depression recognition challenge. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, Orlando, FL, USA, 3–7 November 2014; pp. 1–8.

- Zhalehpour, S.; Onder, O.; Akhtar, Z.; Erdem, C.E. BAUM-1: A Spontaneous Audio-Visual Face Database of Affective and Mental States. IEEE Trans. Affect. Comput. 2017, 8, 300–313.

- Erdem, C.E.; Turan, C.; Aydin, Z. BAUM-2: A multilingual audio-visual affective face database. Multimed. Tools Appl. 2015, 74, 7429–7459.

- Douglas-Cowie, E.; Cowie, R.; Sneddon, I.; Cox, C.; Lowry, O.; Mcrorie, M.; Martin, J.C.; Devillers, L.; Abrilian, S.; Batliner, A.; et al. The HUMAINE database: Addressing the collection and annotation of naturalistic and induced emotional data. In Affective Computing and Intelligent Interaction; Springer: Berlin/Heidelberg, Germany, 2007; pp. 488–500.

- Grimm, M.; Kroschel, K.; Narayanan, S. The Vera am Mittag German audio-visual emotional speech database. In Proceedings of the 2008 IEEE International Conference on Multimedia and Expo, Hannover, Germany, 23–26 June 2008; pp. 865–868.

- McKeown, G.; Valstar, M.; Cowie, R.; Pantic, M.; Schroder, M. The semaine database: Annotated multimodal records of emotionally colored conversations between a person and a limited agent. IEEE Trans. Affect. Comput. 2012, 3, 5–17.

- McKeown, G.; Valstar, M.F.; Cowie, R.; Pantic, M. The SEMAINE corpus of emotionally coloured character interactions. In Proceedings of the 2010 IEEE International Conference on Multimedia and Expo (ICME), Suntec City, Singapore, 19–23 July 2010; pp. 1079–1084.

- Gunes, H.; Pantic, M. Dimensional emotion prediction from spontaneous head gestures for interaction with sensitive artificial listeners. In Intelligent Virtual Agents; Springer: Berlin/Heidelberg, Germany, 2010; pp. 371–377.

- Metallinou, A.; Yang, Z.; Lee, C.c.; Busso, C.; Carnicke, S.; Narayanan, S. The USC CreativeIT database of multimodal dyadic interactions: From speech and full body motion capture to continuous emotional annotations. Lang. Resour. Eval. 2016, 50, 497–521.

- Chang, C.M.; Lee, C.C. Fusion of multiple emotion perspectives: Improving affect recognition through integrating cross-lingual emotion information. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5820–5824.

- Dailey, M.N.; Joyce, C.; Lyons, M.J.; Kamachi, M.; Ishi, H.; Gyoba, J.; Cottrell, G.W. Evidence and a computational explanation of cultural differences in facial expression recognition. Emotion 2010, 10, 874.

- Lyons, M.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding facial expressions with gabor wavelets. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 200–205.

- Lyons, M.J.; Budynek, J.; Akamatsu, S. Automatic classification of single facial images. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 1357–1362.

- Kanade, T.; Cohn, J.F.; Tian, Y. Comprehensive database for facial expression analysis. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 46–53.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Burkhardt, F.; Paeschke, A.; Rolfes, M.; Sendlmeier, W.F.; Weiss, B. A database of german emotional speech. In Proceedings of the Interspeech, Lisbon, Portugal, 4–8 September 2005; Volume 5, pp. 1517–1520.

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting large, richly annotated facial-expression databases from movies. IEEE Multimed. 2012, 1, 34–41.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the International Conference on Neural Information Processing, Daegu, Korea, 3–7 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep learning for emotion recognition on small datasets using transfer learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 3–13 November 2015; ACM: New York, NY, USA, 2015; pp. 443–449.

- Dhall, A.; Goecke, R.; Sebe, N.; Gedeon, T. Emotion recognition in the wild. In Journal on Multimodal User Interfaces; Springer Nature: Cham, Switzerland, 2016; Volume 10, pp. 95–97.

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335.

- Mehta, D.; Siddiqui, M.F.H.; Javaid, A.Y. Facial Emotion Recognition: A Survey and Real-World User Experiences in Mixed Reality. Sensors 2018, 18, 416.

- Gao, Y.; Hendricks, L.A.; Kuchenbecker, K.J.; Darrell, T. Deep learning for tactile understanding from visual and haptic data. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 536–543.

- Pramerdorfer, C.; Kampel, M. Facial Expression Recognition using Convolutional Neural Networks: State of the Art. arXiv 2016, arXiv:1612.02903.

- Sun, B.; Cao, S.; Li, L.; He, J.; Yu, L. Exploring multimodal visual features for continuous affect recognition. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge, Amsterdam, The Netherlands, 12–16 October 2016; ACM: New York, NY, USA, 2016; pp. 83–88.

- Keren, G.; Kirschstein, T.; Marchi, E.; Ringeval, F.; Schuller, B. End-to-end learning for dimensional emotion recognition from physiological signals. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 985–990.

- Zhang, S.; Zhang, S.; Huang, T.; Gao, W. Multimodal Deep Convolutional Neural Network for Audio-Visual Emotion Recognition. In Proceedings of the 2016 ACM on International Conference on Multimedia Retrieval, New York, NY, USA, 6–9 June 2016; ACM: New York, NY, USA, 2016; pp. 281–284.

- Wen, G.; Hou, Z.; Li, H.; Li, D.; Jiang, L.; Xun, E. Ensemble of Deep Neural Networks with Probability-Based Fusion for Facial Expression Recognition. Cogn. Comput. 2017, 9, 597–610.

- Cho, J.; Pappagari, R.; Kulkarni, P.; Villalba, J.; Carmiel, Y.; Dehak, N. Deep neural networks for emotion recognition combining audio and transcripts. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018; pp. 247–251.

- Gu, Y.; Chen, S.; Marsic, I. Deep Multimodal Learning for Emotion Recognition in Spoken Language. arXiv 2018, arXiv:1802.08332.

- Majumder, A.; Behera, L.; Subramanian, V.K. Emotion recognition from geometric facial features using self-organizing map. Pattern Recognit. 2014, 47, 1282–1293.

- Kotsia, I.; Pitas, I. Facial expression recognition in image sequences using geometric deformation features and support vector machines. IEEE Trans. Image Process. 2007, 16, 172–187.

- Tian, Y.I.; Kanade, T.; Cohn, J.F. Recognizing action units for facial expression analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 97–115.

- Lien, J.J.; Kanade, T.; Cohn, J.F.; Li, C.C. Automated facial expression recognition based on FACS action units. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 390–395.

- Tian, Y.l.; Kanade, T.; Cohn, J.F. Evaluation of Gabor-wavelet-based facial action unit recognition in image sequences of increasing complexity. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21 May 2002; pp. 229–234.

- Lien, J.J.J.; Kanade, T.; Cohn, J.F.; Li, C.C. Detection, tracking, and classification of action units in facial expression. Robot. Auton. Syst. 2000, 31, 131–146.

- Siddiqui, M.F.H.; Javaid, A.Y.; Carvalho, J.D. A Genetic Algorithm Based Approach for Data Fusion at Grammar Level. In Proceedings of the 2017 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2017; pp. 286–291.

- Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M.S. Multimodal fusion for multimedia analysis: A survey. Multimed. Syst. 2010, 16, 345–379.

- Verma, G.K.; Tiwary, U.S. Multimodal fusion framework: A multiresolution approach for emotion classification and recognition from physiological signals. NeuroImage 2014, 102, 162–172.

- Huang, X.; Kortelainen, J.; Zhao, G.; Li, X.; Moilanen, A.; Seppänen, T.; Pietikäinen, M. Multi-modal emotion analysis from facial expressions and electroencephalogram. Comput. Vis. Image Underst. 2016, 147, 114–124.

- Goshvarpour, A.; Abbasi, A.; Goshvarpour, A. Fusion of heart rate variability and pulse rate variability for emotion recognition using lagged poincare plots. Australas Phys. Eng. Sci. Med. 2017, 40, 617–629.

- Gievska, S.; Koroveshovski, K.; Tagasovska, N. Bimodal feature-based fusion for real-time emotion recognition in a mobile context. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 401–407.

- Yoon, S.; Byun, S.; Jung, K. Multimodal Speech Emotion Recognition Using Audio and Text. arXiv 2018, arXiv:1810.04635.

- Hazarika, D.; Gorantla, S.; Poria, S.; Zimmermann, R. Self-attentive feature-level fusion for multimodal emotion detection. In Proceedings of the 2018 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Miami, FL, USA, 10–12 April 2018; pp. 196–201.

- Lee, C.W.; Song, K.Y.; Jeong, J.; Choi, W.Y. Convolutional Attention Networks for Multimodal Emotion Recognition from Speech and Text Data. arXiv 2018, arXiv:1805.06606.

- Majumder, N.; Hazarika, D.; Gelbukh, A.; Cambria, E.; Poria, S. Multimodal sentiment analysis using hierarchical fusion with context modeling. Knowl.-Based Syst. 2018, 161, 124–133.

- Hazarika, D.; Poria, S.; Zadeh, A.; Cambria, E.; Morency, L.P.; Zimmermann, R. Conversational Memory Network for Emotion Recognition in Dyadic Dialogue Videos. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1 (Long Papers), pp. 2122–2132.

- Mencattini, A.; Ringeval, F.; Schuller, B.; Martinelli, E.; Di Natale, C. Continuous monitoring of emotions by a multimodal cooperative sensor system. Procedia Eng. 2015, 120, 556–559.

- Shah, M.; Chakrabarti, C.; Spanias, A. A multi-modal approach to emotion recognition using undirected topic models. In Proceedings of the 2014 IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 1–5 June 2014; pp. 754–757.

- Liang, P.P.; Zadeh, A.; Morency, L.P. Multimodal local-global ranking fusion for emotion recognition. In Proceedings of the 2018 on International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; ACM: New York, NY, USA, 2018; pp. 472–476.

- Yin, Z.; Zhao, M.; Wang, Y.; Yang, J.; Zhang, J. Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Comput. Methods Prog. Biomed. 2017, 140, 93–110.

- Tripathi, S.; Beigi, H. Multi-Modal Emotion recognition on IEMOCAP Dataset using Deep Learning. arXiv 2018, arXiv:1804.05788.

- Dataset 01: NIST Thermal/Visible Face Database 2012.

- Nguyen, H.; Kotani, K.; Chen, F.; Le, B. A thermal facial emotion database and its analysis. In Proceedings of the Pacific-Rim Symposium on Image and Video Technology, Guanajuato, Mexico, 28 October–1 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 397–408.

- Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 22–24 June 2009; pp. 248–255.

- Sun, Q.S.; Zeng, S.G.; Liu, Y.; Heng, P.A.; Xia, D.S. A new method of feature fusion and its application in image recognition. Pattern Recognit. 2005, 38, 2437–2448.

- Haghighat, M.; Abdel-Mottaleb, M.; Alhalabi, W. Fully automatic face normalization and single sample face recognition in unconstrained environments. Expert Syst. Appl. 2016, 47, 23–34.

- Mi, A.; Wang, L.; Qi, J. A Multiple Classifier Fusion Algorithm Using Weighted Decision Templates. Sci. Prog. 2016, 2016, 3943859.

| Database | Modalities |
|---|---|
| Multimodal Analysis of Human Nonverbal Behavior: Human Computer Interfaces (MAHNOB-HCI) [52] | EEG, Face, Audio, Eye Gaze, Body movements |
| Conveying Affectiveness in Leading-edge Living Adaptive Systems (CALLAS) Expressivity Corpus [53,54] | Speech, Facial expressions, Gestures |
| Emotion Face Body Gesture Voice and Physiological signals (emoFBVP) [38] | Face, Speech, Body Gesture, Physiological signals |
| Database for Emotion Analysis using Physiological Signals (DEAP) [55] | EEG, Peripheral physiological signals, Face |
| Remote Collaborative and Affective Interactions (RECOLA) [56] | Speech, Face, ECG, EDA |
| EU Emotion Stimulus [57] | Face, Speech, Body gestures, Contextual social scenes |
| eNTERFACE’05 [58] | Face and Speech |
| Ryerson Multimedia Lab (RML) [59] | Face and Speech |
| Geneva Multimodal Emotion Portrayal-Facial Expression Recognition and Analysis (GEMEP-FERA 2011) [60,61] | Face and Speech |
| Maja Pantic, Michel Valstar and Ioannis Patras (MMI) [38] | Face |
| Surrey Audio-Visual Expressed Emotion (SAVEE) [38,62] | Face and Speech |
| Audio Visual Emotion Challenge (AVEC) [63,64] | Face and Speech |
| Bahcesehir University Multilingual (BAUM-1) [65] | Face and Speech |
| Bahcesehir University Multilingual (BAUM-2) [66] | Face and Speech |
| HUman-MAchine Interaction Network on Emotion (HUMAINE) [67] | Face, Speech, Gestures |
| Vera am Mittag German Audio-Visual Spontaneous Speech (VAM) [68] | Face and Speech |
| Sustained Emotionally colored Machine human Interaction using Nonverbal Expression (SEMAINE) [69,70] | Face and Speech |
| Sensitive Artificial Listener (SAL) [67,71] | Face and Speech |
| The University of Southern California (USC) CreativeIT [72,73] | Speech and Body movements |
| Japanese Female Facial Expressions (JAFFE) [74,75,76] | Face |
| Cohn-Kanade (CK/CK+) [77,78] | Face |
| Berlin [79] | Speech |
| Acted Facial Expression in Wild (AFEW) [80] | Face |
| Facial Expression Recognition (FER2013) [81,82] | Face |
| Static Facial Expressions in the Wild (SFEW) [83] | Face |
| National Taiwan University of Arts (NTUA) [73] | Speech |
| Interactive Emotional dyadic Motion Capture (IEMOCAP) [84] | Face, Speech, Body gestures, Dialogue transcriptions |

| IR DB | # Participants | Emotions | WBK | S/P | A |
|---|---|---|---|---|---|
| IRIS [45] | 30 (28 M, 2 F) | Surprise, Laugh, Anger | No | P | Yes |
| NIST [116] | 600 | Smile, Frown, Surprise | No | P | No |
| NVIE [44] | 215 (157 M, 58 F) | Happy, Sad, Surprise, Fear, Anger, Disgust, Neutral | No | S, P | Yes |
| KTFE [117] | 26 (16 M, 10 F) | Happy, Sad, Surprise, Fear, Anger, Disgust, Neutral | No | S | Yes |
| VIRI | 110 (70 M, 40 F) | Happy, Sad, Surprise, Anger, Neutral | Yes | S | Yes |

| Modality | Accuracy | Recall | Precision | F-Measure |
|---|---|---|---|---|
| V | 71.19% | 0.71 | 0.78 | 0.75 |
| IR | 77.34% | 0.77 | 0.78 | 0.78 |
| V+IR | 82.26% | 0.82 | 0.85 | 0.83 |
| S | 73.28% | 0.73 | 0.72 | 0.72 |
| V+IR+S | 86.36% | 0.86 | 0.88 | 0.87 |
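As a rough cross-check of the table above, the F-measure is conventionally defined as the harmonic mean of precision and recall. The sketch below recomputes it from the rounded recall and precision values in the table. It assumes the standard F1 definition; how the reported scores were averaged across emotion classes is not stated in this section, so the recomputed values only approximate the reported ones. The function name `f_measure` and the `reported` dictionary are illustrative and not part of the original work.

```python
def f_measure(recall: float, precision: float) -> float:
    """Harmonic mean of precision and recall (the standard F1 score)."""
    if recall + precision == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (recall, precision, reported F-measure), copied from the table above.
reported = {
    "V": (0.71, 0.78, 0.75),
    "IR": (0.77, 0.78, 0.78),
    "V+IR": (0.82, 0.85, 0.83),
    "S": (0.73, 0.72, 0.72),
    "V+IR+S": (0.86, 0.88, 0.87),
}

for modality, (recall, precision, f_reported) in reported.items():
    f_recomputed = f_measure(recall, precision)
    print(f"{modality:7s} recomputed={f_recomputed:.3f} reported={f_reported:.2f}")
```

The recomputed values agree with the reported ones to within 0.01. Small differences are expected because the inputs are rounded to two decimals and the reported scores may be per-class averages.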

| Research | Modalities | Dataset | LI | W | Accuracy |
|---|---|---|---|---|---|
| Kahou et al. [4] | Face, Speech | AFEW | No | Yes | 47.67% |
| Kim et al. [5] | Aligned and Non-aligned faces | FER-2013 | No | Yes | 73.73% |
| Sun et al. [6] | Face, Speech | AFEW, FERA-2013, CK | No | Yes | 47.17% |
| Tzirakis et al. [11] | Face, Speech | RECOLA | No | No | Arousal: 0.714, Valence: 0.612 |
| Noroozi et al. [17] | Face, Speech | eNTERFACE’05 | No | No | 99.52% |
| Hossain et al. [16] | Face, Speech | eNTERFACE’05, Berlin | No | No | 83.06% |
| Zhang et al. [90] | Face, Speech | RML | No | No | 74.32% |
| Nguyen et al. [22] | Face, Speech | eNTERFACE’05 | No | No | 89.39% |
| Fu et al. [23] | Face, Speech | eNTERFACE’05 | No | No | 80.1% |
| Ranganathan et al. [38] | Face, Speech, Body gestures, Physiological Signals | emoFBVP, CK, DEAP, MAHNOB-HCI | No | No | 97.3% (CK) |
| Sun et al. [88] | Face, Speech, Phys. signals | RECOLA | No | No | Arousal: 0.683, Valence: 0.642 |
| Yoon et al. [106] | Speech, Text | IEMOCAP | No | No | 71.8% |
| Lee et al. [108] | Speech, Text | CMU-MOSI | No | No | 88.89% |
| Majumder et al. [109] | Face, Speech, Text | CMU-MOSI, IEMOCAP | No | No | 80.0% (CMU-MOSI) |
| Hazarika et al. [110] | Face, Speech, Text | IEMOCAP | No | No | 77.6% |
| Yoshitomi et al. [50] | Visible and Thermal face, Speech | - | Yes | No | 85% |
| Kitazoe et al. [51] | Visible and Thermal face, Speech | - | Yes | No | 85% |
| Our work | Visible and Thermal face, Speech | VIRI | Yes | Yes | 86.36% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Siddiqui, M.F.H.; Javaid, A.Y. A Multimodal Facial Emotion Recognition Framework through the Fusion of Speech with Visible and Infrared Images. Multimodal Technol. Interact. 2020, 4, 46. https://doi.org/10.3390/mti4030046
