Effect of Body Representation Level of an Avatar on Quality of AR-Based Remote Instruction

Abstract

1. Introduction

2. Background

2.1. VR/AR-Based Remote Collaboration/Instruction

2.2. Role of Body Representation in Interpersonal Communication

2.3. Role of Body Representation in Remote Collaboration/Instruction

2.4. Evaluation of Remote Instruction in This Study

3. Experimental Design

3.1. Hypothesis

3.2. Method

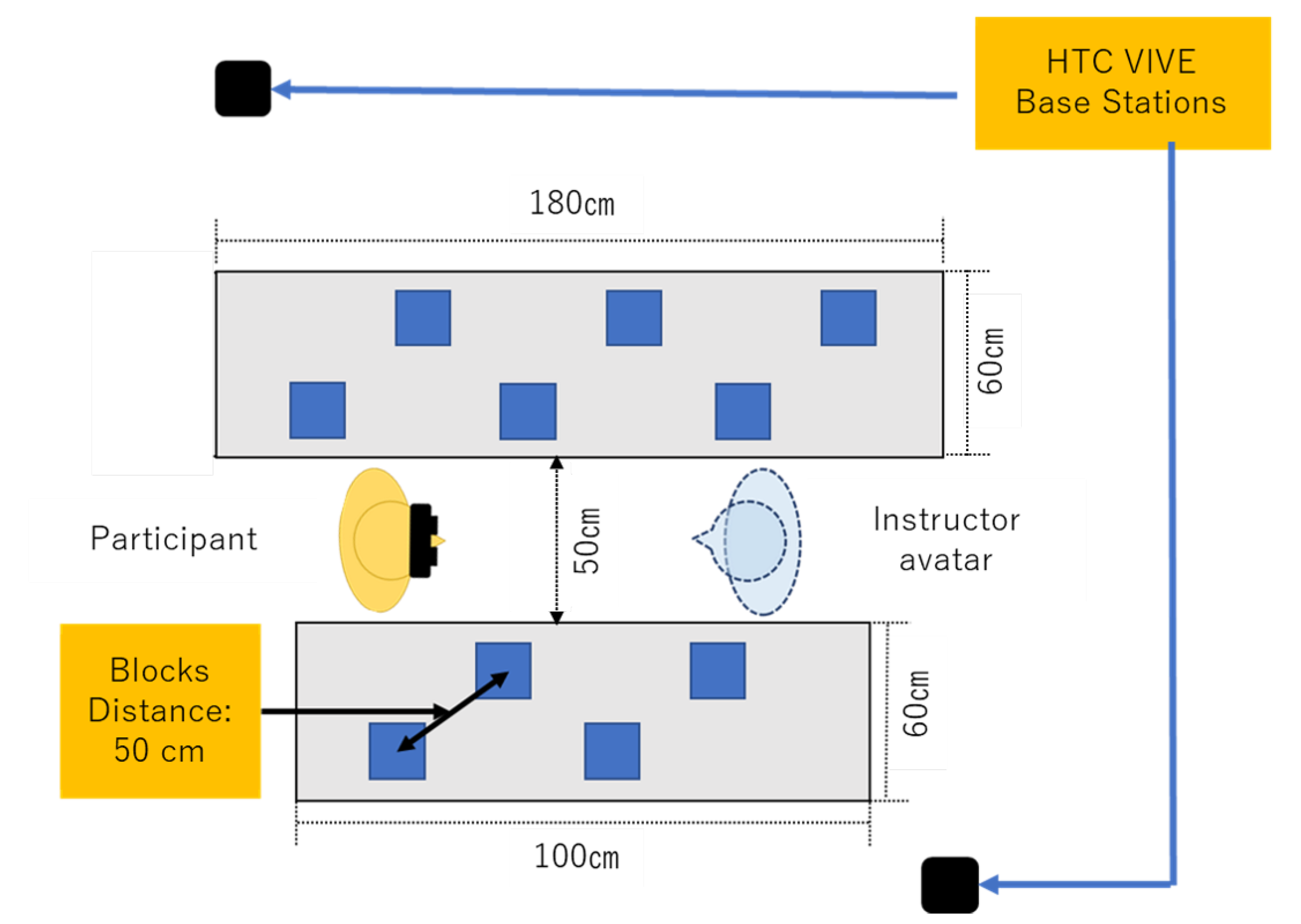

3.3. Material

3.4. Participants

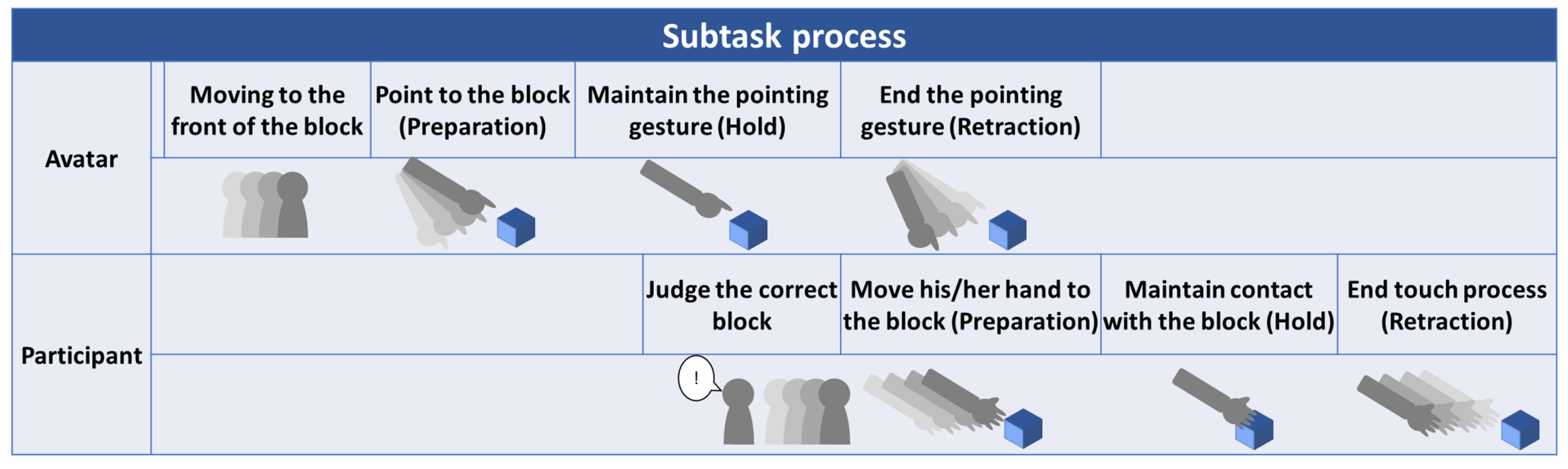

3.5. Procedure

3.6. Measures

3.6.1. Usability

3.6.2. Workload

3.6.3. Performance

3.6.4. Efficiency

3.6.5. Preference

4. Results

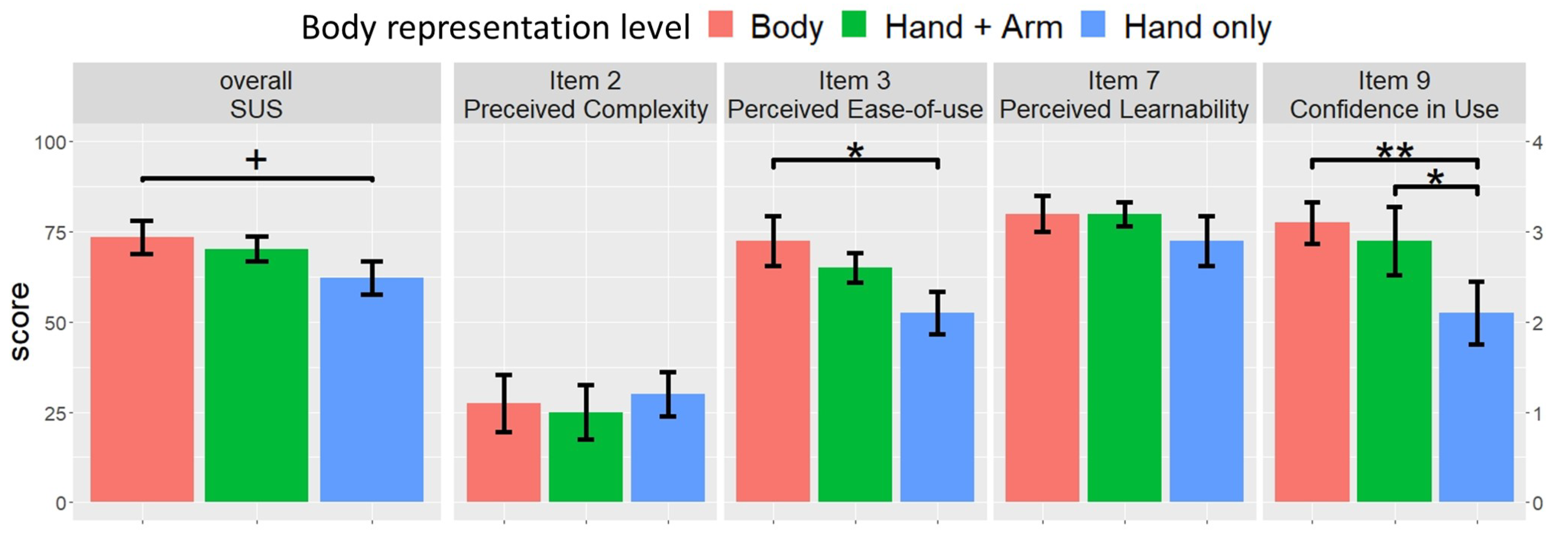

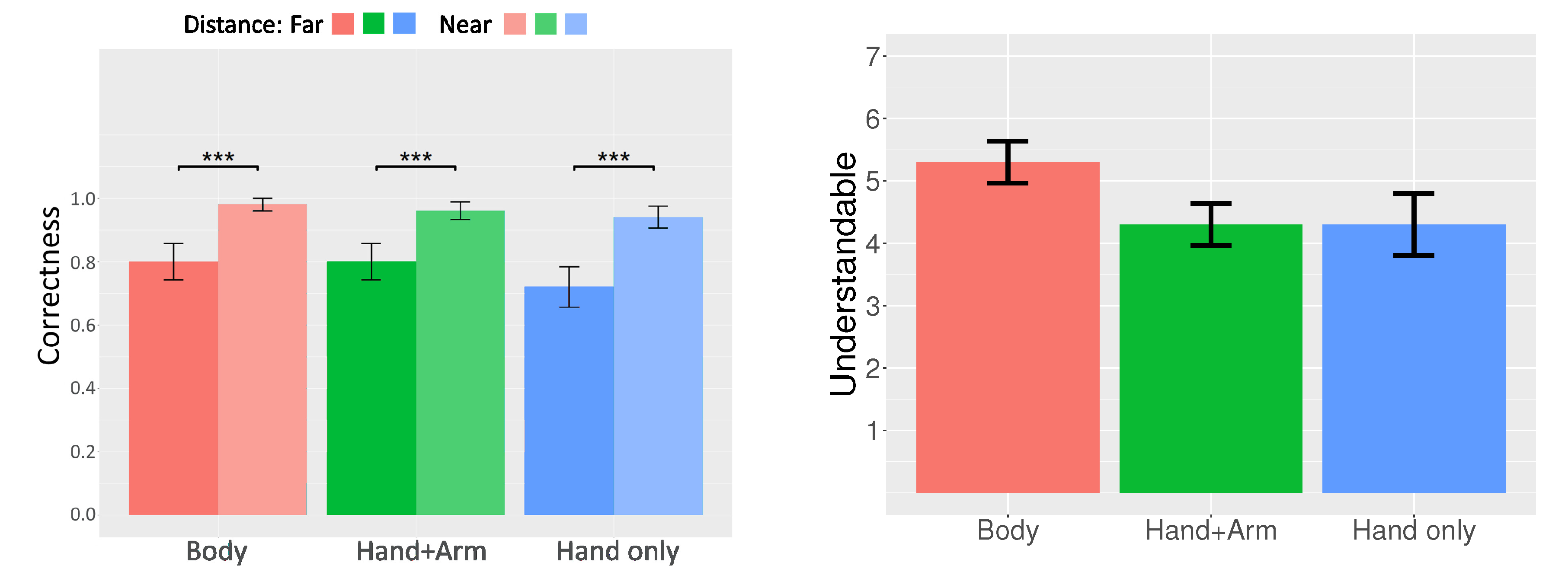

4.1. Usability

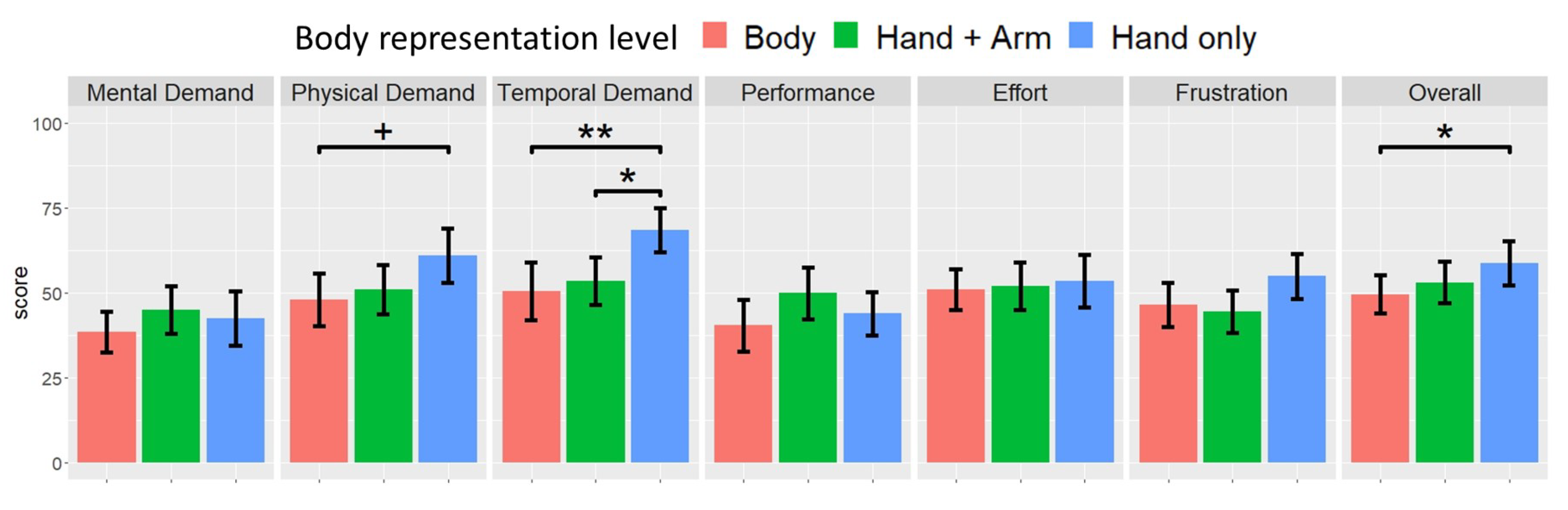

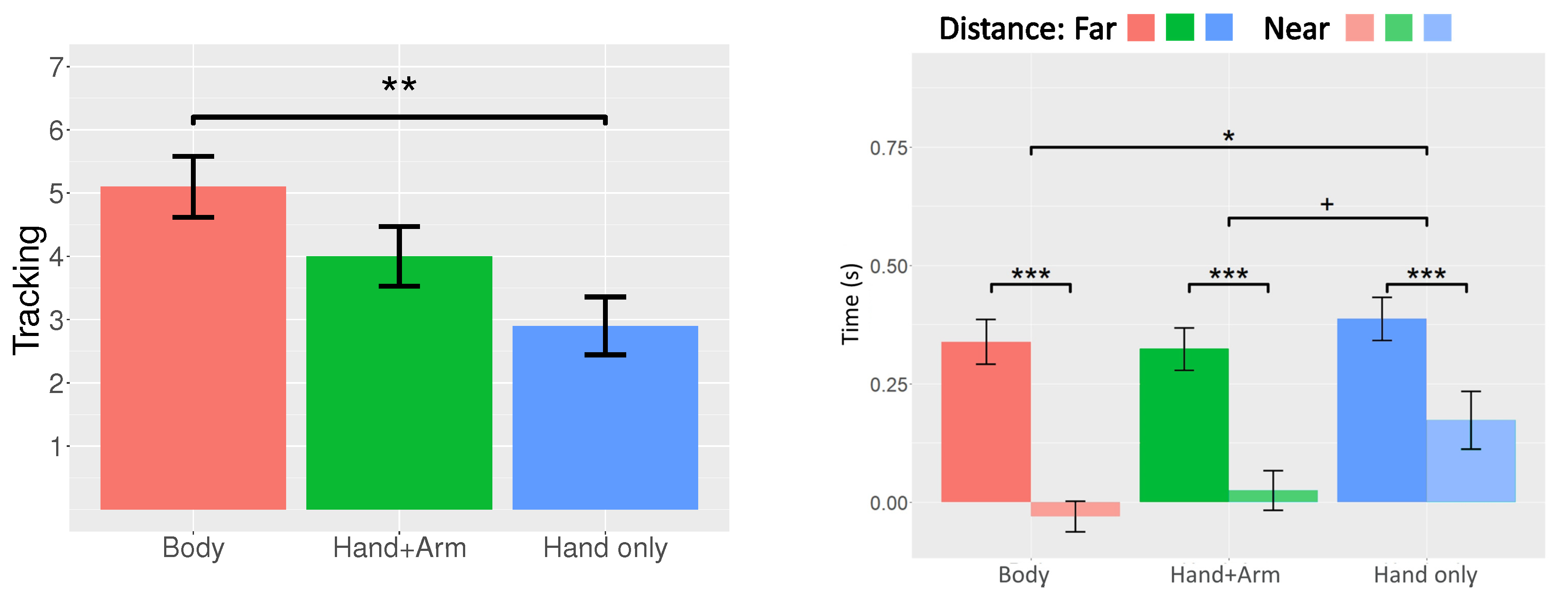

4.2. Workload

4.3. Performance

4.4. Efficiency

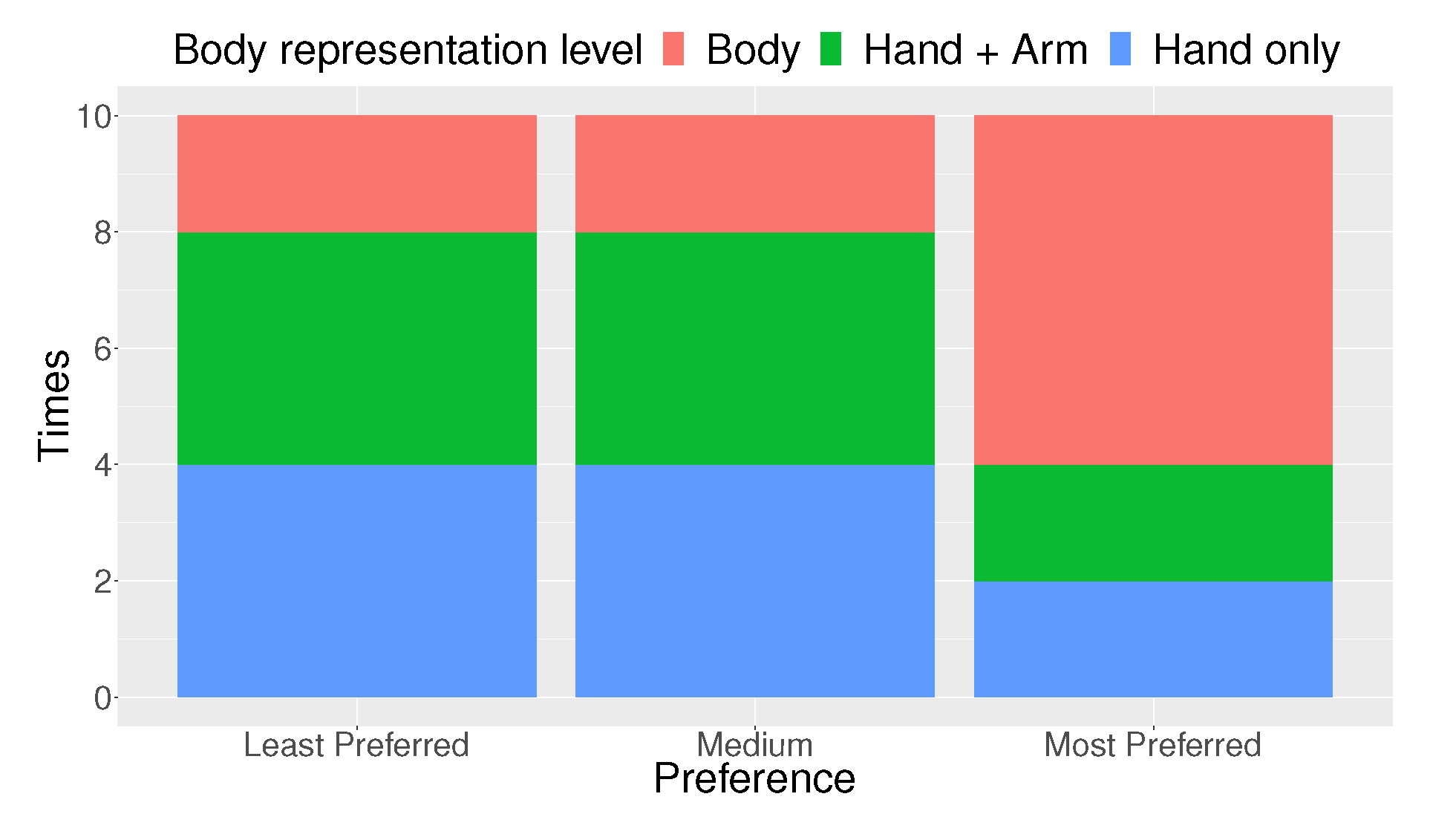

4.5. Preference

5. Discussion

5.1. Usability of Avatars with Different Body Representation Levels

5.2. Quality of Instruction with Different Avatars

5.3. Preference of Different Avatars

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Measure | Bartlett’s K-Squared | p Value |
|---|---|---|
| Mental Demand | 0.79 | 0.673 |
| Physical Demand | 0.082 | 0.96 |
| Temporal Demand | 0.663 | 0.72 |
| Performance | 0.371 | 0.83 |
| Effort | 0.506 | 0.78 |
| Frustration | 0.022 | 0.99 |
| Overall | 0.193 | 0.91 |
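
The p values in the table above appear consistent with referring each Bartlett's K-squared statistic to a chi-square distribution with 2 degrees of freedom, as would be the case when variances are compared across three groups. The group count is an assumption inferred from the reported numbers, not something the table states. A minimal sketch of that check, using the closed-form upper tail for 2 degrees of freedom (the helper name `chi2_sf_df2` is hypothetical):

```python
import math

# Bartlett's K-squared values copied from the table above.
k_squared = {
    "Mental Demand": 0.79,
    "Physical Demand": 0.082,
    "Temporal Demand": 0.663,
    "Performance": 0.371,
    "Effort": 0.506,
    "Frustration": 0.022,
    "Overall": 0.193,
}


def chi2_sf_df2(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom.

    For df = 2 the survival function has the closed form exp(-x / 2).
    ASSUMPTION: df = 2 (three groups); the table does not state this.
    """
    return math.exp(-x / 2.0)


# Recompute the p value for each measure from its K-squared statistic.
p_values = {name: chi2_sf_df2(k) for name, k in k_squared.items()}

for name, p in p_values.items():
    print(f"{name}: K-squared = {k_squared[name]}, p = {p:.3f}")
```

Under this assumption every p value is well above 0.05, so none of the measures would give grounds to reject equal variances across groups.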

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, T.-Y.; Sato, Y.; Otsuki, M.; Kuzuoka, H.; Suzuki, Y. Effect of Body Representation Level of an Avatar on Quality of AR-Based Remote Instruction. Multimodal Technol. Interact. 2020, 4, 3. https://doi.org/10.3390/mti4010003
