1. Introduction

Although the COVID-19 pandemic has slowed down global energy demand growth, demand is estimated to increase by between 4% and 9% from 2019 to 2030 [1]. Furthermore, around 840 million people lack access to electricity [2], and the vast majority (85%) live in rural areas [1,2]. In this context, hybrid renewable energy systems (HRES) constitute an affordable solution for electrifying rural areas, given the portability of these technologies and their usefulness in reducing greenhouse gas emissions [3,4].

Several HRES configurations combining different technologies are analyzed in the literature, the most common being solar photovoltaic (PV) arrays and wind turbines (WT) along with storage batteries [5,6]. For instance, Ref. [7] presents a stand-alone system based on solar PV generators hybridized with internal combustion engines and micro gas turbines. That work focuses on the analysis of the cost of energy (COE), waste heat, duty factor, and life cycle emissions (LCE). Likewise, in [8], a techno-economic and performance analysis of a microgrid integrating solar, wind, diesel, and storage technologies is carried out. The mathematical modeling of these technologies has been widely studied [9,10], and modeling aspects are important considerations to account for in optimization procedures. A comprehensive review of state-of-the-art problem formulations related to solar and wind energy supply, along with the machine and deep learning techniques applied in this field, is presented in [11].

Classical and modern optimization methodologies have been employed for the optimal sizing of HRES [5]. In the literature, these techniques are grouped into three categories: classical methods, metaheuristic techniques, and computer software [12]. Classical methods include linear programming (LP) [13], mixed integer linear programming (MILP) [14], analytical [15], and numerical [16] methods. Metaheuristics involve the use of a single [17] or a hybrid [18] algorithm. Finally, some of the most widely used software tools are HOMER [19] and ViPOR [20]; a complete review of this last category is presented in [19]. Optimal HRES sizing requires accurate, long-term solar radiation forecasting.

The vast majority of solar forecasting techniques focus on the short and medium term [21]. For instance, a probabilistic approach to calculate the clearness index is presented in [22]. Ref. [23] presents a hybrid model that combines variational mode decomposition (VMD) and two convolutional networks (CN) with random forests (RF) or long short-term memory cells (LSTM) to predict from 15 min up to 24 h ahead.

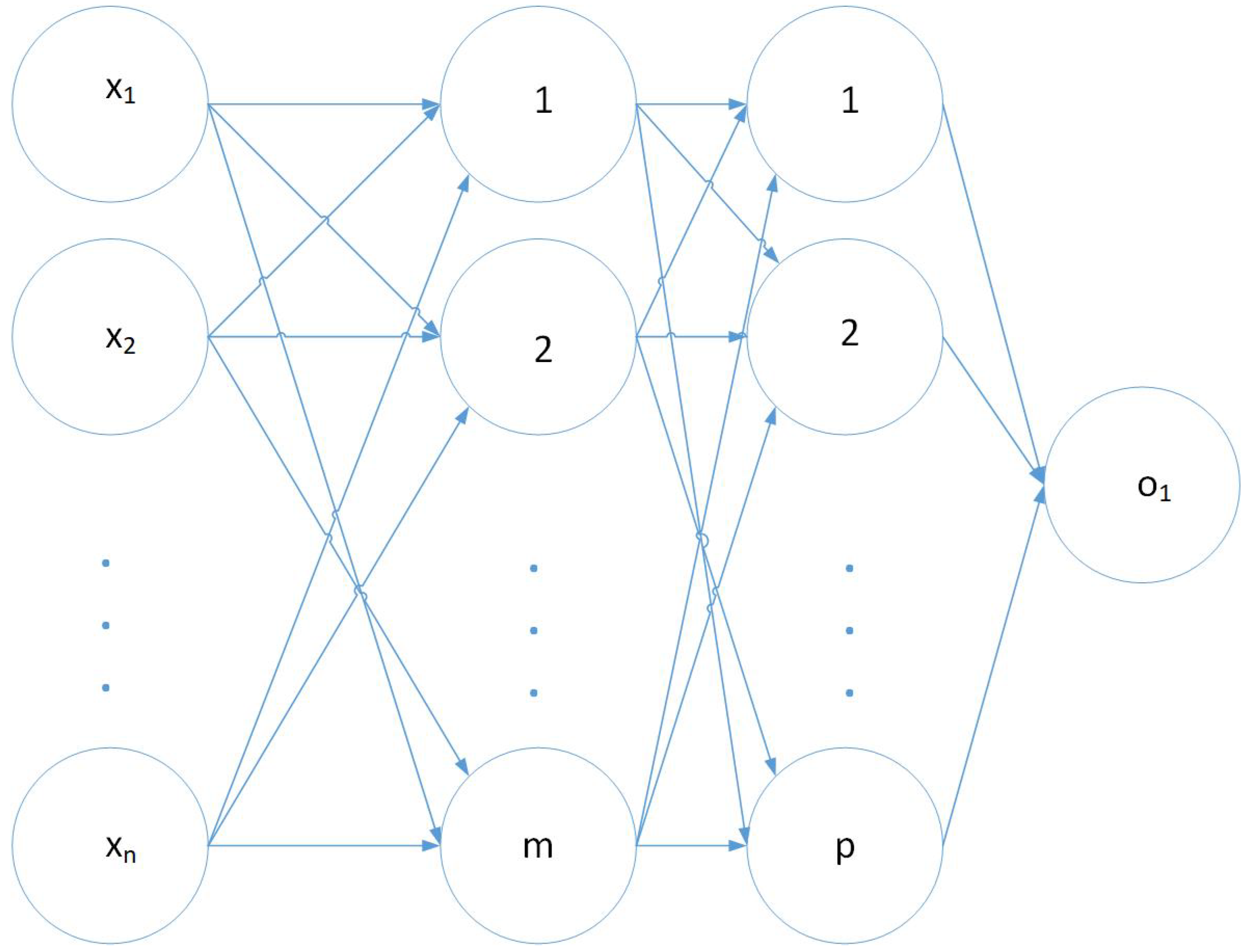

Deep learning (a subfield of machine learning) is concerned with obtaining structural descriptions that contain information from raw data [24]. Four fundamental deep learning architectures are feed forward neural networks (FFNN), convolutional neural networks (CNN), recurrent neural networks (RNN), and recursive neural networks. Automatic feature extraction is one of the main advantages of deep learning over traditional machine learning (ML) methods. Furthermore, traditional ML methods are based on linear combinations of fixed nonlinear basis functions, whereas in feed forward neural networks, the simplest architecture, these basis functions depend on parameters that are adjusted during training [25].
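To make the distinction between fixed and adaptive basis functions concrete, the following minimal sketch contrasts a linear model built on fixed Gaussian basis functions with a one-hidden-layer FFNN whose tanh hidden units play the role of basis functions. The centres, width, and function names are illustrative assumptions, not part of the models used in this work.

```python
import math

# Illustrative (assumed) fixed basis: Gaussian bumps with hand-picked centres and width.
CENTRES = [-1.0, 0.0, 1.0]
WIDTH = 0.5


def fixed_basis(x):
    """Basis functions of a traditional linear-in-parameters model; training never changes them."""
    return [math.exp(-((x - c) ** 2) / (2 * WIDTH ** 2)) for c in CENTRES]


def linear_model(x, weights):
    """Prediction = linear combination of fixed nonlinear basis functions."""
    return sum(w * phi for w, phi in zip(weights, fixed_basis(x)))


def adaptive_basis(x, hidden_w, hidden_b):
    """Hidden units of a one-hidden-layer FFNN, tanh(a_j * x + b_j).
    Their shape depends on hidden_w and hidden_b, which are learned during training."""
    return [math.tanh(a * x + b) for a, b in zip(hidden_w, hidden_b)]


def ffnn(x, hidden_w, hidden_b, out_w, out_b=0.0):
    """Prediction = linear combination of the adaptive (trainable) basis functions."""
    return out_b + sum(v * h for v, h in zip(out_w, adaptive_basis(x, hidden_w, hidden_b)))
```

Training the linear model only changes `weights`; training the FFNN also changes `hidden_w` and `hidden_b`, so the basis functions themselves move to fit the data.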

A machine learning method, random forest regression (RFR), is used in [26] to forecast solar irradiance three years ahead in order to quantify energy potentials. Four deep learning models (long short-term memory cells (LSTM), gated recurrent units (GRU), recurrent neural networks (RNN), and feed forward neural networks (FFNN)) and one machine learning model (support vector regression (SVR)) were implemented and compared in [27] to predict hourly and daily solar radiation one year ahead. According to their results, the state-of-the-art models outperformed the traditional model used as a benchmark (RFR). The autoregressive integrated moving average (ARIMA) model was compared with RFR, neural networks, linear regression, and support vector machines in [28]; the results suggest that ARIMA performs better than the other approaches. Seasonal ARIMA has also been applied to short-term GHI forecasting [30]. A comparison among deep learning models only is presented in [29]. In that work, GRU is proposed for forecasting one year of hourly and daily solar radiation, with better performance than LSTM, RNN, FFNN, and SVR. Finally, transfer learning has been employed for locations where historical data are scarce and too expensive or complex to collect, by refining recurrent neural network-based models trained at other locations with abundant data [31]. Among the works mentioned, the use of RNNs is of particular importance.

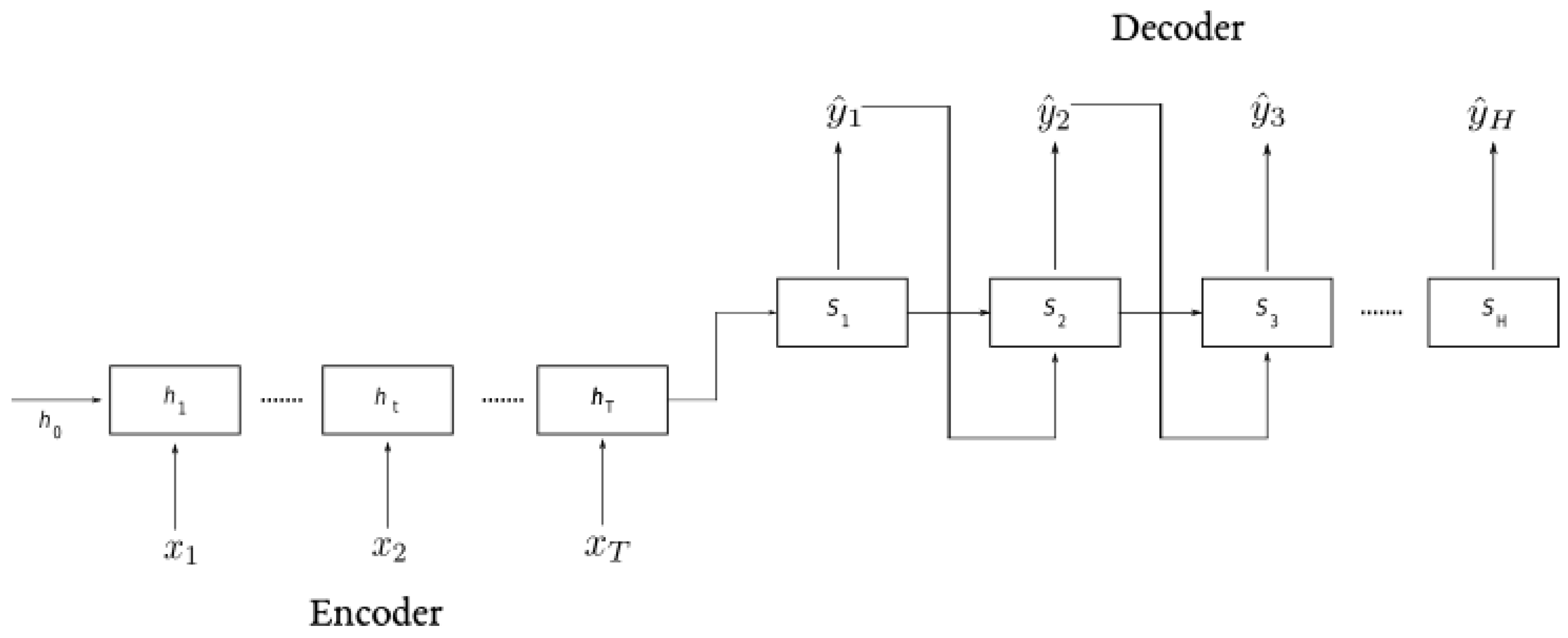

RNNs have recently proven competitive against traditional statistical models, such as exponential smoothing (ES) and ARIMA [32]. However, their suitability for non-expert users still needs to be improved, as explained in [33]. Hybrid deep learning models have also been used as an alternative for this forecasting task. For instance, a model combining convolutional neural networks and LSTM (CLSTM) is presented for half-hourly solar radiation forecasting in [34]. The proposed hybrid model is compared with single hidden layer and decision tree models and outperforms its counterparts for forecasting horizons from 1 day up to 8 months ahead. To the best of our knowledge, RNNs have not been used for long-term solar forecasting (one year ahead or more), which is the horizon required for optimal HRES sizing.

Very few works have incorporated long-term forecasting into the optimal sizing of off-grid HRES. In [35], load demand, solar irradiation, wind temperature, and wind speed are forecasted hourly for one year using artificial neural networks (ANN). In addition, tabu search is proposed to optimize the system and is compared with harmony search and simulated annealing. A new algorithm combining chaotic search, harmony search, and simulated annealing is proposed in [36] for the optimal sizing of a solar-wind energy system; again, weather and load forecasting using ANN is incorporated to improve the accuracy of the size optimization algorithm. In [37], the biogeography-based optimization (BBO) algorithm and ANN are proposed as the optimization algorithm and the solar-wind forecasting model, respectively. A mix of harmony search and a combination of harmony search and chaotic search is proposed in [38] for the optimal design of a stand-alone hybrid desalination scheme; this work uses an iterative neural network scheme to forecast weather parameters.

None of the works that include weather forecasting in the optimal sizing procedure have incorporated modern techniques, such as recurrent neural networks (RNN), which are now outperforming traditional methods. Moreover, to the best of our knowledge, very few works currently address long-term GHI forecasting. For this reason, in this work, we propose the use of long short-term memory (LSTM) cells and feed forward neural networks (FFNN) to address long-term GHI forecasting with half-hourly data, a resolution suitable for microgrid planning purposes [5]. This work focuses on deep learning because, under certain conditions, deep learning models should be able to subsume classical linear methods, given their capability to map complex functions [39].

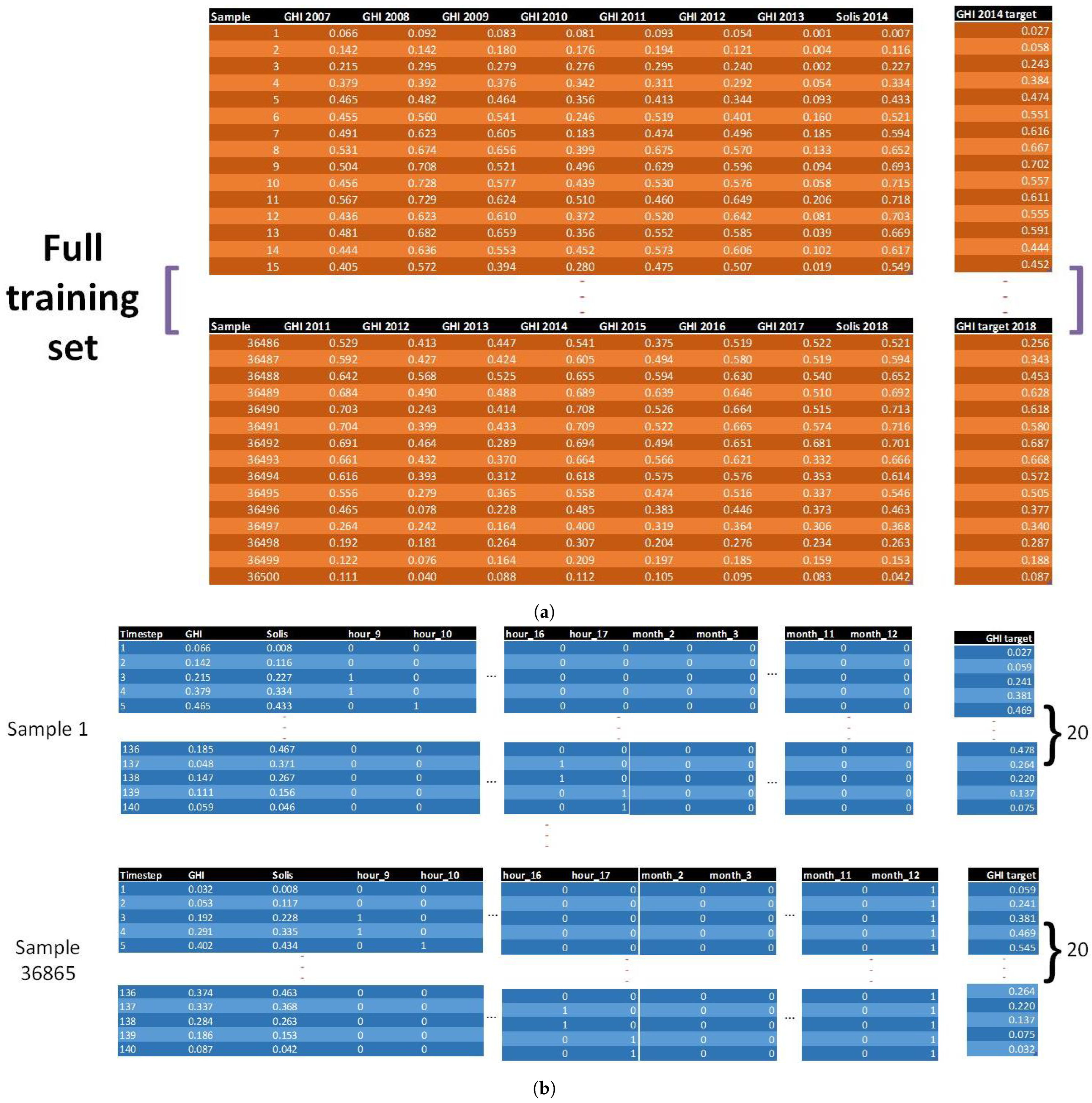

The main contribution of this work is a new approach that uses RNN and FFNN for long-term GHI forecasting, predicting one year ahead with a single model. Specifically, we present a new procedure to feed data into supervised learning models that require either 3D inputs (such as RNN) or 2D inputs (as any traditional supervised learning model). From the first group, RNN are selected for this task since they carry information through their recurrent connections during training and help capture the temporal dependence of the data. From the second group, we focus on FFNN because it is representative and, at the same time, complex enough to provide a meaningful comparison with RNN.
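As a generic illustration of the 2D versus 3D input shapes (not the specific feeding procedure proposed in this work), the sketch below builds lagged windows of GHI plus one exogenous series, arranges them as a `(samples, timesteps, features)` array for an RNN, and flattens the same windows to `(samples, timesteps * features)` for an FFNN. The function name `make_windows` and its `n_lags` and `horizon` parameters are hypothetical.

```python
import numpy as np


def make_windows(series, exog, n_lags, horizon):
    """Return (X_2d, X_3d, y) built from the same lagged windows.

    series  : 1-D array of GHI values
    exog    : 1-D array of an exogenous variable (e.g. a clear-sky estimate), same length
    n_lags  : number of past steps used as input (hypothetical parameter)
    horizon : how many steps after the last input the target lies (hypothetical)
    """
    series = np.asarray(series, dtype=float)
    exog = np.asarray(exog, dtype=float)
    n_samples = len(series) - n_lags - horizon + 1
    if n_samples <= 0:
        raise ValueError("series too short for the requested n_lags and horizon")
    # idx[i] holds the n_lags consecutive positions that form sample i.
    idx = np.arange(n_lags)[None, :] + np.arange(n_samples)[:, None]
    ghi_lags = series[idx]
    exog_lags = exog[idx]
    y = series[idx[:, -1] + horizon]
    # RNN input: (samples, timesteps, features)
    X_3d = np.stack([ghi_lags, exog_lags], axis=-1)
    # FFNN input: (samples, timesteps * features), same values flattened row by row
    X_2d = X_3d.reshape(n_samples, -1)
    return X_2d, X_3d, y
```

For example, `make_windows(np.arange(10), np.arange(10) * 10, n_lags=3, horizon=1)` yields `X_3d` of shape `(7, 3, 2)`, `X_2d` of shape `(7, 6)`, and seven targets. Keeping both shapes derived from one index array guarantees that the FFNN and the RNN see exactly the same information.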

4. Methodology

In this section, we describe the steps followed to apply deep learning techniques to predict global horizontal irradiance (GHI). Solar irradiance has three components: direct, diffuse, and global. This work focuses on the global component since it has been widely used to estimate power generation in photovoltaic technologies [44].
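The three components are linked by the standard closure relation GHI = DHI + DNI · cos(θz), where DNI is the direct normal irradiance, DHI the diffuse horizontal irradiance, and θz the solar zenith angle. The small sketch below evaluates it; the function name is illustrative, and the clipping of the direct term below the horizon is a modeling assumption.

```python
import math


def ghi_from_components(dni, dhi, zenith_deg):
    """Global horizontal irradiance (W/m^2) from its direct and diffuse components.

    GHI = DHI + DNI * cos(zenith). The direct term is clipped to zero when the
    sun is below the horizon (zenith > 90 degrees).
    """
    cos_z = math.cos(math.radians(zenith_deg))
    return dhi + dni * max(cos_z, 0.0)
```

For instance, with DNI = 800 W/m², DHI = 100 W/m², and the sun at a 60° zenith angle, the relation gives roughly 500 W/m².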

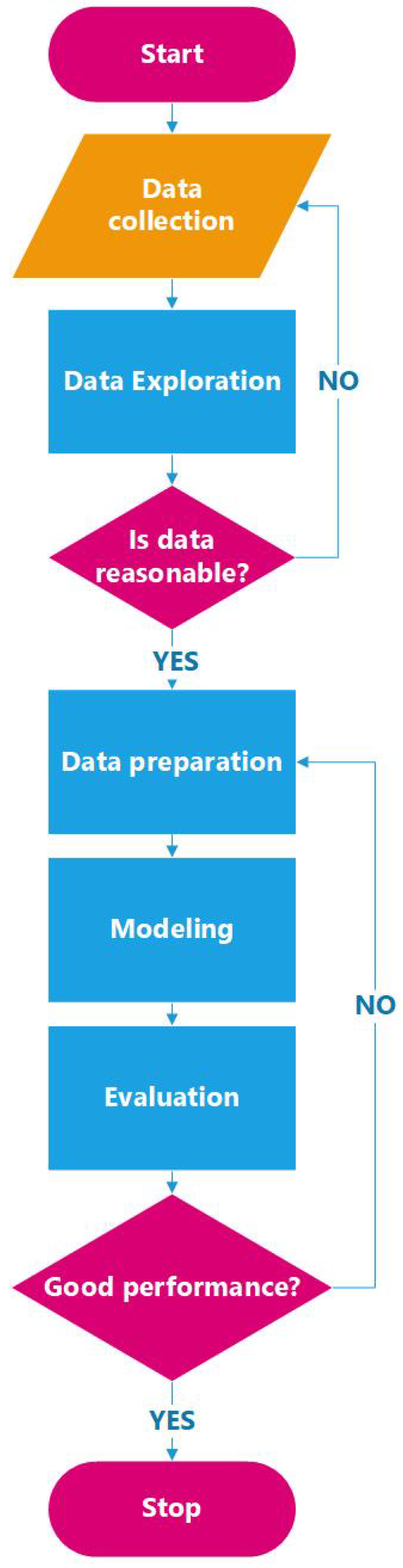

Figure 3 shows the flow diagram of the methodology employed in this work, which is based on the CRISP-DM methodology [45].

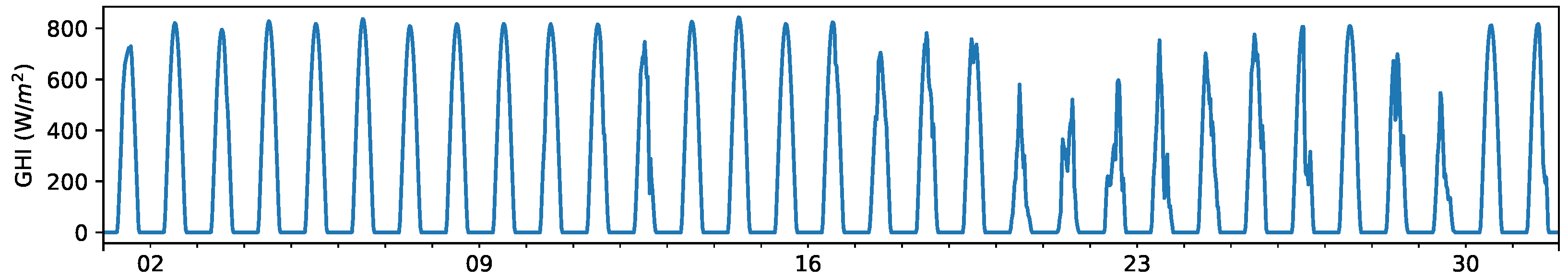

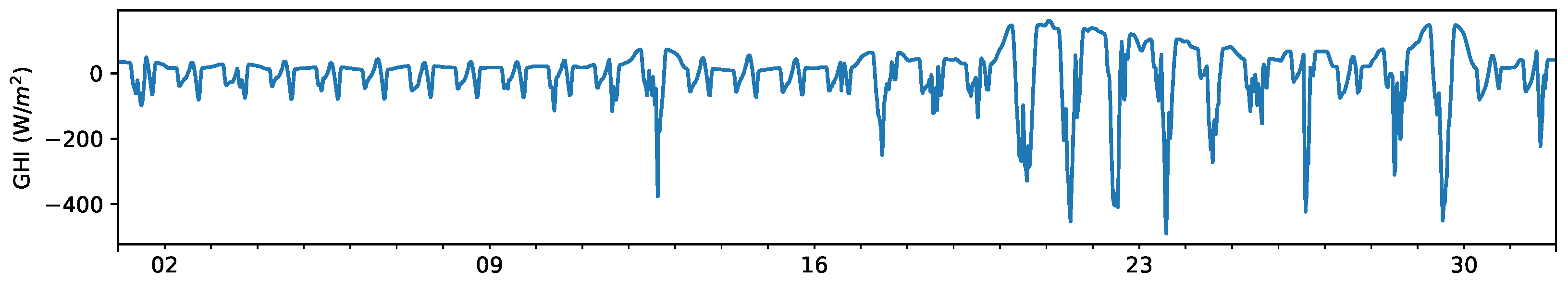

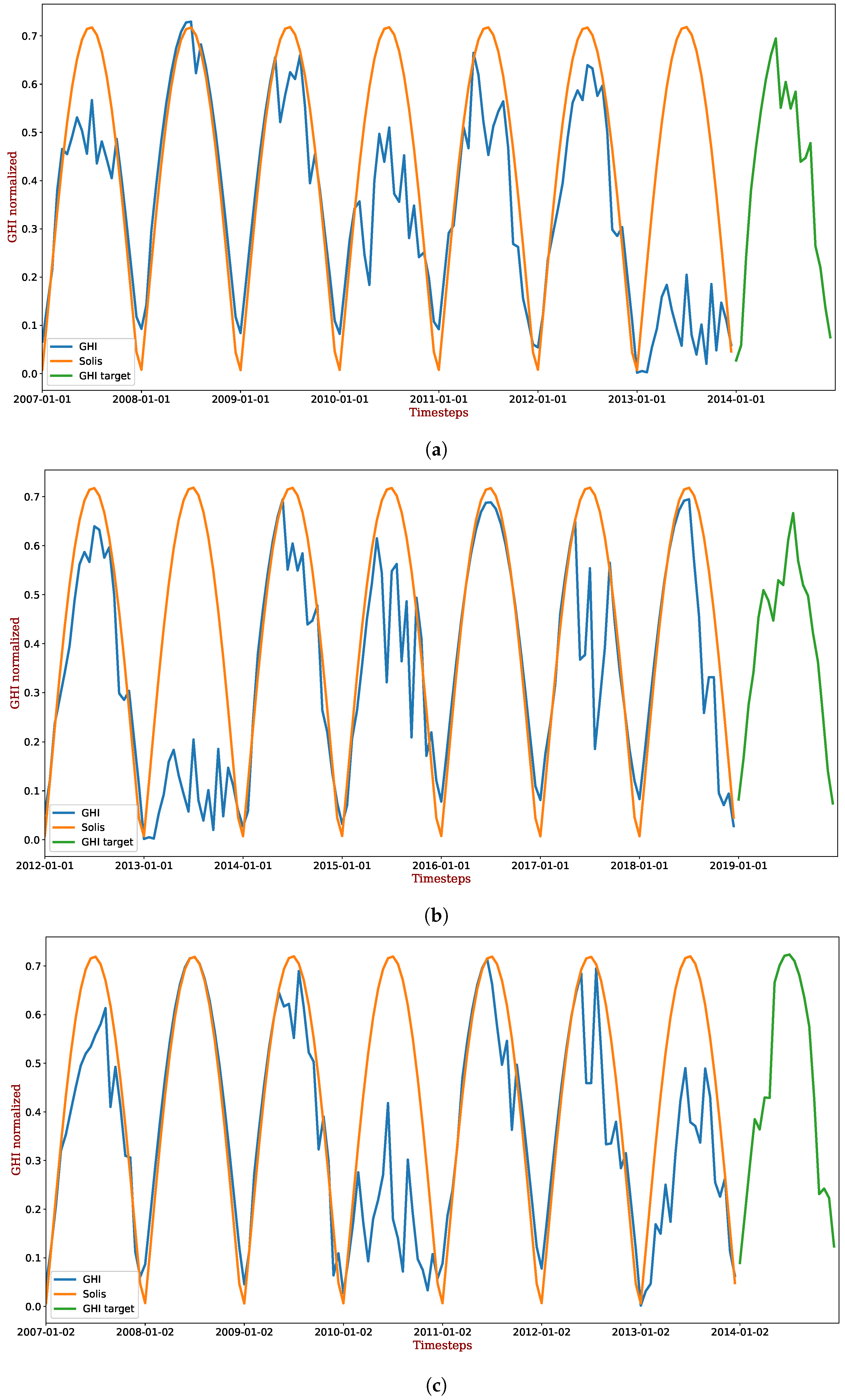

The first step was data acquisition: 14 years of historical half-hourly data (from 2007 to 2020) were collected. To explore the data, a time series decomposition was performed to identify the seasonal, trend, and residual components of the historical data. Analyzing the GHI distribution with respect to the time of day provided enough information to discard zero values, which were not considered part of the forecasting task.
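The exploration steps can be sketched as follows, assuming a classical additive decomposition (centred moving-average trend, per-position seasonal means, residual); the decomposition variant actually used is not specified here, and libraries such as statsmodels provide an equivalent `seasonal_decompose` function. For half-hourly data, a daily cycle would correspond to `period=48`. The helper `drop_zero_ghi` is a hypothetical name for the zero-removal step.

```python
import numpy as np


def additive_decompose(x, period):
    """Classical additive decomposition: x = trend + seasonal + residual.

    Trend: centred moving average over one period (a 2 x period MA when period is even).
    Seasonal: mean detrended value at each position of the cycle, centred to sum to zero.
    The trend (and residual) is NaN for half a period at each end of the series.
    """
    x = np.asarray(x, dtype=float)
    if period % 2 == 0:
        weights = np.r_[0.5, np.ones(period - 1), 0.5] / period
    else:
        weights = np.ones(period) / period
    half = len(weights) // 2
    trend = np.full_like(x, np.nan)
    trend[half:len(x) - half] = np.convolve(x, weights, mode="valid")
    detrended = x - trend
    cycle = np.array([np.nanmean(detrended[i::period]) for i in range(period)])
    cycle -= cycle.mean()
    seasonal = np.resize(cycle, len(x))
    residual = x - trend - seasonal
    return trend, seasonal, residual


def drop_zero_ghi(timestamps, ghi):
    """Keep only samples with GHI > 0; night-time zeros are excluded from forecasting."""
    ghi = np.asarray(ghi, dtype=float)
    mask = ghi > 0
    return np.asarray(timestamps)[mask], ghi[mask]
```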

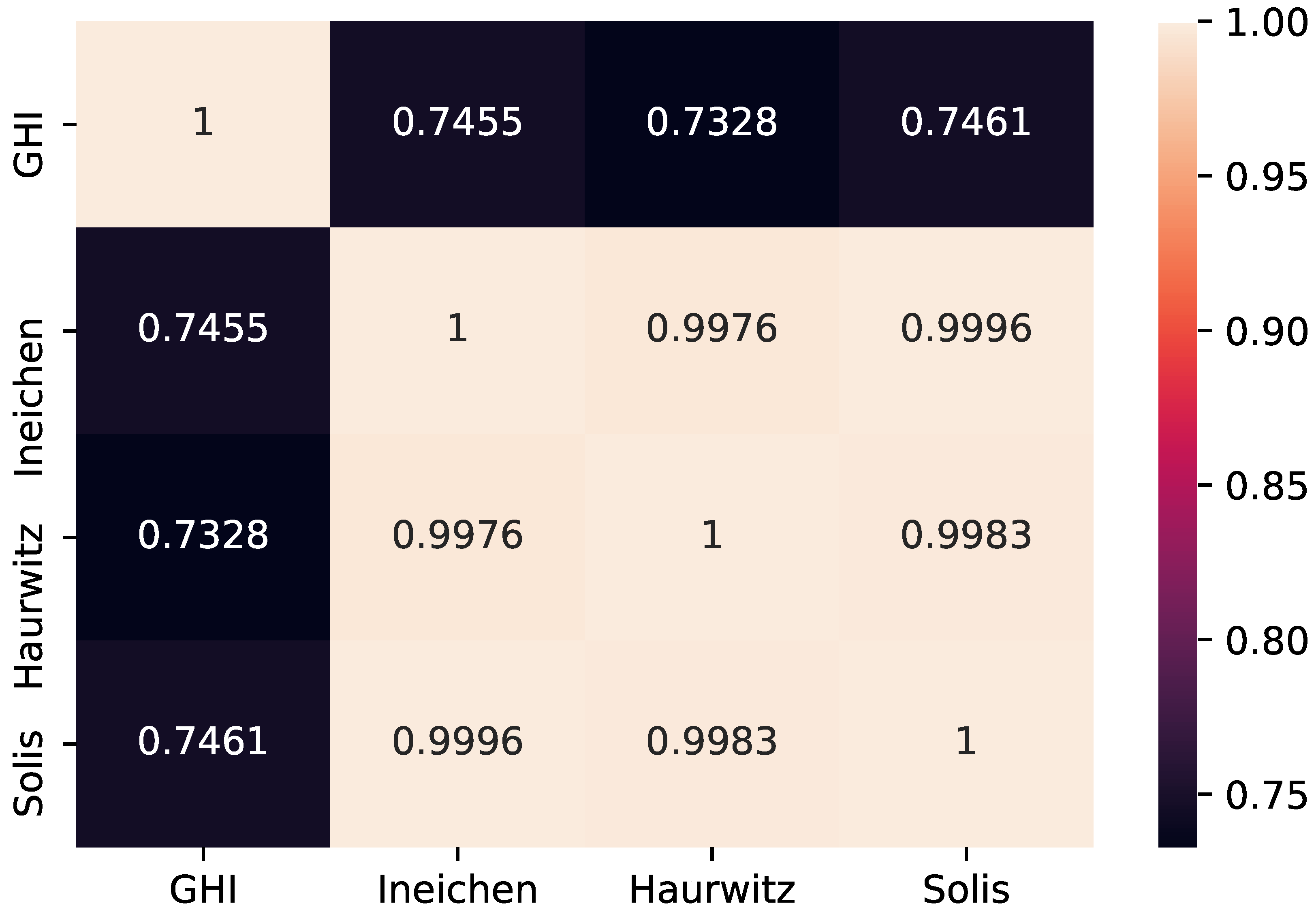

Clear-sky models were analyzed in order to include, besides the historical data, a variable that adds information for predicting GHI. To avoid collinearity, only one clear-sky model was included as an exogenous variable in the models.
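One simple way to carry out this selection, assuming Pearson correlation with the observed GHI is the criterion, is sketched below: every candidate clear-sky series is scored, and only the best one is kept as the exogenous input. The function name and the candidate names in the example are hypothetical placeholders.

```python
import numpy as np


def select_clear_sky_model(ghi_observed, candidates):
    """Pick the single clear-sky series most correlated (Pearson r) with observed GHI.

    candidates: dict mapping a clear-sky model name to its GHI estimate,
    aligned in time with ghi_observed.
    Returns the best model name and the score of every candidate.
    """
    ghi = np.asarray(ghi_observed, dtype=float)
    scores = {
        name: float(np.corrcoef(ghi, np.asarray(series, dtype=float))[0, 1])
        for name, series in candidates.items()
    }
    best = max(scores, key=scores.get)
    return best, scores
```

Keeping only the top-scoring series avoids feeding several nearly collinear clear-sky estimates to the same model.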

Applying deep learning techniques to long-term forecasting requires specific data preparation steps. FFNN and RNN each require different pre-processing, which is explained in Section 5. The two models are then fitted.

The evaluation step computes metrics that compare the long-term forecast with the observed values for 2020. A grid search procedure is carried out to find good hyperparameters for this forecasting task.
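The error metrics reported in this work (RMSE, MAE, MAPE, WAPE, and MASE) can be computed as in the sketch below. The formulas are the common textbook definitions and are assumed rather than taken from the text; in particular, MASE is scaled here by the in-sample error of a seasonal naive forecast with period `m`, and MAPE and WAPE are expressed in percent. MAPE is only defined when no observed value is zero, which holds once night-time zeros have been removed.

```python
import numpy as np


def forecast_metrics(y_true, y_pred, y_train, m):
    """Common accuracy metrics for a forecast (assumed textbook definitions).

    y_true, y_pred : observed and forecast values for the test period
    y_train        : training series, used only for the MASE scale
    m              : seasonal period of the naive benchmark used to scale MASE
    """
    y = np.asarray(y_true, dtype=float)
    f = np.asarray(y_pred, dtype=float)
    tr = np.asarray(y_train, dtype=float)
    err = y - f
    mae = np.mean(np.abs(err))
    # Mean absolute error of the in-sample seasonal naive forecast y_t = y_{t-m}.
    scale = np.mean(np.abs(tr[m:] - tr[:-m]))
    return {
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAE": float(mae),
        "MAPE": float(100 * np.mean(np.abs(err) / np.abs(y))),
        "WAPE": float(100 * np.sum(np.abs(err)) / np.sum(np.abs(y))),
        "MASE": float(mae / scale),
    }
```

WAPE weights every error by the total observed irradiance, so unlike MAPE it is not dominated by samples with very small GHI values near sunrise and sunset.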

Uncertainty for these models comes from two sources: aleatoric and epistemic [46]. Aleatoric uncertainty is related to data collection and is irreducible; in this case, it stems from the instruments' accuracy. Epistemic uncertainty, on the other hand, arises from inappropriate training data and tends to appear in regions where there are not enough training samples.

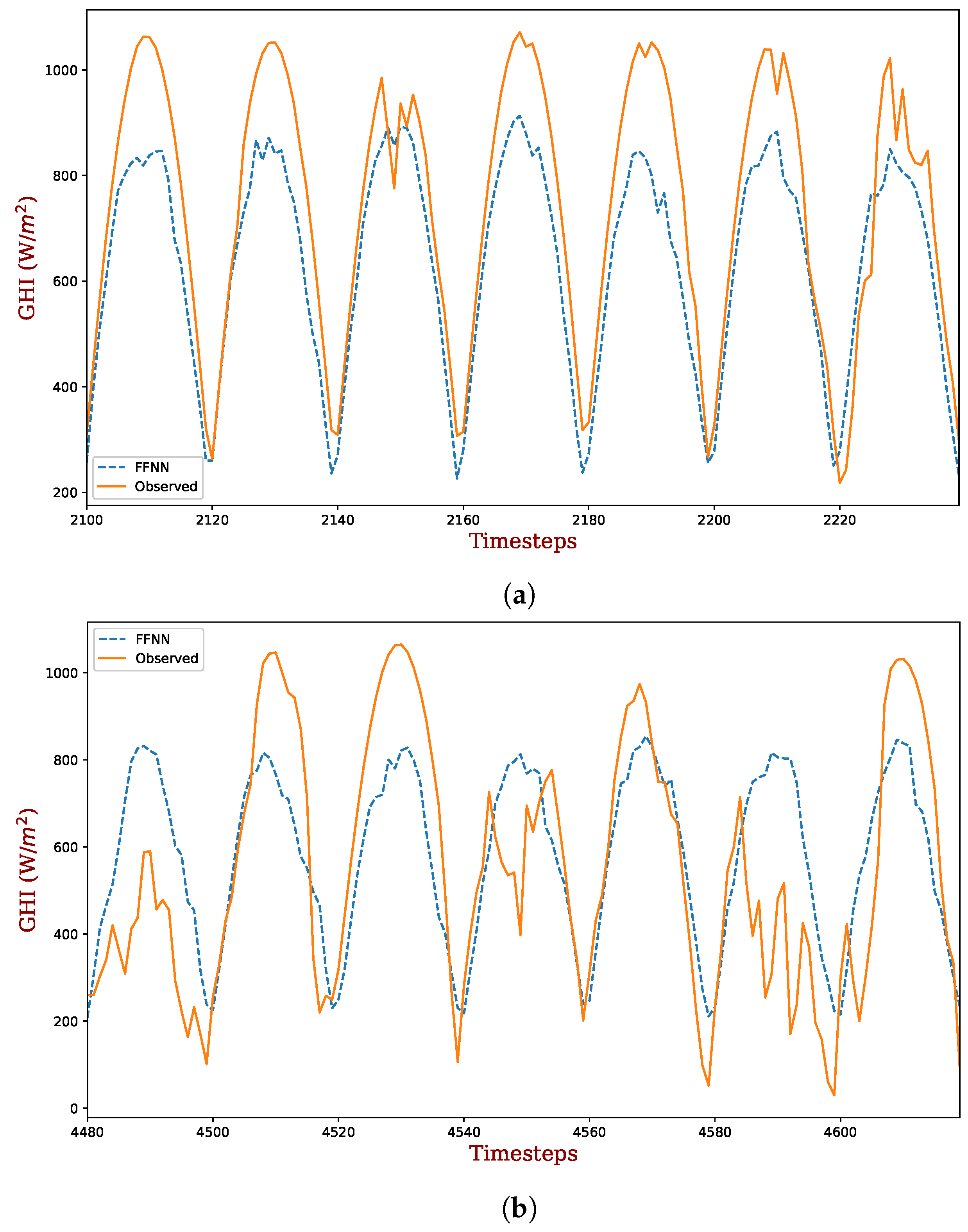

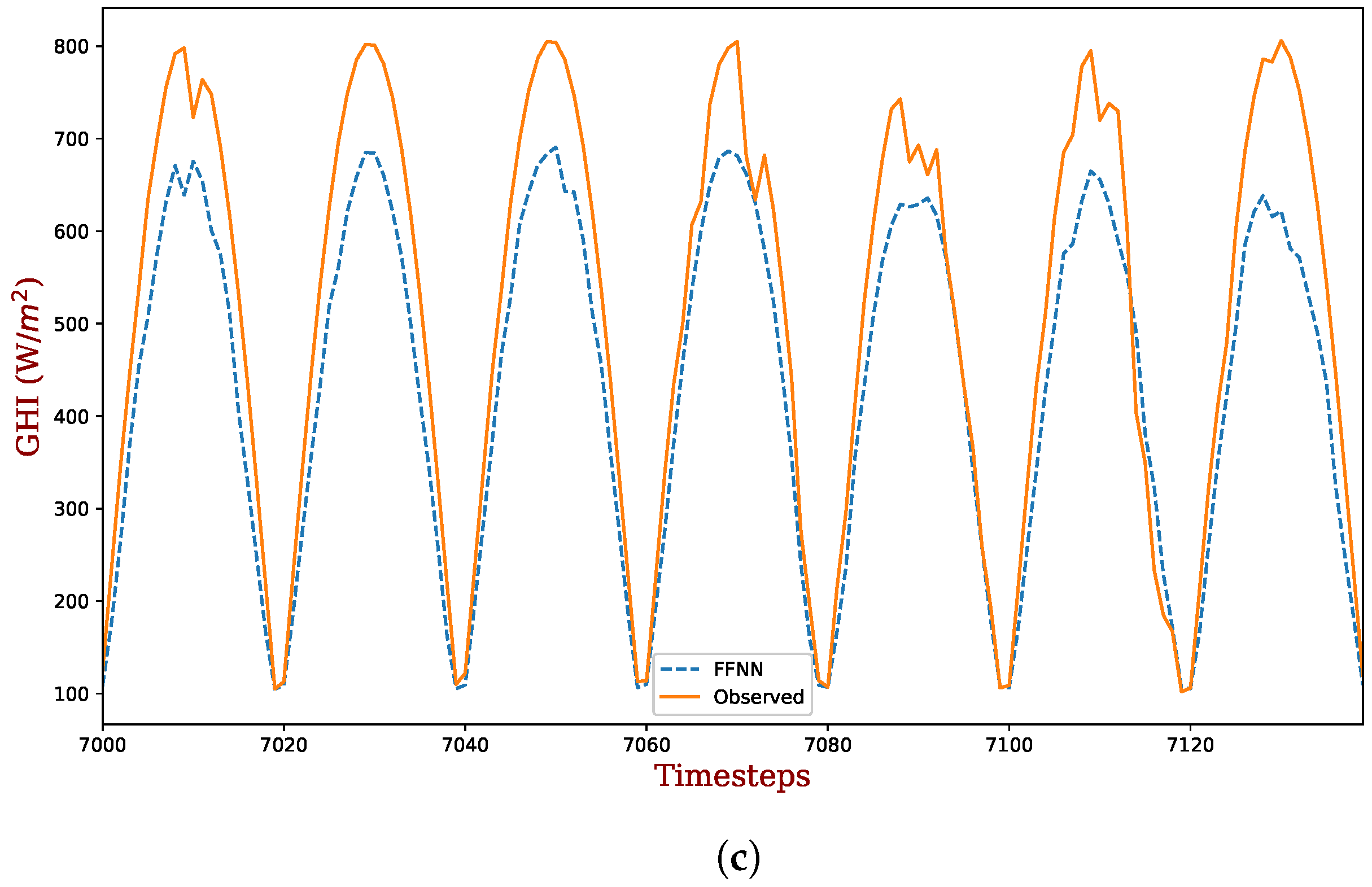

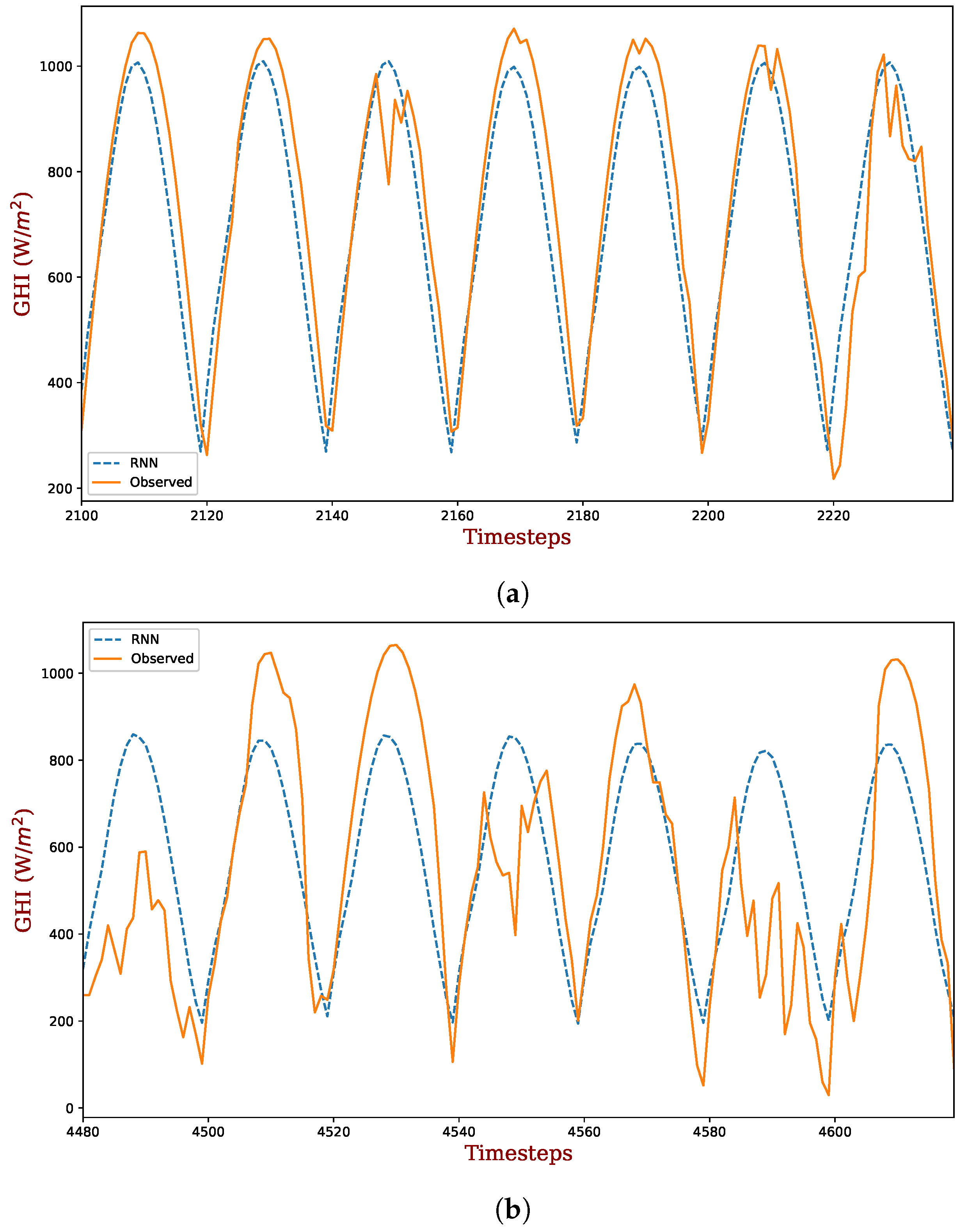

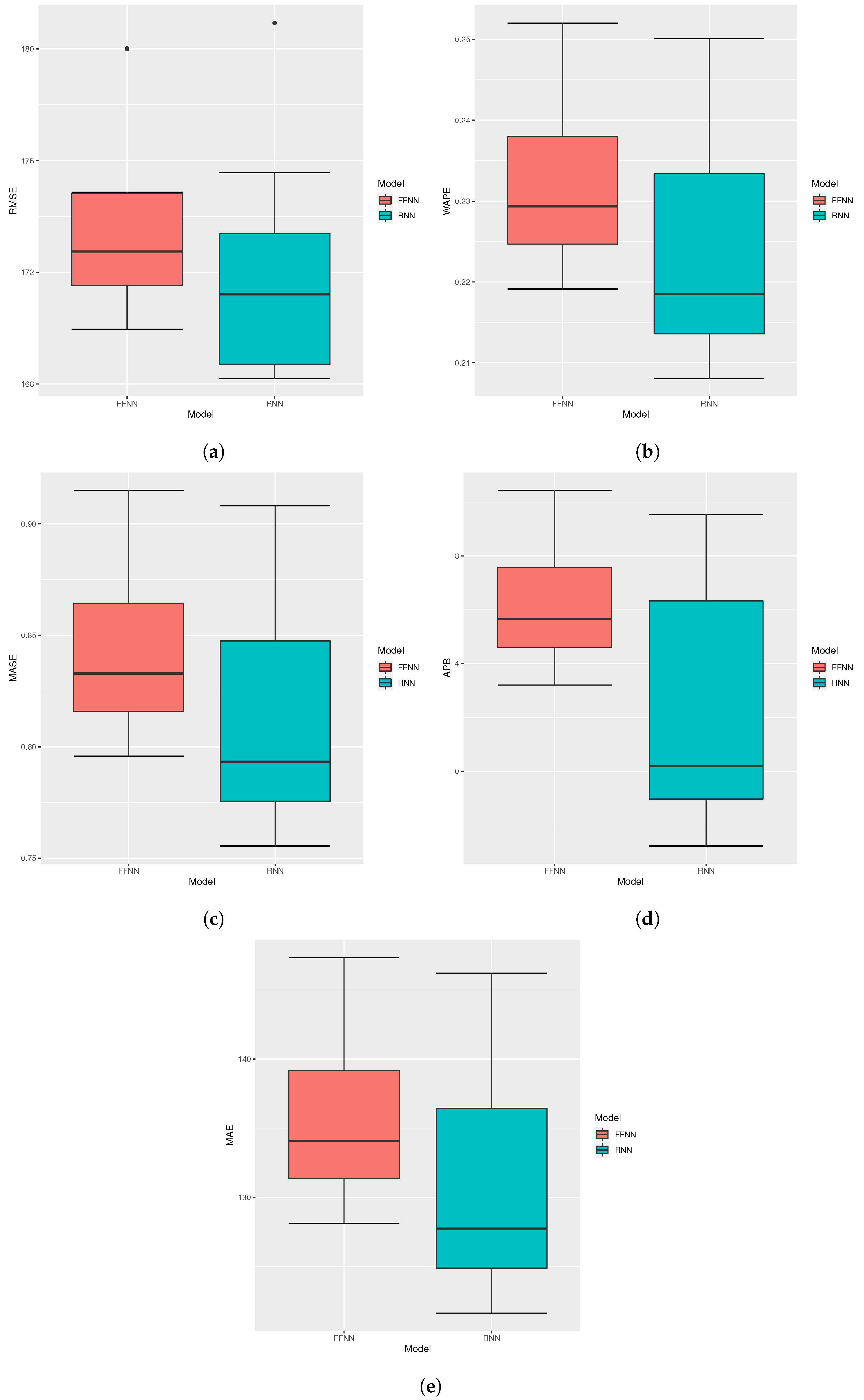

Finally, each metric distribution is presented using boxplots to analyze the variability and the median of each deep learning model.
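The quantities a boxplot displays for one metric distribution (median, quartiles, Tukey whiskers at 1.5 × IQR, and outliers) can be computed as in this minimal sketch; the function name is illustrative, and the default linear interpolation of `np.percentile` is assumed for the quartiles.

```python
import numpy as np


def boxplot_summary(values):
    """Median, quartiles, Tukey whiskers (1.5 x IQR) and outliers of one metric's distribution."""
    v = np.asarray(values, dtype=float)
    q1, median, q3 = np.percentile(v, [25, 50, 75])
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = v[(v >= lo_fence) & (v <= hi_fence)]
    return {
        "median": float(median),
        "q1": float(q1),
        "q3": float(q3),
        "iqr": float(iqr),
        "whisker_low": float(inside.min()),
        "whisker_high": float(inside.max()),
        "outliers": v[(v < lo_fence) | (v > hi_fence)].tolist(),
    }
```

To test whether two models' metric distributions differ, SciPy's `scipy.stats.mannwhitneyu(x, y, alternative="two-sided")` applies the Mann–Whitney U test to the two samples.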

The forecast for each technique is the average of 10 forecasts, each obtained with a different random seed, to avoid a bias caused by an extraordinary result generated by a "lucky" seed.
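The seed-averaging step can be sketched as follows. `train_and_forecast` is a hypothetical callable standing in for "fit the FFNN or RNN with this seed and return its one-year forecast", and `toy_model` is a placeholder that only adds seed-dependent noise to a constant; neither reproduces the models of this work. The per-step spread across seeds is also returned as an indication of how sensitive the result is to the random initialization.

```python
import numpy as np


def ensemble_forecast(train_and_forecast, seeds):
    """Run the model once per seed and average the forecasts element-wise.

    train_and_forecast: hypothetical callable, seed -> 1-D forecast array.
    Returns the mean forecast and the per-step standard deviation across seeds.
    """
    runs = np.stack([np.asarray(train_and_forecast(seed), dtype=float) for seed in seeds])
    return runs.mean(axis=0), runs.std(axis=0)


def toy_model(seed, n_steps=48):
    """Placeholder for a trained network: a constant forecast plus seed-dependent noise."""
    rng = np.random.default_rng(seed)
    return 100.0 + rng.normal(0.0, 5.0, size=n_steps)


mean_forecast, spread = ensemble_forecast(toy_model, seeds=range(10))
```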

6. Conclusions

Long-term GHI forecasting has received little attention in the literature until now. In this work, we propose a new approach to feed two deep learning models for this task: feed forward neural networks and recurrent neural networks. For each technique, a single model was trained in a very short computational time (less than 3 s in each case). We applied the proposed technique to a case study in Michoacan, Mexico. Data exploration showed that non-zero GHI values occurred between 8:00 a.m. and 5:30 p.m. Two different data preparation techniques were employed to feed the inputs to the deep learning models. The clear-sky model "Solis" was employed to enrich the historical data and improve the forecasting power of the proposed approaches, given that it provided a better correlation with the historical data (0.7461). Three time series forecasting methods, ARIMA, exponential smoothing (ES), and seasonal naive (SN), were used for benchmarking purposes. In terms of RMSE, the two deep learning methods were more effective (FFNN: 174.728, RNN: 170.033) than the benchmarks (SN: 225.817, ES: 231.580, ARIMA: 218.461). Finally, the Mann–Whitney U test showed that RNN performed significantly better than FFNN in terms of WAPE, MAPE, MASE, and MAE (p value of 0.032). In this work, RNN and FFNN were used for long-term forecasting with the proposed approach, although these two techniques are commonly used for short-term forecasting. Only deep learning models were included in this study; for further comparison, machine learning models, such as support vector machines and random forests, will be employed. In addition, tensor-train recurrent neural networks, a novel and specialized deep learning technique for long-term time series forecasting (see [48]), will be used in future work to explore their performance on this task. This work has treated GHI forecasting as part of a planning problem (considering the resolution required for microgrid sizing). However, the optimal control of microgrids has gained importance in the context of smart cities and smart grids, which are part of the industrial internet of things (IIoT) [49]. The challenges related to IIoT and big data in the context of mobile devices include how to manage and store data (see [50]). Specifically, one alternative to tackle this problem is to reduce the number of parameters to increase the model's training speed (see [51]), which will be studied in future work. The long-term prediction of GHI is vital to achieve a good microgrid design when photovoltaic technologies are included. In future work, we will embed the long-term forecasting methodology in an optimization framework to obtain the optimal design of microgrids.