1. Introduction

Model predictive control (MPC) has attracted considerable attention, since it is an effective control algorithm to deal with multivariable constrained control problems. The nominal MPC for constrained linear systems has been solved in systematic ways around 2000 [

1,

2]. Recently, some approaches have been extended to distributed implementations [

3,

4]. Synthesizing robust MPC for constrained uncertain systems has attracted great attention after the nominal MPC. This has become a significant branch of MPC. The lack of robustness in MPC, based on a nominal model [

5], calls for a robust MPC technique based on uncertainty models. Up to now, robust MPC has been solved in several ways [

6,

7,

8]. A good technique for robust MPC, however, requires not only guaranteed stability, but also low computational burden, big (at least desired) domain of attraction, and low performance cost value [

9].

The authors in [

6] firstly solved a min-max optimization problem in an infinite horizon for systems with polytopic description, by fixing the control moves as a state feedback law that was on-line calculated. The authors in [

9] off-line calculated a feedback law sequence with corresponding ellipsoidal domains of attraction, and on-line interpolated the control law at each sampling time applying this sequence. The computational burden is largely reduced. In addition, nominal performance cost is used in [

9] to the take place of the “worst-case” one so that feasibility can be improved. A heuristic varying-horizon formulation is used and the feedback gains can be obtained in a backward manner.

In this paper, the off-line technique in [

9] is further studied. Originally, each off-line feedback law was calculated where the infinite horizon control move was treated as a single state feedback law. This paper, instead, optimizes the feedback law in the larger ellipsoid by considering that the feedback laws in the smaller ellipsoids will be applied at the next time if it is applied at the current time. In a sense, the new technique in this paper is equivalent to a varying-horizon MPC, i.e., the control horizon (say

) gradually changes from

M > 1 to

M = 1, while the technique in [

9] can be taken as a fixed horizon MPC with

M = 1. Hence, the new technique achieves better control performance and can control a wider class of systems, i.e., improve control performance and feasibility.

So far, the state feedback approach is popular in most of the robust MPC problem, and the full state is assumed to be exactly measured to act as the initial condition for future predictions [

10,

11,

12,

13,

14]. However, in many control problems, not all states can be measured exactly, and only the output information is available for feedback. In this case, an output feedback RMPC design is necessary (e.g., [

3,

15]). The output feedback MPC approach, parallel to that in [

6], has been proposed in [

16], and the off-line robust MPC has been studied in [

17]. The new approach in this paper may be applied to improve the procedure in [

17], which will be studied in the near future.

Notation: The notations are fairly standard.

is the

-dimensional space of real valued vectors.

W > 0 (

W ≥ 0) means that

W is symmetric positive-definite (symmetric non-negative-definite). For a vector

x and positive-definite matrix

,

.

is the value of vector

x at a future time

k +

i predicted at time

k. The symbol * induces a symmetric structure, e.g., when

and

are symmetric matrices, then

.

2. Problem Statement

Consider the following time varying model:

with input and state constraints, i.e.,

where

and

are input and measurable state, respectively;

, and

with

,

and

,

;

. Input constraint is common in practice, which arises from physical and technological limitations. It is well known that the negligence of control input constraints usually leads to limit cycles, parasitic equilibrium points, or even causes instability. Moreover, we assume that

, where

, i.e., there exist

nonnegative coefficients

,

such that

A predictive controller is proposed to drive systems (1) and (2) to the origin

, and at each time

, to solve the following optimization problem:

The following constraints are imposed on Equation (4a):

for all

i ≥ 0. In (4),

and

are weighting matrices and

are the decision variables. At time

k,

is implemented and the optimization (4) is repeated at time

k + 1.

The authors in [

9] simplified problem (4) by fixing

as a state feedback law, i.e.,

,

. Define a quadratic function

with the robust stability constraint as follows:

For a stable closed-loop system,

and

. Summing (6) from

to

obtains

where

. Define

and

, then Equations (4c), (6) and (7) are satisfied if

where

(

) is the

th (

th) diagonal element of

(

) [

18]. In this way, problem (4) is approximated by

s.t. Equations (8)–(11).

The closed-loop system is exponentially stable if (12) is feasible at the initial time k = 0.

Based on [

6], the authors in [

9] off-line determined a look-up table of feedback laws with corresponding ellipsoidal domains of attraction. The control law was determined on-line from the look-up table. A linear interpolation of the two corresponding off-line feedback laws was chosen when the state stayed between two ellipsoids and an additional condition was satisfied.

| Algorithm 1 The Basic Off-Line MPC [9] |

| 1: Off-line, generate state points where , |

| 2: is nearer to the origin than . |

| 3: Substitute in (8) by , |

| 4: |

| 5: Solve (12) to obtain , , , |

| 6: The ellipsoids |

| 7: The feedback laws . |

| 8: Take appropriate choices to ensure , . |

| 9: On-line, if for each , |

| 10: The following condition is satisfied: |

| 11: |

| 12: Then each time adopt the following control law: |

| 13: |

| 14: where , and . |

Compared with [

6], the on-line computational burden is reduced, but the optimization problem gives worse control performance. In this paper, we propose a new algorithm with better control performance and larger domains of attraction.

3. The Improved Off-Line Technique

In calculating Fh, Algorithm 1 does not consider Fi, . However, for smaller ellipsoids Fi, are better feedback laws than Fh. In the following, the selection of , , is the same with Algorithm 1, but a different technique is adopted in this paper to calculate , , , . For , , we choose , such that, for all , at the following time is applied and .

3.1. Calculating Q2, F2

Define an optimization problem

and solve this problem by

By analogy to Equation (7),

where

. In this way, problem (4a) is turned into min-max optimization of (also refer to [

18])

Solve

by

and define

Introduce a slack variable

such that

and

Moreover,

should satisfy hard constraints

and terminal constraint

Equation (23) is equivalent to

. Define

and

, then the following LMIs can be obtained:

Constraint (22) is satisfied if [

6]

Thus,

,

and

can be obtained by solving

3.2. Calculating Qh, Fh,

The rationale in

Section 3.1 is applied, with a little change. Define an optimization problem

s.t. Equations (4b) and (4c) for all

i ≥

h − 1

By analogy to Equation (18), problem (4a) is turned into min-max optimization of

which is solved by

By analogy to Equation (19), define

where, by induction, for

,

and

By Equation (33), introduce a slack variable

and define

such that

and

Moreover, the terminal constraint should be equivalent to

where, by induction,

Equation (38) means that, for

, if at the following time

is applied, then

. Equation (38) can be transformed into

Again,

should satisfy

and

Thus,

,

and

can be obtained by solving

s.t. Equations (36), (37) and (40)–(42).

| Algorithm 2 The Improved Off-Line MPC |

| 1: Off-line, generate state points where , |

| 2: is nearer to the origin than . |

| 3: Substitute in (8) by |

| 4: Solve (12) to obtain , |

| 5:

, , |

| 6:

. |

| 7: For , solve (29) |

| 8: Obtain , and . |

| 9: For , , solve (43) |

| 10: Obtain , and . |

| 11: , . |

| 12: On-line, at each time adopt the control law (14). |

Theorem 1. For systems (1) and (2), and an initial state , the off-line constrained robust MPC in Algorithm 2 robustly asymptotically stabilizes the closed-loop system.

Proof. Similar to [

9], when

satisfies

and

,

, let

,

and

. By linear interpolation,

and

, which means that

satisfies the input and state constraints. Since

is a stable feedback law sequence for all initial state inside of

, it is shown that

Moreover, Equation (40) is equivalent to

. Hence, by linear interpolation,

which means that

is a stable control law sequence for all

and is guaranteed to drive

into

, with the constraints satisfied. Inside of

,

is applied, which is stable and drives the state to the origin. ☐

If Equation (38) is made to be automatically satisfied, more off-line feedback laws may be needed in order for to cover a desired space region. However, with this automatic satisfaction, better control performance can be obtained. Hence, we give the following alternative algorithm.

| Algorithm 3 The Improved Off-Line MPC with an Automatic Condition |

| 1: Off-line, as in Algorithm 2, |

| 2: Obtain , , and , . |

| 3: , . |

| 4: On-line, if for each , , |

| 5: The following condition is satisfied: |

| 6: |

| 7: for |

| 8: The following condition is satisfied: |

| 9: |

| 10: Then at each time adopt the control law (14). |

4. Numerical Example

4.1. Example 1

Consider the system , where is an uncertain parameter. Use to form polytopic description and to calculate the state evolution. The constraints are , . Choose the weighting matrices as and . Consider the following two cases.

Case 1. Choose , . Choose and , . Choose large such that: (i) condition (13) is satisfied, (ii) optimization problem (12) is feasible for , and (iii) optimization problem (29) or (43) is feasible for . Thus, we obtain , and . The initial state lies at .

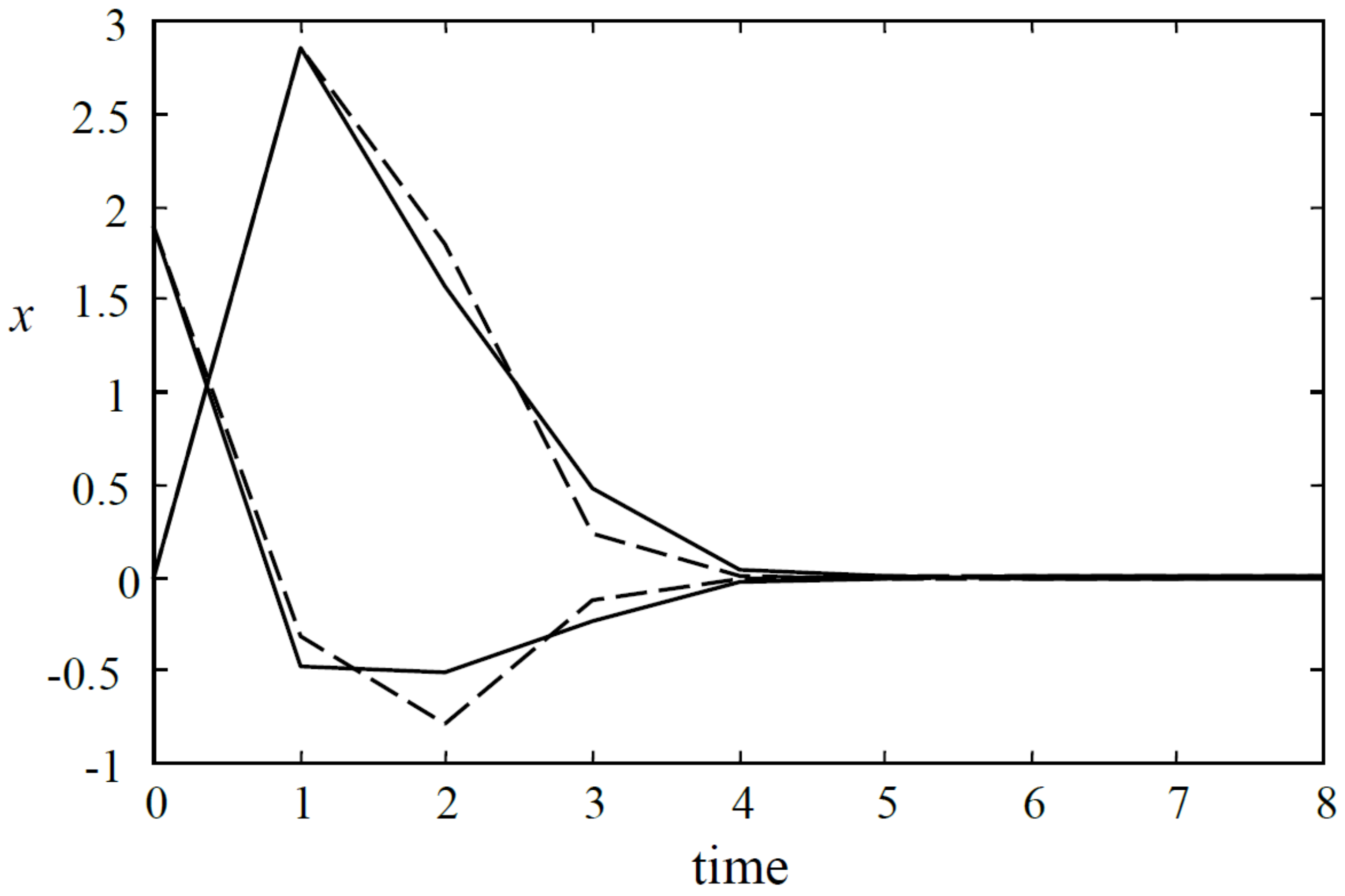

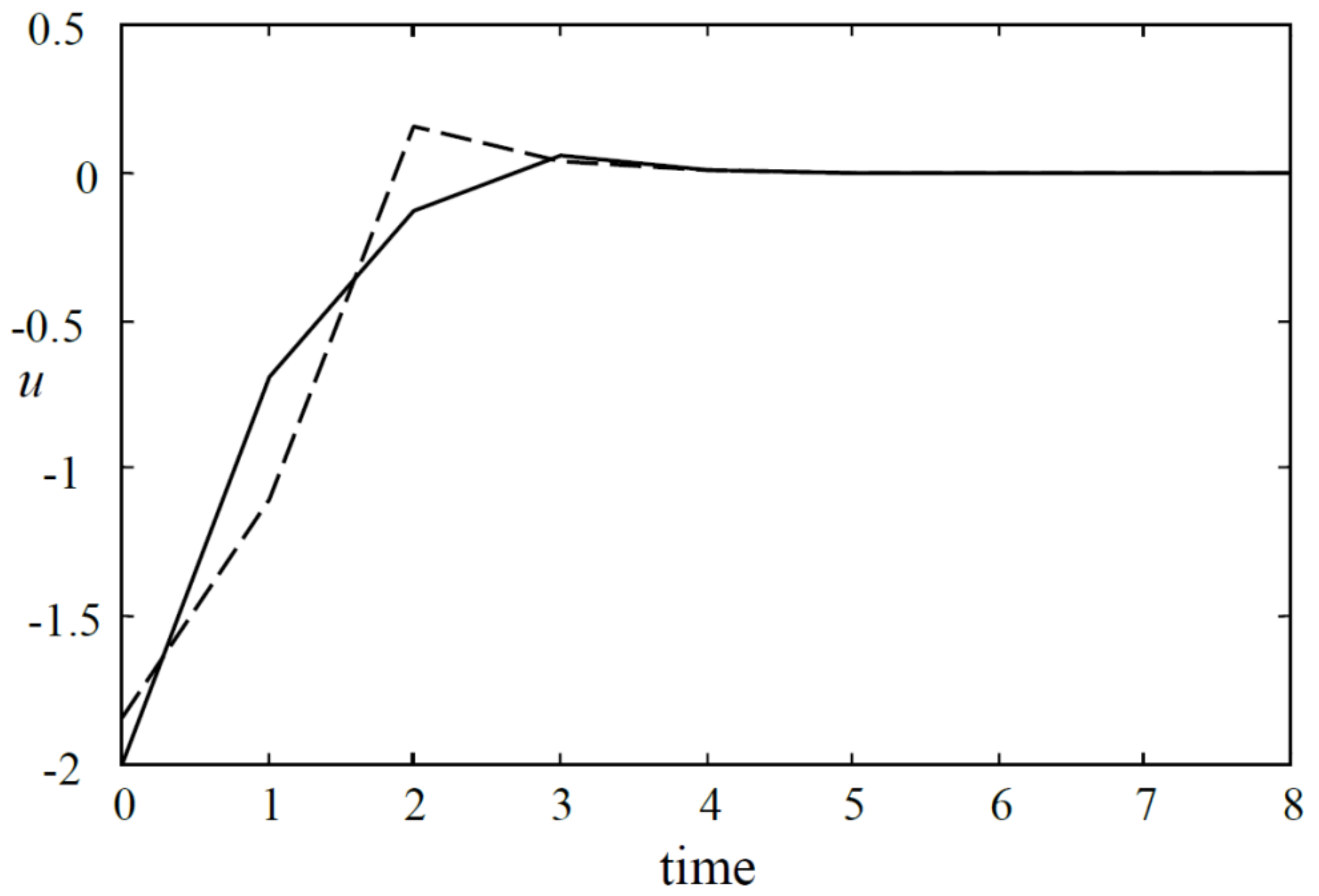

Apply Algorithms 1 and 2. The state and input responses for these two algorithms are shown in

Figure 1 and

Figure 2, respectively. The upper bounds of the cost value

for these two algorithms are

and

, respectively. Moreover, denote

then

for Algorithm 1 and

for Algorithm 2. The simulation results show that Algorithm 2 achieves better control performance.

Case 2. Increase . Algorithm 1 becomes infeasible at . However, Algorithm 2 is still feasible by choosing , , , , , , , etc.

4.2. Example 2

Directly consider the system in [

9]:

,

where

is an uncertain parameter. Use

to form a polytopic description and use

to calculate the state evolution. The constraints are

,

,

. Choose the weighting matrices as

and

.

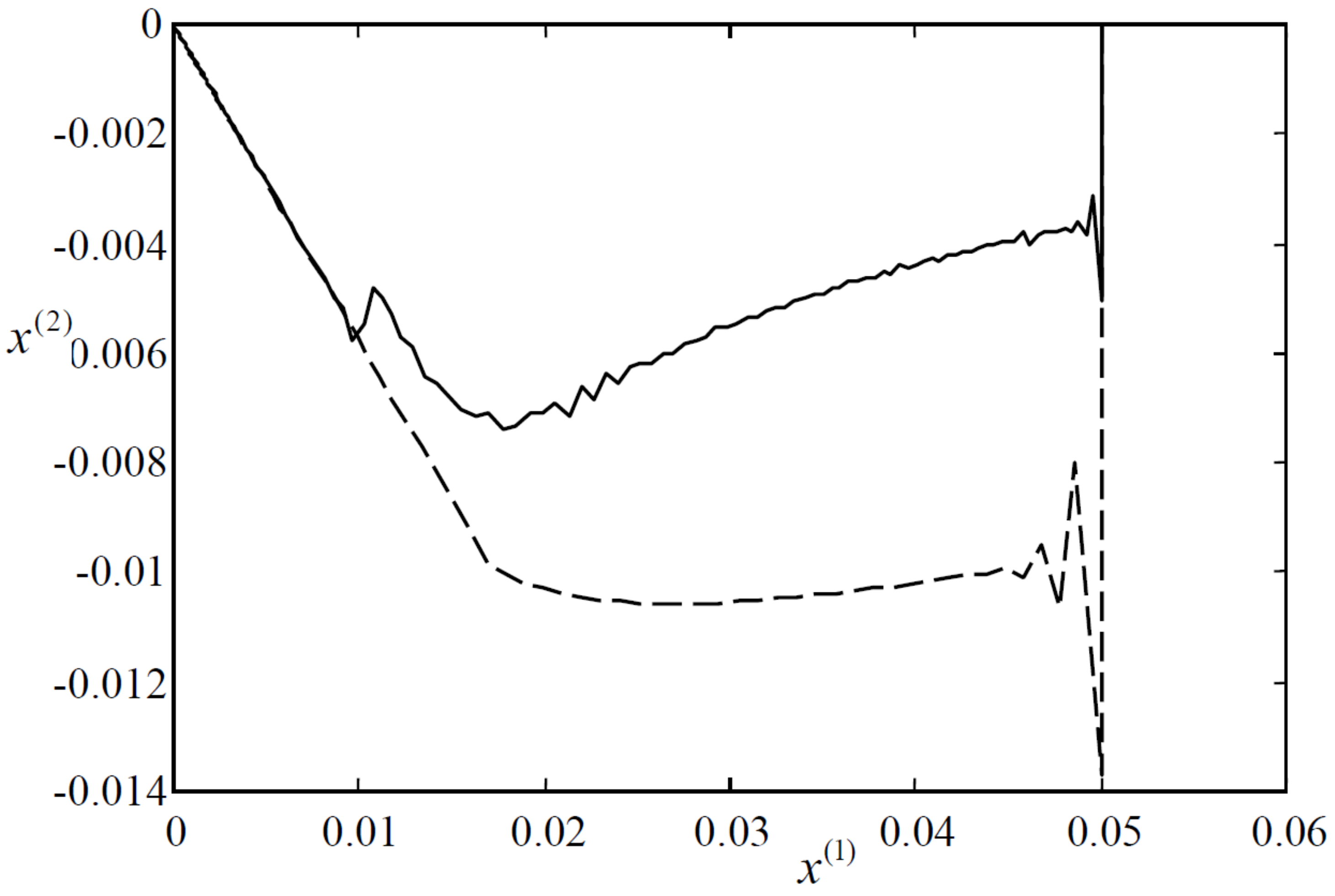

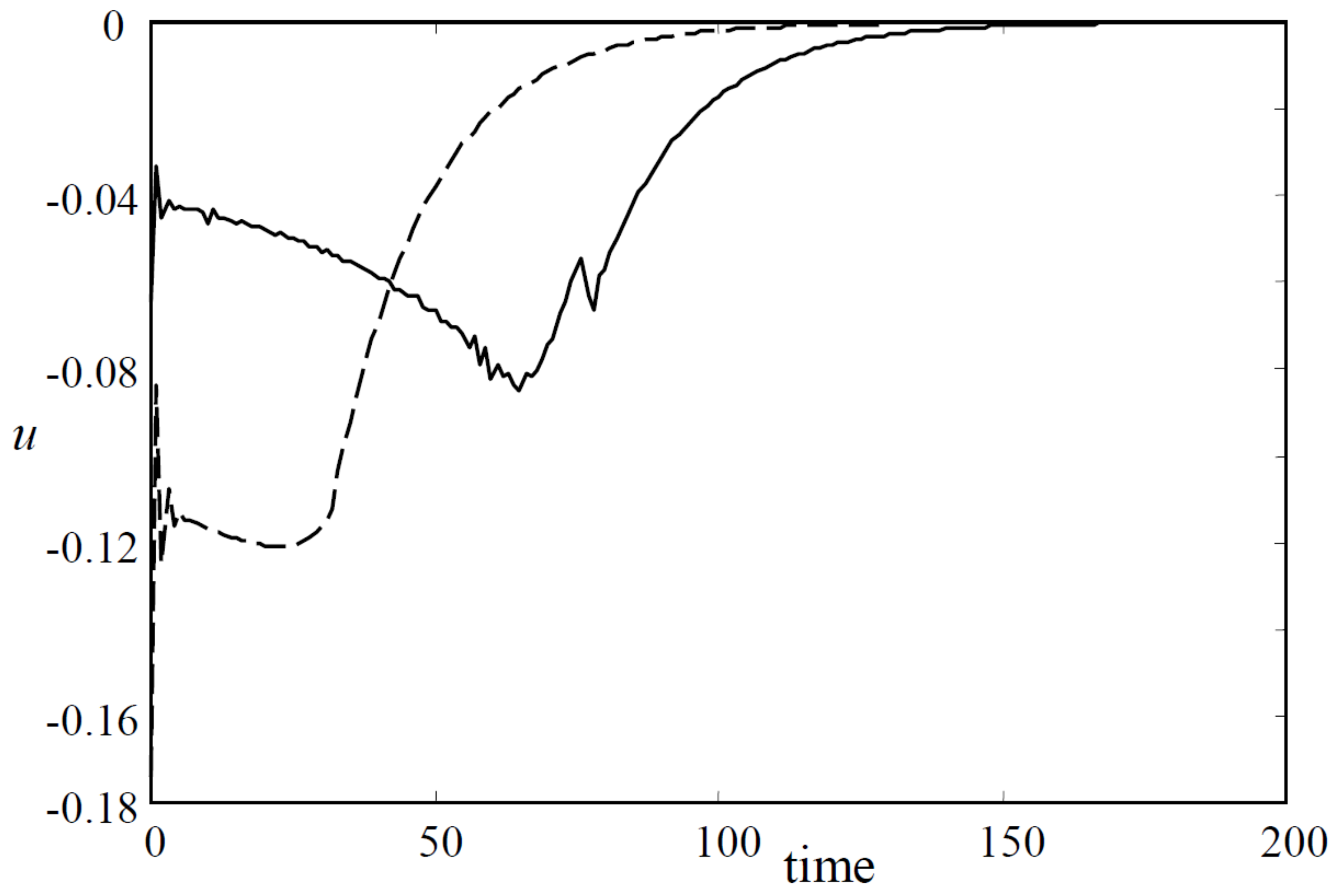

Case 1. Choose

and

. The initial state lies at

. Apply Algorithms 1 and 3. The state trajectories, the state responses and the input responses for these two algorithms are shown in

Figure 3,

Figure 4 and

Figure 5, respectively. Moreover, denote

, then

for Algorithm 1 and

for Algorithm 3.

Case 2. Choose

,

such that the optimization problem is infeasible for

. Then, for Algorithm 1,

is

, and for Algorithm 3,

.