Navigation of an Autonomous Wheeled Robot in Unknown Environments Based on Evolutionary Fuzzy Control

Abstract

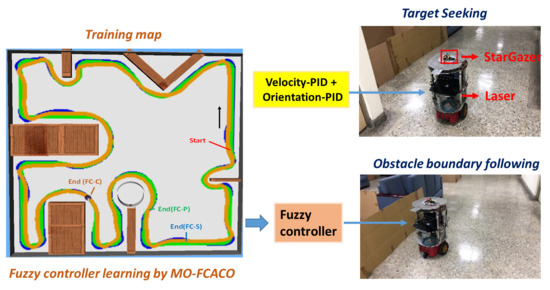

1. Introduction

2. Materials and Methods

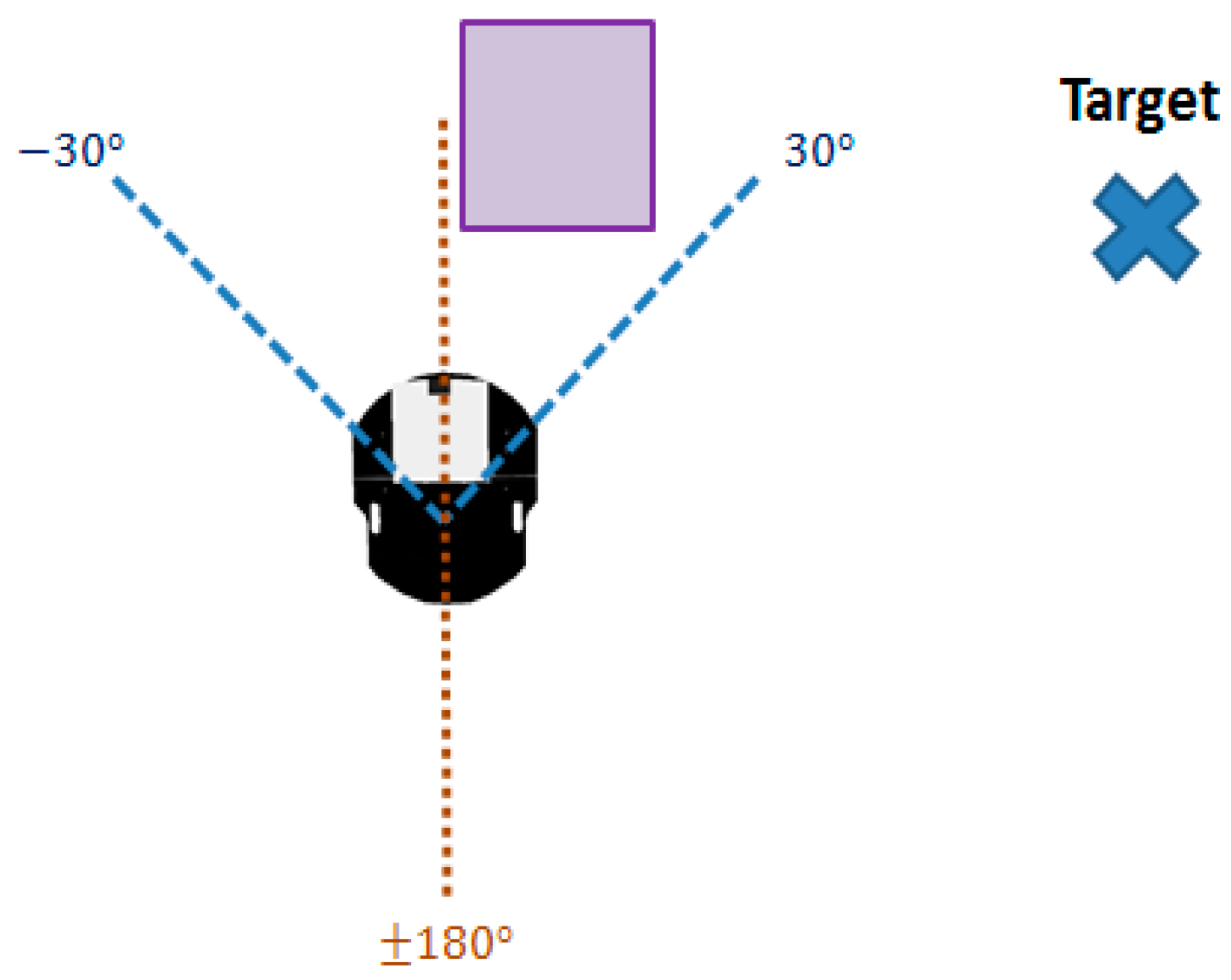

2.1. Fuzzy Controller and Obstacle Avoidance Behavior

2.1.1. Fuzzy Controller

2.1.2. Multiple Control Objectives

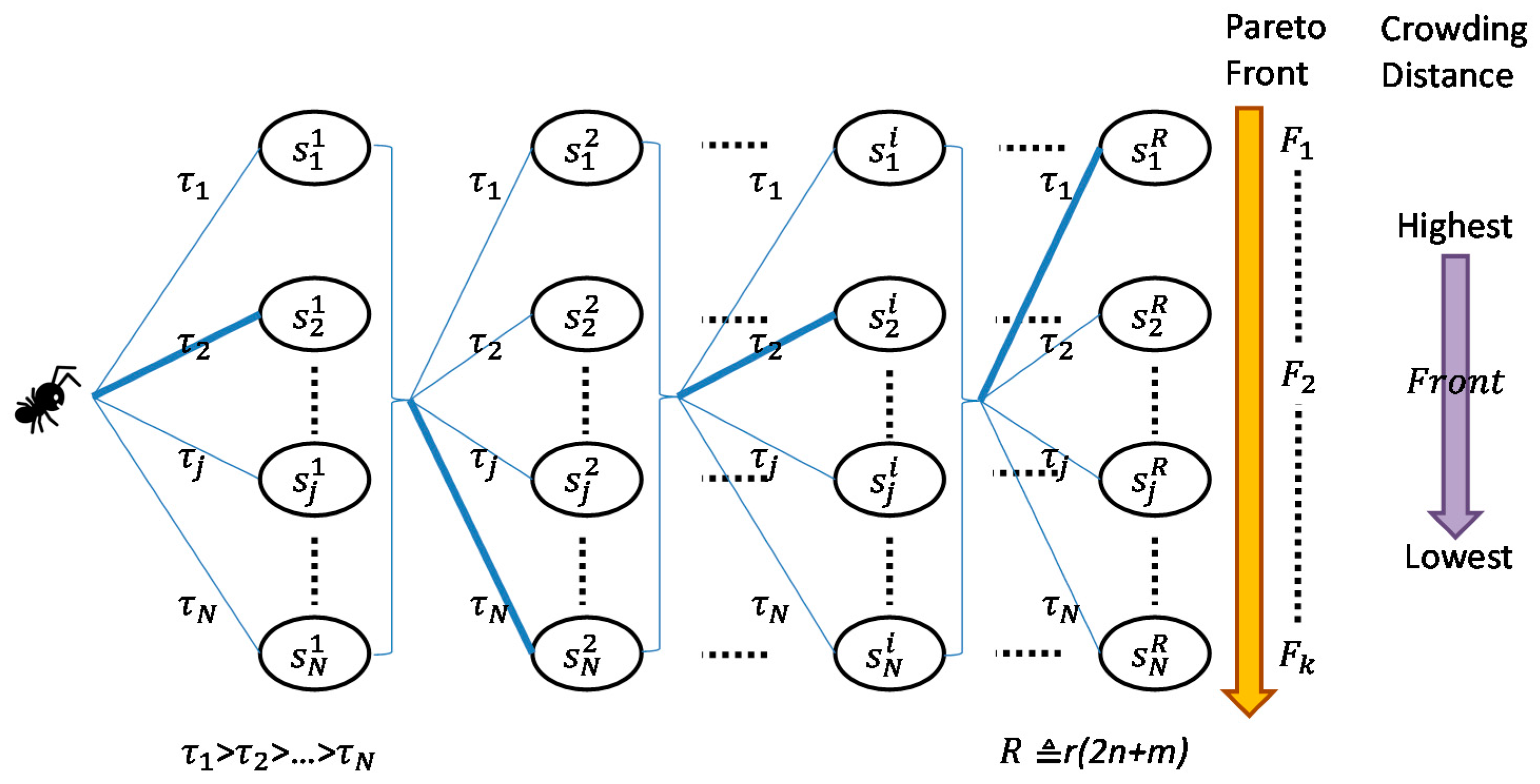

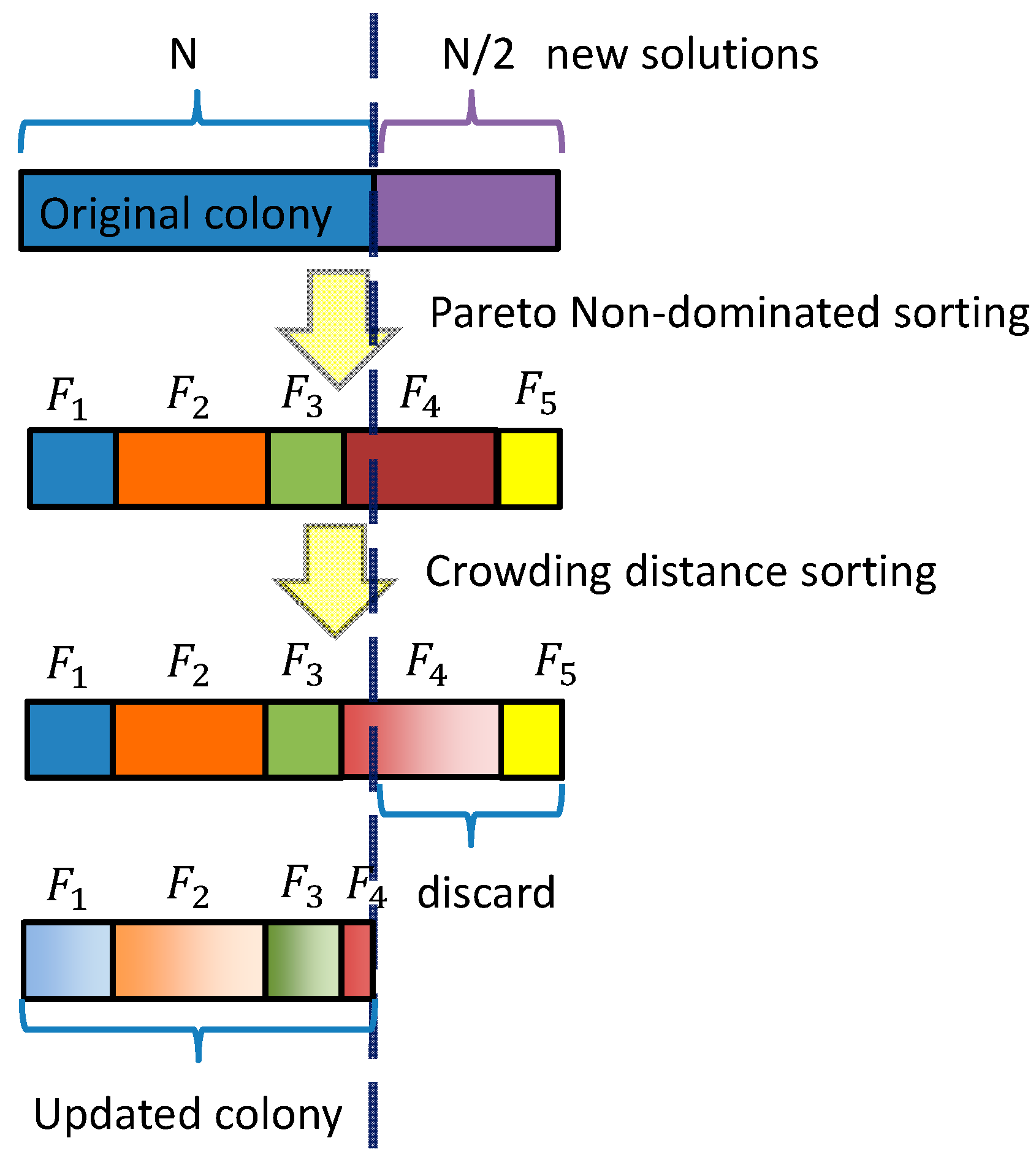

2.2. Multi-Objective Front-Guided Continuous Ant Colony Optimization

2.3. Navigation

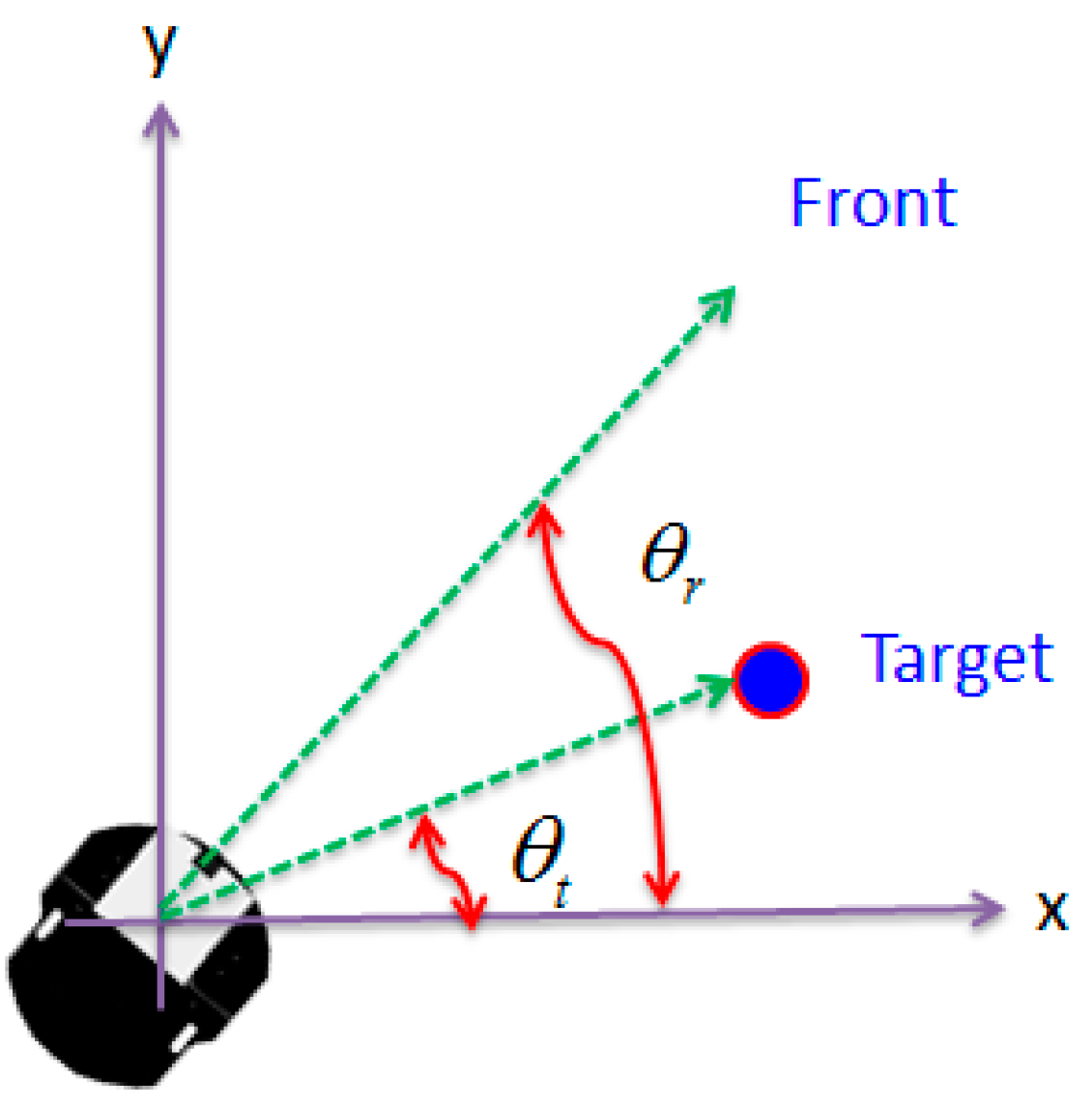

2.3.1. Target Seeking

2.3.2. Behavior Supervisor

3. Results and Discussion

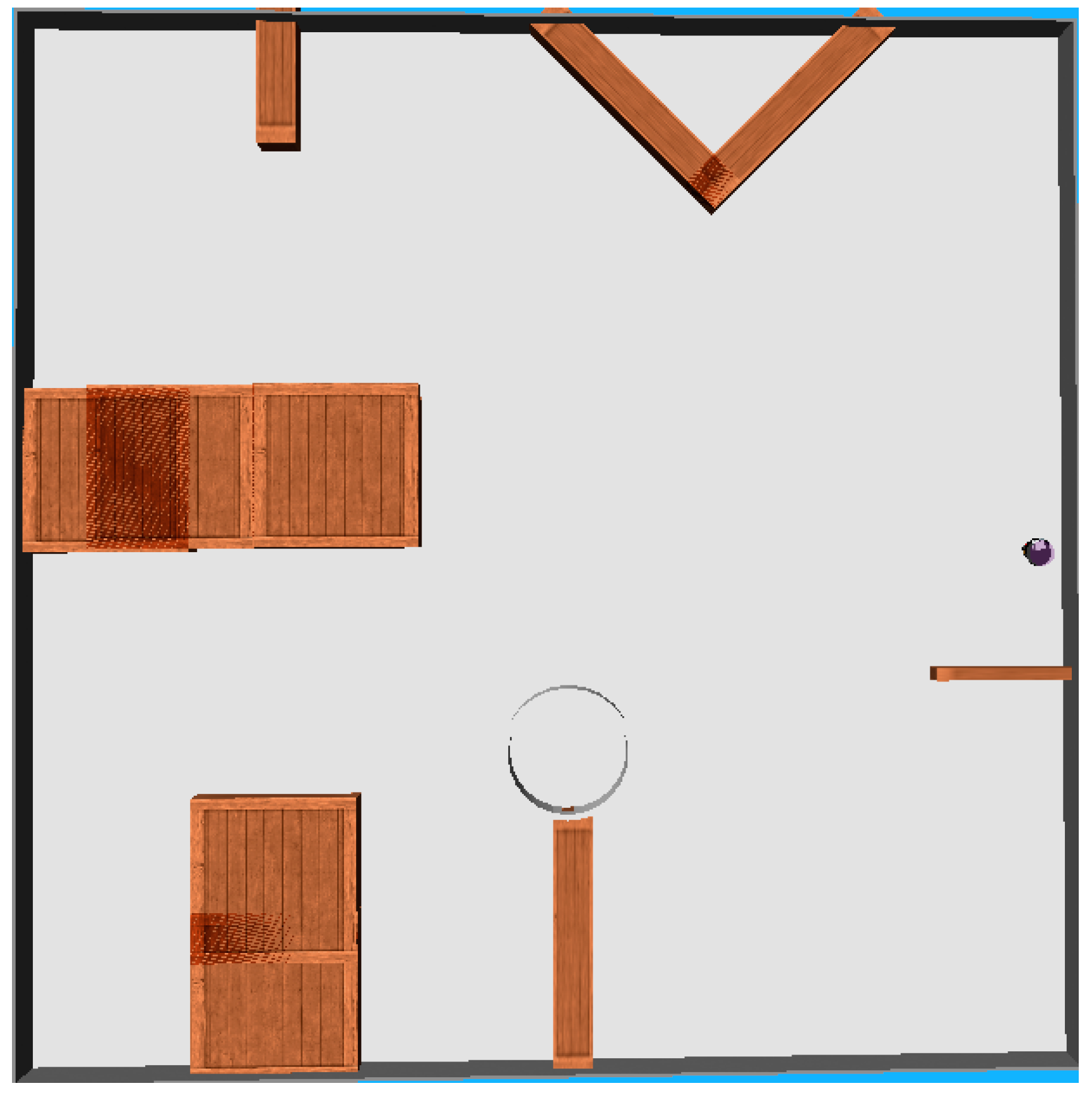

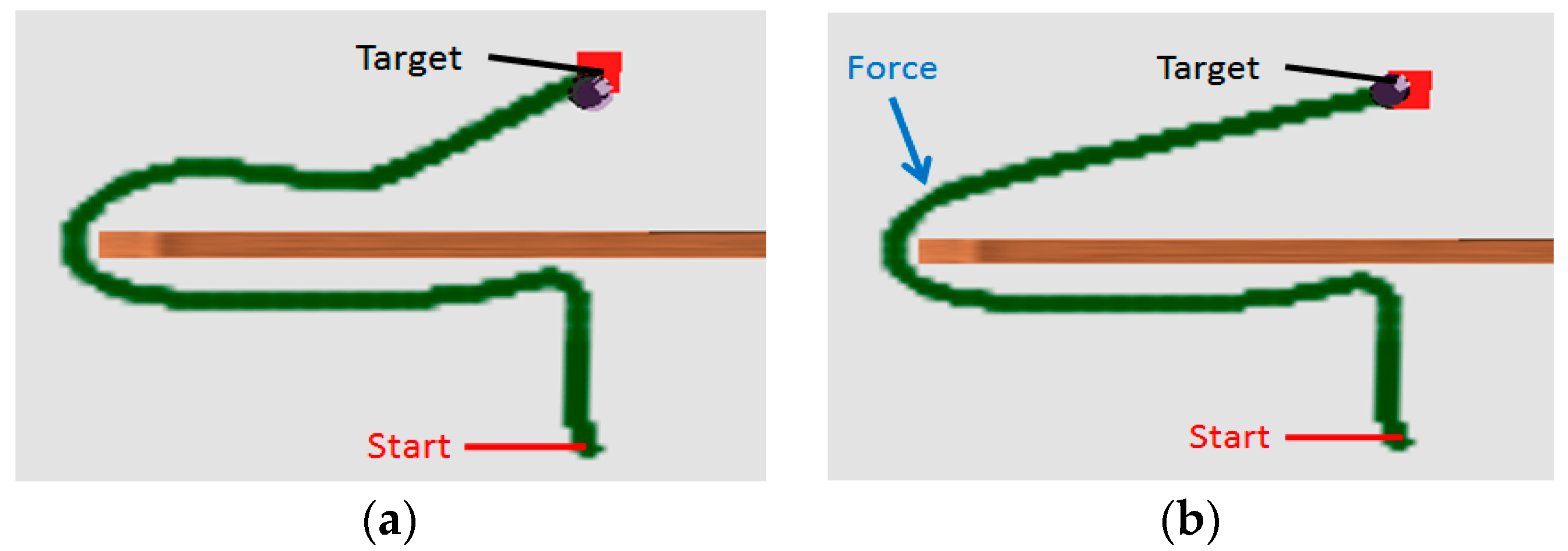

3.1. Simulations

3.2. Experiments

4. Conclusions

Author Contributions

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chou, C.-Y.; Juang, C.-F. Navigation of an Autonomous Wheeled Robot in Unknown Environments Based on Evolutionary Fuzzy Control. Inventions 2018, 3, 3. https://doi.org/10.3390/inventions3010003
