Improved Color Mapping Methods for Multiband Nighttime Image Fusion

Abstract

1. Introduction

2. Overview of Color Fusion Methods

- Statistical methods, resulting in an image in which the statistical properties (e.g., average color, width of the distribution) match those of a reference image;

- Sample-based methods, in which the color transformation is derived from a training set of samples for which the input and output (the reference values) are known.
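The statistical approach can be illustrated with a minimal sketch that shifts and scales each channel of a source image so that its mean and standard deviation match those of a reference image. This is a generic global mean/standard-deviation transfer, assumed here for illustration only, and not the specific statistics-based method evaluated in this paper. The function name `match_statistics`, the representation of images as lists of pixel tuples, and working directly on the three raw channels (rather than in a decorrelated color space) are all assumptions.

```python
from statistics import mean, pstdev


def match_statistics(source, reference):
    """Global per-channel mean/standard-deviation matching (illustrative sketch).

    source, reference: lists of 3-tuples (pixels in some 3-channel color space).
    For non-constant source channels, the output has the per-channel mean and
    population standard deviation of the reference image.
    """
    channels = []
    for c in range(3):
        src = [p[c] for p in source]
        ref = [p[c] for p in reference]
        mu_s, sd_s = mean(src), pstdev(src)
        mu_r, sd_r = mean(ref), pstdev(ref)
        # A constant source channel cannot be stretched; map it to the reference mean.
        scale = sd_r / sd_s if sd_s > 0 else 0.0
        channels.append([(v - mu_s) * scale + mu_r for v in src])
    # Re-interleave the three channel lists into per-pixel tuples.
    return list(zip(*channels))


# Toy example: a two-pixel "night" image and a two-pixel reference image.
night = [(0, 0, 0), (2, 2, 2)]
day = [(10, 20, 30), (14, 20, 40)]
matched = match_statistics(night, day)
```

Because only first- and second-order statistics are transferred, every pixel of a channel receives the same affine mapping; this is what distinguishes statistical methods from the sample-based methods, which derive the mapping from corresponding input/output samples.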

3. Existing and New Color Fusion Methods

3.1. Existing Color Fusion Methods

3.1.1. Statistics Based Method

3.1.2. Sample Based Method

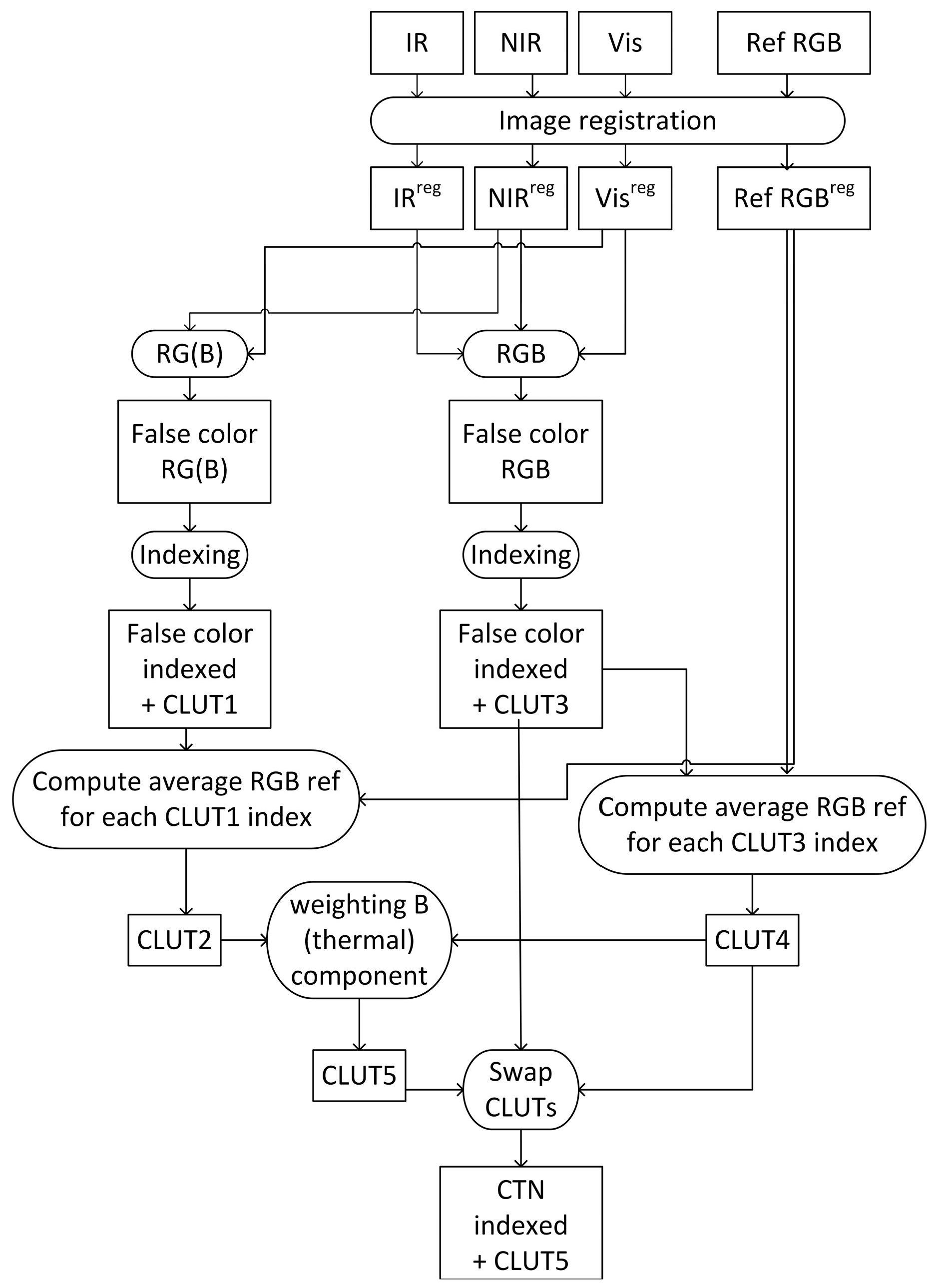

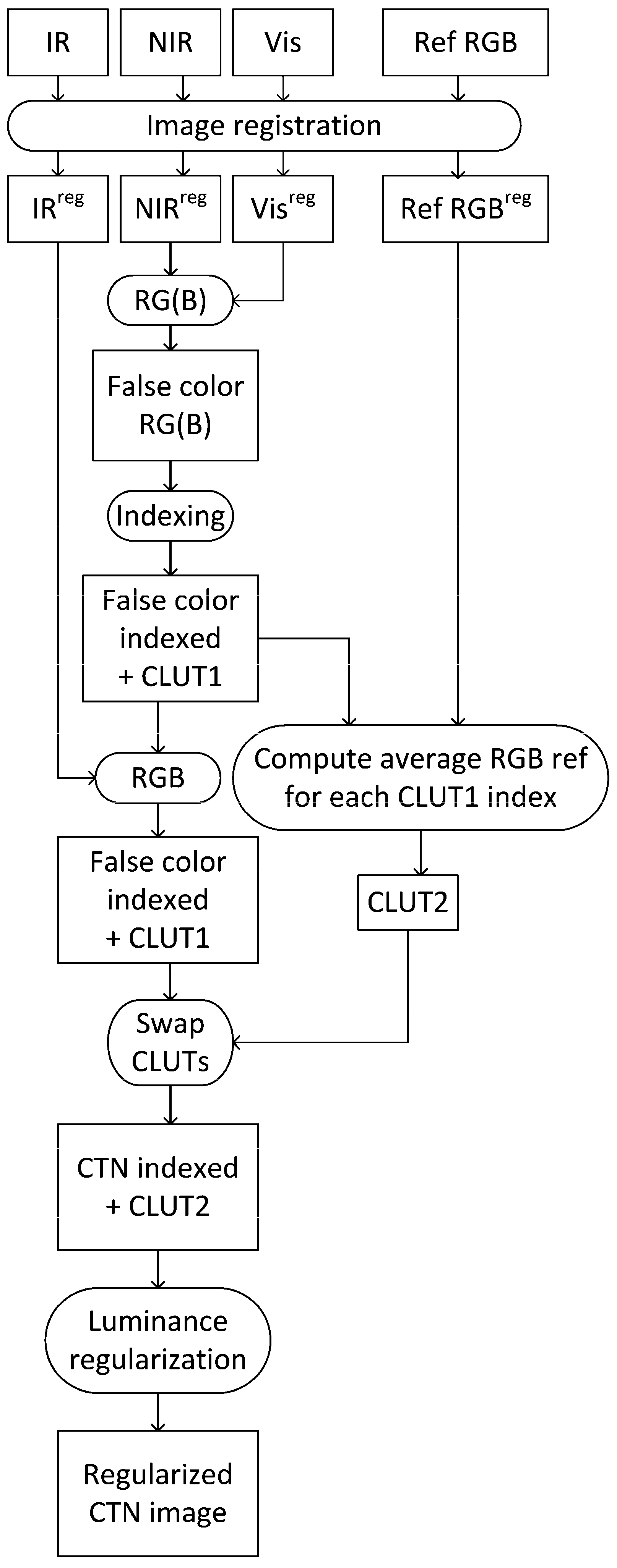

- The individual bands of the multiband sensor images and the daytime color reference image are spatially aligned.

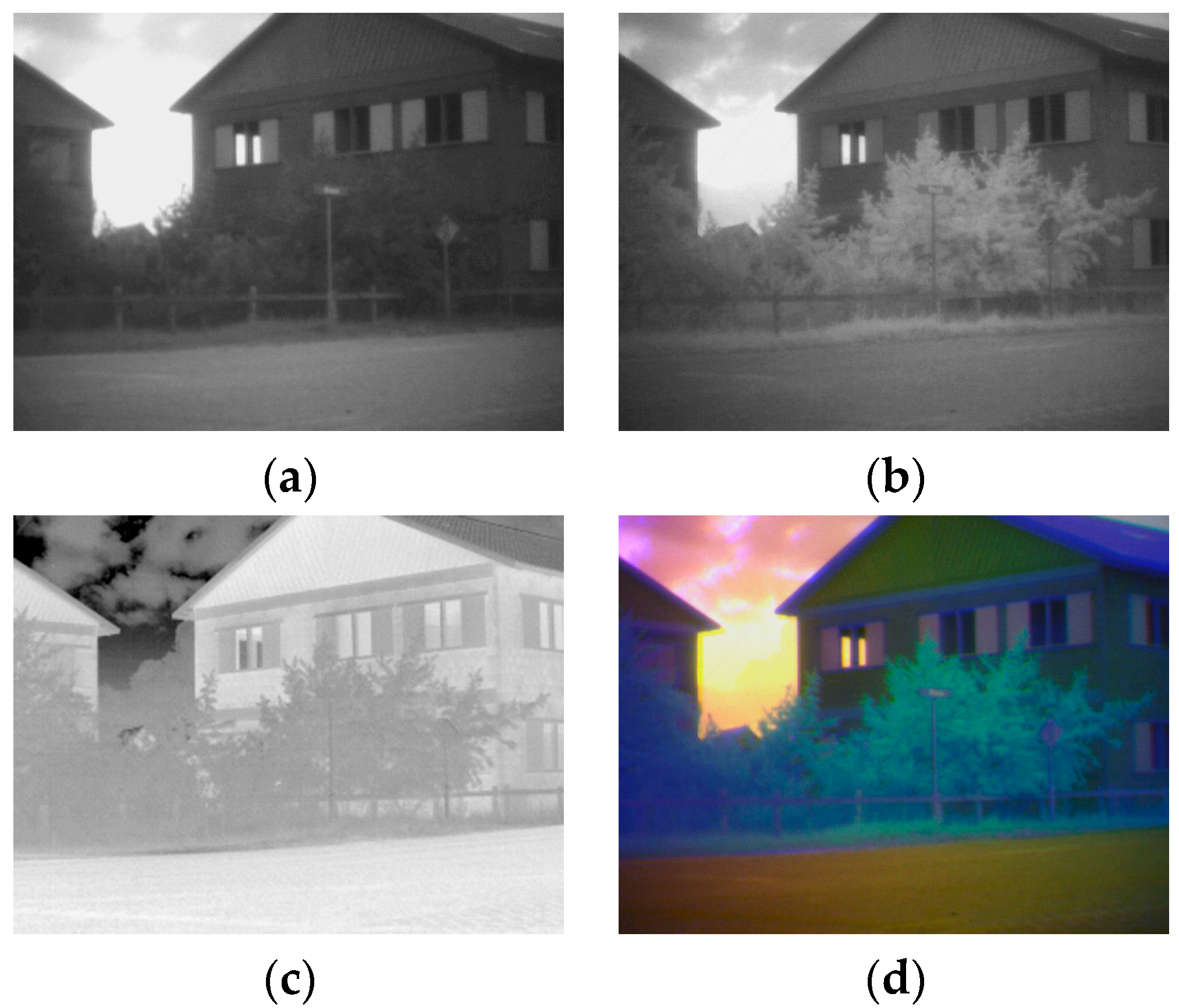

- The different sensor bands are fed into the R, G, and B channels (e.g., Figure 1d) to create an initial false-color fused representation of the multiband sensor image. In principle, it does not matter which band feeds into which channel: this is merely a first presentation of the image and has no influence on the final outcome (the color fused multiband sensor image). To create an initial representation that is as close as possible to the natural daytime image, we adopted the ‘black-is-hot’ setting of the LWIR sensor.

- The false-color fused image is transformed into an indexed image with a corresponding color lookup table (CLUT1) that has a limited number of entries N. This amounts to a cluster analysis in 3-D sensor space with a predefined number of clusters (e.g., standard k-means clustering may be used for the implementation, which also generalizes the approach to multiband sensor imagery with an arbitrary number of bands).

- A new CLUT2 is computed as follows. For a given index d in CLUT1, all pixels in the false-color fused image with index d are identified. Then, the median RGB color value is computed over the corresponding set of pixels in the daytime color reference image and is assigned to index d. Repeating this step for each index in CLUT1 results in a new CLUT2 in which each entry represents the daytime color equivalent of the corresponding false-color entry in CLUT1. Thus, when $I$ represents the set of indices used in the indexed image representation and $d \in I$ represents a given index in $I$, the support $\Omega_d$ of $d$ in the source (false-colored) image $S$ is given by $\Omega_d = \{(i,j) \mid S(i,j) = d\}$, and the new RGB color value $\bar{R}(d)$ for index $d$ is computed as the median color value over the same support in the daytime color reference image $R$ as follows: $\bar{R}(d) = \operatorname{median}\{R(i,j) \mid (i,j) \in \Omega_d\}$.

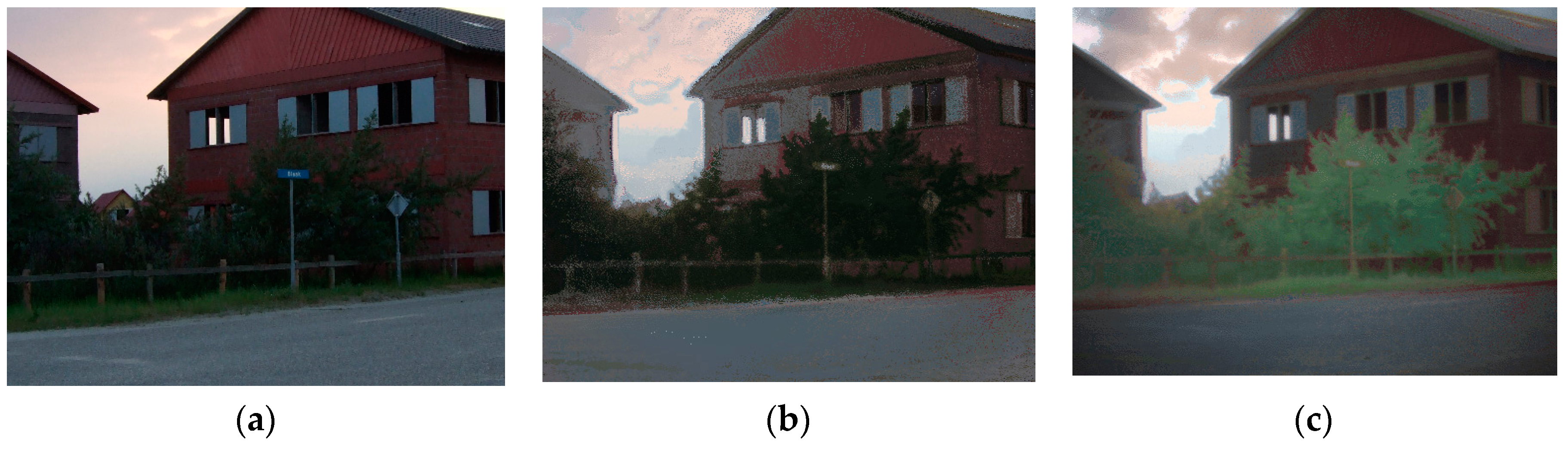

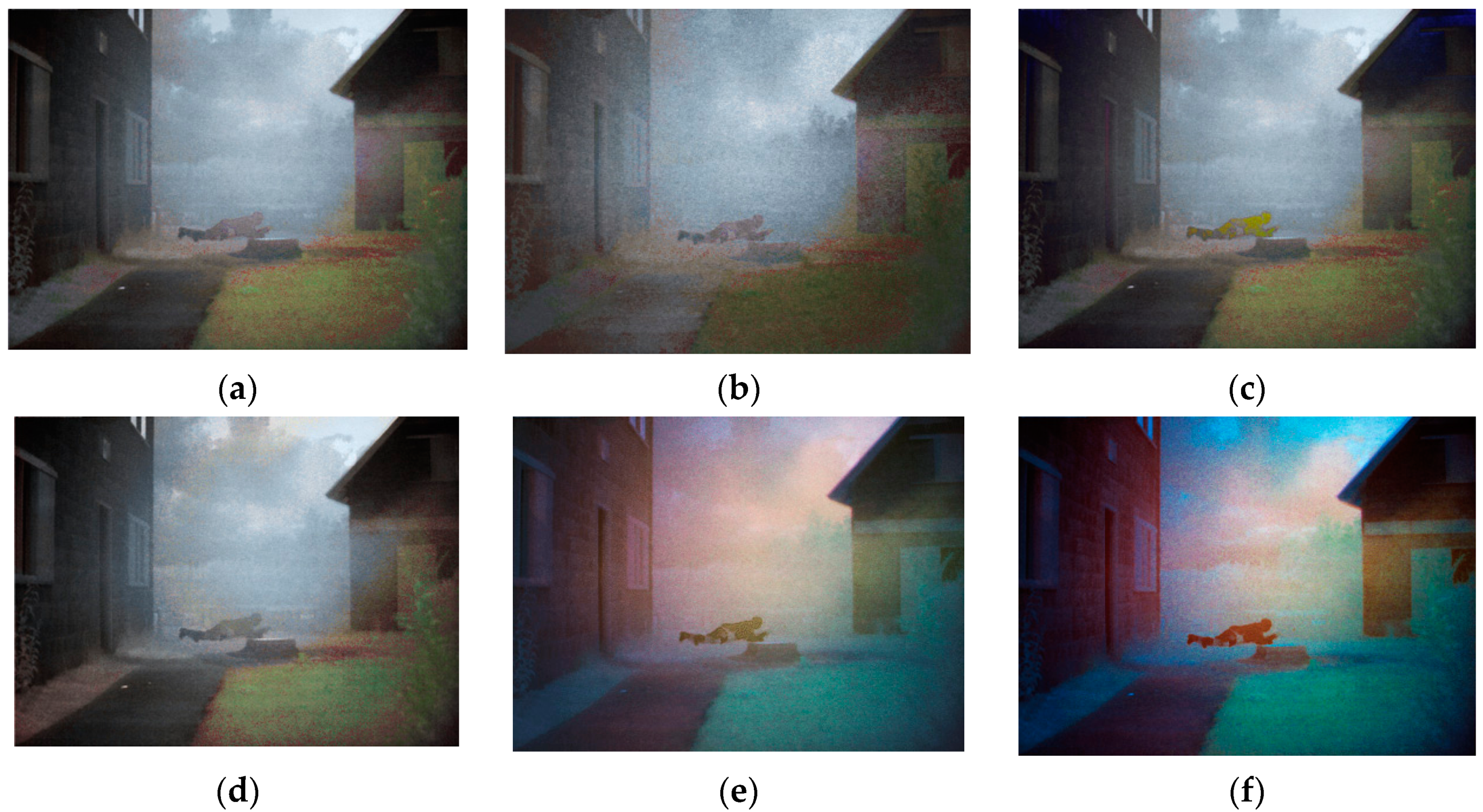

- The color fused image is created by replacing the CLUT1 of the indexed sensor image with the new daytime reference CLUT2. The result of this step may suffer from ‘solarizing’ effects, in which small changes in the input (i.e., the sensor values) lead to large jumps in the output luminance. Such effects are undesirable and unnatural, and they lead to clutter (see Figure 2b).

- To eliminate these undesirable effects, a final step was included in which the luminance channel is adapted such that it varies monotonically with increasing input values. To this end, the luminance of each entry is made proportional to the Euclidean distance in RGB space of the corresponding entry in the initial representation (the sensor values; see Figure 2c).
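The clustering, CLUT2 construction, and lookup-table swap steps listed above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: pixels are lists of tuples rather than image arrays, the clustering is a basic Lloyd k-means with random initialization, the "median RGB color value" is taken channel by channel, and the names `kmeans`, `build_clut2`, and `apply_clut` (as well as the black fallback for an unused index) are hypothetical.

```python
import random
from statistics import median


def kmeans(points, n_clusters, n_iter=20, seed=0):
    """Plain Lloyd k-means on a list of equal-length tuples.

    Returns (labels, centers); labels[i] is the cluster index of points[i],
    so labels is the indexed image and centers plays the role of CLUT1.
    """
    rng = random.Random(seed)
    centers = rng.sample(points, n_clusters)  # initial centers drawn from the data
    labels = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: nearest center by squared Euclidean distance.
        labels = [
            min(range(n_clusters),
                key=lambda k: sum((a - b) ** 2 for a, b in zip(p, centers[k])))
            for p in points
        ]
        # Update step: move each center to the mean of its members.
        for k in range(n_clusters):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                centers[k] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centers


def build_clut2(labels, reference, n_entries):
    """CLUT2[d]: channel-wise median of the reference colors over the support of index d."""
    clut2 = []
    for d in range(n_entries):
        support = [ref for ref, lab in zip(reference, labels) if lab == d]
        if support:
            clut2.append(tuple(median(ch) for ch in zip(*support)))
        else:
            clut2.append((0, 0, 0))  # hypothetical fallback for an index with empty support
    return clut2


def apply_clut(labels, clut):
    """Swap the lookup table: each pixel takes the CLUT entry of its index."""
    return [clut[lab] for lab in labels]


# Toy example: two well-separated groups of false-color pixels with
# spatially aligned daytime reference colors.
false_color = [(0, 0, 0), (1, 1, 1), (0, 1, 0),
               (100, 100, 100), (101, 100, 99), (99, 101, 100)]
reference = [(10, 200, 10), (12, 198, 11), (11, 202, 9),
             (120, 120, 255), (118, 122, 250), (119, 121, 252)]
labels, clut1 = kmeans(false_color, n_clusters=2)
clut2 = build_clut2(labels, reference, n_entries=2)
fused = apply_clut(labels, clut2)
```

Because the mapping is stored as a table indexed by cluster, the expensive part (clustering and median computation) happens once on a training pair, while applying it to new frames reduces to assigning each pixel to its nearest CLUT1 entry and reading off CLUT2.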

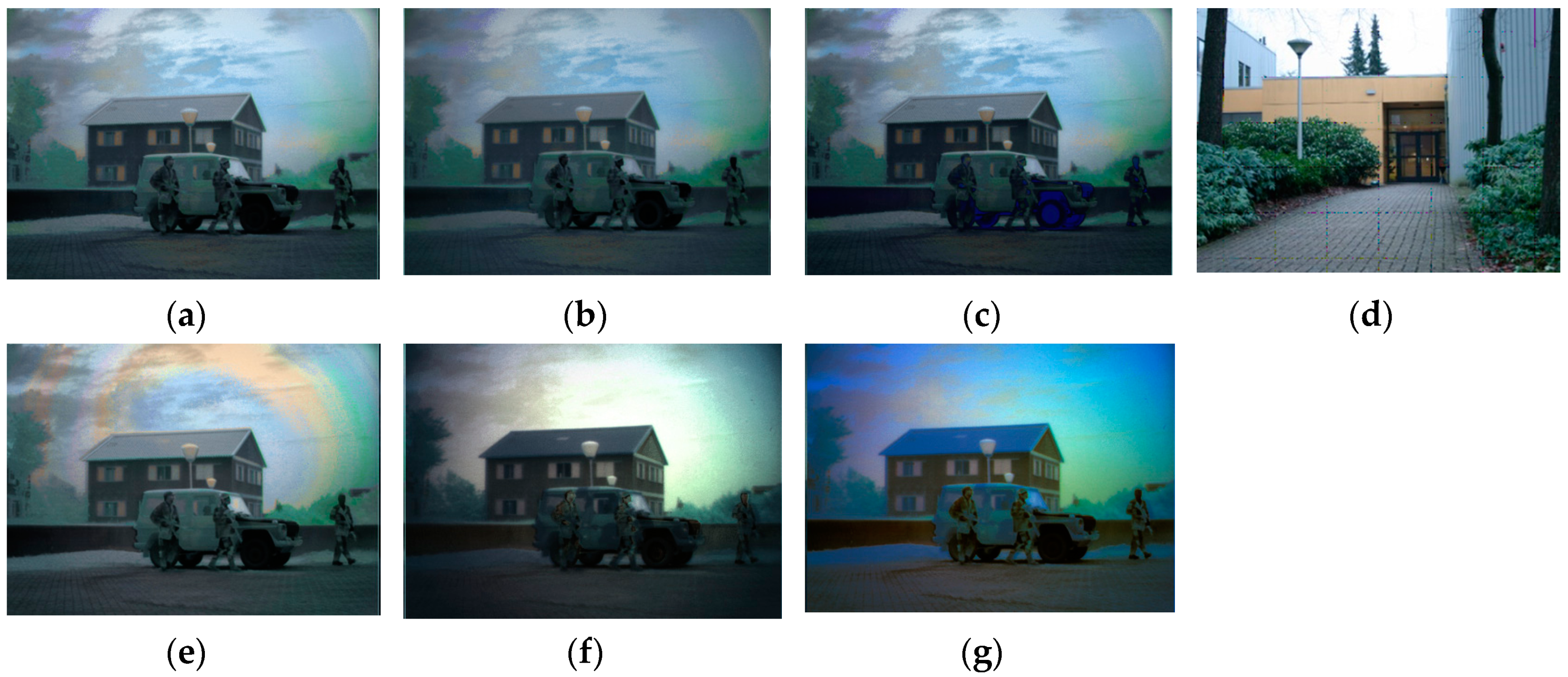
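The final luminance-adaptation step can be sketched as a per-entry rescaling of CLUT2. Several details are assumptions made for illustration only: colors lie in [0, 1], luminance is computed with ITU-R BT.709 weights, the Euclidean distance is taken from the origin (black) of the sensor RGB space, the proportionality constant is chosen so that the sensor value (1, 1, 1) maps to luminance 1, and each color keeps its channel ratios because all three channels are scaled by the same factor. The names `luminance`, `monotone_luminance`, and `LUMA_WEIGHTS` are hypothetical.

```python
import math

# Assumed luminance weights (ITU-R BT.709); the text does not specify a luminance formula.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    """Weighted sum of R, G, B (values assumed to lie in [0, 1])."""
    return sum(w * v for w, v in zip(LUMA_WEIGHTS, rgb))


def monotone_luminance(clut1, clut2):
    """Rescale each CLUT2 color so that its luminance is proportional to the
    Euclidean norm of the corresponding CLUT1 (sensor-value) entry.

    The constant k = 1/sqrt(3) maps sensor value (1, 1, 1) to luminance 1.
    """
    k = 1.0 / math.sqrt(3.0)
    adjusted = []
    for sensor, color in zip(clut1, clut2):
        target = k * math.sqrt(sum(v * v for v in sensor))
        current = luminance(color)
        if current > 0:
            # Same factor on all channels: keeps the channel ratios of the color.
            scaled = [v * target / current for v in color]
        else:
            # A black entry has no channel ratios to keep; use a neutral gray instead.
            scaled = [target] * 3
        # Clip to [0, 1]; clipping can lower the achieved luminance of very bright entries.
        adjusted.append(tuple(min(1.0, max(0.0, v)) for v in scaled))
    return adjusted


# Toy example: the second sensor entry is brighter, but its CLUT2 color is darker
# (a luminance inversion of the kind that produces solarizing effects).
clut1_demo = [(0.2, 0.2, 0.2), (0.6, 0.6, 0.6)]
clut2_demo = [(0.9, 0.9, 0.1), (0.1, 0.2, 0.1)]
clut3_demo = monotone_luminance(clut1_demo, clut2_demo)
```

After the adjustment, the ordering of output luminance follows the ordering of sensor-value magnitude, so neighboring sensor values can no longer produce the abrupt luminance jumps described for the unadjusted CLUT2.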

3.2. New Color Fusion Methods

3.2.1. Luminance-From-Fit

3.2.2. Salient-Hot-Targets

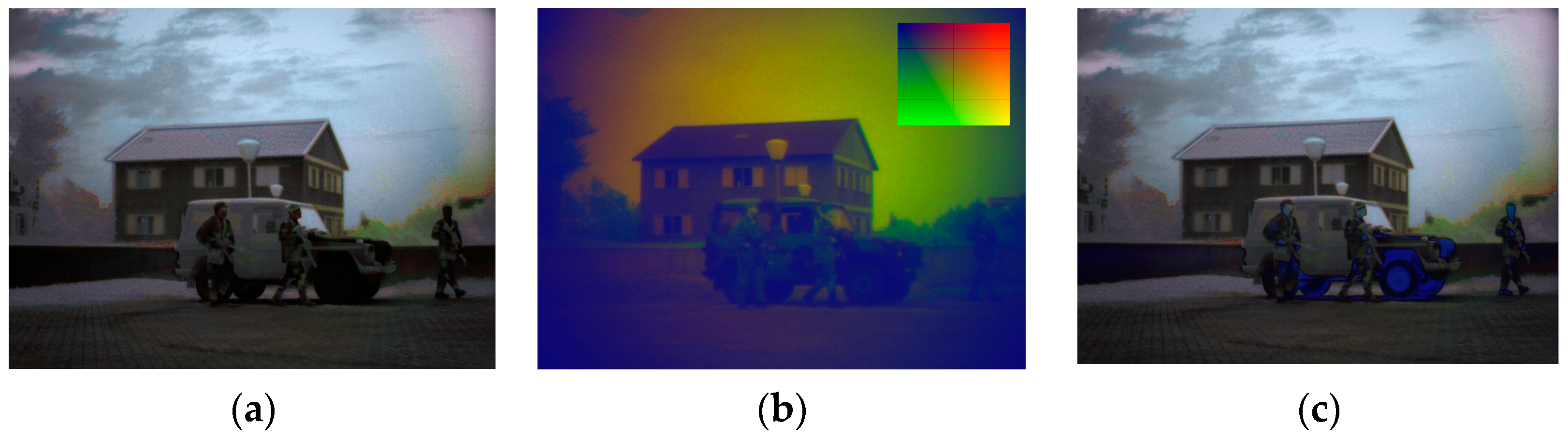

3.2.3. Rigid 3D-Fit

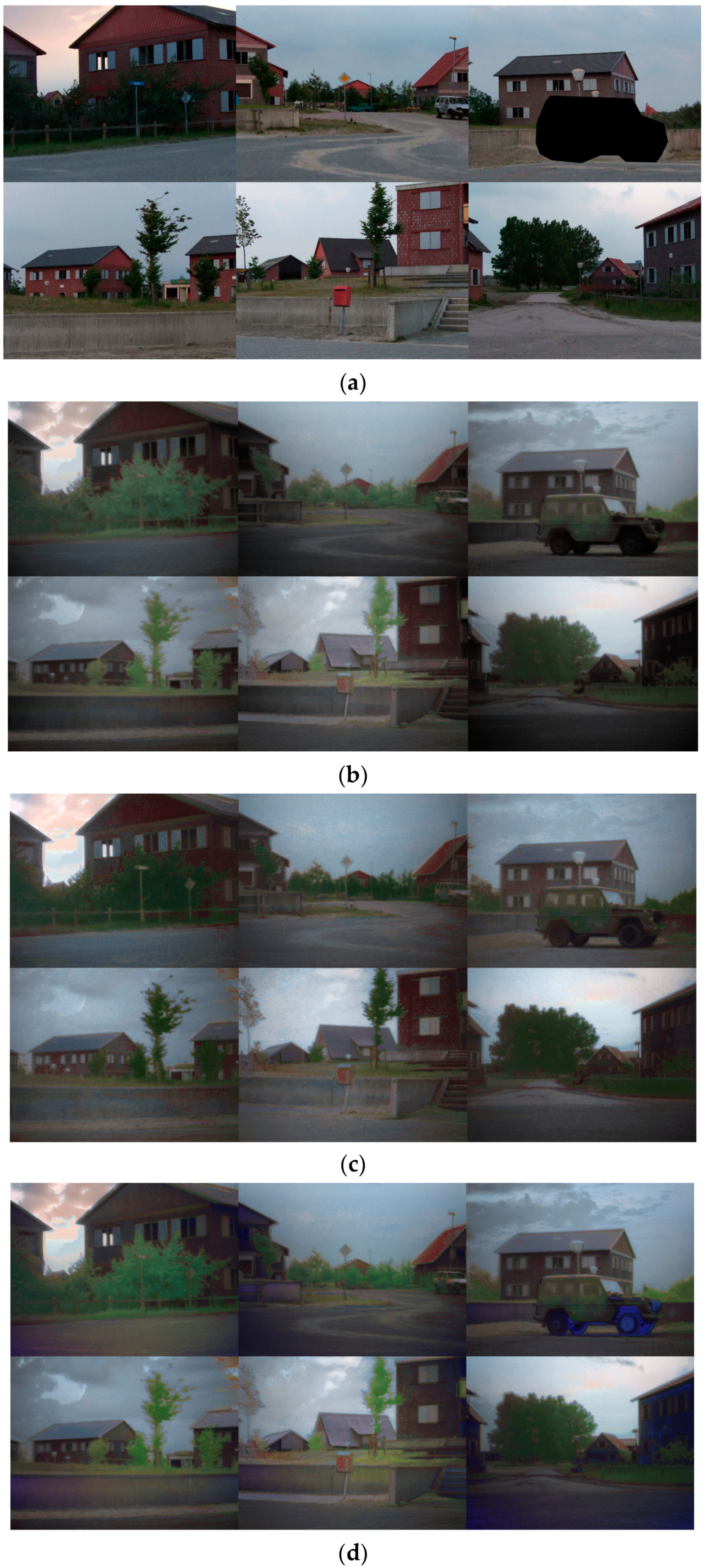

4. Qualitative Comparison of Color Fusion Methods

5. Quantitative Evaluation of Color Fusion Methods

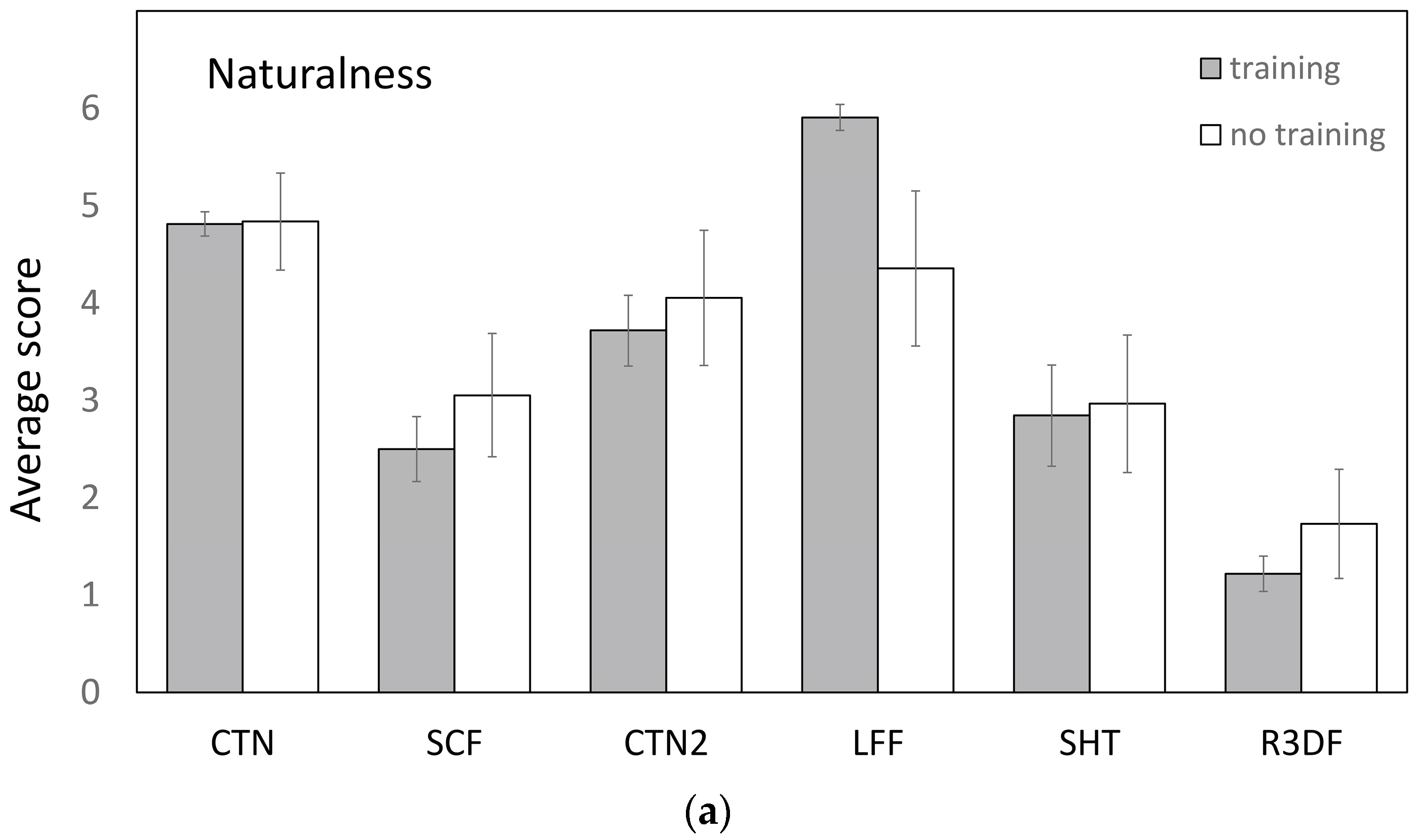

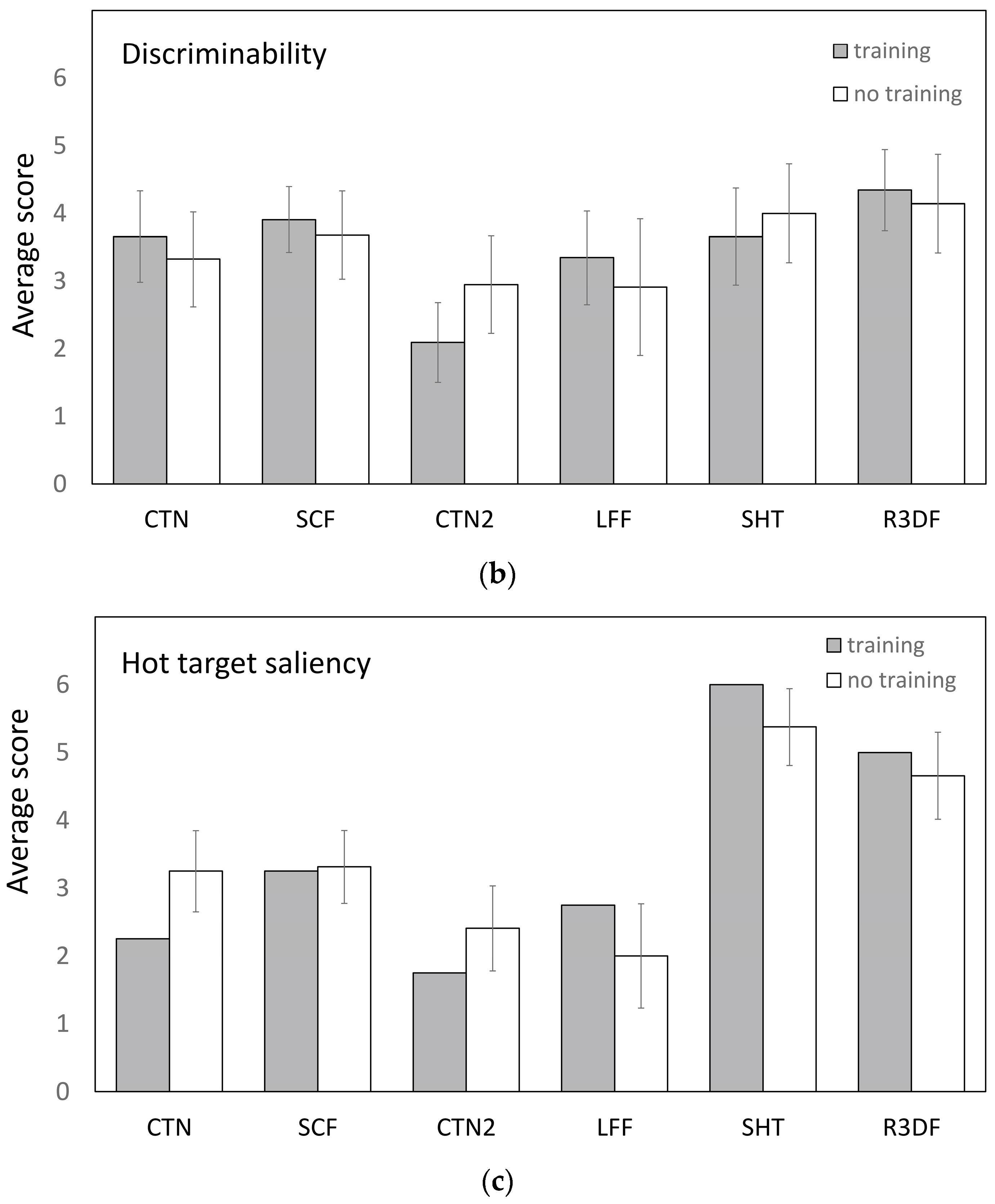

5.1. Subjective Ranking Experiment

5.1.1. Methods

5.1.2. Results

5.2. Objective Quality Metrics

5.2.1. Methods

5.2.2. Results

6. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Method | ICM (no-reference) | CCM (no-reference) | NC (no-reference) | FSIMc (full-reference) | CNM (full-reference) | OEI (full-reference) |
|---|---|---|---|---|---|---|
| CTN | 0.382 (0.008) | 4.6 (1.2) | 815 (43) | 0.78 (0.02) | 0.82 (0.02) | 0.73 (0.02) |
| SCF | 0.370 (0.006) | 3.6 (1.0) | 1589 (117) | 0.77 (0.02) | 0.76 (0.02) | 0.72 (0.02) |
| CTN2 | 0.380 (0.008) | 4.4 (1.2) | 938 (102) | 0.78 (0.02) | 0.82 (0.02) | 0.73 (0.02) |
| LFF | 0.358 (0.009) | 4.5 (1.2) | 860 (64) | 0.80 (0.01) | 0.82 (0.02) | 0.74 (0.02) |
| SHT | 0.349 (0.007) | 4.4 (1.2) | 1247 (241) | 0.78 (0.01) | 0.82 (0.02) | 0.72 (0.02) |
| R3DF | 0.341 (0.011) | 4.1 (1.1) | 2630 (170) | 0.77 (0.01) | 0.74 (0.02) | 0.70 (0.03) |

| Criterion | ICM (no-reference) | CCM (no-reference) | NC (no-reference) | FSIMc (full-reference) | CNM (full-reference) | OEI (full-reference) |
|---|---|---|---|---|---|---|
| Naturalness | 0.66 | 0.64 | 0.92 | 0.81 | 0.81 | 0.95 |
| Discriminability | 0.68 | 0.45 | 0.77 | 0.67 | 0.66 | 0.84 |
| Saliency of hot targets | 0.68 | 0.16 | 0.58 | 0.65 | 0.32 | 0.77 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hogervorst, M.A.; Toet, A. Improved Color Mapping Methods for Multiband Nighttime Image Fusion. J. Imaging 2017, 3, 36. https://doi.org/10.3390/jimaging3030036
