RiskLogitboost Regression for Rare Events in Binary Response: An Econometric Approach

Abstract

1. Introduction

- (i) Some models are biased towards the majority class or underestimate the minority class. Some classifiers are suited only to balanced data [28,29] or treat the minority class as noise [30]. Moreover, some popular tree-based and boosting-based algorithms have been shown to achieve high predictive performance only when it is measured with evaluation metrics that treat all observations as equally important [31].

- (ii) Unlike econometric methods, several machine learning methods are regarded as black boxes in terms of interpretation. They are frequently summarized by a single metric, such as classification accuracy, used as the sole description of a complex task [32], and they cannot provide robust explanations in high-risk environments.

2. Background

2.1. Boosting Methods

| Algorithm 1.Gradient Boosting Machine |

| 1. |

| 2. For d = 1 to D do: |

| 2.1 |

| 2.2 |

| 2.3. . |

| 2.4 . |

| 3. End for |

| Algorithm 2. Tree Gradient Boost |

| 1. , where is the mean of . |

| 2. For d = 1 to D do: |

| 2.1 |

| 2.2 ) |

| 2.3 . |

| 2.4 |

| 3. End for |
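As a rough illustration of the loop structure shared by Algorithms 1 and 2 (an initial constant fit at the mean of the response, followed by D rounds of fitting a base learner and updating the model), the sketch below implements a textbook gradient boosting routine with squared-error loss and one-split regression trees. The loss, the `shrinkage` parameter, the function names and the toy data are all assumptions chosen for illustration; they are not the authors' specification.

```python
def fit_stump(x, r):
    """Fit a one-split regression tree to residuals r on a single feature x.

    Assumes x contains at least two distinct values.
    """
    best = None
    for t in sorted(set(x))[:-1]:          # candidate thresholds
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((ri - ml) ** 2 for ri in left)
               + sum((ri - mr) ** 2 for ri in right))
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    _, t, ml, mr = best
    return lambda xi: ml if xi <= t else mr


def gradient_boost(x, y, n_rounds=50, shrinkage=0.1):
    """Gradient boosting with squared-error loss (illustrative assumption)."""
    f0 = sum(y) / len(y)                   # step 1: start from the mean of y
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_rounds):              # step 2: for d = 1 to D
        # negative gradient of the half squared-error loss = residuals
        resid = [yi - pi for yi, pi in zip(y, pred)]
        h = fit_stump(x, resid)            # fit the base learner
        stumps.append(h)
        # update the fitted values with a shrunken step
        pred = [pi + shrinkage * h(xi) for xi, pi in zip(x, pred)]
    return lambda xi: f0 + shrinkage * sum(h(xi) for h in stumps)


# Toy data (hypothetical): the response switches from 0 to 1 after x = 3.
x = [1, 2, 3, 4, 5, 6]
y = [0, 0, 0, 1, 1, 1]
model = gradient_boost(x, y)
```

With this toy data every round picks the same split, so the residuals shrink geometrically and the fitted values approach 0 and 1 on either side of it.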

2.2. Penalized Regression Methods

| Algorithm 3. Ridge Logistic Regression. |

| 1. Minimizing the negative likelihood function: |

| 2. Penalizing: + |

| Algorithm 4. Lasso Logistic Regression. |

| 1. Minimizing the negative likelihood function: |

| 2. Penalizing: + . |
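To make the two-step description in Algorithms 3 and 4 concrete (a negative log-likelihood plus a penalty term), here is a minimal sketch of the usual ridge and lasso objectives for logistic regression. It assumes the first column of `X` is a constant 1 for the intercept and that the intercept is left unpenalized; the function names, the penalty weight `lam` and the toy data are hypothetical.

```python
import math


def neg_log_likelihood(beta, X, y):
    """Negative Bernoulli log-likelihood of a logistic model."""
    nll = 0.0
    for xi, yi in zip(X, y):
        eta = sum(b * v for b, v in zip(beta, xi))   # linear predictor
        # log(1 + exp(eta)) written in a numerically stable form
        nll += max(eta, 0.0) + math.log1p(math.exp(-abs(eta))) - yi * eta
    return nll


def ridge_objective(beta, X, y, lam):
    """Negative log-likelihood plus an L2 penalty on the slopes."""
    return neg_log_likelihood(beta, X, y) + lam * sum(b * b for b in beta[1:])


def lasso_objective(beta, X, y, lam):
    """Negative log-likelihood plus an L1 penalty on the slopes."""
    return neg_log_likelihood(beta, X, y) + lam * sum(abs(b) for b in beta[1:])


# Toy data (hypothetical): intercept column plus one covariate.
X = [[1.0, 0.5], [1.0, -1.2], [1.0, 2.0], [1.0, 0.1]]
y = [1, 0, 1, 0]
beta = [0.0, 2.0]
ridge_value = ridge_objective(beta, X, y, lam=0.5)
lasso_value = lasso_objective(beta, X, y, lam=0.5)
```

The only difference between the two objectives is the norm applied to the slopes: the squared penalty shrinks coefficients smoothly, while the absolute-value penalty can set some of them exactly to zero.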

2.3. Interpretable Machine Learning

3. The Rare Event Problem with RiskLogitboost Regression

| Algorithm 5. Logitboost |

| 1. , |

| are the probability estimates. |

| 2. For d= 1 to D do: |

| 2.1 |

| 2.2 |

| 2.3 |

| 2.4 and |

| = |

| 3. End for |
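For orientation, the following sketch follows the standard two-class LogitBoost iteration of Friedman, Hastie and Tibshirani: compute a working response and weights from the current probabilities, fit the base learner by weighted least squares, and add half of the fit to the model. The base learner here is a single-covariate linear fit, and the function names, the weight floor and the toy data are assumptions for illustration rather than the authors' implementation.

```python
import math


def weighted_linear_fit(x, z, w):
    """Weighted least-squares fit of z on one covariate x (with intercept)."""
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    zbar = sum(wi * zi for wi, zi in zip(w, z)) / sw
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    sxz = sum(wi * (xi - xbar) * (zi - zbar) for wi, xi, zi in zip(w, x, z))
    slope = sxz / sxx
    return zbar - slope * xbar, slope


def predict_proba(a, b, xi):
    """p(x) = exp(F) / (exp(F) + exp(-F)) with F(x) = a + b * x."""
    return 1.0 / (1.0 + math.exp(-2.0 * (a + b * xi)))


def logitboost(x, y, n_rounds=20):
    a_total, b_total = 0.0, 0.0            # F(x) = 0 initially
    p = [0.5] * len(y)                     # initial probability estimates
    for _ in range(n_rounds):
        # weights p(1 - p), floored to avoid division by zero
        w = [max(pi * (1.0 - pi), 1e-10) for pi in p]
        # working (transformed) response
        z = [(yi - pi) / wi for yi, pi, wi in zip(y, p, w)]
        a, b = weighted_linear_fit(x, z, w)
        a_total += 0.5 * a                 # F <- F + f / 2
        b_total += 0.5 * b
        p = [predict_proba(a_total, b_total, xi) for xi in x]
    return a_total, b_total


# Toy data (hypothetical, deliberately not linearly separable).
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [0, 0, 1, 0, 1, 0, 1, 1]
a, b = logitboost(x, y)
```

With a linear base learner each round coincides with a Newton step for ordinary logistic regression, which is why the fitted probabilities settle at the maximum likelihood solution on this toy example.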

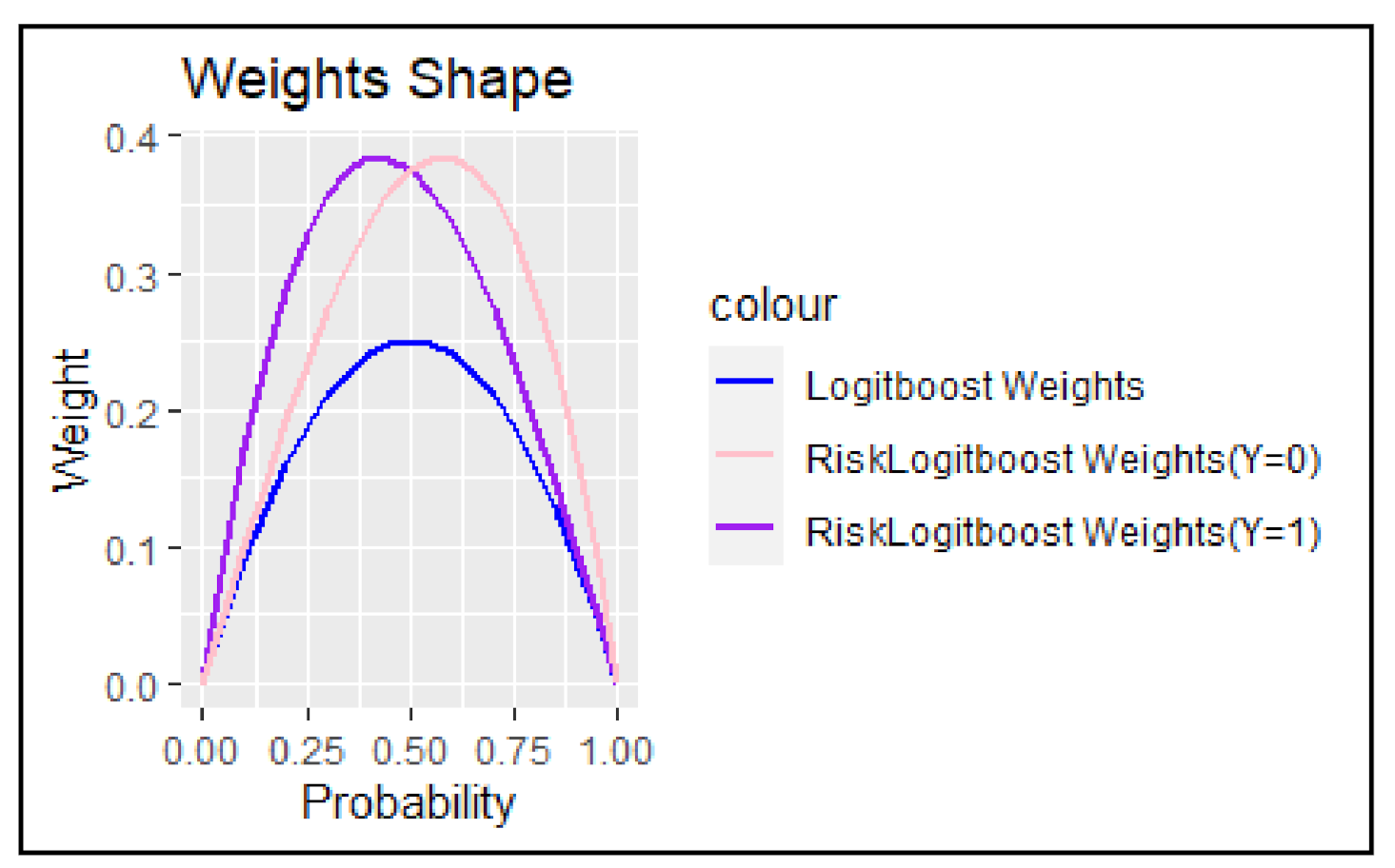

3.1. RiskLogitboost Regression Weighting Mechanism to Improve Rare-Class Learning

3.2. Bias Correction with Weights

3.3. RiskLogitboost Regression

| Algorithm 6. RiskLogitboost regression |

| 1. = 0, |

| are the probability estimates. |

| 2. For d = 1 to D do: |

| 2.1 |

| 2.2 |

| 2.3 |

| 2.4 |

| 2.5 |

| 2.6 . |

| 2.7 |

| 3. End For |

| 4. |

| 5. p = 1, …, P. |

| 6. Correcting Bias: . |

4. Illustrative Data

5. Discussion of Results

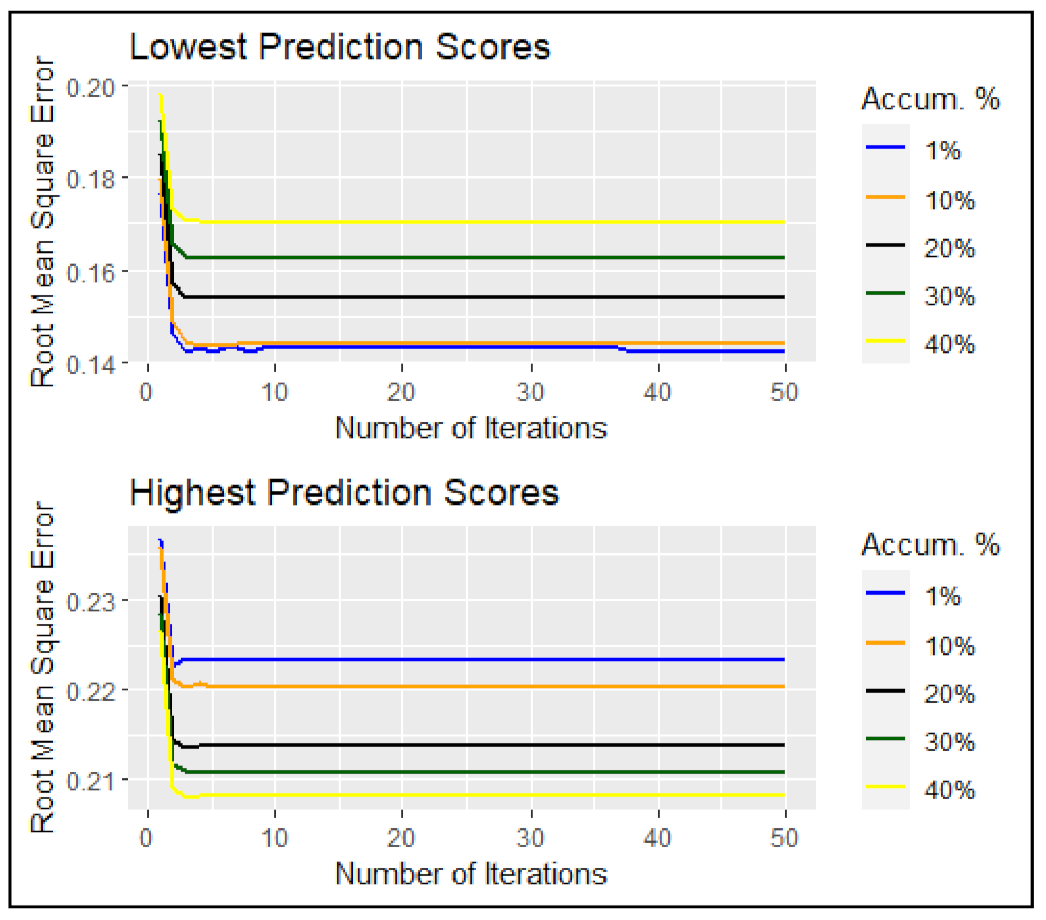

5.1. Predictive Performance of Extremes

5.2. Interpretable RiskLogitboost Regression

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Computation of as Transformed Response

Appendix B. Computation of Weights

References

1. Wei, W.; Li, J.; Cao, L.; Ou, Y.; Chen, J. Effective detection of sophisticated online banking fraud on extremely imbalanced data. World Wide Web 2013, 16, 449–475.

2. Jiang, C.; Wang, Z.; Wang, R.; Ding, Y. Loan default prediction by combining soft information extracted from descriptive text in online peer-to-peer lending. Ann. Oper. Res. 2018, 266, 511–529.

3. Barboza, F.; Kimura, H.; Altman, E. Machine learning models and bankruptcy prediction. Expert Syst. Appl. 2017, 83, 405–417.

4. Zaremba, A.; Czapkiewicz, A. Digesting anomalies in emerging European markets: A comparison of factor pricing models. Emerg. Mark. Rev. 2017, 31, 1–15.

5. Verbeke, W.; Martens, D.; Baesens, B. Social network analysis for customer churn prediction. Appl. Soft Comput. 2014, 14, 431–446.

6. Ayuso, M.; Guillen, M.; Pérez-Marín, A.M. Time and distance to first accident and driving patterns of young drivers with pay-as-you-drive insurance. Accid. Anal. Prev. 2014, 73, 125–131.

7. King, G.; Zeng, L. Logistic regression in rare events data. Political Anal. 2001, 9, 137–163.

8. Maalouf, M.; Trafalis, T.B. Robust weighted kernel Logistic regression in imbalanced and rare events data. Comput. Stat. Data Anal. 2011, 55, 168–183.

9. Pesantez-Narvaez, J.; Guillen, M. Penalized Logistic regression to improve predictive capacity of rare events in surveys. J. Intell. Fuzzy Syst. 2020, 1–11.

10. Maalouf, M.; Mohammad, S. Weighted logistic regression for large-scale imbalanced and rare events data. Knowl. Based Syst. 2014, 59, 142–148.

11. Rao, V.; Maulik, R.; Constantinescu, E.; Anitescu, M. A Machine-Learning-Based Importance Sampling Method to Compute Rare Event Probabilities. In Computational Science—ICCS 2020; Krzhizhanovskaya, V., Závodszky, G., Lees, M., Dongarra, J., Sloot, P., Brissos, S., Texeira, J., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12142.

12. Kuklev, E.A.; Shapkin, V.S.; Filippov, V.L.; Shatrakov, Y.G. Solving the Rare Events Problem with the Fuzzy Sets Method. In Aviation System Risks and Safety; Springer: Singapore, 2019.

13. Kamalov, F.; Denisov, D. Gamma distribution-based sampling for imbalanced data. Knowl. Based Syst. 2020, 207, 106368.

14. Cook, S.J.; Hays, J.C.; Franzese, R.J. Fixed effects in rare events data: A penalized maximum likelihood solution. Political Sci. Res. Methods 2020, 8, 92–105.

15. Carpenter, D.P.; Lewis, D.E. Political learning from rare events: Poisson inference, fiscal constraints, and the lifetime of bureaus. Political Anal. 2004, 201–232.

16. Bo, L.; Wang, Y.; Yang, X. Markov-modulated jump–diffusions for currency option pricing. Insur. Math. Econ. 2010, 46, 461–469.

17. Artís, M.; Ayuso, M.; Guillén, M. Detection of automobile insurance fraud with discrete choice models and misclassified claims. J. Risk Insur. 2002, 69, 325–340.

18. Wilson, J.H. An analytical approach to detecting insurance fraud using logistic regression. J. Financ. Account. 2009, 1, 1.

19. Falk, M.; Hüsler, J.; Reiss, R.D. Laws of Small Numbers: Extremes and Rare Events; Springer: Berlin/Heidelberg, Germany, 2010.

20. L’Ecuyer, P.; Demers, V.; Tuffin, B. Rare events, splitting, and quasi-Monte Carlo. ACM Trans. Model. Comput. Simul. 2007, 17.

21. Buch-Larsen, T.; Nielsen, J.P.; Guillén, M.; Bolancé, C. Kernel density estimation for heavy-tailed distributions using the Champernowne transformation. Statistics 2005, 39, 503–516.

22. Bolancé, C.; Guillén, M.; Nielsen, J.P. Transformation Kernel Estimation of Insurance Claim Cost Distributions. In Mathematical and Statistical Methods for Actuarial Sciences and Finance; Corazza, M., Pizzi, C., Eds.; Springer: Milano, Italy, 2010.

23. Rached, I.; Larsson, E. Tail Distribution and Extreme Quantile Estimation Using Non-Parametric Approaches. In High-Performance Modelling and Simulation for Big Data Applications; Kołodziej, J., González-Vélez, H., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11400.

24. Jha, S.; Guillen, M.; Westland, J.C. Employing transaction aggregation strategy to detect credit card fraud. Expert Syst. Appl. 2012, 39, 12650–12657.

25. Jin, Y.; Rejesus, R.M.; Little, B.B. Binary choice models for rare events data: A crop insurance fraud application. Appl. Econ. 2005, 37, 841–848.

26. Pesantez-Narvaez, J.; Guillen, M. Weighted Logistic Regression to Improve Predictive Performance in Insurance. Adv. Intell. Syst. Comput. 2020, 894, 22–34.

27. Calabrese, R.; Osmetti, S.A. Generalized extreme value regression for binary rare events data: An application to credit defaults. J. Appl. Stat. 2013, 40, 1172–1188.

28. Loyola-González, O.; Martínez-Trinidad, J.F.; Carrasco-Ochoa, J.A.; García-Borroto, M. Study of the impact of resampling methods for contrast pattern based classifiers in imbalanced databases. Neurocomputing 2016, 175, 935–947.

29. Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

30. Beyan, C.; Fisher, R. Classifying imbalanced data sets using similarity based hierarchical decomposition. Pattern Recognit. 2015, 48, 1653–1672.

31. Pesantez-Narvaez, J.; Guillen, M.; Alcañiz, M. Predicting motor insurance claims using telematics data—XGBoost versus Logistic regression. Risks 2019, 7, 70.

32. Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608.

33. Friedman, J.; Hastie, T.; Tibshirani, R. Additive Logistic regression: A statistical view of boosting (with discussion and a rejoinder by the authors). Ann. Stat. 2000, 28, 337–407.

34. Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. ICML 1996, 96, 148–156.

35. Freund, Y.; Schapire, R.E. A decision-theoretic generalization of online learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

36. Domingo, C.; Watanabe, O. MadaBoost: A modification of AdaBoost. In Proceedings of the Thirteenth Annual Conference on Computational Learning Theory (COLT), Graz, Austria, 9–12 July 2000; pp. 180–189.

37. Freund, Y. An adaptive version of the boost by majority algorithm. Mach. Learn. 2001, 43, 293–318.

38. Lee, S.C.; Lin, S. Delta boosting machine with application to general insurance. N. Am. Actuar. J. 2018, 22, 405–425.

39. Joshi, M.V.; Kumar, V.; Agarwal, R.C. Evaluating boosting algorithms to classify rare classes: Comparison and improvements. In Proceedings of the 2001 IEEE International Conference on Data Mining, San Jose, CA, USA, 29 November–2 December 2001; IEEE: San Jose, CA, USA, 2001; pp. 257–264.

40. Viola, P.; Jones, M. Fast and robust classification using asymmetric Adaboost and a detector cascade. Adv. Neural Inf. Process. Syst. 2001, 14, 1311–1318.

41. Chawla, N.V.; Lazarevic, A.; Hall, L.O.; Bowyer, K.W. SMOTEBoost: Improving prediction of the minority class in boosting. In Proceedings of the European Conference on Principles of Data Mining and Knowledge Discovery, Dubrovnik, Croatia, 22–26 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 107–119.

42. Guo, H.; Viktor, H.L. Learning from imbalanced data sets with boosting and data generation: The databoost-im approach. ACM Sigkdd Explor. Newsl. 2004, 6, 30–39.

43. Seiffert, C.; Khoshgoftaar, T.M.; Van Hulse, J.; Napolitano, A. RUSBoost: A hybrid approach to alleviating class imbalance. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2009, 40, 185–197.

44. Hu, S.; Liang, Y.; Ma, L.; He, Y. MSMOTE: Improving classification performance when training data is imbalanced. In Proceedings of the 2009 Second International Workshop on Computer Science and Engineering, Qingdao, China, 28–30 October 2009; pp. 13–17.

45. Fan, W.; Stolfo, S.J.; Zhang, J.; Chan, P.K. AdaCost: Misclassification cost-sensitive boosting. In Proceedings of the 16th International Conference on Machine Learning, Bled, Slovenia, 27–30 June 1999; Volume 99, pp. 97–105.

46. Ting, K.M. A comparative study of cost-sensitive boosting algorithms. In Proceedings of the 17th International Conference on Machine Learning, Stanford, CA, USA, 29 June–2 July 2000.

47. Wang, S.; Chen, H.; Yao, X. Negative correlation learning for classification ensembles. In Proceedings of the 2010 International Joint Conference on Neural Networks (IJCNN), Barcelona, Spain, 18–23 July 2010.

48. Sun, Y.; Kamel, M.S.; Wang, Y. Boosting for learning multiple classes with imbalanced class distribution. In Proceedings of the Sixth IEEE International Conference on Data Mining, Hong Kong, China, 18–22 December 2006; pp. 592–602.

49. Sun, Y.; Kamel, M.S.; Wong, A.K.; Wang, Y. Cost-sensitive boosting for classification of imbalanced data. Pattern Recognit. 2007, 40, 3358–3378.

50. Masnadi-Shirazi, H.; Vasconcelos, N. Cost-sensitive boosting. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 294–309.

51. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

52. Breiman, L.; Friedman, J.; Stone, C.; Olshen, R. Classification and Regression Trees; The Wadsworth and Brooks-Cole Statistics-Probability Series; Taylor and Francis: Wadsworth, OH, USA, 1984.

53. James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning; Springer: New York, NY, USA, 2013.

54. McCullagh, P.; Nelder, J.A. Generalized Linear Models, 2nd ed.; Chapman and Hall: New York, NY, USA, 1989.

55. Mease, D.; Wyner, A.J.; Buja, A. Boosted classification trees and class probability/quantile estimation. J. Mach. Learn. Res. 2007, 8, 409–439.

56. Pesantez-Narvaez, J.; Guillen, M.; Alcañiz, M. A Synthetic Penalized Logitboost to Model Mortgage Lending with Imbalanced Data. Comput. Econ. 2020, 57, 1–29.

57. Liska, G.R.; Cirillo, M.Â.; de Menezes, F.S.; Bueno Filho, J.S.D.S. Machine learning based on extended generalized linear model applied in mixture experiments. Commun. Stat. Simul. Comput. 2019, 1–15.

58. De Menezes, F.S.; Liska, G.R.; Cirillo, M.A.; Vivanco, M.J. Data classification with binary response through the Boosting algorithm and Logistic regression. Expert Syst. Appl. 2017, 69, 62–73.

59. Charpentier, A. Computational Actuarial Science with R; CRC Press: Boca Raton, FL, USA, 2014.

| Training Data Set (RMSE Y = 1) | Lower Extreme 0.01 | Lower Extreme 0.05 | Lower Extreme 0.10 | Lower Extreme 0.20 | Lower Extreme 0.30 | Lower Extreme 0.40 | Upper Extreme 0.01 | Upper Extreme 0.05 | Upper Extreme 0.10 | Upper Extreme 0.20 | Upper Extreme 0.30 | Upper Extreme 0.40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RiskLogitboost regression | 0.2454 | 0.1825 | 0.1496 | 0.1132 | 0.0927 | 0.0803 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| Ridge Logistic | 0.9629 | 0.9629 | 0.9629 | 0.9629 | 0.9628 | 0.9628 | 0.9627 | 0.9627 | 0.9627 | 0.9627 | 0.9627 | 0.9627 |
| Lasso Logistic | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 |
| Boosting Tree | 0.9787 | 0.9747 | 0.9727 | 0.9700 | 0.9679 | 0.9665 | 0.9162 | 0.9293 | 0.9417 | 0.9495 | 0.9522 | 0.9539 |
| Logitboost | 0.9829 | 0.9799 | 0.9781 | 0.9736 | 0.9707 | 0.9688 | 0.9416 | 0.9479 | 0.9505 | 0.9530 | 0.9545 | 0.9557 |
| SMOTEBoost | 0.6963 | 0.6901 | 0.6852 | 0.6800 | 0.6761 | 0.6725 | 0.6046 | 0.6090 | 0.6117 | 0.6178 | 0.6222 | 0.6264 |
| RUSBoost | 0.5811 | 0.5742 | 0.562 | 0.5517 | 0.5447 | 0.5391 | 0.4466 | 0.4727 | 0.4853 | 0.4931 | 0.4970 | 0.5001 |
| WLR | 0.9992 | 0.9982 | 0.9973 | 0.9961 | 0.9950 | 0.9939 | 0.4788 | 0.7092 | 0.7961 | 0.8676 | 0.8996 | 0.9183 |
| PLR (PSWa) | 0.9992 | 0.9982 | 0.9973 | 0.9961 | 0.9950 | 0.9939 | 0.4788 | 0.7092 | 0.7961 | 0.8676 | 0.8996 | 0.9183 |
| PLR (PSWb) | 0.9820 | 0.9790 | 0.9771 | 0.9725 | 0.9697 | 0.9678 | 0.9407 | 0.9470 | 0.9496 | 0.9520 | 0.9536 | 0.9547 |
| SyntheticPL | 0.9830 | 0.9803 | 0.9783 | 0.9736 | 0.9708 | 0.9689 | 0.9380 | 0.9467 | 0.9497 | 0.9523 | 0.9540 | 0.9552 |
| WeiLogRFL | 0.3696 | 0.2860 | 0.2386 | 0.1826 | 0.1498 | 0.1297 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| Testing Data Set (RMSE Y = 1) | Lower Extreme 0.01 | Lower Extreme 0.05 | Lower Extreme 0.10 | Lower Extreme 0.20 | Lower Extreme 0.30 | Lower Extreme 0.40 | Upper Extreme 0.01 | Upper Extreme 0.05 | Upper Extreme 0.10 | Upper Extreme 0.20 | Upper Extreme 0.30 | Upper Extreme 0.40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RiskLogitboost regression | 0.4690 | 0.3725 | 0.3133 | 0.2421 | 0.1991 | 0.1724 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| Ridge Logistic | 0.9629 | 0.9629 | 0.9629 | 0.9629 | 0.9628 | 0.9628 | 0.9627 | 0.9627 | 0.9627 | 0.9627 | 0.9627 | 0.9627 |
| Lasso Logistic | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 | 0.9628 |
| Boosting Tree | 0.9788 | 0.9750 | 0.9731 | 0.9705 | 0.9683 | 0.9669 | 0.9156 | 0.9297 | 0.9424 | 0.9498 | 0.9525 | 0.9542 |
| Logitboost | 0.8745 | 0.8723 | 0.8710 | 0.8688 | 0.8674 | 0.8665 | 0.8558 | 0.8577 | 0.8586 | 0.8595 | 0.8601 | 0.8606 |
| SMOTEBoost | 0.6959 | 0.6901 | 0.6854 | 0.6801 | 0.6762 | 0.6727 | 0.6042 | 0.6088 | 0.6116 | 0.6180 | 0.6226 | 0.6270 |
| RUSBoost | 0.5781 | 0.5600 | 0.5515 | 0.5425 | 0.5358 | 0.5312 | 0.4434 | 0.4539 | 0.4727 | 0.4858 | 0.4913 | 0.4948 |
| WLR | 0.9993 | 0.9982 | 0.9973 | 0.9961 | 0.9950 | 0.9938 | 0.4523 | 0.7057 | 0.7959 | 0.8664 | 0.8989 | 0.9178 |
| PLR (PSWa) | 0.9993 | 0.9982 | 0.9973 | 0.9961 | 0.9950 | 0.9938 | 0.4523 | 0.7057 | 0.7959 | 0.8664 | 0.8989 | 0.9178 |
| PLR (PSWb) | 0.9822 | 0.9792 | 0.9773 | 0.9729 | 0.9700 | 0.9681 | 0.9409 | 0.9471 | 0.9497 | 0.9522 | 0.9537 | 0.9549 |
| SyntheticPL | 0.8745 | 0.8721 | 0.8708 | 0.8686 | 0.8673 | 0.8664 | 0.8559 | 0.8577 | 0.8587 | 0.8596 | 0.8602 | 0.8607 |
| WeiLogRFL | 0.4690 | 0.3725 | 0.3133 | 0.2421 | 0.1991 | 0.1724 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |

| Training Data Set (RMSE Y = 0) | Lower Extreme 0.01 | Lower Extreme 0.05 | Lower Extreme 0.10 | Lower Extreme 0.20 | Lower Extreme 0.30 | Lower Extreme 0.40 | Upper Extreme 0.01 | Upper Extreme 0.05 | Upper Extreme 0.10 | Upper Extreme 0.20 | Upper Extreme 0.30 | Upper Extreme 0.40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RiskLogitboost regression | 0.7508 | 0.8219 | 0.8605 | 0.9062 | 0.9352 | 0.9514 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| Ridge Logistic | 0.0371 | 0.0371 | 0.0371 | 0.0371 | 0.0371 | 0.0372 | 0.0373 | 0.0373 | 0.0373 | 0.0373 | 0.0373 | 0.0373 |
| Lasso Logistic | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 |
| Boosting Tree | 0.0197 | 0.0221 | 0.0247 | 0.0273 | 0.0294 | 0.0313 | 0.0773 | 0.0583 | 0.0513 | 0.0470 | 0.0451 | 0.0436 |
| Logitboost | 0.0157 | 0.0188 | 0.0202 | 0.0227 | 0.0264 | 0.0289 | 0.0574 | 0.0510 | 0.0485 | 0.0460 | 0.0445 | 0.0434 |
| SMOTEBoost | 0.2978 | 0.3070 | 0.3116 | 0.3171 | 0.3206 | 0.3240 | 0.3958 | 0.3909 | 0.3865 | 0.3797 | 0.3752 | 0.3704 |
| RUSBoost | 0.4219 | 0.4403 | 0.4488 | 0.4579 | 0.4646 | 0.4692 | 0.5566 | 0.5463 | 0.5281 | 0.5149 | 0.5094 | 0.5058 |
| WLR | 0.0008 | 0.0020 | 0.0029 | 0.0043 | 0.0055 | 0.0067 | 0.5230 | 0.3106 | 0.2356 | 0.1733 | 0.1433 | 0.1250 |
| PLR (PSWa) | 0.0008 | 0.0020 | 0.0029 | 0.0043 | 0.0055 | 0.0067 | 0.5230 | 0.3106 | 0.2356 | 0.1733 | 0.1433 | 0.1250 |
| PLR (PSWb) | 0.0166 | 0.0198 | 0.0212 | 0.0237 | 0.0274 | 0.0299 | 0.0582 | 0.0519 | 0.0495 | 0.0470 | 0.0455 | 0.0444 |
| SyntheticPL | 0.0157 | 0.0183 | 0.0198 | 0.0225 | 0.0262 | 0.0287 | 0.0601 | 0.0521 | 0.0493 | 0.0466 | 0.0450 | 0.0439 |
| WeiLogRFL | 0.6258 | 0.7202 | 0.7758 | 0.8467 | 0.8945 | 0.9212 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| Testing Data Set (RMSE Y = 0) | Lower Extreme 0.01 | Lower Extreme 0.05 | Lower Extreme 0.10 | Lower Extreme 0.20 | Lower Extreme 0.30 | Lower Extreme 0.40 | Upper Extreme 0.01 | Upper Extreme 0.05 | Upper Extreme 0.10 | Upper Extreme 0.20 | Upper Extreme 0.30 | Upper Extreme 0.40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RiskLogitboost regression | 0.5446 | 0.6488 | 0.7134 | 0.7988 | 0.8598 | 0.8957 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
| Ridge Logistic | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 | 0.0374 |
| Lasso Logistic | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 | 0.0372 |
| Boosting Tree | 0.0197 | 0.0220 | 0.0246 | 0.0272 | 0.0293 | 0.0312 | 0.0774 | 0.0583 | 0.0512 | 0.0470 | 0.0451 | 0.0436 |
| Logitboost | 0.1247 | 0.1269 | 0.1279 | 0.1295 | 0.1311 | 0.1322 | 0.1440 | 0.1420 | 0.1411 | 0.1401 | 0.1395 | 0.1390 |
| SMOTEBoost | 0.2976 | 0.3069 | 0.3116 | 0.3171 | 0.3206 | 0.3240 | 0.3959 | 0.3909 | 0.3865 | 0.379 | 0.375 | 0.3705 |
| RUSBoost | 0.4189 | 0.4259 | 0.4383 | 0.4487 | 0.4558 | 0.4614 | 0.5534 | 0.5280 | 0.5154 | 0.5074 | 0.5034 | 0.5003 |
| WLR | 0.0009 | 0.0020 | 0.0029 | 0.0043 | 0.0055 | 0.0067 | 0.5294 | 0.3119 | 0.2364 | 0.1738 | 0.1438 | 0.1254 |
| PLR (PSWa) | 0.0008 | 0.0020 | 0.0029 | 0.0043 | 0.0055 | 0.0067 | 0.5230 | 0.3106 | 0.2356 | 0.1733 | 0.1433 | 0.1250 |
| PLR (PSWb) | 0.0167 | 0.0198 | 0.0212 | 0.0236 | 0.0273 | 0.0298 | 0.0582 | 0.0519 | 0.0495 | 0.0470 | 0.0455 | 0.0444 |
| SyntheticPL | 0.1247 | 0.1271 | 0.1283 | 0.1298 | 0.1313 | 0.1323 | 0.1438 | 0.1418 | 0.1409 | 0.1400 | 0.1394 | 0.1389 |
| WeiLogRFL | 0.5446 | 0.6488 | 0.7134 | 0.7988 | 0.8598 | 0.8957 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
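The RMSE tables report errors separately for observations with Y = 1 and Y = 0. A minimal sketch of such a class-conditional RMSE is given below; it covers only the split by class, not the selection of the lower and upper extremes, and the function name and toy data are hypothetical. The constant prediction of 0.0371 is chosen only to mirror the magnitude of the Ridge Logistic rows.

```python
import math


def class_rmse(y_true, y_prob, label):
    """RMSE between the observed response and the predicted probability,
    computed only over observations whose true response equals `label`."""
    sq_err = [(yt - yp) ** 2 for yt, yp in zip(y_true, y_prob) if yt == label]
    return math.sqrt(sum(sq_err) / len(sq_err))


# Toy data (hypothetical): a model that predicts the same small
# probability for every observation.
y_true = [1, 0, 0, 1, 0]
y_prob = [0.0371] * len(y_true)

rmse_events = class_rmse(y_true, y_prob, 1)       # error on Y = 1
rmse_non_events = class_rmse(y_true, y_prob, 0)   # error on Y = 0
```

For a near-constant prediction p, the error is close to 1 - p on the events and close to p on the non-events, so the two values sum to roughly one. The Ridge and Lasso rows show this pattern across the Y = 1 and Y = 0 tables.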

| Variables | Categories | RiskLogitboost Regression (Coefficient) | RiskLogitboost Regression (Standard Error) | RiskLogitboost Regression (Confidence Intervals) |
|---|---|---|---|---|
| * Intercept | | 20.874 | 7.4130 | (6.3445; 35.4035) |
| Power | e | −0.6527 | 3.5641 | (−7.6383; 6.3329) |
| | f | −1.3379 | 3.4769 | (−8.1526; 5.4768) |
| | g | −0.8003 | 3.4506 | (−7.5635; 5.9629) |
| | h | 4.9061 | 4.9344 | (−4.7653; 14.578) |
| | i | 7.8770 | 5.4611 | (−2.8268; 18.5808) |
| | j | 8.0675 | 5.5682 | (−2.8462; 18.9812) |
| | * k | 18.1880 | 7.1178 | (4.2371; 32.1389) |
| | * l | 45.3320 | 1.0540 | (43.2662; 47.3978) |
| | * m | 99.6840 | 1.5136 | (96.7173; 102.6507) |
| | * n | 144.1900 | 1.7590 | (140.7424; 147.6376) |
| | * o | 145.8000 | 17.6033 | (111.2975; 180.3025) |
| Brand | Japanese (except Nissan) or Korean | −7.6774 | 5.7732 | (−18.9929; 3.6381) |
| | Mercedes, Chrysler or BMW | −2.0130 | 6.7667 | (−15.2757; 11.2497) |
| | Opel, General Motors or Ford | −6.5298 | 5.7170 | (−17.7351; 4.6755) |
| | other | 8.2048 | 7.9329 | (−7.3437; 23.7533) |
| | * Renault, Nissan or Citroen | −10.3760 | 4.9954 | (−20.1669; −0.5850) |
| | Volkswagen, Audi, Skoda or Seat | −5.5055 | 5.8621 | (−16.9952; 5.9842) |
| Region | Basse-Normandie | 10.279 | 7.1850 | (−3.8036; 24.3616) |
| | Bretagne | −3.4953 | 4.9434 | (−13.1844; 6.1938) |
| | Centre | −6.5749 | 4.2924 | (−14.9880; 1.8382) |
| | * Haute-Normandie | 27.6060 | 9.3055 | (9.3672; 45.8448) |
| | Ile-de-France | −4.1033 | 5.12264 | (−14.1437; 5.9371) |
| | * Limousin | 34.5520 | 10.0028 | (14.9465; 54.1575) |
| | Nord-Pas-de-Calais | 0.0897 | 5.7443 | (−11.1691; 11.3485) |
| | Pays-de-la-Loire | −2.7310 | 5.0910 | (−12.7094; 7.2474) |
| | Poitou-Charentes | 2.4523 | 5.9926 | (−9.2932; 14.1978) |
| Density | | 0.0003 | 0.00025 | (−0.0003; 0.0009) |
| Gas Regular | | 0.0187 | 2.1895 | (−4.2727; 4.3101) |
| Car Age | | 0.1053 | 0.1969 | (−0.2806; 0.4912) |
| Driver Age | | 0.0217 | 0.0712 | (−0.1179; 0.1613) |
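The confidence intervals in the table above are numerically consistent with a symmetric interval of the form coefficient ± 1.96 × standard error. The sketch below reproduces three rows under that assumption; the function name is hypothetical, and the 1.96 multiplier is inferred from the reported numbers rather than stated in the table.

```python
def wald_interval(coef, se, z=1.96):
    """Symmetric interval coef +/- z * se, rounded to four decimals."""
    half_width = z * se
    return round(coef - half_width, 4), round(coef + half_width, 4)


# (coefficient, standard error) pairs copied from the table above.
rows = {
    "Intercept": (20.874, 7.4130),
    "Power l": (45.3320, 1.0540),
    "Driver Age": (0.0217, 0.0712),
}

intervals = {name: wald_interval(c, s) for name, (c, s) in rows.items()}
```

An interval that excludes zero, such as the one for Power l, corresponds to a row marked with an asterisk in these three examples.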

| Order | RiskLogitboost | Boosting Tree | Ridge Logistic | Logitboost |
|---|---|---|---|---|
| First | Power o | Driver Age | Brand Japanese (except Nissan) or Korean | Region Limousin |
| Second | Power n | Brand Japanese (except Nissan) or Korean | Region Haute-Normandie | Power m |
| Third | Power m | Car Age | Brand Opel, General Motors or Ford | Power l |
| Fourth | Power l | Density | Brand Volkswagen, Audi, Skoda or Seat | Power n |
| Fifth | Region Limousin | Brand Opel, General Motors or Ford | Region Nord-Pas-de-Calais | Region Haute-Normandie |
| Sixth | Region Haute-Normandie | Region Haute-Normandie | Brand Mercedes, Chrysler or BMW | Power k |
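The RiskLogitboost column of the ranking above coincides with ordering the non-intercept coefficients of the coefficient table by absolute value. The sketch below checks this on a subset of the reported coefficients. That the ranking was actually produced this way is an assumption, and the dictionary holds only a selection of rows copied from the coefficient table.

```python
# Selected coefficients copied from the RiskLogitboost coefficient table
# (intercept deliberately excluded).
coefficients = {
    "Power k": 18.1880,
    "Power l": 45.3320,
    "Power m": 99.6840,
    "Power n": 144.1900,
    "Power o": 145.8000,
    "Brand Renault, Nissan or Citroen": -10.3760,
    "Region Basse-Normandie": 10.279,
    "Region Haute-Normandie": 27.6060,
    "Region Limousin": 34.5520,
    "Driver Age": 0.0217,
}

# Rank covariates by the magnitude of their coefficient, largest first.
ranking = sorted(coefficients, key=lambda name: abs(coefficients[name]),
                 reverse=True)
top_six = ranking[:6]
```

Because the covariates are on different scales (category indicators versus continuous variables such as Driver Age), a ranking by raw coefficient magnitude favors the categorical terms.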

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pesantez-Narvaez, J.; Guillen, M.; Alcañiz, M. RiskLogitboost Regression for Rare Events in Binary Response: An Econometric Approach. Mathematics 2021, 9, 579. https://doi.org/10.3390/math9050579
