An Improved YOLOv5 Crack Detection Method Combined with a Bottleneck Transformer

Abstract

1. Introduction

- We modified YOLOv5 in combination with Bottleneck Transformer and proposed an end-to-end pavement crack detection network for efficient detection of crack regions.

- The C2f module proposed in the state-of-the-art object detection model YOLOv8 is introduced to optimize the network. In addition, we compared how introducing the C2f module at different locations in the model affects the performance of the network.

- We achieved competitive results on the evaluation dataset with fewer parameters and fewer giga floating-point operations (GFLOPs).

2. Related Work

2.1. Deep Convolutional Neural Network Methods

2.2. CNN and Transformer Combined Methods

3. Method

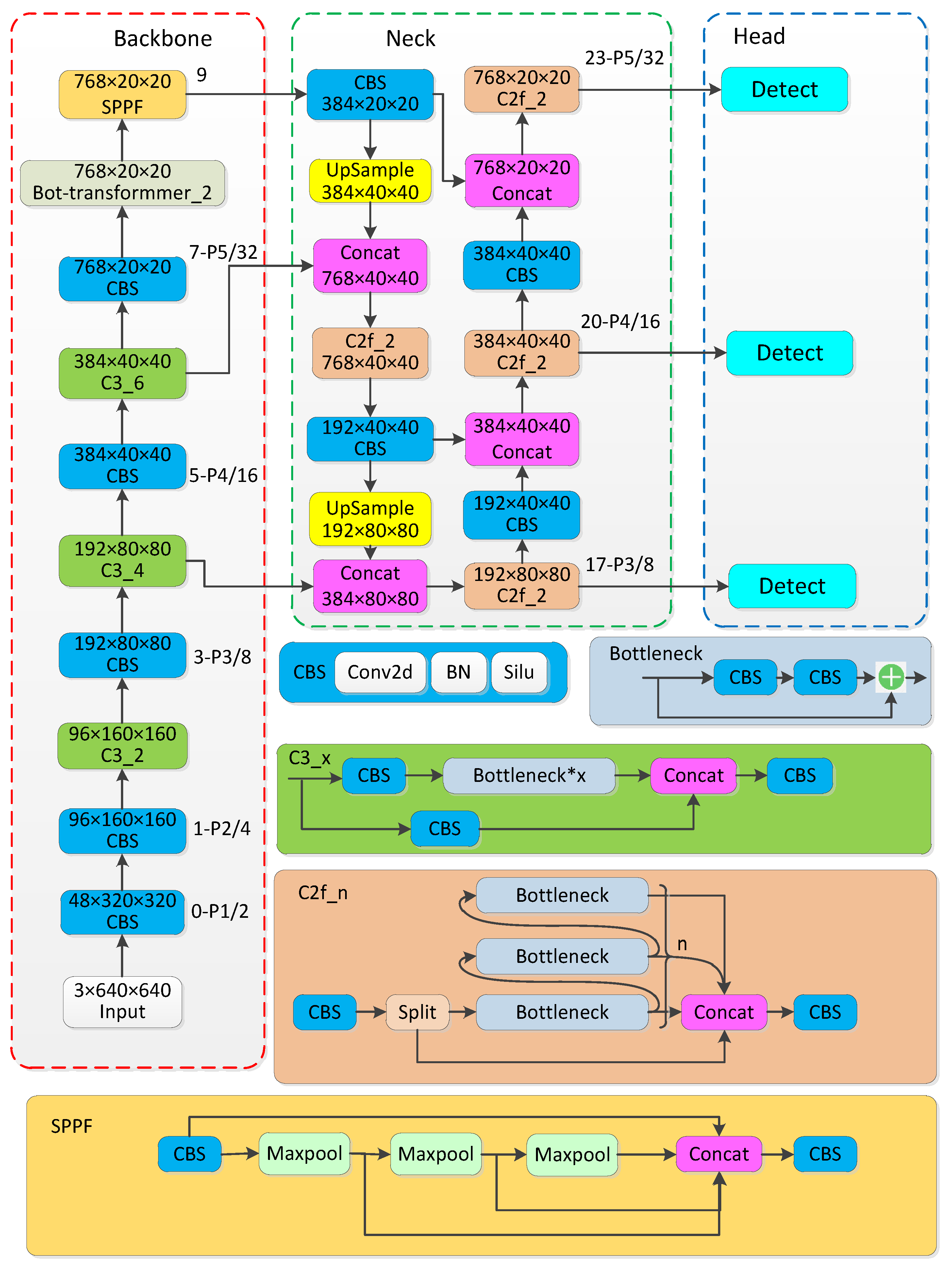

3.1. Network Architecture

- The last C3 module in the original YOLOv5 backbone network, that is, the C3 module before the SPPF module, is replaced by the Bot-Transformer module.

- All C3 modules in the neck network are replaced by C2f modules.

- Compared with C3, C2f uses one fewer standard convolutional module (CBS), which makes the network more lightweight.

- In addition to the serial stacking of Bottleneck modules, as in C3, C2f concatenates the outputs of the Bottleneck modules in parallel, which helps to obtain richer gradient flow information.
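The structural difference described in the list above can be made concrete with a small bookkeeping sketch. It assumes the publicly available Ultralytics layouts for C3 (three CBS modules around a serial Bottleneck stack) and C2f (two CBS modules, with every Bottleneck output kept for the final concatenation), and a hidden width of half the output channels. The function names and the example sizes are illustrative and are not taken from the paper.

```python
# Bookkeeping sketch (no deep-learning framework needed): count the CBS
# (Conv + BatchNorm + SiLU) modules and the channel width of the final
# concatenation in C3 and C2f. The layouts are assumed from the public
# Ultralytics implementations; the sizes used below are illustrative only.

def c3_summary(c_out: int, n: int) -> dict:
    """C3: two parallel 1x1 CBS branches; one branch runs through n
    Bottlenecks in series; both are concatenated and fused by a third CBS."""
    hidden = c_out // 2
    return {
        "cbs_modules": 3,               # cv1, cv2, cv3
        "bottlenecks": n,
        "concat_channels": 2 * hidden,  # serial-branch output + bypass branch
    }


def c2f_summary(c_out: int, n: int) -> dict:
    """C2f: one 1x1 CBS whose output is split in two; each Bottleneck feeds
    the next, and every intermediate output is kept for the concatenation,
    which a second CBS then fuses."""
    hidden = c_out // 2
    return {
        "cbs_modules": 2,                     # cv1, cv2
        "bottlenecks": n,
        "concat_channels": (2 + n) * hidden,  # 2 split halves + n Bottleneck outputs
    }


if __name__ == "__main__":
    for name, summarize in (("C3", c3_summary), ("C2f", c2f_summary)):
        print(name, summarize(c_out=256, n=3))
```

Under these assumptions, C2f has one CBS module fewer than C3 while its concatenation grows with the number of Bottlenecks, which is the parallel branch structure the list refers to. The sketch does not estimate parameter counts, since those also depend on the kernel sizes inside each Bottleneck.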

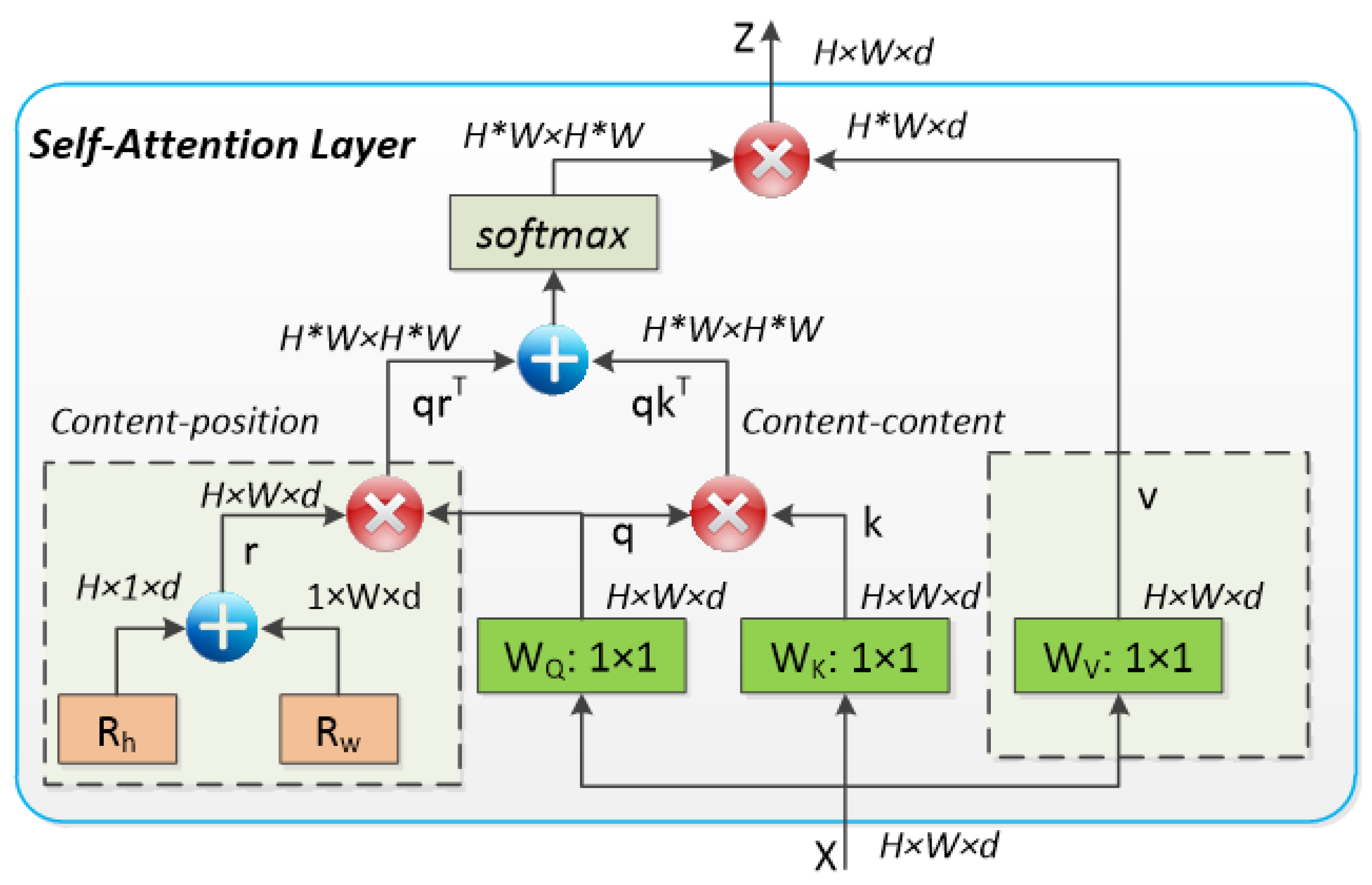

3.2. Transformer Structure

3.2.1. Bot-Transformer Module

3.2.2. MHSA Block

4. Experiment

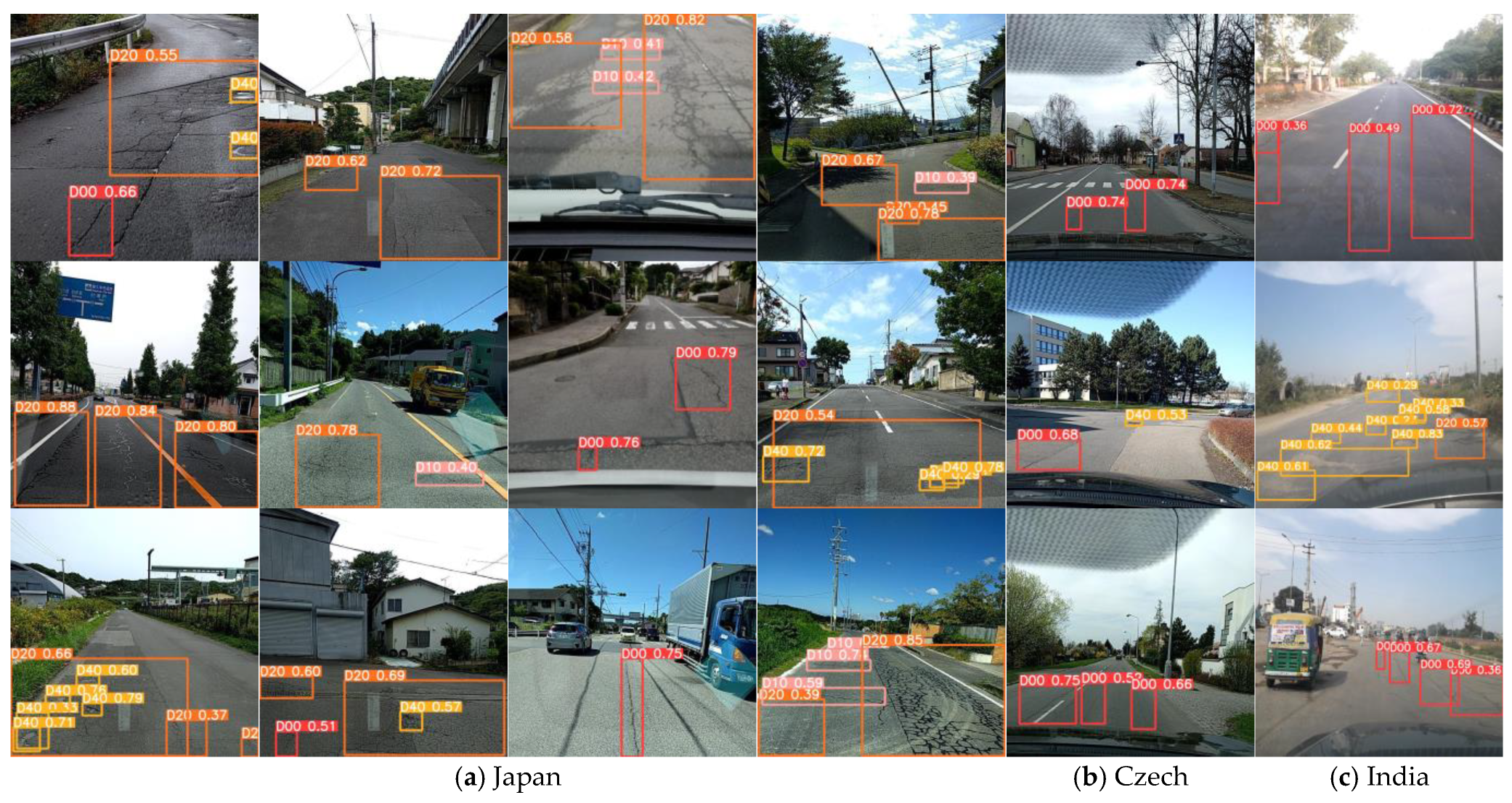

4.1. Dataset

4.2. Evaluation Metrics

4.3. Experimental Environment and Parameter Settings

4.4. Results

4.5. Ablation Study

4.5.1. Effect of C2f Module Location on Network Performance

4.5.2. Effect of the Number of MHSA Heads on Network Performance

4.5.3. Selection of Training Hyperparameters

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Country | D00 | D10 | D20 | D40 |
|---|---|---|---|---|
| Czech | 764 | 300 | 129 | 154 |
| India | 1109 | 60 | 1758 | 1530 |
| Japan | 2297 | 2240 | 4714 | 1390 |
| Total | 4670 | 2600 | 6601 | 3074 |

| Methods | Precision | Recall | F1 | mAP0.5 | mAP0.5:0.95 | Params (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|---|
| Faster-RCNN | 0.529 | 0.607 | 0.565 | 0.513 | 0.220 | 60.1 | 108.6 | 29 |
| Cascade-RCNN | 0.494 | 0.656 | 0.564 | 0.548 | 0.250 | 87.9 | 110.6 | 24 |
| YOLOv3 | 0.608 | 0.612 | 0.610 | 0.627 | 0.308 | 58.7 | 154.6 | 48 |
| YOLOv4-CSP | 0.606 | 0.595 | 0.600 | 0.631 | 0.317 | 50.1 | 119.1 | 49 |
| YOLOv5 | 0.620 | 0.606 | 0.613 | 0.633 | 0.321 | 44.0 | 107.7 | 59 |
| YOLOv7 | 0.629 | 0.601 | 0.615 | 0.640 | 0.338 | 34.8 | 103.2 | 85 |
| CenterNet | 0.500 | 0.626 | 0.556 | 0.510 | 0.215 | 14.4 | 19.3 | 70 |
| TOOD | 0.615 | 0.578 | 0.596 | 0.601 | 0.275 | 53.2 | 71.8 | 17 |
| Ours | 0.654 | 0.591 | 0.621 | 0.646 | 0.331 | 22.9 | 55.9 | 85 |
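The F1 column in the results table above can be cross-checked against the Precision and Recall columns, assuming F1 is the usual harmonic mean, F1 = 2PR / (P + R); the table itself does not state the formula. The sketch below recomputes it for a few rows and also derives the relative savings in parameters and GFLOPs of the proposed model against the YOLOv5 row. The helper names are illustrative.

```python
# Values copied from the results table: (precision, recall, reported F1).
ROWS = {
    "Faster-RCNN": (0.529, 0.607, 0.565),
    "YOLOv5": (0.620, 0.606, 0.613),
    "YOLOv7": (0.629, 0.601, 0.615),
    "Ours": (0.654, 0.591, 0.621),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed definition of F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def relative_reduction(baseline: float, ours: float) -> float:
    """Fraction by which `ours` is smaller than `baseline`."""
    return 1 - ours / baseline


if __name__ == "__main__":
    for name, (p, r, reported) in ROWS.items():
        print(f"{name}: computed F1 = {f1_score(p, r):.3f}, reported = {reported:.3f}")
    # Params (M) and GFLOPs taken from the YOLOv5 and Ours rows of the table.
    print(f"Params vs. YOLOv5: {relative_reduction(44.0, 22.9):.1%} fewer")
    print(f"GFLOPs vs. YOLOv5: {relative_reduction(107.7, 55.9):.1%} fewer")
```

Under that assumption, the recomputed values for these rows agree with the reported column to three decimals, and the proposed model uses roughly 48% fewer parameters and GFLOPs than the YOLOv5 row.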

| Methods | Precision | Recall | F1 | mAP0.5 | mAP0.5:0.95 | Params (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|---|
| C2f-Backbone | 0.604 | 0.602 | 0.603 | 0.628 | 0.320 | 21 | 60.6 | 80 |
| C2f-head (ours) | 0.654 | 0.591 | 0.621 | 0.646 | 0.331 | 22.9 | 55.9 | 85 |
| C2f-all | 0.637 | 0.575 | 0.604 | 0.630 | 0.319 | 25.4 | 70.1 | 74 |

| Number of Heads | Precision | Recall | F1 | mAP0.5 | mAP0.5:0.95 |
|---|---|---|---|---|---|
| Head = 4 (ours) | 0.654 | 0.591 | 0.621 | 0.646 | 0.331 |
| Head = 8 | 0.658 | 0.576 | 0.614 | 0.644 | 0.327 |
| Head = 12 | 0.615 | 0.621 | 0.618 | 0.646 | 0.330 |
| Head = 16 | 0.652 | 0.588 | 0.618 | 0.642 | 0.326 |

| Hyperparameter Group | Precision | Recall | F1 | mAP0.5 | mAP0.5:0.95 | Training Time |
|---|---|---|---|---|---|---|
| LAHG | 0.587 | 0.623 | 0.605 | 0.628 | 0.311 | 3 h 35 min |
| MAHG | 0.654 | 0.591 | 0.621 | 0.646 | 0.331 | 4 h 3 min |
| Evolve based on LAHG | 0.641 | 0.593 | 0.616 | 0.637 | 0.321 | 89 h 35 min |
| Evolve based on MAHG | 0.618 | 0.608 | 0.613 | 0.634 | 0.334 | 101 h 15 min |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, G.; Zhou, X. An Improved YOLOv5 Crack Detection Method Combined with a Bottleneck Transformer. Mathematics 2023, 11, 2377. https://doi.org/10.3390/math11102377
