Enhancing Teaching-Learning Effectiveness by Creating Online Interactive Instructional Modules for Fundamental Concepts of Physics and Mathematics

Abstract

1. Introduction

1.1. Prior Knowledge

1.2. Benefits of Online Supplementary Instruction

1.2.1. Individualized, Self-Paced Instruction

1.2.2. Presence in Online Instruction

1.2.3. Cognitive Apprenticeship

1.3. Integrating Self-Testing into Study Sessions

2. Methods

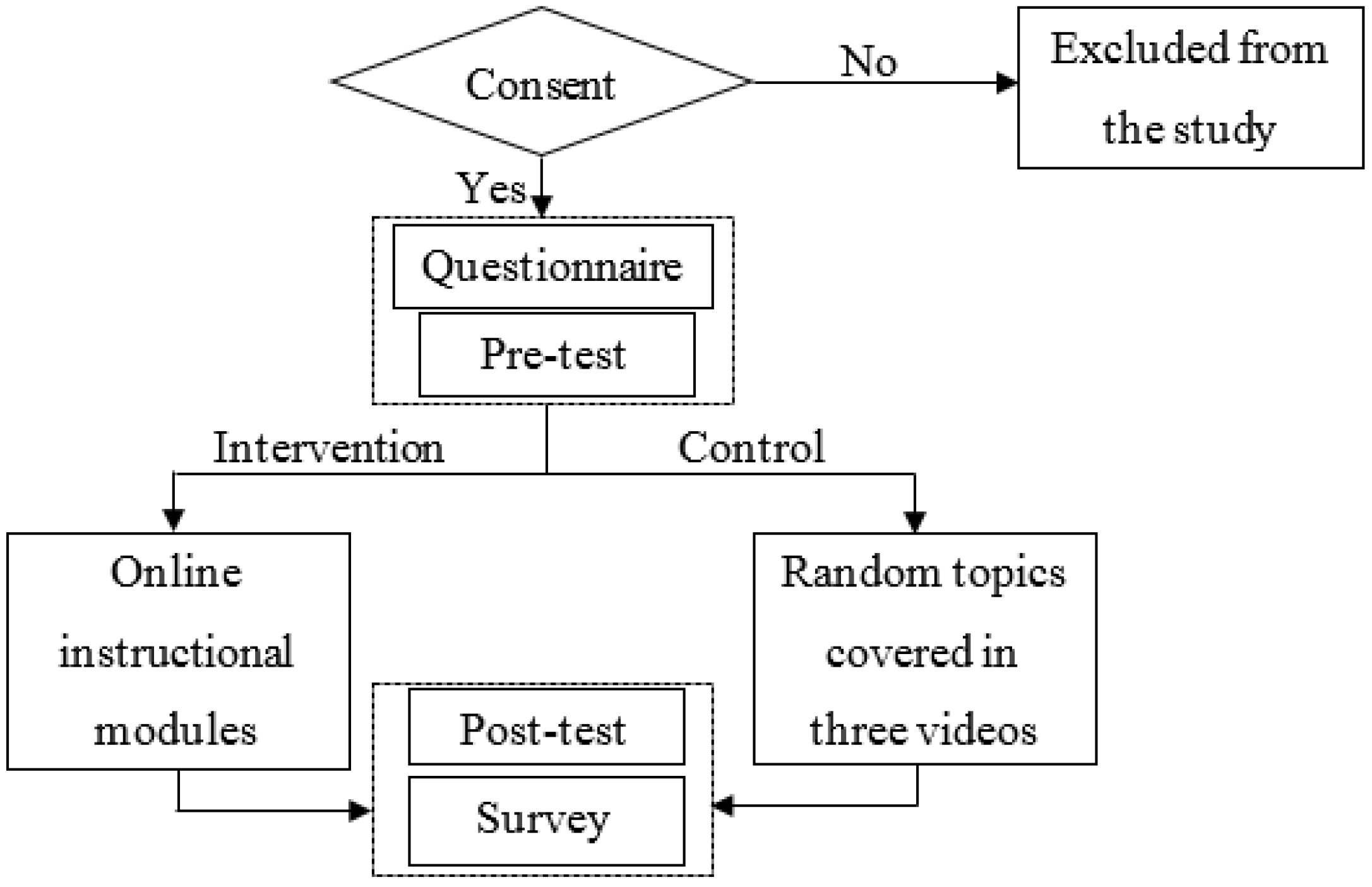

2.1. Procedure

- The consent form was distributed to all students in the course (in the classroom);

- The students who chose to participate in the study returned the completed consent form and were randomly assigned to either the intervention or control group;

- A questionnaire was provided to and filled out by the study participants (in the classroom);

- All participants took the pre-test (in the classroom);

- Links to all the videos and related assignments were provided to the participants (both intervention and control groups), who were required to complete all parts of these online instructional modules within a specified 10-day period. All quizzes and videos were provided on the institution’s Learning Management System (Blackboard) website (outside the classroom);

- All participants took the post-test (in the classroom);

- A survey form was provided to and completed by all participants.
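The second step of the procedure randomly assigns consenting students to the intervention or control group, but the randomization mechanism is not described. A minimal sketch, assuming a simple shuffle-and-split scheme with an optional seed for reproducibility; both the scheme and the `assign_groups` helper are hypothetical illustrations, not the authors' method:

```python
import random


def assign_groups(participant_ids, seed=None):
    """Randomly split consenting participants into two groups.

    Hypothetical helper: shuffle-and-split is an assumed scheme,
    not the procedure reported in the study.
    """
    rng = random.Random(seed)         # local RNG so a seed makes results reproducible
    shuffled = list(participant_ids)  # copy so the caller's list is left intact
    rng.shuffle(shuffled)
    half = (len(shuffled) + 1) // 2   # intervention gets the extra person if odd
    return {
        "intervention": shuffled[:half],
        "control": shuffled[half:],
    }


if __name__ == "__main__":
    groups = assign_groups([f"S{i:02d}" for i in range(1, 11)], seed=42)
    print(groups)
```

Shuffle-and-split keeps the two groups within one participant of each other in size; assigning each student by an independent coin flip is an equally valid alternative that allows unequal group sizes.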

2.2. Participants

2.3. Modules

2.4. Measures

2.4.1. Questionnaire: Demographic, Academic, and Social Background Data

2.4.2. Pre- and Post-Quiz

- x(t) = exp() is the displacement of a particle. Find its velocity. (Differentiation module)

- The acceleration of a car, which was initially at rest, is approximated as a(t) = ¾. What would be the velocity of the car at t = 2? (Integration module)
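Both quiz items rest on the basic kinematic relations between displacement x(t), velocity v(t), and acceleration a(t). The specific functions did not survive in this copy of the text, so the relations are written generically below; the only added assumption is the standard reading that "initially at rest" means v(0) = 0.

```latex
v(t) = \frac{dx}{dt},
\qquad
v(t) = v(0) + \int_{0}^{t} a(s)\,ds = \int_{0}^{t} a(s)\,ds \quad \bigl(v(0) = 0\bigr),
\qquad
v(2) = \int_{0}^{2} a(s)\,ds .
```

For an illustrative displacement x(t) = e^{kt} with constant k (not the quiz's actual function), the first relation gives v(t) = k e^{kt}.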

2.4.3. Pre- and Post-Test

2.4.4. Survey

2.5. Data Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Statistic | t-Test Results |
|---|---|
| t Stat | 0.87 |
| p (T ≤ t) | 0.38¹ |
| t Critical | 2.01 |
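Comparing two independent groups (for example, their ages or pre-test scores) calls for a two-sample t-test, but the variant behind the t Stat, p, and t Critical values is not stated. As an illustration only, the sketch below implements Welch's unequal-variance t-test (an assumption; a pooled-variance test is the common alternative) and obtains a two-tailed p-value by integrating the t density with Simpson's rule, so it needs only the Python standard library. The helper names are hypothetical.

```python
import math
from statistics import mean, variance


def t_pdf(x, df):
    """Density of Student's t distribution; df may be non-integer."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df):
    """P(|T| >= |t|), using Simpson's rule on the density over [0, |t|]."""
    b = abs(t)
    if b == 0:
        return 1.0
    n = 2 * max(1000, int(100 * b))  # even panel count, spacing about 0.005 or less
    h = b / n
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # P(0 <= T <= |t|)
    return min(1.0, max(0.0, 1 - 2 * central))


def welch_t_test(a, b):
    """Welch's two-sample t-test on raw observations; returns (t, df, p)."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df, two_tailed_p(t, df)


if __name__ == "__main__":
    # Made-up ages for illustration only; these are not the study's data.
    ages_1 = [19, 19, 20, 21, 19, 20, 22]
    ages_2 = [18, 19, 19, 20, 21, 19]
    t, df, p = welch_t_test(ages_1, ages_2)
    print(f"t = {t:.2f}, df = {df:.1f}, two-tailed p = {p:.2f}")
```

Welch's form does not assume equal group variances, which is a safer default when group sizes differ; with equal variances it gives results close to the pooled test.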

| Group | 18 Years Old | 19 Years Old | 20 Years Old | 21+ Years Old |
|---|---|---|---|---|
| Intervention | 0% | 48% | 32% | 20% |
| Control | 9% | 50% | 23% | 18% |

| Group | Increased Score | Decreased Score | Same Score |
|---|---|---|---|
| Intervention | 43% | 14% | 43% |
| Control | 18% | 59% | 23% |
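The percentages in this table classify each student by the direction of the change from pre-test to post-test score. A minimal sketch of that tabulation, assuming each student's two scores sit at the same position in two lists; `score_change_shares` is a hypothetical helper:

```python
from collections import Counter


def score_change_shares(pre, post):
    """Whole-percent shares of students whose score rose, fell, or stayed the same.

    Assumes pre[i] and post[i] belong to the same student.
    """
    if len(pre) != len(post) or not pre:
        raise ValueError("need equal-length, non-empty score lists")
    counts = Counter(
        "Increased" if after > before else "Decreased" if after < before else "Same"
        for before, after in zip(pre, post)
    )
    n = len(pre)
    return {k: round(100 * counts[k] / n) for k in ("Increased", "Decreased", "Same")}


if __name__ == "__main__":
    # Hypothetical scores for five students; not the study's data.
    print(score_change_shares([6, 7, 5, 8, 6], [7, 7, 6, 7, 8]))
```

Each share is rounded independently (Python's `round` sends exact halves to the nearest even integer), so a row can sum to 99% or 101%.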

| Statistic | Pre-Test: Intervention | Pre-Test: Control | Post-Test: Intervention | Post-Test: Control |
|---|---|---|---|---|
| Mean | 6.68 | 7.59 | 7.52 | 6.73 |
| Median | 6 | 8 | 7 | 7 |
| Standard Deviation | 1.80 | 1.71 | 1.68 | 1.86 |
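This table summarizes each group's scores by mean, median, and standard deviation. The sketch below computes the same three statistics with Python's standard `statistics` module. It assumes the sample (n - 1) standard deviation, since the table does not say which form was used, and `describe` is a hypothetical helper.

```python
from statistics import mean, median, stdev


def describe(scores):
    """Mean, median, and sample standard deviation of one group's scores."""
    if len(scores) < 2:
        raise ValueError("need at least two scores for a standard deviation")
    return {
        "mean": round(mean(scores), 2),
        "median": median(scores),
        "sd": round(stdev(scores), 2),  # n - 1 denominator (assumed)
    }


if __name__ == "__main__":
    # Hypothetical scores; not the study's data.
    groups = {"pre": [6, 5, 8, 7, 6], "post": [7, 7, 9, 7, 8]}
    for name, scores in groups.items():
        print(name, describe(scores))
```

Reporting the median alongside the mean helps here because test scores on a short integer scale are often skewed, and the two can diverge (as in the intervention group's pre-test, mean 6.68 vs. median 6).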

| Statistic | Age of Participants in Two Groups | Pre-Test Scores of the Two Groups | Post- vs. Pre-Test Scores (Intervention Group) | Post- vs. Pre-Test Scores (Control Group) |
|---|---|---|---|---|
| t Stat | 0.87 | −2.55 | −3.67 | 2.02 |
| p (T ≤ t) | 0.38¹ | 0.01² | 0.001² | 0.06¹ |
| t Critical | 2.01 | 2.02 | 2.06 | 2.08 |
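The two right-hand columns compare each student's post-test with their own pre-test, which is the setting for a paired t-test. A minimal sketch, assuming a two-tailed test at a significance level of 0.05 (the level is not stated in the table); the helper names are hypothetical. Differences are taken as pre minus post, which yields a negative t when scores rise; this matches the table's sign pattern, where the intervention group's mean rose from 6.68 to 7.52 and its t Stat is −3.67. The critical value is found by bisection on a numerically integrated p-value.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df):
    """P(|T| >= |t|), by Simpson's rule on the density over [0, |t|]."""
    b = abs(t)
    if b == 0:
        return 1.0
    n = 2 * max(1000, int(100 * b))  # even number of panels
    h = b / n
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return min(1.0, max(0.0, 1 - 2 * total * h / 3))


def t_critical(df, alpha=0.05):
    """Two-tailed critical value c with P(|T| >= c) = alpha, via bisection."""
    lo, hi = 0.0, 1.0
    while two_tailed_p(hi, df) > alpha:  # widen until the root is bracketed
        hi *= 2
    for _ in range(50):
        mid = (lo + hi) / 2
        if two_tailed_p(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def paired_t_test(pre, post, alpha=0.05):
    """Paired t-test on per-student differences (pre minus post).

    Returns (t, df, two-tailed p, two-tailed critical value).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least 2 students")
    diffs = [a - b for a, b in zip(pre, post)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are equal; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    df = len(diffs) - 1
    return t, df, two_tailed_p(t, df), t_critical(df, alpha)


if __name__ == "__main__":
    # Hypothetical pre/post scores for six students; not the study's data.
    pre = [6, 5, 8, 7, 6, 7]
    post = [7, 7, 9, 7, 8, 8]
    t, df, p, crit = paired_t_test(pre, post)
    print(f"t = {t:.2f}, df = {df}, p = {p:.3f}, t critical = {crit:.2f}")
```

The difference is judged significant when |t| exceeds the critical value, which is equivalent to p falling below alpha.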

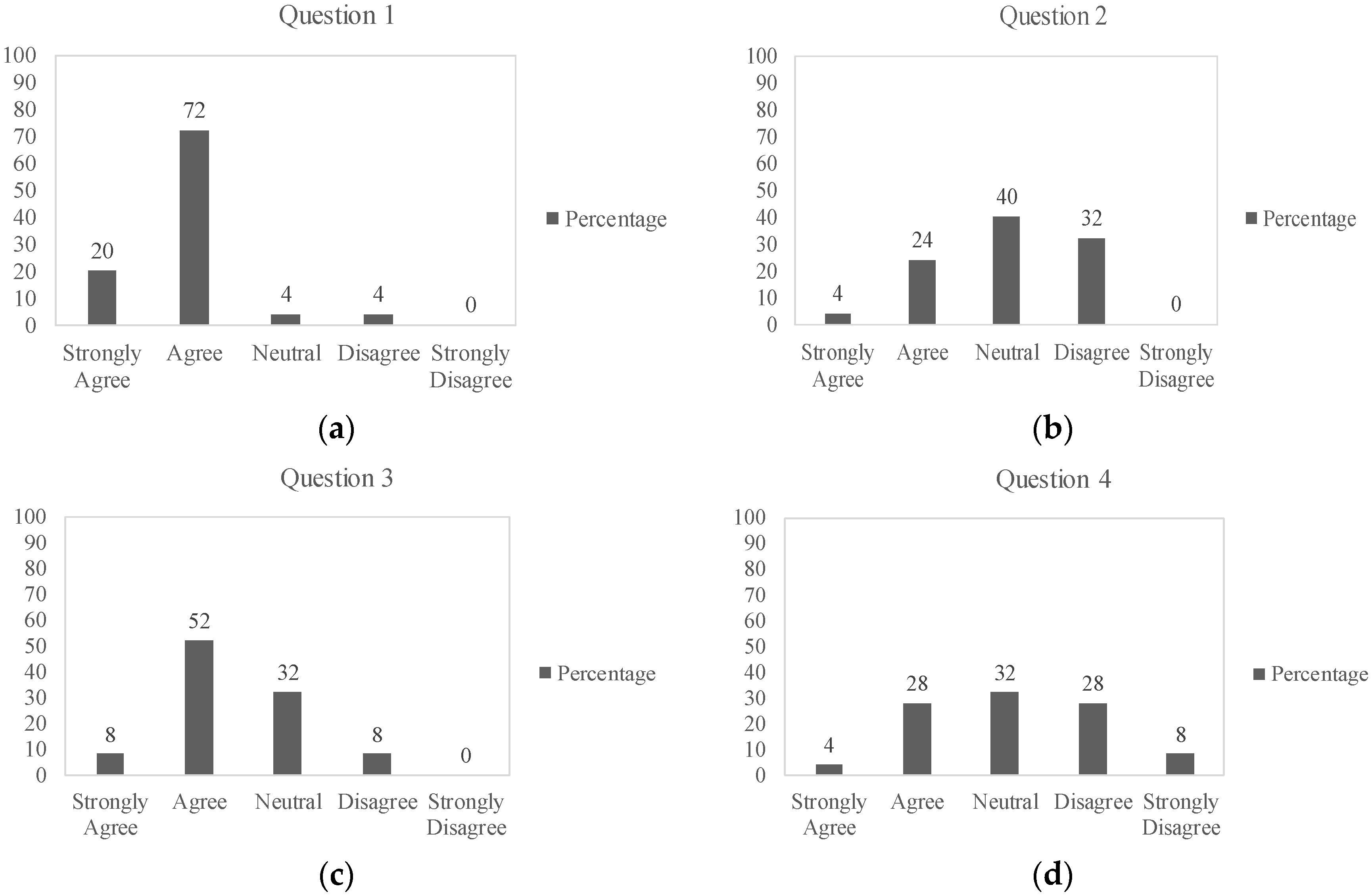

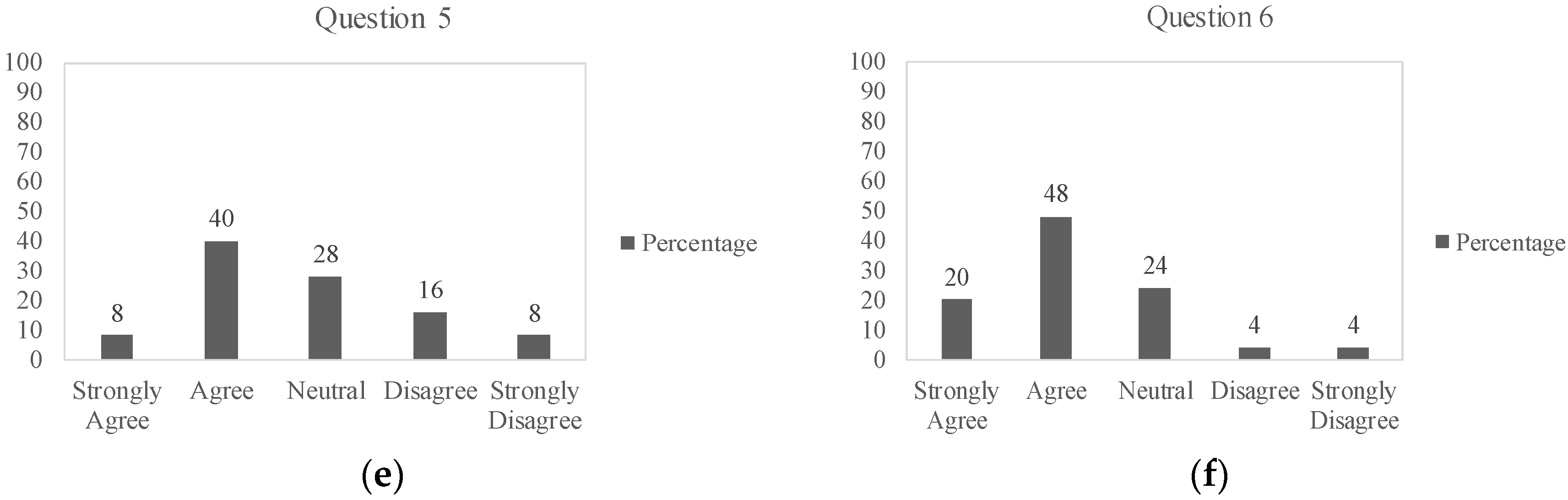

| Survey Questions | Mean | STD | Mode |
|---|---|---|---|
| Videos were good quality-wise (audio, visual, length, buffering) | 4.08 | 0.62 | 4 |
| Having lectures covered in videos was better than in classroom | 3 | 0.84 | 3 |
| Having a quiz helped me in understanding concepts better | 3.6 | 0.74 | 4 |
| I think one of the two quizzes was unnecessary | 2.92 | 1.01 | 3 |
| I felt more engaged to the course and I had a better control over course flow | 3.24 | 1.06 | 4 |
| I recommend using these modules to cover basic concepts | 3.76 | 0.94 | 4 |
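Each survey item is summarized by its mean, standard deviation, and mode. The sketch below assumes responses coded as integers on a 1 to 5 agreement scale and uses the sample standard deviation; both the coding and the `summarize_likert` helper are assumptions for illustration, since the scale definition is not given here.

```python
from statistics import mean, multimode, stdev


def summarize_likert(responses, low=1, high=5):
    """Mean, sample SD, and mode(s) for one survey item's Likert responses.

    Assumes integer codes on a low..high scale (1 to 5 by default).
    Ties for the mode are all returned, via statistics.multimode (Python 3.8+).
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses")
    if any(not low <= r <= high for r in responses):
        raise ValueError(f"responses must lie in {low}..{high}")
    return {
        "mean": round(mean(responses), 2),
        "sd": round(stdev(responses), 2),
        "mode": sorted(multimode(responses)),
    }


if __name__ == "__main__":
    # Hypothetical responses to one item; not the study's data.
    print(summarize_likert([4, 4, 5, 3, 4, 2, 4, 5]))
```

Returning every tied mode avoids silently picking one value when, for example, equal numbers of students answer 3 and 4.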

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Moradi, M.; Liu, L.; Luchies, C.; Patterson, M.M.; Darban, B. Enhancing Teaching-Learning Effectiveness by Creating Online Interactive Instructional Modules for Fundamental Concepts of Physics and Mathematics. Educ. Sci. 2018, 8, 109. https://doi.org/10.3390/educsci8030109
