Simulation and Feedback in Health Education: A Mixed Methods Study Comparing Three Simulation Modalities

Abstract

1. Introduction

2. Materials and Methods

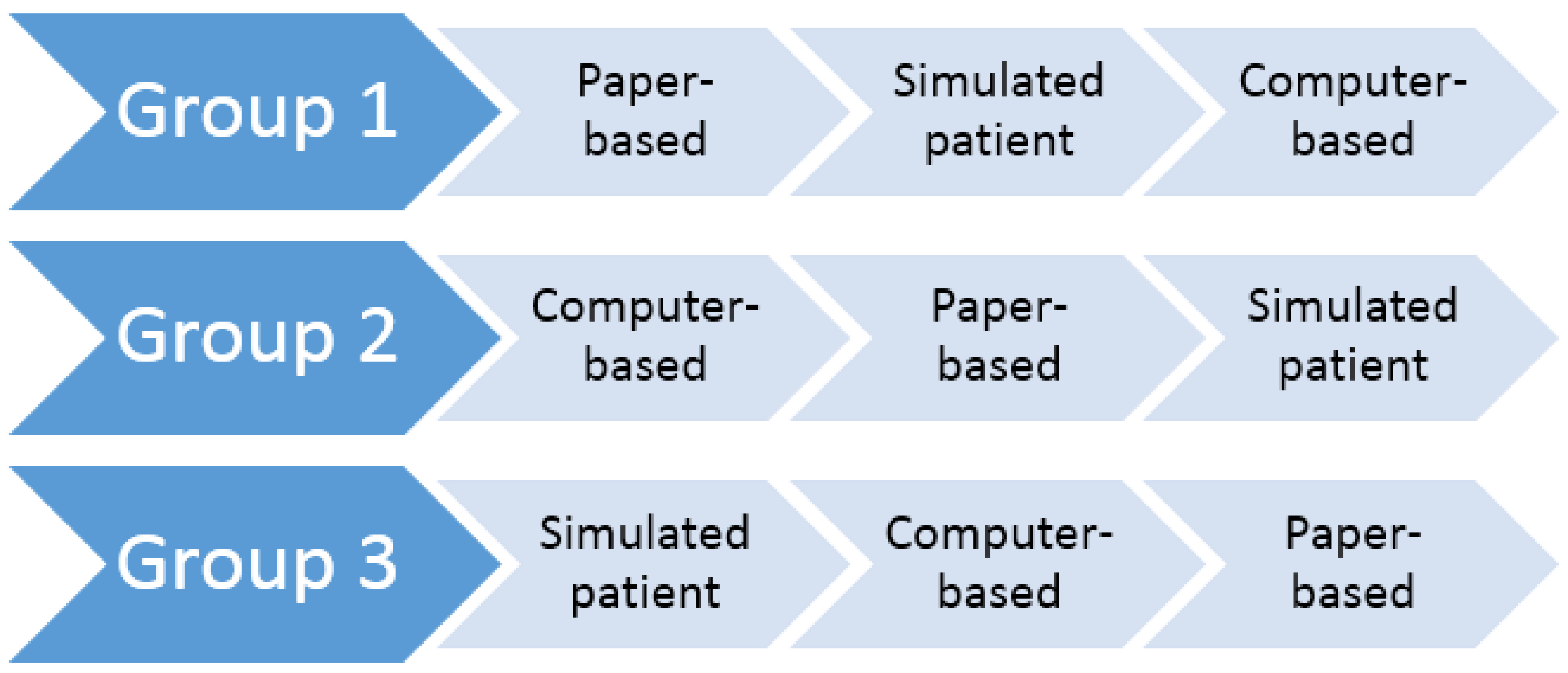

2.1. Study Design

- The paper-based simulation consisted of a series of written questions in which participants wrote down the questions they would ask the patient, the information they would provide to the patient, and their recommended course of action.

- The computer-based simulation was designed as a three-dimensional computer game in which participants interacted with the patient by selecting text options (questions, advice, and actions) from a list; this computer simulation has been described in more detail in previous studies [11,12].

- The simulated patient (actor-based) simulation was designed as a role-play exercise in which actors played the role of a patient presenting to the pharmacy. Each actor was given a standard backstory to follow.

- For the paper-based simulation, feedback was delivered immediately after scenario completion as model answers to the written questions, accompanied by a small group discussion with the other participants in the same group allocation. A practicing pharmacist employed as a lecturer at UON explained the most appropriate course of action for the scenario and facilitated the small group discussion.

- For the computer-based simulation, feedback was delivered automatically within the game immediately after scenario completion, in the form of a detailed scorecard. Specifically, the scorecard listed each text response the participant had chosen, together with the feedback and points associated with that response, as well as a total score.

- For the simulated patient simulation, each participant received a video recording of their role-play, along with their overall score and feedback from experienced pharmacists who assessed the performance using a marking guide; this feedback was provided on the following day.

2.2. Data Collection

2.3. Data Analysis

3. Results

3.1. Demographics

3.2. Simulation Experience, Feedback Provision, and Overall Satisfaction

Descriptive Statistics

3.3. Manifest-Level Themes

“Different and fun way of learning” [computer-based simulation]

“Great learning experience. Shows real life scenarios that can happen within a pharmacy” [simulated patient simulation]

“Interactive component was the best as you got to discuss answers and hear other people’s opinions” [feedback for the paper-based simulation]

“I think it was a good learning modality that should be given to first year students as it will allow students to practice communicating with a customer before being exposed to real life situations” [computer-based simulation]

3.4. Latent-Level Themes

“Fun way to put pharmacy practice into reality” [computer-based simulation]

“Boring, does not simulate true experiences” [paper-based simulation]

“The feedback was given straight after the tutorial. Was really good to go through the answers at the end with everyone because you can learn from your peers” [feedback for paper-based simulation]

“Feedback is very structured and clear, but we may need more detailed feedback on when to ask some particular questions” [computer-based simulation]

“Feedback was given straight away which is good to review your strengths and weaknesses” [feedback for computer-based simulation]

“This is the best way to give feedback but may not be the fastest way due to its own nature” [feedback for simulated patient simulation]

“Little bit complicated to navigate initially but was good after I understood how to work it” [computer-based simulation]

“I wasn’t aware the patient would just walk in and the scenario would start, was a little off putting and forgot to introduce myself” [simulated patient simulation]

4. Discussion

4.1. Principal Findings

4.2. Limitations

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A. Student Satisfaction

| Statement | SD 1 | D 2 | N 3 | A 4 | SA 5 |
|---|---|---|---|---|---|
| The scenario was fun to work through | | | | | |
| I was interested in the subject matter covered by the scenario | | | | | |
| This was an engaging way to present the scenario | | | | | |
| Working through the scenario helped me learn about the subject matter | | | | | |
| I found the scenario easy to complete | | | | | |
| I think this was a good way to learn about pharmacy practice issues | | | | | |
| I think that this was a good way to learn about taking a patient/medication/medical history | | | | | |
| I think this was a good way to learn about counselling patients | | | | | |
| I think this was a good way to learn about medication/health condition related problems | | | | | |
| I would like to work through this scenario again using this learning modality | | | | | |
| I would like to work through different scenarios using this learning modality | | | | | |

- (1) Please write any positive points you have about this modality of learning.

- (2) Please write any negative points you have about this modality of learning.

- (3) Please provide any other comments in relation to this learning modality.

References

- [1] Bradley, P. The history of simulation in medical education and possible future directions. Med. Educ. 2006, 40, 254–262.

- [2] Issenberg, S.B.; Scalese, R.J. Simulation in health care education. Perspect. Biol. Med. 2008, 51, 31–46.

- [3] Cook, D.A.; Hatala, R.; Brydges, R.; Zendejas, B.; Szostek, J.H.; Wang, A.T.; Erwin, P.J.; Hamstra, S.J. Technology-enhanced simulation for health professions education. JAMA 2011, 306.

- [4] Watson, K.; Wright, A.; Morris, N.; McMeeken, J.; Rivett, D.; Blackstock, F.; Jones, A.; Haines, T.; O’Connor, V.; Watson, G.; et al. Can simulation replace part of clinical time? Two parallel randomised controlled trials. Med. Educ. 2012, 46, 657–667.

- [5] Larue, C.; Pepin, J.; Allard, É. Simulation in preparation or substitution for clinical placement: A systematic review of the literature. J. Nurs. Educ. Pract. 2015, 5.

- [6] Kelly, M.A.; Berragan, E.; Husebo, S.E.; Orr, F. Simulation in nursing education-international perspectives and contemporary scope of practice. J. Nurs. Scholarsh. 2016, 48, 312–321.

- [7] Cant, R.P.; Cooper, S.J. Simulation-based learning in nurse education: Systematic review. J. Adv. Nurs. 2010, 66, 3–15.

- [8] Biggs, J.; Tang, C. Teaching for Quality Learning at University, 4th ed.; Open University Press: Maidenhead, UK, 2011.

- [9] Quinton, S.; Smallbone, T. Feeding forward: using feedback to promote student reflection and learning—A teaching model. Innov. Educ. Teach. Int. 2010, 47, 125–135.

- [10] Dreifuerst, K.T. The essentials of debriefing in simulation learning: A concept analysis. Nurs. Educ. Perspect. 2009, 30, 109–114.

- [11] Bindoff, I.; Cummings, E.; Ling, T.; Chalmers, L.; Bereznicki, L. Development of a flexible and extensible computer-based simulation platform for healthcare students. Stud. Health Technol. Inform. 2015, 208, 83–87.

- [12] Bindoff, I.; Ling, T.; Bereznicki, L.; Westbury, J.; Chalmers, L.; Peterson, G.; Ollington, R. A computer simulation of community pharmacy practice for educational use. Am. J. Pharm. Educ. 2014, 78.

- [13] Gale, N.K.; Heath, G.; Cameron, E.; Rashid, S.; Redwood, S. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med. Res. Methodol. 2013, 13.

- [14] Boyatzis, R.E. Transforming Qualitative Information: Thematic Analysis and Code Development; Sage Publications: Thousand Oaks, CA, USA, 1998.

- [15] Lee, K.; Hoti, K.; Hughes, J.D.; Emmerton, L. Dr Google and the consumer: A qualitative study exploring the navigational needs and online health information-seeking behaviors of consumers with chronic health conditions. J. Med. Internet Res. 2014, 16.

- [16] Creswell, J.W. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 4th ed.; Sage Publications: Thousand Oaks, CA, USA, 2014.

- [17] Carini, R.M.; Kuh, G.D.; Klein, S.P. Student engagement and student learning: Testing the linkages. Res. High. Educ. 2006, 47, 1–32.

- [18] Kuh, G.D.; Kinzie, J.; Buckley, J.A.; Bridges, B.K.; Hayek, J.C. What matters to student success: A review of the literature. In Commissioned Report for the National Symposium on Postsecondary Student Success: Spearheading a Dialog on Student Success; NPEC: Washington, DC, USA, 2006.

Participant demographics (n = 20).

| Sex | n (%) |
|---|---|
| Male | 3 (15.0) |
| Female | 17 (85.0) |
| Age Range (years) | |
| 22–25 | 11 (55.0) |
| 26–29 | 4 (20.0) |
| 30+ | 5 (25.0) |
| Currently Working in a Community Pharmacy | |
| Yes | 11 (55.0) |
| No | 9 (45.0) |

Satisfaction items by scenario (Scenario One: Reflux; Scenario Two: Angina; Scenario Three: Constipation) and simulation modality. Values are median (mean).

| Satisfaction Items | Reflux: Paper | Reflux: Computer | Reflux: Patient | Angina: Paper | Angina: Computer | Angina: Patient | Constipation: Paper | Constipation: Computer | Constipation: Patient |
|---|---|---|---|---|---|---|---|---|---|
| Percentage of total maximum rating ¹ | 91.8% (77.8%) | 90.0% (78.7%) | 81.8% (76.9%) | 90.9% (78.4%) | 80.0% (73.1%) | 80.0% (75.8%) | 76.4% (70.0%) | 70.9% (60.9%) | 91.8% (78.5%) |
| The scenario was fun to work through | 4.0 (4.0) | 4.5 (4.5) | 4.0 (4.0) | 4.5 (4.0) | 4.0 (4.3) | 4.0 (4.1) | 3.0 (3.4) | 3.0 (3.3) | 4.5 (4.2) |
| I was interested in the subject matter covered by the scenario | 5.0 (4.6) | 5.0 (4.8) | 4.0 (4.3) | 5.0 (4.5) | 4.0 (4.1) | 4.0 (4.3) | 4.0 (4.0) | 4.0 (4.0) | 4.5 (4.3) |
| This was an engaging way to present the scenario | 4.5 (3.8) | 5.0 (4.7) | 4.0 (4.4) | 4.0 (3.8) | 4.0 (4.1) | 4.0 (4.3) | 4.0 (3.6) | 4.0 (3.9) | 4.5 (4.3) |
| Working through the scenario helped me learn about the subject matter | 5.0 (4.9) | 4.5 (4.3) | 4.0 (4.3) | 4.0 (4.3) | 4.0 (4.0) | 4.0 (3.7) | 4.0 (4.1) | 3.0 (3.1) | 4.0 (4.2) |
| I found the scenario easy to complete | 5.0 (4.6) | 4.0 (3.7) | 3.0 (3.4) | 4.0 (4.0) | 4.0 (4.4) | 4.0 (3.9) | 4.0 (4.0) | 3.0 (2.4) | 4.0 (3.5) |
| I think this was a good way to learn about pharmacy practice issues | 4.0 (4.3) | 4.0 (4.3) | 4.0 (4.4) | 5.0 (4.7) | 4.0 (4.1) | 4.0 (4.3) | 4.0 (3.9) | 3.0 (3.2) | 5.0 (4.7) |
| I think this was a good way to learn about taking a patient/medication/medical history | 4.0 (4.0) | 4.0 (4.0) | 5.0 (4.6) | 5.0 (4.7) | 4.0 (3.7) | 4.0 (4.4) | 4.0 (3.9) | 3.0 (3.0) | 5.0 (4.7) |
| I think this was a good way to learn about counselling patients | 5.0 (4.3) | 4.0 (3.8) | 5.0 (4.6) | 3.5 (3.8) | 4.0 (3.7) | 4.0 (4.4) | 3.0 (3.7) | 4.0 (3.4) | 5.0 (4.8) |
| I think this was a good way to learn about medication/health condition related problems | 5.0 (4.3) | 4.5 (4.5) | 4.0 (4.0) | 5.0 (4.8) | 4.0 (3.9) | 4.0 (4.0) | 4.0 (4.0) | 4.0 (3.6) | 4.0 (3.8) |
| I would like to work through this scenario again using this learning modality | 4.0 (4.0) | 5.0 (4.7) | 4.0 (4.3) | 5.0 (4.5) | 4.0 (3.9) | 4.0 (4.3) | 4.0 (3.9) | 4.0 (3.6) | 5.0 (4.7) |
| I would like to work through different scenarios using this learning modality | 5.0 (4.7) | 5.0 (4.7) | 4.0 (4.4) | 5.0 (4.7) | 4.0 (4.0) | 4.0 (4.3) | 4.0 (3.7) | 4.0 (3.9) | 5.0 (4.7) |

Feedback items by scenario (Scenario One: Reflux; Scenario Two: Angina; Scenario Three: Constipation) and simulation modality. Values are median (mean).

| Feedback Items | Reflux: Paper | Reflux: Computer | Reflux: Patient | Angina: Paper | Angina: Computer | Angina: Patient | Constipation: Paper | Constipation: Computer | Constipation: Patient |
|---|---|---|---|---|---|---|---|---|---|
| Percentage of total maximum rating ² | 98.2% (86.0%) | 84.5% (82.4%) | 98.2% (92.2%) | 95.5% (91.8%) | 80.0% (80.4%) | 100% (95.5%) | 92.7% (85.6%) | 72.7% (72.0%) | 100% (97.5%) |
| Feedback provided for this scenario was provided in a timely manner | 5.0 (4.6) | 5.0 (4.7) | 5.0 (4.7) | 5.0 (4.7) | 4.0 (4.3) | 5.0 (4.4) | 5.0 (4.4) | 5.0 (4.4) | 5.0 (4.8) |
| Feedback provided from the learning modality was constructive and clarified what good performance is | 5.0 (4.4) | 4.5 (4.2) | 5.0 (4.7) | 5.0 (4.7) | 4.0 (4.1) | 5.0 (4.9) | 4.0 (4.4) | 4.0 (4.0) | 5.0 (4.8) |
| Feedback provided allowed me to reflect on my performance | 5.0 (4.6) | 4.5 (4.3) | 5.0 (4.7) | 5.0 (5.0) | 4.0 (4.1) | 5.0 (4.9) | 5.0 (4.4) | 4.0 (4.0) | 5.0 (5.0) |
| The feedback provided motivates my learning | 5.0 (4.1) | 4.5 (4.2) | 5.0 (4.6) | 5.0 (5.0) | 4.0 (4.0) | 5.0 (4.9) | 5.0 (4.4) | 4.0 (4.0) | 5.0 (4.8) |
| The feedback provided allowed me to analyze my patient history taking skills | 5.0 (4.4) | 4.0 (4.2) | 5.0 (4.7) | 4.5 (4.5) | 4.0 (4.4) | 5.0 (4.9) | 4.0 (4.1) | 4.0 (3.7) | 5.0 (4.8) |
| The feedback provided allowed me to self-analyze my clinical knowledge | 5.0 (4.9) | 4.0 (4.2) | 5.0 (4.6) | 4.5 (4.5) | 4.0 (4.1) | 5.0 (4.9) | 5.0 (4.4) | 4.0 (4.0) | 5.0 (5.0) |
| Feedback provided allowed me to analyze/self-assess my patient counselling advice | 5.0 (4.1) | 4.0 (4.2) | 5.0 (4.7) | 5.0 (4.7) | 4.0 (3.7) | 5.0 (4.7) | 5.0 (4.3) | 4.0 (3.9) | 5.0 (4.8) |
| Feedback provided allowed me to reflect on my communication skills | 5.0 (4.0) | 4.0 (4.0) | 5.0 (4.7) | 5.0 (4.7) | 4.0 (3.7) | 5.0 (4.9) | 4.0 (4.1) | 3.0 (3.3) | 5.0 (4.8) |
| The feedback provided allowed me to reflect on my verbal communication skills | 5.0 (3.7) | 4.0 (3.8) | 5.0 (4.4) | 4.0 (3.8) | 4.0 (3.9) | 5.0 (4.9) | 4.0 (4.0) | 2.0 (2.4) | 5.0 (5.0) |
| The feedback provided allowed me to reflect on my non-verbal communication skills | 4.0 (4.1) | 4.0 (3.5) | 4.0 (4.3) | 4.5 (4.2) | 4.0 (3.9) | 5.0 (4.4) | 5.0 (4.3) | 3.0 (2.9) | 5.0 (4.8) |
| Feedback provided will alter how I approach future pharmacy practice issues | 5.0 (4.4) | 4.0 (4.0) | 5.0 (4.6) | 5.0 (4.7) | 4.0 (4.0) | 5.0 (4.7) | 5.0 (4.3) | 3.0 (3.0) | 5.0 (5.0) |

| Paper-Based Themes | Computer-Based Themes | Simulated Patient (Actor-Based) Themes |
|---|---|---|
| Simulation Experience | Simulation Experience | Simulation Experience |
| Feedback | Feedback | Feedback |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tait, L.; Lee, K.; Rasiah, R.; Cooper, J.M.; Ling, T.; Geelan, B.; Bindoff, I. Simulation and Feedback in Health Education: A Mixed Methods Study Comparing Three Simulation Modalities. Pharmacy 2018, 6, 41. https://doi.org/10.3390/pharmacy6020041
