Wireless Sensor Network Operating System Design Rules Based on Real-World Deployment Survey

Abstract

1. Introduction

2. Methodology

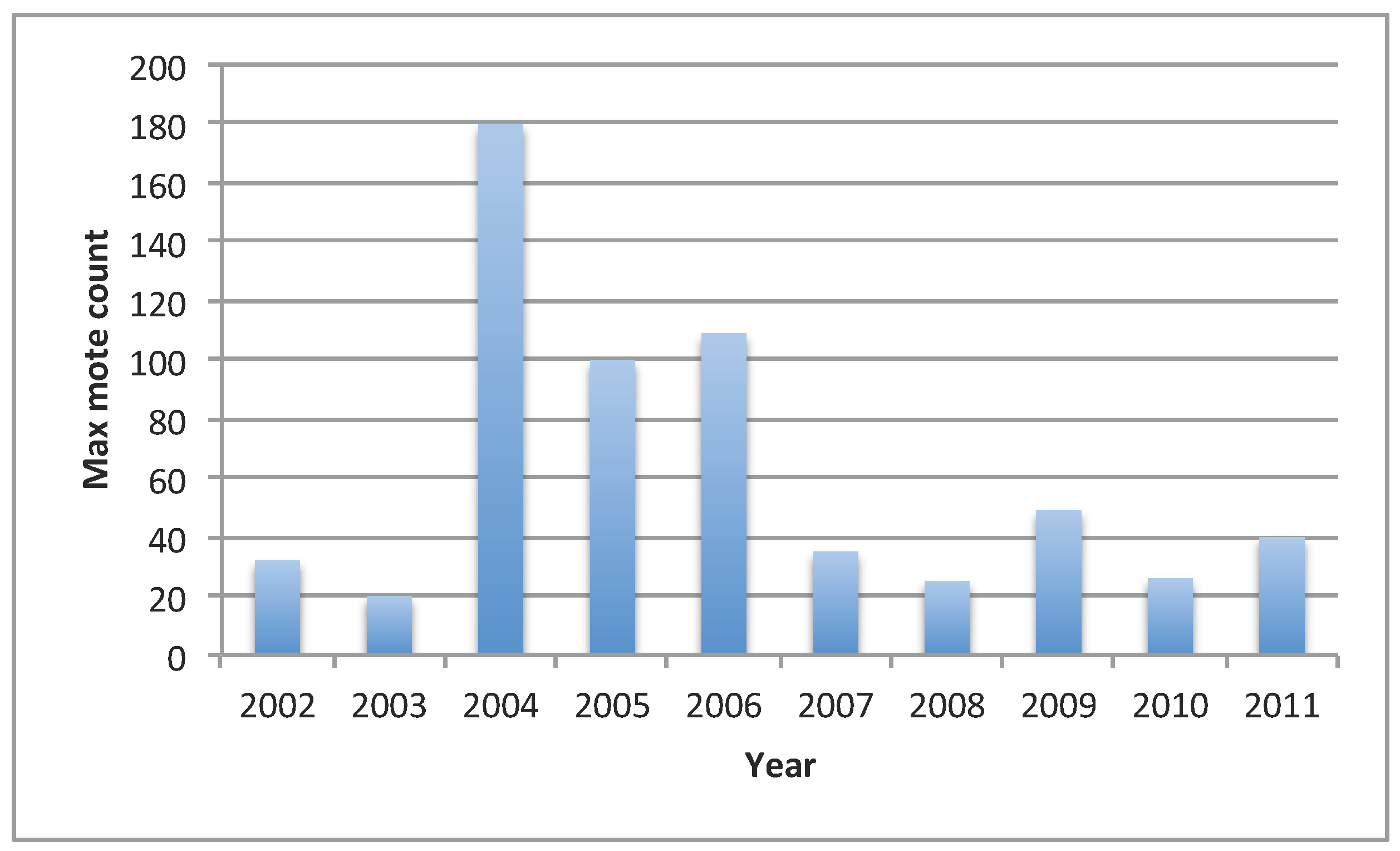

- The years 2002 through 2011 have been reviewed uniformly, without emphasis on any particular year. Deployments before 2002 are not considered, as early sensor network research projects used custom hardware that differed significantly from modern embedded systems.

- Articles have been searched for in the Association for Computing Machinery (ACM) Digital Library (http://dl.acm.org/), the Institute of Electrical and Electronics Engineers (IEEE) Xplore Digital Library (http://ieeexplore.ieee.org/), and the Elsevier ScienceDirect and SpringerLink databases. Several further articles have been found through references cited by articles from these databases.

- Deployments are selected to cover a wide WSN application range, including environmental monitoring, animal monitoring, human-centric applications, infrastructure monitoring, smart buildings and military applications.

3. Survey Results

| Nr | Codename | Year | Title | Class | Description |
|---|---|---|---|---|---|
| 1 | Habitats [11] | 2002 | Wireless Sensor Networks for Habitat Monitoring | Habitat and weather monitoring | One of the first sensor network deployments, designed for bird nest monitoring on a remote island |
| 2 | Minefield [12] | 2003 | Collaborative Networking Requirements for Unattended Ground Sensor Systems | Opposing force investigation | Unattended ground sensor system for a self-healing minefield application |
| 3 | Battlefield [19] | 2004 | Energy-Efficient Surveillance System Using Wireless Sensor Networks | Battlefield surveillance | System for tracking the position of moving targets in an energy-efficient and stealthy manner |
| 4 | Line in the sand [20] | 2004 | A Line in the Sand: A Wireless Sensor Network for Target Detection, Classification, and Tracking | Battlefield surveillance | System for intrusion detection, target classification and tracking |
| 5 | Counter-sniper [21] | 2004 | Sensor Network-Based Countersniper System | Opposing force investigation | An ad hoc wireless sensor network-based system that detects and accurately locates shooters, even in urban environments |
| 6 | Electro-shepherd [22] | 2004 | Electronic Shepherd—A Low-Cost, Low-Bandwidth, Wireless Network System | Domestic animal monitoring and control | Experiments with sheep GPS and sensor tracking |
| 7 | Virtual fences [23] | 2004 | Virtual Fences for Controlling Cows | Domestic animal monitoring and control | Experiments with virtual fences for domestic animal control |
| 8 | Oil tanker [24] | 2005 | Design and Deployment of Industrial Sensor Networks: Experiences from a Semiconductor Plant and the North Sea | Industrial equipment monitoring and control | Sensor network for industrial machinery monitoring, using Intel motes with Bluetooth and high-frequency sampling |
| 9 | Enemy vehicles [25] | 2005 | Design and Implementation of a Sensor Network System for Vehicle Tracking and Autonomous Interception | Opposing force investigation | A networked system of distributed sensor nodes that detects an evader and aids a pursuer in capturing the evader |
| 10 | Trove game [26] | 2005 | Trove: A Physical Game Running on an Ad hoc Wireless Sensor Network | Child education and sensor games | Physical multiplayer real-time game, using collaborative sensor nodes |
| 11 | Elder Radio-Frequency Identification (RFID) [27] | 2005 | A Prototype on RFID and Sensor Networks for Elder Healthcare: Progress Report | Medication intake accounting | In-home elder healthcare system integrating sensor networks and RFID technologies for medication intake monitoring |
| 12 | Murphy potatoes [28] | 2006 | Murphy Loves Potatoes: Experiences from a Pilot Sensor Network Deployment in Precision Agriculture | Precision agriculture | A rather unsuccessful sensor network pilot deployment for precision agriculture, demonstrating valuable lessons learned |
| 13 | Firewxnet [29] | 2006 | FireWxNet: A Multi-Tiered Portable Wireless System for Monitoring Weather Conditions in Wildland Fire Environments | Forest fire detection | A multi-tier WSN for safe and easy monitoring of fire and weather conditions over a wide range of locations and elevations within forest fires |
| 14 | AlarmNet [30] | 2006 | ALARM-NET: Wireless Sensor Networks for Assisted-Living and Residential Monitoring | Human health telemonitoring | Wireless sensor network for assisted-living and residential monitoring, integrating environmental and physiological sensors and providing end-to-end secure communication and sensitive medical data protection |
| 15 | Ecuador Volcano [31] | 2006 | Fidelity and Yield in a Volcano Monitoring Sensor Network | Volcano monitoring | Sensor network for volcano seismic activity monitoring, using high-frequency sampling and distributed event detection |
| 16 | Pet game [32] | 2006 | Wireless Sensor Network-Based Mobile Pet Game | Child education and sensor games | Augmenting a mobile pet game with physical sensing capabilities: sensor nodes act as eyes, ears and skin |
| 17 | Plug [33] | 2007 | A Platform for Ubiquitous Sensor Deployment in Occupational and Domestic Environments | Smart energy usage | Wireless sensor network for human activity logging in offices; sensor nodes implemented as power strips |
| 18 | B-Live [34] | 2007 | B-Live—A Home Automation System for Disabled and Elderly People | Home/office automation | Home automation for disabled and elderly people integrating heterogeneous wired and wireless sensor and actuator modules |
| 19 | Biomotion [35] | 2007 | A Compact, High-Speed, Wearable Sensor Network for Biomotion Capture and Interactive Media | Smart user interfaces and art | Wireless sensor platform designed for processing multipoint human motion with low latency and high resolution. Example application: interactive dance, where movements of multiple dancers are translated into real-time audio or video |
| 20 | AID-N [36] | 2007 | The Advanced Health and Disaster Aid Network: A Light-Weight Wireless Medical System for Triage | Human health telemonitoring | Lightweight medical systems to help emergency service providers in mass casualty incidents |
| 21 | Firefighting [37] | 2007 | A Wireless Sensor Network and Incident Command Interface for Urban Firefighting | Human-centric applications | Wireless sensor network and incident command interface for firefighting and emergency response, especially in large and complex buildings. During a fire, its spread is tracked, and firefighter position and health status are monitored. |
| 22 | Rehabil [38] | 2007 | Ubiquitous Rehabilitation Center: An Implementation of a Wireless Sensor Network-Based Rehabilitation Management System | Human indoor tracking | Zigbee sensor network-based ubiquitous rehabilitation center for patient and rehabilitation machine monitoring |
| 23 | CargoNet [39] | 2007 | CargoNet: A Low-Cost Micropower Sensor Node Exploiting Quasi-Passive Wake Up for Adaptive Asynchronous Monitoring of Exceptional Events | Good and daily object tracking | System of low-cost, micropower active sensor tags for environmental monitoring at the crate and case level for supply-chain management and asset security |
| 24 | Fence monitor [40] | 2007 | Fence Monitoring—Experimental Evaluation of a Use Case for Wireless Sensor Networks | Security systems | Sensor nodes attached to a fence for collaborative intrusion detection |
| 25 | BikeNet [41] | 2007 | The BikeNet Mobile Sensing System for Cyclist Experience Mapping | City environment monitoring | Extensible mobile sensing system for cyclist experience mapping (personal, bicycle and environmental sensing), leveraging opportunistic networking principles |
| 26 | BriMon [42] | 2008 | BriMon: A Sensor Network System for Railway Bridge Monitoring | Bridge monitoring | Delay-tolerant network for bridge vibration monitoring using accelerometers. A gateway mote collects data and forwards it opportunistically to a mobile base station attached to a passing train. |
| 27 | IP net [43] | 2008 | Experiences from Two Sensor Network Deployments—Self-Monitoring and Self-Configuration Keys to Success | Battlefield surveillance | Indoor and outdoor surveillance network for detecting troop movement |
| 28 | Smart home [44] | 2008 | The Design and Implementation of Smart Sensor-Based Home Networks | Home/office automation | Wireless sensor network deployed in a miniature model house, which controls different household equipment: window curtains, gas valves, electric outlets, TV, refrigerator and door locks |
| 29 | SVATS [45] | 2008 | SVATS: A Sensor-Network-Based Vehicle Anti-Theft System | Anti-theft systems | Low-cost, reliable, sensor-network-based distributed vehicle anti-theft system with a low false-alarm rate |
| 30 | Hitchhiker [46] | 2008 | The Hitchhiker's Guide to Successful Wireless Sensor Network Deployments | Flood and glacier detection | Multiple real-world sensor network deployments, including glacier detection; experience and suggestions reported |
| 31 | Daily morning [47] | 2008 | Detection of Early Morning Daily Activities with Static Home and Wearable Wireless Sensors | Daily activity recognition | Flexible, cost-effective, wireless in-home activity monitoring system integrating static and mobile body sensors for assisting patients with cognitive impairments |
| 32 | Heritage [48] | 2009 | Monitoring Heritage Buildings with Wireless Sensor Networks: The Torre Aquila Deployment | Heritage building and site monitoring | Three different motes (sensing temperature, vibrations and deformation) deployed in a historical tower to monitor its health and identify potential damage risks |
| 33 | AC meter [49] | 2009 | Design and Implementation of a High-Fidelity AC Metering Network | Smart energy usage | AC outlet power consumption measurement devices, which are powered from the same AC line but communicate wirelessly with an IPv6 router |
| 34 | Coal mine [50] | 2009 | Underground Coal Mine Monitoring with Wireless Sensor Networks | Coal mine monitoring | Self-adaptive coal mine wireless sensor network (WSN) system for rapid detection of structure variations caused by underground collapses |
| 35 | ITS [51] | 2009 | Wireless Sensor Networks for Intelligent Transportation Systems | Vehicle tracking and traffic monitoring | Traffic monitoring system implemented through WSN technology within the SAFESPOT Project |
| 36 | Underwater [52] | 2010 | Adaptive Decentralized Control of Underwater Sensor Networks for Modeling Underwater Phenomena | Underwater networks | Measurement of the dynamics of underwater bodies and their impact on the global environment, using sensor networks with nodes adapting their depth dynamically |
| 37 | PipeProbe [53] | 2010 | PipeProbe: A Mobile Sensor Droplet for Mapping Hidden Pipeline | Power line and water pipe monitoring | Mobile sensor system for determining the spatial topology of hidden water pipelines behind walls |
| 38 | Badgers [54] | 2010 | Evolution and Sustainability of a Wildlife Monitoring Sensor Network | Wild animal monitoring | Badger monitoring in a forest |
| 39 | Helens volcano [55] | 2011 | Real-World Sensor Network for Long-Term Volcano Monitoring: Design and Findings | Volcano monitoring | Robust and fault-tolerant WSN for active volcano monitoring |
| 40 | Tunnels [56] | 2011 | Is There Light at the Ends of the Tunnel? Wireless Sensor Networks for Adaptive Lighting in Road Tunnels | Tunnel monitoring | Closed-loop wireless sensor and actuator system for adaptive lighting control in operational tunnels |

3.1. Deployment State and Attributes

| Nr | Codename | Deployment state | Mote count | Heterogeneous motes | Base stations | Base station hardware |
|---|---|---|---|---|---|---|
| 1 | Habitats | pilot | 32 | n | 1 | Mote + PC with satellite link to Internet |
| 2 | Minefield | pilot | 20 | n | 0 | All motes capable of connecting to a PC via Ethernet |
| 3 | Battlefield | prototype | 70 | y (soft, by role) | 1 | Mote + PC |
| 4 | Line in the sand | pilot | 90 | n | 1 | Root connects to long-range radio relay |
| 5 | Counter-sniper | prototype | 56 | n | 1 | Mote + PC |
| 6 | Electro-shepherd | pilot | 180 | y | 1+ | Mobile mote |
| 7 | Virtual fences | prototype | 8 | n | 1 | Laptop |
| 8 | Oil tanker | pilot | 26 | n | 4 | Stargate gateway + Intel mote, wall powered |
| 9 | Enemy vehicles | pilot | 100 | y | 1 | Mobile power motes - laptop on wheels |
| 10 | Trove game | pilot | 10 | n | 1 | Mote + PC |
| 11 | Elder RFID | prototype | 3 | n | 1 | Mote + PC |
| 12 | Murphy potatoes | pilot | 109 | n | 1 | Stargate gateway + Tnode, solar panel |
| 13 | Firewxnet | pilot | 13 | n | 1 Base Station (BS) + 5 gateways | Gateway: Soekris net4801 with Gentoo Linux and Trango Access5830 long-range 10 Mbps wireless; BS: PC with satellite link 512/128 kbps |
| 14 | AlarmNet | prototype | 15 | y | varies | Stargate gateway with MicaZ, wall powered |
| 15 | Ecuador Volcano | pilot | 19 | y | 1 | Mote + PC |
| 16 | Pet game | prototype | ? | n | 1+ | Mote + MIB510 board + PC |
| 17 | Plug | pilot | 35 | n | 1 | Mote + PC |
| 18 | B-Live | pilot | 10+ | y | 1 | B-Live modules connected to PC, wheelchair computer, etc. |
| 19 | Biomotion | pilot | 25 | n | 1 | Mote + PC |
| 20 | AID-N | pilot | 10 | y | 1+ | Mote + PC |
| 21 | Firefighting | prototype | 20 | y | 1+ | ? |
| 22 | Rehabil | prototype | ? | y | 1 | Mote + PC |
| 23 | CargoNet | pilot | <10 | n | 1+ | Mote + PC? |
| 24 | Fence monitor | prototype | 10 | n | 1 | Mote + PC? |
| 25 | BikeNet | prototype | 5 | n | 7+ | 802.15.4/Bluetooth bridge + Nokia N80 OR mote + Aruba AP-70 embedded PC |
| 26 | BriMon | prototype | 12 | n | 1 | Mobile train TMote, static bridge TMotes |
| 27 | IP net | pilot | 25 | n | 1 | Mote + PC? |
| 28 | Smart home | prototype | 12 | y | 1 | Embedded PC with touchscreen, internet, wall powered |
| 29 | SVATS | prototype | 6 | n | 1 | ? |
| 30 | Hitchhiker | pilot? | 16 | 1 | ? | |
| 31 | Daily morning | prototype | 1 | n | 1 | Mote + MIB510 board + PC |
| 32 | Heritage | stable | 17 | y | 1 | 3Mate mote + Gumstix embedded PC with SD card and WiFi |
| 33 | AC meter | pilot | 49 | n | 2+ | Meraki Mini and the OpenMesh Mini-Router wired together with radio |
| 34 | Coal mine | prototype | 27 | n | 1 | ? |
| 35 | ITS | prototype | 8 | n | 1 | ? |
| 36 | Underwater | prototype | 4 | n | 0 | - |
| 37 | PipeProbe | prototype | 1 | n | 1 | Mote + PC |
| 38 | Badgers | stable | 74 mobile + 26? static | y | 1+ | Mote |
| 39 | Helens volcano | pilot | 13 | n | 1 | ? |
| 40 | Tunnels | pilot | 40 | n | 2 | Mote + Gumstix Verdex Pro |

- Design rule 1:

- The communication stack included in the default OS libraries should concentrate on usability, simplicity and resource efficiency, rather than on complex, resource-intensive protocols designed to scale to thousands of nodes.

- Design rule 2:

- Sink-oriented protocols must be provided, optionally with support for multiple sinks.

- Design rule 3:

- The OS toolset must include a default base station application that is easily extensible to user-specific needs.
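As an illustration of design rules 2 and 3, the following is a minimal sketch, assuming a static and already known connectivity graph, of the collection tree that a sink-oriented protocol with multi-sink support builds: a breadth-first search started from all sinks at once gives each mote its hop distance to the nearest sink and a parent one hop closer to it. The function names and the example topology are hypothetical; deployed protocols build the same structure in a distributed way, from beacons and link-quality estimates.

```python
from collections import deque


def build_sink_tree(links, sinks):
    """Multi-source BFS started from all sinks at once.

    links: dict mapping a node id to a list of neighbor ids (links assumed symmetric).
    sinks: list of sink (base station) node ids.
    Returns (hops, parent): hop distance to the nearest sink and the next hop
    toward it. Sinks have hops 0 and parent None; unreachable nodes are absent.
    """
    hops, parent = {}, {}
    queue = deque()
    for sink in sinks:
        hops[sink] = 0
        parent[sink] = None
        queue.append(sink)
    while queue:
        node = queue.popleft()
        for neighbor in sorted(links.get(node, [])):  # sorted: deterministic ties
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                parent[neighbor] = node  # forward data one hop closer to a sink
                queue.append(neighbor)
    return hops, parent


def route_to_sink(parent, node):
    """Follow parent pointers from node until a sink is reached."""
    path = [node]
    while parent[path[-1]] is not None:
        path.append(parent[path[-1]])
    return path


# Hypothetical six-mote chain with a base station at each end.
links = {
    "A": ["B"], "B": ["A", "C"], "C": ["B", "D"],
    "D": ["C", "E"], "E": ["D", "F"], "F": ["E"],
}
hops, parent = build_sink_tree(links, ["A", "F"])
print(route_to_sink(parent, "C"))  # ['C', 'B', 'A']
print(route_to_sink(parent, "D"))  # ['D', 'E', 'F']
```

With sinks at both ends of the chain, the middle motes split between the two sinks, so no mote is more than two hops from a base station.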

3.2. Sensing

| Nr | Codename | Sensors | Sampling rate, Hz | GPS used |
|---|---|---|---|---|
| 1 | Habitats | temperature, light, barometric pressure, humidity and passive infrared | 0.0166667 | n |
| 2 | Minefield | sound, magnetometer, accelerometers, voltage and imaging | ? | y |
| 3 | Battlefield | magnetometer, acoustic and light | 10 | n |
| 4 | Line in the sand | magnetometer and radar | ? | n |
| 5 | Counter-sniper | sound | 1,000,000 | n |
| 6 | Electro-shepherd | temperature | ? | y |
| 7 | Virtual fences | - | ? | y |
| 8 | Oil tanker | accelerometer | 19,200 | n |
| 9 | Enemy vehicles | magnetometer and ultrasound transceiver | ? | y, on powered nodes |
| 10 | Trove game | accelerometers and light | ? | n |
| 11 | Elder RFID | RFID reader | 1 | n |
| 12 | Murphy potatoes | temperature and humidity | 0.0166667 | n |
| 13 | Firewxnet | temperature, humidity, wind speed and direction | 0.8333333 | n |
| 14 | AlarmNet | motion, blood pressure, body scale, dust, temperature and light | ≤ 1 | n |
| 15 | Ecuador Volcano | seismometers and acoustic | 100 | y, on BS |
| 16 | Pet game | temperature, light and sound | configurable | n |
| 17 | Plug | sound, light, electric current, voltage, vibration, motion and temperature | 8,000 | n |
| 18 | B-Live | light, electric current and switches | ? | n |
| 19 | Biomotion | accelerometer, gyroscope and capacitive distance sensor | 100 | n |
| 20 | AID-N | pulse oximeter, Electrocardiogram (ECG), blood pressure and heart beat | depends on queries | n |
| 21 | Firefighting | temperature | ? | n |
| 22 | Rehabil | temperature, humidity and light | ? | n |
| 23 | CargoNet | shock, light, magnetic switch, sound, tilt, temperature and humidity | 0.0166667 | n |
| 24 | Fence monitor | accelerometer | 10 | n |
| 25 | BikeNet | magnetometer, pedal speed, inclinometer, lateral tilt, Galvanic Skin Response (GSR) stress, speedometer, CO2, sound and GPS | configurable | y |
| 26 | BriMon | accelerometer | 0.6666667 | n |
| 27 | IP net | temperature, luminosity, vibration, microphone and movement detector | ? | n |
| 28 | Smart home | light, temperature, humidity, air pressure, acceleration, gas leak and motion | ? | n |
| 29 | SVATS | radio Received Signal Strength Indicator (RSSI) | ? | n |
| 30 | Hitchhiker | air temperature and humidity, surface temperature, solar radiation, wind speed and direction, soil water content and suction and precipitation | ? | n |
| 31 | Daily morning | accelerometer | 50 | n |
| 32 | Heritage | fiber optic deformation, accelerometers and analog temperature | 200 | n |
| 33 | AC meter | current | ≤ 14,000 | n |
| 34 | Coal mine | - (sense radio neighbors only) | - | n |
| 35 | ITS | anisotropic magneto-resistive and pyroelectric | varies | n |
| 36 | Underwater | pressure, temperature, CDOM, salinity, dissolved oxygen and cameras; motor actuator | ≤ 1 | n |
| 37 | PipeProbe | gyroscope and pressure | 33 | n |
| 38 | Badgers | humidity and temperature | ? | n |
| 39 | Helens volcano | geophone and accelerometer | 100,000? | y |
| 40 | Tunnels | light, temperature and voltage | 0.0333333 | n |

- Design rule 4:

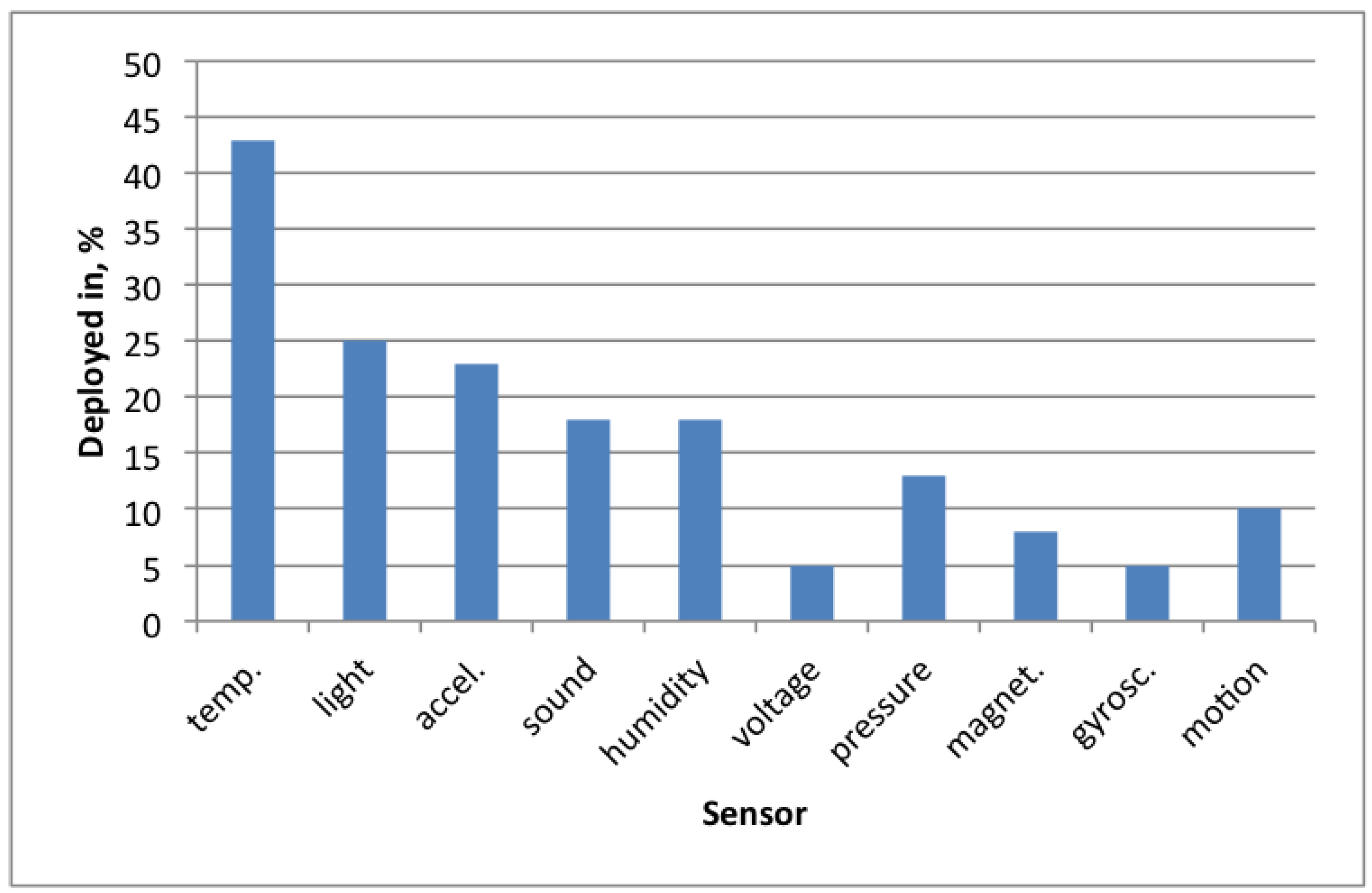

- The WSN operating system should include an Application Programming Interface (API) for temperature, light and acceleration sensors in the default library set.

- Design rule 5:

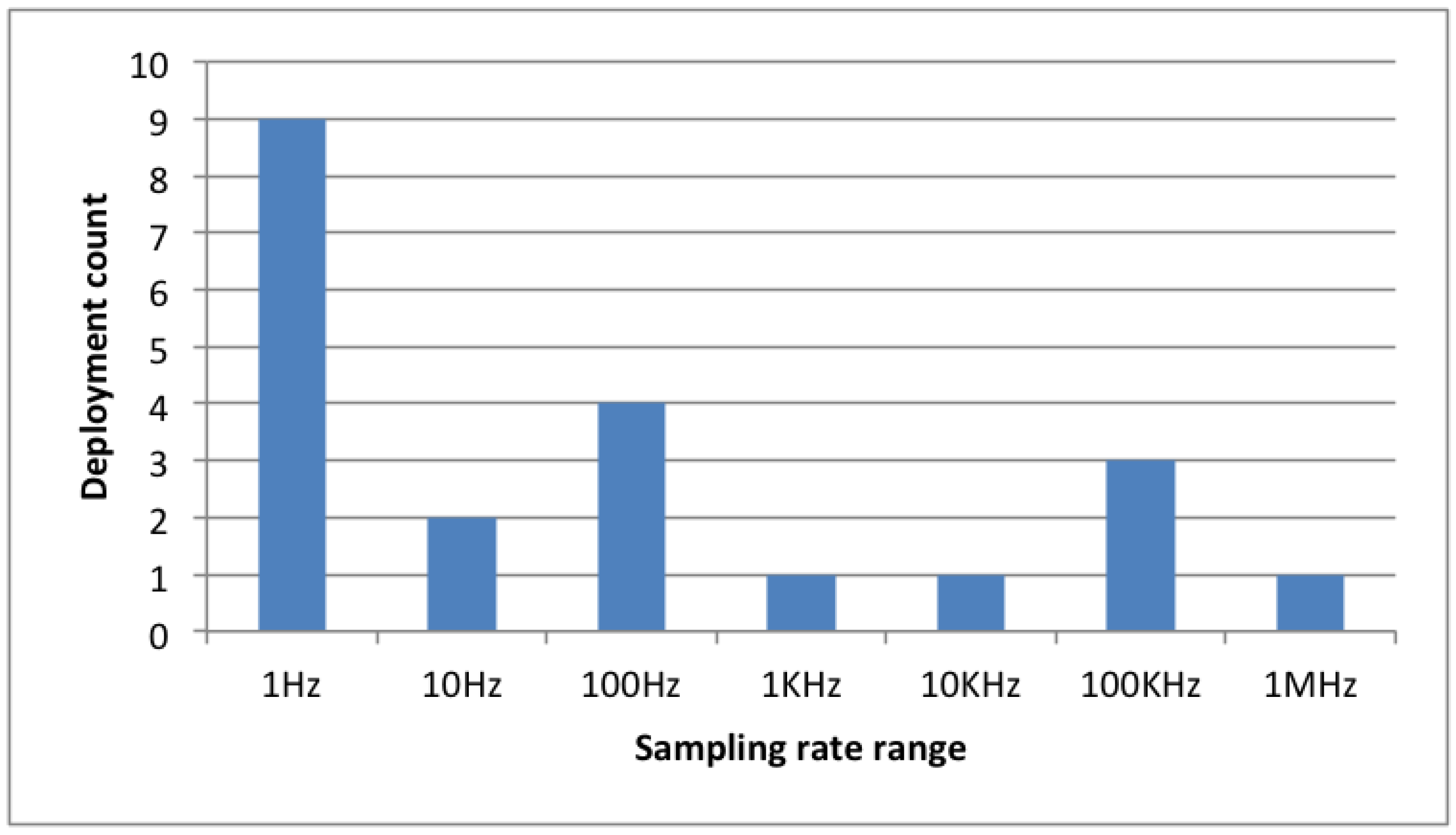

- The operating system must give first priority to efficient low-frequency, low-duty-cycle sampling. High performance for sophisticated audio signal processing and other high-frequency sampling applications is secondary, yet still required.
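Design rules 4 and 5 can be made concrete with a small sketch: a uniform `read()` interface shared by all sensor drivers, and timer-driven sampling that wakes the mote once per period and sleeps in between. All class and function names are hypothetical, time is simulated, and the 10 ms of activity per sample used below is an assumed figure. A 60 s period corresponds to the 0.0166667 Hz rate listed for several deployments above.

```python
from abc import ABC, abstractmethod


class Sensor(ABC):
    """Hypothetical uniform sensor API: every driver exposes read()."""

    @abstractmethod
    def read(self) -> float:
        """Return one sample in the sensor's physical unit."""


class ConstantSensor(Sensor):
    """Stand-in driver for testing; a real driver would use an ADC or a bus."""

    def __init__(self, value: float) -> None:
        self.value = value

    def read(self) -> float:
        return self.value


def sample_periodically(sensors, period_s, duration_s):
    """Simulate timer-driven sampling over duration_s seconds.

    The mote wakes every period_s seconds (starting at t = 0), reads every
    sensor, and is assumed to sleep until the next period. Returns a list of
    (timestamp_s, {sensor_name: value}) tuples.
    """
    if period_s <= 0:
        raise ValueError("period_s must be positive")
    count = int(duration_s // period_s) + 1  # include the sample at t = 0
    return [
        (k * period_s, {name: s.read() for name, s in sensors.items()})
        for k in range(count)
    ]


def duty_cycle_percent(active_s_per_sample, period_s):
    """Percentage of time awake if each sample keeps the MCU active for active_s_per_sample."""
    return 100.0 * active_s_per_sample / period_s


sensors = {"temperature": ConstantSensor(21.5), "light": ConstantSensor(300.0)}
log = sample_periodically(sensors, period_s=60, duration_s=180)
print([t for t, _ in log])  # [0, 60, 120, 180]
print(duty_cycle_percent(0.01, 60))  # about 0.0167 (assumed 10 ms per sample)
```

Under the assumed 10 ms of activity per sample, a 60 s period keeps the mote awake for only about 0.017% of the time.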

3.3. Lifetime and Energy

| Nr | Codename | Lifetime, days | Energy source | Sleep time, sec | Duty cycle, % | Powered motes present |
|---|---|---|---|---|---|---|
| 1 | Habitats | 270 | battery | 60 | ? | yes, gateways |
| 2 | Minefield | ? | battery | ? | ? | yes, all |
| 3 | Battlefield | 5–50 | battery | varies | varies | yes, base station |
| 4 | Line in the sand | ? | battery and solar | ? | ? | yes, root |
| 5 | Counter-sniper | ? | battery | 0 | 100 | no |
| 6 | Electro-shepherd | 50 | battery | ? | | no |
| 7 | Virtual fences | 2 h 40 min | battery | 0 | 100 | no |
| 8 | Oil tanker | 82 | battery | 64,800 | | yes, gateways |
| 9 | Enemy vehicles | ? | battery | ? | ? | yes, mobile nodes |
| 10 | Trove game | ? | battery | ? | ? | yes, base station |
| 11 | Elder RFID | ? | battery | 0? | 100? | yes, base station |
| 12 | Murphy potatoes | 21 | battery | 60 | 11 | yes, base station |
| 13 | Firewxnet | 21 | battery | 840 | 6.67 | yes, gateways |
| 14 | AlarmNet | ? | battery | ? | configurable | yes, base stations |
| 15 | Ecuador Volcano | 19 | battery | 0 | 100 | yes, base station |
| 16 | Pet game | ? | battery | ? | ? | yes, base station |
| 17 | Plug | - | power-net | 0 | 100 | yes, all |
| 18 | B-Live | - | battery | 0 | 100 | yes, all |
| 19 | Biomotion | 5 h | battery | 0 | 100 | yes, base stations |
| 20 | AID-N | 6 | battery | 0 | 100 | yes, base station |
| 21 | Firefighting | 4+ | battery | 0 | 100 | yes, infrastructure motes |
| 22 | Rehabil | ? | battery | ? | ? | yes, base station |
| 23 | CargoNet | 1825 | battery | varies | 0.001 | no |
| 24 | Fence monitor | ? | battery | 1 | ? | yes, base station |
| 25 | BikeNet | ? | battery | ? | ? | yes, gateways |
| 26 | BriMon | 625 | battery | 0.55 | | no |
| 27 | IP net | ? | battery | ? | 20 | yes, base station |
| 28 | Smart home | ? | battery | ? | ? | yes |
| 29 | SVATS | unlimited | power-net | not implemented | - | yes, all |
| 30 | Hitchhiker | 60 | battery and solar | 5 | 10 | yes, base station |
| 31 | Daily morning | ? | battery | 0? | 100? | yes, base station |
| 32 | Heritage | 525 | battery | 0.57 | 0.05 | yes, base station |
| 33 | AC meter | ? | power-net | ? | ? | yes, gateways |
| 34 | Coal mine | ? | battery | ? | ? | yes, base station? |
| 35 | ITS | ? | power-net? | 0? | 100? | yes, all |
| 36 | Underwater | ? | battery | ? | ? | no |
| 37 | PipeProbe | 4 h | battery | 0 | 100 | yes, base station |
| 38 | Badgers | 7 | battery | ? | 0.05 | no |
| 39 | Helens volcano | 400 | battery | 0? | 100? | yes, all |
| 40 | Tunnels | 480 | battery | 0.25 | ? | yes, base stations |

- Design rule 6:

- An option to automatically activate sleep mode whenever possible would reduce complexity and increase lifetime for deployments in the prototyping phase, and would also help beginner sensor network programmers.

- Design rule 7:

- Powered mote availability should be considered when designing a default networking protocol library.
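Design rule 6 amounts to tickless idle scheduling: when no task is due, the OS itself puts the MCU to sleep until the next timer deadline, so the application programmer never calls a sleep function explicitly. The simulated sketch below uses hypothetical names, advances a virtual clock instead of programming a hardware timer, and assumes each task keeps the MCU awake for a fixed time; it also reports the resulting duty cycle, the quantity listed in the lifetime table above.

```python
import heapq


class AutoSleepScheduler:
    """Simulated timer scheduler that sleeps automatically whenever no task is due.

    Time is virtual: "sleeping" only advances self.now. Each periodic task is
    assumed to keep the MCU awake for a fixed cost_s seconds per run.
    """

    def __init__(self):
        self.now = 0.0
        self.awake_s = 0.0
        self.asleep_s = 0.0
        self._timers = []  # heap of (deadline, seq, period_s, cost_s, callback)
        self._seq = 0      # unique tie-breaker so callbacks are never compared

    def every(self, period_s, cost_s, callback):
        """Register a periodic task, first due period_s seconds from now."""
        heapq.heappush(self._timers,
                       (self.now + period_s, self._seq, period_s, cost_s, callback))
        self._seq += 1

    def run_until(self, end_s):
        """Run all tasks due up to end_s, sleeping between them."""
        while self._timers and self._timers[0][0] <= end_s:
            deadline, seq, period_s, cost_s, callback = heapq.heappop(self._timers)
            if deadline > self.now:
                # Nothing is due before `deadline`: enter low-power mode.
                self.asleep_s += deadline - self.now
                self.now = deadline
            callback(self.now)
            self.awake_s += cost_s
            self.now += cost_s
            heapq.heappush(self._timers,
                           (deadline + period_s, seq, period_s, cost_s, callback))
        if end_s > self.now:  # the idle tail until end_s is also spent asleep
            self.asleep_s += end_s - self.now
            self.now = end_s

    def duty_cycle_percent(self):
        total = self.awake_s + self.asleep_s
        return 100.0 * self.awake_s / total if total else 0.0


calls = []
sched = AutoSleepScheduler()
sched.every(period_s=60.0, cost_s=0.6, callback=calls.append)  # e.g. sample a sensor
sched.run_until(600.0)
print(len(calls), round(sched.duty_cycle_percent(), 2))  # 10 1.0
```

A task that runs every 60 s and keeps the MCU awake for 0.6 s thus yields a duty cycle of roughly 1%, without any explicit sleep call in application code.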

3.4. Sensor Mote

| Nr | Codename | Mote | Ready or custom | Mote motivation | Radio chip | Radio protocol |
|---|---|---|---|---|---|---|
| 1 | Habitats | Mica | adapted | custom Mica weather board and packaging | RF Monolithics TR1001 | ? |
| 2 | Minefield | WINS NG 2.0 [59] | custom | need for high performance | ? | ? |
| 3 | Battlefield | Mica2 | adapted | energy and bandwidth efficient; simple and flexible | Chipcon CC1000 | SmartRF |
| 4 | Line in the sand | Mica2 | adapted | ? | Chipcon CC1000 | SmartRF |
| 5 | Counter-sniper | Mica2 | adapted | ? | Chipcon CC1000 | SmartRF |
| 6 | Electro-shepherd | Custom + Active RFID tags | custom | packaging adapted to sheep habits | unnamed Ultra High Frequency (UHF) transceiver | ? |
| 7 | Virtual fences | Zaurus PDA | ready | off-the-shelf | unnamed WiFi | 802.11 |
| 8 | Oil tanker | Intel Mote | adapted | ? | Zeevo TC2001P | Bluetooth 1.1 |
| 9 | Enemy vehicles | Mica2Dot | adapted | ? | Chipcon CC1000 | SmartRF |
| 10 | Trove game | Mica2 | ready | off-the-shelf | Chipcon CC1000 | SmartRF |
| 11 | Elder RFID | Mica2 | adapted | off-the-shelf; RFID reader added | Chipcon CC1000 + RFID | SmartRF + RFID |
| 12 | Murphy potatoes | TNOde, Mica2-like | custom | packaging + sensing | Chipcon CC1000 | SmartRF |
| 13 | Firewxnet | Mica2 | adapted | Mantis OS [60] support, AA batteries, extensible | Chipcon CC1000 | SmartRF |
| 14 | AlarmNet | Mica2 + TMote Sky | adapted | off-the-shelf; extensible | Chipcon CC1000 | SmartRF |
| 15 | Ecuador Volcano | TMote Sky | adapted | off-the-shelf | Chipcon CC2420 | 802.15.4 |
| 16 | Pet game | MicaZ | ready | off-the-shelf | Chipcon CC2420 | 802.15.4 |
| 17 | Plug | Plug Mote | custom | specific sensing + packaging | Chipcon CC2500 | ? |
| 18 | B-Live | B-Live module | custom | custom modular system | ? | ? |
| 19 | Biomotion | custom | custom | size constraints | Nordic nRF2401A | - |
| 20 | AID-N | TMote Sky + MicaZ | adapted | off-the-shelf; extensible | Chipcon CC2420 | 802.15.4 |
| 21 | Firefighting | TMote Sky | adapted | off-the-shelf; easy prototyping | Chipcon CC2420 | 802.15.4 |
| 22 | Rehabil | Maxfor TIP 7xxCM: TelosB-compatible | ready | off-the-shelf | Chipcon CC2420 | 802.15.4 |
| 23 | CargoNet | CargoNet mote | custom | low power; low-cost components | Chipcon CC2500 | - |
| 24 | Fence monitor | Scatterweb ESB [61] | ready | off-the-shelf | Chipcon CC1020 | ? |
| 25 | BikeNet | TMote Invent | adapted | off-the-shelf mote providing required connectivity | Chipcon CC2420 | 802.15.4 |
| 26 | BriMon | TMote Sky | adapted | off-the-shelf | Chipcon CC2420 | 802.15.4 |
| 27 | IP net | Scatterweb ESB | adapted | necessary sensors on board | TR1001 | ? |
| 28 | Smart home | ZigbeX | custom | specific sensor, size and power constraints | Chipcon CC2420 | 802.15.4 |
| 29 | SVATS | Mica2 | ready | off-the-shelf | Chipcon CC1000 | SmartRF |
| 30 | Hitchhiker | TinyNode | adapted | long-range communication | Semtech XE1205 | ? |
| 31 | Daily morning | MicaZ | ready | off-the-shelf | Chipcon CC2420 | 802.15.4 |
| 32 | Heritage | 3Mate! | adapted | TinyOS-supported mote with custom sensors | Chipcon CC2420 | 802.15.4 |
| 33 | AC meter | ACme (Epic core) | adapted | modular; convenient prototyping | Chipcon CC2420 | 802.15.4 |
| 34 | Coal mine | Mica2 | ready | off-the-shelf | Chipcon CC1000 | SmartRF |
| 35 | ITS | Custom | custom | specific sensing needs | Chipcon CC2420 | 802.15.4 |
| 36 | Underwater | AquaNode | custom | specific packaging, sensor and actuator needs | custom | - |
| 37 | PipeProbe | Eco mote | adapted | size and energy constraints | Nordic nRF24E1 | ? |
| 38 | Badgers | V1: TMote Sky + external board; V2: custom | v1: adapted; v2: custom | v1: off-the-shelf; v2: optimizations | Atmel AT86RF230 | 802.15.4 |
| 39 | Helens volcano | custom | custom | specific computational, sensing and packaging needs | Chipcon CC2420 | 802.15.4 |
| 40 | Tunnels | TRITon mote [62]: TelosB-like | custom | reuse and custom packaging | Chipcon CC2420 | 802.15.4 |

- Design rule 8:

- TelosB platform support is essential.

- Design rule 9:

- The WSN OS must support the implementation of additional sensor drivers for existing commercial motes.

- Design rule 10:

- Developing completely new platforms must be simple, and the OS should contain highly reusable code for this purpose.

- Design rule 11:

- Driver support for CC2420 radio is essential.
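Design rules 8 to 11 suggest describing each mote as a composition of reusable chip drivers, so that a TelosB-class board, an existing mote with an extra sensor, and a new custom board with the same MCU and radio share most of their code. The sketch below, with hypothetical class names and a stub CC2420 driver that only checks the 127-byte IEEE 802.15.4 maximum frame length, illustrates that structure; it is not the driver model of any particular OS.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


class RadioDriver:
    """Hypothetical chip-level radio driver interface shared by all platforms."""

    chip = "generic"

    def send(self, frame: bytes) -> int:
        raise NotImplementedError


class CC2420Driver(RadioDriver):
    """Stub CC2420 driver: validates the frame length but does not touch hardware."""

    chip = "CC2420"
    MAX_FRAME = 127  # IEEE 802.15.4 maximum PHY payload (PSDU), in bytes

    def send(self, frame: bytes) -> int:
        if len(frame) > self.MAX_FRAME:
            raise ValueError("frame exceeds the 802.15.4 maximum of 127 bytes")
        return len(frame)  # a real driver would load the TX FIFO and strobe TX


@dataclass
class Platform:
    """A mote described as a composition of reusable drivers."""

    name: str
    mcu: str
    radio: RadioDriver
    sensors: Dict[str, Callable[[], float]] = field(default_factory=dict)

    def add_sensor(self, name: str, read: Callable[[], float]) -> None:
        """Attach an extra sensor driver to an existing mote (design rule 9)."""
        self.sensors[name] = read


# A commercial TelosB mote with an added sensor, and a new custom board that
# reuses the same MCU and radio driver (design rules 8, 10 and 11).
telosb = Platform("TelosB", "MSP430F1611", CC2420Driver())
telosb.add_sensor("soil_moisture", lambda: 0.42)  # hypothetical sensor reading
custom = Platform("custom-board", "MSP430F1611", CC2420Driver())
```

Because both platforms instantiate the same `CC2420Driver`, a new board only has to declare its composition and any board-specific sensors.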

3.5. Sensor Mote: Microcontroller

| Nr | Codename | MCU count | MCU name | Architecture, bits | Clock, MHz | RAM, KB | Program memory, KB |
|---|---|---|---|---|---|---|---|
| 1 | Habitats | 1 | Atmel ATMega103L | 8 | 4 | 4 | 128 |
| 2 | Minefield | 1 | Hitachi SH4 7751 | 32 | 167 | 64,000 | 0 |
| 3 | Battlefield | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 4 | Line in the sand | 1 | Atmel ATMega128 | 8 | 4 | 4 | 128 |
| 5 | Counter-sniper | 1 + Field-Programmable Gate Array (FPGA) | Atmel ATMega128L | 8 | 7.3 | 4 | 128 |
| 6 | Electro-shepherd | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 7 | Virtual fences | 1 | Intel StrongArm | 32 | 206 | 65,536 | ? |
| 8 | Oil tanker | 1 | Zeevo ARM7TDMI | 32 | 12 | 64 | 512 |
| 9 | Enemy vehicles | 1 | Atmel ATMega128L | 8 | 4 | 4 | 128 |
| 10 | Trove game | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 11 | Elder RFID | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 12 | Murphy potatoes | 1 | Atmel ATMega128L | 8 | 8 | 4 | 128 |
| 13 | Firewxnet | 1 | Atmel ATMega128L | 8 | 7.3 | 4 | 128 |
| 14 | AlarmNet | 1 | Atmel ATMega128L | 8 | 7.3 | 4 | 128 |
| 15 | Ecuador Volcano | 1 | Texas Instruments (TI) MSP430F1611 | 16 | 8 | 10 | 48 |
| 16 | Pet game | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 17 | Plug | 1 | Atmel AT91SAM7S64 | 32 | 48 | 16 | 64 |
| 18 | B-Live | 2 | Microchip PIC18F2580 | 8 | 40 | 1.5 | 32 |
| 19 | Biomotion | 1 | TI MSP430F149 | 16 | 8 | 2 | 60 |
| 20 | AID-N | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 21 | Firefighting | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 22 | Rehabil | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 23 | CargoNet | 1 | TI MSP430F135 | 16 | 8? | 0.512 | 16 |
| 24 | Fence monitor | 1 | TI MSP430F1612 | 16 | 7.3 | 5 | 55 |
| 25 | BikeNet | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 26 | BriMon | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 27 | IP net | 1 | TI MSP430F149 | 16 | 8 | 2 | 60 |
| 28 | Smart home | 1 | Atmel ATMega128 | 8 | 8 | 4 | 128 |
| 29 | SVATS | 1 | Atmel ATMega128L | 8 | 7.3 | 4 | 128 |
| 30 | Hitchhiker | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 31 | Daily morning | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 32 | Heritage | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 33 | AC meter | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |
| 34 | Coal mine | 1 | Atmel ATMega128 | 8 | 7.3 | 4 | 128 |
| 35 | ITS | 2 | ARM7 + MSP430F1611 | 32 + 16 | ? + 8 | 64 + 10 | ? + 48 |
| 36 | Underwater | 1 | NXP LPC2148 ARM7TDMI | 32 | 60 | 40 | 512 |
| 37 | PipeProbe | 1 | Nordic nRF24E1 DW8051 | 8 | 16 | 4.25 | 32 |
| 38 | Badgers | 1 | Atmel ATMega128V | 8 | 8 | 8 | 128 |
| 39 | Helens volcano | 1 | Intel XScale PXA271 | 32 | 13 (624 max) | 256 | 32,768 |
| 40 | Tunnels | 1 | TI MSP430F1611 | 16 | 8 | 10 | 48 |

- Design rule 12:

- Support for Atmel AVR and Texas Instruments MSP430 MCU architectures is essential for sensor network operating systems.
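The reasoning behind design rule 12 can be checked directly against the microcontroller table. The sketch below transcribes the MCU names of deployments 1 to 40 and classifies them; a board with an MSP430 co-processor (ITS) is counted as MSP430, and the Atmel AT91SAM7S64, an ARM part, is deliberately not counted as AVR. The classification helper is an illustrative assumption, not part of the survey method.

```python
from collections import Counter

# MCU names transcribed from the microcontroller table, deployments 1-40 in order.
MCUS = [
    "ATMega103L", "SH4 7751", "ATMega128", "ATMega128", "ATMega128L",
    "ATMega128", "StrongArm", "ARM7TDMI", "ATMega128L", "ATMega128",
    "ATMega128", "ATMega128L", "ATMega128L", "ATMega128L", "MSP430F1611",
    "ATMega128", "AT91SAM7S64", "PIC18F2580", "MSP430F149", "MSP430F1611",
    "MSP430F1611", "MSP430F1611", "MSP430F135", "MSP430F1612", "MSP430F1611",
    "MSP430F1611", "MSP430F149", "ATMega128", "ATMega128L", "MSP430F1611",
    "ATMega128", "MSP430F1611", "MSP430F1611", "ATMega128", "ARM7 + MSP430F1611",
    "LPC2148 ARM7TDMI", "nRF24E1 DW8051", "ATMega128V", "XScale PXA271", "MSP430F1611",
]


def family(mcu: str) -> str:
    """Classify an MCU name; a board with an MSP430 co-processor counts as MSP430."""
    if "MSP430" in mcu:
        return "MSP430"
    if mcu.startswith("ATMega"):  # AVR parts only; AT91SAM7 is an Atmel ARM part
        return "AVR"
    return "other"


counts = Counter(family(m) for m in MCUS)
share = (counts["AVR"] + counts["MSP430"]) / len(MCUS)
print(counts["AVR"], counts["MSP430"], share)  # 17 15 0.8
```

Under this classification, 17 deployments use an AVR MCU and 15 an MSP430, that is, 32 of the 40 surveyed deployments (80%).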

3.6. Sensor Mote: External Memory

| Nr | Codename | Available external memory, KB | Secure Digital (SD) | External memory used | File system used |
|---|---|---|---|---|---|
| 1 | Habitats | 512 | n | y | n |
| 2 | Minefield | 16,000 | n | y | y |
| 3 | Battlefield | 512 | n | n | n |
| 4 | Line in the sand | 512 | n | n | ? |
| 5 | Counter-sniper | 512 | n | n | n |
| 6 | Electro-shepherd | 512 | n | y | n |
| 7 | Virtual fences | ? | y | y | y |
| 8 | Oil tanker | 0 | n | n | n |
| 9 | Enemy vehicles | 512 | n | n | n |
| 10 | Trove game | 512 | n | n | n |
| 11 | Elder RFID | 512 | n | n | n |
| 12 | Murphy potatoes | 512 | n | n | n |
| 13 | Firewxnet | 512 | n | n | n |
| 14 | AlarmNet | 512 | n | n | n |
| 15 | Ecuador Volcano | 1,024 | n | y | n |
| 16 | Pet game | 512 | n | n | n |
| 17 | Plug | 0 | n | n | n |
| 18 | B-Live | 0 | n | n | n |
| 19 | Biomotion | 0 | n | n | n |
| 20 | AID-N | 1,024 | n | n | n |
| 21 | Firefighting | 1,024 | n | n | n |
| 22 | Rehabil | 1,024 | n | n | n |
| 23 | CargoNet | 1,024 | n | y | n |
| 24 | Fence monitor | 0 | n | n | n |
| 25 | BikeNet | 1,024 | n | y? | n |
| 26 | BriMon | 1,024 | n | y | n |
| 27 | IP net | 1,024 | n | n | n |
| 28 | Smart home | 512 | n | ? | n |
| 29 | SVATS | 512 | n | n | n |
| 30 | Hitchhiker | 1,024 | n | n | n |
| 31 | Daily morning | 512 | n | n | n |
| 32 | Heritage | 1,024 | n | n | n |
| 33 | AC meter | 2,048 | n | y | n |
| 34 | Coal mine | 512 | n | n | n |
| 35 | ITS | ? | n? | ? | n? |
| 36 | Underwater | 2,097,152 | y | ? | n |
| 37 | PipeProbe | 0 | n | n | n |
| 38 | Badgers | 2,097,152 | y | y | n |
| 39 | Helens volcano | 0 | n | n | n |
| 40 | Tunnels | 1,024 | n | n | n |

- Design rule 13:

- OS-level support for storing user data in external memory is not essential, yet it should be provided.

- Design rule 14:

- A convenient filesystem interface should be provided by the operating system, so that sensor network users can use it without extra complexity.
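A minimal sketch of what a convenient storage interface in the spirit of design rules 13 and 14 could look like: an append-only record log on top of a simulated external flash chip. The class name, the record format (a 2-byte little-endian length prefix) and the 512 KB default capacity are assumptions; the capacity mirrors the most common external memory size in the table above. Erasing, wear leveling and recovery after power loss, all of which a real flash file system must handle, are deliberately left out.

```python
import struct


class FlashLog:
    """Hypothetical append-only record log over a simulated external flash chip.

    Each record is stored as a 2-byte little-endian length followed by its bytes.
    Erase, wear leveling and power-loss recovery are not modeled.
    """

    HEADER = struct.Struct("<H")

    def __init__(self, capacity_bytes: int = 512 * 1024):
        self.flash = bytearray(capacity_bytes)  # stands in for the flash chip
        self.used = 0

    def append(self, record: bytes) -> int:
        """Store one record and return its byte offset; raise if it does not fit."""
        size = self.HEADER.size + len(record)
        if len(record) > 0xFFFF or self.used + size > len(self.flash):
            raise OSError("log full or record too large")
        offset = self.used
        self.HEADER.pack_into(self.flash, offset, len(record))
        self.flash[offset + self.HEADER.size:offset + size] = record
        self.used += size
        return offset

    def records(self):
        """Yield all stored records in the order they were appended."""
        pos = 0
        while pos < self.used:
            (length,) = self.HEADER.unpack_from(self.flash, pos)
            start = pos + self.HEADER.size
            yield bytes(self.flash[start:start + length])
            pos = start + length


store = FlashLog()
store.append(b"t=21.5")
store.append(b"t=21.7")
print(list(store.records()))  # [b't=21.5', b't=21.7']
```

The application only sees `append` and `records`; where and how the bytes land on the chip stays inside the storage layer.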

3.7. Communication

| Nr | Codename | Report rate, 1/h | Payload size, B | Radio range, m | Speed, kbps | Connectivity type |

|---|---|---|---|---|---|---|

| 1 | Habitats | 60 | ? | 200 (1,200 with Yagi 12dBi) | 40 | connected |

| 2 | Minefield | ? | ? | ? | ? | connected |

| 3 | Battlefield | ? | ? | 300 | 38.4 | intermittent |

| 4 | Line in the sand | ? | 1 | 300 | 38.4 | connected |

| 5 | Counter-sniper | ? | ? | 60 | 38.4 | connected |

| 6 | Electro-shepherd | 0.33 | 7+ | 150–200 | ? | connected |

| 7 | Virtual fences | 1,800 | 8? | ? | 54,000 | connected |

| 8 | Oil tanker | 0.049 | ? | 30 | 750 | connected |

| 9 | Enemy vehicles | 1,800 | ? | 30 | 38.4 | connected |

| 10 | Trove game | ? | ? | ? | 38.4 | connected |

| 11 | Elder RFID | ? | 19 | ? | 38.4 | connected |

| 12 | Murphy potatoes | 6 | 22 | ? | 76.8 | connected |

| 13 | Firewxnet | 200 | ? | 400 | 38.4 | intermittent |

| 14 | AlarmNet | configurable | 29 | ? | 38.4 | connected |

| 15 | Ecuador Volcano | depends on events | 16 | 1,000 | 250 | connected |

| 16 | Pet game | configurable | ? | 100 | 250 | connected |

| 17 | Plug | 720 | 21 | ? | ? | connected |

| 18 | B-Live | - | ? | ? | ? | connected |

| 19 | Biomotion | 360,000 | 16 | 15 | 1,000 | connected |

| 20 | AID-N | depends on queries | ? | 66 | 250 | connected |

| 21 | Firefighting | ? | ? | 20 | 250 | connected |

| 22 | Rehabil | ? | 12 | 30 | 250 | connected |

| 23 | CargoNet | depends on events | ? | ? | 250 | sporadic |

| 24 | Fence monitor | ? | ? | 300 | 76.8 | connected |

| 25 | BikeNet | opportunistic | ? | 20 | 250 | sporadic |

| 26 | BriMon | 62 | 116 | 125 | 250 | sporadic |

| 27 | IP net | ? | ? | 300 | 19.2 | connected |

| 28 | Smart home | ? | ? | 75–100 outdoor/20–30 indoor | 250 | connected |

| 29 | SVATS | ? | ? | 400 | 38.4 | connected |

| 30 | Hitchhiker | ? | 24 | 500 | 76.8 | connected |

| 31 | Daily morning | 180,000 | 2? | 100 | 250 | connected |

| 32 | Heritage | 6 | ? | 125 | 250 | intermittent |

| 33 | AC meter | 60 default (configurable) | ? | 125 | 250 | connected |

| 34 | Coal mine | ? | 7 | 4 m forced, 20 m max | 38.4 | intermittent |

| 35 | ITS | varies | 5*n | ? | 250 | connected |

| 36 | Underwater | 900 | 11 | ? | 0.3 | intermittent |

| 37 | PipeProbe | 72,000 | ? | 10 | 1,000 | connected |

| 38 | Badgers | 2,380+ | 10 | 1,000 | 250 | connected |

| 39 | Helens volcano | configurable | ? | 9,600 | 250 | connected |

| 40 | Tunnels | 120 | ? | ? | 250 | connected |

- Design rule 15:

- The default packet payload size provided by the operating system should be at least 30 bytes, and it should be easy to change this constant when required.

- Design rule 16:

- If the radio chip supports it, the option to change radio transmission power should be provided; it is valuable for collision avoidance and energy efficiency.

- Design rule 17:

- Data transmission speed is usually below 1 Mbps in theory, and even lower in practice. This must be taken into account when designing a communication protocol stack.
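Rules 15 and 17 can be made concrete with a small sketch: a compile-time default payload size of 30 bytes that can be overridden, and a helper that converts payload length and bit rate into time on air. The macro and function names are hypothetical; the 127-byte limit is the IEEE 802.15.4 maximum frame size, which includes headers, so the check below is only a coarse upper bound.

```c
#include <stdint.h>

/* Default application payload size (design rule 15); can be overridden at
   build time, e.g. with -DMAX_PAYLOAD_SIZE=64. Names are hypothetical. */
#ifndef MAX_PAYLOAD_SIZE
#define MAX_PAYLOAD_SIZE 30
#endif

/* An IEEE 802.15.4 frame is at most 127 bytes including MAC headers and
   checksum, so this is only a coarse upper bound on the payload. */
_Static_assert(MAX_PAYLOAD_SIZE <= 127, "payload cannot fit in an 802.15.4 frame");

typedef struct {
    uint8_t len;                      /* bytes of `data` actually used */
    uint8_t data[MAX_PAYLOAD_SIZE];
} packet_t;

/* Time on air in microseconds for `bytes` bytes at `bps` bits per second.
   Preamble, headers, backoff and acknowledgments are ignored. */
uint32_t airtime_us(uint32_t bytes, uint32_t bps) {
    return (uint32_t)((uint64_t)bytes * 8u * 1000000u / bps);
}
```

For a 30-byte payload, `airtime_us(30, 250000)` gives 960 μs at the 250 kbps of 802.15.4 radios, while `airtime_us(30, 38400)` gives 6,250 μs at 38.4 kbps; even before headers, backoff and acknowledgments, a 38.4 kbps link can therefore carry at most 160 such payloads per second.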

3.8. Communication Media

| Nr | Codename | Communication media | Used channels | Directionality used |

|---|---|---|---|---|

| 1 | Habitats | radio over air | 1 | n |

| 2 | Minefield | radio over air + sound over air | ? | n |

| 3 | Battlefield | radio over air | 1 | n |

| 4 | Line in the sand | radio over air | 1 | n |

| 5 | Counter-sniper | radio over air | 1 | n |

| 6 | Electro-shepherd | radio over air | ? | n |

| 7 | Virtual fences | radio over air | 2 | y |

| 8 | Oil tanker | radio over air | 79 | n |

| 9 | Enemy vehicles | radio over air | 1 | n |

| 10 | Trove game | radio over air | 1 | n |

| 11 | Elder RFID | radio over air | 1 | n |

| 12 | Murphy potatoes | radio over air | 1 | n |

| 13 | Firewxnet | radio over air | 1 | y, gateways |

| 14 | AlarmNet | radio over air | 1 | n |

| 15 | Ecuador Volcano | radio over air | 1 | y |

| 16 | Pet game | radio over air | 1 | n |

| 17 | Plug | radio over air | ? | n |

| 18 | B-Live | wire mixed with radio over air | ? | n |

| 19 | Biomotion | radio over air | 1 | n |

| 20 | AID-N | radio over air | 1 | n |

| 21 | Firefighting | radio over air | 4 | n |

| 22 | Rehabil | radio over air | 1? | n |

| 23 | CargoNet | radio over air | 1 | n |

| 24 | Fence monitor | radio over air | 1 | n |

| 25 | BikeNet | radio over air | 1 | n |

| 26 | BriMon | radio over air | 16 | n |

| 27 | IP net | radio over air | 1 | n |

| 28 | Smart home | radio over air | 16 | ? |

| 29 | SVATS | radio over air | ? | n |

| 30 | Hitchhiker | radio over air | 1 | n |

| 31 | Daily morning | radio over air | 1 | n |

| 32 | Heritage | radio over air | 1 | n |

| 33 | AC meter | radio over air | 1 | n |

| 34 | Coal mine | radio over air | 1 | n |

| 35 | ITS | radio over air | 1 | n |

| 36 | Underwater | ultra-sound over water | 1 | n |

| 37 | PipeProbe | radio over air and water | 1 | n |

| 38 | Badgers | radio over air | ? | n |

| 39 | Helens volcano | radio over air | 1? | y |

| 40 | Tunnels | radio over air | 2 | n |

3.9. Network

| Nr | Codename | Network topology | Mobile motes | Deployment area | Max hop count | Randomly deployed |

|---|---|---|---|---|---|---|

| 1 | Habitats | multi-one-hop | n | 1,000 × 1,000 m | 1 | n |

| 2 | Minefield | multi-one-hop | y | 30 × 40 m | ? | y |

| 3 | Battlefield | multi-one-hop | n | 85 m long road | ? | y |

| 4 | Line in the sand | mesh | n | 18 × 8 m | ? | n |

| 5 | Counter-sniper | multi-one-hop | n | 30 × 15 m | 11 | y |

| 6 | Electro-shepherd | one-hop | y | ? | 1 | y (attached to animals) |

| 7 | Virtual fences | mesh | y | 300 × 300 m | 5 | y (attached to animals) |

| 8 | Oil tanker | multi-one-hop | n | 150 × 100 m | 1 | n |

| 9 | Enemy vehicles | mesh | y, power node | 20 × 20 m | 6 | n |

| 10 | Trove game | one-hop | y | ? | 1 | y, attached to users |

| 11 | Elder RFID | one-hop | n (mobile RFID tags) | ? | 1 | n |

| 12 | Murphy potatoes | mesh | n | 10,00 × 1,000 m | 10 | n |

| 13 | Firewxnet | multi-mesh | n | ? | 4? | n |

| 14 | AlarmNet | mesh | y, mobile body motes | apartment | ? | n |

| 15 | Ecuador Volcano | mesh | n | 8,000 × 1,000 m | 6 | n |

| 16 | Pet game | mesh | y | ? | ? | y |

| 17 | Plug | mesh | n | 40 × 40 | ? | n |

| 18 | B-Live | multi-one-hop | n | house | 2 | n |

| 19 | Biomotion | one-hop | y, mobile body motes | room | 1 | n (attached to predefined body parts) |

| 20 | AID-N | mesh | y | ? | 1+ | y, attached to users |

| 21 | Firefighting | predefined tree | y, human mote | ? | n | |

| 22 | Rehabil | one-hop | y, human motes | gymnastics room | 1 | y, attached to patients and training machines |

| 23 | CargoNet | one-hop | y | truck, ship or plane | 1 | n |

| 24 | Fence monitor | one-hop? | n | 35 × 2 m | 1? | n |

| 25 | BikeNet | mesh | y | 5 km long track | ? | y (attached to bicycles) |

| 26 | BriMon | multi-mesh | y, mobile BS | 2,000 × 1 | 4 | n |

| 27 | IP net | multi-one-hop | n | 250 × 25, 3-story building + mock-up town | ? | n |

| 28 | Smart home | one-hop | n | ? | ? | n |

| 29 | SVATS | mesh | y, motes in cars | parking place | ? | n |

| 30 | Hitchhiker | mesh | n | 500 × 500 m | 2? | n |

| 31 | Daily morning | one-hop | y, body mote | house | 1 | n (attached to human) |

| 32 | Heritage | mesh | n | 7.8 × 4.5 × 26 m | 6 | n (initial deployment static, but can be moved later) |

| 33 | AC meter | mesh | n | building | ? | y (Given to users who plug in power outlets of their choice) |

| 34 | Coal mine | multi-path mesh | n | 8 × 4 × ? m | ? | n |

| 35 | ITS | mesh | n | 140 m long road | 7? | n |

| 36 | Underwater | mesh | y | ? | 1 | n |

| 37 | PipeProbe | one-hop | y | 0.18 × 1.40 × 3.45 m | 1 | n |

| 38 | Badgers | mesh | y | 1,000 × 2,000 m ? | ? | y (attached to animals) |

| 39 | Helens volcano | mesh | n | ? | 1+? | n |

| 40 | Tunnels | multi-mesh | n | 230 m long tunnel | 4 | n |

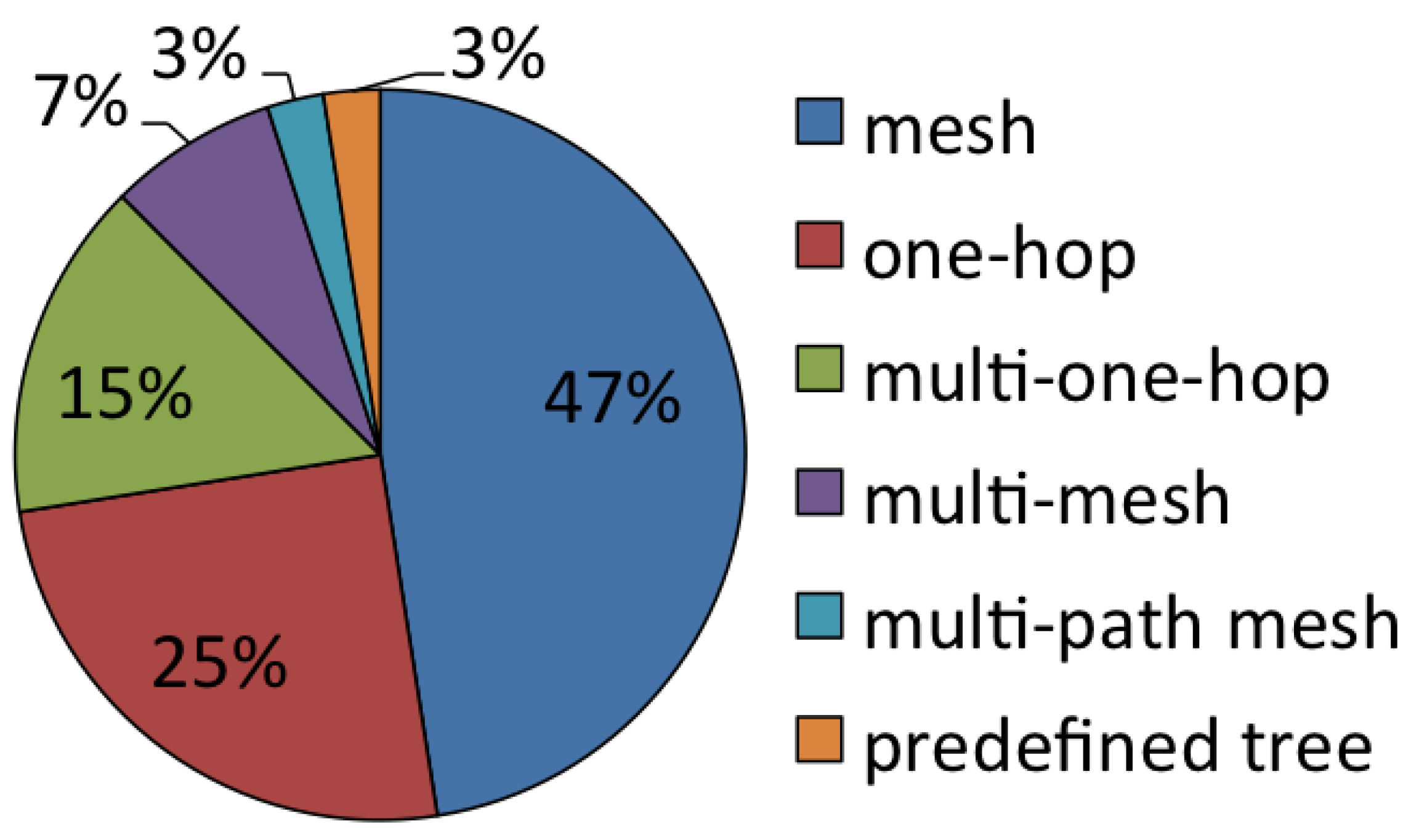

- Design rule 18:

- Multi-hop routing should be provided as a default component that can be turned off if a one-hop topology is used. Topology changes must be expected, and routes of at least 11 hops should be supported.
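Rule 18 calls for default multi-hop routing that tolerates topology changes and supports at least 11 hops. The sketch below shows a minimal proactive, sink-oriented scheme, assuming each node periodically broadcasts its hop count to the sink and picks the neighbor with the smallest one as its parent. This simple gradient approach is chosen for illustration and is not the routing protocol of any particular OS. `MAX_HOPS = 16` is an assumed limit chosen to exceed the 11 hops observed in the survey, and `simulate_line` is a hypothetical test helper.

```c
#include <stdbool.h>
#include <stdint.h>

#define MAX_HOPS  16          /* assumption: comfortably above 11 hops */
#define NO_ROUTE  UINT8_MAX   /* hop count meaning "no route to sink" */
#define NO_PARENT UINT16_MAX

typedef struct {
    uint16_t parent;  /* next hop towards the sink */
    uint8_t  hops;    /* distance to the sink in hops */
} route_t;

/* The sink starts at 0 hops; every other node starts without a route. */
void route_init(route_t *r, bool is_sink) {
    r->parent = NO_PARENT;
    r->hops = is_sink ? 0 : NO_ROUTE;
}

/* Handles a beacon in which neighbor `from` advertises `hops` to the sink.
   Returns true if this node's route changed and it should re-advertise. */
bool route_on_beacon(route_t *r, uint16_t from, uint8_t hops) {
    bool usable = hops != NO_ROUTE && hops + 1 <= MAX_HOPS;
    if (r->hops == 0) return false;  /* the sink never changes its route */
    if (from == r->parent) {
        /* Follow the parent's new cost, or drop the route if the parent
           lost its own; this is how topology changes propagate. */
        uint8_t new_hops = usable ? (uint8_t)(hops + 1) : NO_ROUTE;
        bool changed = new_hops != r->hops;
        r->hops = new_hops;
        if (!usable) r->parent = NO_PARENT;
        return changed;
    }
    if (usable && hops + 1 < r->hops) {  /* strictly better neighbor */
        r->parent = from;
        r->hops = (uint8_t)(hops + 1);
        return true;
    }
    return false;
}

/* Hypothetical test helper: nodes 0..n-1 on a line, node 0 is the sink and
   node i hears only i-1 and i+1. Runs beacon rounds until routes are stable. */
void simulate_line(route_t *nodes, int n) {
    for (int i = 0; i < n; i++) route_init(&nodes[i], i == 0);
    bool changed = true;
    while (changed) {
        changed = false;
        for (int i = 0; i < n; i++) {
            if (i > 0)
                changed |= route_on_beacon(&nodes[i], (uint16_t)(i - 1), nodes[i - 1].hops);
            if (i < n - 1)
                changed |= route_on_beacon(&nodes[i], (uint16_t)(i + 1), nodes[i + 1].hops);
        }
    }
}
```

Following the current parent's advertised cost, even when it gets worse, lets a broken route propagate to the children; `MAX_HOPS` also bounds the count-to-infinity behavior that such simple distance-vector schemes show after a link breaks.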

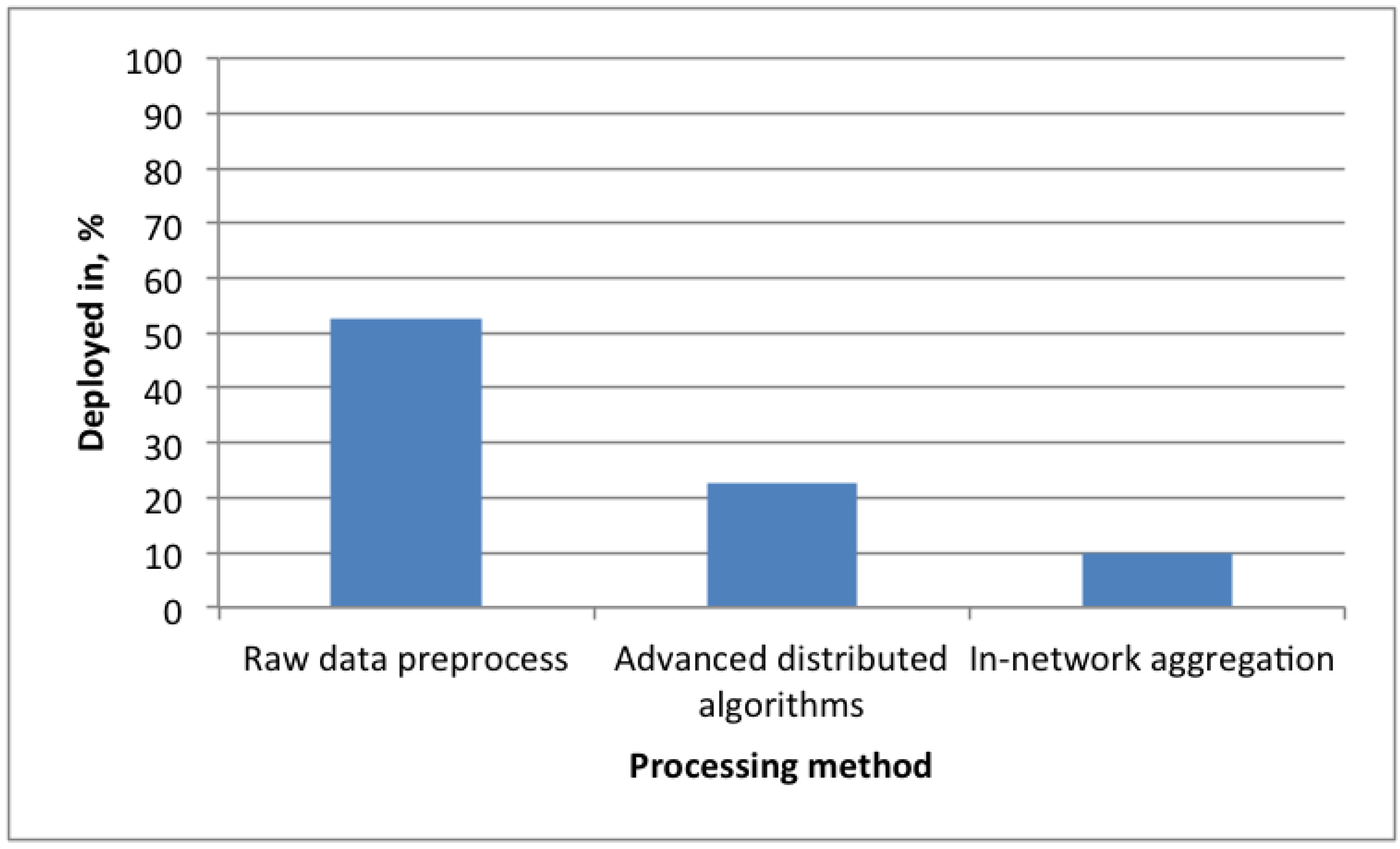

3.10. In-Network Processing

| Nr | Codename | Raw data preprocess | Advanced distributed algorithms | In-network aggregation |

|---|---|---|---|---|

| 1 | Habitats | n | n | n |

| 2 | Minefield | y | y | ? |

| 3 | Battlefield | y | n | y |

| 4 | Line in the sand | y | y | ? |

| 5 | Counter-sniper | y | n | y |

| 6 | Electro-shepherd | n | n | n |

| 7 | Virtual fences | n | n | n |

| 8 | Oil tanker | n | n | n |

| 9 | Enemy vehicles | y | y | y |

| 10 | Trove game | n | y | n |

| 11 | Elder RFID | n | n | n |

| 12 | Murphy potatoes | n | n | n |

| 13 | Firewxnet | n | n | n |

| 14 | AlarmNet | y | n | n |

| 15 | Ecuador Volcano | y | y | n |

| 16 | Pet game | n | n | n |

| 17 | Plug | y | n | n |

| 18 | B-Live | y | n | n |

| 19 | Biomotion | n | n | n |

| 20 | AID-N | y | n | n |

| 21 | Firefighting | n | n | n |

| 22 | Rehabil | n | n | n |

| 23 | CargoNet | n | n | n |

| 24 | Fence monitor | y | y | n |

| 25 | BikeNet | n | n | n |

| 26 | BriMon | n | n | n |

| 27 | IP net | y | n | n |

| 28 | Smart home | y | n | ? |

| 29 | SVATS | y | y | n |

| 30 | Hitchhiker | n | n | n |

| 31 | Daily morning | n | n | n |

| 32 | Heritage | y | n | n |

| 33 | AC meter | y | n | n |

| 34 | Coal mine | y | y | y |

| 35 | ITS | y | n | n |

| 36 | Underwater | n | y | n |

| 37 | PipeProbe | y | n | n |

| 38 | Badgers | y | n | n |

| 39 | Helens volcano | y | n | n |

| 40 | Tunnels | n | n | n |

3.11. Networking Stack

| Nr | Codename | Custom MAC | Channel access method | Routing used | Custom routing | Reactive or proactive routing | IPv6 used | Safe delivery | Data priorities |

|---|---|---|---|---|---|---|---|---|---|

| 1 | Habitats | n | Carrier Sense Multiple Access (CSMA) | n | - | - | n | n | n |

| 2 | Minefield | ? | ? | ? | ? | ? | ? | ? | ? |

| 3 | Battlefield | y | CSMA | y | y | proactive | n | y | ? |

| 4 | Line in the sand | y | CSMA | y | y | proactive | n | y | n |

| 5 | Counter-sniper | n | CSMA | y | y | proactive | n | n | - |

| 6 | Electro-shepherd | y | CSMA | - | - | - | n | n | n |

| 7 | Virtual fences | n | CSMA | - | - | - | IPv4? | n | n |

| 8 | Oil tanker | n | CSMA | - | n | - | n | y | n |

| 9 | Enemy vehicles | y | CSMA | y | y | proactive | n | n | - |

| 10 | Trove game | n | CSMA | n | - | - | n | n | n |

| 11 | Elder RFID | n | CSMA | n | - | - | n | n | n |

| 12 | Murphy potatoes | y | CSMA | y | n | proactive | n | n | n |

| 13 | Firewxnet | y | CSMA | y | y | proactive | n | y | n |

| 14 | AlarmNet | y | CSMA | y | n | ? | n | y | y |

| 15 | Ecuador Volcano | n | CSMA | y | y | proactive | n | y | n |

| 16 | Pet game | n | CSMA | y | n | ? | n | n | n |

| 17 | Plug | y | CSMA | y | y | ? | n | n | n |

| 18 | B-Live | ? | ? | n | - | - | n | ? | ? |

| 19 | Biomotion | y | Time Division Multiple Access (TDMA) | n | - | - | n | n | n |

| 20 | AID-N | ? | ? | y | n | proactive | n | y | n |

| 21 | Firefighting | n | CSMA | y, static | n | proactive | n | n | n |

| 22 | Rehabil | n | CSMA | n | - | - | n | n | n |

| 23 | CargoNet | y | CSMA | n | - | - | n | n | n |

| 24 | Fence monitor | n | CSMA? | y | y | proactive? | n | n | n |

| 25 | BikeNet | y | CSMA | y | y | reactive | n | y | n |

| 26 | BriMon | y | TDMA | y | y | proactive | n | y | n |

| 27 | IP net | n | CSMA | y | y | proactive | ? | ? | ? |

| 28 | Smart home | ? | ? | y | ? | ? | n | ? | ? |

| 29 | SVATS | n | CSMA | y | n | ? | n | n | n |

| 30 | Hitchhiker | y | TDMA | y | y | reactive | n | y | n |

| 31 | Daily morning | n | CSMA | n | - | - | n | n | n |

| 32 | Heritage | y | TDMA | y | y | proactive | n | y | y |

| 33 | AC meter | n | ? | y | n | proactive | y | y | n |

| 34 | Coal mine | n | CSMA | y | y | proactive | n | y | n |

| 35 | ITS | y? | CSMA? | y | y | reactive | n | y | n |

| 36 | Underwater | y | TDMA | n | - | - | n | n | n |

| 37 | PipeProbe | n | ? | n | - | - | n | n | n |

| 38 | Badgers | n | CSMA | y | y | proactive | y | n | y |

| 39 | Helens volcano | y | TDMA | y | ? | ? | n | y | y |

| 40 | Tunnels | n | CSMA | y | y | proactive | n | n | n |

- Design rule 19:

- The operating system should provide a simple, effective and generic CSMA-based MAC protocol by default.

- Design rule 20:

- An interface for substituting custom MAC and routing protocols must be provided.

- Design rule 21:

- It is wise to include an IPv6 (6LoWPAN) networking stack in the operating system to increase interoperability.

- Design rule 22:

- Simple transport layer delivery acknowledgment mechanisms should be provided by the operating system.
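Rules 19 and 22 ask for a simple CSMA MAC and a simple acknowledgment mechanism. The acknowledgment part can be sketched as stop-and-wait with a bounded number of retransmissions, assuming hypothetical `send` and `wait_ack` hooks supplied by the MAC layer (for example, a CSMA MAC that performs carrier sensing and backoff inside `send`). `MAX_RETRIES = 3` is an arbitrary choice, and the fake channel exists only to make the example testable.

```c
#include <stdbool.h>
#include <stdint.h>

#define MAX_RETRIES 3  /* assumption: small fixed retransmission budget */

/* Hypothetical link-layer hooks (e.g. provided by a CSMA MAC). */
typedef bool (*send_fn)(const uint8_t *buf, uint8_t len, uint8_t seq);
typedef bool (*wait_ack_fn)(uint8_t seq, uint16_t timeout_ms);

static uint8_t next_seq;  /* sequence number of the next packet */

/* Sends one packet and waits for a matching acknowledgment, retransmitting
   up to MAX_RETRIES times. Returns the attempts used, or 0 on failure. */
int reliable_send(send_fn send, wait_ack_fn wait_ack,
                  const uint8_t *buf, uint8_t len, uint16_t timeout_ms) {
    uint8_t seq = next_seq++;
    for (int attempt = 1; attempt <= 1 + MAX_RETRIES; attempt++) {
        if (send(buf, len, seq) && wait_ack(seq, timeout_ms))
            return attempt;
    }
    return 0;
}

/* Fake channel for illustration: loses the first `drops_left` ACKs. */
static int drops_left;

static bool fake_send(const uint8_t *buf, uint8_t len, uint8_t seq) {
    (void)buf; (void)len; (void)seq;
    return true;  /* the channel always accepts the packet */
}

static bool fake_wait_ack(uint8_t seq, uint16_t timeout_ms) {
    (void)seq; (void)timeout_ms;
    if (drops_left > 0) { drops_left--; return false; }  /* ACK lost */
    return true;
}
```

Returning the number of attempts instead of a plain success flag gives the application a cheap link-quality indicator; the sequence number would also let a receiver drop duplicates when an acknowledgment, rather than the data packet, was lost.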

3.12. Operating System and Middleware

| Nr | Codename | OS used | Self-made OS | Middleware used |

|---|---|---|---|---|

| 1 | Habitats | TinyOS | n | |

| 2 | Minefield | customized Linux | n | |

| 3 | Battlefield | TinyOS | n | |

| 4 | Line in the sand | TinyOS | n | |

| 5 | Counter-sniper | TinyOS | n | |

| 6 | Electro-shepherd | ? | y | |

| 7 | Virtual fences | Linux | n | |

| 8 | Oil tanker | ? | n | |

| 9 | Enemy vehicles | TinyOS | n | |

| 10 | Trove game | TinyOS | n | |

| 11 | Elder RFID | TinyOS | n | |

| 12 | Murphy potatoes | TinyOS | n | |

| 13 | Firewxnet | Mantis OS [60] | y | |

| 14 | AlarmNet | TinyOS | n | |

| 15 | Ecuador Volcano | TinyOS | n | Deluge [74] |

| 16 | Pet game | TinyOS | n | Mate Virtual Machine + TinyScript [75] |

| 17 | Plug | custom | y | |

| 18 | B-Live | custom | y | |

| 19 | Biomotion | no OS | y | |

| 20 | AID-N | ? | ? | |

| 21 | Firefighting | TinyOS | n | Deluge [74]? |

| 22 | Rehabil | TinyOS | n | |

| 23 | CargoNet | custom | y | |

| 24 | Fence monitor | ScatterWeb | y | FACTS [76] |

| 25 | BikeNet | TinyOS | n | |

| 26 | BriMon | TinyOS | n | |

| 27 | IP net | Contiki | n | |

| 28 | Smart home | TinyOS | n | |

| 29 | SVATS | TinyOS? | n | |

| 30 | Hitchhiker | TinyOS | n | |

| 31 | Daily morning | TinyOS | n | |

| 32 | Heritage | TinyOS | n | TeenyLIME [77] |

| 33 | AC meter | TinyOS | n | |

| 34 | Coal mine | TinyOS | n | |

| 35 | ITS | custom? | y? | |

| 36 | Underwater | custom | y | |

| 37 | PipeProbe | custom | y | |

| 38 | Badgers | Contiki | n | |

| 39 | Helens volcano | TinyOS | n | customized Deluge [74], remote procedure calls |

| 40 | Tunnels | TinyOS | n | TeenyLIME [77] |

3.13. Software Level Tasks

| Nr | Codename | Kernel service count | Kernel services | App-level task count | App-level tasks |

|---|---|---|---|---|---|

| 1 | Habitats | 0 | - | 1 | sensing + caching to flash + data transfer |

| 2 | Minefield | ? | Linux services | 11 | ? |

| 3 | Battlefield | 2 | MAC, routing | 2 + 4 | Entity tracking, status, middleware (time sync, group management, sentry service, dynamic configuration) |

| 4 | Line in the sand | ? | ? | ? | ? |

| 5 | Counter-sniper | ? | ? | ? | ? |

| 6 | Electro-shepherd | ? | - | ? | sense and send |

| 7 | Virtual fences | ? | MAC | 1 | sense and issue warning (play sound file) |

| 8 | Oil tanker | 0 | - | 4 | cluster formation and time sync, sensing, data transfer |

| 9 | Enemy vehicles | ? | ? | ? | ? |

| 10 | Trove game | 1 | MAC | 3 | sense and send, receive, buzz |

| 11 | Elder RFID | 1 | MAC | 2 | query RFID, report |

| 12 | Murphy potatoes | 2 | MAC, routing | 1 | sense and send |

| 13 | Firewxnet | 2 | MAC, routing | 2 | sensing and sending, reception and time-sync |

| 14 | AlarmNet | ? | ? | 3 | query processing, sensing, report sending |

| 15 | Ecuador Volcano | 3 | time sync, remote reprogram, routing | 3 | sense, detect events, process queries |

| 16 | Pet game | 2 | MAC, routing | ? | sense and send, receive configuration |

| 17 | Plug | 2 | MAC, routing, radio listen | 2 | sensing and statistics and report, radio RX |

| 18 | B-Live | ? | ? | 3 | sensing, actuation, data transfer |

| 19 | Biomotion | 2 | MAC, time sync | 1 | sense and send |

| 20 | AID-N | 3 | MAC, routing, transport | 3 | query processing, sensing, report sending |

| 21 | Firefighting | 1 | routing | 2 | sensing and sending, user input processing |

| 22 | Rehabil | 0? | ? | 1 | sense and send |

| 23 | CargoNet | 0? | ? | 1 | sense and send |

| 24 | Fence monitor | 2 | MAC, routing | 4 | sense, preprocess, report, receive neighbor response |

| 25 | BikeNet | 1 | MAC | 5 | hello broadcast, neighbor discovery and task reception, sensing, data download, data upload |

| 26 | BriMon | 3 | Time sync, MAC, routing | 3 | sensing, flash storage, sending |

| 27 | IP net | ? | ? | ? | ? |

| 28 | Smart home | ? | ? | ? | ? |

| 29 | SVATS | 2 | MAC, time sync | 2 | listen, decide |

| 30 | Hitchhiker | 4 | MAC, routing, transport, timesync | 1 | sense and send |

| 31 | Daily morning | 1 | MAC | 1 | sense and send |

| 32 | Heritage | ? | ? | ? | ? |

| 33 | AC meter | ? | ? | 2 | sampling, routing |

| 34 | Coal mine | 2 | MAC, routing | 2 | receive beacons, send beacon and update neighbor map and report accidents |

| 35 | ITS | 2 | MAC, routing | 1 | listen for queries and sample and process and report |

| 36 | Underwater | 2 | MAC, timesync | 3 | sensing + sending, reception, motor control |

| 37 | PipeProbe | 0? | - | 1 | sense and send |

| 38 | Badgers | 3 | MAC, routing, User Datagram Protocol (UDP) connection establishment | 1 | sense and send |

| 39 | Helens volcano | 5 | MAC, routing, transport, time sync, remote reprogram | 5 | sense, detect events, compress, Remote Procedure Call (RPC) response, data report |

| 40 | Tunnels | 2 | MAC, routing | 1 | sense and send |

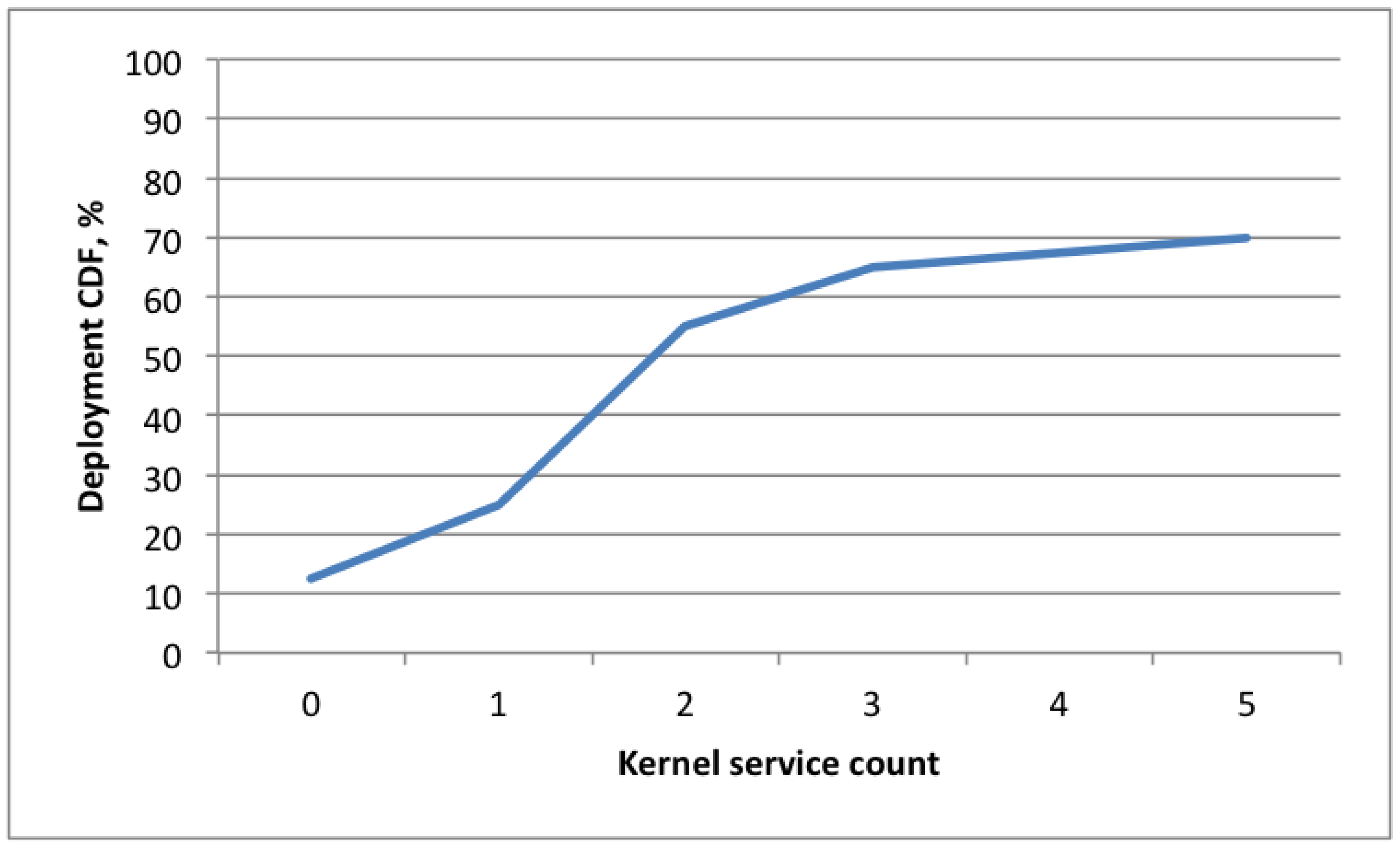

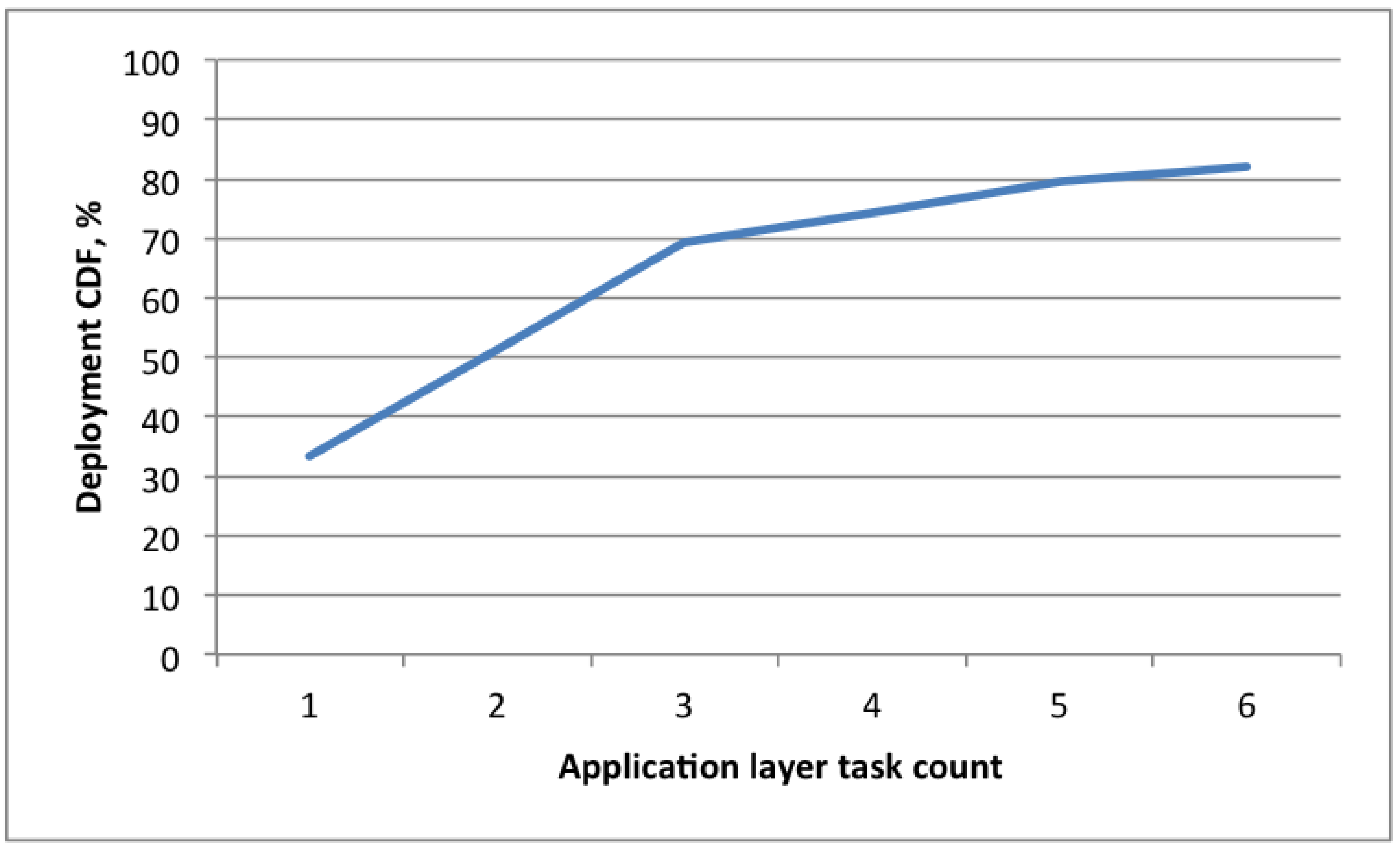

- Design rule 23:

- The OS task scheduler should support up to five kernel services and up to six user-level tasks. An alternative single-user-task configuration may also be useful: it simplifies programming and maximizes resource efficiency, which can matter on the most resource-constrained platforms.
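Rule 23 can be met with fixed-size task tables, which avoid dynamic memory on RAM-constrained motes. The sketch below assumes a cooperative, run-to-completion model: events mark tasks as pending, and the main loop runs pending kernel services before user tasks. All names are hypothetical, and `sense_and_send` is a placeholder application task.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_KERNEL_TASKS 5  /* limits taken from design rule 23 */
#define MAX_USER_TASKS   6

typedef void (*task_fn)(void);

typedef struct {
    task_fn       fn;
    volatile bool pending;  /* set by events; real ISRs need atomic access */
} task_t;

static task_t kernel_tasks[MAX_KERNEL_TASKS];
static task_t user_tasks[MAX_USER_TASKS];
static size_t n_kernel, n_user;

/* Adds a task to the static table; returns its id or -1 if the table is full. */
int task_register(task_fn fn, bool kernel) {
    task_t *table = kernel ? kernel_tasks : user_tasks;
    size_t *count = kernel ? &n_kernel : &n_user;
    size_t max = kernel ? MAX_KERNEL_TASKS : MAX_USER_TASKS;
    if (*count >= max) return -1;
    table[*count].fn = fn;
    table[*count].pending = false;
    int id = (int)*count;
    (*count)++;
    return id;
}

/* Marks a registered task as ready to run (e.g. from a timer or radio event). */
void task_post(int id, bool kernel) {
    if (id < 0) return;
    if (kernel && (size_t)id < n_kernel) kernel_tasks[id].pending = true;
    if (!kernel && (size_t)id < n_user) user_tasks[id].pending = true;
}

/* Runs every pending task in `table` to completion; returns how many ran. */
static int run_table(task_t *table, size_t n) {
    int ran = 0;
    for (size_t i = 0; i < n; i++) {
        if (table[i].pending) {
            table[i].pending = false;
            table[i].fn();
            ran++;
        }
    }
    return ran;
}

/* One scheduler pass: kernel services first, then user tasks. */
int scheduler_run_once(void) {
    int ran = run_table(kernel_tasks, n_kernel);
    return ran + run_table(user_tasks, n_user);
}

/* Hypothetical application task used for illustration. */
static int samples_taken;
static void sense_and_send(void) { samples_taken++; }
```

Setting `MAX_USER_TASKS` to 1 gives the single-user-task configuration mentioned in the rule without changing the scheduler code.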

3.14. Task Scheduling

| Nr | Codename | Time sensitive app-level tasks | Preemptive scheduling needed | Task comments |

|---|---|---|---|---|

| 1 | Habitats | 0 | n | sense + cache + send in every period |

| 2 | Minefield | 7+ | y | complicated localization, network awareness and cooperation |

| 3 | Battlefield | 0 | n | |

| 4 | Line in the sand | 1 ? | n | |

| 5 | Counter-sniper | 3? | n | localization, synchronization, blast detection |

| 6 | Electro-shepherd | ? | ? | |

| 7 | Virtual fences | ? | n | |

| 8 | Oil tanker | 1 | y | user-space cluster node discovery and sync are time critical |

| 9 | Enemy vehicles | 0 | n | |

| 10 | Trove game | 0 | n | |

| 11 | Elder RFID | 0 | n | |

| 12 | Murphy potatoes | 0 | n | |

| 13 | Firewxnet | 1 | y | sensing can take up to 200 ms; should be preemptive |

| 14 | AlarmNet | 0 | n | - |

| 15 | Ecuador Volcano | 1 | y | sensing is time-critical, but it is stopped when a query is received |

| 16 | Pet game | 0 | n | |

| 17 | Plug | 0 | n | |

| 18 | B-Live | 0 | y | |

| 19 | Biomotion | 0 | y | preemption needed for time sync and TDMA MAC |

| 20 | AID-N | 0 | n | |

| 21 | Firefighting | 0 | n | |

| 22 | Rehabil | ? | ? | |

| 23 | CargoNet | 0 | n | wake up on external interrupts; process them; return to sleep mode |

| 24 | Fence monitor | 0 | n | if preprocessing is time-consuming, preemptive scheduling is needed |

| 25 | BikeNet | 1 | y | sensing is realized as an app-level TDMA schedule and is time-critical; data upload may be time-consuming, so preemptive scheduling may be required |

| 26 | BriMon | 0 | n | sending is time critical, but in the MAC layer |

| 27 | IP net | 0 | ? | |

| 28 | Smart home | ? | ? | |

| 29 | SVATS | 0 | y | preemption needed for time sync and MAC |

| 30 | Hitchhiker | 0 | y | preemption needed for time sync and MAC |

| 31 | Daily morning | 0 | n | |

| 32 | Heritage | 1 | y | preemptive scheduling needed for time sync? |

| 33 | AC meter | 0 | n | |

| 34 | Coal mine | 0 | n | preemptive scheduling needed, if the neighbor update is time-consuming |

| 35 | ITS | 0 | n | |

| 36 | Underwater | 0 | y | preemption needed for time sync and TDMA MAC |

| 37 | PipeProbe | 0 | n | no MAC; just send |

| 38 | Badgers | 0 | n | |

| 39 | Helens volcano | 0 | y | preemption needed for time sync and MAC |

| 40 | Tunnels | 0 | n | |

- Design rule 24:

- The operating system should provide both cooperative and preemptive scheduling, with the ability to switch between them as needed.

3.15. Time Synchronization

| Nr | Codename | Time-sync used | Accuracy, μsec | Advanced time-sync | Self-made time-sync |

|---|---|---|---|---|---|

| 1 | Habitats | n | - | - | - |

| 2 | Minefield | y | 1000 | ? | ? |

| 3 | Battlefield | y | ? | n | y |

| 4 | Line in the sand | y | 110 | n | y |

| 5 | Counter-sniper | y | 17.2 (1.6 per hop) | y | y |

| 6 | Electro-shepherd | n | - | - | - |

| 7 | Virtual fences | n | - | - | - |

| 8 | Oil tanker | y | ? | n | y |

| 9 | Enemy vehicles | n | - | - | - |

| 10 | Trove game | n | - | - | - |

| 11 | Elder RFID | n | - | - | - |

| 12 | Murphy potatoes | n | - | - | - |

| 13 | Firewxnet | y | >1000 | n | y |

| 14 | AlarmNet | n | - | - | - |

| 15 | Ecuador Volcano | y | 6800 | y | n |

| 16 | Pet game | n | - | - | - |

| 17 | Plug | n | - | - | - |

| 18 | B-Live | n | - | - | - |

| 19 | Biomotion | y | ? | n | y |

| 20 | AID-N | n | - | - | - |

| 21 | Firefighting | n | - | - | - |

| 22 | Rehabil | n | - | - | - |

| 23 | CargoNet | n | - | - | - |

| 24 | Fence monitor | n | - | - | - |

| 25 | BikeNet | y | 1 ms? | n, GPS | n |

| 26 | BriMon | y | 180 | n | y |

| 27 | IP net | ? | ? | ? | ? |

| 28 | Smart home | ? | ? | ? | ? |

| 29 | SVATS | y, not implemented | - | - | - |

| 30 | Hitchhiker | y | ? | n | y |

| 31 | Daily morning | n | - | - | - |

| 32 | Heritage | y | 732 | y | y |

| 33 | AC meter | n | - | - | - |

| 34 | Coal mine | n | - | - | - |

| 35 | ITS | n | - | - | - |

| 36 | Underwater | y | ? | ? | y |

| 37 | PipeProbe | n | - | - | - |

| 38 | Badgers | n | - | - | - |

| 39 | Helens volcano | y | 1 ms? | n, GPS | n |

| 40 | Tunnels | n | - | - | - |

- A 100% duty cycle is used on all network nodes functioning as data routers without switching to sleep mode.

- Network nodes agree on a cooperative schedule for packet forwarding; time synchronization is required.

- Design rule 25:

- Time synchronization provided by the operating system would be highly valuable, saving sensor network designers the time and effort of developing custom synchronization.
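Rule 25 and the survey's conclusions suggest that millisecond accuracy is usually enough. Under that assumption, a very simple scheme suffices: a root node broadcasts its clock, and each receiver stores the difference to its own clock. The sketch below is hypothetical and ignores propagation delay and clock skew; it assumes the timestamp is inserted as close to transmission as possible, since MAC backoff between timestamping and sending adds error. Protocols such as FTSP [9] additionally estimate skew and use MAC-layer timestamping.

```c
#include <stdint.h>

/* global = local + offset_ms (mod 2^32); unsigned wrap-around is well
   defined in C, so the scheme survives local clock overflow. */
static uint32_t offset_ms;
static int      synced;  /* nonzero once at least one beacon was received */

/* Called on a sync beacon: root_time_ms is the root's timestamp carried in
   the beacon, local_rx_ms is the local clock when the beacon arrived. */
void timesync_on_beacon(uint32_t root_time_ms, uint32_t local_rx_ms) {
    offset_ms = root_time_ms - local_rx_ms;
    synced = 1;
}

/* Converts a local timestamp into the network-wide (root) time base. */
uint32_t timesync_global_ms(uint32_t local_ms) {
    return local_ms + offset_ms;
}
```

Clock drift limits how long a single offset stays valid: assuming, for example, a relative drift of 40 ppm between two clocks, they diverge by 0.4 ms every 10 s, so re-synchronizing at least every 20 s keeps the drift below a millisecond.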

3.16. Localization

| Nr | Codename | Localization used | Localization accuracy, cm | Advanced Localization | Self-made Localization |

|---|---|---|---|---|---|

| 1 | Habitats | n | - | - | - |

| 2 | Minefield | y | +/−25 | y | y |

| 3 | Battlefield | y | couple feet | n | y |

| 4 | Line in the sand | n | - | - | - |

| 5 | Counter-sniper | y | 11 | y | y |

| 6 | Electro-shepherd | y, GPS | >1 m | n | n |

| 7 | Virtual fences | y, GPS | >1 m | n | n |

| 8 | Oil tanker | n | - | - | - |

| 9 | Enemy vehicles | y | ? | n | y |

| 10 | Trove game | n | - | - | - |

| 11 | Elder RFID | n | - | - | - |

| 12 | Murphy potatoes | n | - | - | - |

| 13 | Firewxnet | n | - | - | - |

| 14 | AlarmNet | y | room | n, motion sensor in rooms | y |

| 15 | Ecuador Volcano | n | - | - | - |

| 16 | Pet game | n | - | - | - |

| 17 | Plug | n | - | - | - |

| 18 | B-Live | n | - | - | - |

| 19 | Biomotion | n | - | - | - |

| 20 | AID-N | n | - | - | - |

| 21 | Firefighting | y | <5 m? | n | y |

| 22 | Rehabil | n | - | - | - |

| 23 | CargoNet | n | - | - | - |

| 24 | Fence monitor | n | - | - | - |

| 25 | BikeNet | y, GPS | >1 m | n | n |

| 26 | BriMon | n | - | - | - |

| 27 | IP net | n | - | - | - |

| 28 | Smart home | n | - | - | - |

| 29 | SVATS | y | ? | n, RSSI | y |

| 30 | Hitchhiker | n | - | - | - |

| 31 | Daily morning | y | room | n | y |

| 32 | Heritage | n | - | - | - |

| 33 | AC meter | n | - | - | - |

| 34 | Coal mine | y | ? | n, static | y |

| 35 | ITS | y, static | ? | n | n |

| 36 | Underwater | y | ? | n | y |

| 37 | PipeProbe | y | 8 | y | y |

| 38 | Badgers | n | - | - | - |

| 39 | Helens volcano | n | - | - | - |

| 40 | Tunnels | n | - | - | - |

4. A Typical Wireless Sensor Network

- is used as a prototyping tool to test new concepts and approaches for monitoring specific environments

- is developed and deployed incrementally in multiple iterations and, therefore, needs effective debugging mechanisms

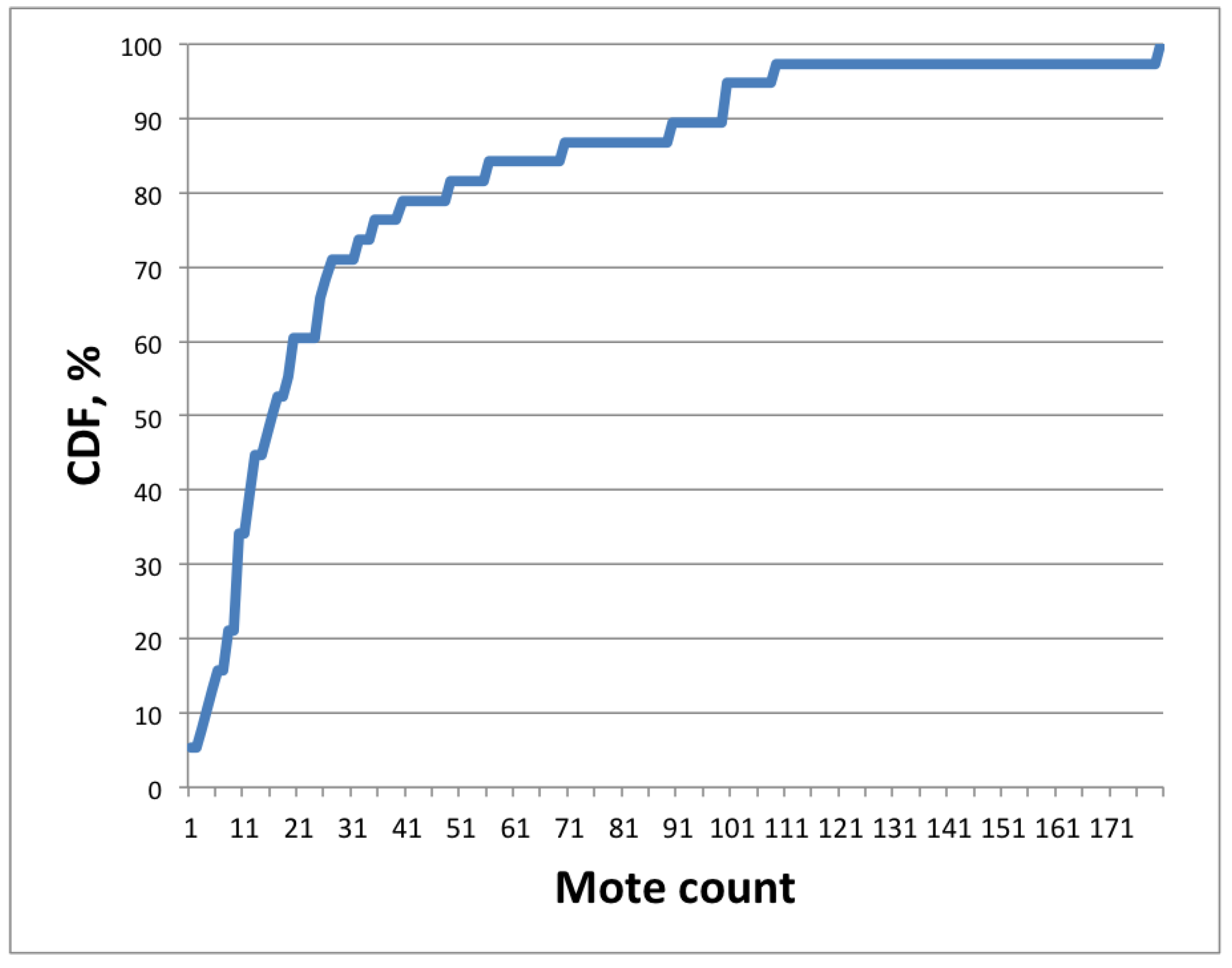

- contains 10–50 sensor nodes and one or several base stations (a sensor node connected to a personal computer) that act as data collection sinks

- uses temperature, light and accelerometer sensors

- uses low-frequency sensor sampling (on average, less than one sample per second) in most cases; some sensors (accelerometers) require sampling at 10–100 Hz, and some scenarios (seismic or audio sensing) use high-frequency sampling at rates above 10 kHz

- has a desired lifetime varying from several hours (short trials) to several years; often, a desired final lifetime is specified, yet the first proof-of-concept trials use a 100% duty cycle (no sleep mode) and therefore achieve a significantly shorter lifetime

- has at least one sensor node with an increased energy budget: either connected to a static power network or powered by a battery with significantly larger capacity

- has specific sensing and packaging constraints; therefore, packaging and hardware selection are important problems in WSN design

- contains MSP430 (16-bit) or AVR (eight-bit) microcontrollers on the sensor nodes, typically with an 8 MHz CPU frequency, 4–10 KB RAM, 48–128 KB program memory and 512–1,024 KB external memory

- communicates according to the IEEE 802.15.4 standard; the TI CC2420 is an example of a widely used wireless communication chip [67]

- sends data packets with a size of 10–30 bytes; the report rate varies significantly—for some scenarios, only one packet per day is sent; for others, each sensor sample is sent at 100 Hz

- uses omnidirectional communication in the range of 100–300 m (each hop) with a transmission speed less than 256 Kbps and uses a single communication channel that can lead to collisions

- assumes constant multi-hop connectivity (with up to 11 hops on the longest route), with possible topology changes due to mobile nodes or other environmental changes in the sensing region

- has either a previously specified or at least a known sensor node placement (not random)

- is likely to use at least primitive raw data preprocessing before reporting results

- uses CSMA-based MAC protocol and proactive routing, often adapted or completely custom-developed for the particular sensing task

- uses some form of reliable data delivery with acknowledgment reception mechanisms

- has been programmed using the TinyOS operating system

- runs multiple semantically simultaneous application-level tasks, with multiple kernel services running in the background, which creates the need for effective scheduling mechanisms in the operating system and careful programming of the applications; cooperative scheduling (each task voluntarily yields the CPU to other tasks) is sufficient in most cases, although it demands even more care from programmers

- requires at least simple time synchronization with millisecond accuracy for common duty cycle management or data time stamping

- may require some form of node localization; yet, the environments pose very specific constraints: indoor/outdoor, required accuracy, update rate, infrastructure availability and many other factors

5. OS Conformance

| # | Rule | TinyOS | Contiki | LiteOS | MansOS |

|---|---|---|---|---|---|

| General | |||||

| 1 | Simple, efficient networking protocols | + | + | ± | + |

| 2 | Sink-oriented protocols | + | + | + | |

| 3 | Base station example | + | + | + | |

| Sensing | |||||

| 4 | Temperature, light, acceleration API | ± | + | ||

| 5 | Low duty cycle sampling | + | + | + | + |

| Lifetime and energy | |||||

| 6 | Auto sleep mode | + | + | + | + |

| 7 | Powered mode in protocol design | + | + | + | |

| Sensor mote | |||||

| 8 | TelosB support | + | + | + | |

| 9 | Rapid driver development | + | + | + | |

| 10 | Rapid platform definition | ± | + | ||

| 11 | CC2420 radio chip driver | + | + | + | + |

| 12 | AVR and MSP430 architecture support | + | + | ± | + |

| 13 | External storage support | + | + | + | + |

| 14 | Simple file system | + | + | + | |

| Communication | |||||

| 15 | Configurable packet payload (default: 30 bytes) | + | + | + | + |

| 16 | Configurable transmission power | + | + | + | + |

| 17 | Protocols for ≤ 1 Mbps bandwidth | + | + | + | + |

| 18 | Simple proactive routing | + | + | ± | + |

| 19 | Simple CSMA MAC | + | + | + | |

| 20 | Custom MAC and routing API | + | + | + | |

| 21 | IPv6 support | + | + | ||

| 22 | Simple reception acknowledgment | + | + | + | |

| Tasks and scheduling | |||||

| 23 | Five kernel and six user task support | + | + | ± | ± |

| 24 | Cooperative and preemptive scheduling | + | + | + | |

| 25 | Simple time synchronization | + | + | ||

5.1. TinyOS

- Event-driven nature: although event handlers impose less overhead than sequential programming with blocking calls and polling, it is more complex for programmers to design, and keep in mind, the state machine for the split-phase operation of the application

- Modular component architecture: a high degree of modularity and code reuse leads to program logic being distributed across many components. Adding new functionality may require modifications in multiple locations, which demands deep knowledge of the internal system structure

- nesC language peculiarities: confusion between interfaces and components, component composition and nesting, and specific requirements for variable definitions are examples of language aspects that hinder the creativity of novice WSN programmers

- TinyOS provides an interface for writing data and debug logs to external storage devices; yet, no file system is available. Third party external storage filesystem implementations do exist, such as TinyOS FAT16 support for SD cards [85].

- TinyOS includes the Flooding Time Synchronization Protocol (FTSP) [9] in its libraries; however, using it effectively requires a deep understanding of clock skew issues and of FTSP operation

- No API for temperature, light, acceleration, sound and humidity sensing is provided

5.2. Contiki

5.3. LiteOS

5.4. MansOS

- IPv6 support is not built into the MansOS core; it must be implemented at a different level

- MansOS provides both scheduling techniques: preemptive and cooperative. In the preemptive case, only one kernel thread and several user threads are allowed, so multiple kernel tasks must share a single thread. For the cooperative scheduler (protothreads, adopted from Contiki [82]), any number of simultaneous threads is allowed, and they all share the same stack space; therefore, the probability of stack overflow is significantly lower than in LiteOS.
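The cooperative scheduling described above relies on protothreads. The technique can be sketched with switch-based local continuations: each thread stores only the line at which it is waiting, and a blocking wait becomes a `return` that resumes at the matching `case` label on the next call. The `MINI_PT_*` macros below are a simplified re-implementation written for illustration, not the Contiki or MansOS API, and the timer is simulated.

```c
/* Simplified protothread macros (hypothetical names). */
struct mini_pt { int lc; };  /* local continuation: line to resume at */

#define MINI_PT_INIT(pt)  ((pt)->lc = 0)
#define MINI_PT_BEGIN(pt) switch ((pt)->lc) { case 0:
#define MINI_PT_WAIT_UNTIL(pt, cond)                 \
    do {                                             \
        (pt)->lc = __LINE__; case __LINE__:          \
        if (!(cond)) return 0; /* yield: waiting */  \
    } while (0)
#define MINI_PT_END(pt)   } (pt)->lc = 0; return 1;  /* 1: thread ended */

static int ticks;         /* simulated timer */
static int samples_sent;

/* Example thread: "sends" a sample every 2 ticks, three times in total. */
static int sense_and_send_thread(struct mini_pt *pt) {
    static int next;  /* static: ordinary locals do not survive a wait */
    MINI_PT_BEGIN(pt);
    while (samples_sent < 3) {
        next = ticks + 2;
        MINI_PT_WAIT_UNTIL(pt, ticks >= next);
        samples_sent++;
    }
    MINI_PT_END(pt);
}

/* Cooperative main loop: polls the thread and advances the simulated timer
   between polls. Returns the tick count at which the thread finished. */
int run_until_done(void) {
    struct mini_pt pt;
    MINI_PT_INIT(&pt);
    ticks = 0;
    samples_sent = 0;
    while (!sense_and_send_thread(&pt))
        ticks++;  /* stand-in for a timer interrupt */
    return ticks;
}
```

Because a waiting thread simply returns to its caller, all threads run on the same stack and each one costs only its `lc` field; the price is that ordinary local variables do not survive a wait, which is why `next` is declared `static`.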

5.5. Summary

6. Conclusions

- In many cases, customized commercial sensor nodes or fully custom-built motes are used. Therefore, OS portability and code reuse are very important.

- Simplicity and extensibility should be preferred over scalability, as existing sensor networks rarely contain more than 100 nodes.

- Both preemptive and cooperative task schedulers should be included in the OS.

- Default networking protocols should be sink-oriented and use CSMA-based MAC and proactive routing protocols. WSN researchers should be able to easily replace default networking protocols with their own to evaluate their performance.

- Simple time synchronization with millisecond (instead of microsecond) accuracy is sufficient for most deployments.

Acknowledgments

References

- GlobalSecurity.org. Sound Surveillance System (SOSUS). Available online: http://www.globalsecurity.org/intell/systems/sosus.htm (accessed on 8 August 2013).

- Polastre, J.; Szewczyk, R.; Culler, D. Telos: Enabling Ultra-low Power Wireless Research. In Proceedings of the 4th International Symposium on Information Processing in Sensor Networks, (IPSN’05), UCLA, Los Angeles, CA, USA, 25–27 April 2005.

- Crossbow Technology. MicaZ mote datasheet. Available online: http://www.openautomation.net/uploadsproductos/micaz_datasheet.pdf (accessed on 8 August 2013).

- Levis, P.; Madden, S.; Polastre, J.; Szewczyk, R.; Whitehouse, K.; Woo, A.; Gay, D.; Hill, J.; Welsh, M.; Brewer, E.; et al. TinyOS: An operating system for sensor networks. Ambient Intell. 2005, 35, 115–148.

- Dunkels, A.; Gronvall, B.; Voigt, T. Contiki-A Lightweight and Flexible Operating System for Tiny Networked Sensors. In Proceedings of the Annual IEEE Conference on Local Computer Networks, Tampa, FL, USA, 17–18 April 2004; pp. 455–462.

- Madden, S.; Franklin, M.; Hellerstein, J.; Hong, W. TinyDB: An acquisitional query processing system for sensor networks. ACM Trans. Database Syst. (TODS) 2005, 30, 122–173. [Google Scholar] [CrossRef]

- Muller, R.; Alonso, G.; Kossmann, D. A Virtual Machine for Sensor Networks. ACM SIGOPS Operat. Syst. Rev. 2007, 41.3, 145–158. [Google Scholar] [CrossRef]

- Demirkol, I.; Ersoy, C.; Alagoz, F. MAC protocols for wireless sensor networks: A survey. IEEE Commun. Mag. 2006, 44, 115–121. [Google Scholar] [CrossRef]

- Maróti, M.; Kusy, B.; Simon, G.; Lédeczi, Á. The Flooding Time Synchronization Protocol. In Proceedings of the 2nd International Conference on Embedded Networked Sensor Systems, (Sensys’04), Baltimore, MD, USA, 3–5 November 2004; pp. 39–49.

- Mao, G.; Fidan, B.; Anderson, B. Wireless sensor network localization techniques. Comput. Netw. 2007, 51, 2529–2553.

- Mainwaring, A.; Culler, D.; Polastre, J.; Szewczyk, R.; Anderson, J. Wireless Sensor Networks for Habitat Monitoring. In Proceedings of the 1st ACM International Workshop on Wireless Sensor Networks and Applications, (WSNA’02), Atlanta, GA, USA, 28 September 2002; pp. 88–97.

- Merrill, W.; Newberg, F.; Sohrabi, K.; Kaiser, W.; Pottie, G. Collaborative Networking Requirements for Unattended Ground Sensor Systems. In Proceedings of IEEE Aerospace Conference, Big Sky, MT, USA, 8–15 March 2003; pp. 2153–2165.

- Lynch, J.; Loh, K. A summary review of wireless sensors and sensor networks for structural health monitoring. Shock Vib. Digest 2006, 38, 91–130.

- Dunkels, A.; Eriksson, J.; Mottola, L.; Voigt, T.; Oppermann, F.J.; Römer, K.; Casati, F.; Daniel, F.; Picco, G.P.; Soi, S.; et al. Application and Programming Survey; Technical report, EU FP7 Project makeSense; Swedish Institute of Computer Science: Kista, Sweden, 2010.

- Mottola, L.; Picco, G.P. Programming wireless sensor networks: Fundamental concepts and state of the art. ACM Comput. Surv. 2011, 43, 19:1–19:51.

- Bri, D.; Garcia, M.; Lloret, J.; Dini, P. Real Deployments of Wireless Sensor Networks. In Proceedings of SENSORCOMM’09, Athens/Glyfada, Greece, 18–23 June 2009; pp. 415–423.

- Yick, J.; Mukherjee, B.; Ghosal, D. Wireless sensor network survey. Comput. Netw. 2008, 52, 2292–2330.

- Latré, B.; Braem, B.; Moerman, I.; Blondia, C.; Demeester, P. A survey on wireless body area networks. Wirel. Netw. 2011, 17, 1–18.

- He, T.; Krishnamurthy, S.; Stankovic, J.A.; Abdelzaher, T.; Luo, L.; Stoleru, R.; Yan, T.; Gu, L.; Hui, J.; Krogh, B. Energy-efficient Surveillance System Using Wireless Sensor Networks. In Proceedings of the 2nd International Conference on Mobile Systems, Applications, and Services, (MobiSys’04), Boston, MA, USA, 6–9 June 2004; pp. 270–283.

- Arora, A.; Dutta, P.; Bapat, S.; Kulathumani, V.; Zhang, H.; Naik, V.; Mittal, V.; Cao, H.; Demirbas, M.; Gouda, M.; et al. A line in the sand: A wireless sensor network for target detection, classification, and tracking. Comput. Netw. 2004, 46, 605–634.

- Simon, G.; Maróti, M.; Lédeczi, A.; Balogh, G.; Kusy, B.; Nádas, A.; Pap, G.; Sallai, J.; Frampton, K. Sensor Network-based Countersniper System. In Proceedings of the 2nd International Conference on Embedded Networked Sensor Systems, (SenSys’04), Baltimore, MD, USA, 3–5 November 2004; pp. 1–12.

- Thorstensen, B.; Syversen, T.; Bjørnvold, T.A.; Walseth, T. Electronic Shepherd - A Low-cost, Low-bandwidth, Wireless Network System. In Proceedings of the 2nd International Conference on Mobile Systems, Applications, and Services, (MobiSys’04), Boston, MA, USA, 6–9 June 2004; pp. 245–255.

- Butler, Z.; Corke, P.; Peterson, R.; Rus, D. Virtual Fences for Controlling Cows. In Proceedings of the 2004 IEEE International Conference on Robotics and Automation, (ICRA’04), Barcelona, Spain, 18–22 April 2004; Volume 5, pp. 4429–4436.

- Krishnamurthy, L.; Adler, R.; Buonadonna, P.; Chhabra, J.; Flanigan, M.; Kushalnagar, N.; Nachman, L.; Yarvis, M. Design and Deployment of Industrial Sensor Networks: Experiences from a Semiconductor Plant and the North Sea. In Proceedings of the 3rd International Conference on Embedded Networked Sensor Systems, (SenSys’05), San Diego, CA, USA, 2–4 November 2005; pp. 64–75.

- Sharp, C.; Schaffert, S.; Woo, A.; Sastry, N.; Karlof, C.; Sastry, S.; Culler, D. Design and Implementation of a Sensor Network System for Vehicle Tracking and Autonomous Interception. In Proceedings of the Second European Workshop on Wireless Sensor Networks, Istanbul, Turkey, 31 January–2 February 2005; pp. 93–107.

- Mount, S.; Gaura, E.; Newman, R.M.; Beresford, A.R.; Dolan, S.R.; Allen, M. Trove: A Physical Game Running on an Ad-hoc Wireless Sensor Network. In Proceedings of the 2005 Joint Conference on Smart Objects and Ambient Intelligence: Innovative Context-Aware Services: Usages and Technologies, (sOc-EUSAI’05), Grenoble, France, 12–14 October 2005; pp. 235–239.

- Ho, L.; Moh, M.; Walker, Z.; Hamada, T.; Su, C.F. A Prototype on RFID and Sensor Networks for Elder Healthcare: Progress Report. In Proceedings of the 2005 ACM SIGCOMM Workshop on Experimental Approaches to Wireless Network Design and Analysis, (E-WIND’05), Philadelphia, PA, USA, 22 August 2005; pp. 70–75.

- Langendoen, K.; Baggio, A.; Visser, O. Murphy Loves Potatoes: Experiences from a Pilot Sensor Network Deployment in Precision Agriculture. In Proceedings of the 20th International IEEE Parallel and Distributed Processing Symposium, (IPDPS 2006), Rhodes Island, Greece, 25–29 April 2006; pp. 1–8.

- Hartung, C.; Han, R.; Seielstad, C.; Holbrook, S. FireWxNet: A Multi-tiered Portable Wireless System for Monitoring Weather Conditions in Wildland Fire Environments. In Proceedings of the 4th International Conference on Mobile Systems, Applications and Services, (MobiSys’06), Uppsala, Sweden, 19–22 June 2006; pp. 28–41.

- Wood, A.; Virone, G.; Doan, T.; Cao, Q.; Selavo, L.; Wu, Y.; Fang, L.; He, Z.; Lin, S.; Stankovic, J. ALARM-NET: Wireless Sensor Networks for Assisted-Living and Residential Monitoring; Technical Report; University of Virginia Computer Science Department: Charlottesville, VA, USA, 2006.

- Werner-Allen, G.; Lorincz, K.; Johnson, J.; Lees, J.; Welsh, M. Fidelity and Yield in a Volcano Monitoring Sensor Network. In Proceedings of the 7th Symposium on Operating Systems Design and Implementation, (OSDI’06), Seattle, WA, USA, 6–8 November 2006; pp. 381–396.

- Liu, L.; Ma, H. Wireless Sensor Network Based Mobile Pet Game. In Proceedings of 5th ACM SIGCOMM Workshop on Network and System Support for Games, (NetGames’06), Singapore, Singapore, 30–31 October 2006.

- Lifton, J.; Feldmeier, M.; Ono, Y.; Lewis, C.; Paradiso, J.A. A Platform for Ubiquitous Sensor Deployment in Occupational and Domestic Environments. In Proceedings of the 6th International Conference on Information Processing in Sensor Networks, (IPSN’07), Cambridge, MA, USA, 25–27 April 2007; pp. 119–127.

- Santos, V.; Bartolomeu, P.; Fonseca, J.; Mota, A. B-Live - A Home Automation System for Disabled and Elderly People. In Proceedings of the International Symposium on Industrial Embedded Systems, (SIES’07), Lisbon, Portugal, 4–6 July 2007; pp. 333–336.

- Aylward, R.; Paradiso, J.A. A Compact, High-speed, Wearable Sensor Network for Biomotion Capture and Interactive Media. In Proceedings of the 6th International Conference on Information Processing in Sensor Networks, (IPSN’07), Cambridge, MA, USA, 25–27 April 2007; pp. 380–389.

- Gao, T.; Massey, T.; Selavo, L.; Crawford, D.; Chen, B.; Lorincz, K.; Shnayder, V.; Hauenstein, L.; Dabiri, F.; Jeng, J.; et al. The advanced health and disaster aid network: A light-weight wireless medical system for triage. IEEE Trans. Biomed. Circuits Syst. 2007, 1, 203–216.

- Wilson, J.; Bhargava, V.; Redfern, A.; Wright, P. A Wireless Sensor Network and Incident Command Interface for Urban Firefighting. In Proceedings of the 4th Annual International Conference on Mobile and Ubiquitous Systems: Networking Services, (MobiQuitous’07), Philadelphia, PA, USA, 6–10 August 2007; pp. 1–7.

- Jarochowski, B.; Shin, S.; Ryu, D.; Kim, H. Ubiquitous Rehabilitation Center: An Implementation of a Wireless Sensor Network Based Rehabilitation Management System. In Proceedings of the International Conference on Convergence Information Technology, (ICCIT 2007), Gyeongju, Korea, 21–23 November 2007; pp. 2349–2358.

- Malinowski, M.; Moskwa, M.; Feldmeier, M.; Laibowitz, M.; Paradiso, J.A. CargoNet: A Low-cost Micropower Sensor Node Exploiting Quasi-passive Wakeup for Adaptive Asychronous Monitoring of Exceptional Events. In Proceedings of the 5th International Conference on Embedded Networked Sensor Systems, (SenSys’07), Sydney, Australia, 6–9 November 2007; pp. 145–159.

- Wittenburg, G.; Terfloth, K.; Villafuerte, F.L.; Naumowicz, T.; Ritter, H.; Schiller, J. Fence Monitoring: Experimental Evaluation of a Use Case for Wireless Sensor Networks. In Proceedings of the 4th European Conference on Wireless Sensor Networks, (EWSN’07), Delft, The Netherlands, 29–31 January 2007; pp. 163–178.

- Eisenman, S.B.; Miluzzo, E.; Lane, N.D.; Peterson, R.A.; Ahn, G.S.; Campbell, A.T. BikeNet: A mobile sensing system for cyclist experience mapping. ACM Trans. Sen. Netw. 2010, 6, 1–39.

- Chebrolu, K.; Raman, B.; Mishra, N.; Valiveti, P.; Kumar, R. Brimon: A Sensor Network System for Railway Bridge Monitoring. In Proceedings of the 6th International Conference on Mobile Systems, Applications, and Services (MobiSys’08), Breckenridge, CO, USA, 17–20 June 2008; pp. 2–14.

- Finne, N.; Eriksson, J.; Dunkels, A.; Voigt, T. Experiences from Two Sensor Network Deployments: Self-monitoring and Self-configuration Keys to Success. In Proceedings of the 6th International Conference on Wired/wireless Internet Communications, (WWIC’08), Tampere, Finland, 28–30 May 2008; pp. 189–200.

- Suh, C.; Ko, Y.B.; Lee, C.H.; Kim, H.J. The Design and Implementation of Smart Sensor-based Home Networks. In Proceedings of the International Symposium on Ubiquitous Computing Systems, (UCS’06), Seoul, Korea, 11–13 November 2006; p. 10.

- Song, H.; Zhu, S.; Cao, G. SVATS: A Sensor-Network-Based Vehicle Anti-Theft System. In Proceedings of the 27th Conference on Computer Communications, (INFOCOM 2008), Phoenix, AZ, USA, 15–17 April 2008; pp. 2128–2136.

- Barrenetxea, G.; Ingelrest, F.; Schaefer, G.; Vetterli, M. The Hitchhiker’s Guide to Successful Wireless Sensor Network Deployments. In Proceedings of the 6th ACM Conference on Embedded Network Sensor Systems, (SenSys’08), Raleigh, NC, USA, 5–7 November 2008; pp. 43–56.

- Ince, N.F.; Min, C.H.; Tewfik, A.; Vanderpool, D. Detection of early morning daily activities with static home and wearable wireless sensors. EURASIP J. Adv. Signal Process. 2008.