1. Introduction

Semantic similarity relies on similar attributes and relations between terms, whilst semantic relatedness is based on the aggregate of interconnections between terms [

1]. Semantic similarity is a subset of semantic relatedness: all similar terms are related, but related terms are not necessarily similar [

2]. For example, “river” and “stream” are semantically similar, while “river” and “boat” are dissimilar but semantically related [

3]. Similarity has been characterized as a central element of the human cognitive system and is understood nowadays as a pivotal concept for simulating intelligence [

4]. Semantic similarity/relatedness measures are used to solve problems in a broad range of applications and domains. The domains of application include: (i) Natural Language Processing, (ii) Knowledge Engineering/Semantic Web and Linked Data [

5], (iii) Information retrieval, (iv) Artificial intelligence [

6], and so on. To keep the scope precise, this article is restricted to semantic relatedness.

For geographic information science, semantic relatedness is important for the retrieval of geospatial data [

7,

8], Linked Geospatial Data [

9], geoparsing [

10], and geo-semantics [

11]. For example, when researchers tried to quantitatively interlink geospatial data [9], they needed to compute the semantic similarity/relatedness of the theme keywords of two geospatial data sets, such as that of “land use” and “land cover” between a land use data set and a land cover data set. However, computing the semantic similarity/relatedness of such geographic terminology remains an unresolved issue.

In the ISO standard, a thesaurus is defined as a “controlled and structured vocabulary in which concepts are represented by terms, organized so that relationships between concepts are made explicit, and referred terms are accompanied by lead-in entries for synonyms or quasi-synonyms” [

12]. Actually, a thesaurus consists of numerous term trees. A term tree expresses one theme. In a term tree, there is a top term and other terms organized by three types of explicitly indicated relationships: (1) equivalence, (2) hierarchical and (3) associative. Equivalence relationships convey that two or more synonymous or quasi-synonymous terms label the same concept. Hierarchical relationships are established between a pair of concepts when the scope of one of them falls completely within the scope of the other. The associative relationship is used in “suggesting additional or alternative concepts for use in indexing or retrieval” and is to be applied between “semantically or conceptually” related concepts that are not hierarchically related [

13,

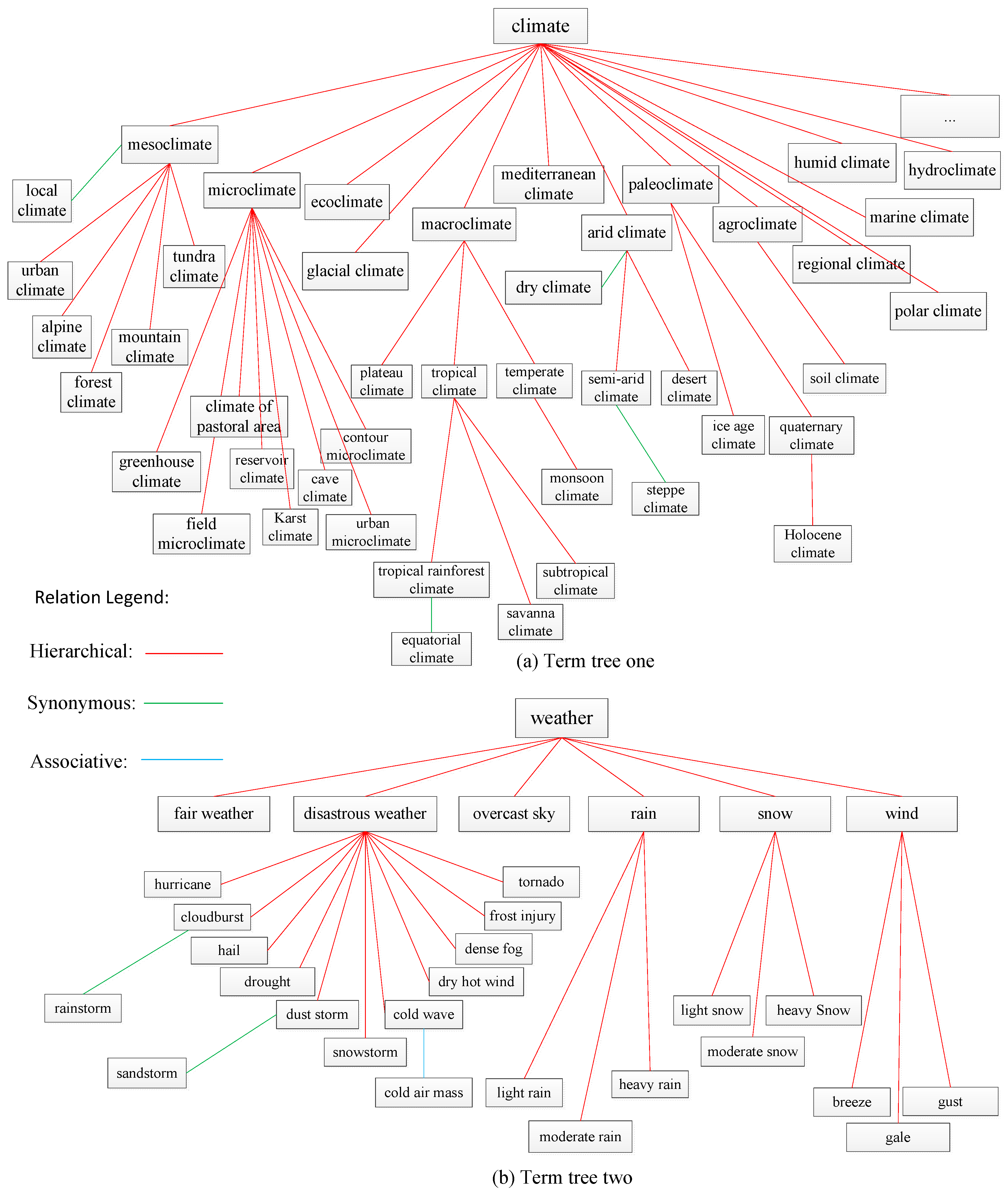

14]. For example, there are two term trees in

Figure 1 whose top terms are “climate” and “weather” respectively. Terms are linked by three types of relationships; hierarchical relations are the main kind. A thesaurus is a sophisticated formalization of knowledge and there are several hundreds of thesauri in the world currently [

13]. It has been used for decades for information retrieval and other purposes [

13]. A thesaurus has also been used to retrieve information in GIScience [

15], although the relationships it contains have not yet been analyzed quantitatively.

Given that there are only three types of relationships between terms in a thesaurus, it is relatively easy to build a thesaurus covering most of the terminologies in a discipline. So it is a practical choice to use a thesaurus to organize the knowledge of a discipline.

The Thesaurus for Geographic Sciences is a controlled and structured vocabulary edited by about 20 experts from the Institute of Geographic Sciences and Natural Resources Research (IGSNRR), Lanzhou Institute of Glaciology and Cryopedology (LIGC), and the Institute of Mountain Hazards and Environment (IMHE) of the Chinese Academy of Sciences. It formalizes over 10,800 terminologies of geography and related domains and is written in English and Chinese [16]. Its structure and modeling principles conform to the international standard ISO 25964-1:2011 and its predecessor [12]. Hereafter, “the thesaurus” refers to the Thesaurus for Geographic Sciences.

WordNet is a large generic lexical database of English devised by psychologists, linguists, and computer engineers from Princeton University [17]. In WordNet, nouns, verbs, adjectives and adverbs are grouped into sets of cognitive synonyms (synsets), each expressing a distinct concept. Synsets are interlinked by means of conceptual-semantic and lexical relations. In WordNet 3.0, there are 147,278 concept nodes, of which 70% are nouns. WordNet contains a variety of semantic relations, such as synonymy, antonymy, hypernymy, hyponymy and meronymy. The most common relations are hypernymy and hyponymy [18]. WordNet is an excellent lexicon for word similarity computation. The main similarity measures for WordNet include edge counting-based methods [

1], information theory-based methods [

19], Jiang and Conrath’s methods [

20], Lin’s methods [

21], Leacock and Chodorow’s methods [

22], Wu and Palmer’s methods [

23], and Patwardhan and Pedersen’s vector and vectorp methods [

24]. These measures obtain varying plausibility depending on the context and can be combined into ensembles to obtain higher plausibility [

25]. WordNet is created by linguists, and it includes most of the commonly used concepts. Nevertheless, it does not cover most of the terminologies in a specific discipline. For example, in geography, the terminologies of “phenology”, “foredune”, “regionalization”, “semi-arid climate”, “periglacial landform”, and so on are not recorded in the WordNet database. Thus, when we want to tackle issues where semantic relatedness matters in a concrete domain, that is, in geography, WordNet fails. HowNet is a generic lexical database in Chinese and English. It was created by Zhendong Dong [

26]. HowNet uses a markup language called KDML to describe the concept of words which facilitate computer processing [

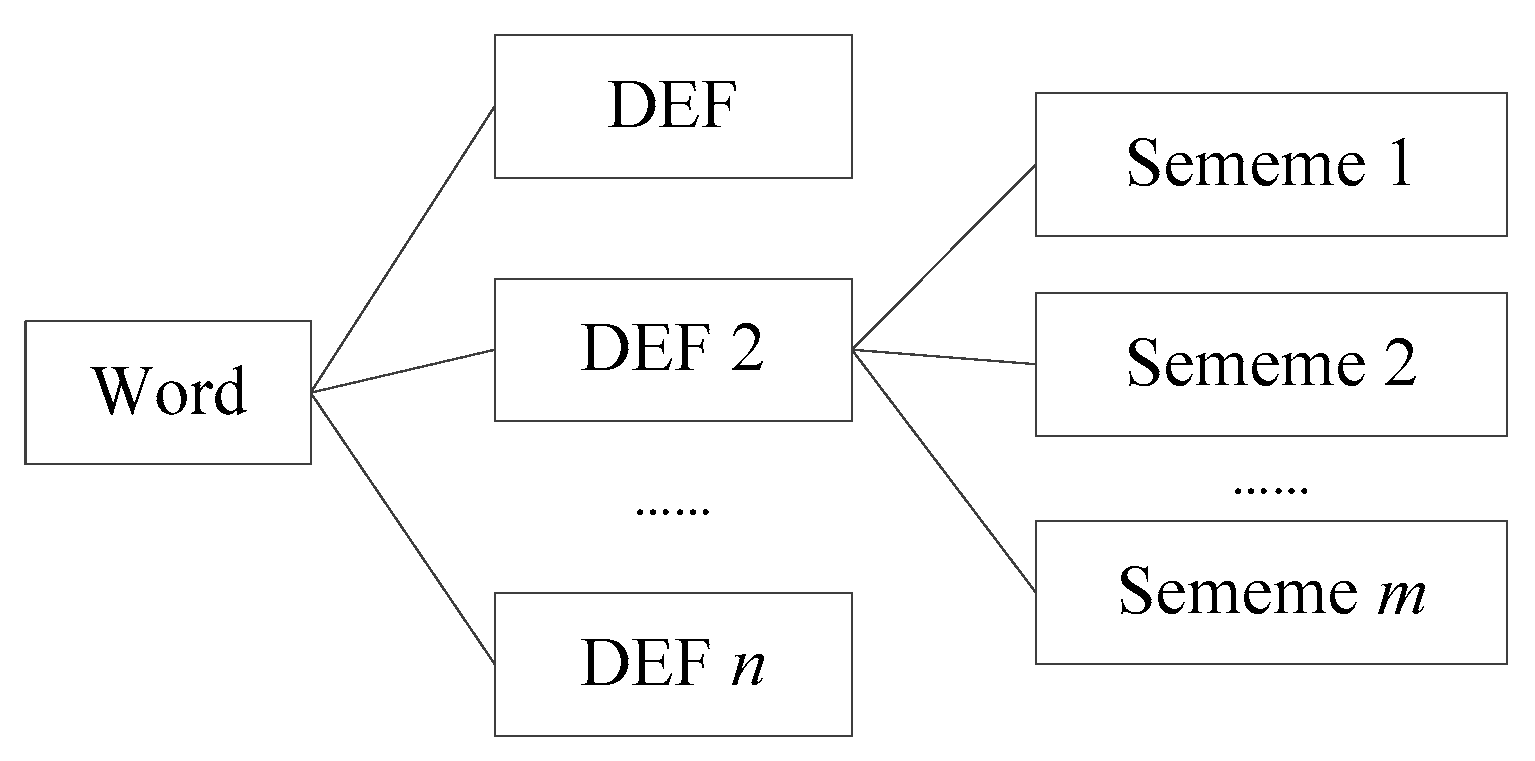

27]. There are more than 173,000 words in HowNet which are described by bilingual concept definition (DEF for short). A different semantic of one word has a different DEF description. A DEF is defined by a number of sememes and the descriptions of semantic relations between words. It is worth mentioning that a sememe is the most basic and the smallest unit which cannot be easily divided [

28], and the sememes are extracted from about 6000 Chinese characters [

29]. One word description in HowNet is shown in

Figure 2.

An example of one DEF of “fishing pole” can be described as follows:

In the example above, the words describing DEF, such as tool, catch, instrument, patient, and fish, are sememes. The description of DEF is then a tree-like structure, as shown in Figure 3. The relations between different sememes in DEF are described as a tree structure in the taxonomy document of HowNet.

To compute the similarity of Chinese words, Liu proposed an up-down algorithm on HowNet and achieved a good result [

28]. Li proposed an algorithm based on the hierarchic DEF descriptions of words on HowNet [

27]. Like WordNet, HowNet was created by linguists and computer engineers. It includes most commonly used concepts but cannot be used to compute the similarity of terminologies in a concrete domain.

In this article, we combine a generic lexical database with the thesaurus and present a new algorithm to compute the relatedness of any two terms in the Thesaurus for Geographic Sciences, so that similarity/relatedness can be applied in practice in geography by both English and Chinese users.

The remainder of this article is organized as follows. Section 2 surveys relevant literature on semantic similarity or relatedness and issues that exist in semantic similarity and relatedness measures. Section 3 details TLRM, the proposed Thesaurus Lexical Relatedness Measure based on WordNet or HowNet and the Thesaurus for Geographic Sciences. Subsequently, an empirical evaluation of the measure is presented and discussed in Section 4. Then, we apply the proposed measure to geospatial data retrieval in Section 5. Lastly, we conclude with a discussion and a summary of directions for future research in Section 6 and Section 7.

2. Background

A semantic similarity/relatedness measure aims at quantifying the similarity/relatedness of terms as a real number, typically normalized in the interval [0,1]. Researchers in different domains have proposed numerous semantic similarity/relatedness measures. These measures can be broadly categorized into two types: knowledge-based methods and corpus-based methods. Knowledge-based techniques utilize manually-generated artifacts as a source of conceptual knowledge, such as taxonomies or full-fledged ontologies [

3]. Under a structuralist assumption, most of these techniques observe the relationships that link the terms, assuming, for example, that the distance is inversely proportional to the semantic similarity [

1]. The corpus-based methods, on the other hand, do not require explicit relationships between terms and compute the semantic similarity of two terms based on their co-occurrence in a large corpus of text documents [

30,

31]. In terms of precision, the knowledge-based measures generally outperform the corpus-based ones [

32].

In this article, we only review the literature of semantic similarity/relatedness measures based on the thesaurus knowledge sources or in the context of GIScience.

As a sophisticated and widely used knowledge formalization tool, the thesaurus has been used for computing the semantic similarity/relatedness of terms. For example, Qiu and Yu [

33] devised a new thesaurus model and computed the conceptual similarity of the terms in a material domain thesaurus. In their thesaurus model, the terms with common super-class had been assigned a similarity value by domain experts. McMath et al. [

34] compared several semantic relatedness (Distance) algorithms for terms in the medical and computer science thesaurus to match documents to the queries of users. Rada et al. [

35] calculated the minimum conceptual distance for terms with hierarchical and non-hierarchical relations in EMTREE (The Excerpta Medica) thesaurus for ranking documents. Golitsyna et al. [

36] proposed semantic similarity algorithms which were based on a set-theoretical model using hierarchical and associative relationships for terms in a combined thesaurus. Han and Li [

37] quantified the relationships among the terms in a forestry thesaurus to compute the semantic similarity of forestry terms and built a semantic retrieval tool. Otakar and Karel [

38] computed the similarity of the concept “forest” from seven different thesauri. These approaches obtained good results when applied to improve information retrieval processes. However, they remain incomplete and inaccurate when used to compute the semantic similarity/relatedness of terms. Existing thesaurus-based semantic similarity/relatedness measures have three issues. The first is that the measures can only compute the semantic similarity/relatedness of two terms that are in the same term tree of a thesaurus. The semantic similarity/relatedness of terms in different term trees either cannot be computed or is directly assigned a value of 0, which is not consistent with the facts. For example, in Figure 1, the relatedness of the terms “macroclimate” and “polar climate”, which are in the same term tree, can be computed as 0.63 (an assumed value), but the semantic relatedness of “climate” and “fair weather” is directly assigned 0 according to the existing method [37]. The second issue is that the researchers have not evaluated their semantic similarity/relatedness results because no suitable benchmarks are available. The third issue is that some parameters of their algorithms have been taken directly from previous research. Hence, they may not represent the optimal values for their specific situation. These issues have hampered the practical application of semantic similarity/relatedness, such as in a large-scale Geographic Information Retrieval System.

For geographic information science, several semantic similarity/relatedness measures have been proposed. For example, Rodríguez and Egenhofer [

7] have extended Tversky’s set-theoretical ratio model in their Matching-Distance Similarity Measure (MDSM) [

39]. Schwering and Raubal [

40] proposed a technique to include spatial relations in the computation of semantic similarity. Janowicz et al. [

11] developed Sim-DL, a semantic similarity measure for geographic terms based on description logic (DL), a family of formal languages for the Semantic Web. Sunna and Cruz [

41] applied network-based similarity measures for ontology alignment. As such approaches rely on rich, formal definitions of geographic terminologies, it is almost impossible to build a large enough knowledge network required for a large-scale Geographic Information Retrieval System that covers most of the terminologies in the discipline of geography. Ballatore, Wilson, and Bertolotto [

42] explored graph-based measures of semantic similarity on the OpenStreetMap (OSM) Semantic Network. They [

43] have also outlined a Geographic Information Retrieval (GIR) system based on the semantic similarity of map viewport. In 2013, Ballatore et al. [

3] proposed a lexical definition semantic similarity approach using paraphrase-detection techniques and the lexical database WordNet based on volunteered lexical definitions which were extracted from the OSM Semantic Network. More recently, Ballatore et al. [

10] proposed a hybrid semantic similarity measure, the network-lexical similarity measure (NLS). The main limitation of these methods lies in the lack of a precise context for the computation of the similarity measure [

10], because the crowdsourcing geo-knowledge graph of the OpenStreetMap Semantic Network is not of high quality intrinsically in terms of knowledge representation and has limitations in coverage.

Therefore, in this article, we use the thesaurus as an expert-authored knowledge base which is relatively easy to construct, utilize a generic lexical database (hereafter, the generic lexical database refers to WordNet or HowNet) to interlink the term trees in the thesaurus and adopt a knowledge-based approach to compute the semantic relatedness of terminologies. Furthermore, a new baseline for the evaluation of the relatedness measure of geographic terminologies will be built and used to evaluate our measure. In this way, we can reliably compute the relatedness of any two terms recorded in the thesaurus by leveraging quantitative algorithms. In the next section, we will first provide details regarding the semantic relatedness algorithms.

4. Evaluation

The quality of a computational method for computing term relatedness can only be established by investigating its performance against human common sense [

6]. To evaluate our method, we have to compare the relatedness computed by our algorithms against what experts rated on a benchmark terminology set. There are many benchmark data sets used to evaluate similarity/relatedness algorithms, for example, that of Miller and Charles [

48], called M & C, that of Rubenstein and Goodenough [

49], called R & G, that of Finkelstein et al. [

50], called WordSimilarity-353, and that of Nelson et al. [

51], called WordSimilarity-1016, in Natural Language Processing. In the areas of GIScience, Rodríguez and Egenhofer [

7] collected similarity judgements about geographical terms to evaluate their Matching-Distance Similarity Measure (MDSM). Ballatore et al. [

2] proposed GeReSiD as a new open dataset designed to evaluate computational measures of geo-semantic relatedness and similarity. Rodríguez and Egenhofer’s similarity evaluation datasets and GeReSiD are gold standards for the evaluation of geographic term similarity or relatedness measures. However, their terms mostly concern geographic entities, such as “theater” and “stadium” or “valley” and “glacier”. They are not well suited to evaluating the relatedness of geography terminologies, such as “marine climate” and “polar climate”. We call Rodríguez and Egenhofer’s similarity evaluation data set and GeReSiD geo-semi-terminology evaluation data sets. We therefore need to build a geo-terminology evaluation dataset that can be used to evaluate relatedness measures of geography terminology.

This section presents the Geo-Terminology Relatedness Dataset (GTRD), a dataset of expert ratings that we have developed to provide a ground truth for assessment of the computational relatedness measurements.

4.1. Survey Design and Results

Given that terminologies deal with professional knowledge, we conducted the survey with geography experts. The geographic terms included in this survey are taken from the Thesaurus for Geographic Sciences. First, we selected term pairs from a single term tree; these were combinations of terms with different relationships, distances, depths, and local semantic densities. Then, other term pairs were selected from different term trees whose top terms had relatedness ranging from very high to very low; these were also combinations of terms with different relationships, distances, depths, and local semantic densities. We eventually selected a set of 66 terminology pairs for the relatedness rating questionnaire, which can be logically divided into two parts: 33 pairs were combinations of terms with different characteristics from the same and different term trees, and the other 33 pairs were combinations of terms whose characteristics duplicate those of the former. The 33 terminology pairs of the data set were composed of 8 high-relatedness pairs, 13 middle-relatedness pairs, and 12 low-relatedness pairs.

We sent emails attaching the questionnaires to 167 geography experts whose research interests included Physical Geography, Human Geography, Ecology, Environmental Science, Pedology, Natural Resources, and so on. The experts were asked to judge the relatedness of the meaning of 66 terminology pairs and rate them on a 5-point scale from 0 to 4, where 0 represented no relatedness of meaning and 4 perfect synonymy or the same concept. The ordering of the 66 pairs was randomly determined for each subject.

We received 53 responses. Two experts did not complete the task and another two provided invalid ratings, that is, their highest rating score was 5, which lies outside the 0 to 4 scale. These four responses were discarded. To detect unreliable and random answers, we computed Pearson’s correlation coefficient between every individual subject and the mean response value. Pearson’s correlation coefficient, also referred to as Pearson’s r or bivariate correlation, is a measure of the linear correlation between two variables X and Y. Generally, a Pearson’s r in the interval [0.8,1] indicates a strong positive correlation [52], so the responses with a Pearson’s correlation coefficient <0.8 were also removed from the data set to ensure the high reliability of the relatedness gold standard. Eight experts’ judgments were removed for this reason. Finally, 41 expert responses were utilized as the data source for our relatedness baseline.
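The rater screening described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the toy ratings, and the data layout (a dictionary from rater id to a list of scores) are assumptions, and a rater who gives the same score to every pair would need separate handling because the correlation is then undefined.

```python
import math


def pearson(x, y):
    """Pearson's r between two equally long sequences of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def filter_raters(ratings, threshold=0.8):
    """Keep raters whose scores correlate with the mean response at or above threshold.

    ratings maps a rater id to a list of scores, one per term pair.
    """
    n_pairs = len(next(iter(ratings.values())))
    mean_response = [
        sum(scores[i] for scores in ratings.values()) / len(ratings)
        for i in range(n_pairs)
    ]
    return {
        rater: scores
        for rater, scores in ratings.items()
        if pearson(scores, mean_response) >= threshold
    }


# Hypothetical ratings on the 0-4 scale for five term pairs.
toy_ratings = {
    "a": [0, 1, 2, 3, 4],
    "b": [0, 1, 2, 4, 4],
    "c": [1, 0, 2, 3, 4],
    "d": [4, 3, 0, 1, 0],
}
kept = filter_raters(toy_ratings)
```

In this toy example, rater "d" runs against the consensus and is dropped, while the other three are kept.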

Inter-Rater Reliability (IRR) refers to the relative consistency in ratings provided by multiple judges of multiple targets [53]. In contrast, Inter-Rater Agreement (IRA) refers to the absolute consensus in scores furnished by multiple judges for one or more targets [54]. Following LeBreton and Senter [55], who recommended using several indices for IRR and IRA to avoid the bias of any single index, we selected the following indices: Pearson’s $r$ [56], Kendall’s $\tau$ [57], and Spearman’s $\rho$ for IRR; and Kendall’s $W$ [58] and James, Demaree and Wolf’s $r_{WG(J)}$ [59] for IRA. Table 1 shows the values of the indices of IRR and IRA.

The indices of IRR and IRA in Table 1 indicate that the GTRD is highly reliable and that the geography experts are in strong agreement. The correlation is satisfactory and better than that of analogous surveys [2].

Given the set of terminology pairs and expert ratings, we computed the mean ratings of the 41 experts and normalized them to the interval [0,1] as relatedness scores (https://github.com/czgbjy/GTRD). The GTRD is shown in Table 2 and Table 3.
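As a small illustration of this step, the sketch below averages the ratings of each pair and rescales them to [0,1]. It assumes that normalization simply divides the mean by the top of the 0 to 4 scale; the text does not state the exact normalization, and the ratings shown are hypothetical.

```python
def relatedness_scores(ratings_by_pair, scale_max=4):
    """Average the expert ratings of each pair and rescale them to [0, 1].

    Assumption: ratings lie on a 0..scale_max scale and are normalized
    by dividing the mean by scale_max.
    """
    return {
        pair: sum(scores) / len(scores) / scale_max
        for pair, scores in ratings_by_pair.items()
    }


# Hypothetical ratings from four experts for two term pairs.
toy = {
    ("marine climate", "polar climate"): [3, 2, 3, 4],
    ("climate", "fair weather"): [1, 2, 1, 0],
}
scores = relatedness_scores(toy)
```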

4.2. Determination of Parameters

There are five parameters to be determined in our algorithms in Section 3. Determining their values is critically important. Many researchers have searched for the optimal values by traversing every combination of discrete equidistant levels of the parameters, which is feasible when the values of the parameters are known to lie in the interval [0,1]. However, in our research, we cannot ensure that four of the five parameters lie in the range [0,1]. As discussed in the previous section, GTRD consists of two parts: 33 pairs are terminologies with different semantic characteristics, that is, different distance, depth, and local semantic density, and in different term trees in the thesaurus; the remaining 33 pairs duplicate the characteristics of the former. Therefore, we can use the first 33 pairs of terminologies, which are called inversion pairs, to determine the values of the five parameters, and use the other 33, which are called evaluation pairs, to evaluate the algorithms. We build the relatedness equations on the inversion pairs, whose independent variables are the five parameters and whose dependent variables are the corresponding relatedness values from GTRD. Then, we use the ‘fsolve’ function in MATLAB to solve these equations. ‘fsolve’ is based on the Levenberg–Marquardt and trust-region methods [60,61]. The Levenberg–Marquardt algorithm (LMA or just LM), also known as the damped least-squares (DLS) method, is used to solve non-linear least-squares problems. The trust-region dogleg algorithm is a variant of the Powell dogleg method described in [62].
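To make the inversion idea concrete, the following sketch fits two parameters of a stand-in model to synthetic “inversion pairs” with a small damped least-squares loop in the spirit of Levenberg–Marquardt. Everything here is an assumption for illustration: the model `exp(-alpha * d) * tanh(beta * h)` is a hypothetical two-parameter form, not the five-parameter TLRM equations of Section 3, and the loop is a simplified pure-Python substitute for MATLAB's ‘fsolve’.

```python
import math


def model(d, h, alpha, beta):
    """Hypothetical relatedness model: decays with path distance d, grows with depth h."""
    return math.exp(-alpha * d) * math.tanh(beta * h)


def residuals(params, pairs):
    alpha, beta = params
    return [model(d, h, alpha, beta) - y for d, h, y in pairs]


def cost(params, pairs):
    return sum(r * r for r in residuals(params, pairs))


def fit_lm(pairs, start, iterations=100, eps=1e-6):
    """Damped least-squares fit of (alpha, beta) to (d, h, relatedness) triples."""
    p = list(start)
    lam = 1e-3  # damping factor: small = Gauss-Newton-like, large = short cautious steps
    for _ in range(iterations):
        r = residuals(p, pairs)
        # Forward-difference Jacobian: one column per parameter.
        cols = []
        for k in range(2):
            q = list(p)
            q[k] += eps
            rq = residuals(q, pairs)
            cols.append([(b - a) / eps for a, b in zip(r, rq)])
        a11 = sum(x * x for x in cols[0])
        a22 = sum(x * x for x in cols[1])
        a12 = sum(x * y for x, y in zip(cols[0], cols[1]))
        g1 = sum(x * e for x, e in zip(cols[0], r))
        g2 = sum(x * e for x, e in zip(cols[1], r))
        # Damped normal equations, solved in closed form for the 2x2 case.
        m11, m22 = a11 * (1 + lam), a22 * (1 + lam)
        det = m11 * m22 - a12 * a12
        if det == 0 or lam > 1e12:
            break
        step = [(-g1 * m22 + g2 * a12) / det, (-g2 * m11 + g1 * a12) / det]
        trial = [p[0] + step[0], p[1] + step[1]]
        if cost(trial, pairs) < cost(p, pairs):
            p, lam = trial, lam / 10  # accept the step and trust the model more
        else:
            lam *= 10  # reject the step and damp more strongly
    return p


# Synthetic "inversion pairs" generated from known parameters (0.2, 0.6).
pairs = [(d, h, model(d, h, 0.2, 0.6)) for d in range(1, 6) for h in range(1, 4)]
alpha, beta = fit_lm(pairs, start=(0.5, 0.5))
```

Because the synthetic targets are noise-free, the fit should return values close to the generating parameters; with real expert ratings the residuals would not vanish and the result would be a least-squares compromise.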

Finally, we obtain the optimal values of the five parameters, which are, in order, 0.2493, 18.723, 0.951, 4.158, and 0.275 for WordNet, and 0.2057, 11.102, 0.8, 4.112, and 0.275 for HowNet. The inversion pairs of GTRD are shown in Table 2.

To evaluate the performance of our measure, we compute the relatedness on the evaluation pairs using the optimal values of the five parameters. Then we obtain the tie-corrected Spearman’s correlation coefficient $\rho$ between the computed relatedness and the expert ratings. The correlation $\rho$ captures the cognitive plausibility of our computational approach, using the evaluation pairs as ground truth, where $\rho$ = 1 corresponds to perfect correlation, $\rho$ = 0 corresponds to no correlation, and $\rho$ = −1 corresponds to perfect inverse correlation. The results are shown in Table 4 for WordNet and HowNet.
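A tie-corrected Spearman coefficient can be obtained by assigning average ranks to tied values and then computing Pearson's r on the ranks. The sketch below shows this standard construction; the function names and the toy scores are illustrative assumptions, not values from GTRD.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Tie-corrected Spearman's rho: Pearson's r computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical computed relatedness versus expert scores for four pairs.
computed = [0.1, 0.4, 0.4, 0.9]
expert = [0.2, 0.5, 0.6, 0.8]
rho = spearman(computed, expert)
```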

The relatedness values generated by our Thesaurus–Lexical Relatedness Measure (TLRM) and the corresponding values from GTRD on the evaluation pairs are shown in Table 3.

The relatedness computed by TLRM is substantially consistent with expert judgements: the correlation coefficient lies in the interval [0.9,0.92]. Therefore, TLRM is suitable for computing semantic relatedness from a thesaurus-based knowledge source. In the next section, we apply the TLRM to a geospatial data sharing portal to improve the performance of data retrieval.

5. Application of TLRM

The National Earth System Science Data Sharing Infrastructure (NESSDSI, http://www.geodata.cn) is one of the national science and technology infrastructures of China. It provides one-stop scientific data sharing and open services. As of 15 November 2017, NESSDSI had shared 15,142 multidisciplinary data sets covering geography, geology, hydrology, geophysics, ecology, astronomy, and so on, and the website had received more than 21,539,917 page views.

NESSDSI utilizes the ISO19115-based metadata to describe geospatial datasets [

63]. Users search for the required data set via the metadata. The metadata of NESSDSI includes the data set title, data set language, a set of thematic keywords, abstract, category, spatial coverage, temporal coverage, format, lineage, and so on. The spatial coverage is represented by a geographic bounding box and a geographic description; parts of the metadata of a geospatial data set in NESSDSI are shown in Figure 4. All the metadata and data sets can be openly accessed. We extracted 7169 geospatial data sets and their metadata from NESSDSI and applied TLRM to realize the semantic retrieval of geospatial data. Then, we compared the retrieval results of semantic retrieval with those of traditional retrieval methods, which are mainly keyword-matching techniques.

In general, geographic information retrieval concerns the retrieval of thematically and geographically relevant information resources [

64,

65,

66]. A GIR facility must evaluate relatedness in terms of both theme and spatial location (temporal similarity is sometimes also evaluated, but this is less common). For geospatial data retrieval, users are required to type search words for a theme and a location. For example, when users search for the land use data set of San Francisco, they usually type a keyword pair (“land use”, “San Francisco”) into a geospatial data sharing website. Hence, we devise the following algorithm to retrieve geospatial data:

$$MS = \omega_t \cdot R_t + \omega_g \cdot R_g$$

where $R_t$ is the thematic relatedness between the theme words the user typed and the thematic keywords of the geospatial data, $R_g$ is the geographical relatedness between the locations the user typed and the spatial coverage of the geospatial data, and $MS$ is the Matching Score (MS) between the data set the user desired and the geospatial data set from NESSDSI. In addition, $\omega_t$ and $\omega_g$ are weights in the interval [0,1], with $\omega_t + \omega_g = 1$, that can be determined by using the weight measurement method (WMM) of the analytic hierarchy process (AHP) (hereafter referred to as AHP-WMM) [67]. The detailed steps of AHP-WMM are as follows. First, we establish a pairwise comparison matrix of the relative importance of all factors that affect the same upper-level goal. Then, domain experts assign pairwise comparison scores using a 1–9 preference scale. The normalized eigenvector of the pairwise comparison matrix is regarded as the weight of the factors. If the number of factors is more than two, a consistency check is required; the check is passed when the consistency ratio (CR) is less than 0.1. The weights $\omega_t$ and $\omega_g$ calculated by AHP-WMM are 0.667 and 0.333, respectively.
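The AHP-WMM steps can be illustrated with a short sketch that extracts the normalized principal eigenvector of a pairwise comparison matrix by power iteration. The comparison score of 2 (theme judged twice as important as location) is an assumption chosen because it reproduces the reported weights of 0.667 and 0.333; the actual expert scores are not given in the text. The `matching_score` helper likewise assumes that the Matching Score is the weighted sum of the thematic and geographical relatedness.

```python
def ahp_weights(matrix, iterations=50):
    """Normalized principal eigenvector of a pairwise comparison matrix (power iteration)."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        w = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(w)
        w = [v / total for v in w]
    return w


def matching_score(theme_rel, geo_rel, w_theme, w_geo):
    """Assumed form of the Matching Score: weighted sum of the two relatedness values."""
    return w_theme * theme_rel + w_geo * geo_rel


# Hypothetical comparison: theme is judged twice as important as location.
comparison = [
    [1.0, 2.0],
    [0.5, 1.0],
]
w_theme, w_geo = ahp_weights(comparison)
example_ms = matching_score(0.8, 1.0, w_theme, w_geo)
```

With only two factors the comparison matrix is always consistent, which is why no consistency check is needed in this case.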

Recall is the ratio of the number of retrieved relevant records to the total number of relevant records in the database. Precision is the ratio of the number of retrieved relevant records to the total number of retrieved irrelevant and relevant records. We will compare the recall and precision between the traditional keyword-matching technique and the semantic retrieval technique based on the TLRM proposed in this article.
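The two definitions above translate directly into set operations. In this sketch the record identifiers are hypothetical, and returning 0.0 for an empty denominator is a convention chosen here rather than something the text specifies.

```python
def recall(retrieved, relevant):
    """Share of the relevant records that were retrieved."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(set(retrieved) & relevant) / len(relevant)


def precision(retrieved, relevant):
    """Share of the retrieved records that are relevant."""
    retrieved = set(retrieved)
    if not retrieved:
        return 0.0
    return len(retrieved & set(relevant)) / len(retrieved)


# Hypothetical data set ids: four retrieved, three relevant, two in common.
retrieved_ids = {1, 2, 3, 4}
relevant_ids = {2, 3, 5}
r = recall(retrieved_ids, relevant_ids)
p = precision(retrieved_ids, relevant_ids)
```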

A traditional GIR search engine based on a keyword-matching technique matches the theme and location keywords typed by users against the thematic keywords and the geospatial coverage description of the geospatial metadata, respectively. If both are successfully matched, the thematic relatedness and the geographical relatedness are both equal to 1, and MS is equal to 1. Otherwise, MS is equal to 0.

Our semantic retrieval techniques match not only the theme keywords typed by the user against those of the geospatial metadata, but also the synonyms, hyponyms, and hypernyms of the typed theme keywords, as well as the synonyms of those hyponyms and hypernyms, in order to search for geospatial data sets within a larger range. At the same time, the relatedness between the typed theme keyword and each of its expansions is computed by our TLRM and used as the thematic relatedness. For the sake of simplicity, we again match the location keywords against the geospatial coverage description of the geospatial metadata: if the location keywords the user typed match the geospatial coverage description, the geographical relatedness is equal to 1; otherwise, it is 0. A data set is retrieved only when both its thematic relatedness and its geographical relatedness are greater than 0.
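The retrieval procedure can be sketched as below. This is an illustrative toy under several assumptions: the `related_terms` dictionary stands in for the expansions and relatedness values that TLRM would produce, the record layout and substring location match are hypothetical, the Matching Score is taken to be the weighted sum of the two relatedness values, and a record is returned only when both values are positive.

```python
def semantic_search(theme, location, records, related_terms,
                    w_theme=0.667, w_geo=0.333):
    """Rank records by an assumed weighted Matching Score.

    related_terms maps an expanded term to its relatedness with the typed
    theme (a stand-in for TLRM output); the typed theme itself scores 1.0.
    """
    candidates = {theme: 1.0, **related_terms}
    results = []
    for rec in records:
        # Best relatedness among the record's thematic keywords.
        theme_rel = max((candidates.get(k, 0.0) for k in rec["keywords"]), default=0.0)
        # Simplified location match: 1 if the typed place appears in the coverage text.
        geo_rel = 1.0 if location in rec["coverage"] else 0.0
        if theme_rel > 0 and geo_rel > 0:
            score = round(w_theme * theme_rel + w_geo * geo_rel, 3)
            results.append((score, rec["id"]))
    return sorted(results, reverse=True)


# Hypothetical metadata records and relatedness values.
records = [
    {"id": 1, "keywords": ["natural resources"], "coverage": "China"},
    {"id": 2, "keywords": ["land resources"], "coverage": "China"},
    {"id": 3, "keywords": ["water resources"], "coverage": "Japan"},
]
expansions = {"land resources": 0.8, "water resources": 0.8}
ranked = semantic_search("natural resources", "China", records, expansions)
```

A plain keyword match would return only record 1 here; the expanded search also returns record 2, ranked lower because its thematic relatedness is below 1.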

Based on the experiment devised above, we typed the 30 most frequently used keyword pairs, derived from the user access logs of NESSDSI, to search the 7169 geospatial data sets. In 2017, the users of NESSDSI typed 60,085 keywords, and these 30 keyword pairs were used 19,164 times. We then computed the Recall and Precision of each keyword pair for the traditional keyword-matching technique and for our semantic retrieval techniques. The 30 keyword pairs and their Recall and Precision are listed in Table 5.

Among the 30 queries in Table 5, the recall of 18 queries was improved by our semantic retrieval techniques, while it was unchanged for the other 12; the precision of 20 queries was unchanged, while it increased for 3 queries and decreased for 7. According to these results for the 30 most frequently used queries of NESSDSI, our semantic retrieval techniques improve the recall of geospatial data retrieval in most situations but decrease the precision in a minority of queries. Moreover, our semantic retrieval techniques can rank the retrieval results. For example, the retrieval results of <‘Natural resources’, ‘China’> for the two retrieval methods are shown in Table 6 and Table 7.

It is obvious that semantic retrieval techniques based on TLRM are more capable of discovering geospatial data. The semantic retrieval techniques can find the data sets of subtypes of natural resources, such as the land resources, energy resources, water resources, and biological resources data sets in this example, though some of the data sets (ID: 6–8) are not the correct results. Furthermore, using the TLRM we can quantify the Matching Score between the query of users and the geospatial data. The retrieved geospatial data sets can be ranked by matching scores. With the ranked results users can find data of interest more quickly.

7. Conclusions

In this article, we described the thesaurus–lexical relatedness measure (TLRM), a measure that captures the relatedness of any two terms in the Thesaurus for Geographic Sciences. We interlinked the term trees in the thesaurus and quantified the relations between terms. We built a new evaluation baseline for geo-terminology semantic relatedness and evaluated the cognitive plausibility of the TLRM, obtaining high correlations with expert judgements. Finally, we applied the TLRM to geospatial data retrieval and improved recall to some extent, according to the evaluation results for the 30 most frequently used queries of the NESSDSI.

We combined a generic lexical database with a professional controlled vocabulary and proposed new algorithms to compute the relatedness of any two terms in the thesaurus. Our algorithms are suitable not only for geography but also for other disciplines.

Although the TLRM obtained a high cognitive plausibility, some limitations remain to be addressed in future research. Despite the fact that there are more than 10,000 terminologies in the thesaurus, we cannot guarantee that all geographic terminologies have been included. Automatically and continually adding unrecorded geographic terminologies to the thesaurus database remains a challenge to be addressed. Furthermore, there are only three types of relationships between terminologies in a thesaurus. This is both an advantage and a disadvantage. The advantage is that it is relatively easy to build a thesaurus covering most of the terminologies in a discipline. The disadvantage is that three kinds of relationships are not sufficient to precisely represent the relationships between geographic terminologies. Geo-knowledge triples may be alternatives in the future. In addition, we evaluated the TLRM on its ability to simulate expert judgements on the entire range of semantic relatedness, that is from very related to very unrelated concepts. Given that no cognitive plausibility evaluation is fully generalizable, robust evidence can only be constructed by cross-checking different evaluations. For example, complementary indirect evaluations could focus on specific relatedness-based tasks, such as word sense disambiguation.

In the next study, we will share and open the thesaurus database to the public and any geography expert can add terms to or revise the database. We will render it a consensual geographic knowledge graph built via expert crowdsourcing. At the same time, we will continue to find the optimal functions to further improve the cognitive plausibility of TLRM and cross-check different evaluations. We are planning to build a geography semantic sharing network and share the relatedness measure interface with all users.