Visual-LiDAR Odometry Aided by Reduced IMU

Abstract

1. Introduction

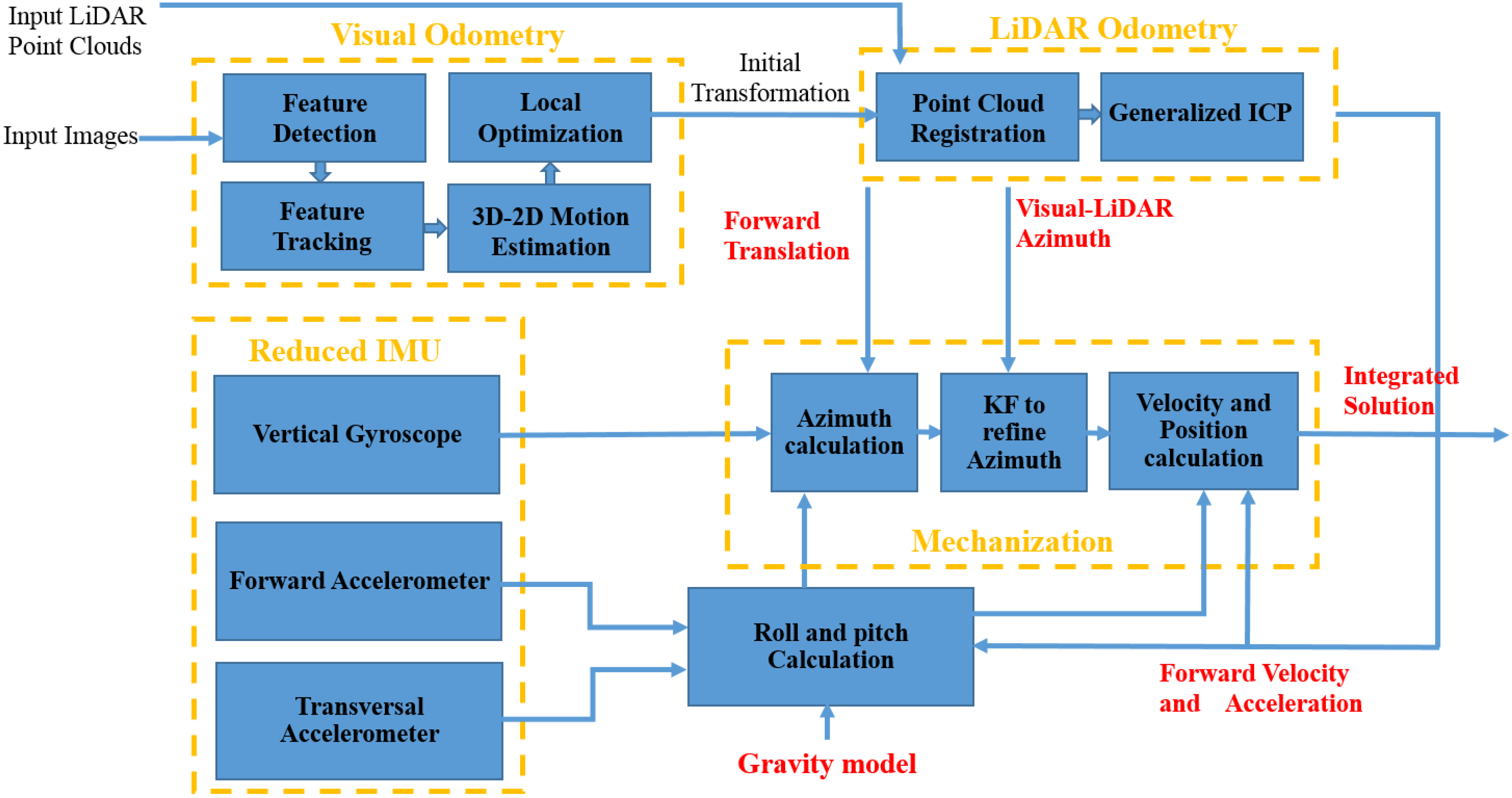

2. Previous Works

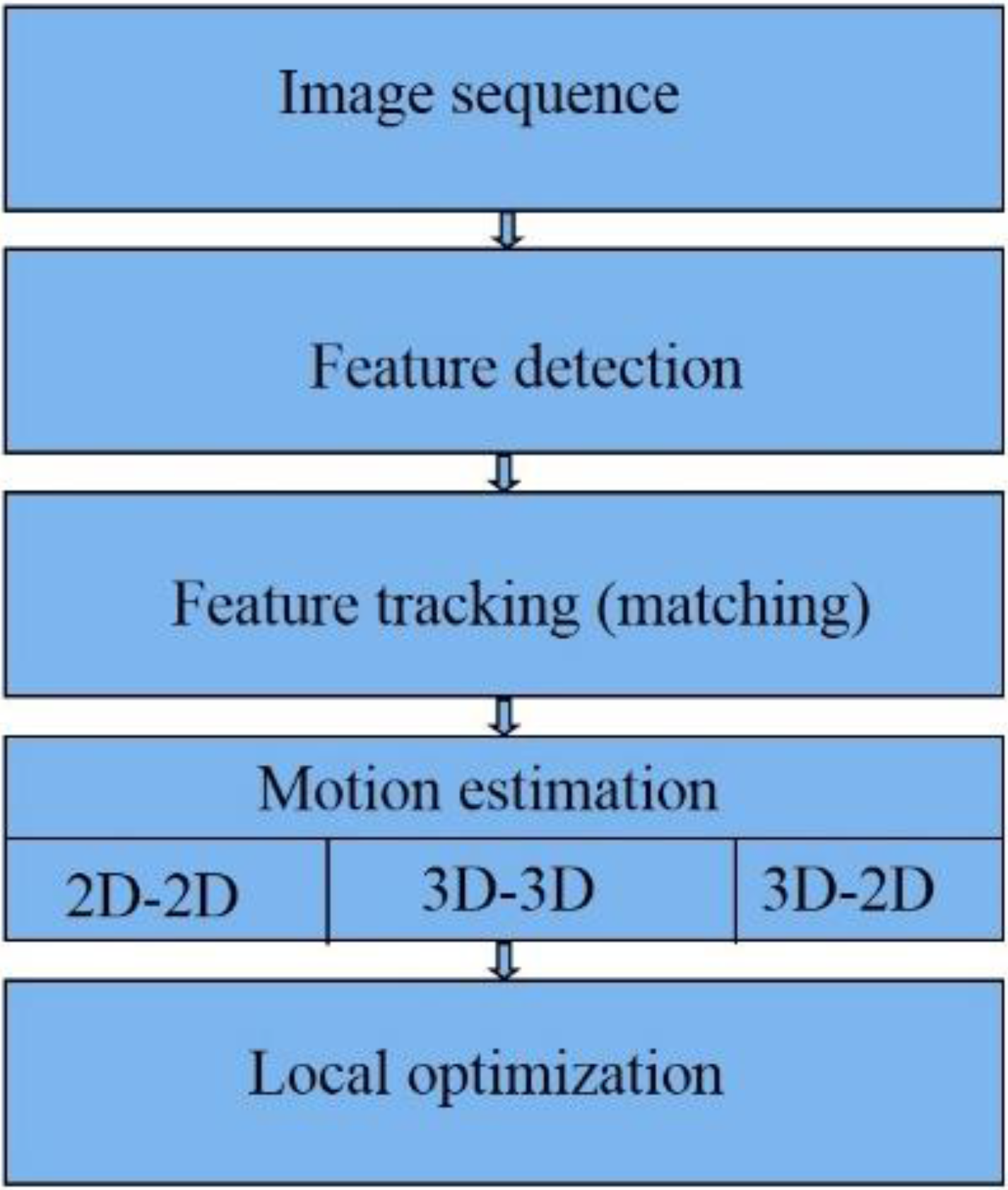

2.1. Visual Odometry

- (1) There is sufficient illumination in the environment.

- (2) Static objects dominate over moving objects in the image.

- (3) There is enough texture to allow apparent motion to be extracted.

- (4) There is sufficient scene overlap between consecutive frames.
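
Assumptions (1), (3) and (4) can be screened per frame with simple image statistics. The sketch below is illustrative only: the function `check_vo_assumptions` and its thresholds are hypothetical and are not part of the method described in this paper. Assumption (2) is not tested, because it is usually handled during motion estimation itself, for example by RANSAC-based outlier rejection as in Kitt et al.

```python
import numpy as np


def check_vo_assumptions(curr_img, n_tracked, n_detected,
                         min_mean=40.0, min_grad=10.0, min_overlap=0.5):
    """Screen one grayscale frame against VO assumptions (1), (3) and (4).

    All thresholds are hypothetical defaults for 8-bit images, not tuned values.
    Assumption (2) (static scene dominance) is not tested here.
    """
    img = np.asarray(curr_img, dtype=np.float64)

    # (1) Illumination: mean grey level of the frame.
    illumination_ok = img.mean() >= min_mean

    # (3) Texture: mean image-gradient magnitude (central differences).
    gy, gx = np.gradient(img)
    texture_ok = np.hypot(gx, gy).mean() >= min_grad

    # (4) Overlap: fraction of features detected in the previous frame
    # that are still tracked in this one.
    overlap = n_tracked / n_detected if n_detected > 0 else 0.0
    overlap_ok = overlap >= min_overlap

    return {"illumination": bool(illumination_ok),
            "texture": bool(texture_ok),
            "overlap": bool(overlap_ok)}
```

For example, a uniformly dark frame fails both the illumination and the texture check regardless of how many features are tracked.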

2.2. LiDAR Odometry

2.3. Sensor Integration

3. Methodology

4. Results and Analysis

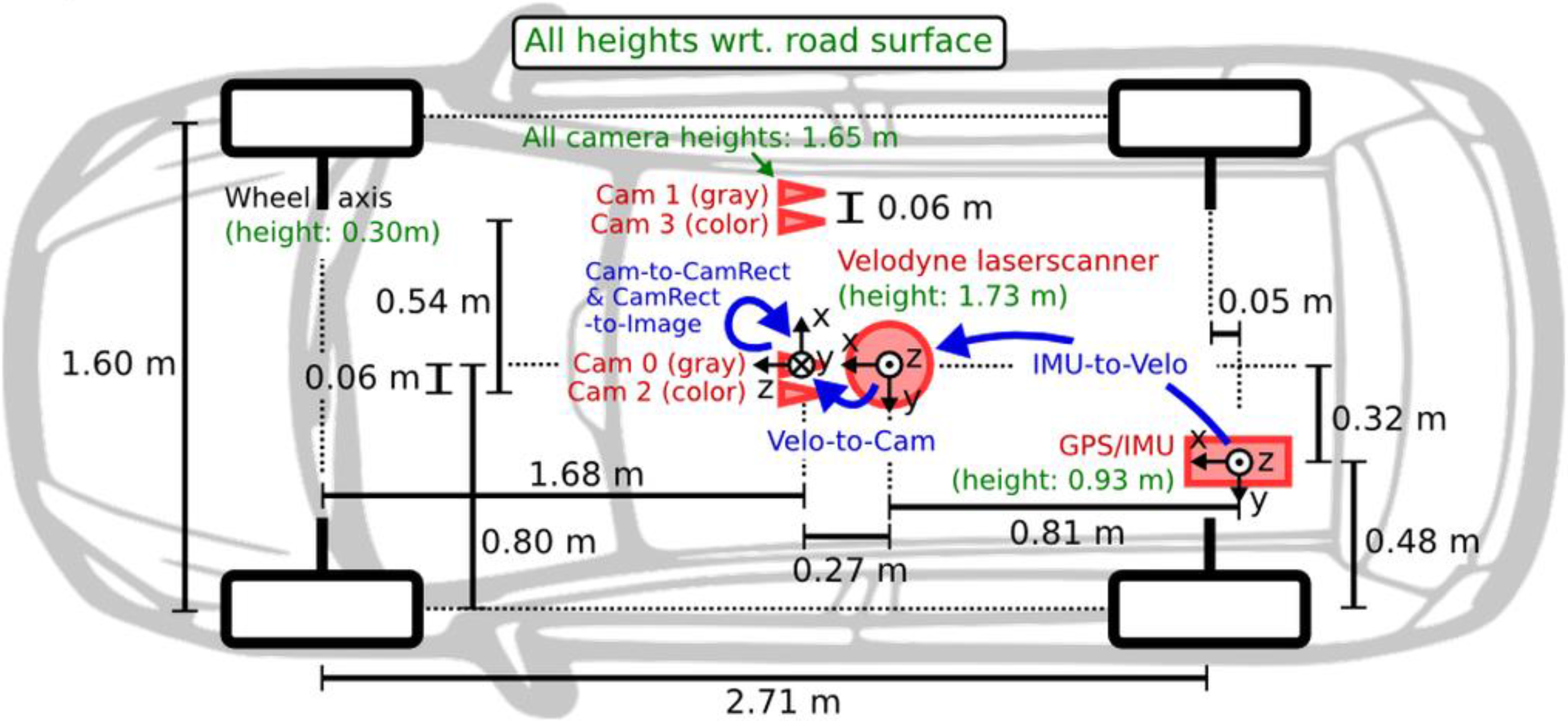

4.1. Data Description

- GPS/IMU: OXTS RT 3003

- Laser scanner: Velodyne HDL-64E

- Grayscale cameras, 1.4 Megapixels: Point Grey Flea 2 (FL2-14S3M-C)

- Color cameras, 1.4 Megapixels: Point Grey Flea 2 (FL2-14S3C-C)
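
The sensor suite listed above matches the KITTI recording platform (Geiger et al., 2013). Assuming the data are in the KITTI raw layout, each Velodyne scan is a flat binary file of little-endian float32 records (x, y, z, reflectance), so a scan can be loaded as sketched below. The helper name `load_velodyne_scan` and its optional `max_range` crop are illustrative, not part of the paper's processing chain.

```python
import numpy as np


def load_velodyne_scan(path, max_range=None):
    """Load one Velodyne scan stored in the KITTI raw binary layout.

    Assumes little-endian float32 records of (x, y, z, reflectance), as in
    KITTI's velodyne_points/data/*.bin files. `max_range` (metres) is an
    optional, illustrative crop on Euclidean range from the sensor.
    """
    scan = np.fromfile(path, dtype="<f4").reshape(-1, 4)
    if max_range is not None:
        ranges = np.linalg.norm(scan[:, :3], axis=1)
        scan = scan[ranges <= max_range]
    return scan
```

Cropping distant returns is one common way to limit the sparse, noisy far-range points of a 64-beam scanner before registration; whether and where to crop is a design choice left open here.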

4.2. Sensor Calibration

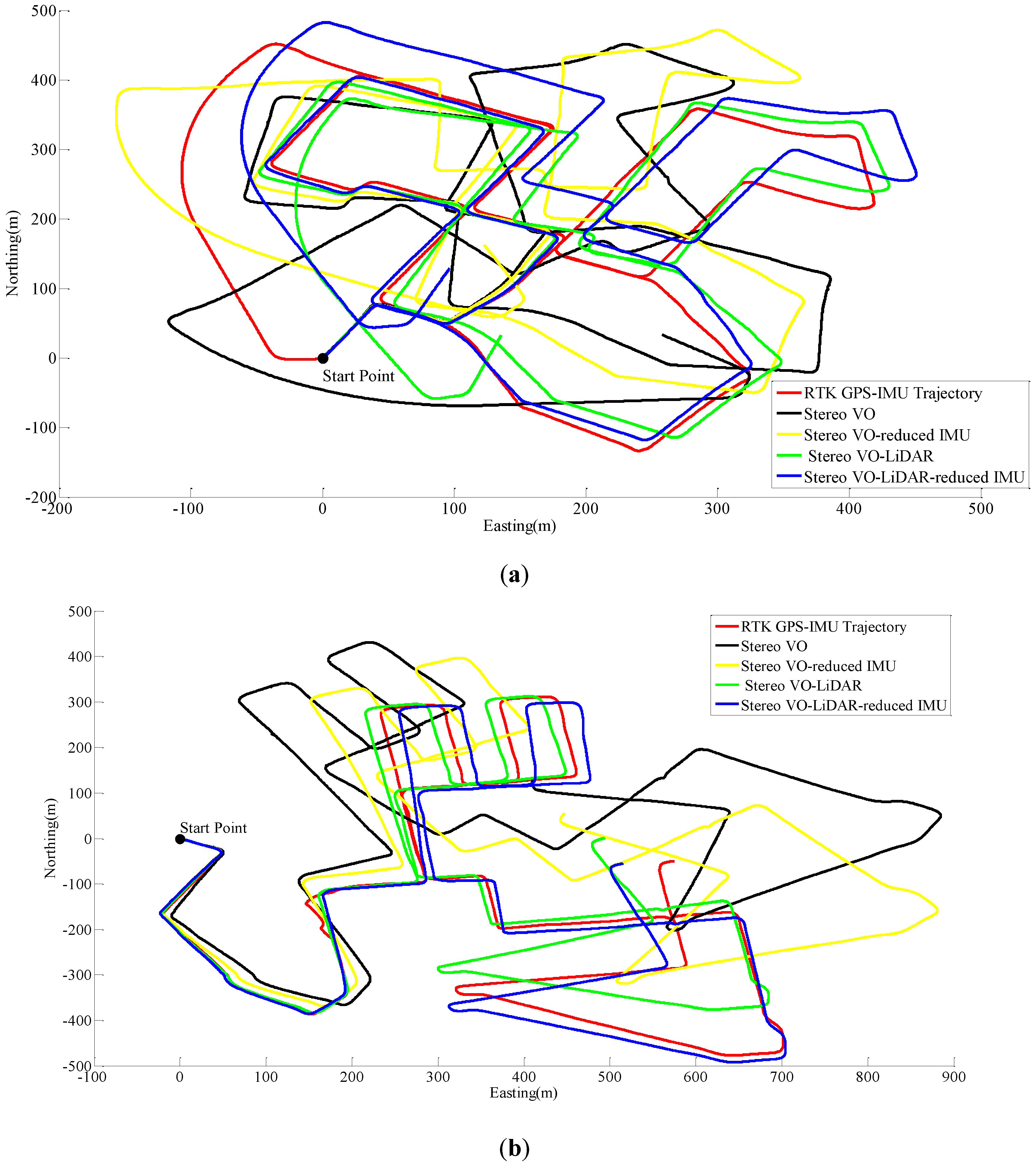

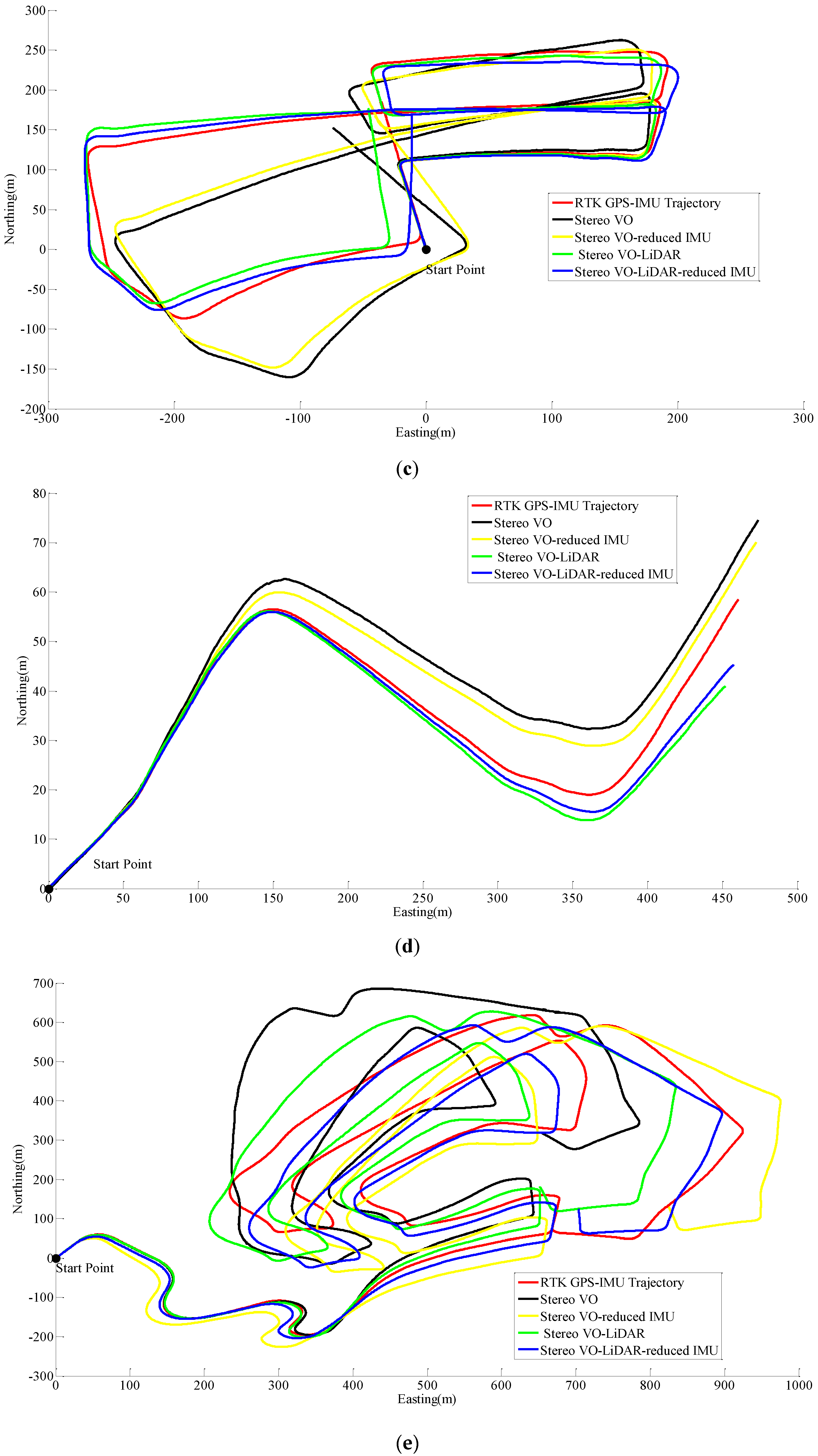

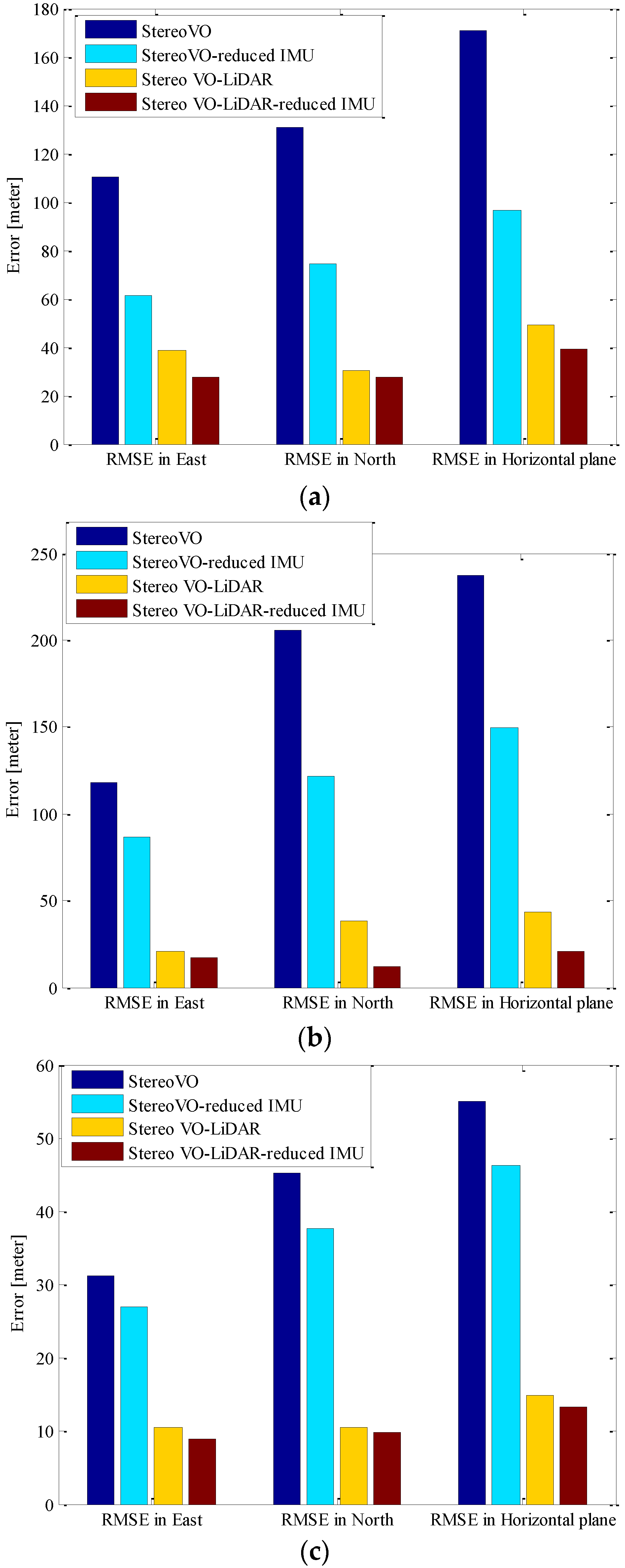

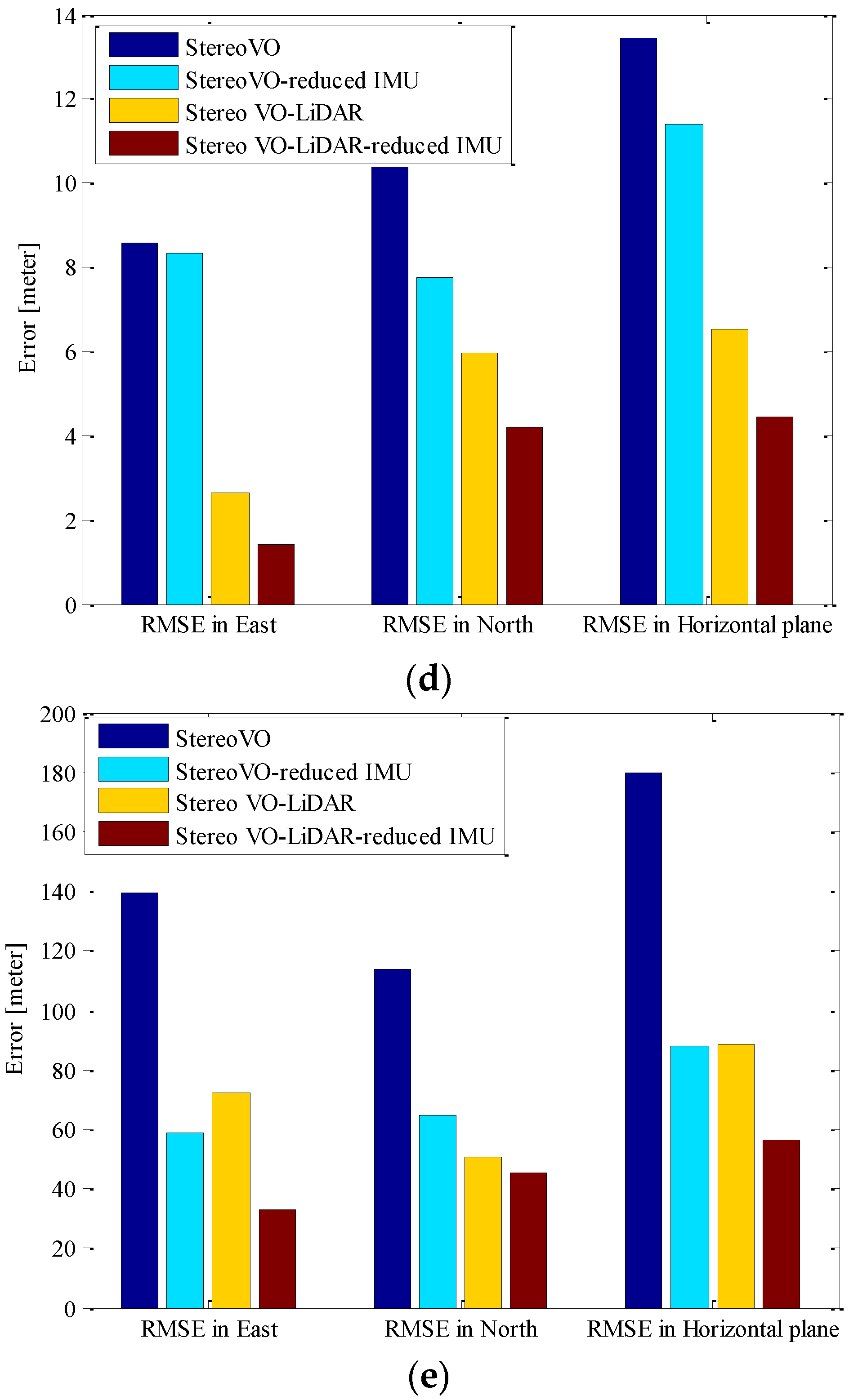

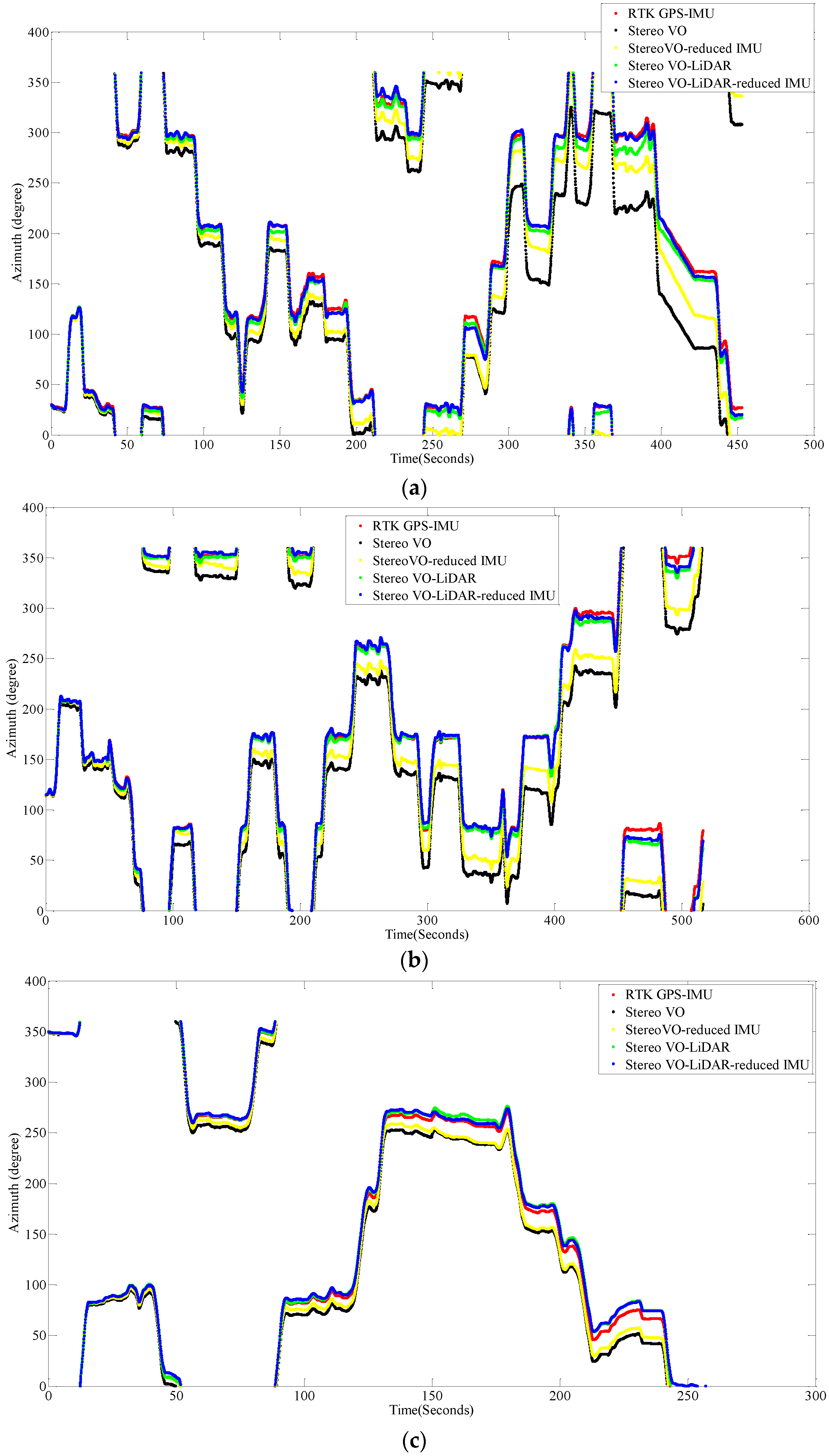

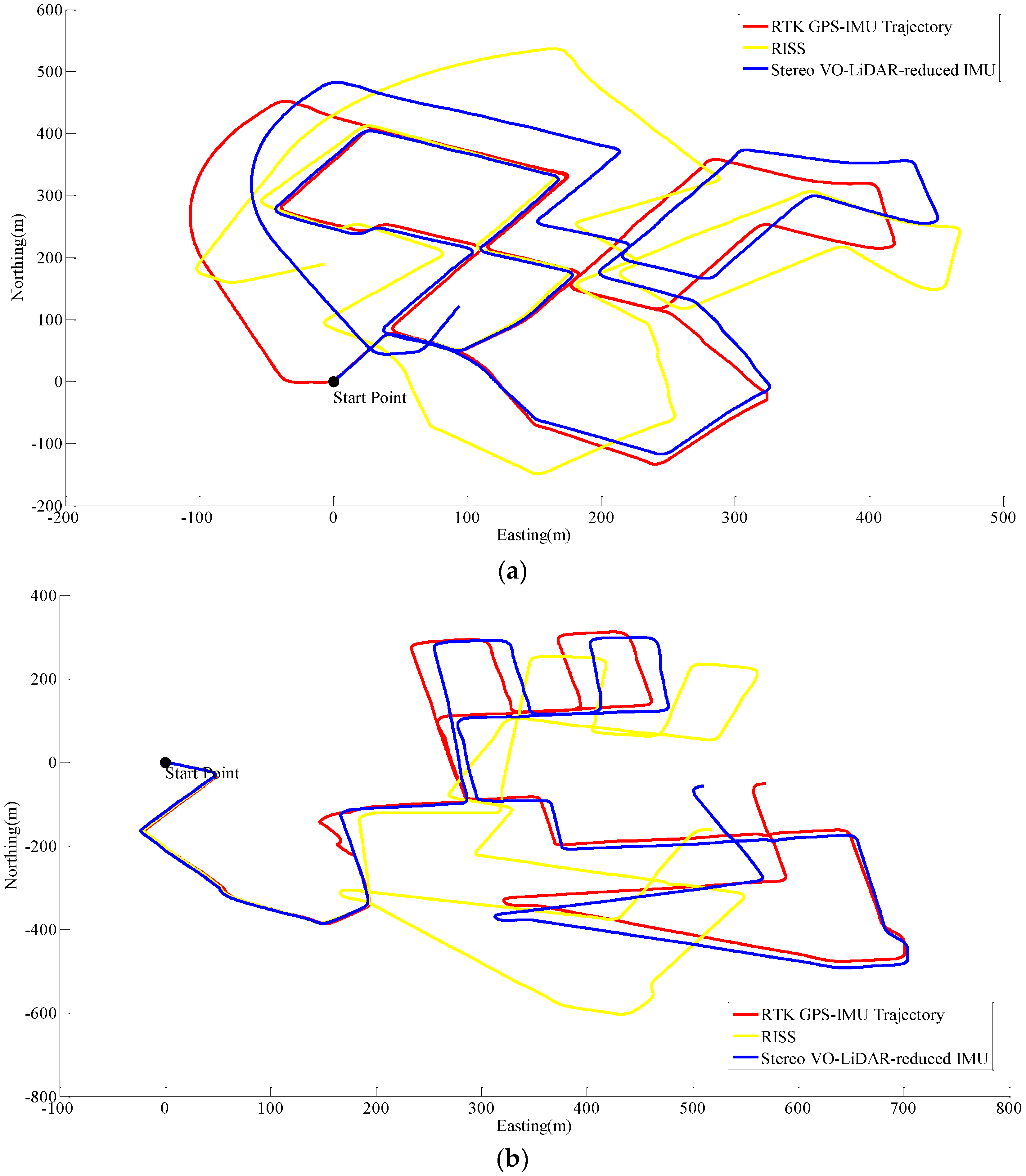

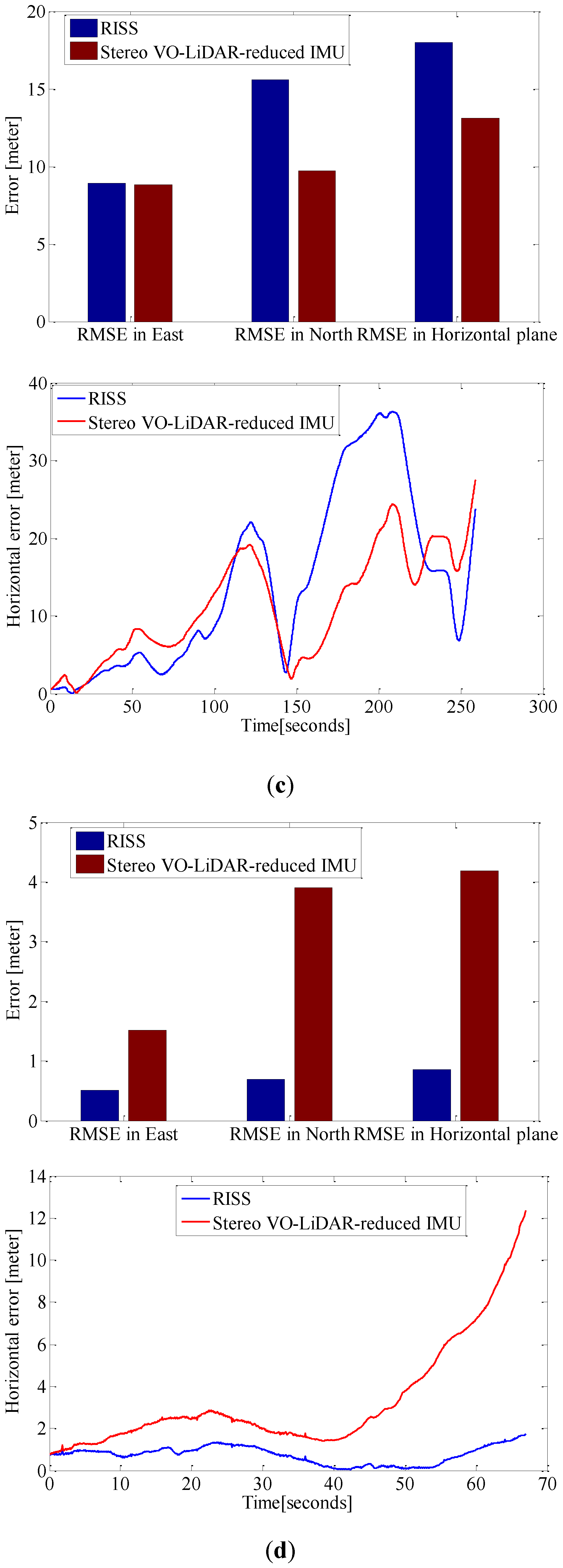

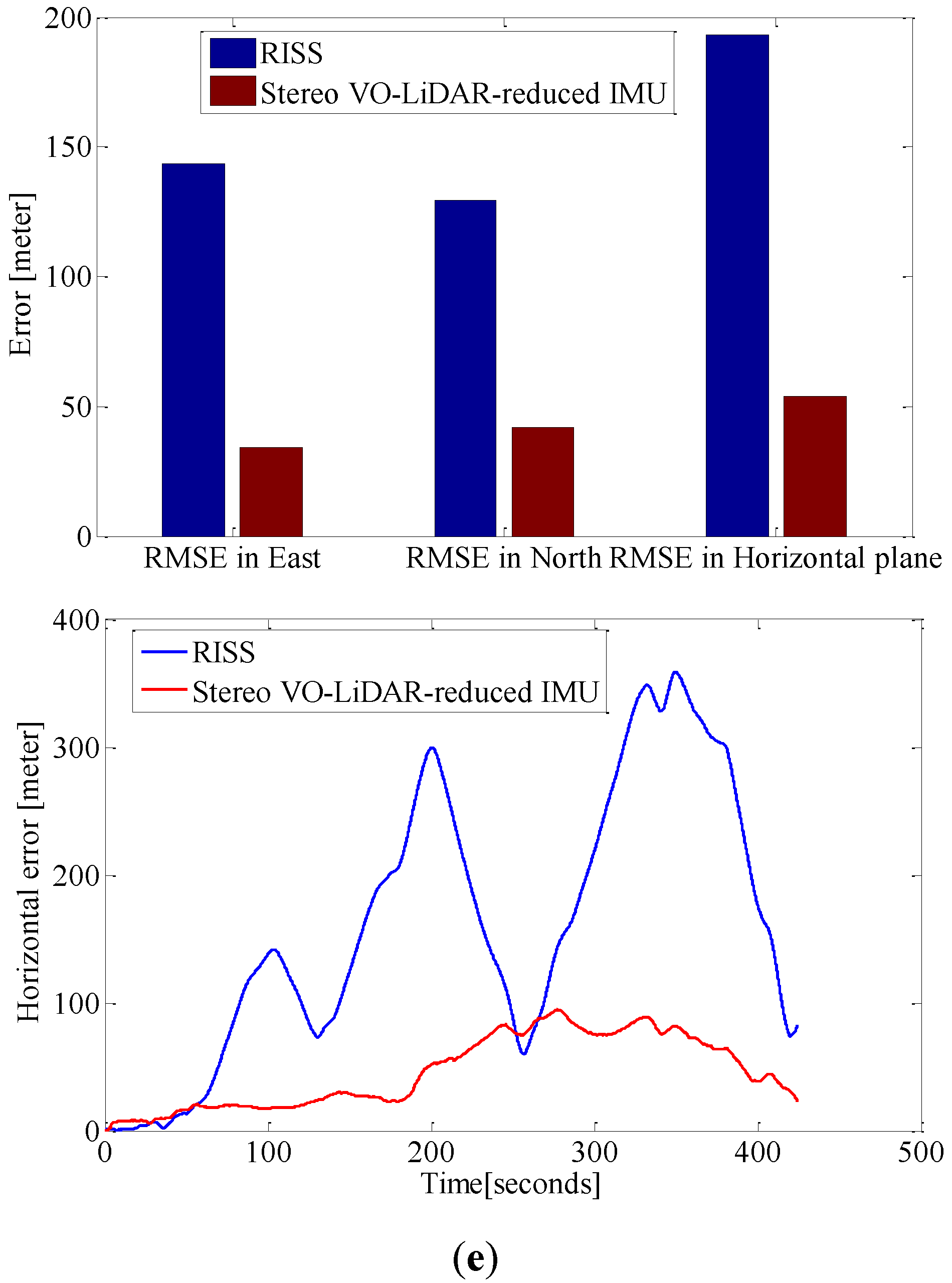
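
With KITTI-style calibration, a homogeneous LiDAR point x in Velodyne coordinates maps to image coordinates of camera i via y = P_rect^(i) R_rect^(0) T_velo^cam x (Geiger et al., 2013), where the 3×3 rectifying rotation and the 3×4 Velodyne-to-camera transform are padded to 4×4. The sketch below assumes that convention; the function and argument names are hypothetical.

```python
import numpy as np


def project_velo_to_image(pts_velo, P_rect, R_rect, Tr_velo_to_cam):
    """Project (N, 3) LiDAR points to pixel coordinates (u, v).

    Follows the KITTI convention y = P_rect @ R_rect @ Tr_velo_to_cam @ x,
    with R_rect (3x3) and Tr_velo_to_cam (3x4) padded to 4x4.
    Points behind the camera (z <= 0 after the transform) are dropped.
    """
    n = pts_velo.shape[0]
    x_h = np.hstack([pts_velo, np.ones((n, 1))]).T   # (4, N) homogeneous points

    R = np.eye(4)
    R[:3, :3] = R_rect
    T = np.eye(4)
    T[:3, :4] = Tr_velo_to_cam

    cam = R @ T @ x_h                                 # rectified camera frame
    cam = cam[:, cam[2] > 0]                          # keep points in front

    y = P_rect @ cam                                  # (3, M) image-plane coords
    return (y[:2] / y[2]).T                           # (M, 2) pixel coordinates
```

With KITTI raw data, `P_rect` would be `P_rect_02` for the left color camera, `R_rect` would be `R_rect_00`, and `Tr_velo_to_cam` would be assembled from the `R` and `T` entries of `calib_velo_to_cam.txt`.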

4.3. Sensor Integration Results and Analysis

Relative horizontal position error of visual odometry (VO), VO aided by reduced IMU (VO-RI), visual-LiDAR odometry (VO-L), and visual-LiDAR odometry aided by reduced IMU (VO-L-RI).

| Dataset No. | Dist. | VO | VO-RI | VO-L | VO-L-RI |
|---|---|---|---|---|---|
| dataset #27 | 3705 m | 4.62% | 2.60% | 1.33% | 1.05% |
| dataset #28 | 4110 m | 5.77% | 3.64% | 1.06% | 0.51% |
| dataset #18 | 2025 m | 2.71% | 2.29% | 0.73% | 0.65% |
| dataset #61 | 485 m | 2.78% | 2.34% | 1.34% | 0.91% |
| dataset #34 | 4509 m | 3.99% | 1.94% | 1.96% | 1.25% |
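
The table can be condensed with a little arithmetic. The sketch below assumes that the relative horizontal position error is the horizontal position error divided by the distance travelled (the table does not state the normalization explicitly). Under that assumption it converts the percentages to metres, computes how much VO-L-RI reduces the VO error, and forms a distance-weighted error for each configuration. All helper names are illustrative.

```python
# Relative horizontal position error (%) per dataset, transcribed from the table.
# Each entry: (distance travelled in m, {configuration: error in %}).
RESULTS = {
    "#27": (3705, {"VO": 4.62, "VO-RI": 2.60, "VO-L": 1.33, "VO-L-RI": 1.05}),
    "#28": (4110, {"VO": 5.77, "VO-RI": 3.64, "VO-L": 1.06, "VO-L-RI": 0.51}),
    "#18": (2025, {"VO": 2.71, "VO-RI": 2.29, "VO-L": 0.73, "VO-L-RI": 0.65}),
    "#61": (485, {"VO": 2.78, "VO-RI": 2.34, "VO-L": 1.34, "VO-L-RI": 0.91}),
    "#34": (4509, {"VO": 3.99, "VO-RI": 1.94, "VO-L": 1.96, "VO-L-RI": 1.25}),
}


def error_in_metres(dist_m, err_pct):
    """Horizontal error in m, assuming the % is relative to distance travelled."""
    return dist_m * err_pct / 100.0


def reduction_pct(baseline, improved):
    """Relative reduction of the error from baseline to improved, in %."""
    return 100.0 * (baseline - improved) / baseline


def distance_weighted_error(config):
    """Summed error (m) over summed distance (m) across all datasets, in %."""
    total_err = sum(error_in_metres(d, e[config]) for d, e in RESULTS.values())
    total_dist = sum(d for d, _ in RESULTS.values())
    return 100.0 * total_err / total_dist


if __name__ == "__main__":
    for name, (dist, errs) in RESULTS.items():
        print(f"{name}: VO {error_in_metres(dist, errs['VO']):.1f} m -> "
              f"VO-L-RI {error_in_metres(dist, errs['VO-L-RI']):.1f} m "
              f"({reduction_pct(errs['VO'], errs['VO-L-RI']):.1f}% lower)")
    for config in ("VO", "VO-RI", "VO-L", "VO-L-RI"):
        print(f"{config}: {distance_weighted_error(config):.2f}% (distance-weighted)")
```

Read directly off the table, VO-L-RI has the lowest error on all five datasets, and on dataset #34 the reduced IMU alone (VO-RI, 1.94%) performs about as well as adding LiDAR (VO-L, 1.96%).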

5. Conclusions

6. Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Milella, A.; Siegwart, R. Stereo-based ego-motion estimation using pixel tracking and iterative closest point. In Proceedings of the IEEE International Conference on Computer Vision Systems, New York, NY, USA, 4–7 January 2006; p. 21.

- Hong, S.; Ko, H.; Kim, J. VICP: Velocity updating iterative closest point algorithm. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 1893–1898.

- Hong, S.; Ko, H.; Kim, J. Improved motion tracking with velocity update and distortion correction from planar laser scan data. In Proceedings of the International Conference on Artificial Reality and Telexistence, 1–3 December 2008; pp. 315–318.

- Zhang, J.; Singh, S. Visual-lidar odometry and mapping: Low-drift, robust, and fast. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2174–2181.

- Noureldin, A.; Karamat, T.B.; Georgy, J. Fundamentals of Inertial Navigation, Satellite-Based Positioning and Their Integration; Springer: Berlin, Germany, 2013.

- Kitt, B.; Geiger, A.; Lategahn, H. Visual odometry based on stereo image sequences with RANSAC-based outlier rejection scheme. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 486–492.

- Scaramuzza, D.; Fraundorfer, F. Tutorial: Visual odometry. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- Steffen, R. Visual SLAM from Image Sequences Acquired by Unmanned Aerial Vehicles. Ph.D. Thesis, University of Bonn, Bonn, Germany, 2009.

- Weiss, S. Visual SLAM in Pieces; ETH Zurich: Zurich, Switzerland, 2009.

- Hosseinyalamdary, S.; Yilmaz, A. Motion vector field estimation using brightness constancy assumption and epipolar geometry constraint. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, II–1, 9–16.

- Sarvrood, Y.B.; Gao, Y. Analysis and reduction of stereo vision alignment and velocity errors for vision navigation. In Proceedings of the 27th International Technical Meeting of The Satellite Division of the Institute of Navigation, San Diego, CA, USA, 27–29 January 2014; pp. 3254–3262.

- Holz, D.; Ichim, A.E.; Tombari, F.; Rusu, R.B.; Behnke, S. Registration with the point cloud library PCL A modular framework for aligning 3D point clouds. IEEE Robot. Autom. Mag. 2015, 22, 110–124.

- Biber, P.; Strasser, W. The normal distributions transform: A new approach to laser scan matching. IEEE Int. Conf. Intell. Robot. Syst. 2003, 3, 2743–2748.

- Low, K. Linear Least-Squares Optimization for Point-to-Plane ICP Surface Registration; The University of North Carolina at Chapel Hill: Chapel Hill, NC, USA, 2004.

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. Robot. Sci. Syst. 2009, 5, 168–176.

- Sirtkaya, S.; Seymen, B.; Alatan, A. Loosely coupled Kalman filtering for fusion of Visual Odometry and inertial navigation. In Proceedings of the 2013 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013; pp. 219–226.

- Corke, P.; Lobo, J.; Dias, J. An introduction to inertial and visual sensing. Int. J. Rob. Res. 2007, 26, 519–535.

- Qian, G.; Chellappa, R.; Zheng, Q. Robust structure from motion estimation using inertial data. J. Opt. Soc. Am. A 2001, 18, 2982.

- Kleinert, M.; Schleith, S. Inertial aided monocular SLAM for GPS-denied navigation. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Salt Lake City, UT, USA, 5–7 September 2010; pp. 20–25.

- Nützi, G.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Fusion of IMU and vision for absolute scale estimation in monocular SLAM. J. Intell. Robot. Syst. Theory Appl. 2011, 61, 287–299.

- Schmid, K.; Hirschmuller, H. Stereo vision and IMU based real-time ego-motion and depth image computation on a handheld device. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 4671–4678.

- Konolige, K.; Agrawal, M.; Sola, J. Large scale visual odometry for rough terrain. Proc. Int. Symp. Robot. Res. 2007, 2, 1150–1157.

- Tardif, J.P.; George, M.; Laverne, M.; Kelly, A.; Stentz, A. A new approach to vision-aided inertial navigation. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 4161–4168.

- Strelow, D. Motion estimation from image and inertial measurements. Int. J. Rob. Res. 2004, 23, 1157–1195.

- Scherer, S.; Rehder, J.; Achar, S.; Cover, H.; Chambers, A.; Nuske, S.; Singh, S. River mapping from a flying robot: State estimation, river detection, and obstacle mapping. Auton. Robots 2012, 33, 189–214.

- Jekeli, C. Inertial Navigation Systems with Geodetic Applications; DE GRUYTER: Berlin, Germany, 2001.

- Szeliski, R. Computer vision: Algorithms and applications. Computer (Long. Beach. Calif). 2010, 5, 832.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Rob. Res. 2013, 32, 1231–1237.

- Geiger, A.; Moosmann, F.; Car, O.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

- Hosseinyalamdary, S.; Balazadegan, Y.; Toth, C. Tracking 3D moving objects based on GPS/IMU navigation solution, laser scanner point cloud and GIS data. ISPRS Int. J. Geo-Inform. 2015, 4, 1301–1316.

- Meng, Y. Improved positioning of land vehicle in ITS using digital map and other accessory information. Ph.D. Thesis, Hong Kong Polytechnic University, Hong Kong, 2006.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Balazadegan Sarvrood, Y.; Hosseinyalamdary, S.; Gao, Y. Visual-LiDAR Odometry Aided by Reduced IMU. ISPRS Int. J. Geo-Inf. 2016, 5, 3. https://doi.org/10.3390/ijgi5010003