Abstract

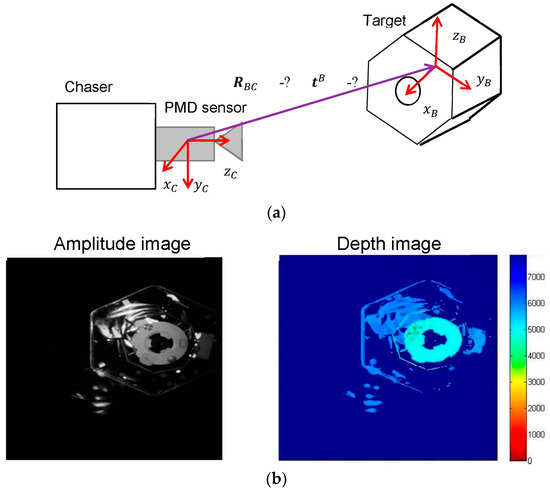

This paper presents a pose estimation routine for tracking the attitude and position of an uncooperative tumbling spacecraft during close-range rendezvous. The key innovation is the use of a Photonic Mixer Device (PMD) sensor, for the first time in space proximity operations, to track the pose of the uncooperative target. Compared with existing flash Light Detection and Ranging (LiDAR) sensors, this sensor has lower power consumption and higher resolution. In addition, the PMD sensor provides two different measurements at the same time: depth information (point cloud) and the amplitude of the reflected signal, which generates a grayscale image. In this paper, a hybrid model-based navigation technique that employs both measurements is proposed. The principal pose estimation technique is the Iterative Closest Point (ICP) algorithm with reverse calibration, which relies on the depth image. The second technique is an image processing pipeline that generates a set of 2D-to-3D feature correspondences between the amplitude image and the spacecraft model, followed by the Efficient Perspective-n-Points (EPnP) algorithm for pose estimation. In this way, we gain a redundant estimate of the target’s current state in real time without hardware redundancy. The proposed navigation methodology is tested in the European Proximity Operations Simulator of the German Aerospace Center (DLR). The hybrid navigation technique is shown to provide robust pose estimation of an uncooperative tumbling target under severe illumination conditions. In particular, the EPnP-based technique makes it possible to overcome the limitations of the primary technique when harsh illumination conditions arise.

1. Introduction

Space rendezvous is a key operation required in all the missions involving more than one spacecraft [1]. Considering the problem of space debris removal and future On-Orbit Servicing (OOS) missions, the autonomous close-range approach of a controlled chaser spacecraft towards a target spacecraft is a key-enabling phase in both activities. In order to autonomously navigate to the target, the chaser spacecraft must estimate the pose (i.e., position and orientation) of the target spacecraft regularly during the maneuver.

Autonomous navigation is not only important in space applications, but is also a key capability for mobile robots in urban, pedestrian and industrial environments. These robots can perform various tasks with high precision in the most severe environments, where humans are not able to complete the required work [2]. The main challenge in autonomous navigation for mobile robots is to simultaneously map the environment and keep track of the robot’s pose and location [3]. For this purpose, many distinctive visual navigation techniques that use various visual sensors for map building and robot localization [4,5,6] have been developed and tested in the last decade.

Concerning space activities and especially the topic on which we are concentrating in this article, Autonomous Rendezvous and Docking (RvD), several autonomous navigation techniques that employ visual-based sensors for motion tracking were applied and tested during real space missions. Examples of such missions are the ETS-VII experiment [7], Orbital Express [8], RvD of the Automated Transfer Vehicle (ATV) to the International Space Station and the Hubble Space Telescope (HST) Servicing Mission 4 (SM4) [9]. In these missions the target spacecraft was always cooperative, since its attitude was stabilized, it communicated its state to the chaser and it was equipped with dedicated visual markers for relative pose estimation.

The difference between the aforementioned in-orbit rendezvous and the close-range approach in future OOS and debris removal missions is the uncooperativeness of the target spacecraft: it is not equipped with dedicated visual markers for distance and attitude estimation, and it may tumble around its principal axes. For this reason, new technologies must be developed for the real-time estimation of the pose of the uncooperative target, which represents the key challenge during the autonomous close-range approach. Due to the lack of visual markers, the general approach for uncooperative pose estimation is to extract the most important patterns of the target’s body from the sensor measurements and fit the known shape of the target to the detected features [10]. In general, extracting meaningful features and computing the pose in real time is a challenging task, since the detected pattern may vary frame by frame due to the tumbling motion of the target. Moreover, the task is further complicated by the harsh illumination conditions that may occur during the maneuver. By harsh illumination conditions we mean excessive levels of sunlight, which oversaturate and distort some pixels of the images. In fact, strong direct sunlight can be reflected by the satellite’s highly reflective surfaces covered with Multi-Layer Insulation (MLI) and saturate the sensor’s pixels, which then produce corrupted measurements. Such unfavorable illumination conditions may also arise suddenly due to the uncontrolled motion of the target spacecraft. At the other extreme, complete darkness occurs regularly during each orbit. This situation requires the use of infrared sensors such as the Light Detection And Ranging (LiDAR) sensor.

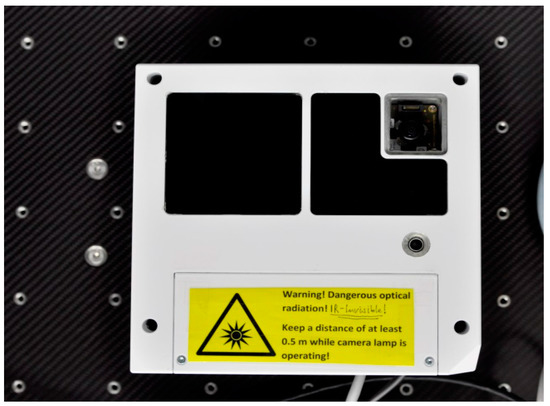

Different types of visual sensors have been considered and tested for the close-range pose estimation of an uncooperative target spacecraft, viz. LiDAR [11], monocular camera [12,13,14], stereo camera [15,16] and time-of-flight sensors based on Photonic Mixer Device (PMD) technology [17,18]. LiDAR sensors have high requirements in terms of mass and power, but ensure robustness in any illumination condition as they employ reflected infrared (IR) signals. However, in some particular attitude configurations this signal can be reflected multiple times on the target’s surfaces and thus generate incorrect features in the acquired point cloud [8]. Furthermore, the resolution of the depth measurements must be gradually reduced during the approach to ensure rapid pose estimation in proximity of the target [19]. In contrast, monocular pose estimation guarantees rapid estimation of the target pose with low mass and power requirements. This approach is robust to reflected features on the target’s surface, but suffers from the harsh illumination conditions of the space environment. Compared with the aforementioned technologies, cameras based on PMD technology have important advantages, such as lower mass and power requirements than LiDARs and the capability to provide both depth measurements and low-resolution 2D images obtained from the reflected signal [17,20]. Due to these advantages, new techniques for uncooperative pose estimation based on PMD measurements have been developed and tested recently. Tzschichholz [18] demonstrated the capability of the PMD sensor to provide raster depth images at a high rate, with partial loss of depth data when working under strong illumination. In most cases, the real-time pose estimation of the target spacecraft produced accurate results.

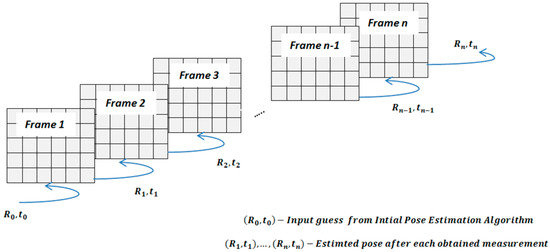

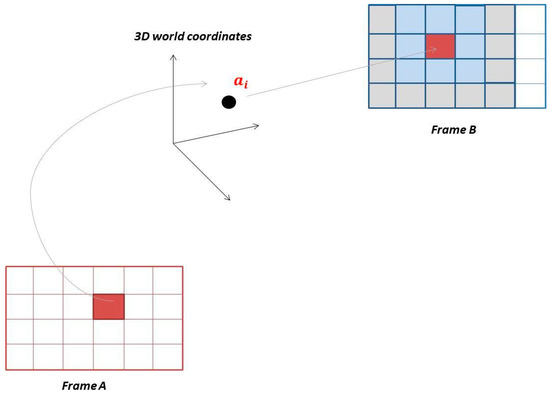

In this paper we further investigate the application of the PMD sensor to uncooperative pose estimation and tracking during close-range space rendezvous approaches. We propose a novel navigation technique capable of estimating, even under harsh illumination conditions and with a (partial) loss of depth data, the six degrees-of-freedom (DoF) relative pose of the target spacecraft during the close rendezvous phase from 10 to 1 m using the measurements from the PMD sensor. The key feature of the proposed architecture is its hybrid nature, meaning that it employs two different model-based pose estimation techniques to ensure robustness to any illumination condition. The first method estimates the pose of the target spacecraft from the depth measurements using the Iterative Closest Point (ICP) algorithm with a reverse calibration technique. The second method consists of an image processing procedure that extracts low-level features of the target spacecraft from the 2D amplitude images and estimates the current target pose by fitting the projection of the known 3D model to the detected features.

The main contribution of this research is the combination of two different pose estimation techniques for redundant real-time estimation of the target’s state vector without hardware redundancy. To do this, the ICP-based pose estimation with depth images is used as the primary approach, and the pose estimation with amplitude images is employed as a back-up in case the primary approach fails. This ensures applicability and robustness to any illumination condition during the maneuver.
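The primary/back-up arrangement described above can be sketched as a small selection rule. This is only an illustrative sketch: the paper does not state how a failure of the ICP-based estimate is detected, so the `residual` score, the correspondence count and both thresholds (`max_residual`, `min_correspondences`) are hypothetical placeholders, as are the names `PoseEstimate` and `select_pose`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PoseEstimate:
    source: str                               # "icp" (depth image) or "epnp" (amplitude image)
    position_m: Tuple[float, float, float]    # relative position
    attitude_deg: Tuple[float, float, float]  # relative attitude
    residual: float                           # hypothetical fit-quality score, lower is better
    n_correspondences: int                    # number of matched points used by the solver


def select_pose(icp: Optional[PoseEstimate],
                epnp: Optional[PoseEstimate],
                max_residual: float = 0.05,
                min_correspondences: int = 100) -> Optional[PoseEstimate]:
    """Return the primary ICP estimate if it looks healthy, else the EPnP back-up.

    The health check (residual and correspondence thresholds) is an assumption
    made for this sketch; it is not taken from the paper.
    """
    if (icp is not None
            and icp.residual <= max_residual
            and icp.n_correspondences >= min_correspondences):
        return icp
    return epnp  # may be None if the back-up is unavailable as well
```

In such a scheme both estimates come from the same PMD frame, so switching between them requires no additional hardware.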

The proposed navigation system was tested in the European Proximity Operations Simulator (EPOS 2.0) [21] at the German Aerospace Center (DLR), which allows real-time simulation of close-range proximity operations under realistic space illumination conditions. We tested each of the designed algorithms for estimating the relative position and attitude of the satellite mockup in order to compare their robustness and accuracy and to identify the cases in which each of them is applicable.

The paper is organized as follows: Section 2 states the pose estimation problem for an uncooperative target spacecraft during proximity operations and describes the PMD sensor in detail. Section 3 illustrates the navigation algorithms. Section 4 provides the results and analyses of the experimental campaign carried out at EPOS. Finally, Section 5 concludes the paper.

4. Results

The proposed hybrid navigation technique was tested offline on two datasets of images recorded in two different rendezvous scenarios simulated in DLR’s EPOS laboratory. The overall positioning accuracy of the EPOS facility is 1.56 mm (position) and 0.2 deg (orientation) [21]. The algorithms proposed in this paper were implemented in C++, using the OpenCV library [40] for straight line and central circle detection.

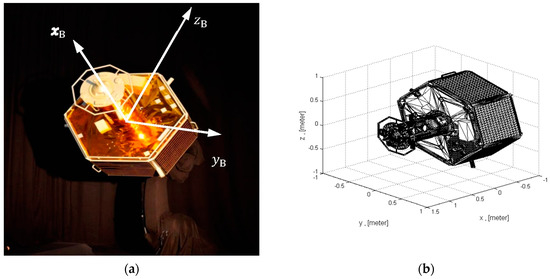

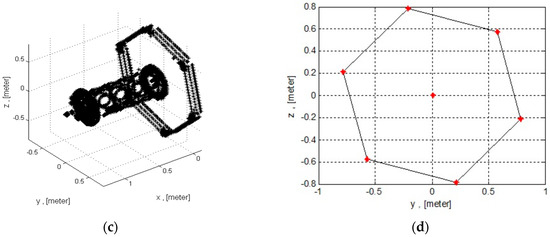

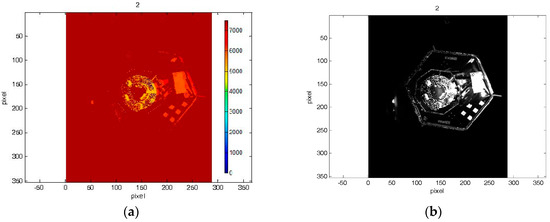

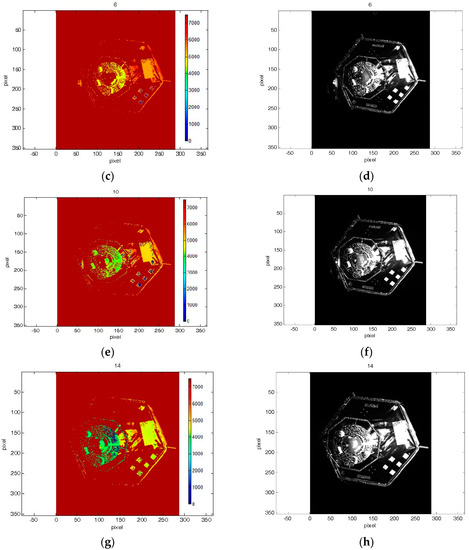

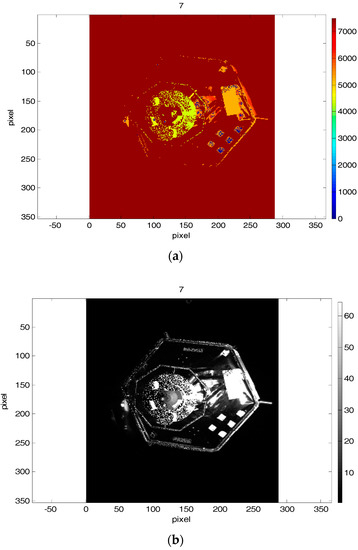

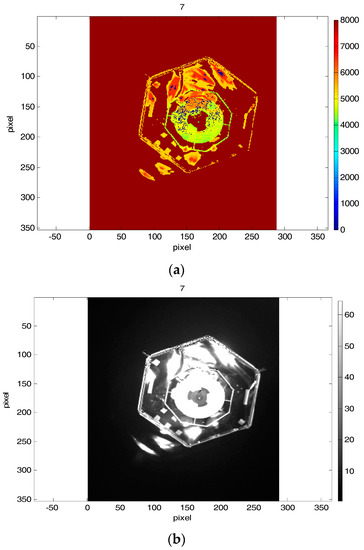

In the first scenario, we considered a frontal approach at a certain angle to an attitude-stabilized target. Figure 8 shows the first dataset of depth and amplitude images acquired during this simulated maneuver. In the second scenario, we considered a straight frontal approach to a target tumbling about its X axis (see Figure 4) at a rate of 5 deg/s. The second dataset of depth and amplitude images acquired during this maneuver is shown in Figure 9. The initial distance for both approach scenarios was 7 m. Each dataset consists of 20 images. For every test image acquired by the simulator, the ground-truth relative pose between the camera and the mockup was logged by EPOS.

Figure 8.

(a–h) Some depth and amplitude images from the first dataset.

Figure 9.

(a–h) Some depth and amplitude images from the second dataset.

Figure 8 and Figure 9 highlight the fact that amplitude and depth images acquired at the same instants present some differences. In most cases, the amplitude image shows most of the visible contours, whereas the depth image contains no information about some of the edges.

The experimental campaign considered in this paper is as follows. The pose estimation using the ICP algorithm with reverse calibration (explained in detail in Section 3.2.1 and Section 3.2.2) is conducted first. The image processing procedure, which is described in Section 3.3.1 and Section 3.3.2, is then run on the amplitude images. After processing the results obtained from the depth images, we show how the redundant algorithm with the amplitude images can (partly) compensate for, or provide more accurate estimates of the complete pose than, the algorithm with the depth images when the latter yields an inaccurate pose.

4.1. ICP-Based Pose Estimation from PMD Depth Images

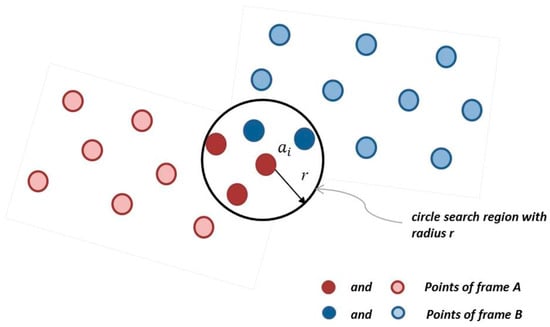

For the ICP with reverse calibration, we varied the radius of the 2D neighbor search in a range from 10 to 50 pixels. Table 2 presents the Root Mean Square (RMS) errors and the processing time for both sequences of images after applying the ICP with reverse calibration.

Table 2.

Root Mean Square (RMS) errors and average computational time for Iterative Closest Point algorithm (ICP) with reverse calibration.
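As a reminder of how an RMS figure of this kind is formed, the following minimal sketch computes the RMS error per pose component over a sequence of frames. It assumes that each estimate and its ground-truth value are available as tuples with one entry per component; the function names `rms` and `rms_per_component` are illustrative and not taken from the authors' implementation.

```python
import math
from typing import Sequence, Tuple


def rms(errors: Sequence[float]) -> float:
    """Root mean square of a sequence of scalar errors."""
    if not errors:
        raise ValueError("at least one error value is required")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def rms_per_component(estimates: Sequence[Tuple[float, ...]],
                      ground_truth: Sequence[Tuple[float, ...]]) -> Tuple[float, ...]:
    """RMS error of each pose component (e.g., X, Y, Z) over all frames."""
    if len(estimates) != len(ground_truth):
        raise ValueError("estimates and ground truth must have the same length")
    n_components = len(estimates[0])
    return tuple(
        rms([est[i] - gt[i] for est, gt in zip(estimates, ground_truth)])
        for i in range(n_components)
    )
```

Because the errors are squared before averaging, a few frames with large errors dominate the RMS value, which is why isolated error spikes matter for results of this kind.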

The central processing unit (CPU) times required to process one image are also presented in Table 2 for both datasets. From the results for the recorded datasets, it can be seen that the radius of the neighbor search influences the position errors. This is related to the data obtained from the sensor. If some depth data of the mockup are absent from the image, no neighbor points can be found for some points of the projected model. In addition, the image may contain incorrect information, e.g., strong reflections from the target can create additional depth regions that do not exist in reality. This can be clearly observed in images 5, 7 and 9 of Figure 9.

Therefore, a low number of point correspondences or mismatches between scene and model points can lead to an incorrect estimate of the pose. In general, cases with low RMS position errors can be found in the results for both the first and the second dataset. For example, if the radius of the 2D neighbor search for the non-rotated target is 25, 30 or 35 pixels, the RMS errors for the rotation components do not exceed 4 degrees and the RMS errors for the translation components are less than 10 cm. For the rotated target, because of the rather large number of outliers and the complete absence of depth information from one side of the mockup in the first depth images, the translation RMS errors along the X axis are high in almost all simulated cases. However, if the radius is equal to 10, 25 or 30 pixels, the RMS errors for the rotation components do not exceed 7.05 degrees and the RMS errors for the translation components are less than 15 cm. Note that the input to the pose estimation algorithm with depth images is the raw depth data, without any depth correction algorithm. Therefore, we consider the RMS errors for the radii 10, 25 and 30 acceptable, knowing that they include more than just the errors of the pose estimation algorithms.
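The influence of the search radius can be illustrated with a simplified correspondence search in the spirit of reverse calibration: a model point is projected into the image plane, and the measured 3D points stored at the pixels within a given radius are examined. This is a sketch under several assumptions that are not taken from the paper: an ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, a depth image represented as a dictionary from pixel to measured 3D point (pixels without valid depth are simply missing), and the nearest 3D point being chosen as the match.

```python
import math
from typing import Dict, Optional, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[int, int]


def project(point: Point3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a 3D point (camera frame, z > 0) to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def find_correspondence(model_point: Point3,
                        scene: Dict[Pixel, Point3],
                        radius_px: int,
                        fx: float, fy: float, cx: float, cy: float) -> Optional[Point3]:
    """Return the measured point nearest to model_point among the pixels that lie
    within radius_px of its projection, or None if no valid depth is found there."""
    u0, v0 = project(model_point, fx, fy, cx, cy)
    uc, vc = round(u0), round(v0)
    best, best_dist = None, math.inf
    for v in range(vc - radius_px, vc + radius_px + 1):
        for u in range(uc - radius_px, uc + radius_px + 1):
            if (u - uc) ** 2 + (v - vc) ** 2 > radius_px ** 2:
                continue  # outside the circular search window
            measured = scene.get((u, v))
            if measured is None:
                continue  # no valid depth at this pixel
            dist = math.dist(measured, model_point)
            if dist < best_dist:
                best, best_dist = measured, dist
    return best
```

With a small radius, a model point whose projection falls into a region of missing depth data finds no match at all; with a large radius, it may instead be matched to a spurious measurement caused by reflections. This trade-off is consistent with the dependence of the errors on the radius discussed above.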

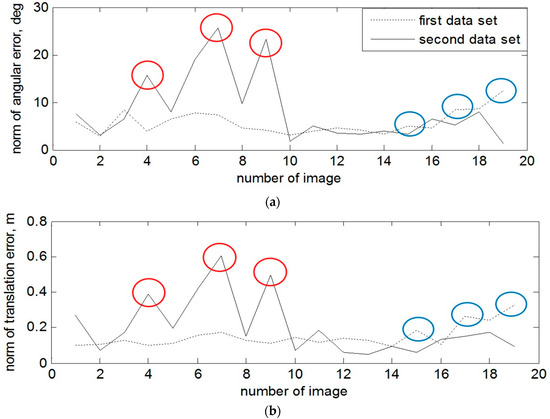

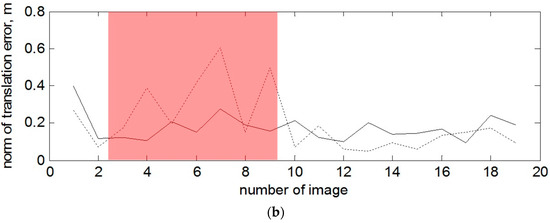

Let us consider the detailed pose estimation errors for every depth image when the radius of the neighbor search is equal to 25 pixels. Figure 10 presents the norms of the angular and translation errors over time for the attitude-stabilized and for the tumbling target. The error spikes in these two plots (e.g., images 15, 17 and 19 for the first dataset and images 4, 7 and 9 for the second dataset) indicate the moments when the error of the pose estimated from the depth image is very high. For the first scenario, these peaks were caused by the position of the stabilized target, as the left part of the spacecraft was hardly observed. For the second dataset, the error peaks arose because the target was tumbling, which caused severe reflections from the surface. Therefore, in the next section we show the results of the EPnP-based pose estimation technique on the amplitude images of the same datasets in order to investigate how to overcome these peaks of pose error.

Figure 10.

(a) Norm of angular error for the attitude-stabilized and rotated target with ICP-based technique and radius for neighbor search 25 pixels. (b) Norm of translation error for the attitude-stabilized and rotated target with ICP-based technique and radius for neighbor search 25 pixels.
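For reference, error norms of the kind plotted in Figure 10 can be computed per frame as in the minimal sketch below. It assumes that the attitude is expressed as three angles in degrees and that the angular error norm is the Euclidean norm of the wrapped per-axis differences; the exact definition used by the authors is not stated here, so this convention, like the function names, is an assumption.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def wrap_deg(angle: float) -> float:
    """Wrap an angle difference to the interval [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def translation_error_norm(estimated: Vec3, truth: Vec3) -> float:
    """Euclidean distance between estimated and ground-truth position."""
    return math.dist(estimated, truth)


def angular_error_norm(estimated_deg: Vec3, truth_deg: Vec3) -> float:
    """Euclidean norm of the wrapped per-axis attitude differences, in degrees."""
    return math.sqrt(sum(wrap_deg(e - t) ** 2 for e, t in zip(estimated_deg, truth_deg)))
```

Wrapping the differences avoids reporting an error of almost 360 degrees when the estimate and the ground truth lie on opposite sides of the ±180 degree boundary.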

4.2. Pose Estimation with the Amplitude Image from PMD Sensor

We now test the supplementary EPnP-based pose estimation technique on the amplitude images of the two datasets. In particular, we want to investigate when this technique can overcome the error peaks generated by the ICP-based pose estimation algorithm. This comparison is performed against the results of the ICP-based algorithm with a neighbor search radius of 25 pixels.

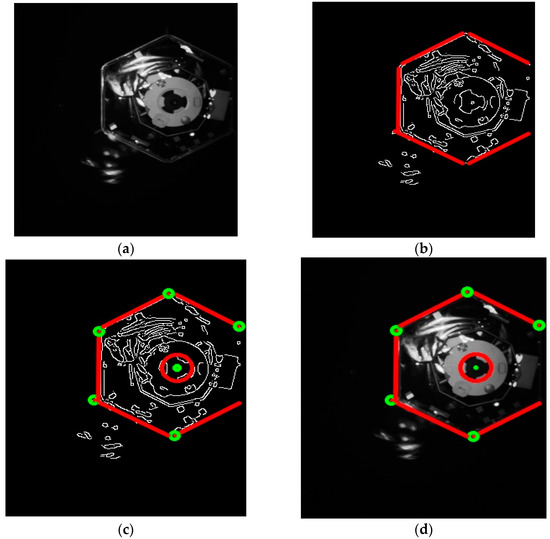

Because of the shape of the mockup (see Figure 4), we can obtain only a limited number of endpoints from the detected lines (see the explanation in Section 3.3.1). As shown in Figure 7, the maximum number is 7 points: the 6 intersection points (corners) of the hexagon along with the center of the central circle. Since not all of the feature points can be detected in every frame, we run the algorithm with the number of points that are available after image processing.
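One way to obtain such corner points from detected straight lines is to intersect pairs of lines. The sketch below assumes that each line is given in the (rho, theta) normal form of the standard Hough transform, i.e., rho = x cos(theta) + y sin(theta); whether the authors intersect lines in this way or use segment endpoints directly is not specified in this section, so this is an illustration rather than their procedure.

```python
import math
from typing import Optional, Tuple

Line = Tuple[float, float]  # (rho, theta) with rho = x*cos(theta) + y*sin(theta)


def intersect(line_a: Line, line_b: Line, eps: float = 1e-9) -> Optional[Tuple[float, float]]:
    """Intersection point of two lines in normal form, or None if they are (nearly) parallel."""
    rho1, theta1 = line_a
    rho2, theta2 = line_b
    a1, b1 = math.cos(theta1), math.sin(theta1)
    a2, b2 = math.cos(theta2), math.sin(theta2)
    det = a1 * b2 - a2 * b1
    if abs(det) < eps:
        return None  # parallel lines have no unique intersection
    # Cramer's rule for the 2x2 system [a1 b1; a2 b2] [x; y] = [rho1; rho2]
    x = (rho1 * b2 - rho2 * b1) / det
    y = (a1 * rho2 - a2 * rho1) / det
    return (x, y)
```

Only intersections that fall inside the image and close to the detected segments would be meaningful corner candidates. Since the EPnP formulation applies to four or more correspondences, frames in which fewer feature points are detected cannot be processed by this technique.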

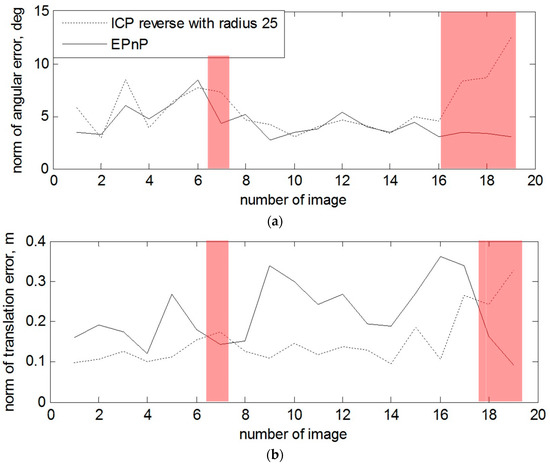

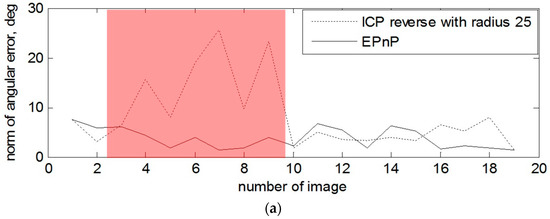

In Figure 11 and Figure 12, the norms of the rotation and translation errors of the pose estimation are shown individually for each depth and amplitude image. The red markings in both figures indicate the cases in which the algorithm with the amplitude images estimates the pose better than the ICP with the depth images. The average CPU time to process one amplitude image is about 1 s.

Figure 11.

(a) Norm of angular error for every amplitude and distance image for the first data set. (b) Norm of distance error for every amplitude and distance image for the first data set.

Figure 12.

(a) Norm of angular error for every amplitude and distance image for the second data set. (b) Norm of distance error for every amplitude and distance image for the second data set.

From the results in Figure 11, we can see that, in the case of the non-rotated target, the orientation errors obtained with the EPnP-based pose estimation technique are lower for images 7, 18 and 19. Table 3 presents the error norms for rotation and translation for these images.

Table 3.

Norms of translation and rotation errors for the stabilized target of the first dataset.

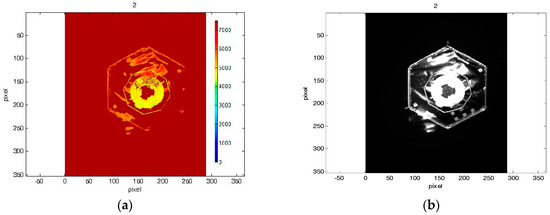

These errors are due to the approach scenario: the closer the target was, the less of its front left part was visible, and the central circle could not be fully detected. Figure 13 shows the 7th depth and amplitude images from the first dataset, where the EPnP outperforms the ICP with reverse calibration in computing the rotation and translation components because the depth data of the upper-left and lower-left parts of the mockup are missing in the depth image.

Figure 13.

Subfigure (a) depicts the 7th depth image and subfigure (b) the 7th amplitude image from the first dataset, where the EPnP outperforms the ICP with reverse calibration in computing the rotation and translation components.

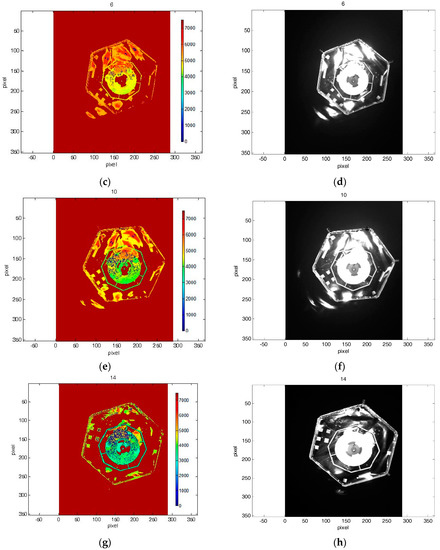

In the case of the rotating target, where the depth measurements of the right part of the mockup are also absent at the beginning of the tracking, one can observe a significant advantage of using the 2D amplitude images for the estimation of the rotation and of some of the translation components. The most obvious examples are images 4, 7 and 9 in Figure 12.

The 7th depth and amplitude images from the second dataset are depicted in Figure 14, as was done for the first scenario. The norms of the attitude and position errors for the mentioned images with the ICP-based technique and the EPnP technique are presented in Table 4.

Figure 14.

Subfigure (a) depicts the 7th depth image and subfigure (b) the 7th amplitude image from the second dataset, where the EPnP outperforms the ICP with reverse calibration in computing the rotation and translation components.

Table 4.

Norms of translation and rotation errors for the tumbling target in the second dataset.

The monocular vision technique outperforms the algorithm with depth images because of the higher quality of the amplitude images. The depth images were strongly corrupted by missing depth data.

5. Discussion and Conclusions

A hybrid navigation technique for tracking the pose of an uncooperative target spacecraft during close-range rendezvous has been proposed in this paper. The key innovation of this technique is the use of two different pose estimation approaches to ensure robustness in the tracking procedure even under harsh illumination conditions. This is possible since the PMD sensor provides two types of measurement at the same time: one depth image and one amplitude image, which is analogous to a grayscale image. The first method for pose tracking is the ICP algorithm with reverse calibration, which determines the current pose by matching the measured point cloud with a reference 3D model of the spacecraft. The second technique employs the Hough algorithm to detect the target’s true edges in the amplitude image as straight lines. Subsequently, the endpoints are matched with the corresponding vertices of the 3D model. Finally, the EPnP solver estimates the current pose of the target spacecraft using these point correspondences.

The main advantage of the proposed technique is its software redundancy, which is obtained from the measurements of a single PMD sensor without hardware redundancy. This redundancy is crucial to ensure the robustness of the tracking procedure. In fact, the test campaign using realistic sensor data from DLR’s European Proximity Operations Simulator facility showed that the ICP routine produced some inaccurate estimates. These errors originated from corrupted depth images caused by intense light reflection on the target’s surface, which saturates the sensor’s pixels. To ensure precise pose tracking, we cannot rely on the depth measurements alone. In case of problems, it is necessary to use the additional pose estimation technique based on the EPnP algorithm and the amplitude images.

It turned out that the amplitude measurements provide an accurate 2D image of the spacecraft under the same illumination conditions as for the depth measurements, allowing precise pose estimation using image processing and EPnP. With these results, we showed the necessity of adapting the pose estimation pipeline to the sun illumination and demonstrated the potential use of the proposed techniques in a single hybrid navigation system. The proposed improvement should increase the accuracy and robustness of the navigation system in tracking the target with the PMD camera. We are interested in further combining the best measurements from the two pose estimation techniques in order to obtain a single final pose. This solution should balance the pros and cons of the two completely different techniques, based on the experience obtained during the tests with real data. Among the different techniques for fusing the state estimates, we would consider the Kalman filter, which is typically applied in visual tracking applications.
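To indicate what such a fusion could look like in its simplest form, the sketch below applies a single static Kalman measurement update to one scalar pose component, which is equivalent to inverse-variance weighting of the two estimates. This is purely illustrative and not part of the presented work: the variances are hypothetical inputs, and a real filter would also include a motion model and treat the full six-DoF state.

```python
from typing import Tuple


def fuse(x_icp: float, var_icp: float, x_epnp: float, var_epnp: float) -> Tuple[float, float]:
    """Fuse two scalar estimates of the same quantity with a static Kalman update.

    x_icp, x_epnp: estimates from the two techniques (e.g., one translation component)
    var_icp, var_epnp: their (hypothetical) error variances, both > 0
    Returns the fused estimate and its variance.
    """
    if var_icp <= 0.0 or var_epnp <= 0.0:
        raise ValueError("variances must be positive")
    gain = var_icp / (var_icp + var_epnp)  # Kalman gain
    fused = x_icp + gain * (x_epnp - x_icp)
    fused_var = (1.0 - gain) * var_icp
    return fused, fused_var
```

The fused variance is never larger than the smaller of the two input variances, so the less reliable technique in a given frame (for example, the ICP under strong reflections) would contribute correspondingly less to the final pose.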

In future work, the hybrid navigation technique should be tested in different scenarios, e.g., by simulating sun illumination from different directions or by choosing another approach scenario in which other parts of the mockup, with other shapes and types of material, are visible.

Author Contributions

K.K. and H.B. conceived, designed and collected images for the experiments; J.V. and K.K. provided software to perform the experiments; F.H. contributed to the methods; K.K. wrote the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fehse, W. Automated Rendezvous and Docking of Spacecraft; Cambridge Aerospace Series; Cambridge University Press: Cambridge, UK, 2003.

- Merriaux, P.; Dupuis, Y.; Boutteau, R.; Vasseur, P.; Savatier, X. Robust Robot Localization in a Complex Oil and Gas Industrial Environment. J. Field Robot. 2017.

- Ballantyne, J.; Johns, E.; Valibeik, S.; Wong, C.; Yang, G.Z. Autonomous Navigation for Mobile Robots with Human-Robot Interaction. In Robot Intelligence. Advanced Information and Knowledge Processing; Springer: London, UK, 2010.

- Biswajit, S.; Prabir, K.P.; Debranjan, S. Building maps of indoor environments by merging line segments extracted from registered laser range scans. Robot. Auton. Syst. 2014, 62, 603–615.

- Lakaemper, R. Simultaneous Multi-Line-Segment Merging for Robot Mapping Using Mean Shift Clustering. In Proceedings of the Intelligent Robots and Systems (IROS), St. Louis, MO, USA, 10–15 October 2009.

- Pozna, C.; Precup, R.E.; Földesi, P. A novel pose estimation algorithm for robotic navigation. Robot. Auton. Syst. 2015, 63, 10–21.

- Inaba, N.; Oda, M. Autonomous Satellite Capture by a Space Robot. In Proceedings of the IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000.

- Howard, R.T.; Heaton, A.F.; Pinson, R.M.; Carington, C.K. Orbital Express Advanced Video Guidance Sensor. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008.

- Naasz, B.; Eepoel, J.V.; Queen, S.; Southward, C.M.; Hannah, J. Flight results of the HST SM4 Relative Navigation Sensor System. In Proceedings of the 33rd Annual AAS Guidance and Control Conference, Breckenridge, CO, USA, 6–10 February 2010.

- Opromolla, R.; Fasano, G.; Rufino, G.; Grassi, M. A review of cooperative and uncooperative spacecraft pose determination techniques for close-proximity operations. Prog. Aerosp. Sci. 2017, 93, 53–72.

- Opromolla, R.; Fasano, G.; Rufino, G.; Grassi, M. Uncooperative pose estimation with a LIDAR-based system. Acta Astronaut. 2015, 10, 287–297.

- Ventura, J. Autonomous Proximity Operations to Noncooperative Space Target. In Proceedings of the Deptment of Mechanical Engineering, Orlando, FL, USA, 30 January 2005–1 February 2017.

- D’Amico, S.; Benn, M.; Jørgensen, J.L. Pose Estimation of an Uncooperative Spacecraft from Actual Space Imagery. Int. J. Space Sci. Eng. 2014, 2, 171–189.

- Oumer, N.W. Visual Tracking and Motion Estimation for an On-Orbit Servicing of a Satellite. Ph.D. Thesis, University of Osnabruck, Osnabruck, Germany, 2016.

- Benninghoff, H.; Rems, F.; Boge, T. Development and hardware-in-the-loop test of a guidance navigation and control system for on-orbit servicing. Acta Astronaut. 2014, 102, 67–80.

- Oumer, N.W.; Panin, G. 3D Point Tracking and Pose Estimation of a Space Object Using Stereo Images. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR 2012), Tsukuba, Japan, 11–15 November 2012.

- Ringbeck, T.; Hagebeuker, B. A 3D Time of Flight Camera for object detection. In Optical 3D Measurement Technologies; ETH Zürich: Zürich, Switzerland, 2007.

- Tzschichholz, T. Relative Pose Estimation of Known Rigid Objects Using a Novel Approach of High-Level PMD-/CCD-Sensor Data Fusion with Regard to Application in Space. Ph.D. Thesis, University of Würzburg, Würzburg, Germany, 2014.

- Ventura, J.; Fleischner, A.; Walter, U. Pose Tracking of a Noncooperative Spacecraft during Docking Maneuvers Using a Time-of-Flight Sensor. In Proceedings of the AIAA Guidance, Navigation, and Control Conference (AIAA SciTech Forum), San Diego, CA, USA, 4–8 January 2016.

- Dufaux, F.; Pesquet-Popescu, B.; Cagnazzo, M. Emerging Technologies for 3D Video: Creation, Transmission and Rendering; John Wiley & Sons, Ltd.: London, UK, 2013.

- Benninghoff, H.; Rems, F.; Risse, E.A.; Mietner, C. European Proximity Operations Simulator 2.0. (EPOS)-A Robotic-Based Rendezvous and Docking Simulator. J. Large-Scale Res. Facil. 2017, 3.

- Fuchs, S.; Hirzinger, G. Extrinsic and depth calibration of ToF-cameras. In Proceedings of the 22nd IEEE Conference Computer Vision Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

- Reynolds, R.; Dobos, J.; Peel, L.; Weyrich, T.; Brostow, G.J. Capturing time-of-flight data with confidence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

- Klionovska, K.; Benninghoff, H. Initial pose estimation using PMD sensor during the rendezvous phase in on-orbit servicing missions. In Proceedings of the 27th AAS/AIAA Space Flight Mechanics Meeting, San Antonio, TX, USA, 5–9 February 2017.

- Or, S.H.; Luk, W.S.; Wong, K.H.; King, I. An efficient iterative pose estimation algorithm. Image Vis. Comput. 1998, 16, 353–362.

- Besl, P.J.; Mckay, N.D. A method for registration of 3D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Pomerlau, F.; Colas, F.; Siegwart, R.; Magnenat, S. Comparing ICP Variants on Real world Data Sets. Auton. Robot. 2013, 34, 133–148.

- Blais, G.; Levine, M. Registering multiview range data to create 3D computer objects. IEEE Trans. Pattern Anal. Mach. Intell. 1995, 17, 820–854.

- Levoy, M.; Rusinkiewicz, A. Efficient variants of the icp algorithm. In Proceedings of the 3rd International Conference on 3D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001.

- Klionovska, K.; Benninghoff, H.; Strobl, K.H. PMD Camera-and Hand-Eye Calibration for On-Orbit Servicing Test Scenarios on the Ground. In Proceedings of the 14th Symposium on Advanced Space Technologies in Robotics and Automation (ASTRA), Leiden, The Netherlands, 20–22 June 2017.

- DLR CalDe and DLR CalLab. Available online: http://www.robotic.dlr.de/callab/ (accessed on 25 October 2017).

- Horn, B.K.P. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. 1987, 4, 629–642.

- Canny, J. A Computational Approach to Edge Detection. Read. Comput. Vis. 1987, 184–203.

- Amtonovic, D. Review of the Hough Transform Method, with an Implementation of the Fast Hough Variant for Line Detection; Department of Computer Science, Indiana University: Bloomington, IN, USA, 2008.

- Available online: http://web.ipac.caltech.edu/staff/fmasci/home/astro_refs/HoughTrans_lines_09.pdf (accessed on 15 October 2017).

- Cropp, A.; Palmer, P. Pose estimation and relative orbit determination of nearby target microsatellite using passive imagery. In Proceedings of the 5th Cranfield Conference on Dynamics and Control of Systems and Structures in Space, Cambridge, UK, 14–18 July 2002.

- Sharma, S.; D’Amico, S. Comparative assessment of techniques for initial pose estimation using monocular vision. Acta Astronaut. 2016, 123, 435–445.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Bhat, S. Visual words for pose estimation. In Signal and Image Processing; Universite de Lorraine: Lorraine, France, 2013.

- OpenCV. Available online: http://opencv.org/ (accessed on 25 October 2017).

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).