The Making of Catalogues of Very-High-Energy γ-ray Sources

Abstract

1. Introduction

2. Technical Aspect of Survey and Catalogue Constructions

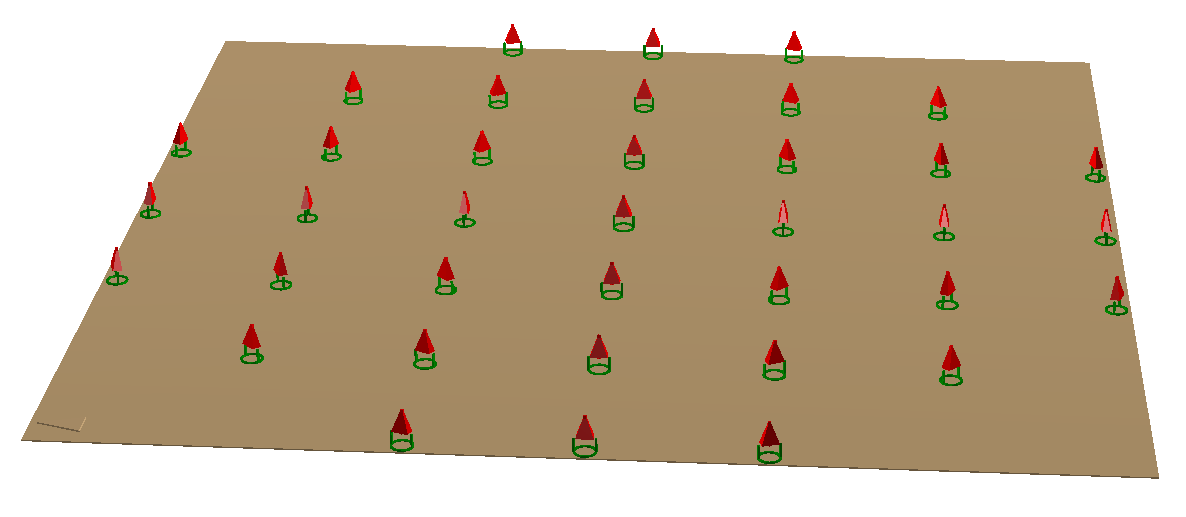

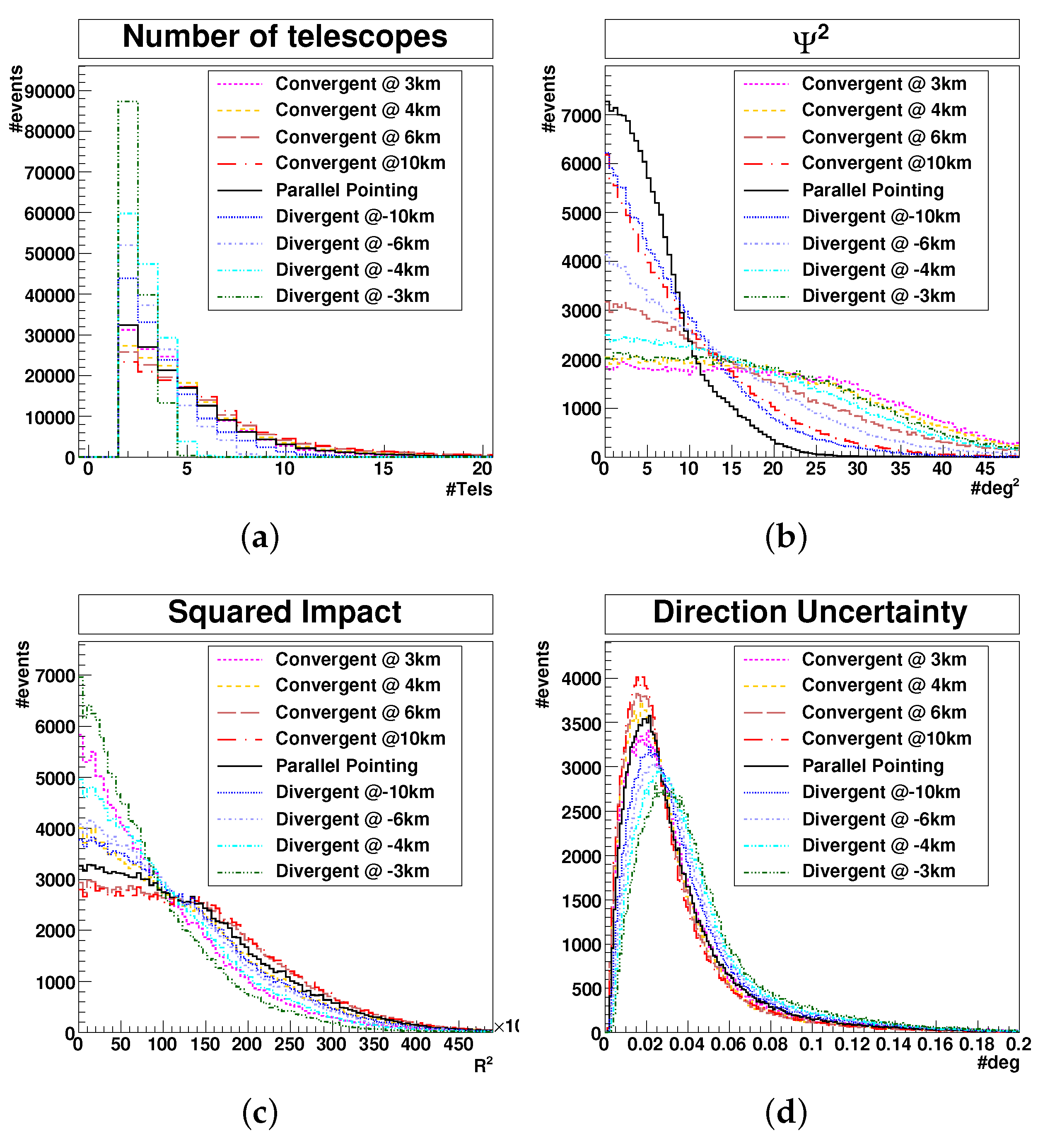

- Array operation and observational strategy: the way in which the array of telescopes is operated and optimised for a given physics goal (optimised on sensitivity or on field-of-view (FoV) width for instance).

- Event reconstruction and classification: separation of γ-like events from the much more numerous background-like events and construction of event classes.

- Background model: determination of the expected background in the field of view, taking into account the instrument response.

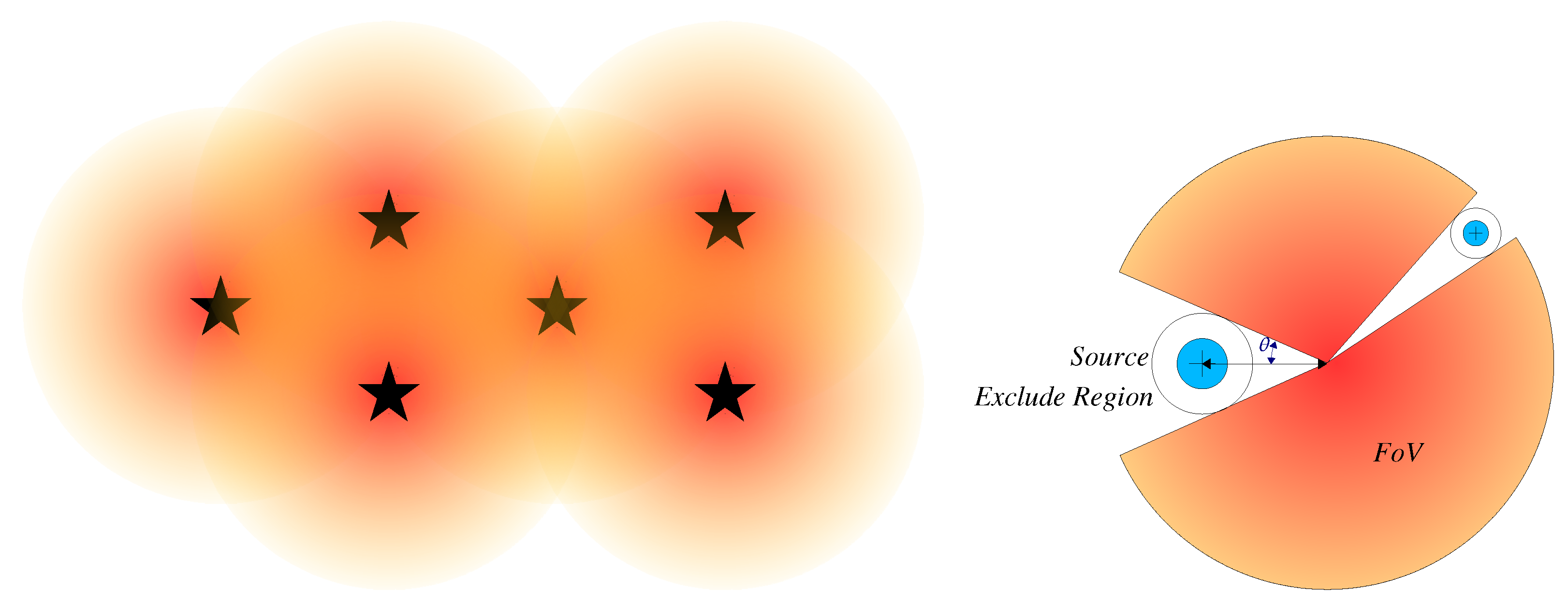

- Excluded region determination: identification of regions which are potentially contaminated by genuine γ-ray signal. These regions should not be used to estimate the remaining background in the subsequent background subtraction procedure, so as to avoid signal over-subtraction.

- Background subtraction: comparison of the number of events in a region of interest with the expected number of events (coming from the background model), to assess the potential existence of a localised excess.

- Automated catalogue pipeline: separation of regions of significant γ-ray emission into individual source components and extraction of their physical characteristics (flux, energy spectrum, morphology, temporal variability, …).

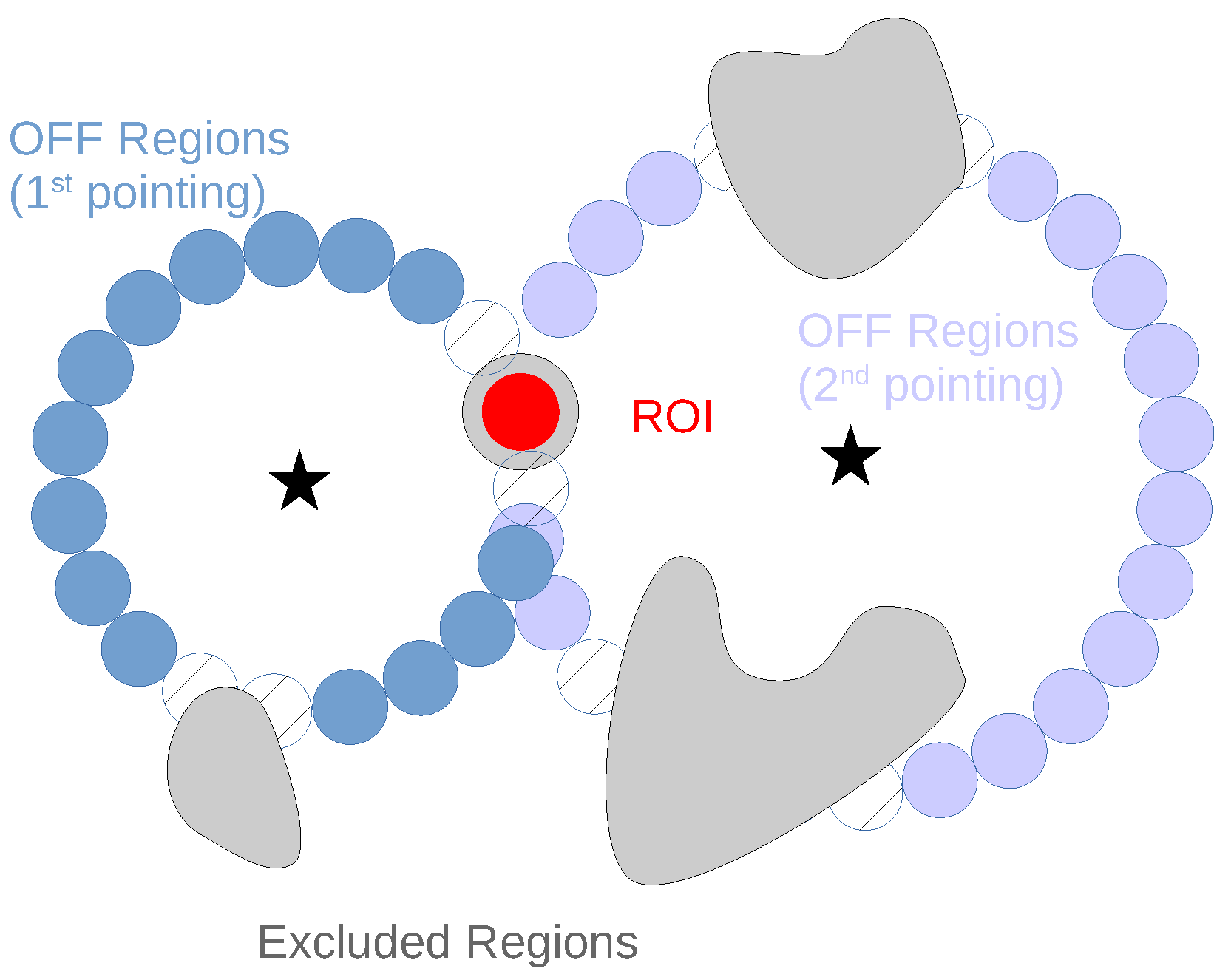

2.1. Observational Strategies

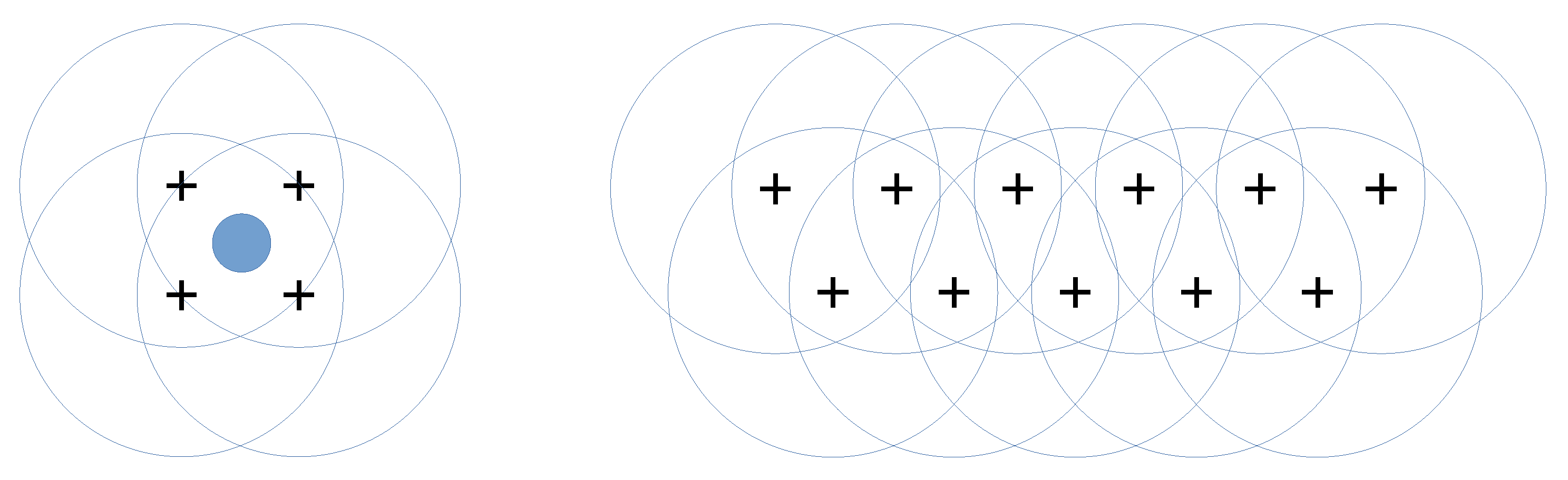

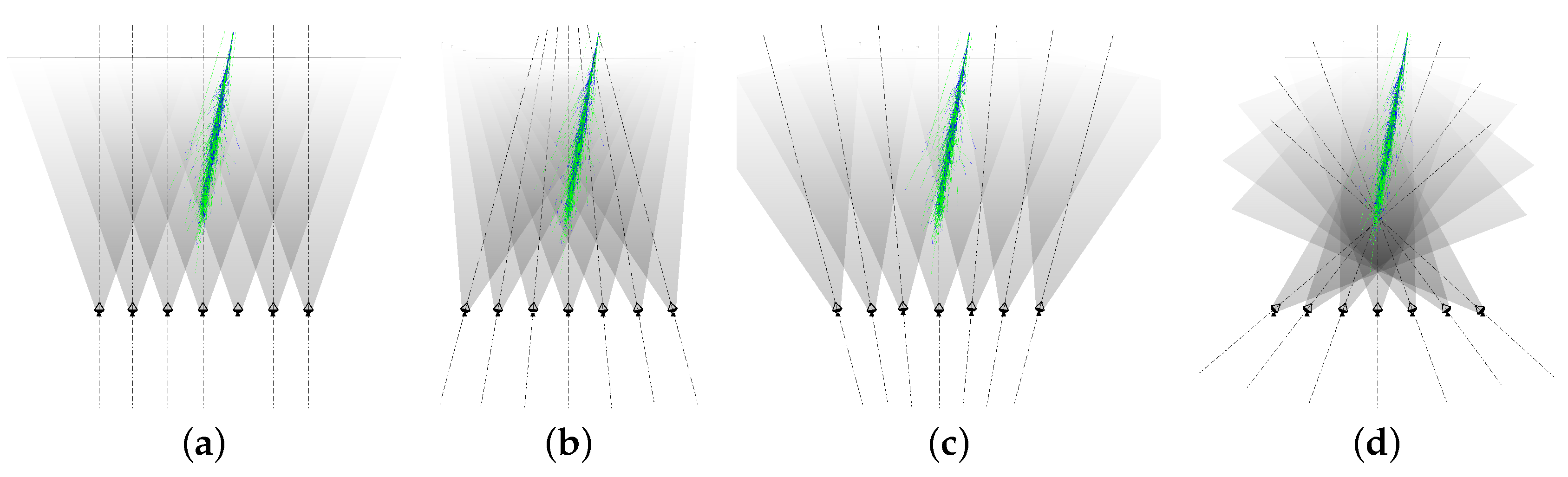

- Wobble mode of observation (Figure 1, left), where several observations are taken with different pointing directions around the source of interest. The source is displaced with respect to the centre of the field of view, to allow for proper background determination (Section 2.4.3). This mode of observation is appropriate for point-like or moderately large sources of known position, and in particular for targeted observations. Historically, the pointing positions were taken with a shift in right ascension (RA) equal to the temporal separation between runs, in order to reproduce the exact same trajectory of the telescopes on the sky for each pair of runs. By doing so, no correction for the variation of telescope response had to be applied, which greatly simplified the analysis. Recent IACTs, using more elaborate background models (Section 2.3), have dropped this observational constraint and combine observations with wobble offsets in any direction (right ascension, declination or any other coordinate).

- Survey mode of observation (Figure 1, right), where a large region of the sky is scanned with observations overlapping each other (in order to minimise the background gradients). Several rows can be conducted in parallel or one after the other, and different spacing between pointing positions can be used. This mode of observation is usually optimised to maximise the sky coverage, while minimising the acceptance variations across the surveyed region.
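As an illustration of the wobble mode described above, the following minimal sketch computes pointing positions at a fixed angular offset around a target. The function names, the default offset of 0.5° and the choice of four positions are assumptions made for this example only; they are not prescribed by the text, and the Crab coordinates in the usage note are approximate.

```python
import math


def wobble_pointings(ra_deg, dec_deg, offset_deg=0.5, n_positions=4):
    """Return (RA, Dec) pointings in degrees at a fixed offset from the target.

    Position angles are evenly spaced, starting from north and going
    through east. The offset and number of positions are illustrative.
    """
    ra0 = math.radians(ra_deg)
    dec0 = math.radians(dec_deg)
    d = math.radians(offset_deg)
    pointings = []
    for k in range(n_positions):
        theta = 2.0 * math.pi * k / n_positions
        # Exact spherical "destination point" formula
        dec = math.asin(math.sin(dec0) * math.cos(d)
                        + math.cos(dec0) * math.sin(d) * math.cos(theta))
        dra = math.atan2(math.sin(theta) * math.sin(d) * math.cos(dec0),
                         math.cos(d) - math.sin(dec0) * math.sin(dec))
        pointings.append((math.degrees(ra0 + dra) % 360.0, math.degrees(dec)))
    return pointings


def angular_separation(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees (haversine formula)."""
    p1, p2 = math.radians(dec1), math.radians(dec2)
    dra = math.radians(ra2 - ra1)
    a = (math.sin((p2 - p1) / 2.0) ** 2
         + math.cos(p1) * math.cos(p2) * math.sin(dra / 2.0) ** 2)
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(a))))
```

Calling `wobble_pointings(83.633, 22.014)` (approximate Crab Nebula coordinates) gives four pointings, each 0.5° away from the target, so that the source always sits off-centre in the field of view.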

2.2. Event Separation and Classification

- γ-like events: these events are very likely (with a probability depending on the analysis strategy) to originate from a genuine γ ray.

- background-like events: these events have only a tiny probability of originating from a genuine γ ray, and most likely come from a charged cosmic ray.

2.3. Acceptance—Background Model

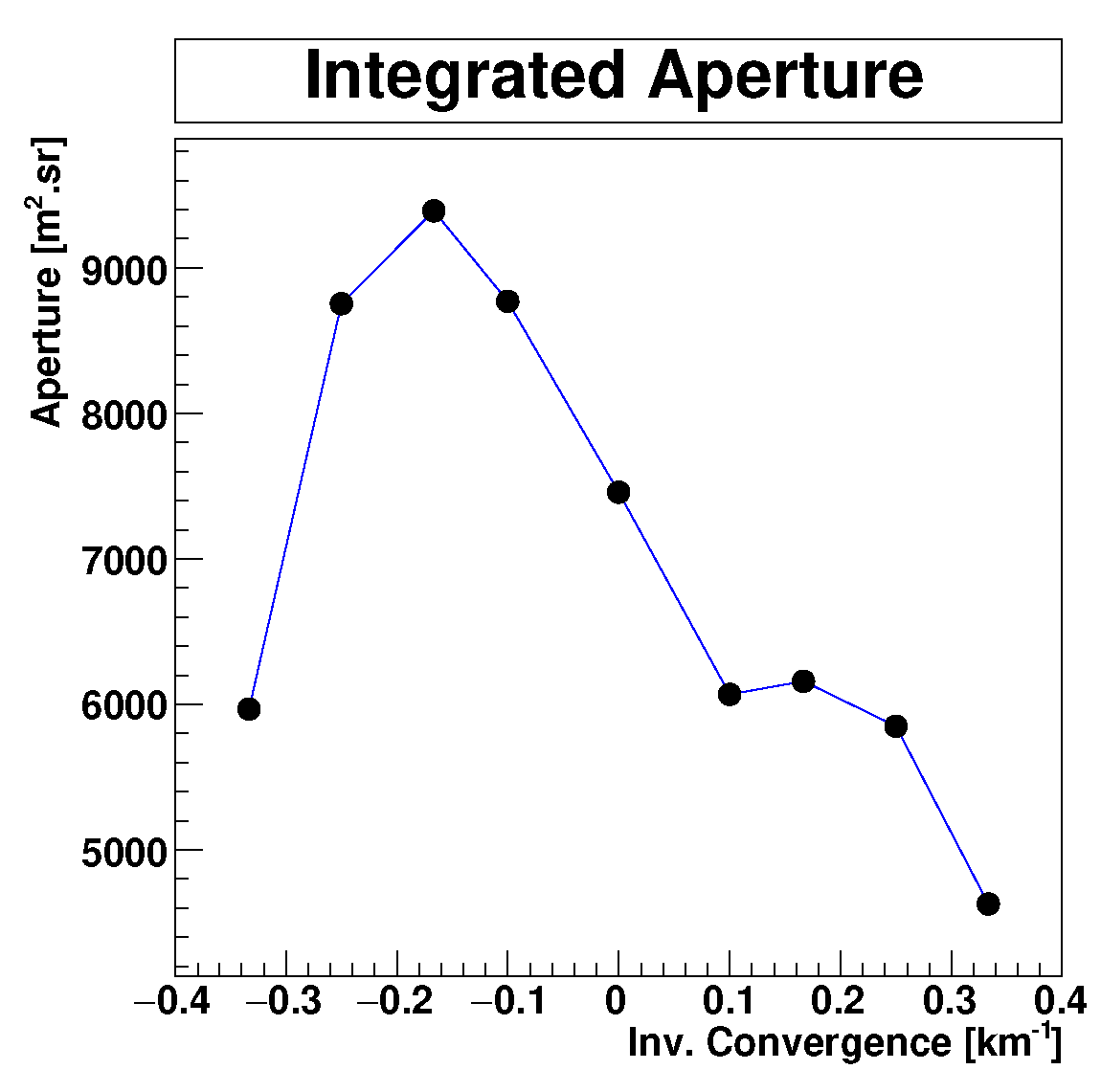

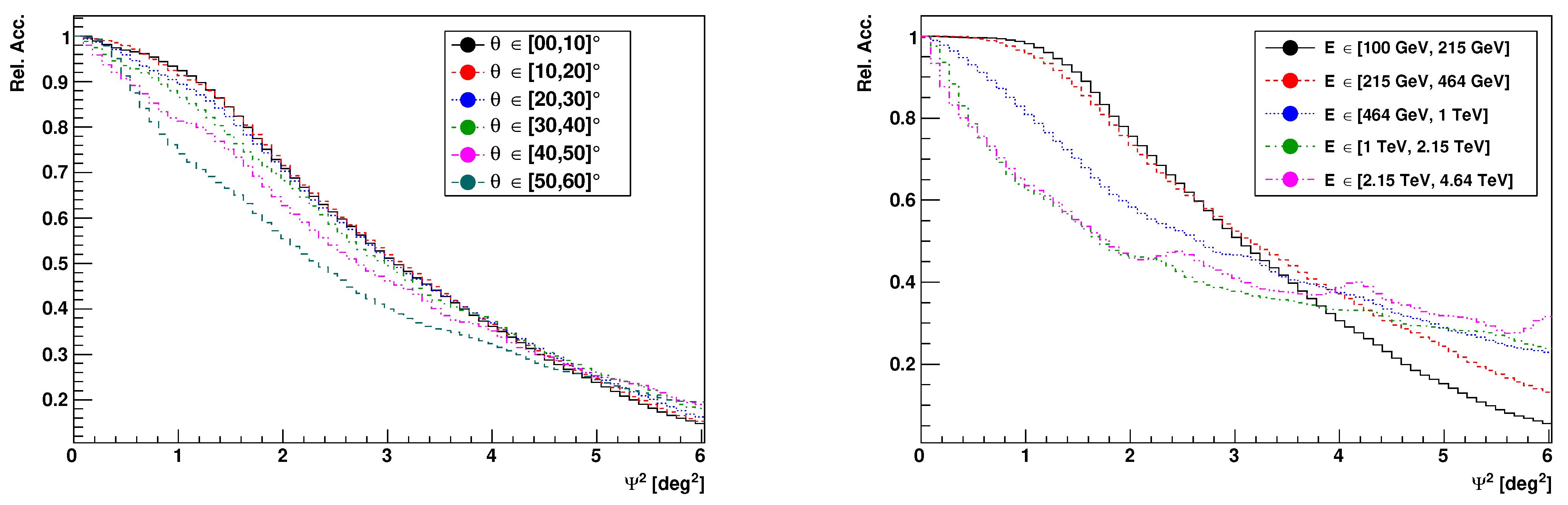

2.3.1. Radial Acceptance

- Conceptually easy

- Known sources (if not overlapping with the centre of the FoV) can be excluded easily

- Acceptance can be determined from the actual data set or from an alternate one

- Can be computed in energy slices and in zenith angle bands

- Simple gradients (zenith angle gradient) can be taken into account rather easily

- Does not take into account the non-symmetrical response of the camera, nor the actual array shape

- Does not take into account inhomogeneities of response

- Does not take into account varying conditions (NSB, etc.)

- Requires a significant amount of data to be already taken with the corresponding array configuration
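The radial acceptance idea can be sketched as a histogram of event density versus squared offset from the camera centre, with known sources excluded and the lost area corrected for. This is only a toy version under simplifying assumptions (flat camera-frame coordinates in degrees, circular exclusion regions, no energy or zenith-angle binning); the function name and all parameters are hypothetical.

```python
import math


def radial_acceptance(events, max_offset=2.5, n_bins=10, exclusions=(),
                      n_phi=360, n_r=5):
    """Toy radial acceptance profile.

    events     : iterable of (x, y) camera-frame positions in degrees
    exclusions : iterable of (x0, y0, radius) circles to ignore
    Returns a list of (theta2_centre, events per deg^2 of usable area).
    """
    def excluded(x, y):
        return any((x - x0) ** 2 + (y - y0) ** 2 < r * r
                   for x0, y0, r in exclusions)

    edge2 = max_offset ** 2
    width = edge2 / n_bins  # equal bins in theta^2 have equal solid angle
    counts = [0] * n_bins
    for x, y in events:
        t2 = x * x + y * y
        if t2 < edge2 and not excluded(x, y):
            counts[min(int(t2 / width), n_bins - 1)] += 1

    profile = []
    for i in range(n_bins):
        # Estimate the fraction of the annulus left after exclusions by
        # sampling it uniformly in theta^2 and azimuth
        free = 0
        for m in range(n_r):
            r = math.sqrt((i + (m + 0.5) / n_r) * width)
            for j in range(n_phi):
                phi = 2.0 * math.pi * j / n_phi
                free += not excluded(r * math.cos(phi), r * math.sin(phi))
        area = math.pi * width * free / (n_r * n_phi)
        density = counts[i] / area if area > 0 else float("nan")
        profile.append(((i + 0.5) * width, density))
    return profile
```

The profile is symmetric by construction, which is exactly the limitation listed above: a non-symmetrical camera response or array shape cannot be represented.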

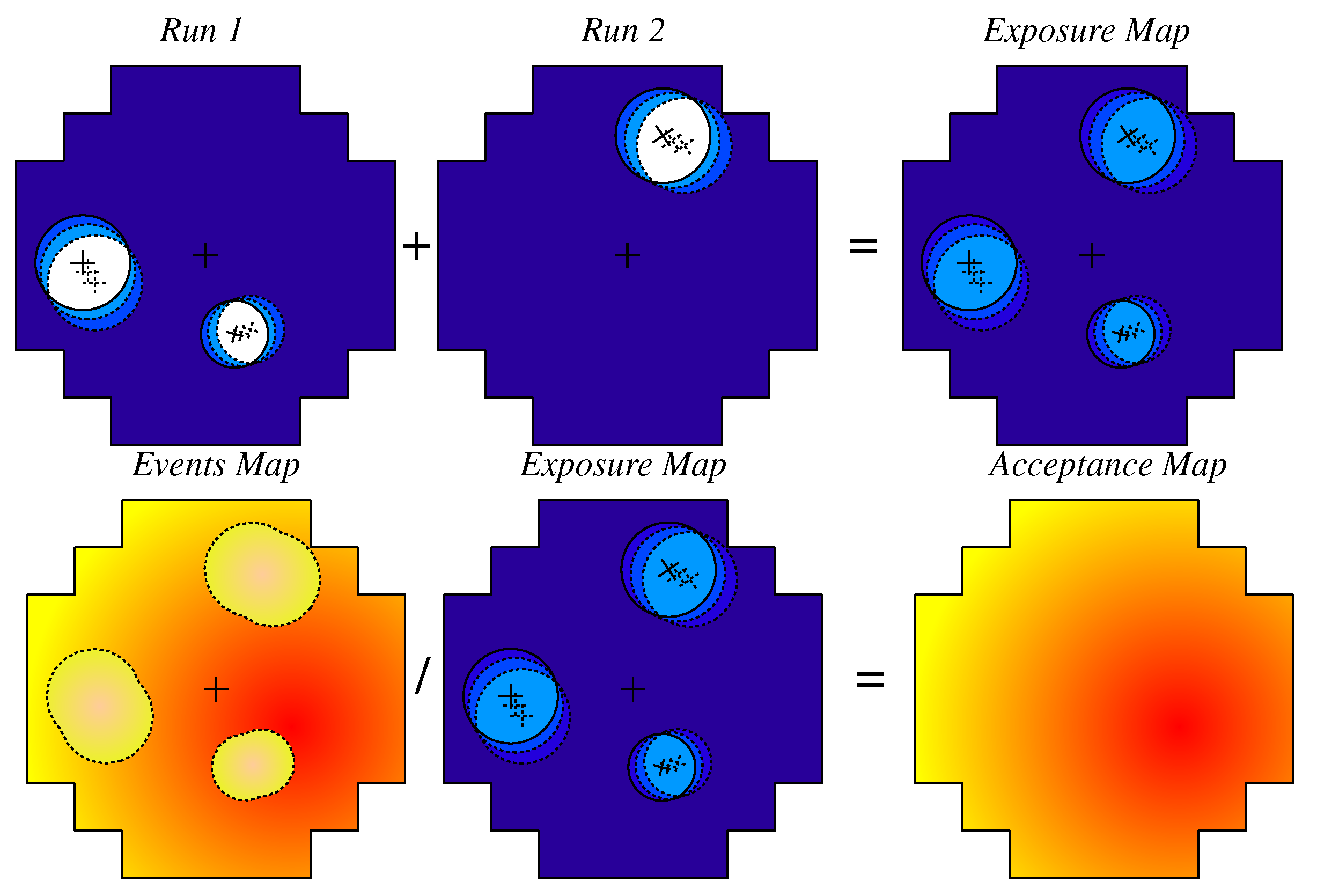

2.3.2. 2D Acceptance

- Takes into account actual camera shape and inhomogeneities of response

- Known sources can be excluded as soon as several different pointing positions are used in the data set (one needs to make sure, however, that no part of the FoV is excluded in all pointing positions)

- Acceptance can be determined from the actual data set or an alternate one (e.g., extragalactic observations)

- Can be computed in energy slices

- Simple gradients (zenith angle gradient) can be taken into account

- Technically more complicated

- Requires a minimum number of runs with sources at different locations

- Does not take into account varying conditions (NSB, optical efficiency, …)

- Requires a significant amount of data to be already taken with the corresponding array configuration

2.3.3. Simulated Acceptance

- Conceptually rather simple

- Takes into account the actual array configuration for each individual run

- Takes into account varying conditions (NSB, high voltage gradients, pixel gains, …) across the field of view

- Reproduces naturally the zenith angle gradients (no correction needs to be applied afterwards)

- One model per run; no need to generate zenith angle slices or to use a multidimensional interpolation scheme

- No need to exclude known or putative γ-ray sources, no risk of contamination by large scale diffuse emission

- Can be derived as soon as observations are made; no need for a large, pre-existing data set

- Computationally more intensive (in order to produce enough statistics)

- Needs to be produced for every run

- Requires some radial corrections due to the difference between cosmic-ray γ-like events and real gammas

2.3.4. Comparison Elements and Limits

2.4. Background Subtraction

- Reflected background, using γ-like events in regions at identical distances from the centre of the field of view, on a run-by-run basis,

- On-Off background, using γ-like events in identical regions of different, usually consecutive (but not always) observations,

- Ring background, using γ-like events in a ring around the ROI or around the centre of the field of view,

- Template background, using hadron-like events at the test position,

- Field-of-view background, using calculated acceptance as background,

- RunWise Simulated background, using completely simulated background.
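Whatever method from the list above is used, the outcome is a number of ON events, a number of OFF events and a normalisation factor α between the two. A minimal sketch of the resulting excess and of the Li and Ma significance [16] (their Equation (17)) is given below; the function names are chosen for this example, and the sign convention for negative excesses is an assumption commonly made in practice.

```python
import math


def excess(n_on, n_off, alpha):
    """Background-subtracted excess: N_on - alpha * N_off."""
    return n_on - alpha * n_off


def li_ma_significance(n_on, n_off, alpha):
    """Li & Ma (1983) Eq. (17), signed with the sign of the excess."""
    total = n_on + n_off
    if total == 0:
        return 0.0
    term_on = 0.0
    if n_on > 0:
        term_on = n_on * math.log((1.0 + alpha) / alpha * n_on / total)
    term_off = 0.0
    if n_off > 0:
        term_off = n_off * math.log((1.0 + alpha) * n_off / total)
    # Guard against tiny negative values caused by rounding
    s = math.sqrt(max(0.0, 2.0 * (term_on + term_off)))
    return math.copysign(s, excess(n_on, n_off, alpha))
```

For example, 200 ON events and 100 OFF events with α = 1 give an excess of 100 events at a significance of about 5.8σ.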

2.4.1. Basics of Background Statistics

2.4.2. Excluded Regions

2.4.3. Reflected Regions
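The reflected background listed in Section 2.4 places OFF regions at the same distance from the centre of the field of view as the ON region. A minimal geometric sketch is shown below, working in flat camera-frame coordinates (degrees) with the pointing direction at the origin. The function name, the circular exclusion regions and the `min_gap` parameter are assumptions made for this illustration.

```python
import math


def reflected_regions(on_x, on_y, radius, exclusions=(), min_gap=0.0):
    """Return centres (x, y) of OFF regions reflected around the pointing centre.

    on_x, on_y : ON region centre in the camera frame (pointing at 0, 0)
    radius     : ON (and OFF) region radius
    exclusions : iterable of (x0, y0, r) circles that OFF regions must avoid
    min_gap    : optional extra spacing between neighbouring regions
    """
    offset = math.hypot(on_x, on_y)
    if offset <= radius:
        raise ValueError("ON region overlaps the pointing centre")
    # Angle subtended by one region (plus gap) as seen from the centre
    step = 2.0 * math.asin(min(1.0, (radius + 0.5 * min_gap) / offset))
    n_slots = int(2.0 * math.pi / step + 1e-9)
    phi0 = math.atan2(on_y, on_x)
    regions = []
    for k in range(1, n_slots):  # slot 0 is the ON region itself
        phi = phi0 + 2.0 * math.pi * k / n_slots
        x, y = offset * math.cos(phi), offset * math.sin(phi)
        if any(math.hypot(x - ex, y - ey) < radius + er
               for ex, ey, er in exclusions):
            continue
        regions.append((x, y))
    return regions
```

Since all regions have the same size and the same offset, the normalisation is simply α = 1 / (number of OFF regions), as long as the acceptance can be treated as radially symmetric within a run.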

2.4.4. On-Off Background

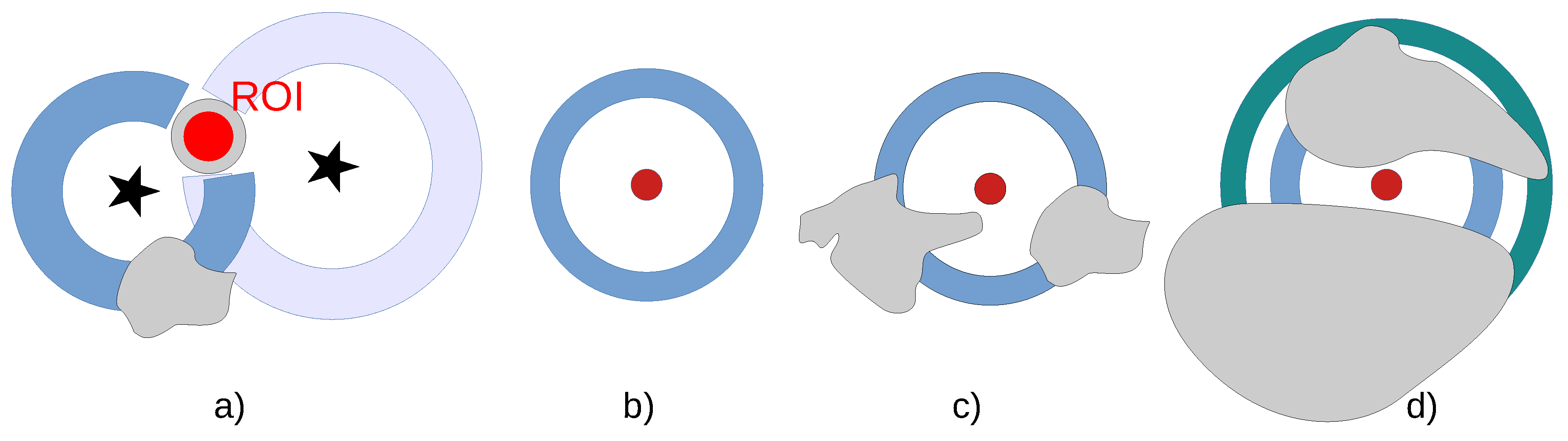

2.4.5. Ring Background

- The ring can be constructed around the pointing direction in the camera frame (Figure 12a), and then differs from run to run. This algorithm is then very similar to that of the reflected regions, and shares the same general properties (spectral reconstruction capabilities, …).

- The ring can be constructed around the ROI in the astronomical frame (equatorial, galactic, …, Figure 12b–d), and then uses the stacked data set, instead of individual runs to generate sky maps. The determination of the energy spectrum of the source is, however, very challenging in this version, because the ring around the ROI encompasses many different runs, corresponding to different observational conditions which need different response functions. The ring background can, however, be performed in energy slices (thus requiring the acceptance to also be determined in energy slices).
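The second variant above can be sketched on pixelised maps: ON counts are summed in a disc around the test position, OFF counts in a surrounding ring (skipping excluded pixels), and α is taken as the ratio of the summed acceptances. The sketch below is a toy version with radii expressed in pixels; the function name and map layout (lists of rows) are assumptions for this example.

```python
import math


def ring_background(counts, acceptance, mask, i0, j0, r_on, r_in, r_out):
    """Toy ring background at pixel (i0, j0); radii are in pixel units.

    counts, acceptance : 2D maps given as lists of rows
    mask               : 2D map, True where a pixel is excluded from the ring
    Returns (n_on, n_off, alpha, excess).
    """
    n_on = n_off = 0
    acc_on = acc_off = 0.0
    r = int(math.ceil(r_out))
    for i in range(max(0, i0 - r), min(len(counts), i0 + r + 1)):
        for j in range(max(0, j0 - r), min(len(counts[0]), j0 + r + 1)):
            d = math.hypot(i - i0, j - j0)
            if d <= r_on:
                n_on += counts[i][j]
                acc_on += acceptance[i][j]
            elif r_in <= d <= r_out and not mask[i][j]:
                n_off += counts[i][j]
                acc_off += acceptance[i][j]
    if acc_off == 0.0:
        raise ValueError("ring is fully excluded; enlarge it")
    alpha = acc_on / acc_off
    return n_on, n_off, alpha, n_on - alpha * n_off
```

When a large part of the ring is excluded, the error raised here is the situation in which a ring of adjustable size becomes useful.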

2.4.6. Adaptive Ring Background

2.4.7. Template Background

- γ-like events in the ROI (entering the ON sample), mostly made of hadronic and electronic cosmic rays within the γ-like selection

- true γ-ray events, corresponding to the signal being sought (also entering the ON sample)

- hadron-like events in the ROI (entering the OFF sample)
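With the three event samples listed above, the template estimate reduces to a simple subtraction at the test position. The sketch below assumes that the normalisation α is the ratio of the γ-like acceptance to the hadron-like acceptance at that position; the function and argument names are hypothetical.

```python
def template_excess(n_gamma_like, n_hadron_like,
                    acc_gamma_like, acc_hadron_like):
    """Toy template-background estimate at one test position.

    ON sample  : gamma-like events in the ROI
    OFF sample : hadron-like events in the same ROI
    alpha      : assumed here to be the ratio of the two acceptances
    Returns (excess, alpha).
    """
    if acc_hadron_like <= 0:
        raise ValueError("hadron-like acceptance must be positive")
    alpha = acc_gamma_like / acc_hadron_like
    return n_gamma_like - alpha * n_hadron_like, alpha
```

Because ON and OFF samples come from the same sky position, no OFF region has to be found elsewhere in the field of view, but the two acceptances must both be known accurately.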

2.4.8. Field-of-View Background

2.4.9. Assessment of Systematics

2.4.10. Comparison

2.5. Toward Template Fitting

- a model of isotropic diffuse emission, corresponding to extragalactic diffuse γ rays, unresolved extragalactic sources, and residual (misclassified) cosmic-ray emission.

- a model of the Galactic diffuse emission, which is developed using, in particular, spectroscopic HI and CO surveys as tracers of the interstellar gas, and diffusion codes such as GALPROP [26] (https://galprop.stanford.edu/, accessed on 28 October 2021) to derive the inverse Compton emission

- a source model, comprising the γ-ray source properties (morphology and energy spectrum) within the region of interest. Characteristics of the sources (position, shape, energy spectrum and brightness) can be fixed (for instance to the published values) or kept free, in which case they will be adjusted throughout the log-likelihood maximisation procedure.
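The three components above can be combined into a predicted number of counts per bin, and the free parameters adjusted by maximising a Poisson likelihood. The following toy sketch fits a single free parameter, the source amplitude, by minimising the Cash statistic [17] with a golden-section search. The one-dimensional binning, the fixed diffuse templates and all names are assumptions of this example, not the setup of any particular analysis framework.

```python
import math


def cash(counts, model):
    """Cash statistic C = 2 * sum(mu - n * ln(mu)) for Poisson data."""
    total = 0.0
    for n, mu in zip(counts, model):
        total += mu - (n * math.log(mu) if n > 0 else 0.0)
    return 2.0 * total


def fit_source_amplitude(counts, isotropic, galactic, source,
                         a_max=100.0, n_iter=60):
    """Fit amplitude A in mu = isotropic + galactic + A * source.

    The diffuse templates are kept fixed and must be strictly positive.
    C is convex in A, so a golden-section search on [0, a_max] suffices.
    """
    def f(a):
        model = [i + g + a * s for i, g, s in zip(isotropic, galactic, source)]
        return cash(counts, model)

    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    lo, hi = 0.0, a_max
    c = hi - invphi * (hi - lo)
    d = lo + invphi * (hi - lo)
    fc, fd = f(c), f(d)
    for _ in range(n_iter):
        if fc < fd:
            hi, d, fd = d, c, fc
            c = hi - invphi * (hi - lo)
            fc = f(c)
        else:
            lo, c, fc = c, d, fd
            d = lo + invphi * (hi - lo)
            fd = f(d)
    return 0.5 * (lo + hi)
```

In a real analysis the model would be folded with the instrument response and many parameters (positions, extensions, spectral indices) would be fitted simultaneously with a general-purpose minimiser.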

2.6. Catalogue Pipelines

2.6.1. Requirements

- Selection of good quality data, based on instrumental and atmospheric measurements (stability of instrument trigger rate, cloud monitoring, atmospheric transparency measurement, …).

- Construction of an excluded regions mask, incorporating already-known γ-ray sources, but also new sources and/or possible diffuse contamination within the data set under investigation.

- Computation of acceptance.

- Construction of background-subtracted maps (excess and significance maps) using the appropriate algorithm (adaptive ring background, …).

- Determination of source components and morphologies.
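Because the excluded regions mask must also cover sources discovered in the data set itself, one possible approach (assumed here for illustration, not quoted from the text) is to iterate: estimate the background outside the current mask, flag significant pixels, enlarge the mask around them, and repeat until the mask is stable. The toy sketch below works on a one-dimensional count profile with a flat background and a crude Gaussian significance; the threshold, dilation and function name are hypothetical.

```python
import math


def build_exclusion_mask(counts, threshold=5.0, dilation=1, max_iter=20):
    """Iteratively build an exclusion mask for a 1D count profile.

    Toy assumptions: flat background estimated as the mean of unmasked
    pixels, significance approximated by (n - b) / sqrt(b).
    Returns a list of booleans, True where a pixel is excluded.
    """
    n = len(counts)
    mask = [False] * n
    for _ in range(max_iter):
        unmasked = [c for c, m in zip(counts, mask) if not m]
        if not unmasked:
            break
        bkg = sum(unmasked) / len(unmasked)
        if bkg <= 0:
            break
        hot = [(c - bkg) / math.sqrt(bkg) > threshold for c in counts]
        # Keep previously masked pixels and dilate around new hot pixels
        new_mask = [mask[i] or any(hot[max(0, i - dilation):i + dilation + 1])
                    for i in range(n)]
        if new_mask == mask:
            break
        mask = new_mask
    return mask
```

In this toy setup a weak source next to a bright one is only flagged in the second iteration, once the bright source no longer inflates the background estimate.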

2.6.2. Completeness, Angular Resolution and Horizon

- For a homogeneous population of sources of the same luminosity and size, the maximum detection distance (horizon) scales as . For point-like sources, it scales as . The horizon of a given survey therefore depends on the type of sources that one considers. It is usually defined for point-like sources, but can be reduced substantially for extended sources.

- The reduction of apparent size with increasing source distance d partially compensates for the decrease in flux. Indeed, the minimum detectable luminosity scales as for extended sources vs. for point-like ones. The survey depth therefore depends on the source class considered. For source classes whose extent varies with age (for instance, expanding shell-type supernova remnants), better flux limits can be obtained at early ages, when the source is still rather compact, whereas the peak of the VHE emission can occur at later stages.

- The horizon scales as for extended sources and for point-like ones, and is currently still limited to a rather small fraction of the Milky Way. It is usually more effective to increase the spatial coverage of a survey (if possible) to collect more sources, rather than to increase its depth.
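The kind of scaling discussed in the list above can be motivated with a back-of-the-envelope derivation. The sketch below is not taken from the text: it assumes a background-limited survey whose point-source flux sensitivity improves as $t^{-1/2}$ with observation time $t$, a source of luminosity $L$ and fixed physical radius $R$ at distance $d$, and a sensitivity that degrades in proportion to the apparent angular size $\theta \simeq R/d$ once the source is larger than the point spread function of width $\sigma_{\rm PSF}$.

$$
F = \frac{L}{4\pi d^{2}}, \qquad
F_{\min}(t,\theta) \propto t^{-1/2}\,\frac{\sqrt{\theta^{2}+\sigma_{\rm PSF}^{2}}}{\sigma_{\rm PSF}}
$$

$$
\text{point-like } (\theta \ll \sigma_{\rm PSF}): \quad
L_{\min} \propto d^{2}\,t^{-1/2}
\;\Rightarrow\;
d_{\max} \propto L^{1/2}\,t^{1/4}
$$

$$
\text{extended } (\theta \gg \sigma_{\rm PSF}): \quad
L_{\min} \propto d^{2}\,t^{-1/2}\,\frac{R}{d\,\sigma_{\rm PSF}} \propto d\,t^{-1/2}
\;\Rightarrow\;
d_{\max} \propto \frac{L\,t^{1/2}}{R}
$$

Under these assumptions the horizon grows only slowly with observation time, which is consistent with the remark that widening the spatial coverage is usually more effective than increasing the depth.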

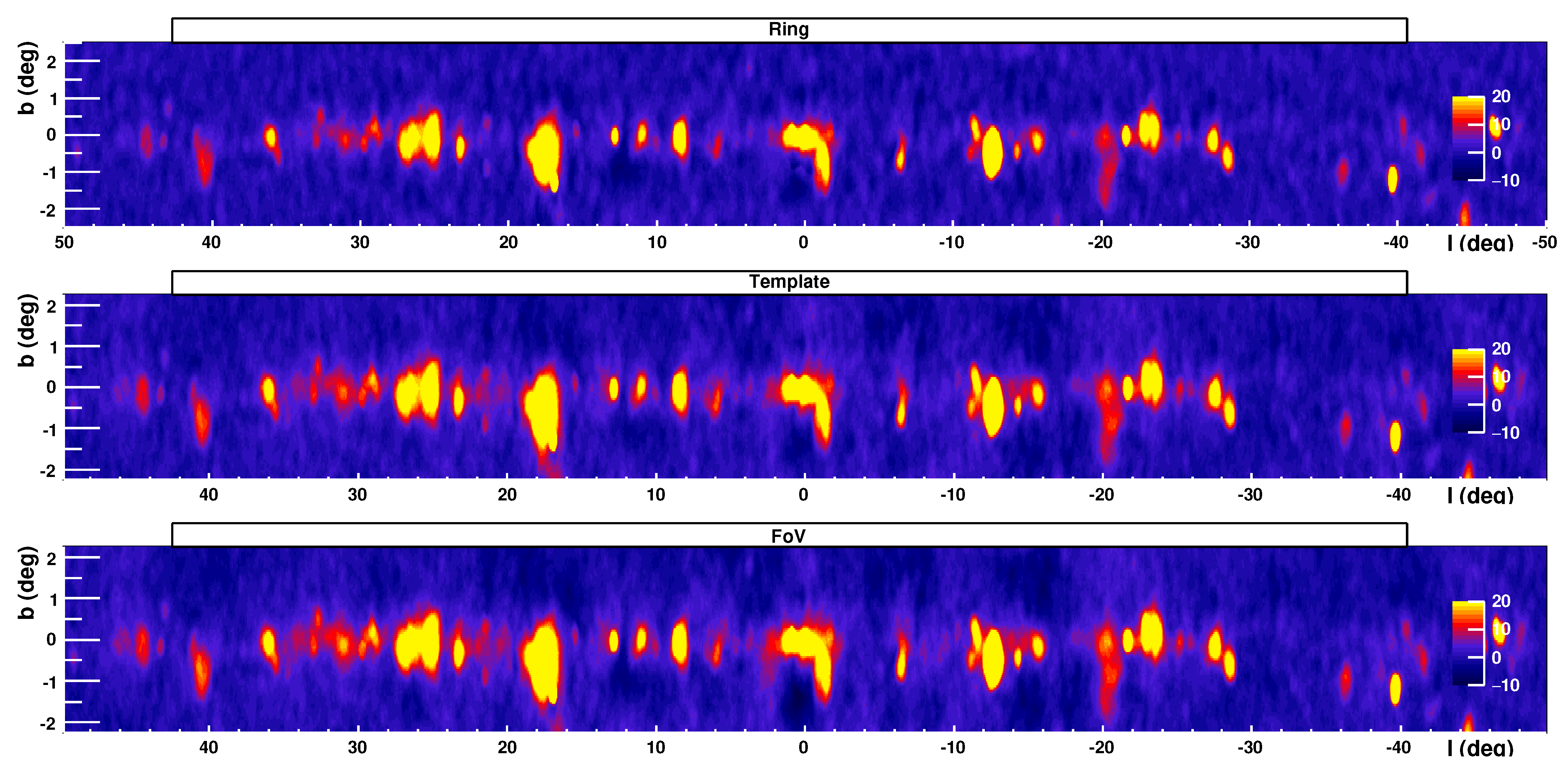

3. A New View on the Milky Way

3.1. Existing IACT Surveys

3.1.1. Early Times

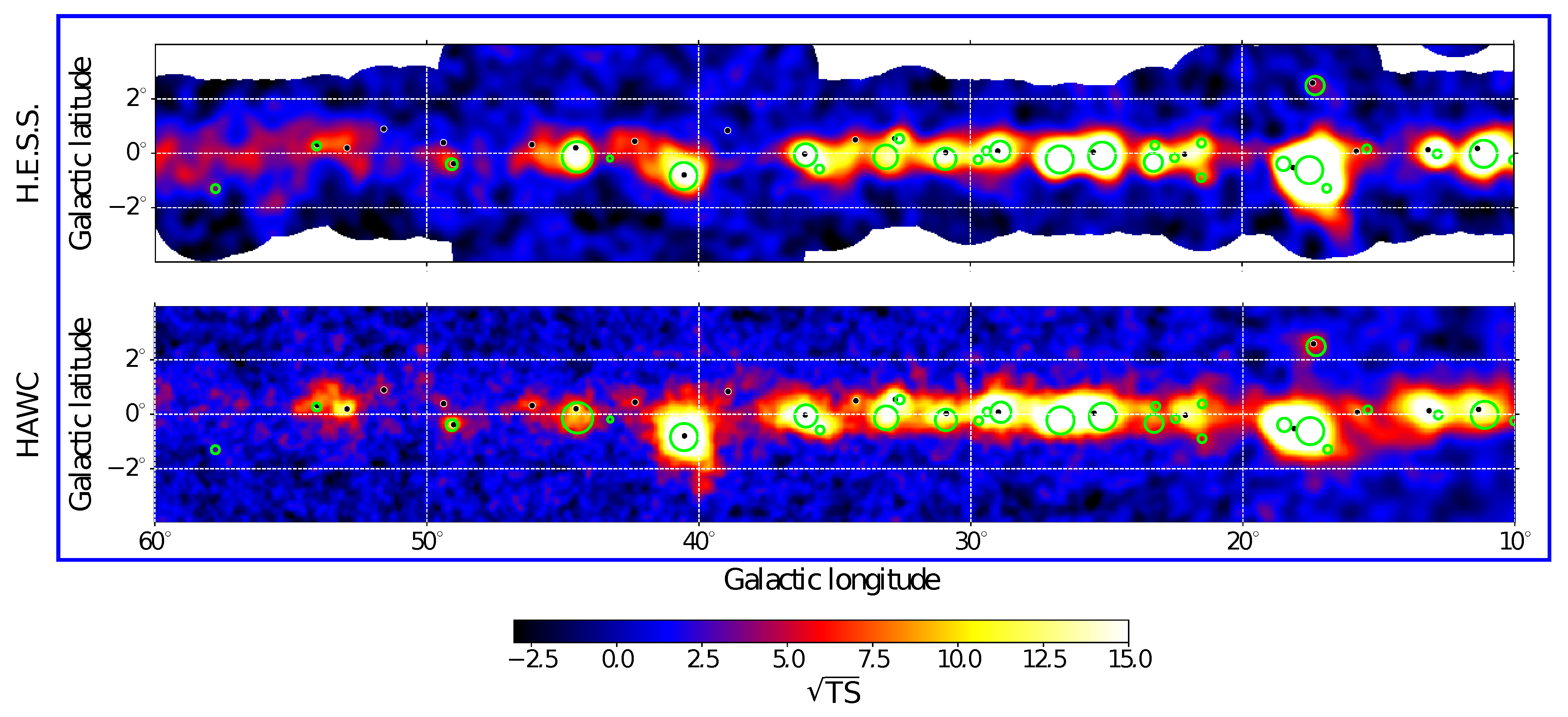

3.1.2. Galactic Plane Surveys

3.1.3. Particular Regions

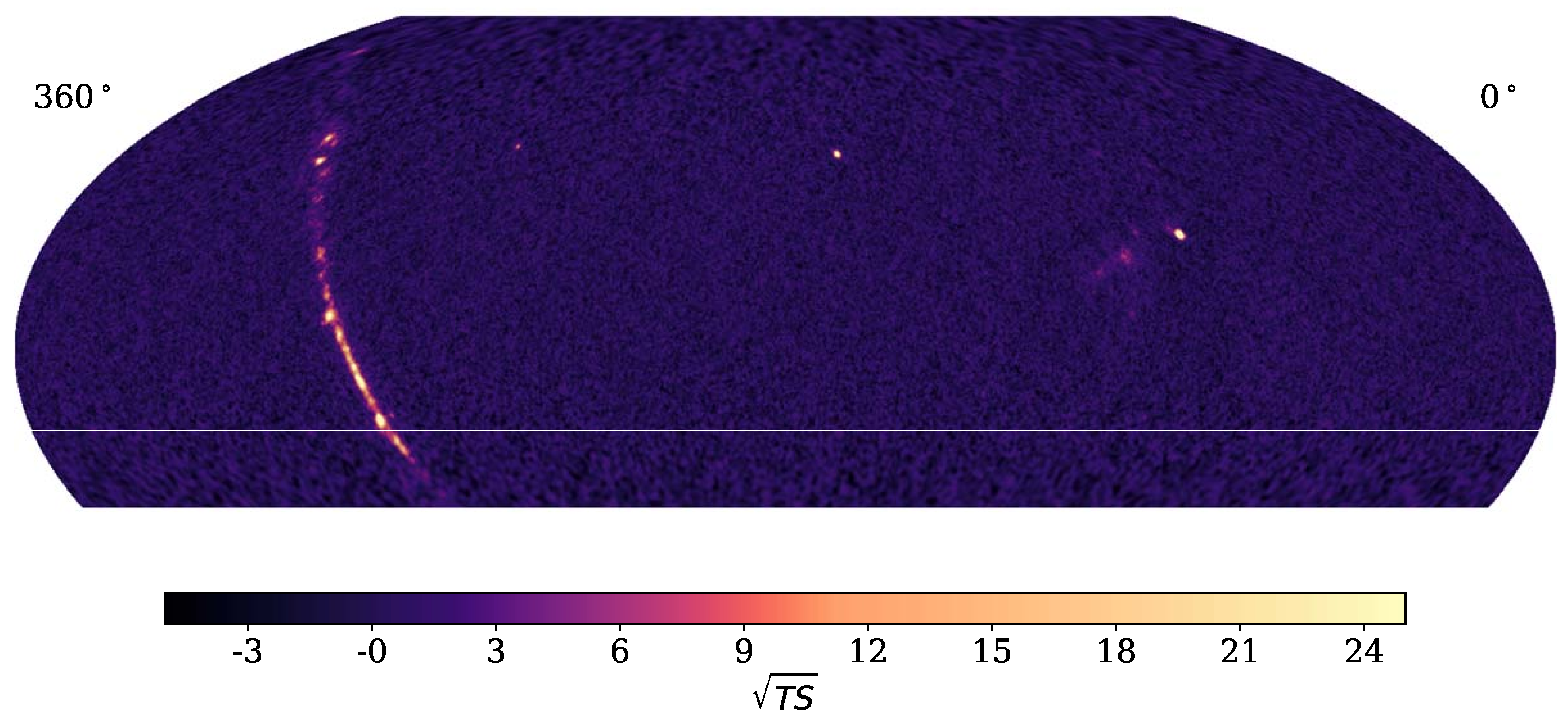

3.2. Results from Particle Array Survey Instruments

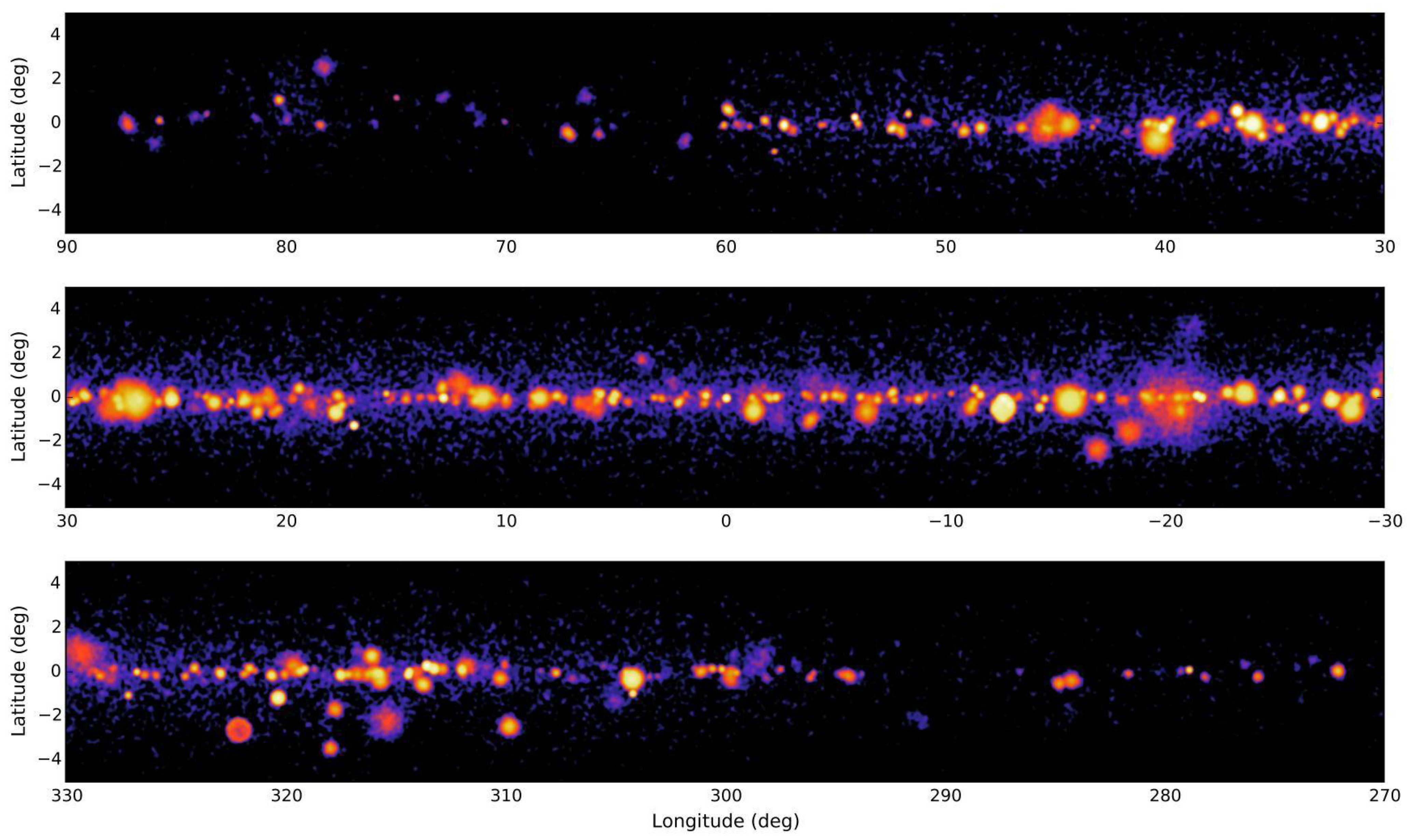

3.3. Meta-Catalogues and Population of VHE Sources

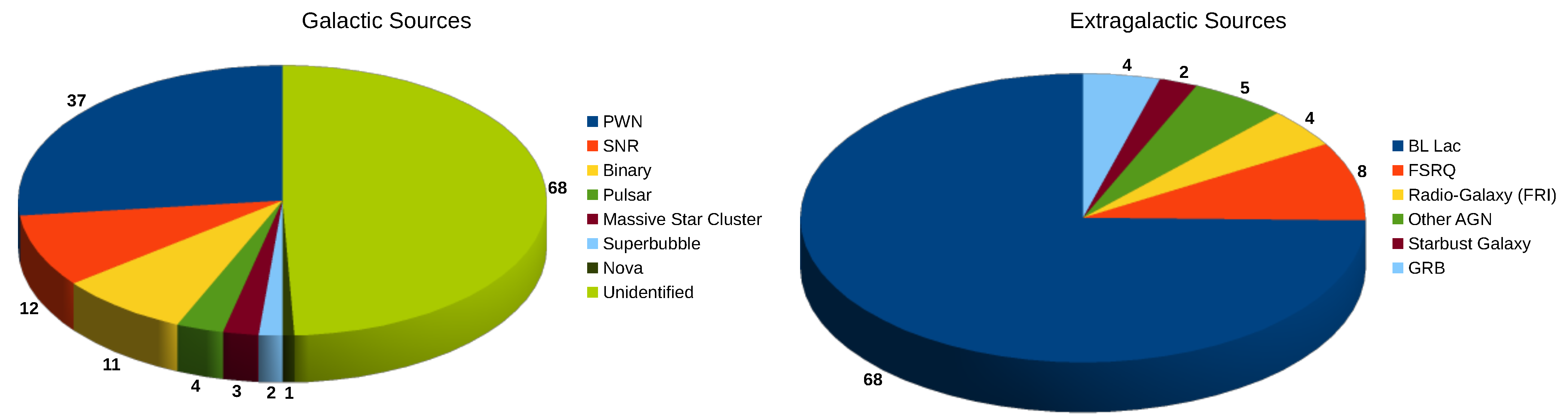

3.4. Population of VHE Sources

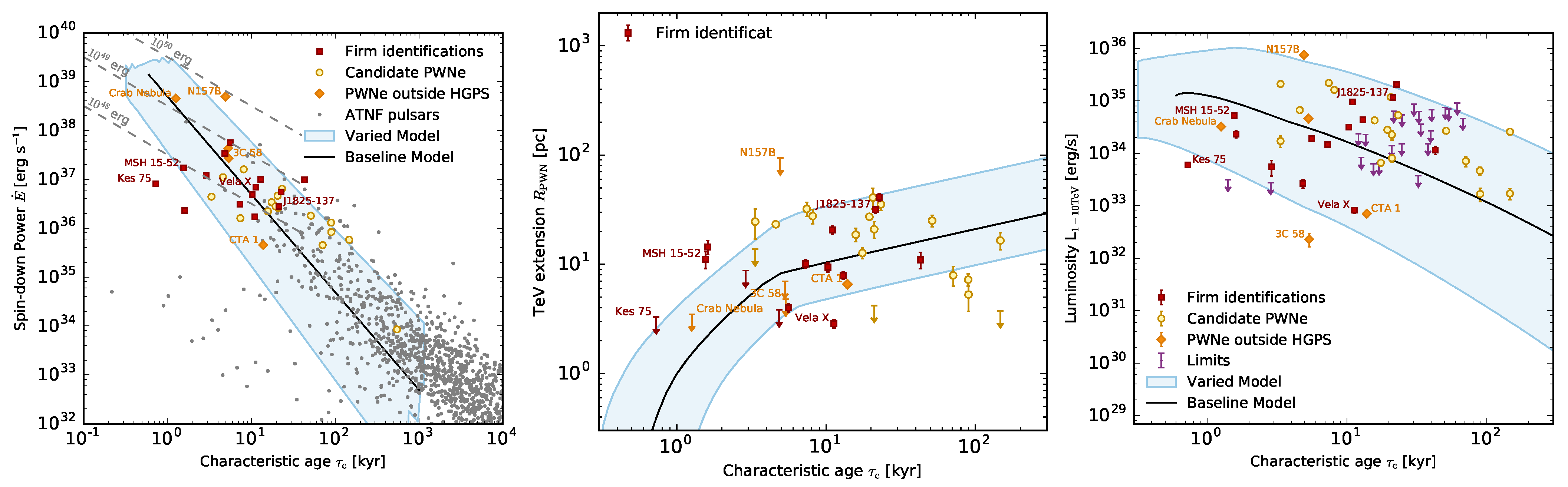

3.4.1. Population of Pulsar Wind Nebulae

3.4.2. Supernova Remnant Populations

4. Perspectives and Outlook

4.1. CTA, the Next Generation IACT

- An extragalactic survey, covering 1/4 of the sky with a sensitivity of ∼ Crab in 1000 h of observations. This will provide an unbiased sample of active galactic nuclei and other possible extragalactic sources, and a snapshot of their activity (since AGNs are intrinsically variable on almost all timescales).

- A deep galactic plane survey, reaching ∼ Crab sensitivity in the inner regions (and Cygnus region) and ∼ in the entire plane region. This will provide a horizon of ∼ (point-like), thus covering a large fraction of the Galactic sources.

- A deep survey of the LMC region, aiming at an excellent angular resolution to resolve structures down to ∼, in order to be able to resolve individual objects and map the diffuse emission.

- The background systematics will most likely be the limiting factor for the sensitivity achievable, most notably for the (very) extended sources. Given the foreseen increase of the background rate by ∼, the state-of-the-art uncertainties in background estimation of 1–2% will need to be substantially improved by refining the acceptance models. Changes in the array layout (telescopes under maintenance, …), inhomogeneities of camera response and/or atmospheric effects (Section 2.3) should be studied carefully and, whenever possible, incorporated in the model. In this regard, the simulated acceptance being currently developed might be a promising approach. The mitigation techniques recently developed (Section 2.4.9) can certainly help, but they tend to reduce the sensitivity of the array. Further work is clearly needed to take into account the various sources of systematics in the calculation of the acceptance.

- With the detection of ∼ sources in the same field of view, up to several hundreds of sources, source confusion and overlap are expected to become a major issue, especially in the context of the unknown shapes of the sources and the unknown level of large scale diffuse emission. Some preliminary estimates performed with an extrapolation of the current – source distribution indicate a source confusion lower limit on the order of ∼ in the core CTA energy range [53]. Template fitting and 3D modelling of the sources (Section 2.5) can help with the separation of superimposed sources with different spectral characteristics.

4.2. Next Generation Particle Array Survey Instruments

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AGN | active galactic nucleus |
| CTA | Cherenkov Telescope Array |
| EBL | extragalactic background light |
| FoV | field of view |
| HGPS | H.E.S.S. Galactic Plane Survey |
| HE | high energy |
| IACT | imaging atmospheric Cherenkov telescope |
| LMC | Large Magellanic Cloud |
| LST | large-sized telescope |
| MST | medium-sized telescope |
| NSB | night sky background |
| PDF | probability density function |
| PWN | pulsar wind nebula |
| RA | right ascension |
| ROI | region of interest |
| RPC | resistive plate chamber |
| SNR | supernova remnant |
| SST | small-sized telescope |
| VHE | very high energy |

References

1. Hess, V.F. Über Beobachtungen der durchdringenden Strahlung bei sieben Freiballonfahrten. Z. Phys. 1912, 13, 1084.

2. Cherenkov, P.A. Visible emission of clean liquids by action of γ radiation. Dokl. Akad. Nauk SSSR 1934, 2, 451.

3. Blackett, P.M.S. A possible contribution to the night sky from the Cerenkov radiation emitted by cosmic rays. In The Emission Spectra of the Night Sky and Aurorae; The Physical Society: London, UK, 1948; p. 34.

4. Galbraith, W.; Jelley, J.V. Light Pulses from the Night Sky associated with Cosmic Rays. Nature 1953, 171, 349–350.

5. Weekes, T.C.; Cawley, M.F.; Fegan, D.J.; Gibbs, K.G.; Hillas, A.M.; Kwok, P.W.; Lamb, R.C.; Lewis, D.A.; Macomb, D.J.; Porter, N.A.; et al. Observation of TeV Gamma Rays from the Crab Nebula Using the Atmospheric Cerenkov Imaging Technique. Astrophys. J. 1989, 342, 379.

6. Guy, J. Premiers Résultats de L’expérience HESS et étude du Potentiel de Détection de Matière Noire Supersymétrique. Ph.D. Thesis, Université Pierre et Marie Curie-Paris VI, Paris, France, 2003.

7. de Naurois, M.; Rolland, L. A high performance likelihood reconstruction of γ-rays for imaging atmospheric Cherenkov telescopes. Astropart. Phys. 2009, 32, 231–252.

8. Szanecki, M.; Sobczyńska, D.; Niedźwiecki, A.; Sitarek, J.; Bednarek, W. Monte Carlo simulations of alternative sky observation modes with the Cherenkov Telescope Array. Astropart. Phys. 2015, 67, 33–46.

9. D’Amico, G.; Terzić, T.; Strišković, J.; Doro, M.; Strzys, M.; van Scherpenberg, J. Signal estimation in on/off measurements including event-by-event variables. Phys. Rev. D 2021, 103, 123001.

10. Mohrmann, L.; Specovius, A.; Tiziani, D.; Funk, S.; Malyshev, D.; Nakashima, K.; van Eldik, C. Validation of open-source science tools and background model construction in γ-ray astronomy. Astron. Astrophys. 2019, 632, A72.

11. De Naurois, M. Very High Energy Astronomy from H.E.S.S. to CTA. Opening of a New Astronomical Window on the Non-Thermal Universe. Habilitation Thesis, Université Pierre et Marie Curie-Paris VI, Paris, France, 2012.

12. Klepser, S. A generalized likelihood ratio test statistic for Cherenkov telescope data. Astropart. Phys. 2012, 36, 64–76.

13. Holler, M.; Lenain, J.P.; de Naurois, M.; Rauth, R.; Sanchez, D.A. A run-wise simulation and analysis framework for Imaging Atmospheric Cherenkov Telescope arrays. Astropart. Phys. 2020, 123, 102491.

14. Holler, M.; Lenain, J.-P.; de Naurois, M.; Rauth, R.; Sanchez, D.A. Run-Wise Simulations for Analyses with Open-Source Tools in IACT Gamma-Ray Astronomy. Astropart. Phys. 2020, 123, 102491.

15. Berge, D.; Funk, S.; Hinton, J. Background modelling in very-high-energy γ-ray astronomy. Astron. Astrophys. 2007, 466, 1219–1229.

16. Li, T.P.; Ma, Y.Q. Analysis methods for results in gamma-ray astronomy. Astrophys. J. 1983, 272, 317–324.

17. Cash, W. Parameter estimation in astronomy through application of the likelihood ratio. Astrophys. J. 1979, 228, 939–947.

18. Abdalla, H. et al. [H.E.S.S. Collaboration] The H.E.S.S. Galactic plane survey. Astron. Astrophys. 2018, 612, A1.

19. Abramowski, A. et al. [H.E.S.S. Collaboration] Probing the extent of the non-thermal emission from the Vela X region at TeV energies with H.E.S.S. Astron. Astrophys. 2012, 548, A38.

20. Rowell, G.P. A new template background estimate for source searching in TeV gamma-ray astronomy. Astron. Astrophys. 2003, 410, 389–396.

21. Fernandes, M.V.; Horns, D.; Kosack, K.; Raue, M.; Rowell, G. A new method of reconstructing VHE γ-ray spectra: The Template Background Spectrum. Astron. Astrophys. 2014, 568, A117.

22. Abdalla, H. et al. [H.E.S.S. Collaboration] [HAWC Collaboration] TeV Emission of Galactic Plane Sources with HAWC and H.E.S.S. Astrophys. J. 2021, 917, 6.

23. Holler, M. Assessing Background Systematics in an Analysis Field of View. H.E.S.S. Internal Note 2001, 21.

24. Spengler, G. Significance in gamma ray astronomy with systematic errors. Astropart. Phys. 2015, 67, 70–74.

25. Dickinson, H.; Conrad, J. Handling systematic uncertainties and combined source analyses for Atmospheric Cherenkov Telescopes. Astropart. Phys. 2013, 41, 17–30.

26. Strong, A.W.; Moskalenko, I.V. Propagation of Cosmic-Ray Nucleons in the Galaxy. Astrophys. J. 1998, 509, 212–228.

27. Vovk, I.; Strzys, M.; Fruck, C. Spatial likelihood analysis for MAGIC telescope data. From instrument response modelling to spectral extraction. Astron. Astrophys. 2018, 619, A7.

28. Knödlseder, J.; Mayer, M.; Deil, C.; Cayrou, J.B.; Owen, E.; Kelley-Hoskins, N.; Lu, C.C.; Buehler, R.; Forest, F.; Louge, T.; et al. GammaLib and ctools. A software framework for the analysis of astronomical gamma-ray data. Astron. Astrophys. 2016, 593, A1.

29. Aharonian, F.A. et al. [H.E.S.S. Collaboration] A search for TeV gamma-ray emission from SNRs, pulsars and unidentified GeV sources in the Galactic plane in the longitude range between −2 deg and 85 deg. Astron. Astrophys. 2002, 395, 803–811.

30. Aharonian, F. et al. [H.E.S.S. Collaboration] A New Population of Very High Energy Gamma-Ray Sources in the Milky Way. Science 2005, 307, 1938–1942.

31. Aharonian, F. et al. [H.E.S.S. Collaboration] The H.E.S.S. Survey of the Inner Galaxy in Very High Energy Gamma Rays. Astrophys. J. 2006, 636, 777–797.

32. Abramowski, A. et al. [H.E.S.S. Collaboration] Diffuse Galactic gamma-ray emission with H.E.S.S. Phys. Rev. D 2014, 90, 122007.

33. Steppa, C.; Egberts, K. Modelling the Galactic very-high-energy γ-ray source population. Astron. Astrophys. 2020, 643, A137.

34. Abramowski, A. et al. [H.E.S.S. Collaboration] The exceptionally powerful TeV γ-ray emitters in the Large Magellanic Cloud. Science 2015, 347, 406–412.

35. Abeysekara, A.U.; Archer, A.; Aune, T.; Benbow, W.; Bird, R.; Brose, R.; Buchovecky, M.; Bugaev, V.; Cui, W.; Daniel, M.K.; et al. A Very High Energy γ-Ray Survey toward the Cygnus Region of the Galaxy. Astrophys. J. 2018, 861, 134.

36. Bacci, C. et al. [ARGO-YBJ Collaboration] Results from the ARGO-YBJ test experiment. Astropart. Phys. 2002, 17, 151–165.

37. Amenomori, M. et al. [Tibet AS γ Collaboration] First Detection of Photons with Energy beyond 100 TeV from an Astrophysical Source. Phys. Rev. Lett. 2019, 123, 051101.

38. Atkins, R.; Benbow, W.; Berley, D.; Blaufuss, E.; Bussons, J.; Coyne, D.G.; Delay, R.S.; DeYoung, T.; Dingus, B.L.; Dorfan, D.E.; et al. Observation of TeV Gamma Rays from the Crab Nebula with Milagro Using a New Background Rejection Technique. Astrophys. J. 2003, 595, 803–811.

39. Abeysekara, A.U.; Albert, A.; Alfaro, R.; Alvarez, C.; Álvarez, J.D.; Arceo, R.; Arteaga-Velázquez, J.C.; Rojas, D.A.; Solares, H.A.; Barber, A.S.; et al. Daily Monitoring of TeV Gamma-Ray Emission from Mrk 421, Mrk 501, and the Crab Nebula with HAWC. Astrophys. J. 2017, 841, 100.

40. Cao, Z.; Aharonian, F.A.; An, Q.; Bai, L.X.; Bai, Y.X.; Bao, Y.W.; Bastieri, D.; Bi, X.J.; Bi, Y.J.; Cai, H.; et al. Ultrahigh-energy photons up to 1.4 petaelectronvolts from 12 γ-ray Galactic sources. Nature 2021, 594, 33–36.

41. Younk, P.W. et al. [HAWC Collaboration] A high-level analysis framework for HAWC. In Proceedings of the 34th International Cosmic Ray Conference (ICRC2015), The Hague, The Netherlands, 30 July–6 August 2015; Volume 34, p. 948.

42. Atkins, R.; Benbow, W.; Berley, D.; Blaufuss, E.; Bussons, J.; Coyne, D.G.; DeYoung, T.; Dingus, B.L.; Dorfan, D.E.; Ellsworth, R.W.; et al. TeV Gamma-Ray Survey of the Northern Hemisphere Sky Using the Milagro Observatory. Astrophys. J. 2004, 608, 680–685.

43. Abeysekara, A.U. et al. [HAWC Collaboration] Search for TeV Gamma-Ray Emission from Point-like Sources in the Inner Galactic Plane with a Partial Configuration of the HAWC Observatory. Astrophys. J. 2016, 817, 3.

44. Abeysekara, A.U.; Albert, A.; Alfaro, R.; Alvarez, C.; Álvarez, J.D.; Arceo, R.; Arteaga-Velázquez, J.C.; Solares, H.A.; Barber, A.S.; Baughman, B.; et al. The 2HWC HAWC Observatory Gamma-Ray Catalog. Astrophys. J. 2017, 843, 40.

45. Albert, A. et al. [HAWC Collaboration] 3HWC: The Third HAWC Catalog of Very-high-energy Gamma-Ray Sources. Astrophys. J. 2020, 905, 76.

46. Abeysekara, A.U.; Albert, A.; Alfaro, R.; Alvarez, C.; Álvarez, J.D.; Arceo, R.; Arteaga-Velázquez, J.C.; Rojas, D.A.; Solares, H.A.; Barber, A.S.; et al. Extended gamma-ray sources around pulsars constrain the origin of the positron flux at Earth. Science 2017, 358, 911–914.

47. Patel, S.; Maier, G.; Kaaret, P. VTSCat—The VERITAS Catalog of Gamma Ray Observations. arXiv 2021, arXiv:2108.06424.

48. Wakely, S.P.; Horan, D. TeVCat: An online catalog for Very High Energy Gamma-Ray Astronomy. Int. Cosm. Ray Conf. 2008, 3, 1341–1344.

49. Abdalla, H. et al. [H.E.S.S. Collaboration] The population of TeV pulsar wind nebulae in the H.E.S.S. Galactic Plane Survey. Astron. Astrophys. 2018, 612, A2.

50. Abdalla, H. et al. [H.E.S.S. Collaboration] Population study of Galactic supernova remnants at very high γ-ray energies with H.E.S.S. Astron. Astrophys. 2018, 612, A3.

51. Acharya, B.S. et al. [CTA Consortium] Introducing the CTA concept. Astropart. Phys. 2013, 43, 3–18.

52. Bernlöhr, K. et al. [CTA Consortium] Monte Carlo design studies for the Cherenkov Telescope Array. Astropart. Phys. 2013, 43, 171–188.

53. Dubus, G. et al. [CTA Consortium] Surveys with the Cherenkov Telescope Array. Astropart. Phys. 2013, 43, 317–330.

54. Chaves, R.; Mukherjee, R.; Ong, R.A. KSP: Galactic Plane Survey. In Science with the Cherenkov Telescope Array; World Scientific Publishing Company: Singapore, 2019; Chapter 6; pp. 101–124.

55. Marandon, V.; Jardin-Blicq, A.; Schoorlemmer, H. Latest news from the HAWC outrigger array. In Proceedings of the 36th International Cosmic Ray Conference (ICRC2019), Madison, WI, USA, 24 July–1 August 2019; Volume 36, p. 736.

56. Joshi, V.; Schoorlemmer, H. Air shower reconstruction using HAWC and the Outrigger array. In Proceedings of the 36th International Cosmic Ray Conference (ICRC2019), Madison, WI, USA, 24 July–1 August 2019; Volume 36, p. 707.

57. Albert, A.; Alfaro, R.; Ashkar, H.; Alvarez, C.; Alvarez, J.; Arteaga-Velázquez, J.C.; Zhou, H. Science Case for a Wide Field-of-View Very-High-Energy Gamma-Ray Observatory in the Southern Hemisphere. arXiv 2019, arXiv:1902.08429.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

de Naurois, M. The Making of Catalogues of Very-High-Energy γ-ray Sources. Universe 2021, 7, 421. https://doi.org/10.3390/universe7110421