The ATLAS Fast TracKer—Architecture, Status and High-Level Data Quality Monitoring Framework

Abstract

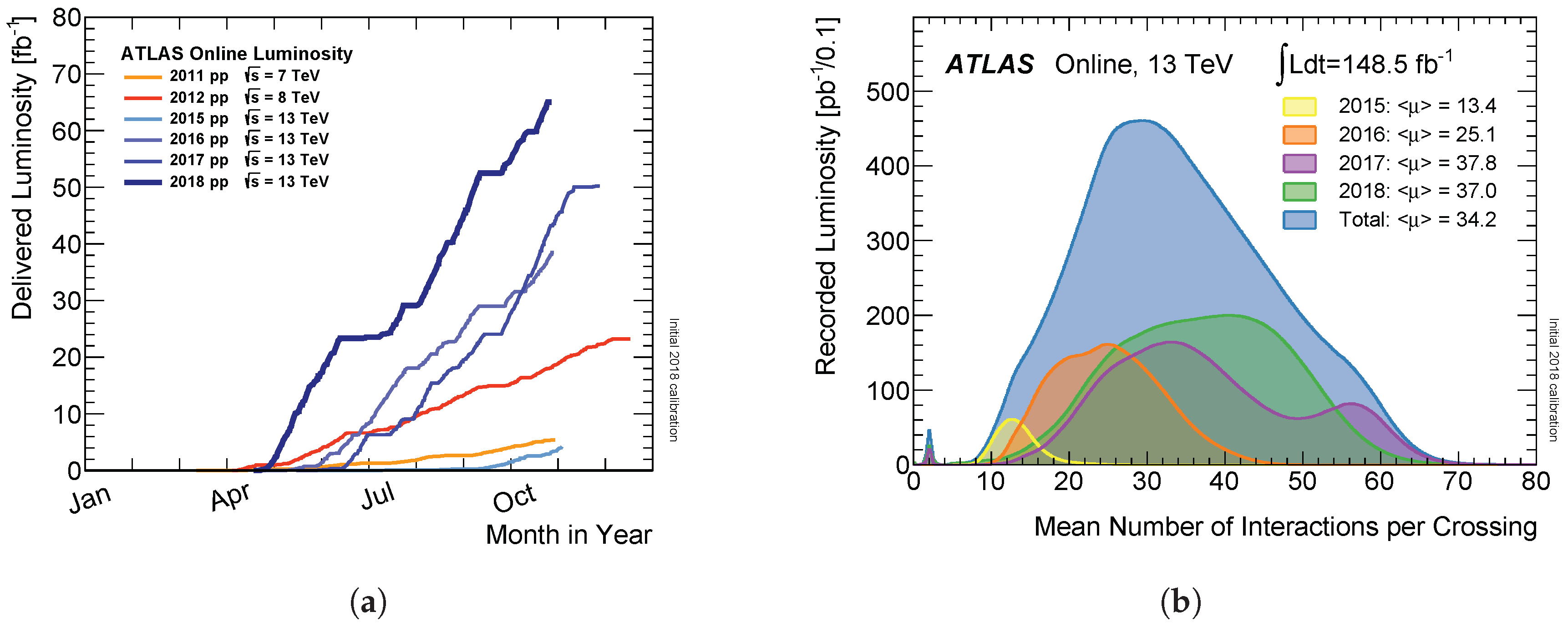

1. Introduction

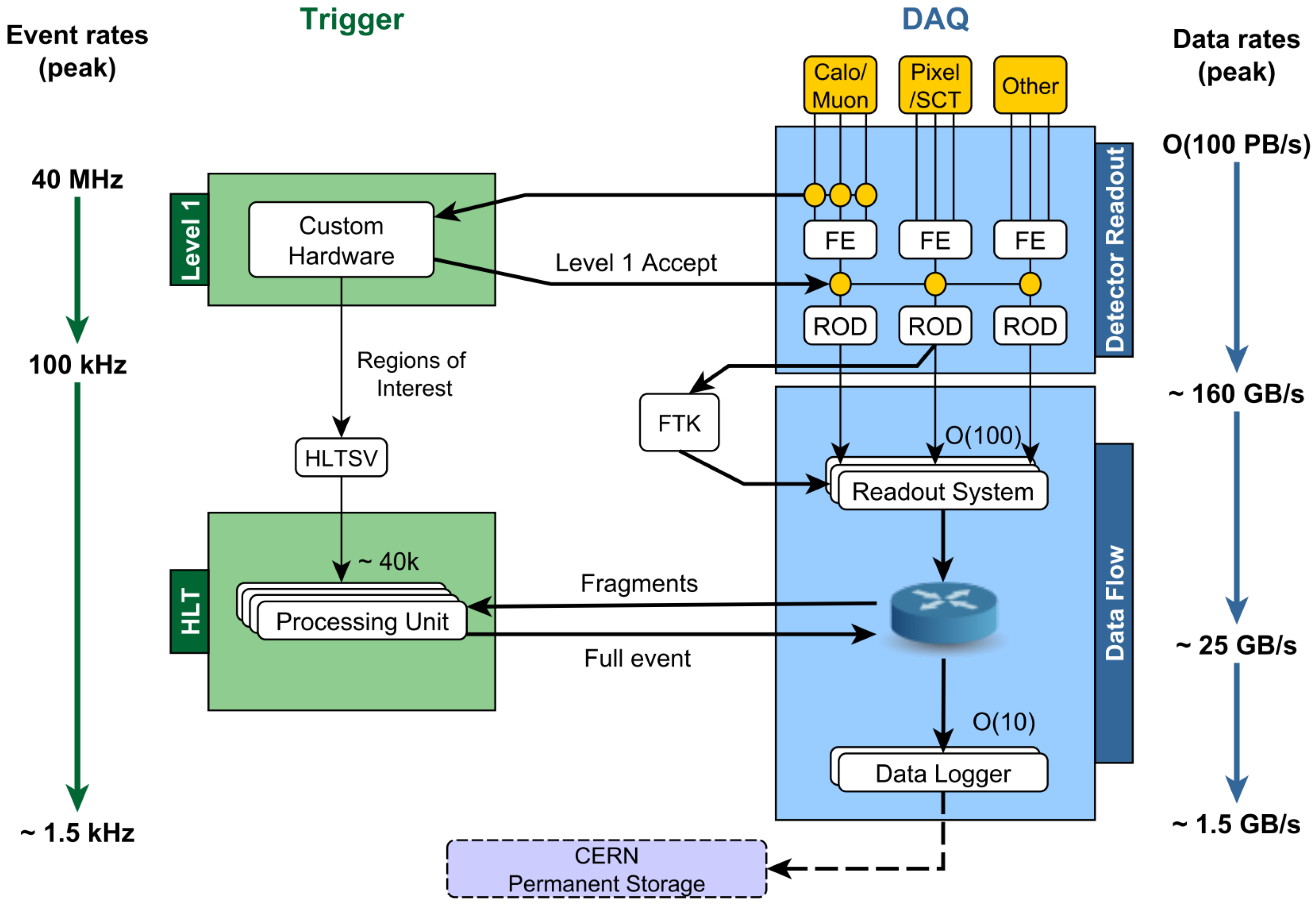

2. The ATLAS Fast Tracker

2.1. Operational Principle

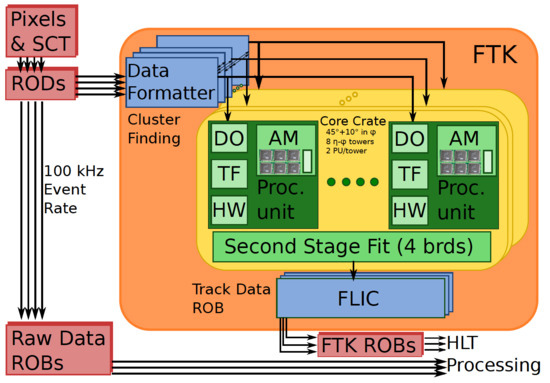

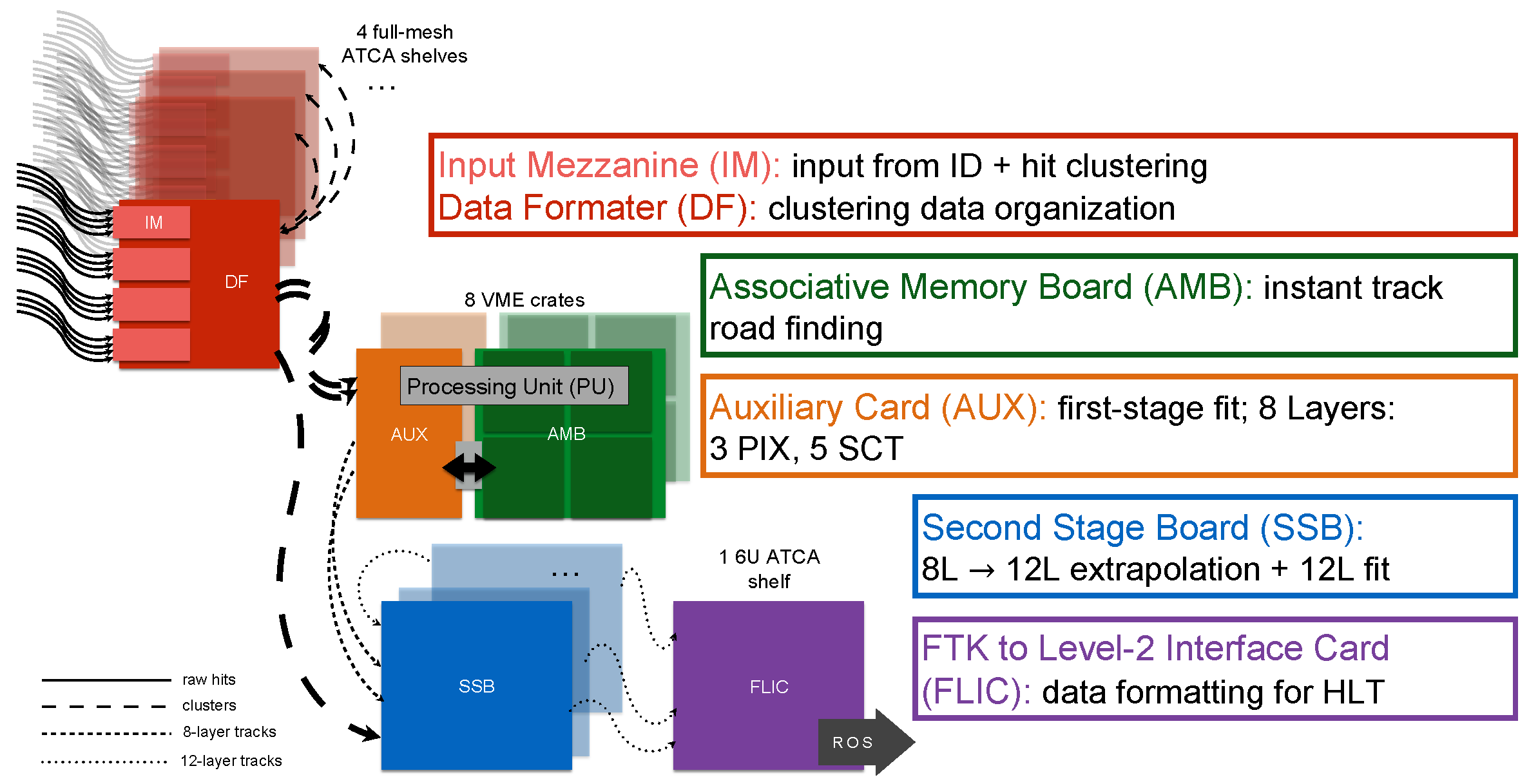

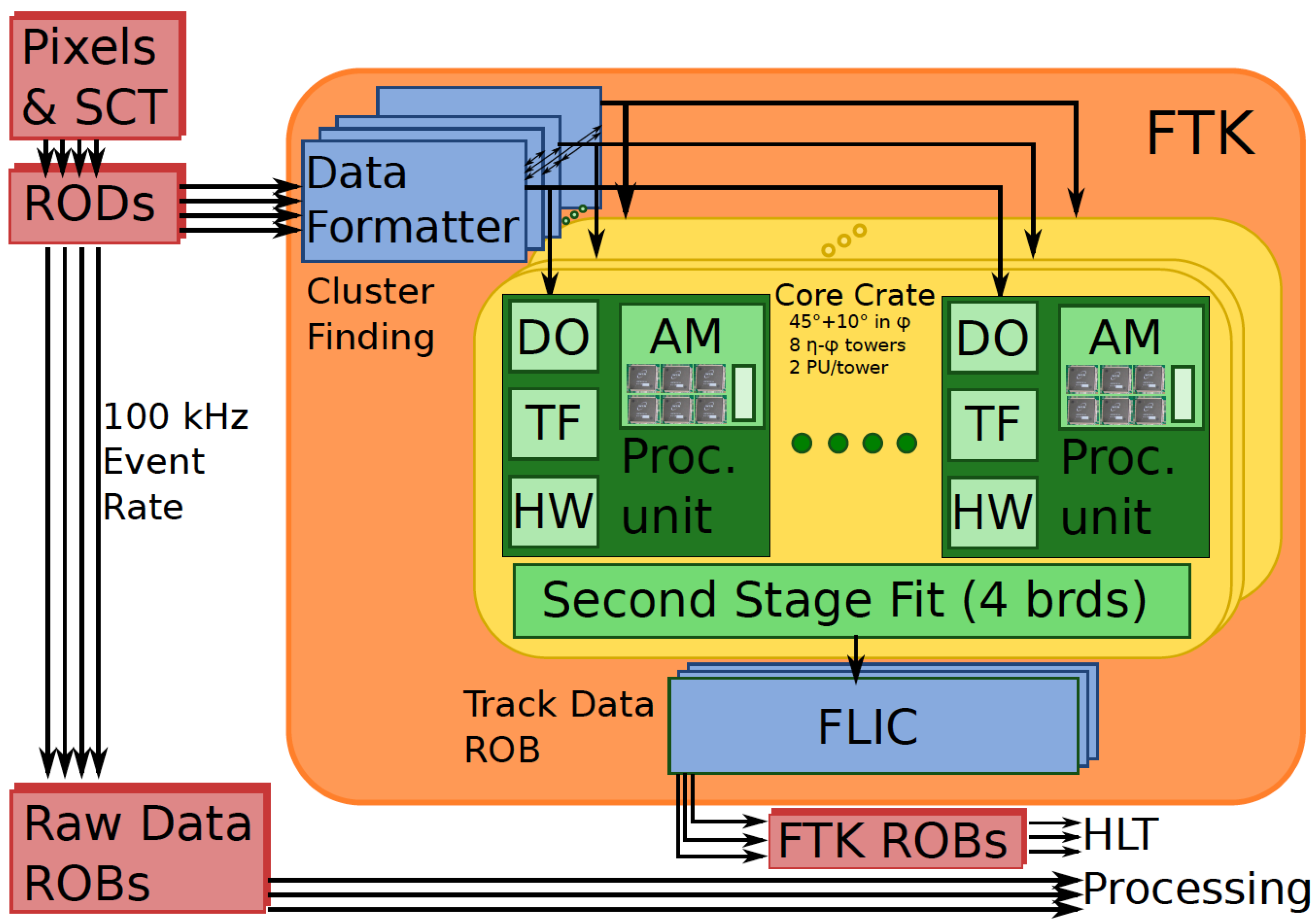

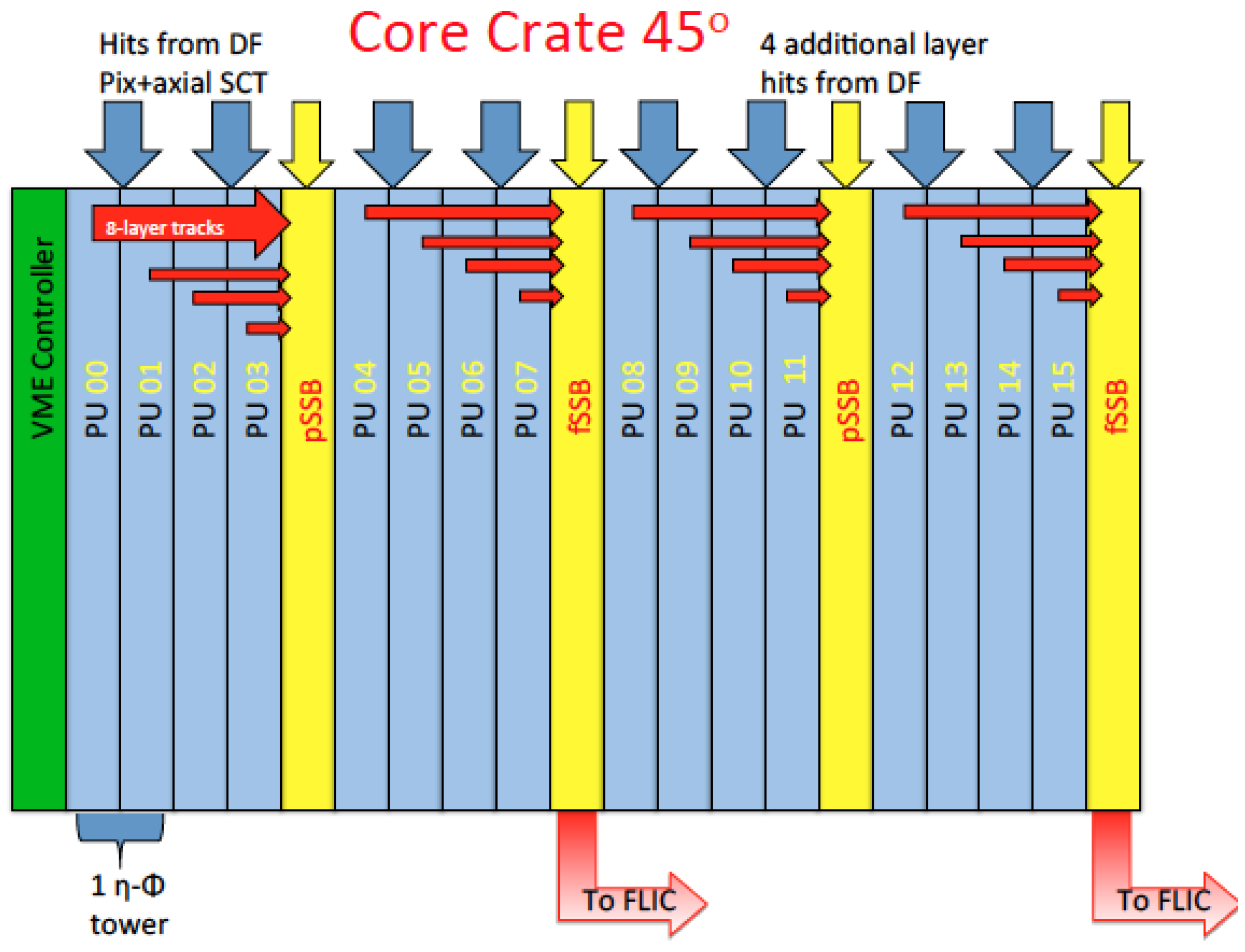

2.2. The FTK Architecture

2.3. FTK Infrastructure and Status

3. High-Level Data Quality Monitoring Framework

4. Conclusions

Funding

Conflicts of Interest


© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Marantis, A., on Behalf of the ATLAS Collaboration. The ATLAS Fast TracKer—Architecture, Status and High-Level Data Quality Monitoring Framework. Universe 2019, 5, 32. https://doi.org/10.3390/universe5010032
