Information Science: Its Past, Present and Future

Abstract

Early in its history, information science comprised three classical types: computer and information science, library and information science, and telecommunications and information science. As the concept of information infiltrated various fields, a community of around 200 information disciplines formed around the sub-fields of information theory, informatics and information science. For this large community, this paper proposes a systematization, reviews two trends of thought, and offers some perspectives and suggestions.

1. Introduction

We live in an era in which information is the sign of our time, so a science of information is bound to attract many people. Yet most people are unlikely to be able to explain clearly what information science is. A non-professional might think it is the study of electronic information theory or computer science, whereas those with a somewhat deeper understanding will say it is a comprehensive discipline dealing with all the information in computer science, telecommunications, electronic science, genetic engineering and so on. Opinions vary, and no conclusion can be reached at present.

As professionals in information science, do we know exactly what it is? Not necessarily! Among the many organizations and institutions for information research set up around the world in recent years, the FIS (Foundation of Information Science) Group has had the greatest impact. Since its inaugural meeting in Madrid in 1994, convened by Michael Conrad and Pedro C. Marijuán, there have been heated debates over what the foundation of information science consists of: three assemblies followed, and thousands of BBS (bulletin board system) postings and mailing-list messages were exchanged, but no consensus has been reached. A systematic review and comprehensive analysis of the concept, history, present and future of information science may therefore be of real use for its continuing growth and development.

2. The Concept of Information

What is information? This baffling question has been discussed by many kinds of people for more than half a century. During 2003–2005, many definitions were offered within the FIS forum, and a thorough review was posted by Marcin J. Schroeder [1]. More recently, an in-depth and systematic investigation was undertaken and presented by Mark Burgin [2,3]. Here, therefore, we only examine some of the concept's early origins and its basic meaning from an etymological viewpoint, which may offer some guidance for our later endeavors.

2.1. Some Ancient Human Practices and Basic Meaning of “Information” in Early English

The most primitive methods of early human communication were probably tying knots and drawing pictures. Starting around 3000 BC, the latter evolved into four different writing systems: Egyptian hieroglyphics, Mesopotamian cuneiform, Maya script in Central America, and Chinese oracle-bone script.

To meet the demands of overseas trade, around 1000 BC the inhabitants of the region between the Mediterranean and the Euphrates invented Phoenician writing, based on Egyptian hieroglyphics, and it spread far and wide. To the east, the Hebrew and Arabic scripts were formed; to the west, the Hellenic (Greek) script came forth. In the Indo-European family, the writing of most language groups, such as Latin, French and English, is closely related to the Hellenic script in origin.

Latin had the verb informare, meaning to inform, whose noun form was informatio [4]. The word later entered English via Old French.

In A Dictionary of the Older Scottish Tongue from the Twelfth Century to the End of the Seventeenth, the lexicographer William A. Craigie records that after the word was introduced into English from Old French, its early spelling was not information: at least six other spellings entered English at different times, namely informatiou, informacioun, informatyoun, informacion, informacyon, and informatiod [5]. The spelling information began to stabilize in the seventeenth century and became the dominant English form.

Most authoritative English dictionaries today agree that the word reached its final form along the line “to form-inform-information”. Its original meanings are generally given as fact, news and knowledge, and many similar concepts were later derived from them. Recently, Wolfgang Hofkirchner has listed 19 of them: “structure, data, signal, message, signification, meaning, sense, sign, sign process, semiosis, psyche, intelligence, perception, thought, language, knowledge, consciousness, mind, wisdom ……” [6]. To these we can add: content, semantics, situation, code, pattern, index, order, indication, gene, intron, exon, cistron, entropy, etc. As for a precise definition, no one can currently provide one that satisfies all sides, and probably no one will in the near future either.

2.2. The History of the Application of “Information” in Different Fields

In his Dictionary, Craigie lists example sentences illustrating uses of the word information. The following two are taken from it:

Letters purcheist… upone the sinister informatioun of James Leslie. (1655)

The counsell having… taken sufficient tryall and information of the supplicant. (1655)

From these two examples, we can infer that informatioun and information meant “fact” or “news”.

The following examples came from English literature of different periods:

Not mentioning a word of my disgrace, because I had hitherto no regular information of it, and might suppose myself wholly ignorant of any such design [7]. (Jonathan Swift, 1727)

Here again, information clearly means “fact” or “news”.

His mouth certainly looked a good deal compressed, and the lower part of his face unusually stern and square, as the laughing girl gave him this information [8]. (Charlotte Brontë, 1847)

So far, the early meaning of information has been “news” or “fact”, and these examples come only from literature or from everyday human life; in its early period, that is, the word's use was confined to these two areas. From the late nineteenth and early twentieth centuries, however, the term was gradually introduced into other spheres, beyond literature and everyday human life.

The earliest application of information outside these fields came from neuroscience: in 1888, the famous Spanish anatomist Santiago Ramón y Cajal proposed that two nerve cells interact with each other by means of “information” [9].

In 1908, the German embryologist and philosopher Hans Driesch introduced the term “information” into genetics when he put forward the concept of “positional information” [10], which is still an important concept in modern genetics.

The following example came from the famous statistician and geneticist, Ronald Aylmer Fisher:

The efficiency of a statistic is the ratio which its intrinsic accuracy bears to that of the most efficient statistic possible. It expresses the proportion of the total available relevant information of which that statistic makes use. [11] (Ronald Aylmer Fisher, 1921)

Once the concept of information had been introduced into literature, biology and statistics, electronic engineers saw new opportunities in it. The following example comes from Ralph Vinton Lyon Hartley:

As commonly used, information is a very elastic term, and it will first be necessary to set up for it a more specific meaning as applied to the present discussion. [12] (Ralph Vinton Lyon Hartley, 1928)

In the 1947 edition of their theory of games, the famous mathematician and computer scientist John von Neumann and his collaborator Oskar Morgenstern used information in this form:

The only things he can be informed about are the choices corresponding to the moves preceding $\mathfrak{M}_\kappa$: the moves $\mathfrak{M}_1, \ldots, \mathfrak{M}_{\kappa-1}$, i.e., he may know the values of $\sigma_1, \ldots, \sigma_{\kappa-1}$. But he need not know that much. It is an important peculiarity of $\Gamma$, just how much information concerning $\sigma_1, \ldots, \sigma_{\kappa-1}$ the player $k_\kappa$ is granted, when he is called upon to choose $\sigma_\kappa$ [13]. (John von Neumann and Oskar Morgenstern, 1947)

In 1948, Norbert Wiener published his famous Cybernetics [14] and Claude E. Shannon published his Mathematical Theory of Communication [15] (information theory). With the advent of these two theories, the concept of information spread dramatically. Today it is hard to find a subject, whether in the humanities, the social sciences, the natural sciences or most branches of engineering science, that has not adopted the concept of information.

3. The History of Information Science

As the word information spread into so many fields, the emergence of studies of these information-related disciplines was only a matter of time. Indeed, all the information-related disciplines we see today sprang from inquiries into various types of information. In the early history of information studies, three classical schools formed between the 1950s and the 1980s: information science originating from computer science, information science originating from library science, and information science originating from telecommunications. In Japan, however, modern information science originated in close connection with journalism.

These are called classical information science schools for two reasons: (a) they used the term “information science”; and (b) in most cases the term was used alone, without any qualifying word before it, in their articles, books, societies, conferences, and the names of departments and colleges. Naturally, researchers in these fields were often labeled “information science researchers”. The key problem, then, is to identify which of them is the real information science.

3.1. Computer and Information Science

In 1959, the Moore School of Electrical Engineering at the University of Pennsylvania in the United States first used the term Information Science, but only to describe a computer program [16], not a theory of information. In 1963, an international conference on computer science was held at Northwestern University, and it adopted the new name Computer and Information Sciences [17]. In 1968, the Curriculum Committee on Computer Science of the Association for Computing Machinery noted this development and began advocating that the discipline be called Information Science or, as a compromise, Computer and Information Science [18]. This is the origin of the terms Information Science and Computer and Information Science.

Researchers in this field rarely have the enthusiasm or patience to discuss what information is or what information science is. Nevertheless, both in the past and at present, computer and information science has been represented as a strong school of information science, in academic circles and in the public mind alike. However, only one academic journal of this school, Information Sciences: An International Journal, is published by computer scientists, and it focuses on two specialist subfields: computer science and intelligent systems applications [19].

3.2. Library and Information Science

In 1967, Manfred Kochen completed a simple but successful computer-based document search experiment at IBM and named it the “Information Science Experiment” [20]. It aroused great interest at the American Documentation Institute, which in 1968 quickly changed its name to the American Society for Information Science [21], and in 2000 to the American Society for Information Science and Technology (ASIS&T). Soon afterwards, most departments or schools of Library Science in the United States (and in some other countries) were renamed departments or schools of Information Science or of Library and Information Science. During the 1970s–1990s, more than 10 books were published under broad titles such as Foundations/Principles/Elements/Introduction of/to Information Science. To this day, ASIS&T is the world's largest organization related to information studies, and its official publication, the Journal of the American Society for Information Science and Technology [22], has a large readership. The main interests of library and information science include computer-based literature retrieval, bibliometrics and documentation management. Faced with the emergence of various information disciplines, the library and information science school is embarking on a journey of discovery and adaptation to meet new challenges.

3.3. Telecommunications and Information Science

From the 1980s, some information researchers sought to extend the scope of Shannon's information theory, hoping it could become a general information theory. After more than ten years of unremitting effort, the Chinese information science pioneer Yi-Xin Zhong, with a strong background in telecommunications and mathematics and a burning ambition, completed his masterpiece, Principles of Information Science [23], in 1988. At least four editions have been published since. Starting from Shannon's information theory, that is, the measurement of syntactic information by probability statistics, Zhong integrated syntactic, semantic and pragmatic measurement comprehensively for the first time and named the result Comprehensive Information Theory (CIT); this is the finest part of his work. Everyone engaged in information studies in China knows it well because of its systematic, rigorous and quantitative character. Regrettably, few Western scholars know his work, because all of it has been published only in Chinese. To our knowledge, at about the same time, in 1989, Howard L. Resnikoff, who once served in the Department of Information Science of the National Science Foundation of the United States, completed his own information science monograph based on Shannon's information theory, The Illusion of Reality [24]. It discusses entropy, uncertainty, information systems, signal detection and information processing, material that was taught at Harvard University under the course title “Introduction to Information Science” for only one semester.
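Shannon's syntactic measure, the entropy H = −Σ p_i log₂ p_i of a source whose symbols occur with probabilities p_i, depends only on those probabilities and not on what the symbols mean; this is the sense in which it measures information “by probability statistics”. The following minimal Python sketch is our own illustration, not taken from Zhong's or Resnikoff's books; the function name shannon_entropy is hypothetical, and CIT's semantic and pragmatic measures are not reproduced, since their formulas are not given here.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution.

    Only the statistical (syntactic) aspect is measured: the result depends
    on the probabilities alone, not on the meaning or use of the symbols.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Zero-probability terms are skipped, since p * log p -> 0 as p -> 0.
    return -sum(p * math.log2(p) for p in probs if p > 0)


# A fair coin carries 1 bit per toss; a biased coin carries less.
print(shannon_entropy([0.5, 0.5]))
print(shannon_entropy([0.9, 0.1]))
```

Two sources with identical probabilities receive identical scores even if one transmits nonsense and the other vital news, which is the limitation Weaver and, later, CIT set out to address.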

3.4. Information Exploration in Other Disciplines

Besides the three types of classical information science above, many other disciplines have shown great concern with information issues since the 1950s, and innumerable results have been achieved. We list some of them here as illustrations.

In the liberal arts, not long after the emergence of Shannon's information theory, Yehoshua Bar-Hillel and Rudolf Carnap [25] as well as Marvin L. Minsky [26] began to establish formalized theories of semantic information. Ever since, this line of study has remained a thrilling topic in linguistics, logic and artificial intelligence. In 1983, Jon Barwise and John Perry of the Center for the Study of Language and Information at Stanford University published their book, Situations and Attitudes [27]. It was well received in artificial intelligence and computational linguistics, because situation semantics is regarded as a good overview of “information” thinking. In 1991, Keith Devlin, a mathematician from Maine State University of the United States, developed a semantic theory of information in his book, Logic and Information [28], and his method was precisely the theory that Barwise and Perry had proposed. Regarding the relationship between information and language, in 1991 the linguist Zellig Harris of the University of Pennsylvania finally broke linguists' traditional silence about the concept of information, despite its widespread use in linguistics, and published A Theory of Language and Information: A Mathematical Approach [29]. Beyond linguistics, Brent D. Ruben of the School of Communication and Information at Rutgers University in New Jersey, United States, edited the series Information and Behavior [30] from 1985, in which human communication researchers expressed their thinking about information.

Within the mainstream of information research, philosophy has always kept abreast of developments in information science. In this circle, one of the most influential books is Knowledge and the Flow of Information [31], completed in 1981 by the philosopher Fred Dretske of the University of Wisconsin in the United States. The book attracted wide attention: in 1983, the journal Behavioral and Brain Sciences, published by Cambridge University, devoted space to an open review of it by many well-known guest scholars [32]. Later, Dretske's theory of the “flow of information” became the theme of the conference “Information, Semantics and Epistemology”, held by the Sociedad Filosófica Ibero Americana in Mexico in 1988 [33]. Over the past two decades, the philosophy of information has been further developed by many philosophers, such as Kun Wu [34,35] in China, Luciano Floridi [36] in Italy and Konstantin Kolin [37] in Russia. Even earlier, in the 1960s, Arkady D. Ursul [38] and others in the Soviet Union had begun research on the relationship between philosophy and information.

In the natural sciences, physicists seem to have shown more interest in information problems in recent years than other natural scientists. The late and well-respected Tom Stonier published his Information and the Internal Structure of the Universe: An Exploration into Information Physics [39] in 1990. In fact, one of the most influential ideas in information physics was developed by John A. Wheeler, the late, distinguished American physicist. In 1989, he submitted a paper, Information, Physics, Quantum: The Search for Links [40], to a conference held at the Santa Fe Institute in New Mexico, and later retitled it It from Bit, under which name it appeared in several publications. When asked, as he often was, about this famous remark, he explained: “My life in understanding of physics is divided into three periods: In the first period, I was in the grip of the idea that Everything Is Particles … I call my second period Everything Is Fields … Now, I am in the grip of a new vision, that Everything Is Information” [41], that is, “It from Bit”.

Inspired by Wheeler's ideas, a number of books on the relationship between physics and information have appeared in recent years. B. Roy Frieden discussed the complex relationship among “It from Bit”, “Fisher Information” and “Extreme Physical Information” in his books Physics from Fisher Information: A Unification [42] (1998) and Science from Fisher Information: A Unification [43] (2004). In The Bit and the Pendulum: How the New Physics of Information is Revolutionizing Science, Tom Siegfried, addressing the debate over whether information is physical, strongly supported Rolf Landauer's view that “Information is a Physical Entity” [44]. Building on Wheeler's thought, Hans C. von Baeyer, in his Information: The New Language of Science (2003) [45], leads readers through a universe in which information is woven like threads in a cloth.

More recently, Charles Seife has made even more astonishing claims about information in natural science. In his Decoding the Universe: How the New Science of Information Is Explaining Everything in the Cosmos, From Our Brain to Black Holes, he professed that “The laws of thermodynamics …… are …… laws about information…… The theory of relativity …… is actually a theory of information…… Each creature on …… Earth is a creature of information” [46]. Such studies give the impression that physicists' grasp of information has made information scientists, who claim expertise in information, look incompetent. These views from physics have clearly pushed information thinking to an extreme, and we do not know how much room remains for us.

In chemical informatics, since Jean-Marie Lehn put forward his courageous conjecture in 1995, “Supramolecular chemistry has paved the way toward apprehending chemistry as an information science” [47], we have seen no concrete progress toward realizing it. In our opinion, there are two main reasons: (a) chemical informatics, as Lehn uses the term, is another name for supramolecular chemistry, and many books have been published under the latter name instead; and (b) coordination chemistry and host-guest chemistry are disciplines similar to supramolecular chemistry, and many books on them have already been published under their own names.

As for the relationship between biology and information, numerous books on bioinformatics have appeared in recent years; moreover, genetics and genomics are inevitably branches of biological informatics, and books on them abound too. Recently, books on neuroinformatics and immunoinformatics have also begun to appear.

Besides the three classical information science schools and the concern with information in the liberal arts and natural sciences, there are many other fields of information study, especially those arising from applications of computer and communications technology. Characteristically, these fields tend to adopt the name Informatics, a term with a strong technical flavor, created by a Russian scholar from the French term informatique. The most typical of them are medical informatics, which first appeared in 1974; bioinformatics, in 1987; and geoinformatics, in 1992. Today they make up the majority of the extraordinarily bustling information disciplines.

Since Wiener's Cybernetics and Shannon's information theory, a large group of information subjects titled Information Theory, Informatics or Information Science has formed, and, as the debate among them has developed, the idea of a unified theory of information has arisen. This will be a stirring adventure, and most of the story has only just begun.

3.5. A Summary of the History of Information Science over the Past 50 Years

In 1959, the concept of “information science” was first proposed by computer scientists, but only to denote a computer program. Since then, the computer science community has carried out no true studies of information, so the term has remained merely a label or logo of information science in that field. In the late 1960s, the rise of computer-based document retrieval greatly stimulated the interest of library scientists, who quickly replaced the traditional name “Library Science” with “Information Science”. People thus began to use “Computer and Information Science” and “Library and Information Science” to distinguish the two so-called “Information Science” disciplines. From the late 1980s, a third information science, “Telecommunications and Information Science”, based on Shannon's information theory, emerged and received much acclaim. Beginning in 1974, the application of computer and communications technologies in a large number of practical fields gave rise to many applied information disciplines bearing some form of the term “Informatics”, which brought both unprecedented prosperity and unprecedented chaos. From the 1990s, amid this massive upsurge of information studies, there arose a desire for unification that is of profound significance for the foundations of Information Science. This is the history of “Information Science” from the late 1950s to the early 21st century. In general, its development can be summarized in four stages: first, the embryonic period of information science (1948–1959); second, the coexistence period of the three classical information sciences (1959–present); third, the prosperity period of sector informatics (1974–present); and fourth, the blueprint period of unified information study (1994–present).

4. Contemporary Information Science

With the continuous penetration of the concept of information into different disciplines, the new ideas about information from Cybernetics and Information Theory, and the subsequent wide application of computer and communications technology, a scientific community going by the names “information theory”, “informatics” or “information science” has gradually emerged since the 1980s and is very powerful today.

4.1. An Overview of the Contemporary Information Disciplines

In 1982, the Austrian-American knowledge economist Fritz Machlup, in one volume of his planned eight-volume series Knowledge: Its Creation, Distribution and Economic Significance, discussed information science from the point of view of economics. Besides the so-called “Computer and Information Science” and “Library and Information Science”, he found at least 39 information-related disciplines, including:

Bibliometrics, cybernetics, linguistics, phonetics, psycholinguistics, robotics, scientometrics, semantics, semiotics, systemics; cognitive psychology, lexicology, neurophysiology, psychobiology; brain science, cognitive science, cognitive neuroscience, computer science, computing science, communication science, library science, management science, speech science, systems science; systems analysis; automata theory, communication theory, control theory, decision theory, game theory, general system theory, artificial-intelligence research, genetic-information research, living-systems research, pattern-recognition research, telecommunications research, operations research, documentation, cryptography [48].

In 1994, at the First Conference on the Foundation of Information Science held in Madrid, Pedro C. Marijuán identified further disciplines with even closer relationships to information:

Formulation of the second law and the concept of entropy, measurement process in quantum theory, Shannon's information theory, non-equilibrium systems and non-linear dynamics, cellular DNA and the enzymatic processes, evolution of living beings and the status of Darwinism, measurement of ecological diversity, origins and evolution of nervous systems, functioning of the brain, nature of intelligence, representational paradigm of AI, logic, linguistics, epistemology and ontology, electronics, mass-media, library science, documentation management, economy, political philosophy …… [49].

Fifty years after 1959, when we enumerate the disciplines that carry “information theory”, “informatics” or “information science” in their titles, rather than those that are merely information-related, we, as information researchers, are astounded to find as many as 172:

Advertisement informatics, agricultural informatics, agricultural information science, agricultural information theory, algebraic informatics, algebraic quantum information theory, algorithmic information theory, anesthesia informatics, archive informatics, archive information theory, arts informatics, autonomic informatics, bioinformatics, biological information science, biology informatics, biology medical informatics, biomedical informatics, biomolecular information theory, bio-stock information theory ….. (See a full alphabetical listing of the 172 in the Appendix).

Please note that our count was subject to the following restrictions: (a) most of the names are taken from books, and articles and reports are not included; and (b) apart from “information theory” and “information science”, names beginning with the word “information”, such as information economics, information sociology, information ethics, information pragmatics, information law, information management, information material science and so on, are not included. We estimate that without these restrictions the total could reach around 200.

Since 1959, many disciplines that at first merely introduced “information” into their fields as an academic concept have evolved into glamorous branches of information study. Their typical path runs from a field-specific information theory, to a field-specific informatics, to a field-specific information science. For some of them, establishing a decent and credible information science remains only a dream.

4.2. The System of Information Science

To systematize such a large community of information studies, we introduce two new concepts: “subbase” and “infoware”. A subbase is a physical unit that can accommodate signs, and a sign in turn can accommodate information. An infoware is also a physical unit; it has three elements, subbase, sign and information, which are nested stage by stage: information within signs, and signs within a subbase. A hard disk (HD), CD, CPU, DNA, a hormone, paper, even air: all of these can serve as a subbase. The study of the subbase is called “Information Material Science”, although current Information Material Science mainly concerns materials for information technology. The study of signs is called Semiotics or Semiology; according to Ferdinand de Saussure [50], Linguistics is a typical Semiotics study. The study of information is called “Information Science” or “Pure Information Science”. Information cannot exist nakedly; it must be nested in a system of signs.

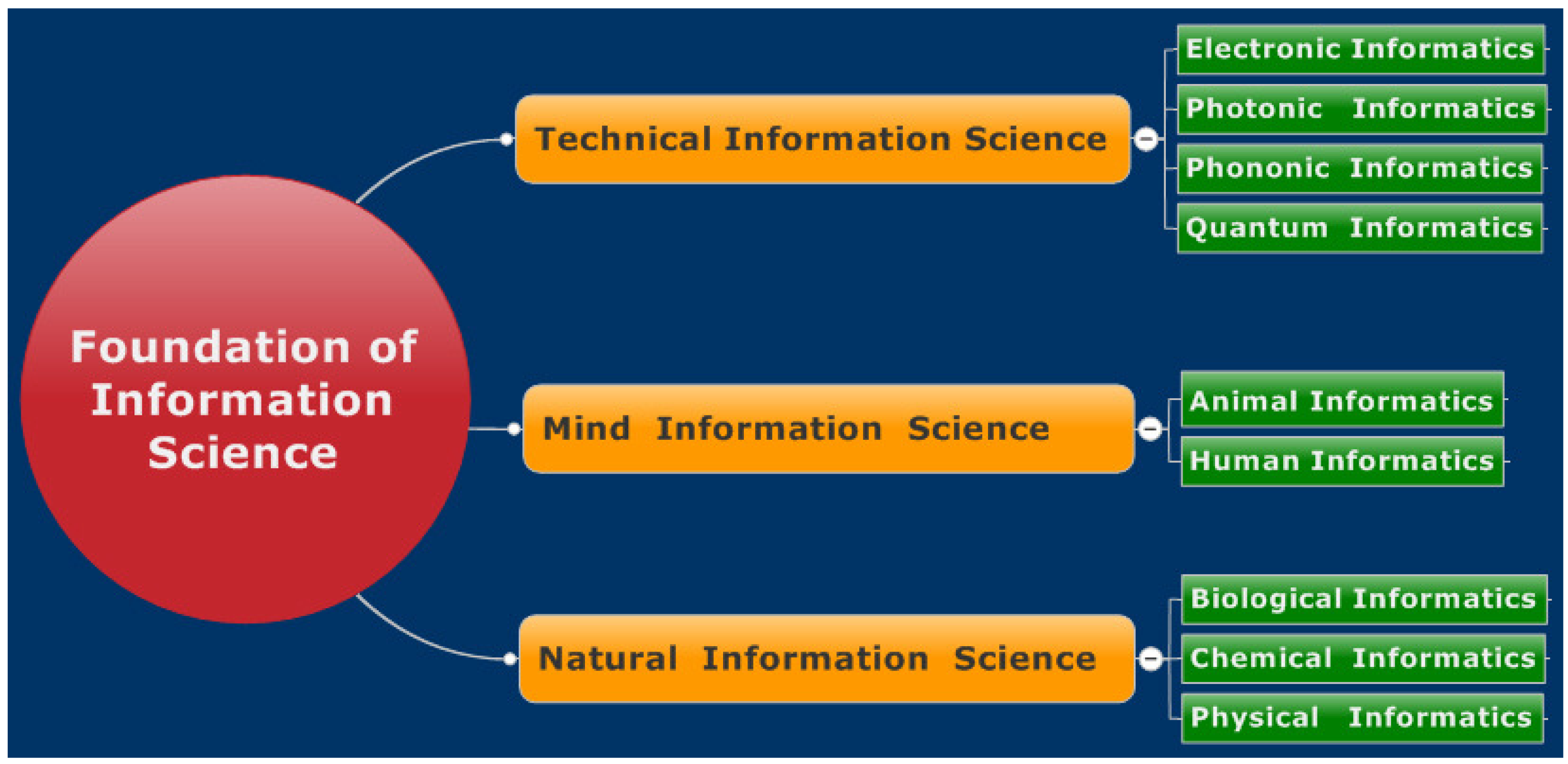

When an infoware is at the artificial level, we call the corresponding information studies “Technical Information Science”. Likewise, when the infoware is at the brain level, we call them “Mind Information Science”, and when it is at the level of the cell, molecule, atom, subatomic or elementary particle, we call them “Natural Information Science”. We may thus sort the approximately 200 information disciplines mentioned above into the following system:

We formulated this systematization by induction. Because an infoware possesses three elements, subbase, sign and information, a line of research may address one, two or all three of them; this yields three classes of information science and, across them, seven kinds of information disciplines (the non-empty combinations of the three elements):

Unary information science. This class of information science can form three kinds of information disciplines:

(1) Subbase research: Information material science already exists;

(2) Sign research: Semiotics already exists;

(3) Information research: We refer to this as pure information science; it could include Shannon's information theory, genomics, and, in the future, some parts of human informatics. Pure information science is the ideal information discipline among all information studies;

Binary information science. This class of information science can form three kinds of information disciplines too:

(4) Subbase research + Sign research: Electronic informatics, photonic informatics, phononic informatics and quantum informatics already exist. These disciplines are commonly known as technical information science; their basic characteristic is that they need not ask what information is or what information content should be studied. It is strange that a so-called information science has no inquiry into an information corpus, and we believe this will be understood more fully someday. An information science is not an information science unless it considers an information corpus;

(5) Subbase research + Information research: this combination is definitely not available, because all information must be nested in signs;

(6) Sign research + Information research: this kind of information science is hard to explain clearly at present. Linguistics seems to be part of this exploration, but according to our preceding discussion, and to the linguist Saussure and other philosophers, it is a kind of Semiotics;

Ternary information science. This class of information science can form only one kind of information discipline:

(7) Subbase research + Sign research + Information research: current physical informatics, chemical informatics, and biological informatics are the standard paradigms of ternary information science. Mind information science is another, more complex kind, and it is also hard to explain clearly at present. It has a complicated relationship with psychology, and psychology is a representation theory of neuroinformatics. However, according to our systematization, neuroinformatics is a branch of biological informatics. In a sense, psychology is a bridge that connects neuroinformatics to human informatics.
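The count of seven follows directly from the three infoware elements: each kind of informatics studies a non-empty combination of subbase, sign, and information, giving 3 unary, 3 binary, and 1 ternary combinations, that is, 2^3 - 1 = 7. As a minimal Python sketch of this counting (the names `ELEMENTS`, `CLASS_NAMES`, and `enumerate_kinds` are hypothetical, chosen only for illustration), `itertools.combinations` reproduces the seven kinds in the same order as the list:

```python
from itertools import combinations

# The three elements of an infoware, in the order used in the text.
ELEMENTS = ("Subbase research", "Sign research", "Information research")

# Class labels keyed by how many elements a combination contains.
CLASS_NAMES = {1: "Unary", 2: "Binary", 3: "Ternary"}


def enumerate_kinds(elements=ELEMENTS):
    """Group every non-empty combination of the elements by class.

    Returns a dict mapping 'Unary', 'Binary', 'Ternary' to lists of
    combinations rendered as 'A + B', in the order (1) to (7).
    """
    kinds = {}
    for size in range(1, len(elements) + 1):
        kinds[CLASS_NAMES[size]] = [
            " + ".join(combo) for combo in combinations(elements, size)
        ]
    return kinds


if __name__ == "__main__":
    number = 1
    for class_name, combos in enumerate_kinds().items():
        print(f"{class_name} information science:")
        for combo in combos:
            print(f"  ({number}) {combo}")
            number += 1
```

The sketch reproduces only the combinatorics; whether a given combination is realizable, as with kind (5), is a substantive judgment that the code does not encode.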

5. The Future of Information Science

We hope that our discussion brings the account of the history and current status of information science to a temporary close. As to its future, and especially its fundamentals, we now review two trends of thought that appeared during the past two decades: Comprehensive Information Theory (CIT) and Unified Theory of Information (UTI).

5.1. A Review of the Two Trends of Thought for the Fundamental Exploration of Information

A. Comprehensive Information Theory (CIT)

CIT originated in China; it belongs to the first generation of work on the foundation of information science based on Shannon's information theory. When Shannon's information theory was surrounded by praise, its general applicability was also being questioned. Stepping forward to support Shannon, Warren Weaver boldly told the questioners that Shannon's method of measuring information addressed only the syntactic aspect, that future work would develop semantic and pragmatic measures of information as well, and that such an integrated measurement would solve the problems.

However, neither Weaver nor Shannon was able to fulfill this promise, and, to my knowledge, no Western information scientist has yet been able to advance this work. Yi-Xin Zhong, by contrast, diligently completed this tough task in his book, Principles of Information Science. Continuing to employ static probability-statistical mathematics, he combined syntactic, semantic, and pragmatic measurement, obtained a series of conclusions, and named the result “Comprehensive Information Theory” (CIT). The book is worth reading for theory-oriented information science researchers; since its first edition in 1988, it has gone through four more editions and has many loyal readers in China.

We have, however, also found some limits to the applicability of CIT; perhaps the current mathematical method is insufficient for this task. Nevertheless, as everyone has seen, the theme of FIS 2005 was still entropy theory, a variation of Shannon's information theory, and it remains a perennial topic on the FIS mailing lists. Science is a continuous process of accumulation and updating; we should not expect one researcher, or even a generation of information scientists, to complete this work within a fixed time. We believe this orientation is still promising for revealing the secrets of information. Currently, another Chinese physicist, Xiu-San Xing, is advancing this orientation further [51]; he argues that Zhong's approach is static, whereas a better information measure should be dynamic and should treat static information measurement as a special case.

B. Unified Theory of Information (UTI)

In early March 1994, Wolfgang Hofkirchner and four co-authors put forward a new concept, “Unified Information Theory” (UIT) [52], at an information science meeting held in Cottbus, Germany. Subsequently, at the FIS 1994 conference, Peter Fleissner and Wolfgang Hofkirchner explained its meaning systematically in their article, Emergent Information: Towards a Unified Information Theory [53]. Later, in the proceedings of FIS 1996, they revised it into the new concept “UTI: Unified Theory of Information” and discussed it in detail in a long dialogue with Rafael Capurro.

When Zhong's work was completed in 1988, the only established information disciplines were Shannon's information theory, computer and information science, library and information science, and medical informatics; the others were merely information-related studies. Zhong could therefore concentrate his energy on developing CIT-centered principles and their applications. Since the 1990s, however, a large number of information disciplines have emerged and evolved, and anyone who wanted to discuss general information science had to take them seriously; this is the context that Fleissner and Hofkirchner had to consider in 1994. In that important article, they optimistically held that once a correct definition of information had been established, they could begin to deal with the philosophical essence of the background theory, the implications of the background theory for system-theoretical considerations, the implications of the refashioned systems thinking for semiotic theory, the theory of cognition, human communication, and so on. After all this work had been completed, a unified information theory could most likely be set up.

5.2. The Future of Information Science

Comparing the two lines of thought, CIT and UTI, that appeared during 1988–1994, and treating them as trends in information thinking, we find that each has its strengths and weaknesses.

For CIT, the open question is whether other mathematical tools might serve better; traditional probability-statistical theory may not be the best basis for establishing a foundation of information science. History shows that some great achievements hinged on the choice of mathematics: “functional analysis” was adopted by John von Neumann to establish his “game theory”, and “group theory” was adopted by Chen-Ning Yang to recognize the “nonconservation of parity”. Clearly, the choice of mathematical tool is crucial to success. Which mathematical method, then, is more appropriate for CIT? In this regard, continuous mathematics should be excluded. We suggest that the Fisher statistical method be carefully reconsidered. In recent years, some physicists, such as Frieden, have proposed that the importance of Fisher information should be re-examined; Frieden argues that it is useful not only to information science but also to physical science, and even to science as a whole. Whatever happens, mathematics remains a promising research method. It is a great joy and encouragement to us that Mark Burgin's work has adopted a truly refreshing and unique new method. It has not only continued to advance the mathematical exploration of information science but has also brought forward a new view and way of thinking about its very foundation.
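The difference between a Shannon-type measure and Fisher information can be made concrete in the simplest case we could choose, a single Bernoulli(p) trial; this example and its function names are our own illustrative assumptions, not part of CIT or of Frieden's framework. Shannon entropy depends only on the probabilities p and 1 - p, whereas Fisher information, I(p) = E[(d/dp log f(X; p))^2], measures how sharply one observation constrains the parameter p. A minimal Python sketch:

```python
import math


def shannon_entropy_bits(p: float) -> float:
    """Shannon entropy H(p), in bits, of a Bernoulli(p) source.

    H depends only on the probabilities p and 1 - p themselves.
    """
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no uncertainty
    q = 1.0 - p
    return -(p * math.log2(p) + q * math.log2(q))


def fisher_information(p: float) -> float:
    """Fisher information of one Bernoulli(p) trial, closed form 1 / (p (1 - p))."""
    if not 0.0 < p < 1.0:
        raise ValueError("Fisher information is finite only for 0 < p < 1")
    return 1.0 / (p * (1.0 - p))


def fisher_information_by_expectation(p: float) -> float:
    """Same quantity computed from the definition E[score^2], as a cross-check.

    The score d/dp log f(x; p) is 1/p when x = 1 and -1/(1 - p) when x = 0.
    """
    score_1 = 1.0 / p
    score_0 = -1.0 / (1.0 - p)
    return p * score_1 ** 2 + (1.0 - p) * score_0 ** 2


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        print(f"p={p}: H={shannon_entropy_bits(p):.3f} bits, "
              f"I={fisher_information(p):.3f}")
```

The two measures behave oppositely at the extremes: as p approaches 0 or 1, entropy falls toward zero while Fisher information grows without bound, because a nearly certain source carries little uncertainty yet lets the parameter be pinned down very precisely.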

As for UTI, when we first encountered this trend of thought, it struck us as following the standard method for creating an information study: the deductive method. Not long after its pronouncement, however, Rafael Capurro issued his “Capurro's Trilemma”, which has unfortunately become a formidable obstacle to constructing a UTI. In the Proceedings of the Second International Conference on the Foundations of Information Science, an interesting and meaningful dialogue by Capurro, Fleissner, and Hofkirchner, Is a Unified Theory of Information Feasible? A Trialogue [54], appeared, in which “Capurro's Trilemma” was discussed in full. In 2010, Hofkirchner compiled a series of lectures he had given at the University of León, Spain, in 1999 into a book, Twenty Questions about a Unified Theory of Information. In this book, addressing “Capurro's Trilemma”, he argued that there are three ways of thinking about information (reductionism, projectivism, and disjunctivism), which correspond respectively to Capurro's synonymity, analogy, and equivocity. There is, however, another way of thinking about information: integrativism, which is closely connected with the currently fashionable study of complexity. On this basis, one can hold to the “unity-through-diversity” principle, which not only copes with “Capurro's Trilemma” but also provides a way to carry out UTI. Whether the results will be as good as he expects, we cannot predict right now; only future practice can show it. This route is still very difficult. Nevertheless, in terms of feasibility, UTI may be the most promising direction for the future.

Could the process of unifying information studies be divided into two grades? (a) First, a delicate unification could be considered; for example, endocrinoinformatics, neuroinformatics, immunoinformatics, and geneinformatics could be unified into a delicate (unified) biological informatics; (b) finally, we could deal with the grand unification of information. Both the CIT and UTI trends aim at grand unification. An impatient push toward grand unification may be unrealistic while no single genuine information science is generally accepted.

A researcher dedicated to information science cannot help sometimes pondering this question: why do so many researchers in different disciplines like to connect their studies to information? The application of computer or information technologies is certainly not the essential answer. In 2005, forecasting the development of science, the historian of science Guang-Bi Dong proposed that science will undergo three important transformations in the 21st century, the first being from a matter-theory orientation to an information-theory orientation [55]. The underlying idea is that (natural) science comprises three aspects: change of matter, transformation of energy, and control of information. The exploration of the unification of matter has gone on for more than 200 years, and that of energy for more than 100 years. Research on information is likely to build on and evolve from the latter. Notably, the matter sciences (a concept Capurro mentioned in the 1996 trialogue), life science, and noetic science are all shifting their focus from matter and energy to information. Wheeler's “It from Bit” is a typical and earnest attempt to find an informational foundation for physics.

Reflecting on these words, perhaps we need not become so eagerly involved in a unified information study. Consider the matter sciences seriously: has anyone tried to unify physics, chemistry, biology, and so on into one science? The answer is no. Unified mechanics offers a cautionary case on the road to unification. Faced with the strong interaction, the weak interaction, electromagnetism, and gravity, Einstein dedicated the later part of his life to the pursuit of a unified mechanics and did not succeed, which cost him many opportunities to serve as a “standard-bearer” of physics in that era. Yet in Einstein's era, gravitational field theory and electromagnetic field theory had already fully emerged. Information science is very different from physics: of the three major branches of information science shown in Figure 1 above, not one is in good shape right now in the true informational sense, and whether most of the new “information disciplines” can be regarded as real information science at all is still highly questionable.

Indeed, the unified study of information science is a magnificent pursuit, and it is also an arena for high intelligence. It represents a sublimated understanding of a universal existence in the information age, but it requires deep understanding of many information disciplines; only then can one extract the essential nature and elements of information from them. Undoubtedly, only the most ambitious scholars will be happy to engage in such work. Establishing a unified information study is a tough undertaking that requires substantial intellectual preparation in many fields. In one sense, it may be better and more satisfying to think thoroughly and calmly about the essence of the specific sector information disciplines than to pursue the establishment of a unified information study enthusiastically right now.

Acknowledgments

I owe a great debt of gratitude to Pedro C. Marijuán for much help, especially for the many profound insights in his FIS postings and mailing-list contributions since 1997, which have illuminated many aspects of my thinking. Many thanks also to Yi-Xin Zhong, Peter Fleissner, Wolfgang Hofkirchner, and Rafael Capurro for their pioneering work in constructing the foundation of information science, which has always been, and still is, a great motivating force and source for my work. Many thanks also to Julie Ruth for her generous help and great encouragement.

Appendix: List of the 172 Information Disciplines

Advertisement informatics, agricultural informatics, agricultural information science, agricultural information theory, algebraic informatics, algebraic quantum information theory, algorithmic information theory, anesthesia informatics, archive informatics, archive information theory, arts informatics, autonomic informatics, bioinformatics, biological information science, biology informatics, biology medical informatics, biomedical informatics, biomolecular information theory, bio-stock information theory, brain informatics, budget informatics, business informatics, cancer informatics, chemoinformatics, clinical bioinformatics, clinical cardiac electric informatics, clinical informatics, clinical medical informatics, coastal informatics, cognitive informatics, cognitive information science, commerce informatics, communication informatics, community informatics, comprehensive information theory, computational arts and creative informatics, consumer health informatics, consumer informatics, conversational informatics, crime informatics, decision informatics, dental informatics, design informatics, documentation informatics, drug informatics, dynamic information theory, ecological informatics, ecological information science, economical information theory, economic informatics, education informatics, EEG informatics, electric informatics, electronic informatics, electronic information theory, engineering informatics, enterprise economy informatics, environmental informatics, evolutionary bioinformatics, finance information theory, financial and marketing informatics, financial informatics, fingernail life informatics, food informatics, functional informatics, general information theory, genome informatics, geographical information science, geohydroinformatics, geoinformatics, geo-spatial information science, glycome informatics, government affair informatics, government affair information science, health care informatics, health informatics, home informatics, human informatics, human information science, hydroinformatics, imaging informatics, immunoinformatics, industrial informatics, information theory, information science, infectious disease informatics, insurance informatics, intergovernmental informatics, land informatics, legal informatics, life information science, life science informatics, linguistic informatics, linguistic information theory, literature informatics, logistics informatics, machine informatics, management informatics, manufacture informatics, market economy informatics, market informatics, material informatics, medical imaging informatics, medical informatics, medical information science, medical information theory, metainformatics, microarray bioinformatics, military informatics, molecular bioinformatics, molecular informatics, molecular information theory, museum informatics, nature-inspired informatics, neuroinformatics, news informatics, nursing and clinical informatics, nursing informatics, ophthalmic informatics, optical information science, optical information theory, pathology informatics, pharmaceutical analytical informatics, philosophy information theory, physical training informatics, plant bioinformatics, post-genome informatics, procuratorial informatics, protein informatics, proteome bioinformatics, qualitative information theory, quantum bio-informatics, quantum informatics, quantum information science, quantum information theory, remote sensing informatics, resource environment informatics, RNA informatics, road transport informatics, scientific informatics, security informatics, semantic information theory, social informatics, sociological theory of information, space-time informatics, spatial informatics, spatial information science, spatial information theory, statistical information theory, statistics informatics, structural bioinformatics, structural informatics, surveying and land information science, systems informatics, telegeoinformatics, teleinformatics, terrorism informatics, theoretical informatics, theory of semantic information, tourism informatics, transportation information theory, trauma informatics, treasurer informatics, unified information science, unified theory of information, urban geoinformatics, urban hydroinformatics, urban informatics, utility information theory, vacuum information theory, veterinarian informatics, visual informatics.

References

- Schroeder, M.J. What is the definition of information? Available online: http://fis.iguw.tuwien.ac.at/mailings/2140.html (accessed on 2 September 2005).

- Burgin, M. Theory of Information: Fundamentality, Diversity and Unification; World Scientific Publishing Co. Pty, Ltd.: Singapore, 2010; pp. 2–45.

- Burgin, M. Information theory: A multifaceted model of information. Entropy 2003, 5, 146–160.

- Capurro, R.; Hjørland, B. The Concept of Information. Annu. Rev. Inf. Sci. Technol. 2003, 37, 343–411.

- Craigie, W.A. A Dictionary of the Older Scottish Tongue from the Twelfth Century to the End of the Seventeenth; University of Chicago Press: Chicago, IL, USA, 1931.

- Hofkirchner, W. Twenty Questions about a Unified Theory of Information: A Short Exploration into Information from a Complex Systems View; Emergent Publications: Litchfield Park, AZ, USA, 2010; p. 9.

- Swift, J. Gulliver's Travels; Pan Books: London, UK, 1977.

- Bronte, C. Jane Eyre; Oxford University Press: London, UK, 1980.

- Xu, K. An Outline to Neurobiology (In Chinese); Science Press: Beijing, China, 2002; p. 86.

- Driesch, H. The Science and Philosophy of the Organism; I. Gifford Lectures, 1907; II. Gifford Lectures, 1908; A. & C. Black Ltd.: London, UK, 1929.

- Fisher, R.A. Accuracy of observation, a mathematical examination of the methods of determining, by the mean error and by the mean square error. Mon. Not. R. Astron. Soc. 1920, 80, 758–770.

- Hartley, R.V.L. Transmission of Information. Bell Syst. Tech. J. 1928, 7, 536.

- Von Neumann, J.; Morgenstern, O. Theory of Games and Economic Behavior; Princeton University Press: Princeton, NJ, USA, 1947; p. 23.

- Wiener, N. Cybernetics: or Control and Communication in the Animal and the Machine; Technology Press: Cambridge, MA, USA, 1948.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27.

- Wellisch, H. From information science to informatics. J. Libr. 1972, 4, 164.

- Tou, J.T.; Wilcox, R.H. Computer and Information Sciences: Collected Papers on Learning, Adaptation and Control in Information Systems of Computer and Information Science Symposium, 1963, Northwestern University; Spartan Books: Washington, DC, USA, 1964.

- ACM Curriculum Committee on Computer Science. Curriculum 68: Recommendations for academic programs in computer science. Commun. ACM 1968, 11, 151–197.

- Information Sciences. An International Journal. Available online: http://www.elsevier.com/wps/find/journaldescription.cws_home/505730/description#description (accessed on 10 May 2011).

- Kochen, M. Some Problems in Information Science; Scarecrow Press: New York, NY, USA, 1965.

- History of the American Society for Information Science and Technology. Available online: http://www.asis.org/history.html (accessed on 10 May 2011).

- Journal of the American Society for Information Science and Technology. Available online: http://www.asis.org/jasist.html (accessed on 10 May 2011).

- Zhong, Y.-X. Principles of Information Science (In Chinese); Fujian People Press: Fuzhou, China, 1988.

- Resnikoff, H.L. The Illusion of Reality; Springer-Verlag: New York, NY, USA, 1989.

- Bar-Hillel, Y.; Carnap, R. Semantic Information. B. J. Philos. Sci. 1953, 4, 147–157.

- Minsky, M.L. Semantic Information Processing; The MIT Press: Cambridge, MA, USA, 1968.

- Barwise, J.; Perry, J. Situations and Attitudes; The MIT Press: Cambridge, MA, USA, 1983.

- Devlin, K. Logic and Information; Cambridge University Press: New York, NY, USA, 1991.

- Harris, Z. A Theory of Language and Information: A Mathematical Approach; Clarendon Press: Oxford, UK, 1991.

- Information and Behavior; Ruben, B.D., Lievrouw, L.A., Eds.; Transaction: New Brunswick, NJ, USA, 1985; Volumes 1–5.

- Dretske, F. Knowledge and the Flow of Information; MIT Press: Cambridge, MA, USA, 1981.

- Alston, W.P.; Arbib, M.A.; Armstrong, D.M. Open Peer Commentary on “Knowledge and the Flow of Information”. Behav. Brain Sci. 1983, 1, 63–82.

- Villanueva, E. Information, Semantics and Epistemology; Basil Blackwell: Cambridge, MA, USA, 1990.

- Wu, K. Information Philosophy: A New Spirit of the Age (In Chinese); Shan Xi Normal University Press: Xi'an, China, 1989.

- Wu, K. Information Philosophy: Theory, System, Method (In Chinese); The Commercial Press: Beijing, China, 2005.

- Floridi, L. What is the philosophy of information? Metaphilosophy 2002, 33, 123–145.

- Kolin, K. The Philosophy Problems in Information Science (In Chinese); China Social Sciences Press: Beijing, China, 2011; Wu, Kun, Translator.

- Ursul, A.D. The Nature of the Information. Philosophical Essay; Politizdat: Moscow, Russia, 1968.

- Stonier, T. Information and the Internal Structure of the Universe: An Exploration into Information Physics; Springer-Verlag: London, UK, 1990.

- Wheeler, J.A. Information, Physics, Quantum: The Search for Links. In Complexity, Entropy, and the Physics of Information; SFI Studies in the Sciences of Complexity; Zurek, W., Ed.; Addison-Wesley: Redwood City, CA, USA, 1990; Volume VIII.

- Wheeler, J.A.; Ford, K. Geons, Black Holes, and Quantum Foam; Norton: New York, NY, USA, 1998; pp. 63–64.

- Frieden, B.R. Physics from Fisher Information: A Unification; Cambridge University Press: Cambridge, UK, 1998.

- Frieden, B.R. Science from Fisher Information: A Unification, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004.

- Siegfried, T. The Bit & the Pendulum: How the New Physics of Information Is Revolutionizing Science; John Wiley & Sons, Inc.: New York, NY, USA, 2000.

- Von Baeyer, H.C. Information: The New Language of Science; Weidenfeld & Nicolson: London, UK, 2003.

- Seife, C. Decoding the Universe: How the New Science of Information Is Explaining Everything in the Cosmos, from Our Brain to Black Holes; Penguin Group Inc.: Brockman, CA, USA, 2006.

- Lehn, J.-M. Supramolecular Chemistry: Concepts and Perspectives; Weinheim: New York, NY, USA, 1995.

- Machlup, F.; Mansfield, U. The Study of Information: Interdisciplinary Message; John Wiley & Sons: New York, NY, USA, 1983; p. 6.

- Marijuán, P.C. First conference on foundations of information science: From computers and quantum physics to cells, nervous systems, and societies. BioSystems 1996, 38, 87–96.

- Saussure, F. Course in General Linguistics; Owen: London, UK, 1960.

- Xing, X.-S. Dynamic Statistical Information Theory. Sci. China Ser. G 2006, 1, 1–37.

- Fenzl, N.; Fleissner, P.; Hofkirchner, W.; Jahn, R.; Stockinger, G. On the Genesis of Information Structures: A View that Is Neither Reductionistic Nor Holistic. In Information: New Questions to a Multidisciplinary Concept; Akademie Verlag: Berlin, Germany, 1996; p. 274.

- Fleissner, P.; Hofkirchner, W. Emergent Information: Towards A Unified Information Theory. BioSystems 1996, 38, 243–248.

- Capurro, R.; Fleissner, P.; Hofkirchner, W. Is a Unified Theory of Information Feasible? A Trialogue. In The Quest for a Unified Theory of Information, Proceedings of the Second International Conference on the Foundations of Information Science; Hofkirchner, W., Ed.; Gordon and Breach: Amsterdam, The Netherlands, 1999; pp. 9–30.

- Dong, G.-B. The Issues of the Intersection and Unification for Three Theory System Problems in Natural Science. In 100 Interdisciplinary Science Puzzles of the 21st Century (In Chinese); Li, X.X., Ed.; Science Press: Beijing, China, 2005; pp. 795–798.

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Yan, X.-S. Information Science: Its Past, Present and Future. Information 2011, 2, 510-527. https://doi.org/10.3390/info2030510
