Designing a Chatbot for Contemporary Education: A Systematic Literature Review

Abstract

1. Introduction

2. Related Work

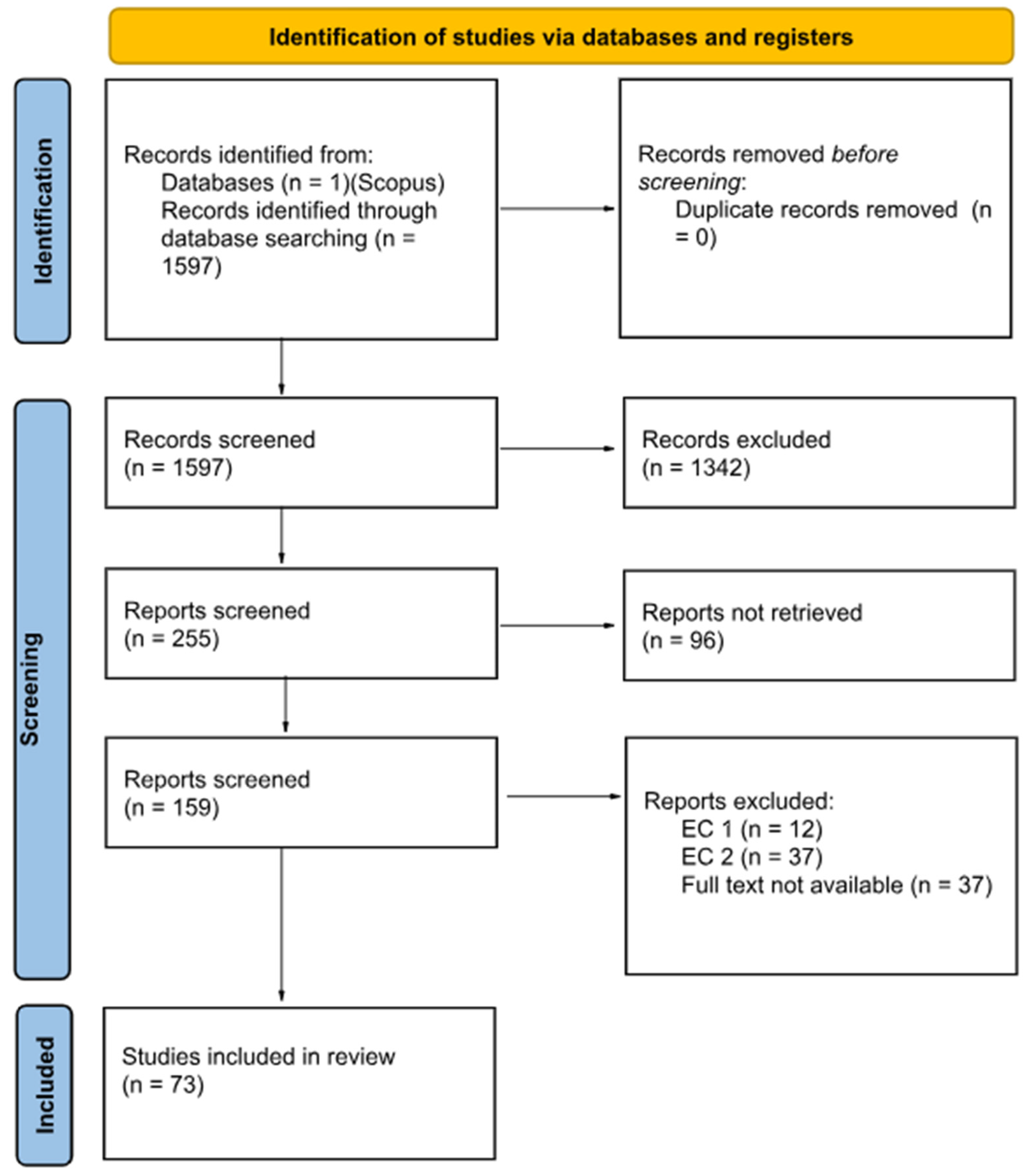

3. Methods

3.1. Eligibility Criteria

3.2. Information Sources

3.3. Search Strategy

3.4. Selection Process and Data Collection Process

3.5. Data Items

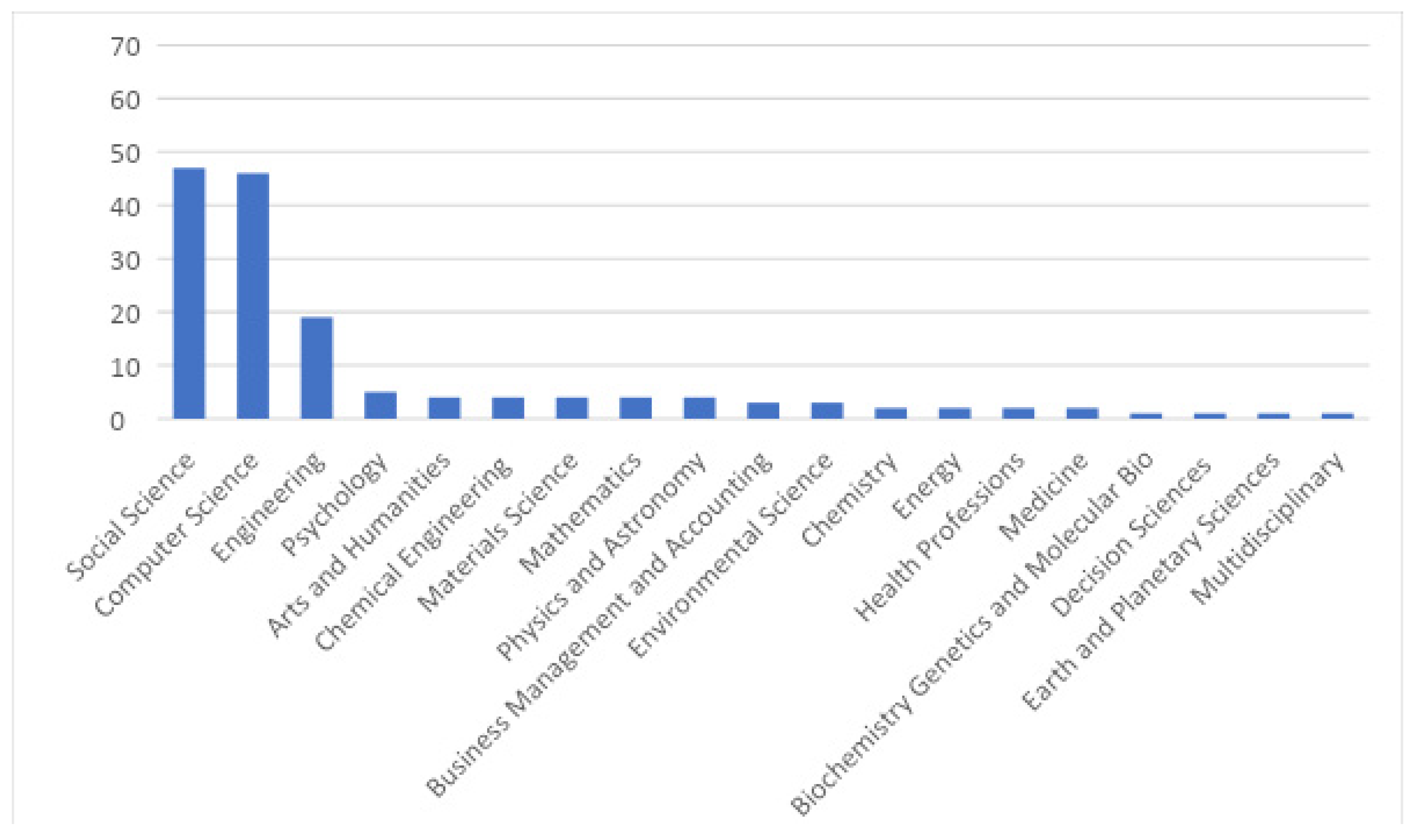

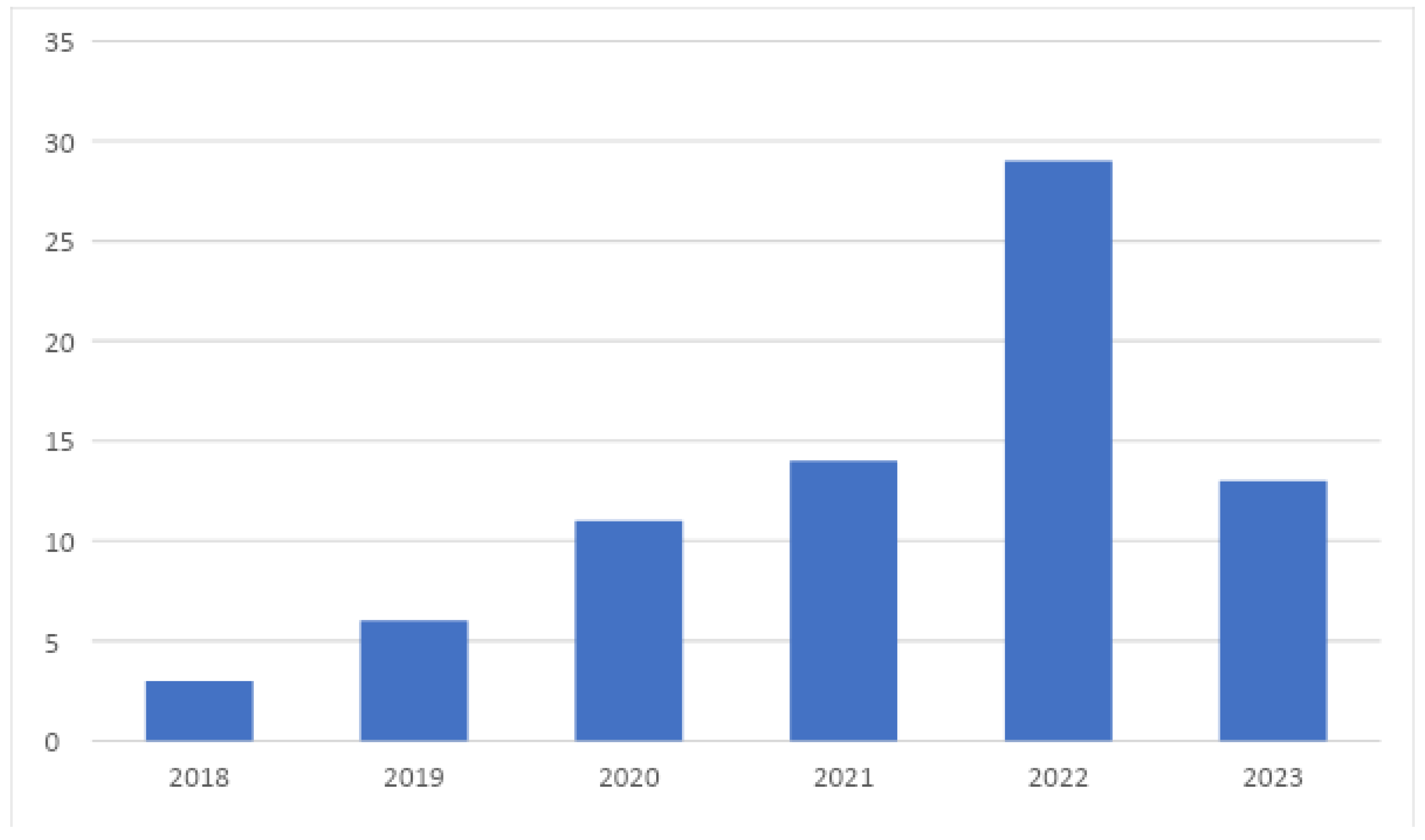

4. Results

4.1. Educational Grade Levels

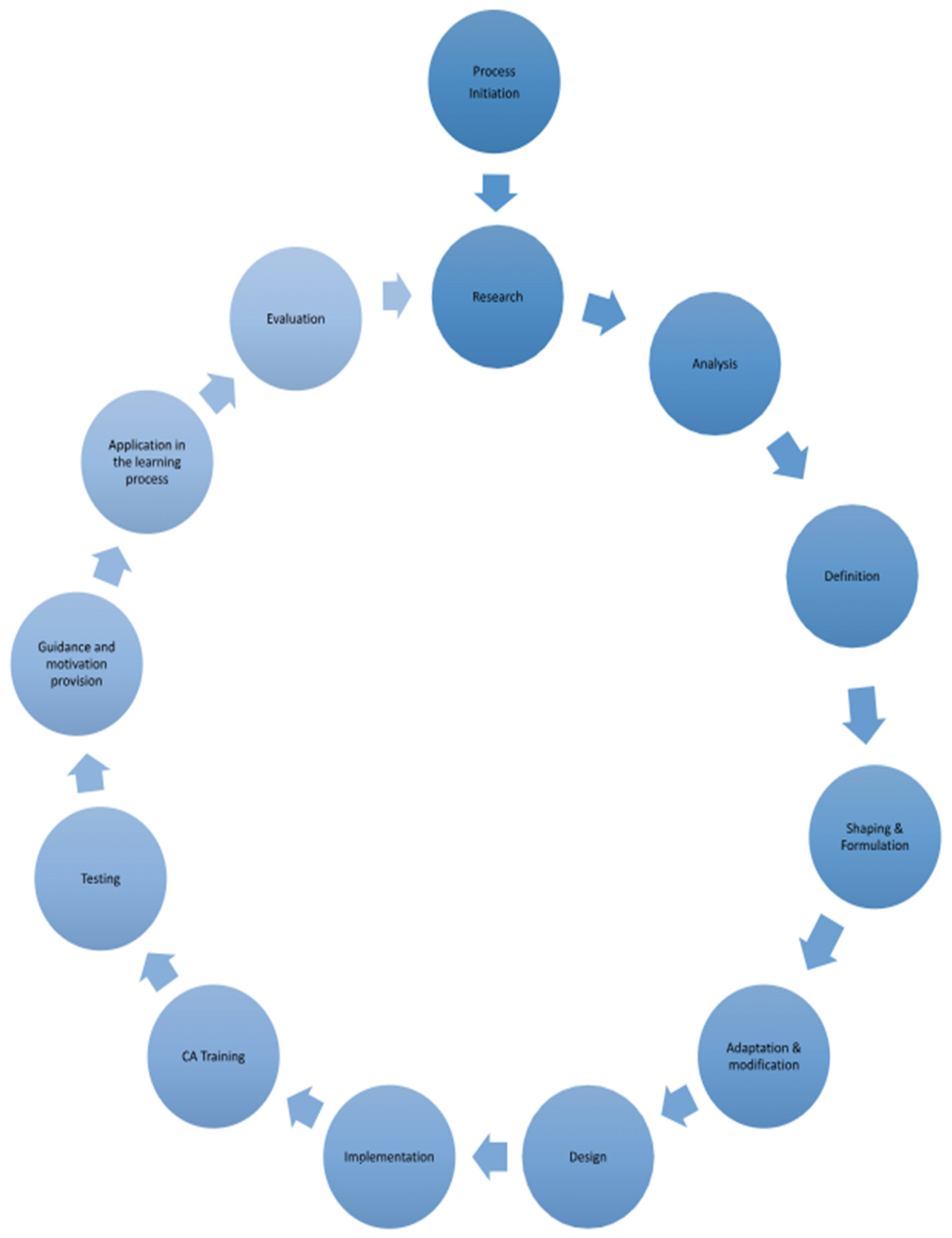

4.2. What Are the Steps for Designing an Educational Chatbot for Contemporary Education?

4.2.1. Research

4.2.2. Analysis

4.2.3. Definition

4.2.4. Shaping and Formulation

4.2.5. Adaptation and Modifications

4.2.6. Design Principles and Approaches

4.2.7. Implementation and Training of the ECA

4.2.8. Testing of the ECA

4.2.9. Guidance and Motivation Provision for the Adoption of the ECA from the Students

4.2.10. Application in the Learning Process

4.2.11. Evaluation of an ECA

5. Discussion

6. Limitations

7. Conclusions and Further Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006.

- Pérez, J.Q.; Daradoumis, T.; Puig, J.M.M. Rediscovering the use of chatbots in education: A systematic literature review. Comput. Appl. Eng. Educ. 2020, 28, 1549–1565.

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

- Hwang, G.-J.; Chang, C.-Y. A review of opportunities and challenges of chatbots in education. Interact. Learn. Environ. 2021, 1–14.

- Wollny, S.; Schneider, J.; Di Mitri, D.; Weidlich, J.; Rittberger, M.; Drachsler, H. Are We There Yet?—A Systematic Literature Review on Chatbots in Education. Front. Artif. Intell. 2021, 4, 654924.

- Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with educational chatbots: A systematic review. Educ. Inf. Technol. 2023, 28, 973–1018.

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Ann. Intern. Med. 2009, 151, 264–269.

- Abdelghani, R.; Oudeyer, P.-Y.; Law, E.; de Vulpillières, C.; Sauzéon, H. Conversational agents for fostering curiosity-driven learning in children. Int. J. Hum. Comput. Stud. 2022, 167, 102887.

- Chien, Y.-Y.; Wu, T.-Y.; Lai, C.-C.; Huang, Y.-M. Investigation of the Influence of Artificial Intelligence Markup Language-Based LINE ChatBot in Contextual English Learning. Front. Psychol. 2022, 13, 785752.

- Chiu, T.K.F.; Moorhouse, B.L.; Chai, C.S.; Ismailov, M. Teacher support and student motivation to learn with Artificial Intelligence (AI) based chatbot. Interact. Learn. Environ. 2023, 1–17.

- Chuang, C.-H.; Lo, J.-H.; Wu, Y.-K. Integrating Chatbot and Augmented Reality Technology into Biology Learning during COVID-19. Electronics 2023, 12, 222.

- Ericsson, E.; Sofkova Hashemi, S.; Lundin, J. Fun and frustrating: Students’ perspectives on practising speaking English with virtual humans. Cogent Educ. 2023, 10, 2170088.

- Haristiani, N.; Dewanty, V.L.; Rifai, M.M. Autonomous Learning Through Chatbot-based Application Utilization to Enhance Basic Japanese Competence of Vocational High School Students. J. Tech. Educ. Train. 2022, 14, 143–155.

- Kabiljagić, M.; Wachtler, J.; Ebner, M.; Ebner, M. Math Trainer as a Chatbot Via System (Push) Messages for Android. Int. J. Interact. Mob. Technol. 2022, 16, 75–87.

- Katchapakirin, K.; Anutariya, C.; Supnithi, T. ScratchThAI: A conversation-based learning support framework for computational thinking development. Educ. Inf. Technol. 2022, 27, 8533–8560.

- Mageira, K.; Pittou, D.; Papasalouros, A.; Kotis, K.; Zangogianni, P.; Daradoumis, A. Educational AI Chatbots for Content and Language Integrated Learning. Appl. Sci. 2022, 12, 3239.

- Mathew, A.N.; Rohini, V.; Paulose, J. NLP-based personal learning assistant for school education. Int. J. Electr. Comput. Eng. 2021, 11, 4522–4530.

- Sarosa, M.; Wijaya, M.H.; Tolle, H.; Rakhmania, A.E. Implementation of Chatbot in Online Classes using Google Classroom. Int. J. Comput. 2022, 21, 42–51.

- Tärning, B.; Silvervarg, A. “I didn’t understand, i’m really not very smart”—How design of a digital tutee’s self-efficacy affects conversation and student behavior in a digital math game. Educ. Sci. 2019, 9, 197.

- Deveci Topal, A.; Dilek Eren, C.; Kolburan Geçer, A. Chatbot application in a 5th grade science course. Educ. Inf. Technol. 2021, 26, 6241–6265.

- Yang, H.; Kim, H.; Lee, J.H.; Shin, D. Implementation of an AI chatbot as an English conversation partner in EFL speaking classes. ReCALL 2022, 34, 327–343.

- Al-Sharafi, M.A.; Al-Emran, M.; Iranmanesh, M.; Al-Qaysi, N.; Iahad, N.A.; Arpaci, I. Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interact. Learn. Environ. 2022, 1–20.

- Bailey, D.; Southam, A.; Costley, J. Digital storytelling with chatbots: Mapping L2 participation and perception patterns. Interact. Technol. Smart Educ. 2020, 18, 85–103.

- Bagramova, N.V.; Kudryavtseva, N.F.; Panteleeva, L.V.; Tyutyunnik, S.I.; Markova, I.V. Using chat bots when teaching a foreign language as an important condition for improving the quality of foreign language training of future specialists in the field of informatization of education. Perspekt. Nauk. I Obraz. 2022, 58, 617–633.

- Belda-Medina, J.; Calvo-Ferrer, J.R. Using Chatbots as AI Conversational Partners in Language Learning. Appl. Sci. 2022, 12, 8427.

- Cai, W.; Grossman, J.; Lin, Z.J.; Sheng, H.; Wei, J.T.-Z.; Williams, J.J.; Goel, S. Bandit algorithms to personalize educational chatbots. Mach. Learn. 2021, 110, 2389–2418.

- Černý, M. Educational Psychology Aspects of Learning with Chatbots without Artificial Intelligence: Suggestions for Designers. Eur. J. Investig. Health Psychol. Educ. 2023, 13, 284–305.

- Chaiprasurt, C.; Amornchewin, R.; Kunpitak, P. Using motivation to improve learning achievement with a chatbot in blended learning. World J. Educ. Technol. Curr. Issues 2022, 14, 1133–1151.

- Chen, Y.; Jensen, S.; Albert, L.J.; Gupta, S.; Lee, T. Artificial Intelligence (AI) Student Assistants in the Classroom: Designing Chatbots to Support Student Success. Inf. Syst. Front. 2023, 25, 161–182.

- Chien, Y.-Y.; Yao, C.-K. Development of an ai userbot for engineering design education using an intent and flow combined framework. Appl. Sci. 2020, 10, 7970.

- Colace, F.; De Santo, M.; Lombardi, M.; Pascale, F.; Pietrosanto, A.; Lemma, S. Chatbot for e-learning: A case of study. Int. J. Mech. Eng. Robot. Res. 2018, 7, 528–533.

- Coronado, M.; Iglesias, C.A.; Carrera, Á.; Mardomingo, A. A cognitive assistant for learning java featuring social dialogue. Int. J. Hum. Comput. Stud. 2018, 117, 55–67.

- Essel, H.B.; Vlachopoulos, D.; Tachie-Menson, A.; Johnson, E.E.; Baah, P.K. The impact of a virtual teaching assistant (chatbot) on students’ learning in Ghanaian higher education. Int. J. Educ. Technol. High. Educ. 2022, 19, 28.

- Fryer, L.K.; Nakao, K.; Thompson, A. Chatbot learning partners: Connecting learning experiences, interest and competence. Comput. Hum. Behav. 2019, 93, 279–289.

- Durall Gazulla, E.; Martins, L.; Fernández-Ferrer, M. Designing learning technology collaboratively: Analysis of a chatbot co-design. Educ. Inf. Technol. 2023, 28, 109–134.

- González, L.A.; Neyem, A.; Contreras-McKay, I.; Molina, D. Improving learning experiences in software engineering capstone courses using artificial intelligence virtual assistants. Comput. Appl. Eng. Educ. 2022, 30, 1370–1389.

- González-Castro, N.; Muñoz-Merino, P.J.; Alario-Hoyos, C.; Kloos, C.D. Adaptive learning module for a conversational agent to support MOOC learners. Australas. J. Educ. Technol. 2021, 37, 24–44.

- Han, J.-W.; Park, J.; Lee, H. Analysis of the effect of an artificial intelligence chatbot educational program on non-face-to-face classes: A quasi-experimental study. BMC Med. Educ. 2022, 22, 830.

- Han, S.; Liu, M.; Pan, Z.; Cai, Y.; Shao, P. Making FAQ Chatbots More Inclusive: An Examination of Non-Native English Users’ Interactions with New Technology in Massive Open Online Courses. Int. J. Artif. Intell. Educ. 2022, 33, 752–780.

- Haristiani, N.; Rifai, M.M. Chatbot-based application development and implementation as an autonomous language learning medium. Indones. J. Sci. Technol. 2021, 6, 561–576.

- Hew, K.F.; Huang, W.; Du, J.; Jia, C. Using chatbots to support student goal setting and social presence in fully online activities: Learner engagement and perceptions. J. Comput. High. Educ. 2022, 35, 40–68.

- Hsu, M.-H.; Chen, P.-S.; Yu, C.-S. Proposing a task-oriented chatbot system for EFL learners speaking practice. Interact. Learn. Environ. 2021, 1–12.

- Hsu, H.-H.; Huang, N.-F. Xiao-Shih: A Self-Enriched Question Answering Bot with Machine Learning on Chinese-Based MOOCs. IEEE Trans. Learn. Technol. 2022, 15, 223–237.

- Huang, W.; Hew, K.F.; Gonda, D.E. Designing and evaluating three chatbot-enhanced activities for a flipped graduate course. Int. J. Mech. Eng. Robot. Res. 2019, 8, 813–818.

- Jasin, J.; Ng, H.T.; Atmosukarto, I.; Iyer, P.; Osman, F.; Wong, P.Y.K.; Pua, C.Y.; Cheow, W.S. The implementation of chatbot-mediated immediacy for synchronous communication in an online chemistry course. Educ. Inf. Technol. 2023, 28, 10665–10690.

- Lee, Y.-F.; Hwang, G.-J.; Chen, P.-Y. Impacts of an AI-based chabot on college students’ after-class review, academic performance, self-efficacy, learning attitude, and motivation. Educ. Technol. Res. Dev. 2022, 70, 1843–1865.

- Li, K.-C.; Chang, M.; Wu, K.-H. Developing a task-based dialogue system for english language learning. Educ. Sci. 2020, 10, 306.

- Li, Y.S.; Lam, C.S.N.; See, C. Using a Machine Learning Architecture to Create an AI-Powered Chatbot for Anatomy Education. Med. Sci. Educ. 2021, 31, 1729–1730.

- Liu, Q.; Huang, J.; Wu, L.; Zhu, K.; Ba, S. CBET: Design and evaluation of a domain-specific chatbot for mobile learning. Univers. Access Inf. Soc. 2020, 19, 655–673.

- Mendez, S.L.; Johanson, K.; Conley, V.M.; Gosha, K.; Mack, N.; Haynes, C.; Gerhardt, R. Chatbots: A tool to supplement the future faculty mentoring of doctoral engineering students. Int. J. Dr. Stud. 2020, 15, 373–392.

- Neo, M. The Merlin Project: Malaysian Students’ Acceptance of an AI Chatbot in Their Learning Process. Turk. Online J. Distance Educ. 2022, 23, 31–48.

- Neo, M.; Lee, C.P.; Tan, H.Y.-J.; Neo, T.K.; Tan, Y.X.; Mahendru, N.; Ismat, Z. Enhancing Students’ Online Learning Experiences with Artificial Intelligence (AI): The MERLIN Project. Int. J. Technol. 2022, 13, 1023–1034.

- Ong, J.S.H.; Mohan, P.R.; Han, J.Y.; Chew, J.Y.; Fung, F.M. Coding a Telegram Quiz Bot to Aid Learners in Environmental Chemistry. J. Chem. Educ. 2021, 98, 2699–2703.

- Rodríguez, J.A.; Santana, M.G.; Perera, M.V.A.; Pulido, J.R. Embodied conversational agents: Artificial intelligence for autonomous learning. Pixel-Bit Rev. De Medios Y Educ. 2021, 62, 107–144.

- Rooein, D.; Bianchini, D.; Leotta, F.; Mecella, M.; Paolini, P.; Pernici, B. aCHAT-WF: Generating conversational agents for teaching business process models. Softw. Syst. Model. 2022, 21, 891–914.

- Sáiz-Manzanares, M.C.; Marticorena-Sánchez, R.; Martín-Antón, L.J.; González Díez, I.; Almeida, L. Perceived satisfaction of university students with the use of chatbots as a tool for self-regulated learning. Heliyon 2023, 9, e12843.

- Schmulian, A.; Coetzee, S.A. The development of Messenger bots for teaching and learning and accounting students’ experience of the use thereof. Br. J. Educ. Technol. 2019, 50, 2751–2777.

- Suárez, A.; Adanero, A.; Díaz-Flores García, V.; Freire, Y.; Algar, J. Using a Virtual Patient via an Artificial Intelligence Chatbot to Develop Dental Students’ Diagnostic Skills. Int. J. Environ. Res. Public Health 2022, 19, 8735.

- Vázquez-Cano, E.; Mengual-Andrés, S.; López-Meneses, E. Chatbot to improve learning punctuation in Spanish and to enhance open and flexible learning environments. Int. J. Educ. Technol. High. Educ. 2021, 18, 33.

- Villegas-Ch, W.; Arias-Navarrete, A.; Palacios-Pacheco, X. Proposal of an Architecture for the Integration of a Chatbot with Artificial Intelligence in a Smart Campus for the Improvement of Learning. Sustainability 2020, 12, 1500.

- Wambsganss, T.; Zierau, N.; Söllner, M.; Käser, T.; Koedinger, K.R.; Leimeister, J.M. Designing Conversational Evaluation Tools. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–27.

- Wan Hamzah, W.M.A.F.; Ismail, I.; Yusof, M.K.; Saany, S.I.M.; Yacob, A. Using Learning Analytics to Explore Responses from Student Conversations with Chatbot for Education. Int. J. Eng. Pedagog. 2021, 11, 70–84.

- Yildiz Durak, H. Conversational agent-based guidance: Examining the effect of chatbot usage frequency and satisfaction on visual design self-efficacy, engagement, satisfaction, and learner autonomy. Educ. Inf. Technol. 2023, 28, 471–488.

- Yin, J.; Goh, T.-T.; Yang, B.; Xiaobin, Y. Conversation Technology with Micro-Learning: The Impact of Chatbot-Based Learning on Students’ Learning Motivation and Performance. J. Educ. Comput. Res. 2021, 59, 154–177.

- Bakouan, M.; Kamagate, B.H.; Kone, T.; Oumtanaga, S.; Babri, M. A chatbot for automatic processing of learner concerns in an online learning platform. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 168–176.

- Briel, A. Toward an eclectic and malleable multiagent educational assistant. Comput. Appl. Eng. Educ. 2022, 30, 163–173.

- González-González, C.S.; Muñoz-Cruz, V.; Toledo-Delgado, P.A.; Nacimiento-García, E. Personalized Gamification for Learning: A Reactive Chatbot Architecture Proposal. Sensors 2023, 23, 545.

- Janati, S.E.; Maach, A.; Ghanami, D.E. Adaptive e-learning AI-powered chatbot based on multimedia indexing. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 299–308.

- Jimenez Flores, V.J.; Jimenez Flores, O.J.; Jimenez Flores, J.C.; Jimenez Castilla, J.U. Performance comparison of natural language understanding engines in the educational domain. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 753–757.

- Karra, R.; Lasfar, A. Impact of Data Quality on Question Answering System Performances. Intell. Autom. Soft Comput. 2023, 35, 335–349.

- Kharis, M.; Schön, S.; Hidayat, E.; Ardiansyah, R.; Ebner, M. Mobile Gramabot: Development of a Chatbot App for Interactive German Grammar Learning. Int. J. Emerg. Technol. Learn. 2022, 17, 52–63.

- Kohnke, L. A Pedagogical Chatbot: A Supplemental Language Learning Tool. RELC J. 2022, 1–11.

- Lippert, A.; Shubeck, K.; Morgan, B.; Hampton, A.; Graesser, A. Multiple Agent Designs in Conversational Intelligent Tutoring Systems. Technol. Knowl. Learn. 2020, 25, 443–463.

- Mateos-Sanchez, M.; Melo, A.C.; Blanco, L.S.; García, A.M.F. Chatbot, as Educational and Inclusive Tool for People with Intellectual Disabilities. Sustainability 2022, 14, 1520.

- Memon, Z.; Aghian, H.; Sarfraz, M.S.; Hussain Jalbani, A.; Oskouei, R.J.; Jalbani, K.B.; Hussain Jalbani, G. Framework for Educational Domain-Based Multichatbot Communication System. Sci. Program. 2021, 2021, 5518309.

- Nguyen, H.D.; Tran, D.A.; Do, H.P.; Pham, V.T. Design an Intelligent System to automatically Tutor the Method for Solving Problems. Int. J. Integr. Eng. 2020, 12, 211–223.

- Pashev, G.; Gaftandzhieva, S. Facebook Integrated Chatbot for Bulgarian Language Aiding Learning Content Delivery. TEM J. 2021, 10, 1011–1015.

- Schmitt, A.; Wambsganss, T.; Leimeister, J.M. Conversational Agents for Information Retrieval in the Education Domain: A User-Centered Design Investigation. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–22.

| Reference | Type | Year | Number of Primary Studies/Number of Data Sources | Method | Subject |
|---|---|---|---|---|---|
| [4] | SLR | 2021 | 29/1 | Coding scheme based on Chang & Hwang (2019) and Hsu et al. (2012) | Learning domains of ECAs; Learning strategies used by ECAs; Research design of studies in the field; Analysis methods utilized in relevant studies; Nationalities of authors and journals publishing relevant studies; Productive authors in the field |
| [6] | SLR | 2023 | 36/3 | Guidelines based on Keele et al. (2007) | Fields in which ECAs are used; Platforms on which the ECAs operate; Roles that ECAs play when interacting with students; Interaction styles that are supported by the ECAs; Principles that are used to guide the design of ECAs; Empirical evidence that exists to support the capability of using ECAs as teaching assistants for students; Challenges of applying and using ECAs in the classroom |
| [3] | SLR | 2021 | 53/6 | Methods based on Kitchenham et al. (2007), Wohlin et al. (2012) and Aznoli & Navimipour (2017) | The most recent research status or profile for ECA applications in the education domain; The primary benefits of ECA applications in education; The challenges faced in the implementation of an ECA system in education; The potential future areas of education that could benefit from the use of ECAs |
| [2] | SLR | 2020 | 80/8 | PRISMA framework | The different types of educational and/or educational environment chatbots currently in use; The way ECAs affect student learning or service improvement; The type of technology ECAs use and the learning result that is obtained from each of them; The cases in which a chatbot helps learning under conditions similar to those of a human tutor; The possibility of evaluating the quality of chatbots and the techniques that exist for that |
| [7] | SLR | 2020 | 47 CAs/1 | undefined | Qualitative assessment of ECAs that operate on Meta Messenger |
| [5] | SLR | 2021 | 74/4 | PRISMA framework | The objectives for implementing chatbots in education; The pedagogical roles of chatbots; The application scenarios that have been used to mentor students; The extent to which chatbots are adaptable to students’ personal needs; The domains in which chatbots have been applied so far |

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| IC1: The examined chatbot application was used in teaching a subject to students and contains information about designing, integrating or evaluating it, as well as mentioning the tools or environments used in order to achieve that. | EC1: The chatbot application was designed for the training of specific target groups but not students or learners of an educational institution |
| IC2: The publication year of the article is between 2018 and 2023 | |
| IC3: The document type is a journal article | EC2: Articles that are focused too much on the results for the learners and do not describe the role of the CA and how it contributes to the results |
| IC4: The retrieved article is written in English | |

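The metadata-based inclusion criteria in the table above (IC2 to IC4) are mechanical enough to be sketched in code. The following Python sketch is illustrative only and is not taken from the review: the `Record` type, its field names and the expected string values are assumptions. IC1, EC1 and EC2 require reading the article itself and are deliberately left to human screening.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record; the field names are assumptions."""
    title: str
    year: int
    doc_type: str
    language: str


def passes_metadata_criteria(record: Record) -> bool:
    """Apply only the criteria that can be checked from metadata.

    IC2: publication year between 2018 and 2023 (inclusive).
    IC3: document type is a journal article.
    IC4: article is written in English.
    IC1, EC1 and EC2 need a human reader and are not checked here.
    """
    in_year_range = 2018 <= record.year <= 2023
    is_journal_article = record.doc_type.strip().lower() == "journal article"
    is_english = record.language.strip().lower() == "english"
    return in_year_range and is_journal_article and is_english


if __name__ == "__main__":
    candidates = [
        Record("Example A", 2020, "journal article", "English"),
        Record("Example B", 2017, "journal article", "English"),
        Record("Example C", 2021, "conference paper", "English"),
    ]
    # Keep only the records that survive the metadata screen.
    kept = [r.title for r in candidates if passes_metadata_criteria(r)]
    print(kept)
```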
| Educational Grade Level | References |
|---|---|
| K-12 education (14) | [9,10,11,12,13,14,15,16,17,18,19,20,21,22] |
| Tertiary education (43) | [23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65] |
| Unspecified (16) | [6,7,66,67,68,69,70,71,72,73,74,75,76,77,78,79] |

| Category | References |
|---|---|
| Linear (28) | [12,18,19,21,22,29,30,34,36,38,39,42,46,52,57,58,59,60,62,64,65,68,71,72,73,75,78,79] |
| Iterative (3) | [9,41,63] |

| Kind of Information | References |
|---|---|
| A suitable methodology to develop an ECA. | [40] |
| Communication methods and strategies that are going to be used to form the function of the educational tutor. | [42] |
| Design requirements to develop an ECA. | [79] |
| Design principles to develop an ECA. | [79] |
| Empirical information by examining similar applications. | [9,36,57,60] |
| Learning methods that are going to be used to form the function of the educational tutor. | [58] |
| Mechanism prototypes that will be used to develop the educational agent. | [69] |
| Ready-to-use chatbots for the learning process. | [10,11,26] |
| Suitable environments and tools to develop an ECA. | [12,21,49,60,75] |
| Technical requirements to develop an ECA. | [44,75] |
| Theories relevant to the development of an ECA. | [25] |

| Requirements | References |
|---|---|
| Users’ needs and expectations. | [19,30,36,39,41,62,73,75,79] |
| Technical requirements. | [75] |
| Collection of students’ common questions and most searched topics to be used in the educational material. | [18,19,34,38,39,46,60,73] |

| Objects to Be Defined | References |
|---|---|
| An application flow to show the function of the ECA. | [41] |
| Communication channels the ECA is going to be accessible from. | Common to all |
| Education plan. | [11] |
| Learning material and items the chatbot is going to use. | Common to all |
| Learning methods and techniques that will be used to develop the agent. | [25,41] |
| Learning objectives, tasks and goals. | [39,41] |
| Student personas and conversational standards of the student–agent interaction. | [29] |
| Teaching and learning processes. | [12,54,64,65] |
| The conversational flow between the student and the chatbot. | [52,76] |
| The design principles of the chatbot. | [19,62,79] |
| The processing mechanisms of the chatbots that are going to be used. | [29,42] |
| The purpose of the educational CA. | [9,29,53,72] |
| Tools and environments or the ready solutions of already built CAs that are going to be used. | Common to all |

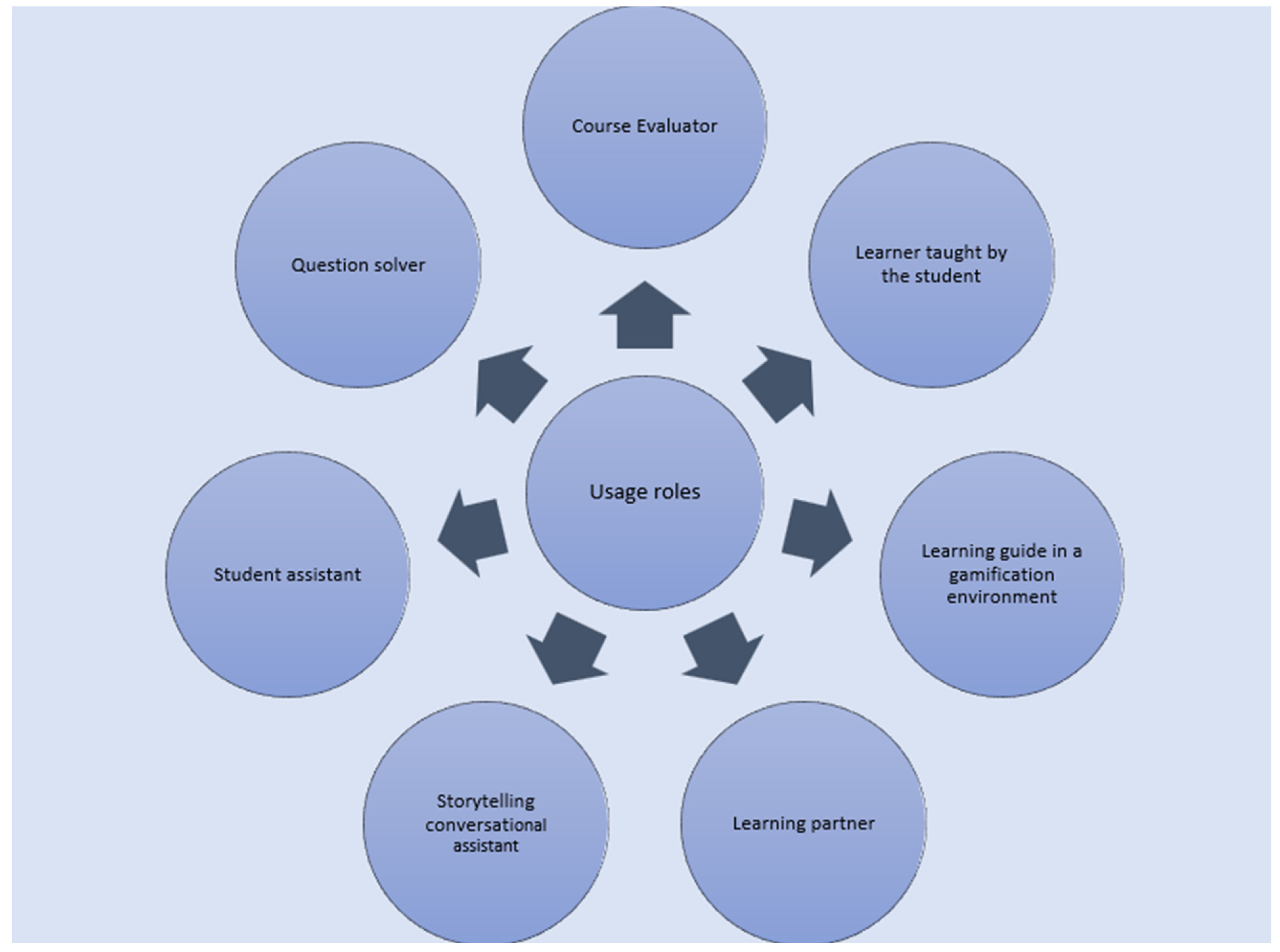

| Usage Roles of ECAs | References |
|---|---|
| Course evaluator | [62] |
| Learner taught by the student | [6,28] |
| Learning guide in a gamification environment | [68] |
| Self-Evaluator (learning partner/educational tutor) | [9,10,11,12,13,17,20,21,22,29,30,33,45,52,55,57,58,59,64,65,74,75,77,79] |
| Storytelling conversational assistant | [24] |
| Student assistant (provider of supportive educational material) | [11,19,37,38,46,53] |
| Question solver | [33,40] |

| Proposed Steps | References |
|---|---|
| Evaluating students’ questions to measure their complexity and bias rate. | [38] |
| Shaping of the final learning material that is going to be used by the educational tutor. | Common to all |

| Proposed Steps | References |
|---|---|
| Evaluating students’ competence in answering questions to modify the learning content accordingly. | [38] |
| Enriching the educational material with various forms of the educational material apart from text messages. | [21,52,53,65] |
| Studying the curriculum of the educational institute and adapting the design principles of the ECA to it. | [21,22,62] |
| Studying the curriculum of the educational institute and modifying the function of the ECA to it. | [21,22] |
| Adapting the function of the ECA to the teaching process. | [12] |
| Getting domain experts’ opinions to modify the function and the learning material used by the ECA. | [21,41] |

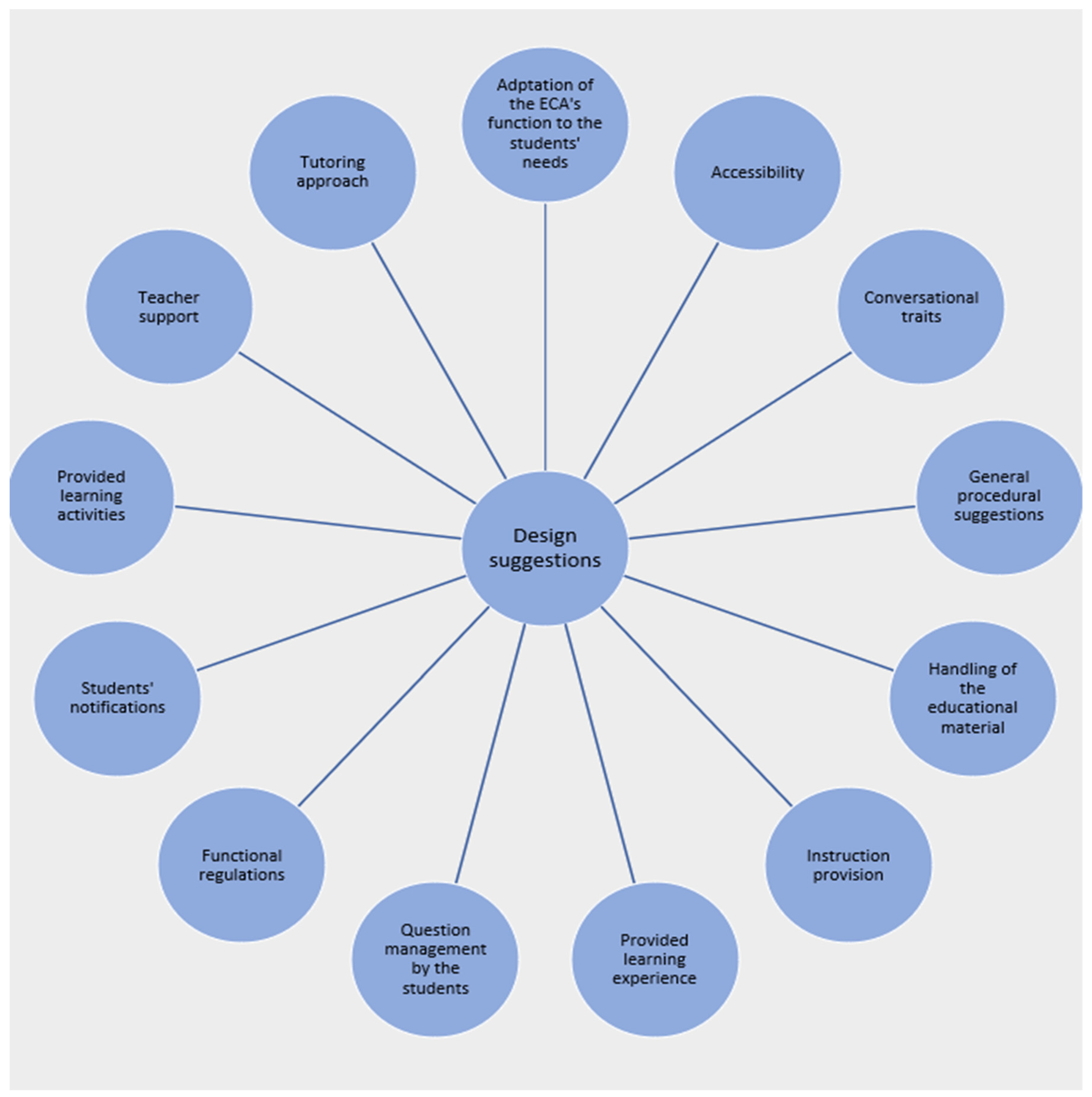

| Design Direction | Design Suggestion | References |
|---|---|---|
| Adaptation of the ECA’s function to students’ needs | Adaptation of the ECA’s function to the emotional needs of the students | [79] |
| | Alignment of the ECA’s function with students’ learning needs | [6,19,23,33,68] |
| | Adjustment of the ECA’s function to the curriculum of the students | [21,22] |
| | Modification of the ECA’s function to match user expectations | [23,30] |
| | Definition of the ECA’s vocabulary and expression style to be suitable for the students’ linguistic capabilities | [28] |
| | Construction of the ECA in order to be an inclusive educational tool (suitable for every learner) | [75] |
| Accessibility | Alignment of the ECA’s function with the selected communication channels | [19] |
| | The ECA should be accessible from various communication channels | [17,26] |
| | Guaranteed chatbot availability regardless of the external conditions | [23,68] |
| Conversational traits | Equipment of the ECA with many variations for phrases with the same meaning | [19,55,59] |
| | Acceptance of oral messages as input | [17,75] |
| | The ECA should address students by their name to provide personalized conversations | [46,52] |
| | Avoidance of spelling errors | [28] |
| | Capability to discuss a wide range of topics, including casual, non-educational subjects | [6,23,46,62] |
| | The ECA should collect more information when the user cannot be understood by discussing with them to identify their intent | [17,28] |
| | The ECA should let the user define the conversational flow when the CA cannot respond | [31] |
| | Provision of messages about the limitation of the ECA when it cannot respond | [40] |
| | Provision of motivational comments and rewarding messages to students | [12,17,27,29,45,68,74] |
| | The ECA should produce quick responses | [20,46] |
| | Redirection of students to an educator’s communication channel when the ECA cannot respond | [46] |
| | Wise usage of humor in the user interaction | [26] |
| | Usage of easy-to-understand language in the response | [17] |
| | Utilization of human-like conversational characteristics such as emoticons, avatar representations and greeting messages | [27,28,29,46,62] |
| | Utilization of previous students’ responses to improve its conversational ability and provide personalized communication | [17,26,33,36,79] |
| | Usage of button-formed topic suggestions for quicker interaction | [17] |
| | Usage of “start” and “restart” interaction buttons | [17] |
| Design process general suggestions | Engage every possible stakeholder of the development process to gain better results | [36] |
| | Make the database of the system expandable | [33] |
| Handling of the educational material | Explanation of the educational material from various perspectives | [27,52,74] |
| | The ECA should predict different subjects the students did not comprehend and provide relevant supportive material | [46] |
| | Suggestion of external learning sources to students when it cannot respond | [33] |
| | Proposition of learning topics similar to the current one to help students learn on their own | [33] |
| | The ECA should provide educational material in small segments with specific content | [28,38,52] |
| | Provision of educational material in various forms apart from text messages | [12,17,26,29,52,53,64,65,68] |
| | Navigation buttons between the segments of the presented educational material | [52] |
| | Oral narration to accompany the offered learning material | [52] |
| | Handling of quizzes, tests or self-evaluation material | [9,10,11,12,13,17,21,22,28,29,30,33,45,52,55,56,58,59,64,65,74,75,77,79] |
| | Recommendation of suitable practice exercises to the students | [13] |
| Instruction provision | The ECA should provide usage instructions through the functional environment of the ECA | [58,79] |
| Provided learning experience | Integration of other technologies such as AR to provide better user experience | [12] |
| | The ECA should provide feedback to students | [12,13,20,27,45] |
| | The ECA should provide personalized learning experience | [68] |
| | Use of gamification elements | [68] |
| Question handling and control by the students | Addition of buttons so students can handle the questions they cannot answer | [20,28] |
| | The ECA should allow students to trace back to previous exercises and definitions | [27] |
| | Provision of hints to students when they cannot answer a question | [28] |
| | Use of button-formed reappearance of wrong questions so that they are easier to answer | [28] |
| Regulations for the function of the system | Alignment of the ECA’s function with the ethics policies and rules for the protection of the user data | [17,26,28,46,62,68] |
| | Adjustment of the ECA’s function to the policies of the educational institution | [62,79] |
| Students’ notifications | The ECA should provide updates to students for important course events such as deadlines | [29] |
| Traits of the provided learning activities | Provision of challenging and interesting student learning activities | [6,12,23] |
| | The ECA should provide collaborative learning activities | [68] |
| | Utilization of competitive learning activities | [10,68] |
| Teacher support | Alignment of the ECA’s function with the form of the teaching material | [19] |
| | Adjustment of the ECA’s function to the teaching style of the educator | [68] |
| | The ECA should provide goal-setting possibilities to the teachers | [68] |
| Tutoring approach | Alignment of the ECA’s function with specific learning theories | [46,58] |
| | Adjustment of the ECA’s function to specific motivational framework | [12,29] |
| | Alignment of the ECA’s function with the learning purpose | [9] |
| | Design of the ECA as a student that learns from the students | [6] |
| | The ECA should utilize predefined learning paths | [56] |
| | Usage of students’ previous knowledge and skills to help them learn new information | [6] |
| | Utilization of learning motives such as students’ grades to increase students’ engagement willingness | [29] |

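Several of the conversational-trait suggestions in the table above describe what an ECA should do when it cannot respond: vary its phrasing, first try to identify the student's intent, then state its limitation and redirect the student to an educator. A minimal Python sketch of one way to combine them is given here. It is an illustration under stated assumptions, not a design taken from any reviewed study: the function `reply`, the exact-match lookup, the `failed_turns` counter and the wording of the messages are all hypothetical.

```python
import random
from typing import Dict, List, Optional

# Several phrasings with the same meaning, so that repeated
# clarification requests do not read identically (hypothetical wording).
CLARIFY_VARIANTS: List[str] = [
    "Could you rephrase that?",
    "I did not quite get that. Can you say it another way?",
    "Can you tell me a bit more about what you are asking?",
]


def reply(
    message: str,
    answers: Dict[str, str],
    failed_turns: int,
    educator_contact: str,
    rng: Optional[random.Random] = None,
) -> str:
    """Return an answer, a clarification request, or a redirection.

    `answers` maps normalized questions to answers; a real ECA would use
    intent recognition instead of this exact-match lookup (assumption).
    `failed_turns` counts earlier consecutive turns that were not understood.
    """
    rng = rng or random.Random()
    key = message.strip().lower()
    if key in answers:
        return answers[key]
    if failed_turns == 0:
        # First failure: ask for more information to identify the intent.
        return rng.choice(CLARIFY_VARIANTS)
    # Repeated failure: state the limitation and redirect to the educator.
    return (
        "Sorry, this is outside what I can answer. "
        f"Please contact your educator at {educator_contact}."
    )


if __name__ == "__main__":
    faq = {"what is photosynthesis?": "It is how plants turn light into energy."}
    print(reply("What is photosynthesis?", faq, 0, "teacher@example.org"))
    print(reply("huh?", faq, 0, "teacher@example.org"))
    print(reply("huh?", faq, 1, "teacher@example.org"))
```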
| Proposed Steps | References |
|---|---|
| Using previously collected or preconstructed material to train the chatbot | [31,48] |

| Training Method | References |
|---|---|
| Datasets of the development platforms | [70,75] |
| Existing corpora of data | [48] |
| Educational material (predefined sets of questions that students have done or formed by domain experts or educators) | [18,21,31,34,44,49,59,76] |
| Machine learning techniques | [27,63] |

| Proposed Steps | References |
|---|---|
| Trying a pilot application with a few students, teachers or domain experts to evaluate the first function of the ECA. | [9,18,19,31,36,41,42,72,73,75] |
| Applying modifications based on the testing results. | |

| Testing Method | References |
|---|---|
| Domain expert or educator testing | [41,55] |
| Student testing | [9,24,31,36,42,73,75] |
| Testing using performance metrics | [19,76] |
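
As a rough illustration of what testing with performance metrics could look like in practice, the sketch below scores a chatbot's answers against a small set of expected answers. The `QACase` structure, the `toy_bot` stand-in, and the exact-match scoring rule are all assumptions made for this example; the reviewed studies do not prescribe a particular metric or test harness.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class QACase:
    """One hypothetical test item: a question and its expected answer."""
    question: str
    expected: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def response_accuracy(bot: Callable[[str], str], cases: List[QACase]) -> float:
    """Share of test questions whose answer matches the expected one exactly."""
    if not cases:
        return 0.0
    correct = sum(
        normalize(bot(case.question)) == normalize(case.expected)
        for case in cases
    )
    return correct / len(cases)


def toy_bot(question: str) -> str:
    """Stand-in for a real ECA (assumption): a lookup table with a fallback."""
    answers = {"what is a variable?": "A named storage location."}
    return answers.get(normalize(question), "Sorry, I do not know.")


cases = [
    QACase("What is a variable?", "a named storage location."),
    QACase("What is a loop?", "A repeated block of code."),
]
accuracy = response_accuracy(toy_bot, cases)
print(f"Response accuracy: {accuracy:.2f}")
```

Exact matching is deliberately strict; a pilot with educators or domain experts, as listed in the table above, would typically be needed to judge answers that are correct but worded differently.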

| Proposed Steps | References |
|---|---|
| Providing guidance to the students on how to use the chatbot. | [10,11,22,24,40,54,57,58] |
| Motivating students to use the agent. | [12,24,30] |

| Proposed Steps | References |
|---|---|
| Evaluating the chatbot based on system analytics and user evaluations. | Common to all |
| Restarting the procedure from the design stage and using the evaluation data to improve the agent. | |

| Evaluation Instruments | References |
|---|---|
| Interviews | [13,14,16,21,29,34,42,45,46,51,73] |
| Learning and interaction analytics | [9,13,15,16,17,20,24,25,32,33,39,42,43,48,58,60] |
| Questionnaires | [10,11,12,13,14,15,17,19,22,23,26,28,29,30,31,32,33,35,36,39,40,41,42,43,45,46,47,48,49,50,51,52,53,54,55,57,58,59,62,64,65,67,72] |
| Student performance | [16,27,29,34,43,61,67] |
| Technical performance metrics | [18,44,60,62,69,70,76] |

| Evaluation Method | References |
|---|---|
| Comparison of the current ECA with other ECAs | [44,79] |
| Functional assessments by domain experts | [39,49] |
| Student usage evaluation | Common to all |
| Usability evaluation | [19,32,42,49,53] |
| Workshops for users or stakeholders | [31] |

| Evaluation Category | Evaluation Metric | References |
|---|---|---|
| Interaction metrics | Acceptance of chat messages | [20] |
| | Acceptance or indifference to the agent’s feedback | [7,20] |
| | Average duration of the interaction between the user and the ECA | [24,42] |
| | Capability of answering user questions and providing a pleasant interaction | [7] |
| | Capability of conducting a personalized conversation | [7] |
| | Capability of providing a human-like interaction and keeping a conversation going | [7] |
| | Capability of understanding and responding to the user | [7,9,19,32,53,62] |
| | Capability of understanding different uses of the language | [7] |
| | Periodicity of chat messages | [20] |
| | Quality and accuracy of responses | [18,76] |
| | The average number of words in the messages written by the students | [24] |
| | The number of propositions that were utilized by the learners | [33] |
| | The total duration of students’ time spent interacting with the ECA | [9,13,19] |
| | The total number of buttons that were pressed by the learners | [24] |
| | The total number of propositions the ECA offered to the learners | [33] |
| | The total number of users that utilized the ECA | [33] |
| | The total number of words that were produced by the ECA | [24] |
| | The total number of words written by the students | [24] |
| | The number of user inputs that were formed using natural language and were understood by the chatbot | [33] |
| | Total number of interactions between the student and the ECA | [24,33] |
| | Total number of messages between the student and the ECA | [42] |
| Support and scaffolding of the educational process | Capability of supporting the achievement of students’ learning goals and tasks | [7,13,42] |
| | Fulfillment of the initial purpose of the agent | [55] |
| | Rate of irrelevant (in an educational context) questions asked by the students | [9] |
| | Students’ rate of correct answers (to questions posed by the chatbot) | [20] |
| | The quality of the educational material suggestions made by the ECA | [7,9,19,32,62] |
| Technical suitability and performance | Compatibility with other software | [19] |
| | Maintenance needs | [19] |
| User experience | Students’ self-efficacy and learning autonomy | [64,79] |
| | Students’ workload | [9] |
| | Usability | [19,32,42,49,53] |
| | User motivation | [9,53] |
| | User satisfaction | [33,51,58,64] |
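
Several of the interaction metrics listed above are simple aggregates over a conversation log. The following is a minimal sketch of how a few of them (message count, student and ECA word counts, average words per student message, and interaction duration) might be computed. The `Message` record, the `"student"`/`"eca"` sender labels, and the choice to measure duration as the span between the first and last message are assumptions made for illustration, not definitions taken from the reviewed studies.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List


@dataclass
class Message:
    """One logged chat message (hypothetical log format)."""
    sender: str          # assumed labels: "student" or "eca"
    text: str
    sent_at: datetime


def interaction_metrics(log: List[Message]) -> Dict[str, float]:
    """Aggregate a few interaction metrics over one conversation log."""
    student = [m for m in log if m.sender == "student"]
    eca = [m for m in log if m.sender == "eca"]
    student_words = sum(len(m.text.split()) for m in student)
    eca_words = sum(len(m.text.split()) for m in eca)
    # Duration is taken as the time between the first and last message.
    if log:
        times = [m.sent_at for m in log]
        duration = (max(times) - min(times)).total_seconds()
    else:
        duration = 0.0
    return {
        "total_messages": len(log),
        "student_words": student_words,
        "eca_words": eca_words,
        "avg_words_per_student_message": (
            student_words / len(student) if student else 0.0
        ),
        "duration_seconds": duration,
    }


log = [
    Message("student", "What is recursion?", datetime(2023, 9, 1, 10, 0, 0)),
    Message("eca", "Recursion is a function calling itself.",
            datetime(2023, 9, 1, 10, 0, 5)),
    Message("student", "Can you give an example?", datetime(2023, 9, 1, 10, 1, 0)),
    Message("eca", "Sure, consider the factorial function.",
            datetime(2023, 9, 1, 10, 1, 30)),
]
metrics = interaction_metrics(log)
print(metrics)
```

Counting words by whitespace splitting is a simplification; a study reporting these metrics would need to state its own tokenization and session-boundary rules.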

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ramandanis, D.; Xinogalos, S. Designing a Chatbot for Contemporary Education: A Systematic Literature Review. Information 2023, 14, 503. https://doi.org/10.3390/info14090503
