Obstacles to Applying Electronic Exams amidst the COVID-19 Pandemic: An Exploratory Study in the Palestinian Universities in Gaza

Abstract

1. Introduction

1.1. Objectives

- Identify the obstacles faced by university teachers and students at the Palestinian universities in the governorates of Gaza in the use of electronic examinations during the COVID-19 pandemic.

- Clarify agreement and differences in teacher and student perceptions of the identified obstacles.

- Establish the significance of the obstacles in the local context.

- Consider possible approaches to addressing these obstacles, suited to the local context.

1.2. Major Questions of This Study

- How were remote unproctored electronic written exams during the COVID-19 pandemic perceived by teachers and students at Gaza universities?

- Are there any differences in the obstacles identified by teachers and students at Gaza universities concerning the electronic exams during the COVID-19 pandemic?

2. Electronic Exams Theoretical Framework

2.1. The Concept of Electronic Exams

2.2. Electronic Exams—Characteristics and Advantages

2.3. Electronic Exams—Disadvantages

2.4. Earlier Research on Electronic Examination and Emergency Remote Teaching

2.5. Earlier Research on Attitudes and Barriers for Remote Electronic Examination

2.6. Higher Education and Socioeconomic Conditions in Gaza

3. Data Collection and Procedures

3.1. Study Approach and Method

3.2. Study Tool

3.3. Categorization and Coding

“widespread instances of cheating among students” (student)

“there was no real surveillance during the exams” (student)

“cheating was sometimes allowed” (teacher)

“the students may resort to a variety of sources to obtain answers” (teacher)

“weak internet for all and absence [of internet] in some houses” (student)

“weak internet connectivity” (student)

“internet problems” (teacher)

“malfunctioning of the internet network leads to an interruption” (teacher)

“several members of the same family sharing internet, with ensuing slow internet speed” (teacher)
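The quoted responses above fall into two recognizable themes: exam integrity and internet access. As a rough illustration of how coded free-text responses could be tallied by respondent group, the following minimal sketch may help. The category labels (`cheating`, `internet`), the tuple layout, and the `tally` helper are assumptions made for this example only; they are not the authors' coding scheme or tooling.

```python
from collections import Counter

# Quotes are taken from this section; the category labels are
# illustrative assumptions, not the authors' actual codes.
coded_responses = [
    ("student", "cheating", "widespread instances of cheating among students"),
    ("student", "cheating", "there was no real surveillance during the exams"),
    ("teacher", "cheating", "cheating was sometimes allowed"),
    ("teacher", "cheating", "the students may resort to a variety of sources to obtain answers"),
    ("student", "internet", "weak internet for all and absence [of internet] in some houses"),
    ("student", "internet", "weak internet connectivity"),
    ("teacher", "internet", "internet problems"),
    ("teacher", "internet", "malfunctioning of the internet network leads to an interruption"),
    ("teacher", "internet", "several members of the same family sharing internet, with ensuing slow internet speed"),
]


def tally(responses):
    """Count coded responses per (respondent group, category) pair."""
    return Counter((role, category) for role, category, _ in responses)


counts = tally(coded_responses)
for (role, category), n in sorted(counts.items()):
    print(f"{role:8s} {category:10s} {n}")
```

A tally of this kind only summarizes labels that have already been assigned by hand; it does not replace the qualitative step of reading and categorizing each response.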

3.4. Limitations

4. Results

4.1. Classification of Constraints

4.2. Obstacles Arrangement (Ordering)

4.3. Significance of Diverging Perceptions

5. Discussion

5.1. Personal Obstacles

5.2. Pedagogical Obstacles

5.3. Technical Obstacles

5.4. Financial and Organizational Obstacles

5.5. Extent of Agreement between Student and Teacher Responses

5.6. Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- Abu Talib M., Bettayeb A.M., Omer R.I. Analytical study on the impact of technology in higher education during the age of COVID-19: Systematic literature review. Education and Information Technologies 2021. doi: 10.1007/s10639-021-10507-1

- Adanir et al. (a): Afacan Adanir G., Muhametjanova G., Celikbag M.A., Omuraliev A., Ismailova R. Learners’ preferences for online resources, activities, and communication tools: A comparative study of Turkey and Kyrgyzstan. E-Learning and Digital Media 2020, 17(2), 148–166.

- Adanir et al. (b): Adanir G.A., Ismailova R., Omuraliev A., Muhametjanova G. Learners perceptions of online exams: A comparative study in Turkey and Kyrgyzstan. International Review of Research in Open and Distance Learning 2020, 21(3), 1–17.

- Adzima K. Examining online cheating in higher education using traditional classroom cheating as a guide. Electronic Journal of e-Learning 2020, 18(6), 476–493.

- Alfiras M., Bojiah J., Yassin A.A. COVID-19 pandemic and the changing paradigms of higher education: A Gulf university perspective. Asian EFL Journal 2020, 27(5.1), 339–347.

- Almusharraf N.M., Khahro S.H. Students’ Satisfaction with Online Learning Experiences during the COVID-19 Pandemic. International Journal of Emerging Technologies in Learning 2020, 15(21), 246–267.

- Alshurideh M.T., Al Kurdi B., AlHamad A.Q., Salloum S.A., Alkurdi S., Dehghan A., Abuhashesh M., Masa’deh R. Factors affecting the use of smart mobile examination platforms by universities’ postgraduate students during the COVID-19 pandemic: An empirical study. Informatics 2021, 8(2), 32.

- Ana A. Trends in expert system development: A practicum content analysis in vocational education for over grow pandemic learning problems. Indonesian Journal of Science and Technology 2020, 5(2), 246–260.

- Arora S., Chaudhary P., Singh R.K. Impact of coronavirus and online exam anxiety on self-efficacy: the moderating role of coping strategy. Interactive Technology and Smart Education 2021. doi: 10.1108/ITSE-08-2020-0158

- Ayyash M.M., Herzallah F.A.T., Ahmad W. Towards social network sites acceptance in e-learning system: Students’ perspective at Palestine Technical University-Kadoorie. International Journal of Advanced Computer Science and Applications 2020, 2, 312–320.

- Bashitialshaaer, R.; Alhendawi, M.; Lassoued, Z. Obstacle Comparisons to Achieving Distance Learning and Applying Electronic Exams during COVID-19 Pandemic. Symmetry 2021, 13, 99.

- Butler-Henderson, K., Crawford, J. A systematic review of online examinations: A pedagogical innovation for scalable authentication and integrity. Computers & Education 2020, 159, 104024.

- Cicek I., Bernik A., Tomicic I. Student thoughts on virtual reality in higher education—a survey questionnaire. Information 2021, 12(4), 151.

- Coskun-Setirek A., Tanrikulu Z. M-Universities: Critical Sustainability Factors. SAGE Open 2021, 11(1). doi: 10.1177/2158244021999388

- Goff D., Johnston J., Bouboulis B.S. Maintaining academic standards and integrity in online business courses. International Journal of Higher Education 2020, 9(2), 248–257.

- Goldberg D.M. Programming in a pandemic: Attaining academic integrity in online coding courses. Communications of the Association for Information Systems 2021, 48, 47–54.

- Gormaz-Lobos D., Galarce-Miranda C., Hortsch H. Teacher Training’s Needs in University Context: A Case Study of a Chilean University of Applied Sciences. International Journal of Emerging Technologies in Learning 2021, 16(9), 119–132.

- Gottipati S., Shankararaman V. Rapid transition of a technical course from face-to-face to online. Communications of the Association for Information Systems 2021, 48, 7–14.

- Gudino Paredes S., Jasso Pena F.D.J., de La Fuente Alcazar J.M. Remote proctored exams: Integrity assurance in online education? Distance Education 2021. doi: 10.1080/01587919.2021.1910495

- Harris L., Harrison D., McNally D., Ford C. Academic Integrity in an Online Culture: Do McCabe’s Findings Hold True for Online, Adult Learners? Journal of Academic Ethics 2020, 18(4), 419–434.

- Jamieson M.V. Keeping a learning community and academic integrity intact after a mid-term shift to online learning in chemical engineering design during the COVID-19 Pandemic. Journal of Chemical Education 2020, 97(9), 2768–2772.

- Jia J., He Y. The design, implementation and pilot application of an intelligent online proctoring system for online exams. Interactive Technology and Smart Education 2021. doi: 10.1108/ITSE-12-2020-0246

- Jaramillo-Morillo D., Ruiperez-Valiente J., Sarasty M.F., Ramirez-Gonzalez G. Identifying and characterizing students suspected of academic dishonesty in SPOCs for credit through learning analytics. International Journal of Educational Technology in Higher Education 2020, 17(11), 45.

- Jawad Y.A.L.A., Shalash B. The impact of e-learning strategy on students’ academic achievement case study: Al-Quds Open University. International Journal of Higher Education 2020, 9(6), 44–53.

- Kamal A.A., Shaipullah N.M., Truna L., Sabri M., Junaini S.N. Transitioning to online learning during COVID-19 Pandemic: Case study of a Pre-University Centre in Malaysia. International Journal of Advanced Computer Science and Applications 2020, 11(6), 217–223.

- Kamber D.N. Personalized Distance-Learning Experience through Virtual Oral Examinations in an Undergraduate Biochemistry Course. Journal of Chemical Education 2021, 98(2), 395–399.

- Khan Z.F., Alotaibi S.R. Design and implementation of a computerized user authentication system for e-learning. International Journal of Emerging Technologies in Learning 2020, 15(9), 4–18.

- Khan M.A., Vivek, Nabi M.K., Khojah M., Tahir M. Students’ perception towards e-learning during covid-19 pandemic in India: An empirical study. Sustainability 2021, 13(1), 1–14.

- Klyachko T.L., Novoseltsev A.V., Odoevskaya E.V., Sinelnikov-Murylev S.G. Lessons Learned from the Coronavirus Pandemic and Possible Changes to Funding Mechanisms in Higher Education. Voprosy Obrazovaniya 2021, 1, 1–30.

- Kuhlmeier V.A., Karasewich T.A., Olmstead M.C. Teaching Animal Learning and Cognition: Adapting to the Online Environment. Comparative Cognition and Behavior Reviews 2020, 15, 1–29.

- Lassoued, Z.; Alhendawi, M.; Bashitialshaaer, R. An exploratory study of the obstacles for achieving quality in distance learning during the COVID-19 pandemic. Educ. Sci. 2020, 10, 232, doi:10.3390/educsci10090232.

- Novikov P. Impact of COVID-19 emergency transition to on-line learning on international students’ perceptions of educational process at Russian University. Journal of Social Studies Education Research 2020, 11(3), 270–302.

- Qashou A. Influencing factors in M-learning adoption in higher education. Education and Information Technologies 2021, 26(2), 1755–1785.

- Tsekea, S., Chigwada, J.P. COVID-19: strategies for positioning the university library in support of e-learning. Digital Library Perspectives 2021, 37(1), 54–64.

- Usher M., Hershkovitz A., Forkosh-Baruch A. From data to actions: Instructors’ decision making based on learners’ data in online emergency remote teaching. British Journal of Educational Technology 2021. doi: 10.1111/bjet.13108

- Wu W., Berestova A., Lobuteva A., Stroiteleva N. An Intelligent Computer System for Assessing Student Performance. International Journal of Emerging Technologies in Learning 2021, 16(2), 31–45.

References

- World Health Organization. Corona Virus Disease (COVID-19): Question and Answer. 2020. Available online: https://www.who.int/ar/emergencies/diseases/novel-coronavirus-2019/advice-for-public/q-a-coronaviruses (accessed on 17 May 2020).

- UNESCO. Startling Disparities in Digital Learning Emerge as COVID-19 Spreads: UN Education Agency. UN News, 21 April 2020. Available online: https://news.un.org/en/story/2020/04/1062232 (accessed on 21 March 2021).

- Khalaf, Z.N. Corona Virus and Digital Equality in Tele-Teaching in Emergency Situations. New Education Blog. Available online: https://www.new-educ.com// (accessed on 17 May 2020).

- García-Alberti, M.; Suárez, F.; Chiyón, I.; Mosquera Feijoo, C. Challenges and Experiences of Online Evaluation in Courses of Civil Engineering during the Lockdown Learning Due to the COVID-19 Pandemic. Educ. Sci. 2021, 11, 59.

- Gewin, V. Pandemic burnout is rampant in academia. Nature 2021, 591, 489–491.

- Bojovic, Z.; Bojovic, P.D.; Vujosevic, D.; Suh, J. Education in times of crisis: Rapid transition to distance learning. Comput. Appl. Eng. Educ. 2020, in press.

- Abdelhai, R.A. Distance Education in the Arab World and the Challenges of the Twenty-First Century; The Anglo-Egyptian Bookshop: Cairo, Egypt, 2010.

- Galvis, Á.H. Supporting decision-making processes on blended learning in higher education: Literature and good practices review. Int. J. Educ. Technol. High. Educ. 2018, 15, 1–38.

- Castro, R. Blended learning in higher education: Trends and capabilities. Educ. Inf. Technol. 2019, 24, 2523–2546.

- Bond, M.; Buntins, K.; Bedenlier, S.; Zawacki-Richter, O.; Kerres, M. Mapping research in student engagement and educational technology in higher education: A systematic evidence map. Int. J. Educ. Technol. High. Educ. 2020, 17, 2.

- Shen, C.W.; Ho, J.T. Technology-enhanced learning in higher education: A bibliometric analysis with latent semantic approach. Comput. Hum. Behav. 2020, 104, 106177.

- Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The difference between emergency remote teaching and online learning. Educ. Rev. 2020, 27, 1–12.

- Liguori, E.W.; Winkler, C. From offline to online: Challenges and opportunities for entrepreneurship education following the COVID-19 pandemic. Entrep. Educ. Pedagog. 2020.

- Gonçalves, E.; Capucha, L. Student-Centered and ICT-Enabled Learning Models in Veterinarian Programs: What Changed with COVID-19? Educ. Sci. 2020, 10, 343.

- Huang, R.H.; Liu, D.J.; Tlili, A.; Yang, J.F. Handbook on Facilitating Flexible Learning during Educational Disruption: The Chinese Experience in Maintaining Undisrupted Learning in COVID-19 Outbreak; Smart Learning Institute of Beijing Normal University: Beijing, China, 2020.

- Chakraborty, P.; Mittal, P.; Gupta, M.S.; Yadav, S.; Arora, A. Opinion of students on online education during the COVID-19 pandemic. Hum. Behav. Emerg. Tech. 2020, 3, 1–9.

- Dutta, S.; Smita, M.K. The impact of COVID-19 pandemic on tertiary education in Bangladesh: Students’ perspectives. Open J. Soc. Sci. 2020, 8, 53–68.

- Shehab, A.; Alnajar, T.M.; Hamdia, M.H. A study of the effectiveness of E-learning in Gaza Strip during COVID-19 pandemic: The Islamic University of Gaza “case study”. In Proceedings of the 3rd Scientia Academia International Conference (SAICon-2020), Kuala Lumpur, Malaysia, 26–27 December 2020.

- Subedi, S.; Nayaju, S.; Subedi, S.; Shah, S.K.; Shah, J.M. Impact of E-learning during COVID-19 pandemic among nursing students and teachers of Nepal. Int. J. Sci. Healthc. Res. 2020, 5, 68–76.

- Baticulon, R.E.; Sy, J.J.; Alberto, N.R.I.; Baron, M.B.C.; Mabulay, R.E.C.; Rizada, L.G.T.; Tia, C.J.S.; Clarion, C.A.; Reyes, J.C.B. Barriers to online learning in the time of COVID-19: A national survey of medical students in the Philippines. Med. Sci. Educ. 2021, 31, 615–626.

- Shraim, K. Online examination practices in higher education institutions: Learners’ perspectives. Turk. Online J. Distance Educ. 2019, 20, 185–196.

- Basilaia, G.; Dgebuadze, M.; Kantaria, M.; Chokhonelidze, G. Replacing the classic learning form at universities as an immediate response to the COVID-19 virus infection in Georgia. Int. J. Res. Appl. Sci. Eng. Technol. 2020, 8, 101–108.

- International Telecommunication Union ITU. The Last-mile Internet Connectivity Solutions Guide: Sustainable Connectivity Options for Unconnected Sites; International Telecommunication Union: Geneva, Switzerland, 2020.

- Kaliisa, R.; Michelle, P. Mobile learning policy and practice in Africa: Towards inclusive and equitable access to higher education. Australas. J. Educ. Technol. 2019, 35, 1–14.

- International Telecommunication Union ITU. The affordability of ICT services 2020; ITU Policy Brief; ITU: Geneva, Switzerland, 2021; 8p.

- Alshurideh, M.T.; Al Kurdi, B.; AlHamad, A.Q.; Salloum, S.A.; Alkurdi, S.; Dehghan, A.; Abuhashesh, M.; Masa’deh, R. Factors affecting the use of smart mobile examination platforms by universities’ postgraduate students during the COVID-19 pandemic: An empirical study. Informatics 2021, 8, 32.

- Qashou, A. Influencing factors in M-learning adoption in higher education. Educ. Inf. Technol. 2021, 26, 1755–1785.

- OECD. Bridging the Digital Gender Divide, Include, Upskill, Innovate; OECD: Paris, France, 2018.

- Khlaif, Z.N.; Salha, S. The unanticipated educational challenges of developing countries in Covid-19 crisis: A brief report. Interdiscip. J. Virtual Learn. Med Sci. 2020, 11, 130–134.

- Guàrdia, L.; Crisp, G.; Alsina, I. Trends and challenges of e-assessment to enhance student learning in Higher Education. In Innovative Practices for Higher Education Assessment and Measurement; Cano, E., Ion, G., Eds.; IGI Global: Hershey, PA, USA, 2017; pp. 36–56.

- Torres-Madroñero, E.M.; Torres-Madroñero, M.C.; Ruiz Botero, L.D. Challenges and Possibilities of ICT-Mediated Assessment in Virtual Teaching and Learning Processes. Future Internet 2020, 12, 232.

- Bennett, R.E. Online Assessment and the Comparability of Score Meaning; Educational Testing Service: Princeton, NJ, USA, 2003.

- Appiah, M.; Van Tonder, F. E-Assessment in Higher Education: A Review. Int. J. Bus. Manag. Econ. Res. 2018, 9, 1454–1460.

- Bahmawi, H.; Lamir, N. The Impact of Educational Evaluation Methods in the Context of Comparative Pedagogy with Competencies on the Pattern of Learner Achievement. Master’s Thesis, University of Adrar, Adrar, Algeria, 2014.

- Fluck, A.; Pullen, D.; Harper, C. Case study of a computer based examination. J. Educ. Technol. 2009, 25, 509–523.

- Ranne, S.C.; Lunden, O.; Kolari, S. An alternative teaching method for electrical engineering course. Proc. IEEE Trans. Educ. 2008, 51, 423–431.

- Lassoued, Z.; Alhendawi, M.; Bashitialshaaer, R. An exploratory study of the obstacles for achieving quality in distance learning during the COVID-19 pandemic. Educ. Sci. 2020, 10, 232.

- Bashitialshaaer, R.; Alhendawi, M.; Lassoued, Z. Obstacle Comparisons to Achieving Distance Learning and Applying Electronic Exams during COVID-19 Pandemic. Symmetry 2021, 13, 99.

- Rytkönen, A.; Myyry, L. Student Experiences on Taking Electronic Exams at the University of Helsinki. In Proceedings of the World Conference on Educational Multimedia, Hypermedia and Telecommunications, Tampere, Finland, 24 June 2014; pp. 2114–2121.

- Al-Khayyat, M. Students and instructor attitudes toward computerized tests in Business Faculty at the main campus of Al-Balqa Applied University. An-Najah Univ. J. Res. Hum. Sci. 2017, 31, 2041–2072.

- Tawafak, R.M.; Mohammed, M.N.; Arshah, R.B.A.; Romli, A. Review on the effect of student learning outcome and teaching technology in Omani’s higher education institution’s academic accreditation process. In Proceedings of the 2018 7th International Conference on Software and Computer Applications, CSCA 2018, Kuantan, Malaysia, 8–10 February 2018; pp. 243–247.

- Tsiligiris, V.; Hill, C. A prospective model for aligning educational quality and student experience in international higher education. Stud. High. Educ. 2021, 46, 228–244.

- Ahokas, K. Electronic Exams Save Teachers’ Time—and Nature. Available online: https://www.valamis.com/stories/csc-exam (accessed on 2 January 2021).

- Knowly. Advantages and Disadvantages of Online Examination System. 22 July 2020. Available online: https://www.onlineexambuilder.com/knowledge-center/exam-knowledge-center/advantages-and-disadvantages-of-online-examination-system/item10240 (accessed on 2 December 2020).

- Zozie, P.; Chawinga, W.D. Mapping an open digital university in Malawi: Implications for Africa. Res. Comp. Int. Educ. 2018, 13, 211–226.

- Bennett, R.E. How the internet will help large-scale assessments reinvent itself. Educ. Policy Anal. Arch. 2001, 9, 1–23.

- Ismail, R.; Safieddine, F.; Jaradat, A. E-university delivery model: Handling the evaluation process. Bus. Process. Manag. J. 2019, 25, 1633–1646.

- Ryan, S.; Scott, B.; Freeman, H.; Patel, D. The Virtual University: The Internet and Resource-Based Learning; Kogan Page: London, UK, 2000.

- Clotilda, M. Features of Online Examination Software. 24 May 2020. Available online: https://www.creatrixcampus.com/blog/top-15-features-online-examination-software (accessed on 5 January 2021).

- Harrison, N.; Bergen, C. Some design strategies for developing an online course. Educ. Technol. 2000, 40, 57–60.

- Luck, J.A.; Chugh, R.; Turnbull, D.; Rytas Pember, E. Glitches and hitches: Sessional academic staff viewpoints on academic integrity and academic misconduct. High. Educ. Res. Dev. 2021, 41, 1–16.

- Ngqondi, T.; Maoneke, P.B.; Mauwa, H. A secure online exams conceptual framework for South African universities. Soc. Sci. Humanit. Open 2021, 3, 100132.

- Jaramillo-Morillo, D.; Ruiperez-Valiente, J.; Sarasty, M.F.; Ramirez-Gonzalez, G. Identifying and characterizing students suspected of academic dishonesty in SPOCs for credit through learning analytics. Int. J. Educ. Technol. High. Educ. 2020, 17, 45.

- Liu, I.F.; Chen, R.S.; Lu, H.C. An exploration into improving examinees’ acceptance of participation in online exam. Educ. Technol. Soc. 2015, 18, 153–165.

- Sarrayrih, M.A.; Ilyas, M. Challenges of Online Exam, Performances and problems for Online University Exam. IJCSI Int. J. Comput. Sci. Issues 2013, 10, 439–443.

- Sarrayrih, M.A. Implementation and security development online exam, performances and problems for online university exam. Int. J. Comput. Sci. Inf. Secur. 2016, 14, 24.

- Harris, L.; Harrison, D.; McNally, D.; Ford, C. Academic Integrity in an Online Culture: Do McCabe’s Findings Hold True for Online, Adult Learners? J. Acad. Ethics 2020, 18, 419–434.

- Dawson, P. Five ways to hack and cheat with bring-your-own-device electronic examinations. Br. J. Educ. Technol. 2016, 47, 592–600.

- Chirumamilla, A.; Sindre, G.; Nguyen-Duc, A. Cheating in e-exams and paper exams: The perceptions of engineering students and teachers in Norway. Assess. Eval. High. Educ. 2020, 45, 940–957.

- Küppers, B.; Eifert, T.; Zameitat, R.; Schroeder, U. EA and BYOD: Threat Model and Comparison to Paper-based Examinations. In Proceedings of the CSEDU 2020—12th International Conference on Computer Supported Education, Prague, Czech Republic, 2–4 May 2020; pp. 495–502.

- Iglesias-Pradas, S.; Hernández-García, Á.; Chaparro-Peláez, J.; Prieto, J.L. Emergency remote teaching and students’ academic performance in higher education during the COVID-19 pandemic: A case study. Comput. Hum. Behav. 2021, 119, 106713.

- Kuntsman, A.; Rattle, I. Towards a paradigmatic shift in sustainability studies: A systematic review of peer reviewed literature and future agenda setting to consider environmental (Un)sustainability of digital communication. Environ. Commun. 2019, 13, 567–581.

- Wu, W.; Berestova, A.; Lobuteva, A.; Stroiteleva, N. An Intelligent Computer System for Assessing Student Performance. Int. J. Emerg. Technol. Learn. 2021, 16, 31–45.

- Tsekea, S.; Chigwada, J.P. COVID-19: Strategies for positioning the university library in support of e-learning. Digit. Libr. Perspect. 2021, 37, 54–64.

- Usher, M.; Hershkovitz, A.; Forkosh-Baruch, A. From data to actions: Instructors’ decision making based on learners’ data in online emergency remote teaching. Br. J. Educ. Technol. 2021.

- Gormaz-Lobos, D.; Galarce-Miranda, C.; Hortsch, H. Teacher Training’s Needs in University Context: A Case Study of a Chilean University of Applied Sciences. Int. J. Emerg. Technol. Learn. 2021, 16, 119–132.

- Jamieson, M.V. Keeping a learning community and academic integrity intact after a mid-term shift to online learning in chemical engineering design during the COVID-19 Pandemic. J. Chem. Educ. 2020, 97, 2768–2772.

- Almusharraf, N.M.; Khahro, S.H. Students’ Satisfaction with Online Learning Experiences during the COVID-19 Pandemic. Int. J. Emerg. Technol. Learn. 2020, 15, 246–267.

- Klyachko, T.L.; Novoseltsev, A.V.; Odoevskaya, E.V.; Sinelnikov-Murylev, S.G. Lessons Learned from the Coronavirus Pandemic and Possible Changes to Funding Mechanisms in Higher Education. Vopr. Obraz. 2021, 1, 1–30.

- Abu Talib, M.; Bettayeb, A.M.; Omer, R.I. Analytical study on the impact of technology in higher education during the age of COVID-19: Systematic literature review. Educ. Inf. Technol. 2021.

- Butler-Henderson, K.; Crawford, J. A systematic review of online examinations: A pedagogical innovation for scalable authentication and integrity. Comput. Educ. 2020, 159, 104024.

- Kamber, D.N. Personalized Distance-Learning Experience through Virtual Oral Examinations in an Undergraduate Biochemistry Course. J. Chem. Educ. 2021, 98, 395–399.

- Gudino Paredes, S.; Jasso Pena, F.D.J.; de La Fuente Alcazar, J.M. Remote proctored exams: Integrity assurance in online education? Distance Educ. 2021.

- Arora, S.; Chaudhary, P.; Singh, R.K. Impact of coronavirus and online exam anxiety on self-efficacy: The moderating role of coping strategy. Interact. Technol. Smart Educ. 2021.

- Jawad, Y.A.L.A.; Shalash, B. The impact of e-learning strategy on students’ academic achievement case study: Al-Quds Open University. Int. J. High. Educ. 2020, 9, 44–53.

- Alfiras, M.; Bojiah, J.; Yassin, A.A. COVID-19 pandemic and the changing paradigms of higher education: A Gulf university perspective. Asian EFL J. 2020, 27, 339–347.

- James, R. Tertiary student attitudes to invigilated, online summative examinations. Int. J. Educ. Technol. High. Educ. 2016, 13, 2–13.

- Thomas, P.; Price, B.; Paine, C.; Richards, M. Remote electronic examinations: Student experiences. Br. J. Educ. Technol. 2002, 33, 537–549.

- Ossiannilsson, E. Quality Models for Open, Flexible, and Online Learning. J. Comput. Sci. Res. 2020, 2, 19–31.

- Elayyat, J. Assessment of the Higher Education Sector Needs in Palestine, in the Context of the National Spatial Plan 2025. Ph.D. Thesis, Birzeit University, Birzeit, Palestine, 2016.

- UN Population Division. World Population Prospects. World Population Prospects—Population Division—United Nations. Available online: https://population.un.org/wpp/ (accessed on 10 June 2021).

- General Administration of Planning, Ministry of Education and Higher Education. Statistical Yearbook of Education in the Governorates of Gaza 2019/2020, Gaza, Palestine. Available online: www.mohe.ps (accessed on 10 June 2021).

- Palestinian Central Bureau of Statistics. PCBS. Available online: http://www.pcbs.gov.ps/default.aspx (accessed on 10 June 2021).

- Middle East Monitor. Official: Unemployment Rate Reaches 82% in Gaza Strip. Available online: https://www.middleeastmonitor.com/20201124-official-unemployment-rate-reaches-82-in-gaza-strip/ (accessed on 10 June 2021).

- UNCTAD. Economic Costs of the Israeli Occupation for the Palestinian People: The Gaza Strip under Closure and Restrictions. Report Prepared by the Secretariat of the United Nations Conference on Trade and Development on the Economic costs of the Israeli Occupation for the Palestinian People: The Gaza Strip under closure and Restrictions; A/75/310; UNCTAD: Geneva, Switzerland, 13 August 2020.

- OCHAOPT. Food Insecurity in the Opt: 1.3 Million Palestinians in the Gaza Strip are Food Insecure. 14 December 2018. United Nations Office for the Coordination of Humanitarian Affairs—Occupied Palestinian Territory. Available online: ochaopt.org (accessed on 10 June 2021).

- Al-Azhar University. Available online: http://www.alazhar.edu.ps/eng/index.asp (accessed on 10 June 2021).

- Jawabreh, A. Gaza’s University Students Drop Out at an Accelerating Rate Due to the Pandemic. 5 October 2020. Available online: www.al-fanarmedia.org (accessed on 10 June 2021).

- Elessi, K.; Aljamal, A.; Albaraqouni, L. Effects of the 10-year siege coupled with repeated wars on the psychological health and quality of life of university students in the Gaza Strip: A descriptive study. Lancet 2019, 393.

- Radwan, A.S. Anxiety among University Staff during Covid-19 Pandemic: A Cross-Sectional Study. IOSR J. Nurs. Health Sci. (IOSR-JNHS) 2021, 10, 1–8.

- Al-Quds Open University. Available online: www.qou.edu (accessed on 10 June 2021).

- Alsadoon, H. Students’ Perceptions of E-Assessment at Saudi Electronic University. Turk. Online J. Educ. Technol. TOJET 2017, 16, 147–153.

- Falta, E.; Sadrata, F. Barriers to using e-learning to teach masters students at the Algerian University. Arab J. Media Child. Cult. 2019, 6, 17–48.

- Jadee, M. Attitudes of faculty members towards conducting electronic tests and the obstacles to their application at the University of Tabuk. Spec. Int. Educ. J. 2017, 6, 77–87.

- Mbatha, B.; Naidoo, L. Bridging the transactional gap in open distance learning (ODL): The case of the University of South Africa (Unisa). Inkanyiso J. Hum. Soc. Sci. 2010, 2, 64–69.

- Alumari, M.M.; Alkhatib, I.M.; Alrufiai, I.M.M. The reality and requirements of modern education methods—Electronic Learning. Al-Dananeer Mag. 2016, 9, 37–56.

- Jawida, A.; Tarshun, O.; Alyane, A. Characteristics and objectives of distance education and e-learning—A comparative study on the experiences of some Arab countries. Arab J. Lit. Hum. 2019, 6, 285–298.

- Frost, J. Statistics by Jim. Available online: https://statisticsbyjim.com/regression/interpret-r-squared-regression/ (accessed on 7 January 2021).

- Al-Rantisi, A.M. Blind Students’ Attitudes towards the Effectiveness of Services of Disability Services Center at Islamic University of Gaza. Asian J. Hum. Soc. Stud. 2019, 7.

- Akimov, A.; Malin, M. When old becomes new: A case study of oral examination as an online assessment tool. Assess. Eval. High. Educ. 2020, 45, 1205–1221.

- Mandour, E.M. The effect of a training program for postgraduate students at the College of Education on designing electronic tests according to the proposed quality standards. J. Educ. Soc. Stud. 2013, 19, 391–460.

- Hamed, T.A.; Peric, K. The role of renewable energy resources in alleviating energy poverty in Palestine. Renew. Energy Focus 2020, 35, 97–107.

- Moghli, M.A.; Shuayb, M. Education under Covid-19 Lockdown: Reflections from Teachers, Students and Parents; Center for Lebanese Studies, Lebanese American University: Beirut, Lebanon, 2020.

- Pastor, R.; Tobarra, L.; Robles-Gómez, A.; Cano, J.; Hammad, B.; Al-Zoubi, A.; Hernández, R.; Castro, M. Renewable energy remote online laboratories in Jordan universities: Tools for training students in Jordan. Renew. Energy 2020, 149, 749–759.

- Barakat, S.; Samson, M.; Elkahlout, G. The Gaza reconstruction mechanism: Old wine in new bottlenecks. J. Interv. Statebuild. 2018, 12, 208–227.

- Barakat, S.; Samson, M.; Elkahlout, G. Reconstruction under siege: The Gaza Strip since 2007. Disasters 2020, 44, 477–498.

- Weinthal, E.; Sowers, J. Targeting infrastructure and livelihoods in the West Bank and Gaza. Int. Aff. 2019, 95, 319–340.

- Al Mezan Center for Human Rights. News Brief: Annual Report on Access to Economic, Social, and Cultural Rights in Gaza Shows Decline due to COVID-19 Pandemic and Continued Unlawful Restrictions by Occupying Power. 18 March 2021. Available online: https://mezan.org/en/post/23940 (accessed on 10 June 2021).

- Aradeh, O.; Van Belle, J.P.; Budree, A. ICT-Based Participation in Support of Palestinian Refugees’ Sustainable Livelihoods: A Local Authority Perspective. In Information and Communication Technologies for Development. ICT4D 2020. IFIP Advances in Information and Communication Technology; Bass, J.M., Wall, P.J., Eds.; Springer: Cham, Switzerland, 2020; Volume 587.

- UNESCO. How Many Students Are at Risk of Not Returning to School? Advocacy Paper 30 July 2020; UNESCO COVID-19 Education Response, Section of Education Policy; UNESCO: Paris, France, 2020.

| Overall Focus | Publications | Countries |
|---|---|---|
| Literature reviews | Abu Talib et al. [70]; Butler-Henderson & Crawford [71] | |
| Prospects of introducing ICT-mediated learning, technical acceptance | Cicek et al.; Alshurideh et al. [26]; Coskun-Setirek & Tanrikulu; Adanir et al. (a); Qashou [27]; Ayyash et al. | Croatia, United Arab Emirates, Turkey, Kyrgyzstan, Palestine |
| ERT | Novikov, Klyachko et al. [69]; Kamal et al.; Ana; Kuhlmeier et al.; Jamieson [67]; Kamber [72]; Gudino Paredes et al. [73]; Gormaz-Lobos et al. [66]; Khan et al.; Arora et al. [74]; Gottipati & Shankararaman; Alshurideh et al. [26]; Jawad & Shalash [75]; Lassoued et al. [37]; Bashitialshaaer et al. [38]; Almusharraf & Khahro [68]; Alfiras et al. [76]; Usher et al. [65]; Tsekea & Chigwada [64] | Russia, Malaysia, Indonesia, Canada, USA, Mexico, Chile, India, Singapore, United Arab Emirates, Palestine, Arab states, Saudi Arabia, Bahrain, Israel, Zimbabwe |
| General experiences of electronic examination | Adanir et al. (b) | Turkey, Kyrgyzstan |
| Academic fraud and tools to prevent fraud | Goldberg; Harris et al. [57]; Goff et al.; Adzima; Jia & He; Khan & Alotaibi; Gudino Paredes et al. [73]; Jaramillo-Morillo et al. [53] | USA, China, Mexico, Colombia |
| Other tools for remote electronic examination | Wu et al. [63] | Russia, China |
| Intersection ERT and remote electronic examination | Alshurideh et al. [26]; Kamber [72]; Gudino Paredes et al. [73]; Arora et al. [74] | United Arab Emirates, USA, Mexico, India |

| University | Type | Established Year | Area per Student (m²) * | Student Numbers (2013–2014) * | Student Numbers (2015–2016) ** | Academic Staff (2019–2020) *** |
|---|---|---|---|---|---|---|
| Al-Azhar | Public | 1991 | 5.5 | 14,453 | 12,473 | 226 |
| Islamic University | Public | 1978 | 4.2 | 19,273 | 15,521 | 400 |
| Al-Aqsa | Government | 1991 | 13.9 | 18,727 | 14,681 | 450 |
| Al-Quds Open University | Public | 1975 | - | 60,230 (West Bank & Gaza) | 12,828 (five Gaza branches) | |

| Targeted Members | Total Number | Percentage (%) | Percentage M/F (%) |
|---|---|---|---|
| University teachers | 97 | 63.8 | 70/30 |
| University students | 55 | 36.2 | 60/40 |
| Total | 152 | 100 | 66/34 |

| Obstacles Category (Groups) | Obstacles | Students Repetition (n = 55) | Teachers Repetition (n = 97) | Overall Repetition (n = 152) | Overall Percentage (%) |
|---|---|---|---|---|---|
| Personal obstacles | Difficulty and poor study in light of critical conditions. | 22 | 65 | 87 | 57.2 |
| | Parents are not convinced of electronic exams (parents are not cooperating). | - | 40 | 40 | 26.3 |
| | Lack of time, many questions, and lack of experience. | 15 | - | 15 | 9.9 |
| | Electronic exams will not show the students’ real level and will not distinguish students from each other. | 13 | 56 | 69 | 45.4 |
| Pedagogical obstacles | The answers are very similar among students. | 9 | 28 | 37 | 24.3 |
| | Some teachers do not have sufficient experience to prepare and implement the exams. | - | 35 | 35 | 23.0 |
| | The exams did not have a high quality of design and preparation. | 12 | 46 | 58 | 38.2 |
| Technical obstacles | Power cuts (lack of electricity). | 26 | 75 | 101 | 66.4 |
| | Internet unavailability and poor internet quality. | 20 | 68 | 88 | 57.9 |
| Financial and organizational obstacles | There was no real surveillance, widespread cases of fraud. | 14 | 48 | 62 | 40.8 |
| | Not communicating well between students and lecturers. | 10 | 29 | 39 | 25.7 |
| | Parents and students lack experience with technology. | - | 57 | 57 | 37.5 |
| | Lack of financial and technical capabilities of some students. | 29 | 81 | 110 | 72.4 |
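
The percentages reported for each obstacle appear to be simple shares of the respondents in each group. The following minimal sketch reproduces that arithmetic for one row of the table above; the helper name `mention_percentage` and the variable names are illustrative assumptions, not taken from the study.

```python
# Hypothetical helper: share of a group's respondents who mentioned an obstacle.
def mention_percentage(count: int, group_size: int) -> float:
    """Return count as a percentage of group_size, rounded to one decimal."""
    return round(100 * count / group_size, 1)

# Group sizes as reported in the participant table.
N_STUDENTS, N_TEACHERS = 55, 97

# (students, teachers) mention counts for "Power cuts (lack of electricity)".
power_cuts = (26, 75)

overall = mention_percentage(sum(power_cuts), N_STUDENTS + N_TEACHERS)
students = mention_percentage(power_cuts[0], N_STUDENTS)
teachers = mention_percentage(power_cuts[1], N_TEACHERS)

print(overall, students, teachers)  # 66.4 47.3 77.3
```

Because the two groups differ in size, the overall percentage is weighted toward the teachers' responses, which is worth keeping in mind when reading the overall ranking.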

| Type of Obstacles | Overall (%) (n = 152) | Overall Order | Students (%) (n = 55) | Students Order | Teachers (%) (n = 97) | Teachers Order |
|---|---|---|---|---|---|---|
| Lack of financial and technical capabilities of some students. | 72.4 | 1 | 52.7 | 1 | 83.5 | 1 |
| Power cuts (lack of electricity). | 66.4 | 2 | 47.3 | 2 | 77.3 | 2 |
| Internet unavailability and poor internet quality. | 57.9 | 3 | 36.4 | 4 | 70.1 | 3 |
| Difficulty and poor study in light of critical conditions. | 57.2 | 4 | 40.0 | 3 | 67.0 | 4 |
| Electronic exams will not show the students’ real level and will not distinguish students from each other. | 45.4 | 5 | 23.6 | 7 | 57.7 | 6 |
| There was no real surveillance, widespread cases of fraud. | 40.8 | 6 | 25.5 | 6 | 49.5 | 7 |
| The exams did not have a high quality of design and preparation. | 38.2 | 7 | 21.8 | 8 | 47.4 | 8 |
| Parents and students lack experience with technology. | 37.5 | 8 | - | - | 58.8 | 5 |
| Parents are not convinced of electronic exams (parents are not cooperating). | 26.3 | 9 | - | - | 41.2 | 9 |
| Not communicating well between students and lecturers. | 25.7 | 10 | 18.2 | 9 | 29.9 | 11 |
| The answers are very similar among students. | 24.3 | 11 | 16.4 | 10 | 28.9 | 12 |
| Some teachers do not have sufficient experience to prepare and implement the exams. | 23.0 | 12 | - | - | 36.1 | 10 |
| Lack of time, many questions, and lack of experience. | 9.9 | 13 | 27.3 | 5 | - | - |
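
The order columns can be read as a descending rank of the percentages within each group, with obstacles a group did not mention ("-") left unranked. The sketch below illustrates this reading on a subset of the student column; the shortened labels and the function name `rank_descending` are assumptions made for the example, and ties are not handled.

```python
# Student percentages copied from the table above (labels shortened here);
# None stands for "-", i.e. the obstacle was not mentioned by students.
student_pct = {
    "Lack of financial and technical capabilities": 52.7,
    "Power cuts": 47.3,
    "Internet unavailability": 36.4,
    "Difficulty studying under critical conditions": 40.0,
    "Parents and students lack experience with technology": None,
    "Lack of time, many questions": 27.3,
}

def rank_descending(pct):
    """Map each label that has a value to its 1-based rank, highest first."""
    present = {label: value for label, value in pct.items() if value is not None}
    ordered = sorted(present, key=present.get, reverse=True)
    return {label: position for position, label in enumerate(ordered, start=1)}

ranks = rank_descending(student_pct)
for label, position in ranks.items():
    print(position, label)
```

For these rows the resulting ranks agree with the student order column, including the swap between internet problems and difficulty studying relative to the overall order.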

| Type of Obstacles | Overall (%) (n = 152) | Overall Order | Students (%) (n = 55) | Students Order | Teachers (%) (n = 97) | Teachers Order |
|---|---|---|---|---|---|---|
| Lack of time, many questions, and lack of experience. | 9.9 | 13 | 27.3 | 5 | - | - |
| Parents and students lack experience with technology. | 37.5 | 8 | - | - | 58.8 | 5 |
| Parents are not convinced of electronic exams (parents are not cooperating). | 26.3 | 9 | - | - | 41.2 | 9 |
| Some teachers do not have sufficient experience to prepare and implement the exams. | 23.0 | 12 | - | - | 36.1 | 10 |

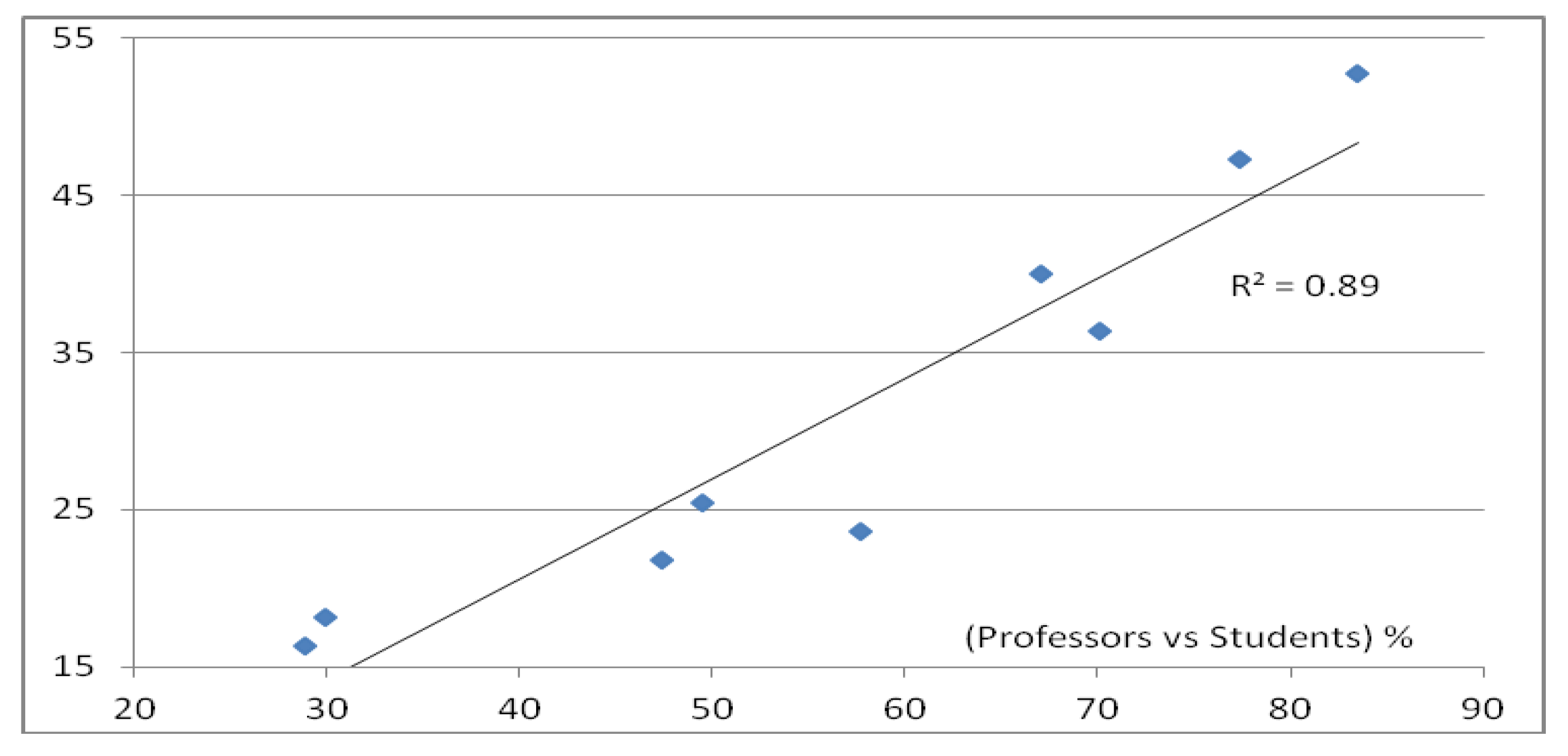

| Regression Statistics | |
|---|---|
| Multiple R | 0.94 |
| R Square | 0.89 |
| Adjusted R Square | 0.88 |
| Standard Error | 4.64 |
| Observations | 9 |

| ANOVA | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 1 | 1243.0 | 1243.0 | 57.8 | 0.0001 |
| Residual | 7 | 150.6 | 21.5 | | |
| Total | 8 | 1393.6 | | | |
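
The regression statistics can be approximately recomputed from the published percentages. The sketch below is not the authors' procedure: it assumes that the nine observations are the nine obstacles mentioned by both groups, and that the students' percentage is regressed on the teachers' percentage with ordinary least squares. The function name `simple_ols` and the dictionary keys are illustrative.

```python
import math

# (teachers %, students %) for the nine obstacles reported by both groups,
# copied from the breakdown table; the pairing is an assumption of this sketch.
pairs = [
    (83.5, 52.7), (77.3, 47.3), (70.1, 36.4), (67.0, 40.0), (57.7, 23.6),
    (49.5, 25.5), (47.4, 21.8), (29.9, 18.2), (28.9, 16.4),
]

def simple_ols(pairs):
    """Fit y = a + b*x by least squares and return ANOVA-style summaries."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    ss_total = sum((y - mean_y) ** 2 for _, y in pairs)
    slope = sxy / sxx
    ss_regression = slope * sxy          # explained sum of squares
    ss_residual = ss_total - ss_regression
    df_residual = n - 2                  # one predictor plus intercept
    ms_residual = ss_residual / df_residual
    r_squared = ss_regression / ss_total
    return {
        "observations": n,
        "multiple_r": math.sqrt(r_squared),
        "r_squared": r_squared,
        "adjusted_r_squared": 1 - (1 - r_squared) * (n - 1) / df_residual,
        "standard_error": math.sqrt(ms_residual),
        "ss_regression": ss_regression,
        "ss_residual": ss_residual,
        "ss_total": ss_total,
        "f_statistic": ss_regression / ms_residual,
    }

stats = simple_ols(pairs)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions the output lands close to the tabulated values; small differences in the sums of squares are expected because the inputs here are percentages already rounded to one decimal.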

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bashitialshaaer, R.; Alhendawi, M.; Avery, H. Obstacles to Applying Electronic Exams amidst the COVID-19 Pandemic: An Exploratory Study in the Palestinian Universities in Gaza. Information 2021, 12, 256. https://doi.org/10.3390/info12060256