The on-board camera system captured multispectral images during all UAS flight missions. Reference samples were taken and analyzed to enable a comparison with ground truth data. The regression analyses gave promising first results.

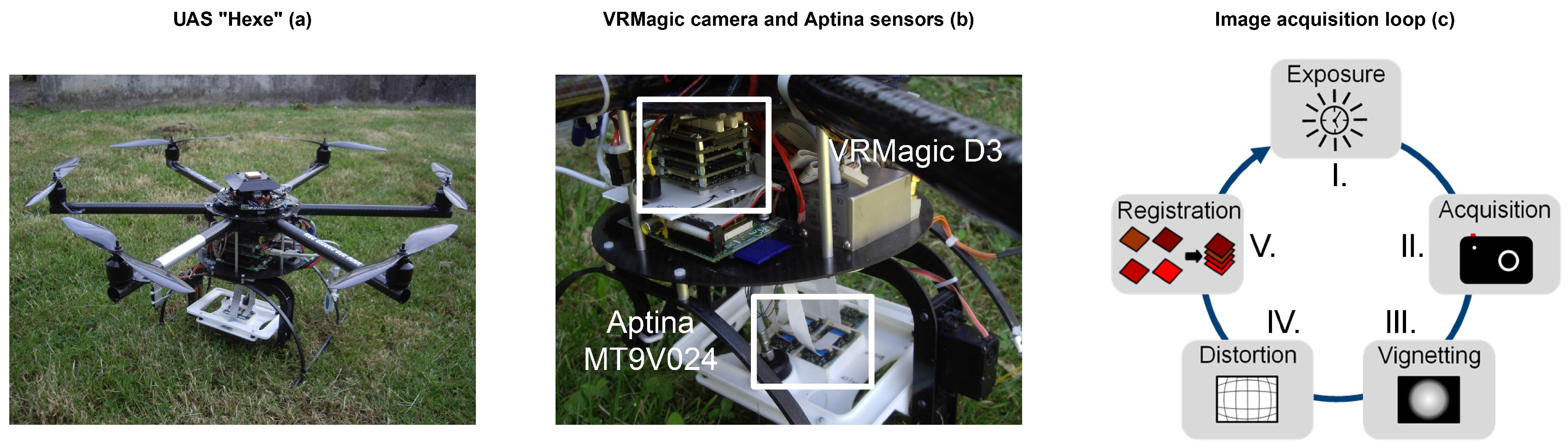

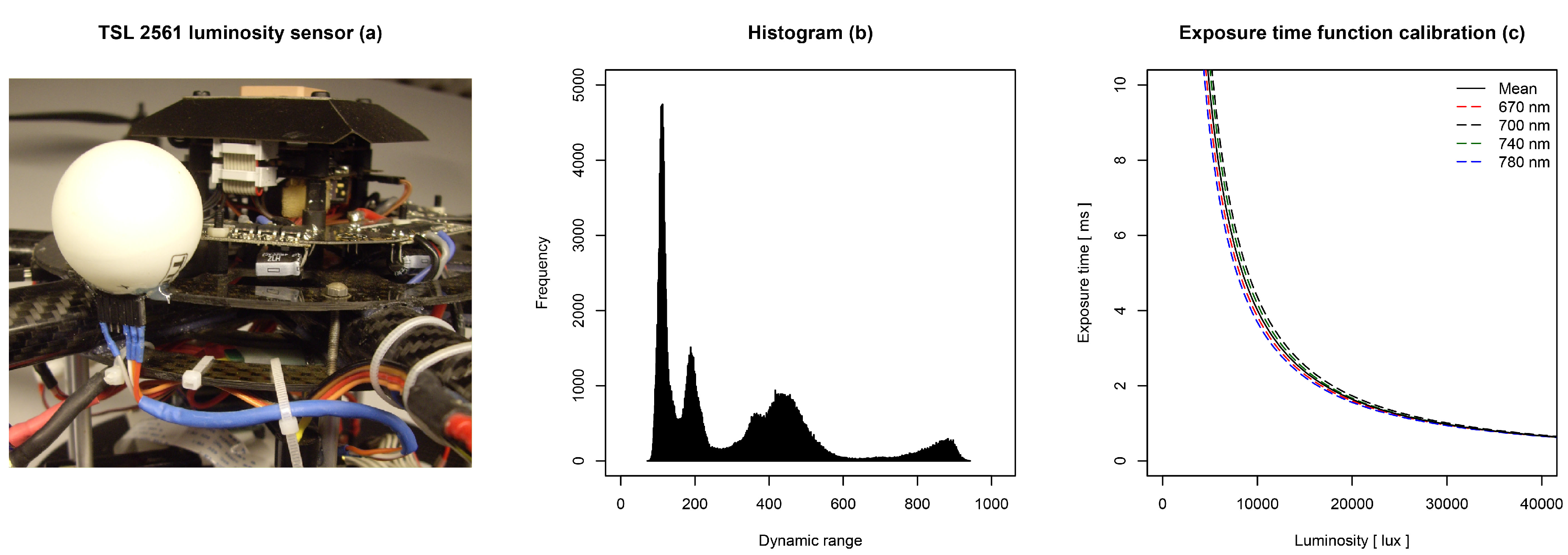

3.1. Image Acquisition Loop

The camera system worked as expected and performed all steps of the acquisition loop during the flight missions. One iteration, comprising the steps from exposure time definition to image-to-image registration, took approximately 4 s. The exposure time function led to images with a contrast stretch of ≥60% of the 10-bit dynamic range. Because the mean exposure time function is only an approximation, some images showed clipping effects at the white reference target.
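The two image quality criteria mentioned above can be illustrated with a minimal sketch. It assumes that "contrast stretch" means the span between the darkest and brightest pixel relative to the 10-bit maximum (1023), and that clipping means pixels at that maximum. Both definitions, the function names and the sample values are illustrative assumptions, not the implementation used on the camera.

```python
MAX_DN_10BIT = 2**10 - 1  # 1023, highest digital number of a 10-bit sensor


def contrast_stretch(pixels, max_dn=MAX_DN_10BIT):
    """Fraction of the dynamic range spanned by the darkest and brightest pixel."""
    return (max(pixels) - min(pixels)) / max_dn


def clipped_fraction(pixels, max_dn=MAX_DN_10BIT):
    """Share of pixels that sit at the saturation value."""
    return sum(1 for p in pixels if p >= max_dn) / len(pixels)


# Hypothetical digital numbers sampled from one band of one image.
band = [112, 180, 254, 407, 655, 820, 990, 1023, 1023, 1023]

stretch = contrast_stretch(band)
clipped = clipped_fraction(band)
print(f"contrast stretch: {stretch:.1%}, clipped pixels: {clipped:.1%}")
```

An image passes the ≥60% criterion when `stretch >= 0.6`; a non-zero `clipped` value over the white reference target would correspond to the clipping effects described above.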

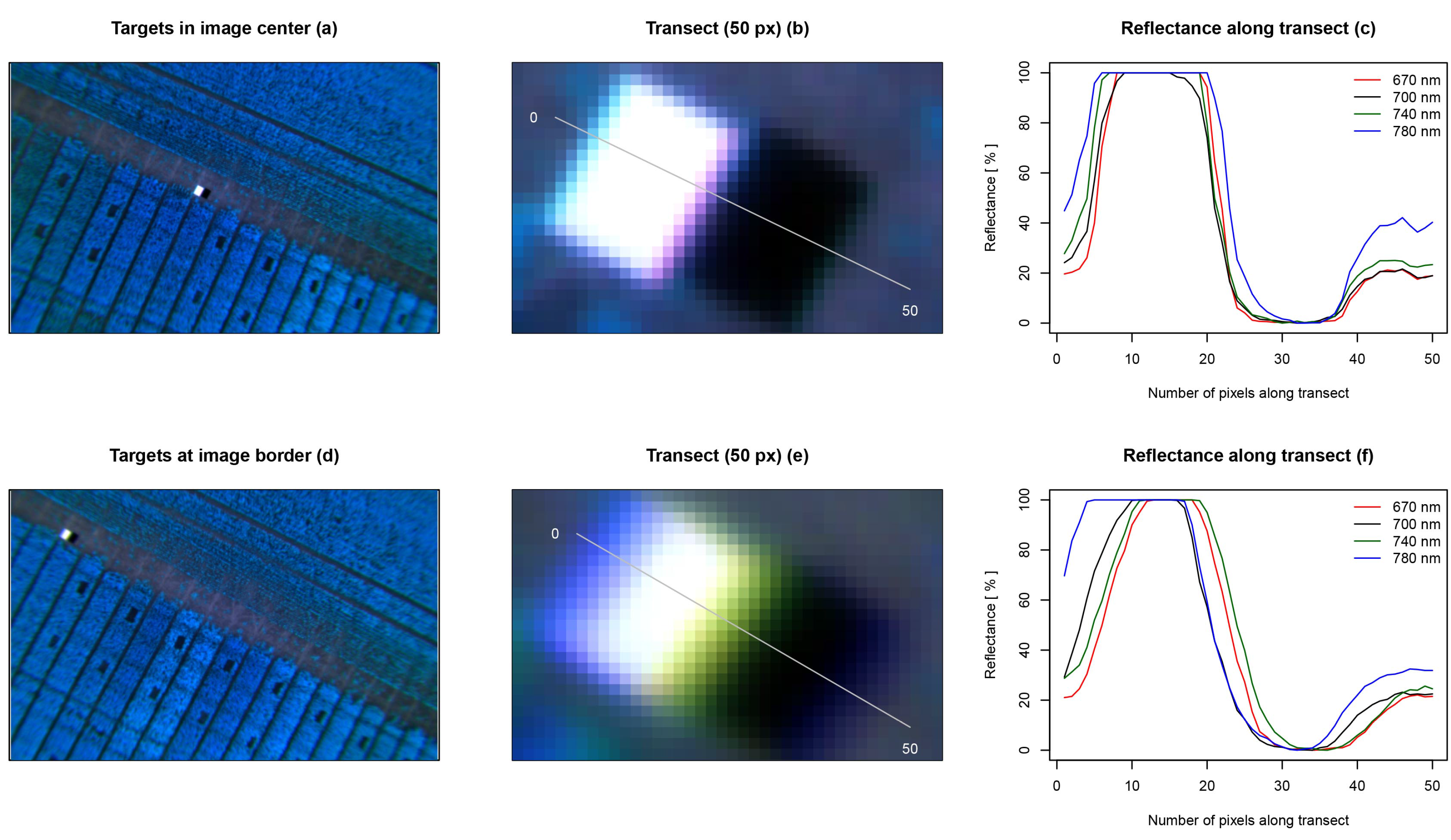

Registered multispectral images were cropped to a common extent of 732 × 464 px and showed a geometrical alignment error (see Figure 4). The error was unevenly distributed throughout the image. Objects near the image’s center showed a smaller displacement (~2 px), whereas objects at the image’s border showed a larger displacement (~6 px).
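One way to quantify such a displacement along a transect is to locate the same dark-to-bright edge in every band and compare the positions. The following sketch assumes a mid-level crossing with linear interpolation as the edge criterion; the function names and the synthetic profiles are hypothetical and only illustrate the idea, they are not the procedure behind Figure 4.

```python
def edge_position(profile):
    """Sub-pixel index where the profile first crosses its mid-level."""
    mid = (min(profile) + max(profile)) / 2
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if a != b and (a - mid) * (b - mid) <= 0:
            # Linear interpolation between the two neighbouring pixels.
            return i + (mid - a) / (b - a)
    raise ValueError("profile has no edge")


def synthetic_profile(edge_at, length=50, dark=0.05, bright=0.90):
    """Hypothetical reflectance transect with a step from a black to a white target."""
    return [dark if x < edge_at else bright for x in range(length)]


# Four registered bands whose edges are offset by up to 2 px (made-up values).
bands = {
    "band_1": synthetic_profile(20),
    "band_2": synthetic_profile(21),
    "band_3": synthetic_profile(21),
    "band_4": synthetic_profile(22),
}

positions = {name: edge_position(p) for name, p in bands.items()}
displacement = max(positions.values()) - min(positions.values())
print(f"maximum band-to-band displacement: {displacement:.1f} px")
```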

Figure 4.

Image-to-image registration accuracy at different locations within the image. Two registered multispectral images are presented as false color images. The first image sets focus on two black and white reference targets (a); whereas the second image captures the targets at its border (d); two transects of a length of 50 px were selected to investigate the spatial displacement of the four registered bands (b,e); the reflectance along the transects is shown on the right. The spatial displacement can be observed on the x-axis, and the reflectance can be observed on the y-axis. The figures indicate that the spatial alignment is better in the center of an image (~2 px) (c); and it is worse in the border region (~6 px) (f).

3.2. Measurements

The results of the laboratory analysis of the samples from Z 39–41 to Z 69 are presented in Table 4. Due to an error in the procedure, one sample could not be analyzed for Z 51 and Z 69, respectively. Average biomass increased from 381.8 to 1351.3 g·m⁻² over time. Mean N content was stable at Z 39–41 and Z 51 (1.5 g 100 g⁻¹), decreased at Z 61 (1.2 g 100 g⁻¹) and increased slightly at Z 69 (1.3 g 100 g⁻¹).

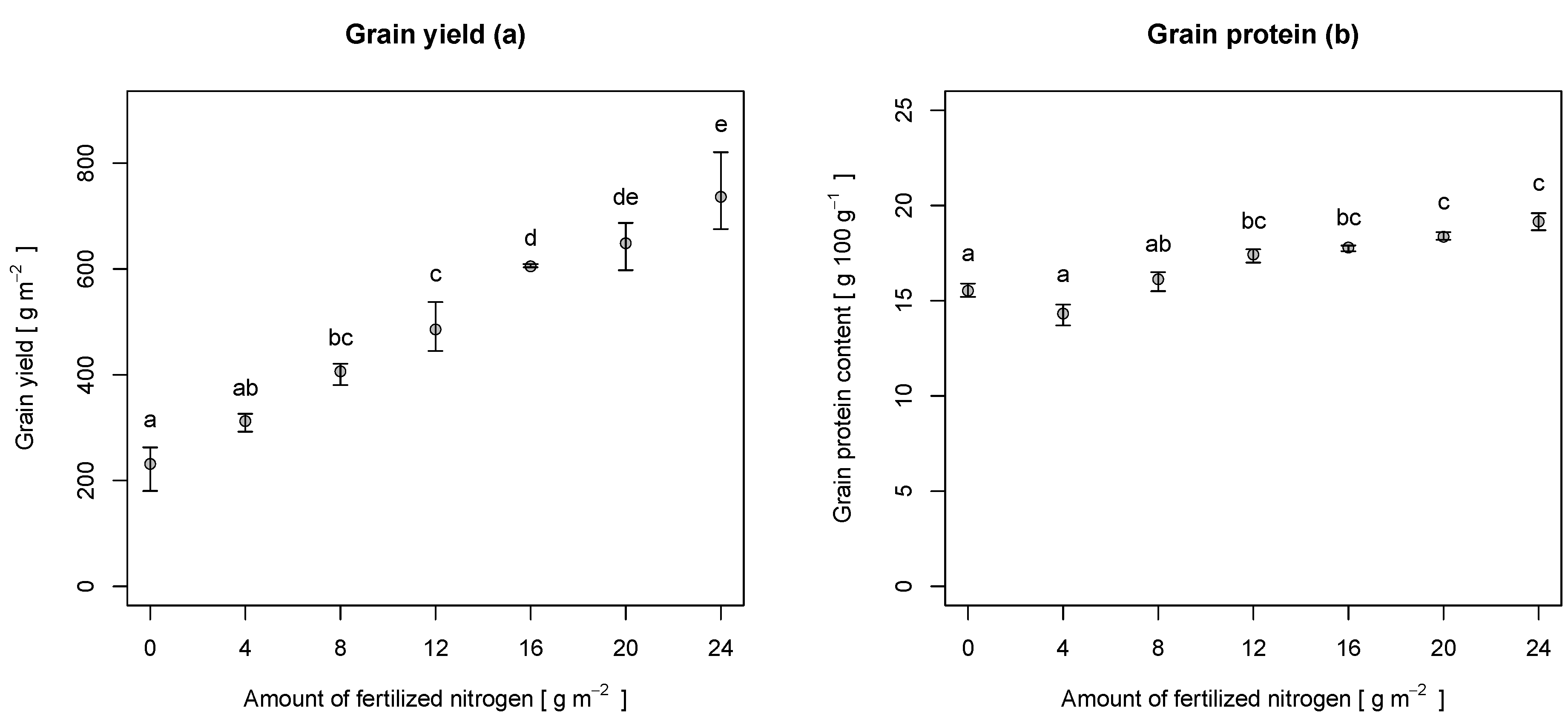

Table 4 also shows the results of the samples at harvest (Z 90). Grain yield ranged from 180.4–820.7 g·m⁻². The yield increased almost linearly with the amount of fertilized N (see Figure 5a). Grain protein content ranged from 13.7–19.6 g 100 g⁻¹. The protein content did not increase linearly with the amount of fertilized N (see Figure 5b). Its minimum was at an N level of 4 g·m⁻², whereas its maximum was reached at a level of 24 g·m⁻².

Table 4.

Descriptive statistics (minimum, mean, maximum and standard deviation (SD)) of above-ground biomass, N content, grain yield and grain protein content, sampled at different growth stages (Z).

| Variable | Z | Minimum | Mean | Maximum | SD |
|---|---|---|---|---|---|
| Biomass (DM) (g·m⁻²) | 39–41 | 91.9 | 381.8 | 665.5 | 130.13 |
| | 51 | 241.8 | 512.1 | 848.0 | 165.17 |
| | 61 | 444.4 | 955.5 | 1447.3 | 324.26 |
| | 69 | 486.1 | 1351.3 | 2076.0 | 432.97 |
| N content (g 100 g⁻¹) | 39–41 | 1.1 | 1.5 | 2.0 | 0.30 |
| | 51 | 1.1 | 1.5 | 2.2 | 0.36 |
| | 61 | 0.9 | 1.2 | 1.9 | 0.28 |
| | 69 | 0.9 | 1.3 | 1.7 | 0.24 |
| Grain yield (g·m⁻²) | 90 | 180.4 | 489.7 | 820.7 | 178.74 |
| Grain protein content (g 100 g⁻¹) | 90 | 13.7 | 17.0 | 19.6 | 1.65 |

Figure 5.

Grain yield (a) and grain protein content (b) at different levels of fertilization, sampled at harvest (Z 90). The points represent the mean values, whereas the whiskers represent the minima and maxima. Letters indicate the results of a Tukey’s HSD multiple comparison test ().

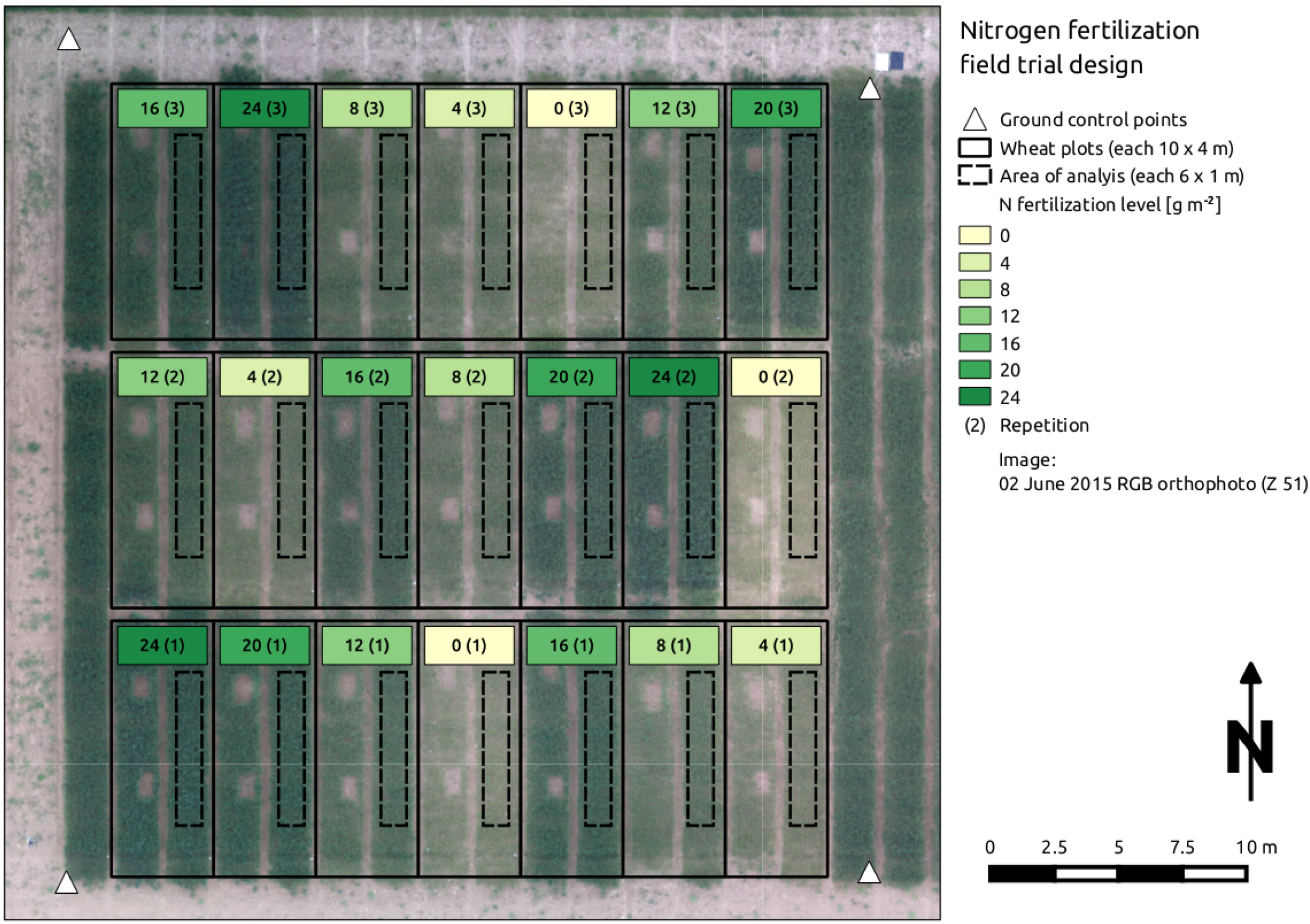

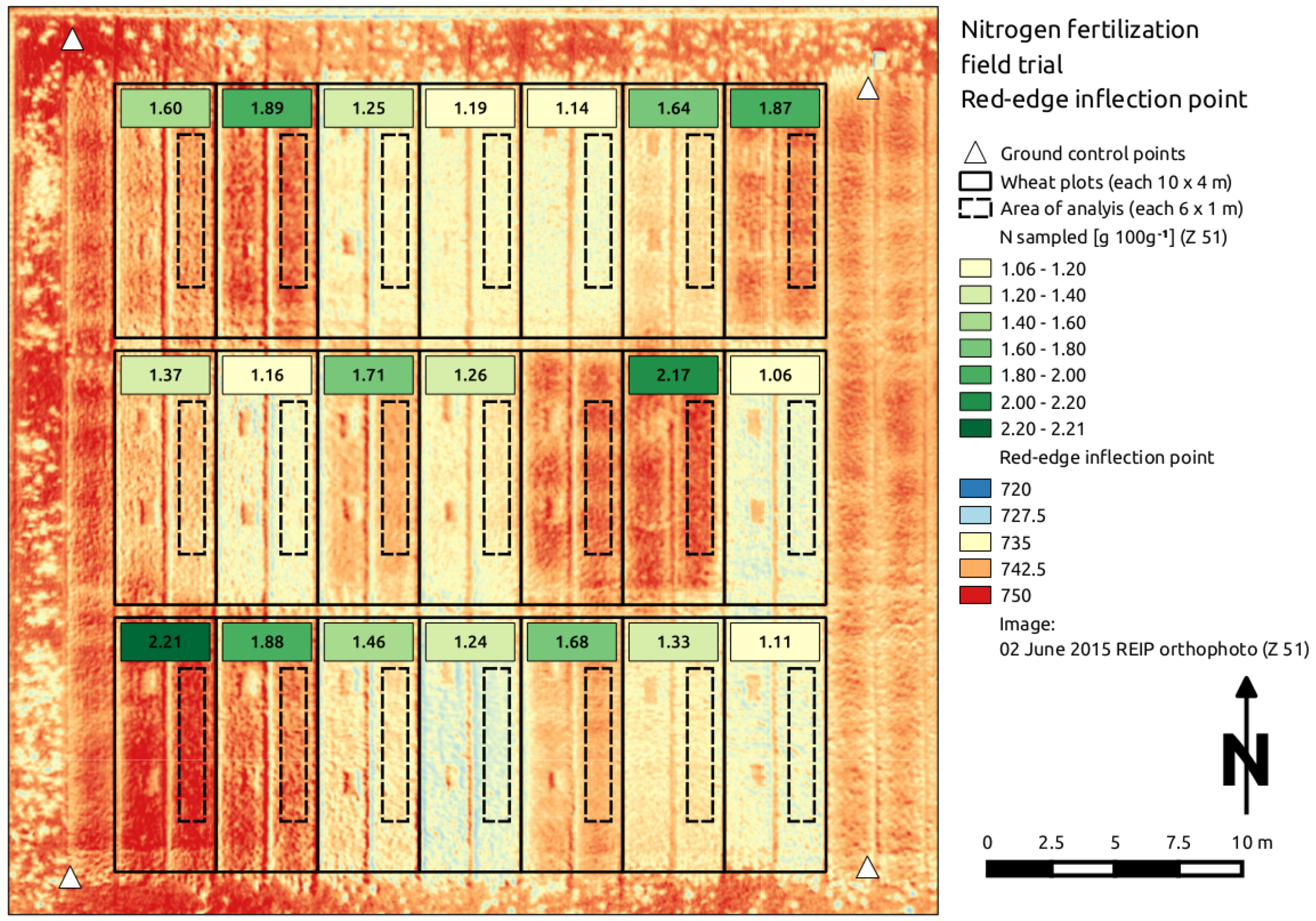

3.3. Image Processing

An orthoimage was computed from the acquired aerial imagery for each growth stage. The resulting RMSEs of the ground control point residuals ranged from 0.027–0.032 m in the horizontal and from 0.035–0.046 m in the vertical direction. The orthoimages were produced with a ground resolution of 0.04 m·px⁻¹, leading to an analysis at the canopy level with mixed signals, comprising soil and plant reflection [44]. The signals were used to compute the NDVI and the REIP layer, which were analyzed for the selected plot areas (see Figure 6). At Z 39–61, the average NDVI values were constant around 0.79 with a standard deviation of 0.07 and decreased at Z 69 (0.68 ± 0.10). The average REIP values were higher for Z 51 and Z 61 (~739 ± 4.2), whereas they were lower for Z 39–41 and Z 69 (~735 ± 4.7).
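For orientation, the two indices can be sketched per pixel as follows. This section does not restate the formulas, so the sketch assumes the common definitions: the NDVI as the normalized difference of a near-infrared and a red band, and the REIP as the four-band linear interpolation after Guyot and Baret. The band centers (670, 700, 740 and 780 nm) and the reflectance values are assumptions for illustration only.

```python
def ndvi(red, nir):
    """Normalized difference vegetation index from red and NIR reflectance."""
    return (nir - red) / (nir + red)


def reip(r670, r700, r740, r780):
    """Red-edge inflection point in nm by linear interpolation.

    Assumes bands centered at 670, 700, 740 and 780 nm (an assumption,
    not taken from this section).
    """
    r_inflection = (r670 + r780) / 2  # reflectance at the inflection point
    return 700 + 40 * (r_inflection - r700) / (r740 - r700)


# Hypothetical canopy reflectances for a single orthoimage pixel.
pixel = {"r670": 0.04, "r700": 0.08, "r740": 0.285, "r780": 0.50}

pixel_ndvi = ndvi(pixel["r670"], pixel["r780"])
pixel_reip = reip(**pixel)
print(f"NDVI = {pixel_ndvi:.2f}, REIP = {pixel_reip:.1f} nm")
```

Averaging such per-pixel values over a plot area would give plot-level index values comparable in kind to those reported above.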

Figure 6.

Exemplary red-edge inflection point (REIP) orthoimage with sampled above-ground biomass N content values (g 100 g⁻¹) at growth stage Z 51. One sample is missing due to an erroneous laboratory analysis.

3.4. Regression Analysis

The regression results are grouped by the two aims of this analysis: (i) estimation of biomass and N content; and (ii) prediction of grain yield and grain protein content. All regressions were significant ().

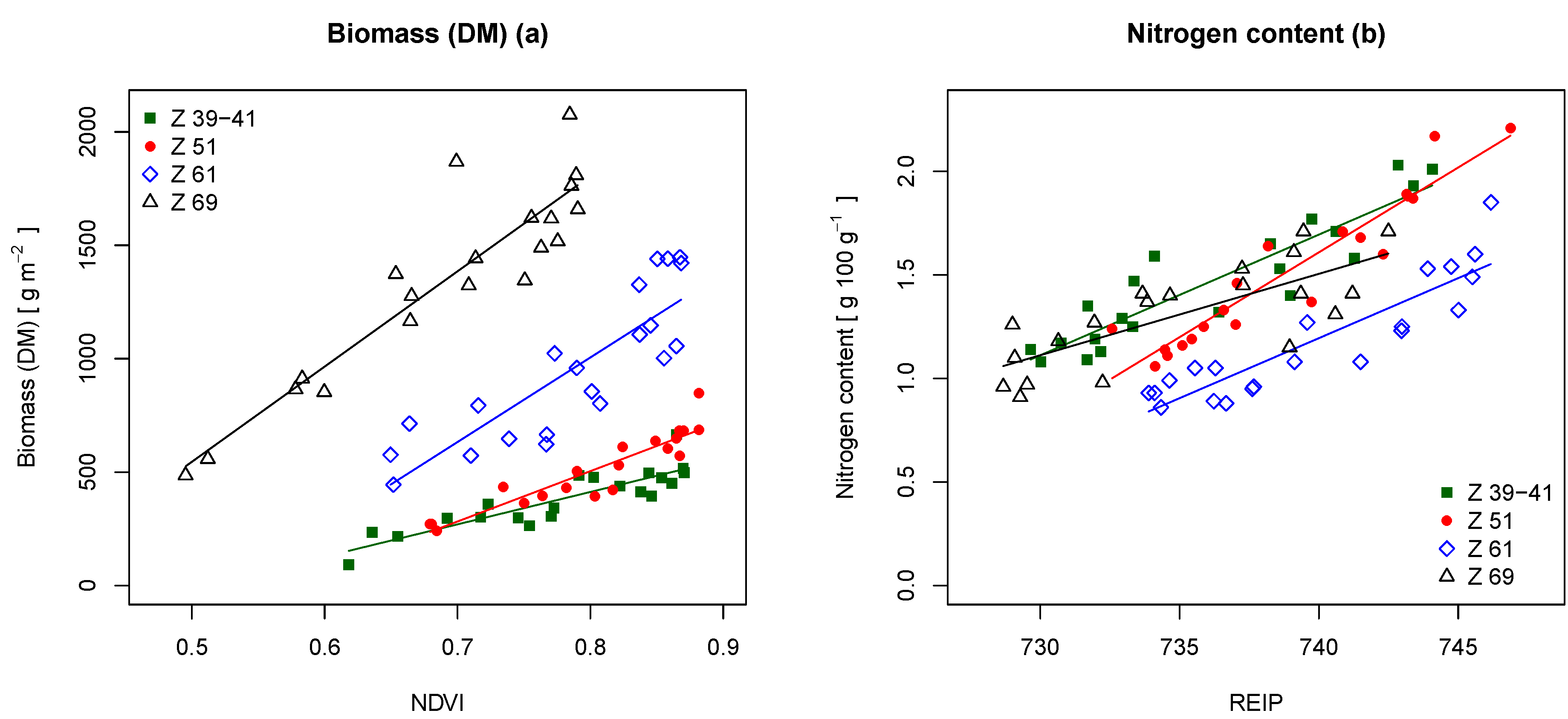

Table 5 shows the results of the biomass and N content estimation. The table indicates that the NDVI performed better than the REIP. The NDVI estimated the biomass best at Z 39–41, Z 51 and Z 69 with coefficients of determination (R²) of 0.78, 0.85 and 0.84 and relative RMSE values of 15.7%, 12.3% and 12.3%. The REIP estimated the biomass best at Z 61 with an R² of 0.77 and a relative RMSE of 15.8%.

Figure 7a displays the regression lines for the NDVI at the different growth stages. The relationship between NDVI and biomass appeared to be linear for all growth stages, whereas the slopes of the regression lines increased with the gain in biomass over time.
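The reported quality measures can be reproduced in principle with a simple linear regression and a leave-one-out cross-validation. The sketch below is a minimal illustration: it assumes ordinary least squares with an intercept, an RMSE normalized by n, and a relative RMSE expressed as a percentage of the observed mean (which matches, for example, 59.9 / 381.8 ≈ 15.7% for the NDVI at Z 39–41). The data points and function names are hypothetical.

```python
from math import sqrt
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def regression_metrics(x, y):
    """R², RMSE, relative RMSE (%), bias and leave-one-out RMSEV."""
    intercept, slope = fit_line(x, y)
    y_mean = mean(y)
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    rmse = sqrt(ss_res / len(y))  # assumption: normalized by n
    # Leave-one-out: refit without sample i, then predict sample i.
    loo_sq_errors = []
    for i in range(len(x)):
        b0, b1 = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        loo_sq_errors.append((y[i] - (b0 + b1 * x[i])) ** 2)
    return {
        "r2": 1 - ss_res / ss_tot,
        "rmse": rmse,
        "relative_rmse_pct": 100 * rmse / y_mean,
        "bias": mean(residuals),
        "rmsev": sqrt(mean(loo_sq_errors)),
    }


# Hypothetical plot-level NDVI values and biomass samples (g per square meter).
ndvi_values = [0.62, 0.68, 0.71, 0.75, 0.79, 0.82, 0.85, 0.88]
biomass = [150.0, 210.0, 260.0, 330.0, 380.0, 450.0, 520.0, 560.0]

metrics = regression_metrics(ndvi_values, biomass)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For a least-squares fit with an intercept, the mean residual on the fitted data is zero by construction, and the leave-one-out RMSEV cannot be smaller than the RMSE of the fit.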

For N content estimation, the REIP gave the best results. The R² showed values of 0.83, 0.89, 0.81 and 0.58 with relative RMSE values of 8.3%, 7.6%, 10.3% and 11.7% (Z 39–69). The REIP performed best at growth stage Z 51 and worst at growth stage Z 69. The regression plots for the REIP are shown in Figure 7b. The figure indicates a linear relationship of REIP and N content at all growth stages.

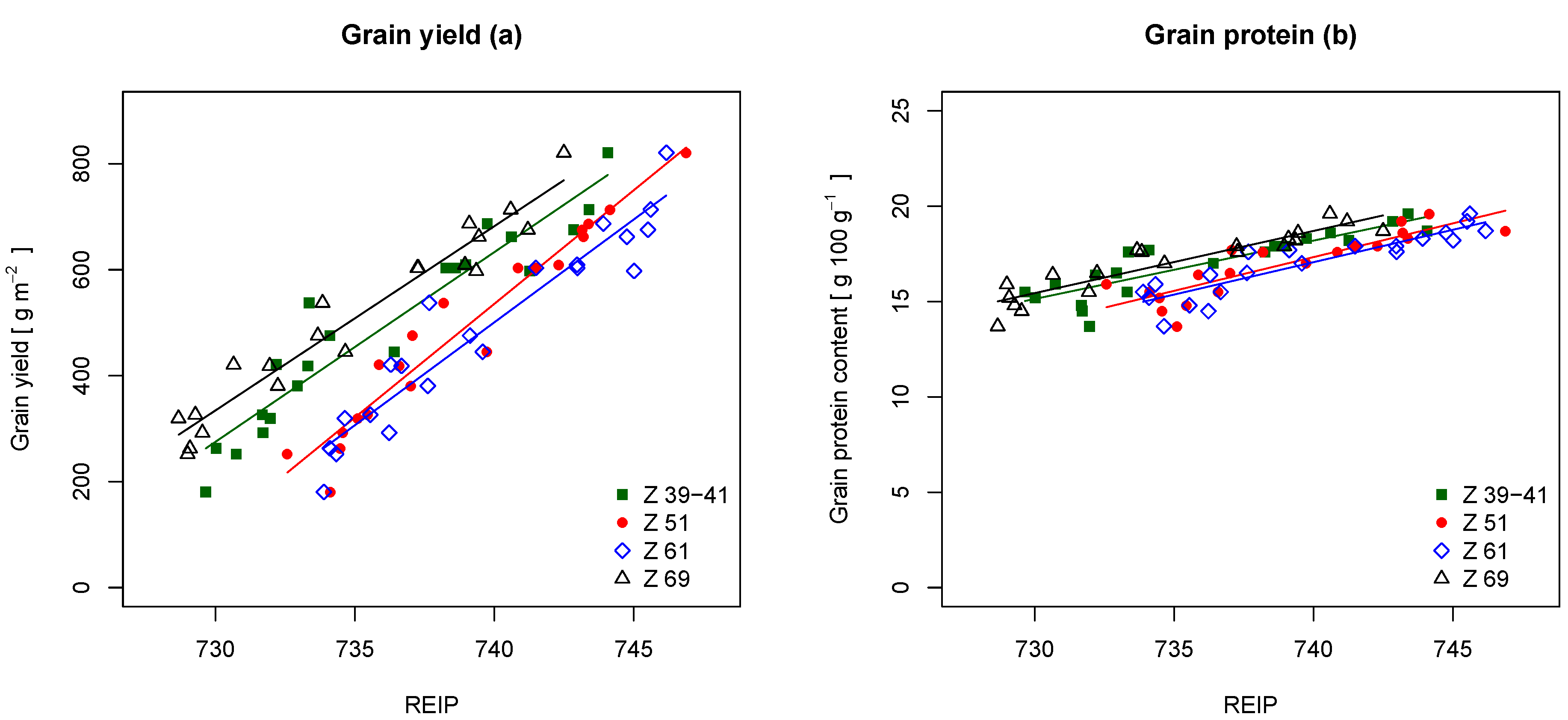

Table 6 comprises the results for the prediction of grain yield and grain protein content. The REIP performed better than the NDVI for the prediction of both grain yield and grain protein content. For grain yield, the REIP showed R² values of 0.90, 0.92, 0.91 and 0.94 and relative RMSE values of 11.2%, 9.9%, 10.8% and 9.0%. The NDVI performed slightly worse with R² values of 0.89, 0.89, 0.90 and 0.91 and relative RMSE values of 11.6%, 12.1%, 11.0% and 10.9%. Although REIP and NDVI performed well at all growth stages, the prediction performance improved further with time.

Figure 8a displays the regression results for the REIP and grain yield, indicating a linear relationship between the two variables. The regression lines at Z 51 and Z 69 followed a similar pattern, being only translated in parallel at different growth stages. At Z 51, the regression line showed an increased slope.

Table 5.

Results of linear regressions () at different growth stages (Z) with the above-ground biomass and N content as the dependent variable (DV), as well as the NDVI and the REIP as the independent variable (IDV). The table comprises the number of samples (n), the coefficient of determination (R²), the RMSE, the relative RMSE, the bias and the RMSE of validation (RMSEV), derived from a leave-one-out cross-validation.

| DV | IDV | Z | n | R² | RMSE | Relative RMSE (%) | Bias | RMSEV |
|---|---|---|---|---|---|---|---|---|
| Biomass (DM) (g·m⁻²) | NDVI | 39–41 | 21 | 0.78 | 59.9 | 15.7 | 0 | 66.4 |
| | | 51 | 20 | 0.85 | 62.8 | 12.3 | 0 | 69.1 |
| | | 61 | 21 | 0.72 | 168.1 | 17.6 | 0 | 185.4 |
| | | 69 | 20 | 0.84 | 166.8 | 12.3 | 0 | 179.8 |
| | REIP | 39–41 | 21 | 0.74 | 65.1 | 17.1 | 0 | 73.0 |
| | | 51 | 20 | 0.81 | 69.7 | 13.6 | 0 | 80.4 |
| | | 61 | 21 | 0.77 | 150.8 | 15.8 | 0 | 167.6 |
| | | 69 | 20 | 0.70 | 230.6 | 17.1 | 0 | 253.7 |
| N content (g 100 g⁻¹) | NDVI | 39–41 | 21 | 0.75 | 0.15 | 10.2 | 0 | 0.17 |
| | | 51 | 20 | 0.73 | 0.18 | 11.9 | 0 | 0.20 |
| | | 61 | 21 | 0.63 | 0.17 | 14.3 | 0 | 0.19 |
| | | 69 | 20 | 0.53 | 0.16 | 12.5 | 0 | 0.19 |
| | REIP | 39–41 | 21 | 0.83 | 0.12 | 8.3 | 0 | 0.13 |
| | | 51 | 20 | 0.89 | 0.11 | 7.6 | 0 | 0.13 |
| | | 61 | 21 | 0.81 | 0.12 | 10.3 | 0 | 0.14 |
| | | 69 | 20 | 0.58 | 0.15 | 11.7 | 0 | 0.17 |

Figure 7.

Linear regressions with (a) the above-ground biomass as the dependent and the NDVI as the independent variable and (b) with the N content as the dependent variable and the REIP as the independent variable at different growth stages (Z). The regression lines are displayed with corresponding colors.

For the prediction of grain protein content, the REIP showed R² values of 0.77, 0.76, 0.82 and 0.86 and relative RMSE values of 4.5%, 4.7%, 4.1% and 3.6%. Again, it performed better than the NDVI at all growth stages.

Figure 5b shows a nonlinear distribution for the grain protein content. This pattern is also apparent in Figure 8b. The simple linear regressions with the REIP as the independent variable approximated the overall trend of increasing protein content with higher N content. Nevertheless, they could not account for the drop in protein content at low N levels. The regression lines show a pattern similar to that for the grain yield.

Table 6.

Results of linear regressions () at different growth stages (Z) with the final grain yield and grain protein content as the dependent variable (DV), as well as the NDVI and the REIP as the independent variable (IDV). The table comprises the number of samples (n), the coefficient of determination (R²), the RMSE, the relative RMSE, the bias and the RMSE of validation (RMSEV), derived from a leave-one-out cross-validation.

| DV | IDV | Z | n | R² | RMSE | Relative RMSE (%) | Bias | RMSEV |
|---|---|---|---|---|---|---|---|---|
| Grain yield (g·m⁻²) | NDVI | 39–41 | 21 | 0.89 | 56.7 | 11.6 | 0 | 64.5 |
| | | 51 | 21 | 0.89 | 59.1 | 12.1 | 0 | 65.8 |
| | | 61 | 21 | 0.90 | 54.1 | 11.0 | 0 | 60.7 |
| | | 69 | 21 | 0.91 | 53.5 | 10.9 | 0 | 59.9 |
| | REIP | 39–41 | 21 | 0.90 | 54.8 | 11.2 | 0 | 60.4 |
| | | 51 | 21 | 0.92 | 48.3 | 9.9 | 0 | 53.1 |
| | | 61 | 21 | 0.91 | 52.9 | 10.8 | 0 | 58.7 |
| | | 69 | 21 | 0.94 | 44.2 | 9.0 | 0 | 49.2 |
| Grain protein content (g 100 g⁻¹) | NDVI | 39–41 | 21 | 0.72 | 0.86 | 5.1 | 0 | 0.96 |
| | | 51 | 21 | 0.71 | 0.87 | 5.1 | 0 | 0.99 |
| | | 61 | 21 | 0.72 | 0.85 | 5.0 | 0 | 0.95 |
| | | 69 | 21 | 0.74 | 0.83 | 4.9 | 0 | 0.94 |
| | REIP | 39–41 | 21 | 0.77 | 0.77 | 4.5 | 0 | 0.84 |
| | | 51 | 21 | 0.76 | 0.79 | 4.7 | 0 | 0.89 |
| | | 61 | 21 | 0.82 | 0.69 | 4.1 | 0 | 0.76 |
| | | 69 | 21 | 0.86 | 0.61 | 3.6 | 0 | 0.68 |

Figure 8.

Linear regressions with (a) the grain yield and (b) the grain protein content as the dependent variable and the REIP as the independent variable at different growth stages (Z). The regression lines are displayed with corresponding colors.
