1. Introduction

Nowadays, human senses and anatomical features are used in various applications. In particular, information collected from movements of the hands, head, and eyes is used as input for systems such as intelligent systems, robot control, security, assistive applications, and medical diagnosis. These inputs are essential for increasing the reliability of such systems. Among these movements, eye movement is the most widely used because of the eye’s crucial role in transmitting large amounts of environmental information to the brain.

Human beings acquire 80–90% of their knowledge of the external world through their eyes [1]. The human eye communicates with the brain at very high speed and with a wide bandwidth [2]. Accordingly, vision is the most important of our senses. Our behaviors and thoughts may therefore be recognizable with the help of eye gaze: where we place our gaze and how we move it is associated with what we pay attention to [3]. In this regard, the significance of visual information and of the tools that evaluate it is growing.

Visual information can be acquired through eye gaze tracking. Gaze tracking technology uses a sophisticated device that tracks and records where people look, together with their eye movements. In the literature, the most popular eye tracking methods are electrooculography (EOG) [4], scleral magnetic search coils [5], and noninvasive video-based pupil-corneal reflection tracking [6].

A contemporary and widely adopted method among traditional gaze tracking methods [7,8,9] is the video image-based pupil center-corneal reflection (PCCR) method, which does not require physical contact with the user’s eye area. Because it is nonintrusive, PCCR is considered the most improved gaze tracking technology of recent years [10,11]. In PCCR methods, the key quantity is the position of the pupil center relative to the corneal reflection, which is called the “glint”. Gaze is estimated by detecting the center of the pupil and the corneal reflections created by the illuminators. Accordingly, many eye tracking studies estimate gaze using single [12,13] or multiple illuminator-based methods [14,15,16].

Eye gaze tracking systems can use visible or infrared (IR) light for illumination. With visible light, it is difficult to detect the pupil center and to distinguish the pupil from noisy reflections. IR light, on the other hand, eases pupil detection with near infrared (NIR) cameras, so many eye tracker applications use IR light with NIR cameras. Depending on the eye tracking approach, one or more NIR cameras are used to obtain 2D or 3D video images. Three-dimensional video image-based systems have higher accuracy but lower operating frequencies.

Gaze estimation can be carried out using many techniques. Eye trackers are designed either as wearable or as remote gaze tracking systems. In a wearable system, the eye tracking camera is mounted on the user’s head [17,18]. In a remote system, the eye images are acquired by a remote camera system [19,20]. Remote systems are more convenient for users because nothing has to be worn on the head.

Recently, several video-based pupil detection algorithms, such as the Ellipse Selector (ElSe) [21], the Exclusive Curve Selector (ExCuSe) [22], and a Convolutional Neural Network (CNN) based approach [23], have been proposed to detect the pupil with high detection rates and low computational cost. ElSe is based on ellipse evaluation of a filtered edge image followed by pupil contour validation; it is a robust, resource-saving approach that can be integrated into embedded architectures. ExCuSe analyzes the image for reflections using intensity histograms, and then applies edge detection, morphological operations, and the angular integral projection function to detect the pupil contour. The CNN approach is a two-stage convolutional neural network pipeline. In the first stage, a convolutional neural network determines an initial pupil position. Subregions are then derived from the downscaled input image, and in the second stage another convolutional neural network refines the pupil position using these subregions. The development of these algorithms has also produced eye image data sets that include real-world conditions such as illumination changes, reflections (on glasses), make-up, non-centered eye recordings, and physiological eye characteristics. ExCuSe used the Swirski data set and provided 17 new hand-labeled data sets; ElSe used the Swirski and ExCuSe data sets and provided seven new hand-labeled data sets. These studies were performed on approximately 200,000 frames [24].

It has been shown that the accuracy of eye gaze systems increases when the user’s head movements are restricted. To achieve high accuracy, many commercial companies use a chin rest to keep the head fixed while gaze is estimated with a desktop-mounted gaze tracking system. Gaze estimation begins with a calibration process, in which users are generally asked to look at one, four, or nine points on the screen [25,26]. Increasing the number of calibration points decreases the gaze estimation error. Nevertheless, gaze estimation error remains a challenge, and linear or nonlinear mapping functions are applied to reduce it.

Gaze tracking systems have been used extensively in application areas such as human computer interaction, aviation, medicine, robot control, production tests, augmented reality, psychoanalysis, sports training, computer games, training simulators, laser surgery, flight simulations, product development, fatigue detection, and military systems.

Another application area of eye gaze tracking systems is pupillometry, the measurement of pupil size. Normally, human pupil size ranges from 2 to 8 mm [27], and ambient light and other environmental factors can change it. Pupillometry reveals tonic and phasic changes in pupil size that play a significant role in observing normal and abnormal functioning of the nervous system [28]. Recent research has revealed that pupil abnormalities are correlated with many diseases, such as multiple sclerosis, migraines, diabetes, alcoholism, depression, anxiety/panic disorder, Alzheimer’s disease, Parkinson’s disease, and autism [29].

Different disciplines can take advantage of differences in pupil size and eye movements. In addition, eye movement analysis with fast systems is providing new opportunities in psychology, neuroscience, ophthalmology, and affective computing.

Affective computing has multidisciplinary applications. Automatic detection of human emotional states improves a computer’s understanding of human needs, and eye movements carry essential information for recognizing positive and negative emotions. Affective computing contributes to many areas. For example, a student’s cognitive load can be estimated using eye tracking, and understanding the student’s affective state enables more effective learning. A person’s mood can also be inferred from the eyes, which is currently used to monitor the emotional state of patients. Recognizing a user’s mood can improve the personalization of commercial products, and affective computing enhances applications such as virtual reality and smart surveillance. Automatic emotion recognition can also support psychological studies: such studies provide a baseline for the emotional reactions of healthy subjects, which can be compared against and used to diagnose mental disorders [30]. Cars can now recommend music according to the driver’s mood and pull to the side of the road when they sense driver fatigue.

Recently, eye tracking technologies have also become useful for pupillometry. Accurate pupillometry requires a high-frequency, high-resolution image-based eye tracking system. Commercially available eye gaze tracking devices now offer monocular or binocular tracking that tolerates head movement and achieve frame rates of up to 1000 fps (frames per second) [31,32]. In low-cost designs, by contrast, low-resolution (up to 640 × 480) and low-frequency (up to 30 Hz) cameras are selected to reduce cost.

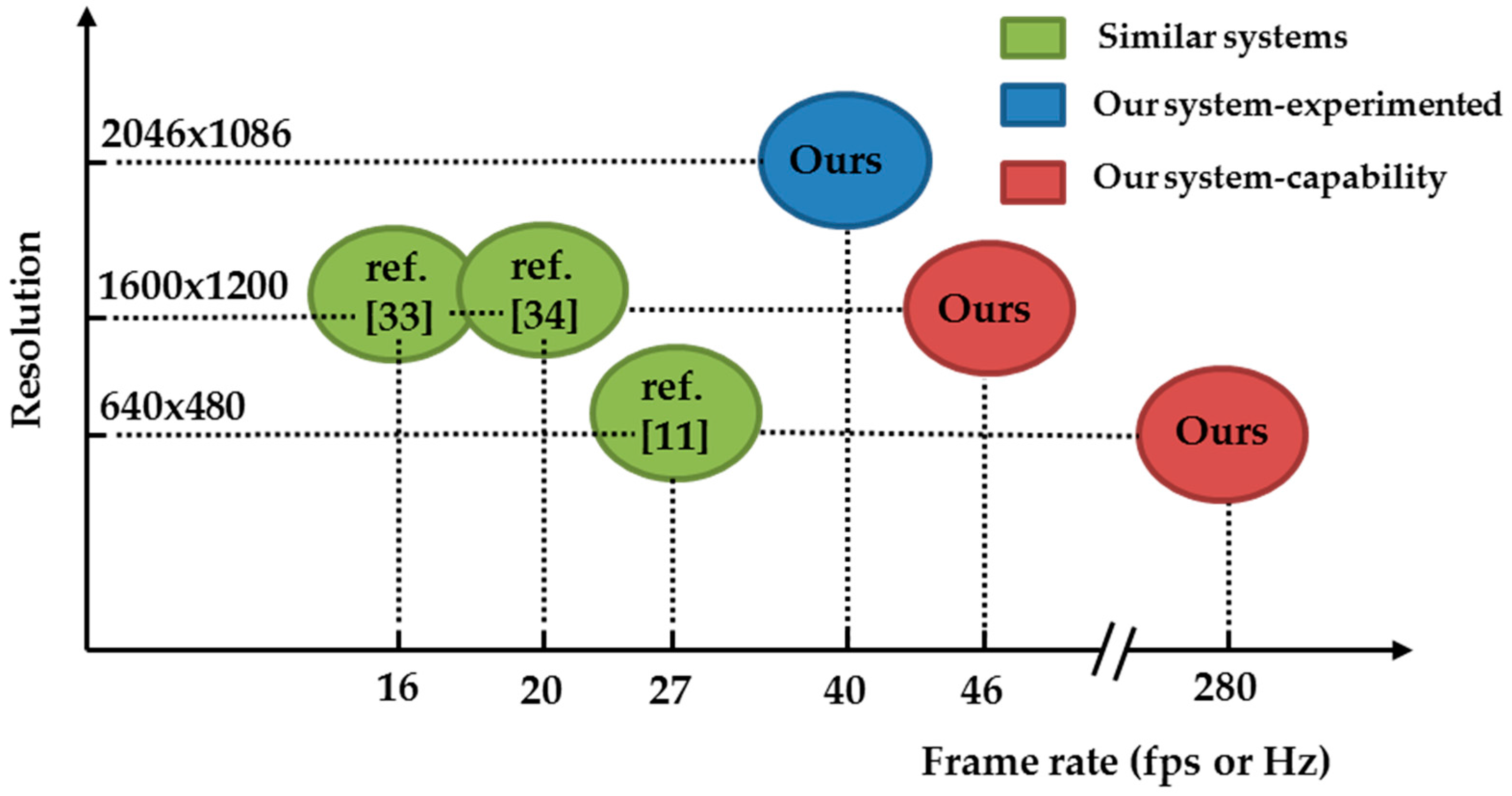

Su et al. [33] proposed a monocular eye gaze tracking system for users wearing glasses that operates at 15.7 Hz with 1600 × 1200 pixel images. Their study detects the user’s glasses and uses an illuminator controller device to minimize reflections on the surface of the glasses, which facilitates detection of the pupil region. Ji et al. [11] presented a monocular head-mounted eye gaze tracking system that operates at 27 Hz with 640 × 480 pixel images. Their method aims to reduce the number of points seen by the user, but it generates virtual calibration points, which is inconvenient for the user during the calibration stage. Jong et al. [34] proposed a gaze estimation method that suppresses the additional corneal reflections caused by external light with a band-pass filter placed in front of the camera. Their system is a binocular remote eye tracker that operates at 20 Hz with 1600 × 1200 pixel images.

In this study, we present a remote eye gaze tracking and recording system that can process full high definition (full HD) images. The system operates at 40 Hz for full HD images, which corresponds to about 280 Hz for 640 × 480 images in terms of pixel throughput. The outputs of our system are pupil sizes, pupil center positions, glint center positions, and estimated gaze points, from which eye gaze and pupil information can be interpreted. The error of our system is less than one degree, which is a good level for single camera-based binocular systems. We developed the system in LabVIEW, whose graphical dataflow programming is inherently parallel and which provides fast image processing algorithms. The remainder of this paper is organized as follows. Section 2 describes the details of the proposed method. Section 3 explains the experimental setup and results. Finally, the conclusions are presented in Section 4.

2. Proposed Binocular Eye Gaze Tracking System

2.1. Proposed Gaze Tracking System

In our study, we designed an eye tracker system that consists of a Camera Link interface eye capture camera [35], an FPGA frame grabber card [36], an IR illuminator group, and eye tracker and stimulus PCs, as shown in Figure 1.

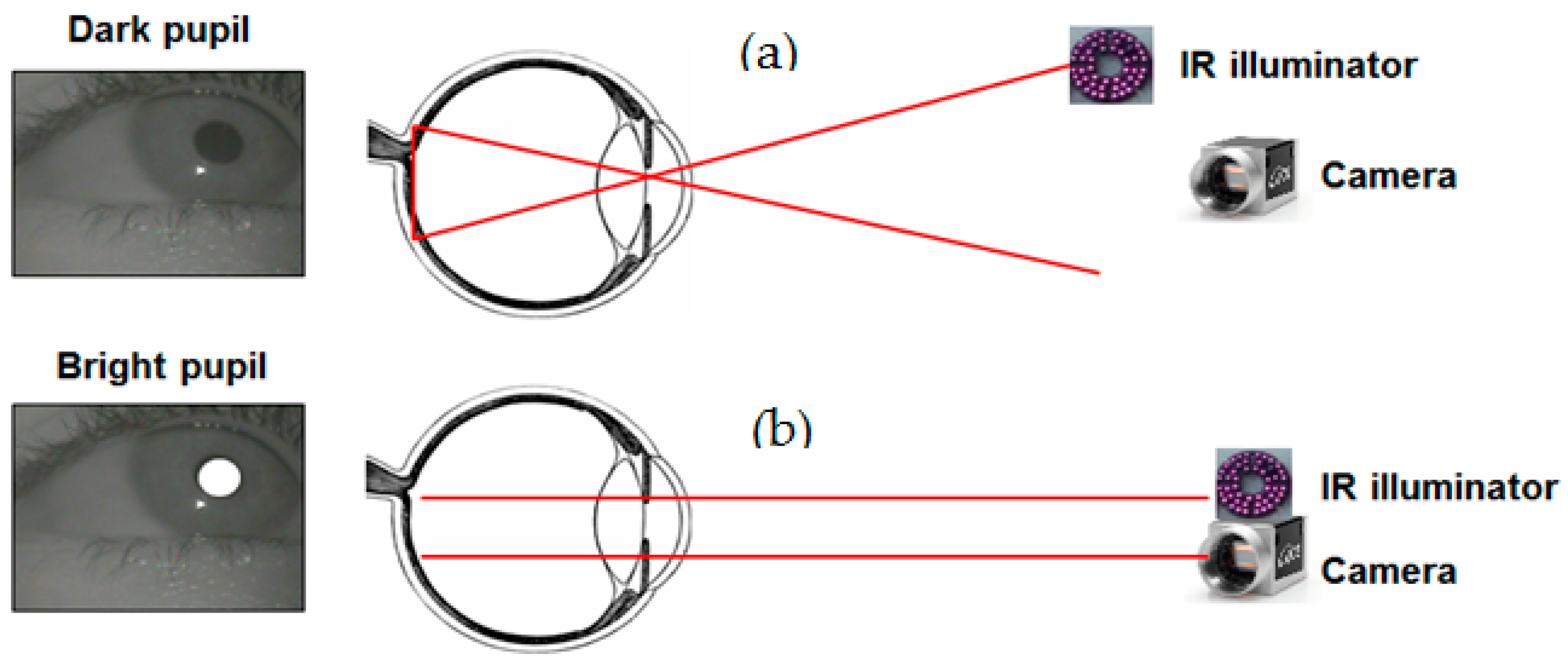

The camera is a color camera that operates at up to 340 fps over its Camera Link interface. We fitted it with a lens that has a focal length of 16 mm, giving a field of view of 30.7° horizontally and 23.7° vertically. IR illuminators can be placed around the NIR eye capture camera in two ways to ease detection of the pupil area. The first is dark pupil eye tracking, in which the illuminator is placed away from the camera’s optical axis, so the pupil appears darker than the iris, as seen in Figure 2a. The second is bright pupil eye tracking, in which the illuminator is placed close to the camera’s optical axis, so the pupil appears brighter than the iris, as shown in Figure 2b.

During remote eye tracking, several factors can affect pupil detection. Bright pupil eye tracking is affected by ambient illumination more than dark pupil tracking, and with this method, age and ambient illumination change the size of the pupil. Ethnicity is another factor: bright pupil eye tracking works very well for Hispanics and Caucasians but worse for Asians.

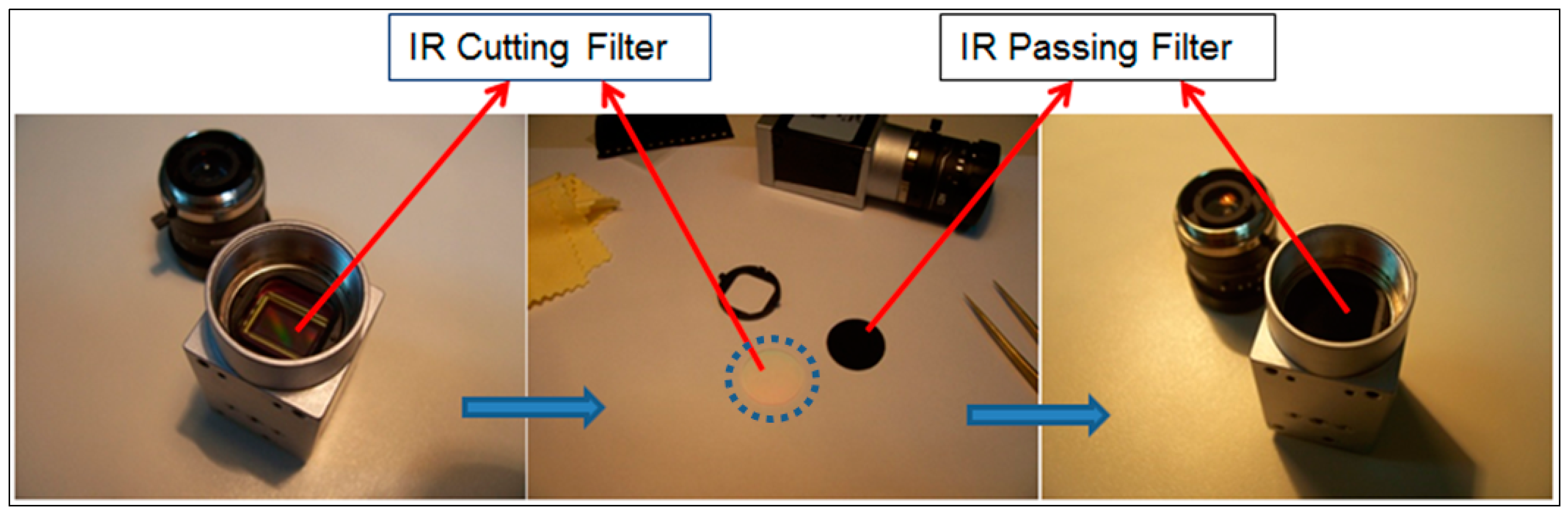

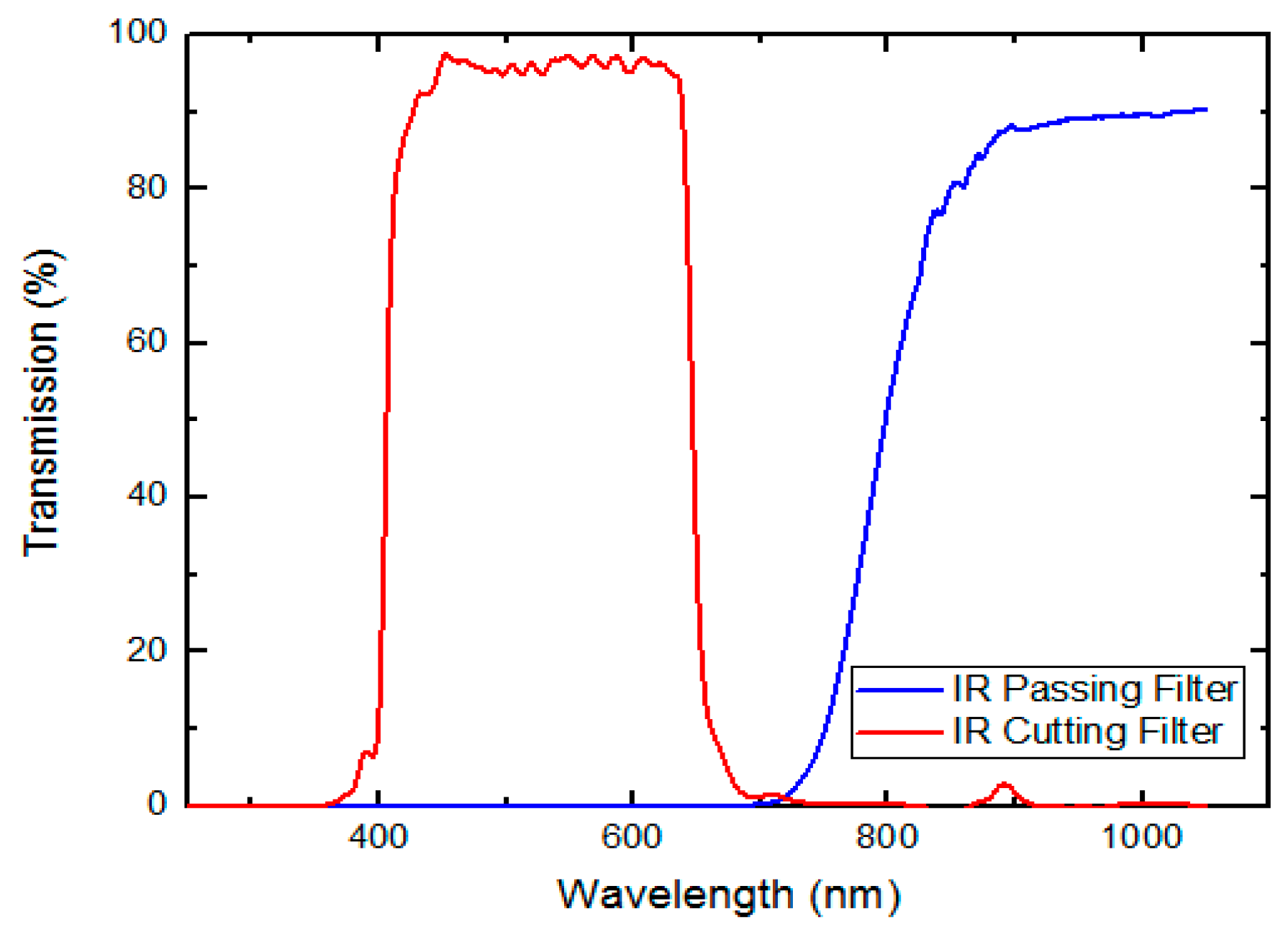

We chose the first illuminator arrangement, so our design is based on dark pupil eye tracking. To obtain IR images from a color camera, the camera must be modified to change its spectral response. The spectral response reflects the characteristics of the lens, the light source, and the IR cut filter. Our camera has an IR cut filter that passes only visible wavelengths: it transmits from 400 nm to 720 nm and cuts off from 720 nm to 1100 nm [35].

The IR imaging effect can be obtained by modifying the camera filter. We removed the IR cut filter and placed a positive film in front of the camera sensor, as shown in Figure 3. This modification changes the camera’s spectral response, and the modified response prevents undesired corneal reflections in our design.

We measured the spectral responses of the camera before and after the modification with a Shimadzu UV-3600 UV-VIS-NIR spectrophotometer (Shimadzu Corporation, Kyoto, Japan); the results are depicted in Figure 4.

Our design contains two IR illuminators. Each illuminator includes 48 near infrared light emitting diodes (NIR LEDs) with a wavelength of 850 nm. The radiant intensity of each NIR LED is 40 mW/sr, and the illuminators are harmless to human eyes [37]. Together, the two illuminators generate a single corneal reflection.

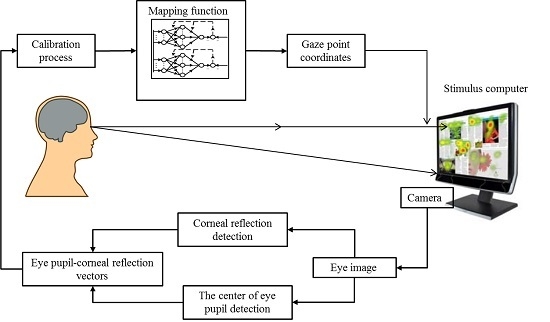

2.2. Proposed Gaze Tracking Method

Figure 5 shows a flow chart of the eye gaze tracking system developed in this study. It includes preprocessing of the raw gray level images, determination of region of interest (ROI) areas around the glint centers, pupil center detection, a nonlinear mapping function for gaze estimation, and pupil size calculation.

2.3. Detection of Pupil and Corneal Reflection

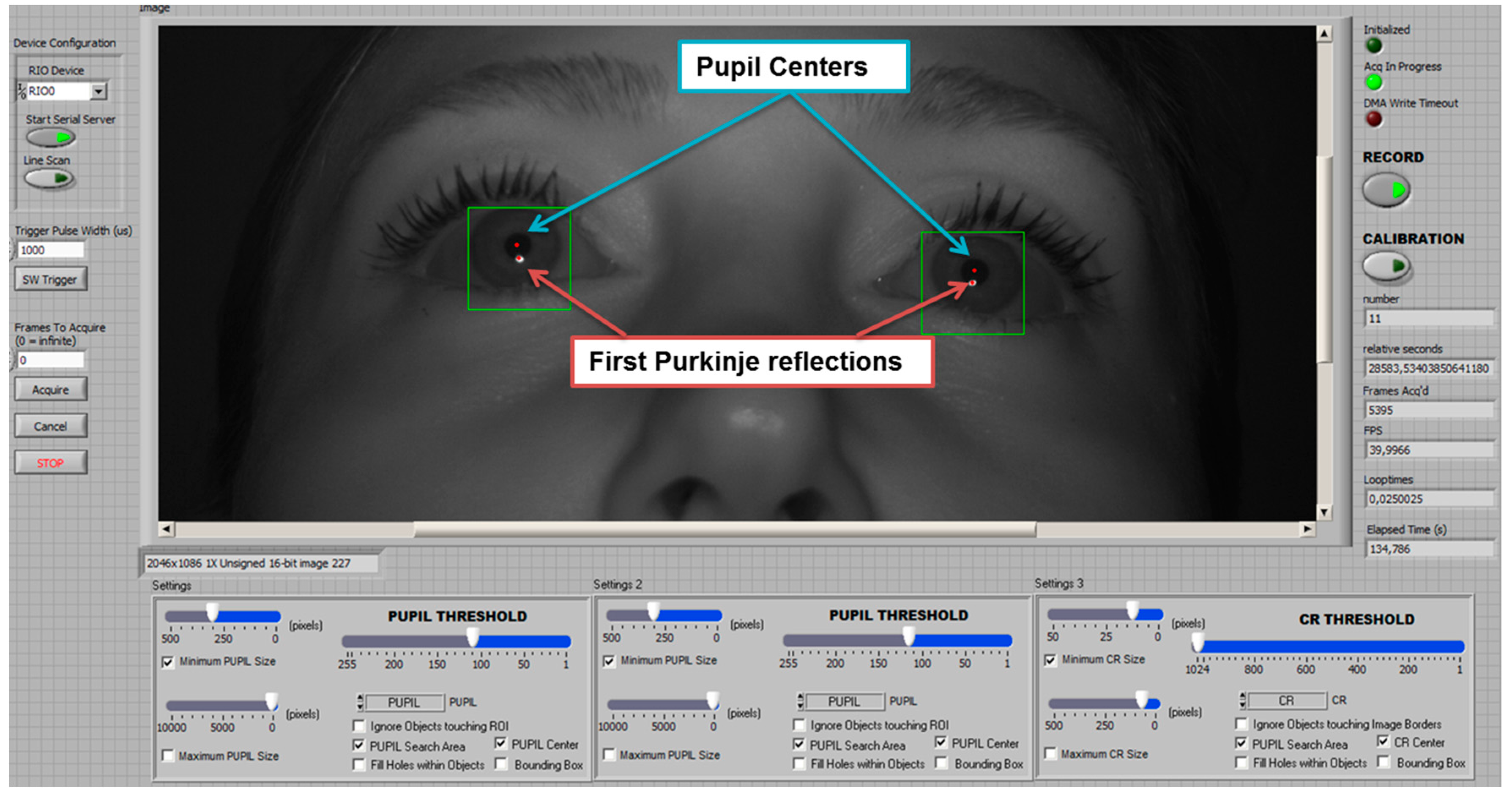

In this design, we grab images with an NI PCIe-1473R FPGA frame grabber card installed in a PCIe slot of the eye tracker desktop computer. Our software (its interface is shown in Figure 6) reads image frames through the computer’s direct memory access (DMA) channels, which transfer data between the frame grabber and memory. With DMA, the CPU initiates the transfer and then performs other operations while the transfer is in progress; the DMA controller issues an interrupt when the transfer is complete. Thus, the CPU does not need to supervise the data transfer and is free for other work.

At the start of processing each transferred image frame, we create a temporary memory location in LabVIEW to hold the incoming gray level image. After processing of the frame is complete, this memory is released for the next frame.

In our design, we use the corneal reflection (first Purkinje reflection) as a reference point for the pupil search; the IR-passing filter facilitates this step. The corneal reflection is called the “glint” [25]. Because it has the brightest value in the grabbed image, it is easy to detect. Thus, a captured 10-bit (0–1023) gray level image is first transformed into a binary image using Equation (1):

$$g(x,y)=\begin{cases}1, & f(x,y)\ge T\\ 0, & \text{otherwise}\end{cases} \qquad (1)$$

where f(x, y) is the pixel value at coordinates (x, y) in the gray level image and g(x, y) is the binary value at (x, y) after thresholding. The threshold level T can be determined automatically or manually. However, automatic algorithms increase the image processing time, and since the glint has high luminance, we assumed that the glint threshold can be determined experimentally. After experimental trials, we manually set the glint threshold to 1020. Component labeling was then applied to separate the segmented parts from the background. To isolate the right and left eye glints, small parts were removed from the image with an erosion operation using a morphological filter; such filters make it possible to ignore objects smaller or larger than given sizes. We removed objects smaller than 15 pixels.
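The glint segmentation steps above (fixed threshold, component labeling, removal of small objects) can be sketched in plain Python. This is a minimal illustration, not the authors’ LabVIEW implementation: the image is assumed to be a list of rows of 10-bit gray values, thresholding is assumed to keep pixels with f(x, y) ≥ T, 4-connectivity is assumed for labeling, and small objects are dropped by pixel count rather than by the erosion-based morphological filter used in the paper. The function name `segment_bright` is hypothetical.

```python
from collections import deque
from typing import List, Tuple

Pixel = Tuple[int, int]  # (x, y)


def segment_bright(img: List[List[int]], threshold: int = 1020,
                   min_size: int = 15) -> List[List[Pixel]]:
    """Threshold, label 4-connected bright regions, and drop small ones."""
    h, w = len(img), len(img[0])
    # Binary image: 1 where f(x, y) >= T (assumed form of Equation (1))
    binary = [[1 if img[y][x] >= threshold else 0 for x in range(w)]
              for y in range(h)]
    seen = [[False] * w for _ in range(h)]
    components: List[List[Pixel]] = []
    for y0 in range(h):
        for x0 in range(w):
            if not binary[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill collects one connected component
            queue, pixels = deque([(x0, y0)]), []
            seen[y0][x0] = True
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Keep only components with at least min_size pixels
            if len(pixels) >= min_size:
                components.append(pixels)
    return components
```

With the defaults (T = 1020, 15 pixels), a 5 × 5 saturated spot survives as one glint candidate while an isolated 3 × 3 spot is discarded.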

To find the center coordinates of the corneal reflections, we used a weighted average technique, which is faster than elliptical and circular shape center detection methods. The weighted average is obtained by multiplying each pixel location by its value and dividing the sum by the total pixel value of the labeled corneal reflection region, which for the binary labeled image equals the number of pixels in the region, as in Equation (2):

$$x'=\frac{\sum_{x=1}^{w}\sum_{y=1}^{h} x\,I(x,y)}{\sum_{x=1}^{w}\sum_{y=1}^{h} I(x,y)},\qquad y'=\frac{\sum_{x=1}^{w}\sum_{y=1}^{h} y\,I(x,y)}{\sum_{x=1}^{w}\sum_{y=1}^{h} I(x,y)} \qquad (2)$$

where x and y are the pixel position, I(x, y) is the pixel value, w is the image width, and h is the image height in pixels. The results x′ and y′ are the center coordinates of each glint.
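A weighted average center of this kind can be sketched as follows. This is an illustrative sketch, not the LabVIEW Count Objects function: it takes a region as rows of pixel values I(x, y), uses zero-based pixel indices (the equation above counts from 1), and divides by the sum of the pixel values, which for a binary mask is simply the number of object pixels. The name `weighted_center` is hypothetical.

```python
from typing import Sequence, Tuple


def weighted_center(img: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Intensity-weighted mean pixel position (x', y') of a region.

    img holds the values I(x, y) row by row, with zero-based indices.
    For a binary mask the denominator equals the object pixel count.
    """
    total = sx = sy = 0.0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            sx += x * value  # accumulate x * I(x, y)
            sy += y * value  # accumulate y * I(x, y)
            total += value   # accumulate I(x, y)
    if total == 0:
        raise ValueError("region contains no object pixels")
    return sx / total, sy / total
```

Because it only needs running sums over the pixels, this is cheaper than fitting an ellipse or circle to the region contour, which is the speed argument made in the text.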

The pupils are expected to lie close to the glints. Therefore, after detecting each glint center, we define a 100 × 100 pixel square ROI centered on it, extract this ROI from the raw gray level image, and save it in memory. The same image processing steps used for glint detection are then applied to detect the pupil center coordinates.
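Cutting out the 100 × 100 pixel ROI around a glint center can be sketched as below. The paper does not say how ROIs near the image border are handled; this sketch assumes the window is shifted (clamped) so that it stays inside the frame, and it returns the top-left offset so that pupil coordinates found inside the ROI can be mapped back to full-frame coordinates. The function name `extract_roi` is hypothetical.

```python
from typing import List, Tuple


def extract_roi(img: List[List[int]], center: Tuple[float, float],
                size: int = 100) -> Tuple[List[List[int]], Tuple[int, int]]:
    """Return a size x size window centred on a glint and its top-left offset.

    Border handling (clamping the window into the image) is an assumption.
    """
    h, w = len(img), len(img[0])
    if size > w or size > h:
        raise ValueError("ROI is larger than the image")
    cx, cy = int(round(center[0])), int(round(center[1]))
    # Place the window around the centre, then clamp it inside the frame
    x0 = min(max(cx - size // 2, 0), w - size)
    y0 = min(max(cy - size // 2, 0), h - size)
    roi = [row[x0:x0 + size] for row in img[y0:y0 + size]]
    return roi, (x0, y0)
```

A pupil center (px, py) measured in the ROI corresponds to (px + x0, py + y0) in the full frame.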

Because the pupil is the darkest and largest object in the extracted ROI image, it is easy to detect. Thus, the 10-bit (0–1023) gray level ROI image is transformed into a binary image by thresholding, as in Equation (1). We measured the histograms of the extracted ROI images for 11 users and observed only small differences between them. Accordingly, based on the minimum and maximum histogram values, we manually set the pupil threshold value T to 120 after experimental trials. Component labeling is then applied to separate the segmented objects from the background, and small parts are removed with an erosion operation using a morphological filter to isolate the right and left eye pupils. We removed objects smaller than 250 pixels; this size was determined experimentally from trials in which users made small head movements, which change the object size according to the user-to-screen distance. Since the pupil is not an exact circle, its size cannot be measured exactly with circular or elliptical methods. Hence, we estimated pupil size from the pupil mass (pixel area), and the weighted average technique of Equation (2) was used to find the pupil center coordinates. For detecting the glint and pupil masses and their center coordinates, we used the LabVIEW Count Objects function. Our glint-pupil detection process is presented in Figure 7.
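The pupil step (dark-object threshold, labeling, 250-pixel size limit, mass and center) can be sketched in the same spirit. This is a hypothetical helper, not the authors’ code. Assumptions: pupil pixels are those with f(x, y) < T because the pupil is dark, 4-connectivity is used, the largest surviving component is taken as the pupil, its pixel count is reported as the pupil mass, and its center is the mean pixel position (Equation (2) applied to the binary mask).

```python
from typing import List, Optional, Tuple


def detect_pupil(roi: List[List[int]], threshold: int = 120,
                 min_size: int = 250) -> Optional[Tuple[int, float, float]]:
    """Return (area_px, cx, cy) of the largest dark blob in an ROI, or None."""
    h, w = len(roi), len(roi[0])
    seen = [[False] * w for _ in range(h)]
    best: Optional[List[Tuple[int, int]]] = None
    for y0 in range(h):
        for x0 in range(w):
            # Only start a new component on an unvisited dark pixel
            if seen[y0][x0] or roi[y0][x0] >= threshold:
                continue
            stack, pixels = [(x0, y0)], []
            seen[y0][x0] = True
            while stack:  # depth-first flood fill of one dark component
                x, y = stack.pop()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and roi[ny][nx] < threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            # Ignore components below the size limit; keep the largest one
            if len(pixels) >= min_size and (best is None or len(pixels) > len(best)):
                best = pixels
    if best is None:
        return None
    n = len(best)  # pupil "mass" in pixels
    return n, sum(p[0] for p in best) / n, sum(p[1] for p in best) / n
```

For example, a 20 × 20 dark square is reported with an area of 400 pixels, while a separate 10 × 10 dark patch (100 pixels) falls below the 250-pixel limit and is ignored.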

2.4. Calibration and Gaze Point Estimation

Eye tracker systems use linear or nonlinear methods to transform eye image coordinates into user screen coordinates. In this study, we applied a second-order polynomial, a common choice of mapping function, to estimate the user’s gaze points on the screen. The estimated gaze position (Sx, Sy) on the user screen is obtained from the pupil center coordinates (x, y) by Equation (3) as follows:

$$\begin{aligned} S_x &= a_0 + a_1 x + a_2 y + a_3 x y + a_4 x^2 + a_5 y^2 \\ S_y &= a_6 + a_7 x + a_8 y + a_9 x y + a_{10} x^2 + a_{11} y^2 \end{aligned} \qquad (3)$$

Equation (4) is a transform matrix of Equation (3). This expression is represented with Equations (5) and (6).

In Equations (5) and (6), ‘

A’ matrix values are unknown constants, ‘

B’ matrix values are coordinates of the calibration points on the user screen and ‘

X’ matrix values are pupil center

x-

y coordinates in the captured images. ‘

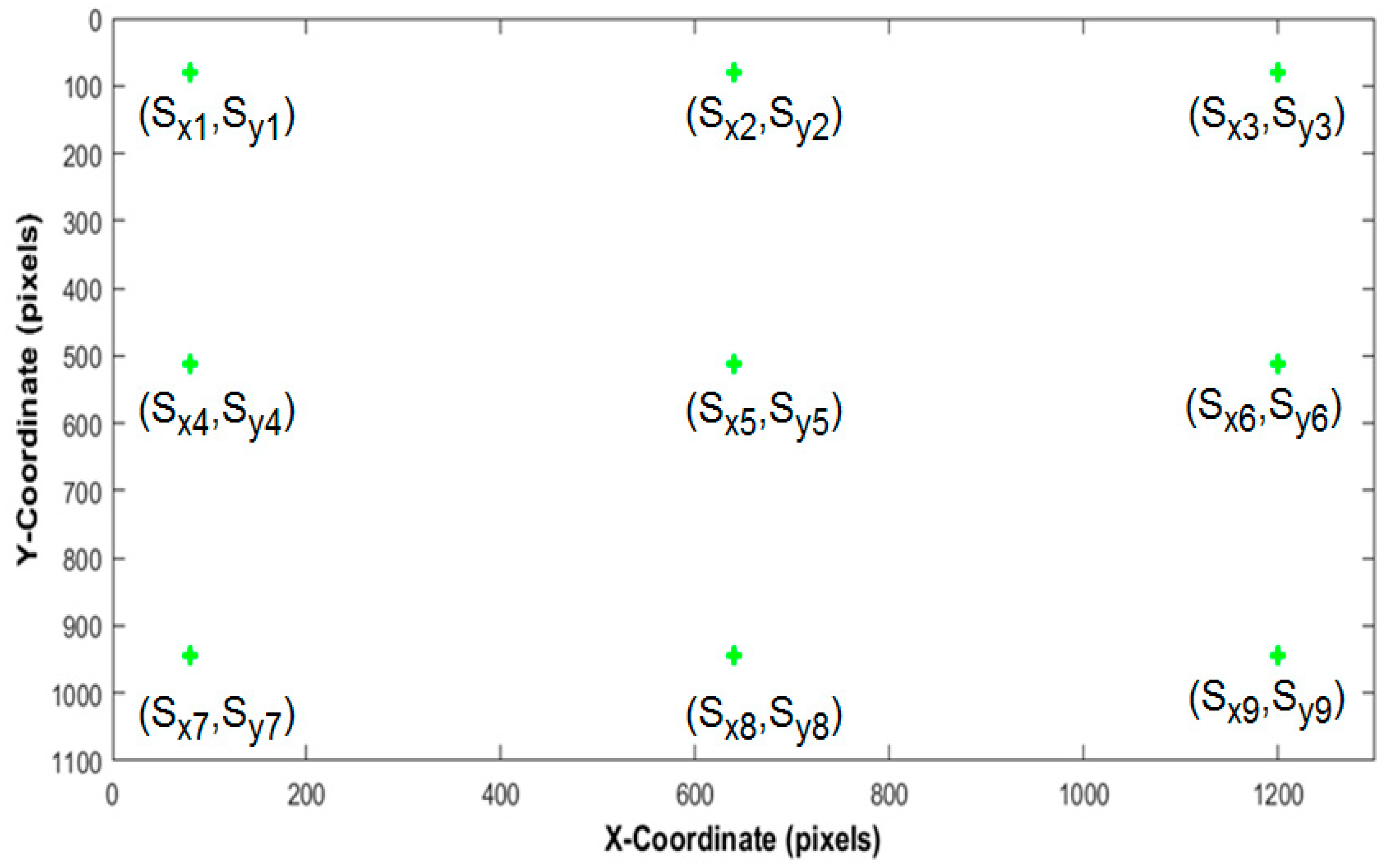

A’ matrix values are calculated during the calibration process. In our study, the calibration process is performed using nine points on the screen as shown in

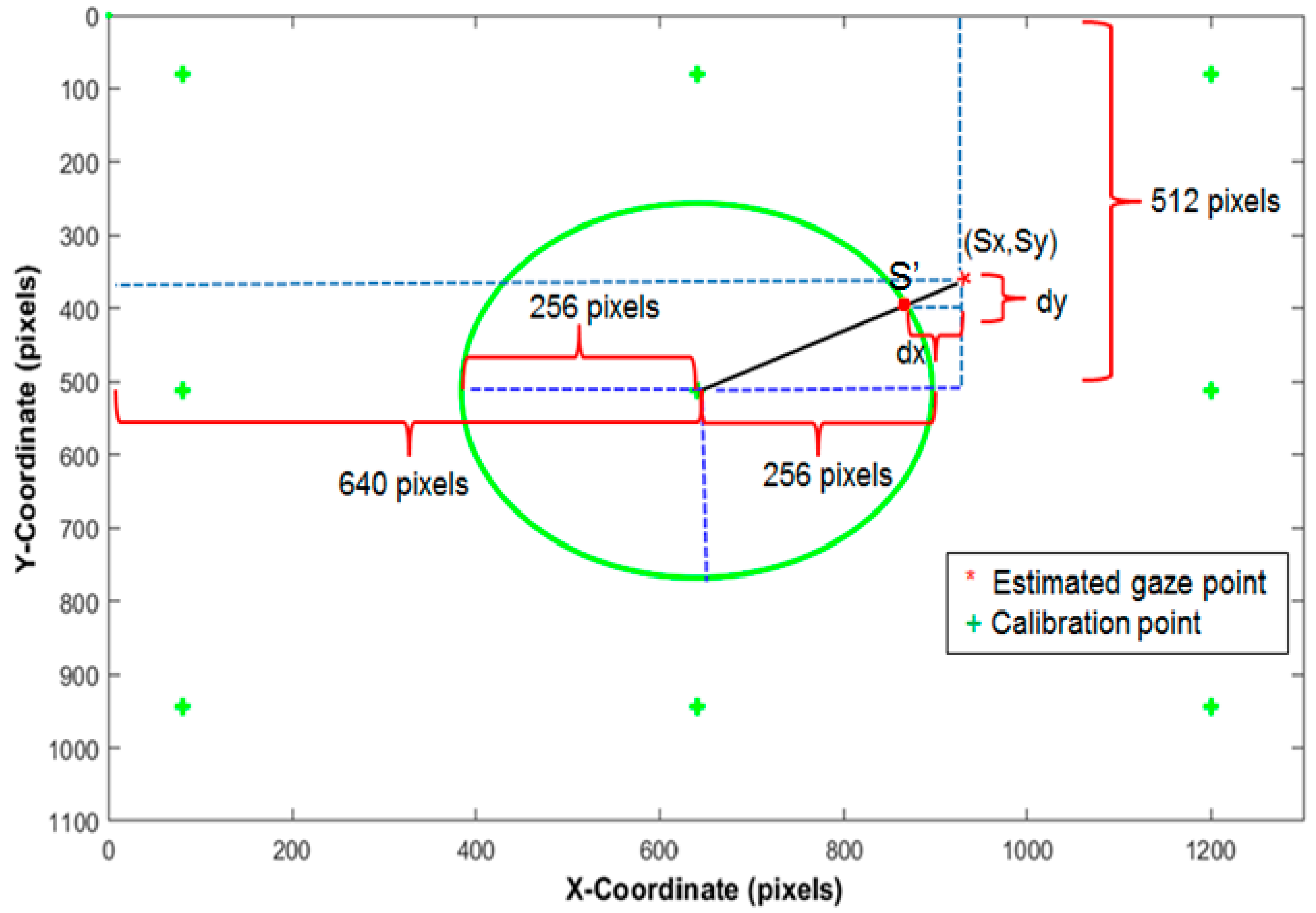

Figure 8.

The x and y coordinates (in pixels) of the nine calibration points, numbered 1 to 9, are (80, 80), (640, 80), (1200, 80), (80, 512), (640, 512), (1200, 512), (80, 944), (640, 944), and (1200, 944), respectively. The X matrix values can be taken as the difference vector between the glint (gx, gy) and pupil (px, py) center coordinates. Therefore, Equation (3) is reorganized as Equation (8) using Equation (7).

After calibration, the estimated constants (A) are used to map the pupil center coordinates in each test image to user screen positions in pixels.

2.5. Stimulus Application

Stimulus signals hold significant space in eye tracker applications. System performance is tested and the behaviors of the user’s eyes can be observed at quantitative values. The measured reactions solve many medical, mental, psychological, educational problems of human beings. Accordingly, we created a visual stimulus set up in Matlab, using the Psychophysics Toolbox extensions [

38,

39,

40] as in

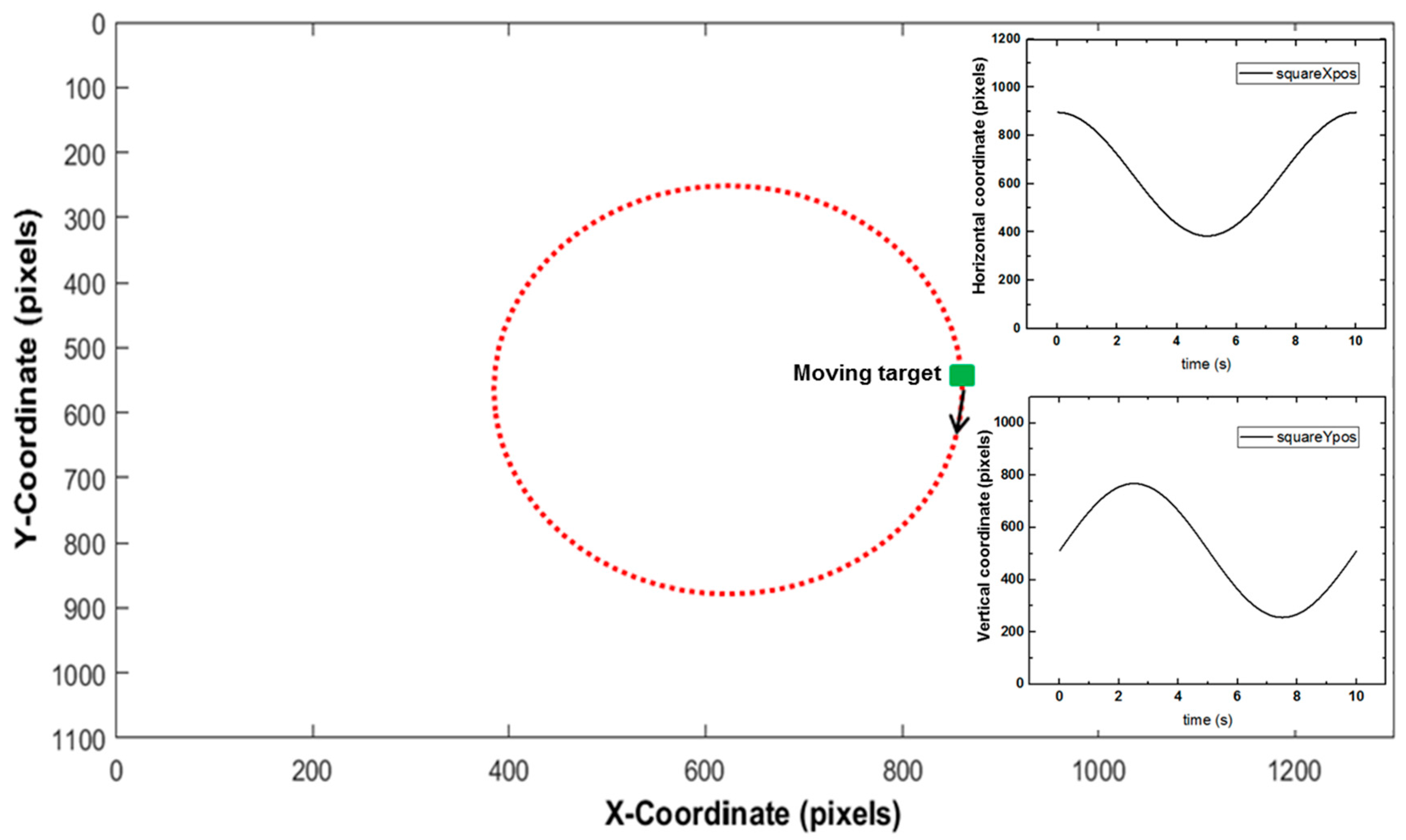

Figure 9.

The stimulus is a 5 × 5 pixel green square (the moving target) that circles around the center of the monitor at x-y coordinates (640, 512). The top right inset of Figure 9 shows the x-axis positions of the moving target over a full cycle, and the bottom right inset shows its y-axis positions. The initial values used to calculate the moving target positions, chosen according to our monitor specifications, are presented in Table 1.

The x and y positions of the moving target at elapsed time t are obtained from Equations (11) and (12), respectively. Here, squareXpos and squareYpos are the x and y pixel coordinates of the moving target, xCenter is the x pixel coordinate of the monitor center (640), and yCenter is its y pixel coordinate (512). A is the maximum amplitude (radius), that is, the distance between the monitor center and the moving target.
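Equations (11) and (12) and the Table 1 values are not reproduced here, so the following is only a sketch of a target circling the monitor center, assuming uniform circular motion with x = xCenter + A·cos(2πt/period) and y = yCenter + A·sin(2πt/period). The period, the cos/sin phase convention, and the function names `target_position` and `trajectory` are assumptions; the paper’s stimulus may use a different phase, direction, or speed.

```python
import math
from typing import List, Tuple

X_CENTER, Y_CENTER = 640, 512  # monitor centre for 1280 x 1024 (from the text)


def target_position(t: float, amplitude: float, period: float) -> Tuple[float, float]:
    """Moving-square position at time t (s), assuming uniform circular motion.

    amplitude is the radius A in pixels; period is the duration of one cycle.
    """
    phase = 2.0 * math.pi * t / period
    square_x = X_CENTER + amplitude * math.cos(phase)
    square_y = Y_CENTER + amplitude * math.sin(phase)
    return square_x, square_y


def trajectory(amplitude: float, period: float, fps: float) -> List[Tuple[float, float]]:
    """Sample one full cycle of target positions at the display frame rate."""
    n = int(round(period * fps))
    return [target_position(i / fps, amplitude, period) for i in range(n)]
```

Plotting the x and y components of `trajectory(...)` against time gives the kind of sinusoidal per-axis traces shown in the insets of Figure 9.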

3. Experimental Results

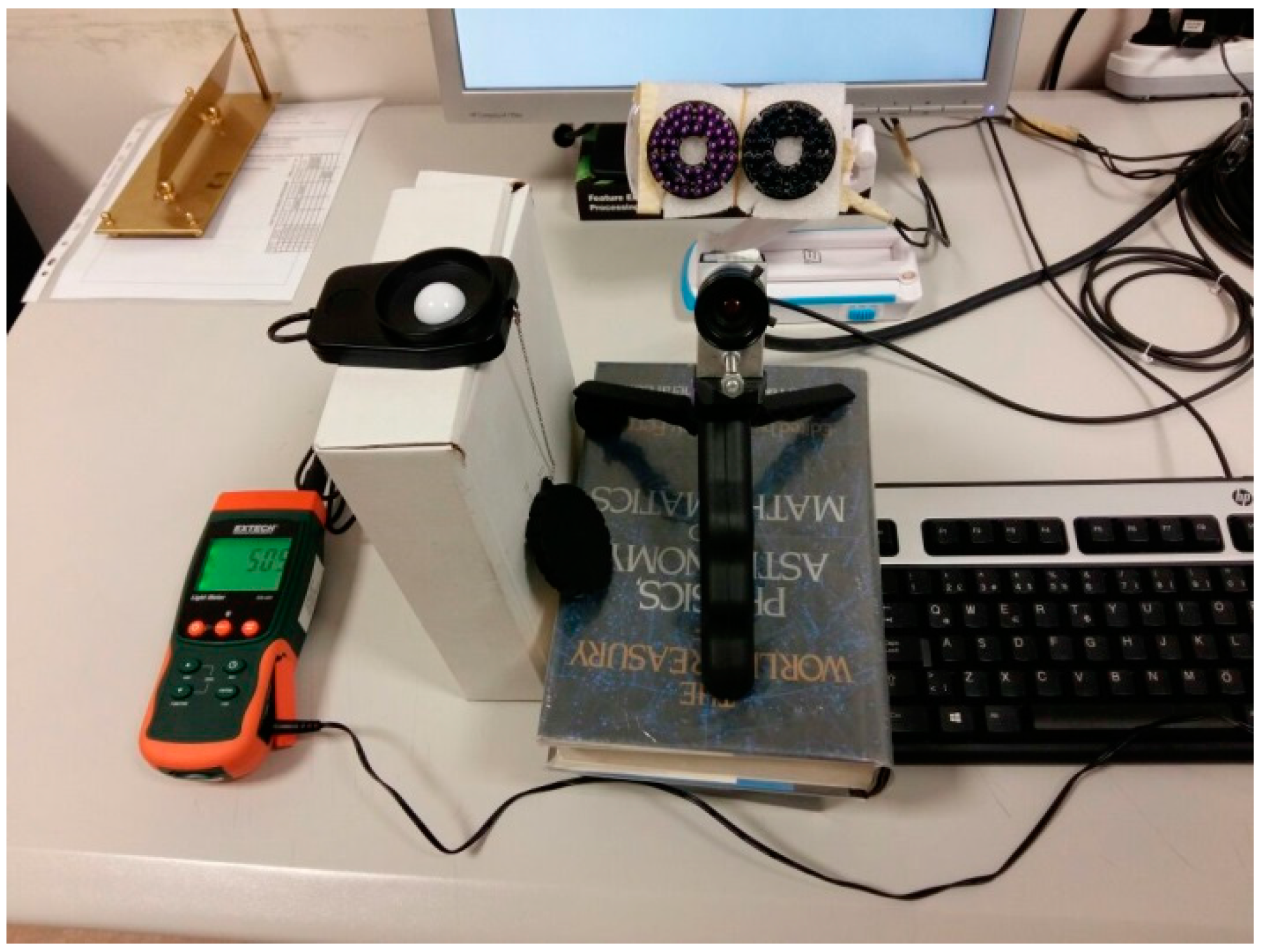

Figure 10 shows the measurement of the illuminance in the experimental environment. The room illuminance was measured with an Extech LT300 light meter (FLIR Systems, Wilsonville, OR, USA) and was 505 lux.

The experiment was run on a desktop computer with a 3.4 GHz Intel® Core™ i7-3770K CPU and 16 GB of RAM. The monitor resolution was 1280 × 1024, and an 850 nm illuminator group was used. The camera and the illuminator were both positioned directly below the center of the monitor. The distance (L) between the monitor and the user was roughly 700 mm. We tested our gaze tracking system with 11 participants aged between 25 and 40, using at least 400 images per participant. The experimental results showed that the system can process 2046 × 1086 pixel images at 40 fps. In terms of pixel throughput, this is equivalent to approximately 280 fps for a 640 × 480 pixel eye tracker, as shown in Figure 11.
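The 640 × 480 equivalence can be checked with simple pixel-rate arithmetic. The sketch assumes that processing cost scales linearly with the number of pixels per frame, which is only a rough approximation for the pipeline above; the function name `equivalent_fps` is hypothetical.

```python
def equivalent_fps(width: int, height: int, fps: float,
                   ref_width: int = 640, ref_height: int = 480) -> float:
    """Frame rate at a reference resolution with the same pixel throughput.

    Assumes processing time is proportional to the number of pixels.
    """
    pixels_per_second = width * height * fps
    return pixels_per_second / (ref_width * ref_height)
```

For 2046 × 1086 frames at 40 fps this gives about 289 fps, and for 1920 × 1080 (full HD) frames at 40 fps exactly 270 fps, which brackets the approximately 280 fps stated above.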

To estimate eye gaze points, users were first asked to look at the calibration points. The nine calibration points are not shown on the user screen simultaneously: when calibration starts, only the first calibration point appears, and users click the mouse to display each subsequent point. After the nine-point calibration, users follow the moving target stimulus for at least one full cycle. The system accuracy is then estimated offline.

Figure 12 shows the gaze point estimation geometry for our stimulus.

In general, the difference between the estimated gaze point (Sx, Sy) and the actual gaze point S′ is expressed as the error of the gaze tracking system, as shown in Equation (14):

$$\theta = \tan^{-1}\!\left(\frac{E(S_{x,y})}{L}\right) \qquad (14)$$

Here, θ is the error amount, L is the user-to-screen distance, and E(Sx,y) is the Euclidean distance between the estimated gaze point and the actual user screen coordinates. Equation (15) gives the absolute distance D, in pixels, of the estimated gaze position (Sx, Sy) from the stimulus center coordinates. Equations (16) and (17) give the x-axis deviation Edx and the y-axis deviation Edy of the estimated gaze point (Sx, Sy) from the actual coordinates of the gazed point S′ = (S′x, S′y):

$$E_{dx} = \left|S_x - S'_x\right| \qquad (16)$$

$$E_{dy} = \left|S_y - S'_y\right| \qquad (17)$$

Finally, Equation (18) gives the Euclidean error E(Sx,y) between the estimated gaze point and the actual user screen coordinates:

$$E(S_{x,y}) = \sqrt{E_{dx}^{2} + E_{dy}^{2}} \qquad (18)$$

To express the accuracy of the system in degrees, the pixel errors must first be converted to millimeters. For this conversion, we multiplied the errors Edx and Edy by the pixel size of our monitor (0.29 mm). The x-axis, y-axis, and Euclidean errors in degrees are then calculated with Equations (19)–(21).
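The pixel-to-degree conversion can be sketched as below, assuming the standard relation θ = arctan(E / L) between an on-screen error E in millimeters and the viewing distance L. The constants are taken from the text (0.29 mm pixel size, roughly 700 mm viewing distance); the function names `angular_errors` and `_to_degrees` are hypothetical, and the paper’s Equations (19)–(21) are not reproduced here.

```python
import math
from typing import Tuple

PIXEL_PITCH_MM = 0.29     # monitor pixel size given in the text
VIEW_DISTANCE_MM = 700.0  # approximate user-to-screen distance L from the text


def _to_degrees(error_mm: float, distance_mm: float) -> float:
    """Visual angle subtended by an on-screen error at the given distance."""
    return math.degrees(math.atan(error_mm / distance_mm))


def angular_errors(estimated: Tuple[float, float],
                   actual: Tuple[float, float],
                   pitch_mm: float = PIXEL_PITCH_MM,
                   distance_mm: float = VIEW_DISTANCE_MM) -> Tuple[float, float, float]:
    """Return the x-axis, y-axis, and Euclidean gaze errors in degrees."""
    # Pixel deviations converted to millimetres on the screen
    edx_mm = abs(estimated[0] - actual[0]) * pitch_mm
    edy_mm = abs(estimated[1] - actual[1]) * pitch_mm
    # Euclidean error on the screen, in millimetres
    e_mm = math.hypot(edx_mm, edy_mm)
    return (_to_degrees(edx_mm, distance_mm),
            _to_degrees(edy_mm, distance_mm),
            _to_degrees(e_mm, distance_mm))
```

At 700 mm, 1° corresponds to about 12.2 mm on the screen, or roughly 42 pixels at a 0.29 mm pitch, so the sub-degree accuracy reported below means the estimated gaze point typically falls within about 42 pixels of the target.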

Eleven users participated in our system test, and the calculated accuracy of the system is presented in Table 2 and Table 3. The results show that the average error for both eyes is smaller than 1°, which is considered good among eye tracker studies. The calculated mean square error (MSE) and root mean square error (RMSE) of the system are presented in Table 4 and Table 5, respectively.
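For reference, the summary statistics can be computed from per-sample errors as in the sketch below. It assumes that MSE and RMSE are taken over per-sample angular errors (the squared error of each sample relative to zero error); the paper does not state exactly which error values enter Tables 4 and 5. The function name `error_summary` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def error_summary(errors_deg: Sequence[float]) -> Tuple[float, float, float]:
    """Mean error, MSE, and RMSE of per-sample gaze errors in degrees."""
    if not errors_deg:
        raise ValueError("no samples")
    n = len(errors_deg)
    mean = sum(errors_deg) / n
    mse = sum(e * e for e in errors_deg) / n  # mean of squared errors
    return mean, mse, math.sqrt(mse)
```

Because squaring weights large deviations more heavily, RMSE is never smaller than the mean absolute error, so a gap between the two indicates occasional large gaze errors.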

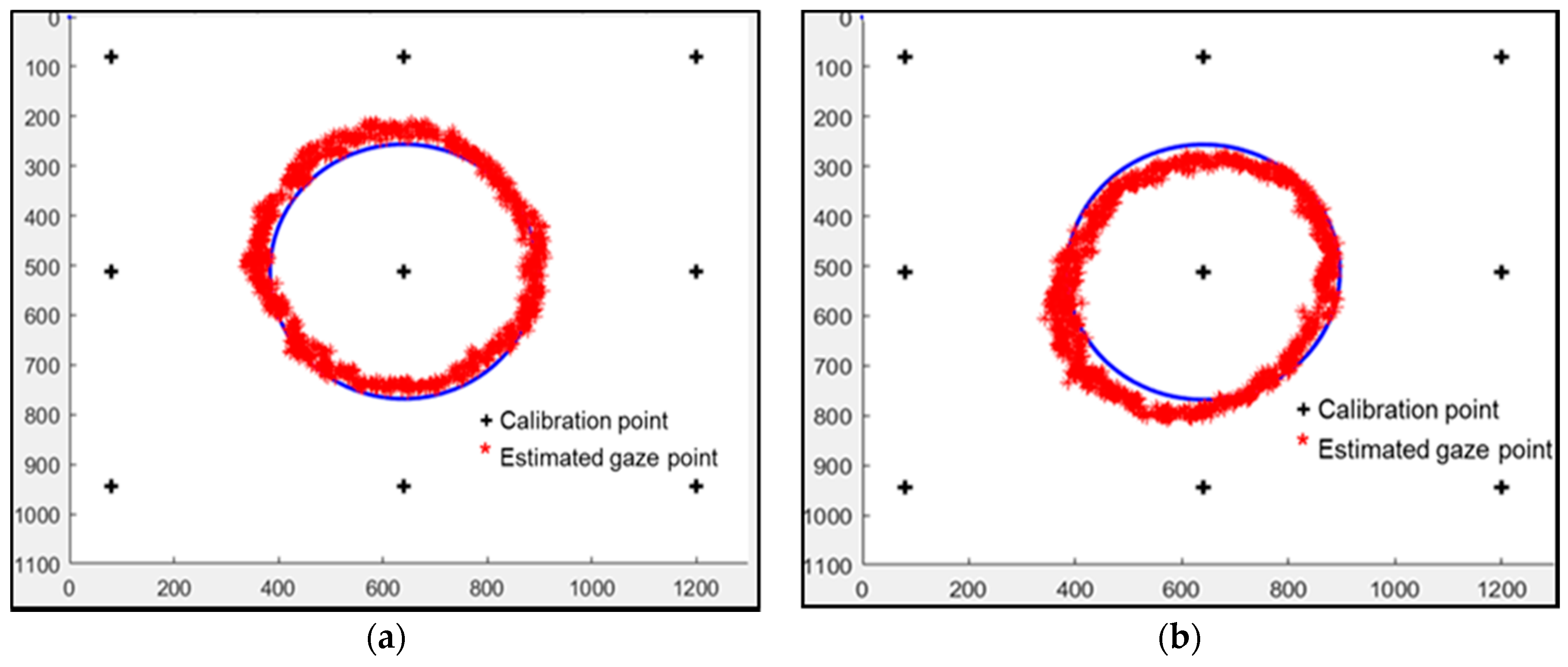

Figure 13 shows the left and right eye gaze points estimated for user 1, plotted over the stimulus signal.

4. Conclusions

In this study, we proposed a new binocular full HD resolution eye gaze tracking system using LabVIEW (LabVIEW 2015, National Instruments Corporation, Austin, TX, USA) image processing algorithms. The experimental results show that the average error of the gaze estimation system is 0.83° on a 1280 × 1024 pixel user monitor. The system operates at 40 Hz, which corresponds to approximately 280 fps for systems using 640 × 480 images. To achieve this frequency, we chose fast binary image processing algorithms: after binarization, the pupil area is cleaned up with morphological methods, and the center coordinates of the pupil and the corneal reflections are found with the weighted average technique, which is faster than elliptical and circular shape detection methods. Therefore, pupil reactions to the stimulus can be observed at short time intervals. Such a fast eye tracker system can be used in many practical applications, such as human computer interaction, aviation, medicine, robot control, production tests, and augmented reality.

The system works only within the camera’s field of view: the head movement area is 38.42 cm wide and 29.37 cm high, and the pupils and corneal reflections can be detected only within this area. This is a limitation of the system. The design was tested with 11 participants, one of whom wore contact lenses; that user’s pupils and corneal reflections were detected without problems. For eyeglass wearers, reflections of the NIR illuminator and of ambient illumination can appear on the lenses depending on the eyeglass material, so each pair of eyeglasses requires testing. Because the threshold value and the reflection size limit can be adjusted in the software, the system could handle eyeglasses.
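The head movement area quoted above is consistent with the lens field of view (30.7° horizontal, 23.7° vertical) at the roughly 700 mm viewing distance, using the pinhole relation size = 2·L·tan(FOV/2). The sketch below reproduces that arithmetic; treating the head box as exactly the camera’s view at 700 mm is an assumption, and the function name `head_box_cm` is hypothetical.

```python
import math
from typing import Tuple


def head_box_cm(distance_mm: float, hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Width and height (cm) covered by the camera at a given distance.

    Pinhole relation: size = 2 * L * tan(FOV / 2).
    """
    width_mm = 2.0 * distance_mm * math.tan(math.radians(hfov_deg) / 2.0)
    height_mm = 2.0 * distance_mm * math.tan(math.radians(vfov_deg) / 2.0)
    return width_mm / 10.0, height_mm / 10.0
```

With L = 700 mm this gives about 38.4 cm × 29.4 cm, matching the stated 38.42 cm × 29.37 cm; a longer focal length or a shorter viewing distance would shrink this area proportionally.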

In the future, we will study other methods to increase the accuracy, and we will try to implement the image processing algorithms on our FPGA frame grabber card to overcome operating system delays and increase the tracking frequency.