1. Introduction

A gyro-stabilized system uses information from a gyroscope to mechanically stabilize a camera assembly. This is achieved with a gimbal mechanism that rotates the camera. The captured images can also be used to generate a reference for autonomous tracking. Similar mechanisms are found in gun-turret control, missile guidance, drone gimbal photography, handheld cameras, etc. It is worth noting that, while specific applications may use other optical sensors or weapon systems instead of vision cameras, controlling their line of sight (LOS) remains essential [1,2,3]. In particular, controlling the LOS of a vision camera through the gimbal, using measurements from the camera itself, is a type of visual servoing problem. Some fundamental aspects of this problem have been studied [4,5,6], while many others remain open.

Locating the target of interest in optical imagery is the first step. Here, an image tracker built on image processing techniques and computer vision algorithms tracks the target’s projection in the image plane frame by frame [7,8]. The projection reflects the location of the target in the coordinate frame fixed to the camera. In other words, it reflects the deviation angle between the camera’s LOS and a vector pointing at the target. The control requirement of pointing the camera’s LOS at the target can then be expressed as bringing the target’s projection to the center of the image plane with zero steady-state error and a smooth transient response. However, the inevitable limitations of an image tracker, such as low measurement resolution and long processing and communication times, significantly affect the performance of the system.
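The link between a projection’s pixel location and the LOS deviation angle can be shown with a minimal Python sketch. It assumes an ideal pinhole camera whose principal point coincides with the image center, with the focal length expressed in pixels. The function name `deviation_angles` and the numbers in the usage line are hypothetical and are not taken from the experimental setup.

```python
import math


def deviation_angles(x_px: float, y_px: float, f_px: float):
    """Deviation angles (rad) between the optical axis (LOS) and the ray to the target.

    Assumptions (hypothetical, not from the paper): ideal pinhole camera,
    pixel coordinates measured from the image centre (principal point),
    and focal length f_px expressed in pixels.
    """
    # Horizontal deviation: ray projected onto the camera's x-z plane.
    alpha = math.atan2(x_px, f_px)
    # Vertical deviation: ray projected onto the camera's y-z plane.
    beta = math.atan2(y_px, f_px)
    return alpha, beta


if __name__ == "__main__":
    # Hypothetical numbers: 1000 px focal length, target 100 px right of centre.
    a, b = deviation_angles(100.0, 0.0, 1000.0)
    print(f"horizontal {math.degrees(a):.3f} deg, vertical {math.degrees(b):.3f} deg")
```

A centered projection (x = y = 0) gives zero deviation in both directions. This is why bringing the projection to the image center is equivalent to pointing the LOS at the target.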

Controlling the gimbal motion is the second step. Most existing works that use visual data to control the gimbal are rather simple, despite the complex characteristics of the gimbal and camera. A straightforward control approach is the proportional controller mentioned in [4,9]. Hurak et al. [8] proposed a controller based on the classical image-based pointing and tracking approach. The study considered the couplings and under-actuation of the two-axis gimbal to control the gimbal’s angular rate. Unfortunately, the stability of the resulting control system was not proven, and the complete form of the control law requires numerous measurements. The image-based tracking control approach for the gimbal camera was also adopted in [10,11]. In [10], image-based tracking control of a gimbal mounted on an unmanned aerial vehicle was compared with another classical approach, position-based tracking. The study showed that the former provided higher tracking accuracy. The latter was more robust but relied on the availability of sufficient measurements. The image-based control of a gimbal on a flying wing for wildfire tracking in [11] led to a similar observation: a noticeable deviation between the target’s position in the image and the image center appeared during the gliding descent trajectory. F. Guo et al. [12] proposed a feedback linearization control scheme with command planning for the LOS tracking problem. J. Guo et al. [13] introduced a cascade vision-based tracking control. It consists of a proportional lag compensator in the inner loop and a feedback linearization-based sliding mode controller in the outer loop. However, carrier disturbances and target movement cause large deviations in the tracking errors. T. Pham et al. [14] adopted reinforcement learning to optimally train the parameters of an adaptive proportional–integral–derivative image-based tracking controller for a two-axis gimbal system. Unfortunately, this approach is mainly suited to repetitive tasks and was only validated in simulations. It is also worth noting that the above studies deal with regulation problems. That is, the tracking target is stationary, and its projection should converge to the image center at the desired rate.

Tracking a moving target, i.e., visual servoing, was considered by X. Liu et al. [15]. They combined a model predictive controller with a disturbance observer to reduce the number of required measurements and to achieve both disturbance rejection and good tracking performance. However, the model predictive control technique entails a high computational load and high complexity, which makes it difficult to implement. In addition to the constraints in image streaming, the gimbal’s dynamics, vehicle maneuvers, and other disturbances also deserve attention. In their later studies [16,17], different control approaches were proposed for the visual servoing of gyro-stabilized systems. In [16], a time-delay disturbance observer-based sampled-data control was developed to deal with the measurement delay caused by the acquisition and processing of image information. On the other hand, an active disturbance compensation and variable gain function technique was proposed in [17] for the two-axis system with output constraints and disturbances. Notably, all of these studies [15,16,17] dealt with kinematic control only. That is, the control inputs are the angular rates of the gimbal channels, and the channel dynamics are not considered. Thus, all disturbances and constraints act as matched disturbances. In contrast, the complete representation of such systems contains both matched and unmatched disturbances, which makes the problem more challenging.

Regarding the dynamic control of gimbal systems, classical control strategies use an additional rate control loop to stabilize the LOS and isolate it from the above-mentioned influences. Hence, a cascade control arrangement is usually used [3,18]. Recent works rely on robust control techniques so that target tracking and disturbance rejection are achieved simultaneously. Sliding mode control (SMC) [19,20], H∞ control [21], and active disturbance rejection control [22] are popular schemes owing to their robustness and effectiveness. Nevertheless, a preferred approach for controlling a gimbal system is to combine a disturbance observer with a robust control law, because this enables active disturbance rejection. Li et al. [23] combined an integral SMC with a reduced-order cascade extended-state observer. A disturbance/uncertainty estimator-based integral SMC was introduced by Kurkcu et al. [24]. The most recent studies exploit the robustness of SMC to design both the observer and the controller. Higher-order sliding mode observers were combined with a terminal SMC [19,25] or a super-twisting SMC [26]. Finite-time stability, i.e., convergence of the errors within a finite settling time, was targeted and achieved. However, unmatched disturbances cannot be compensated, and the so-called chattering effect caused by high-frequency switching control still appears.

Therefore, this paper introduces a novel method for the finite-time visual servoing problem of a gyro-stabilized surveillance system. The proposed control system consists of two main components. First, a single observer estimates two kinds of disturbance: the unmatched disturbances in the dynamics of the tracking target’s projection and the matched disturbances in the gimbal dynamics. Then, a control law is designed to bring the projection to the center of the image plane and ensure the finite-time stability of the system, regardless of the target motion and the disturbances. Experiments are conducted for validation, and comparisons with available approaches show the superiority of the proposed control system. In short, the contributions of the paper can be summarized as follows:

- An observer is proposed to estimate the states and disturbances of the gyro-stabilized surveillance system.
- A new visual servoing scheme ensures the boundedness and finite-time stability of the visual servoing system.
- A proof of the finite-time stability of the closed-loop system is provided, and experimental results evaluate the effectiveness of the proposed system.

Accordingly, the remainder of this paper is organized as follows. The complete model of the system with a vision camera is introduced in Section 2. The observer is presented in Section 3. The control law design and the stability proof are given in Section 4. Experimental studies are conducted and their results are discussed in Section 5, where the proposed system is compared with others. Finally, conclusions are drawn.

2. System Modeling

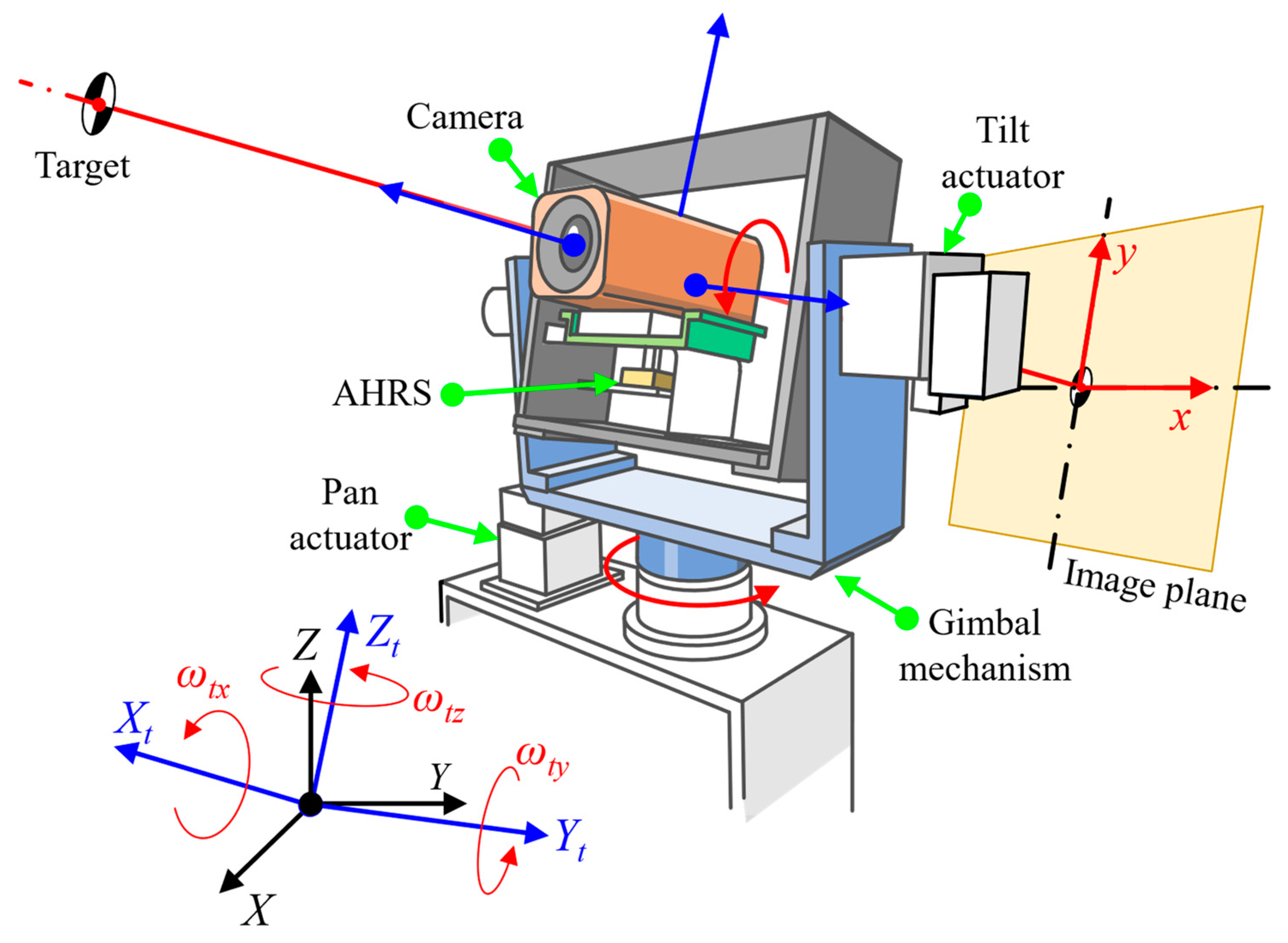

The structure of the gyro-stabilized system to be controlled is shown in Figure 1. A complete representation of the system, with the dynamics of a two-axis gimbal and the kinematics of a projection on the image plane, is as follows [27]:

Vector indicates the location on the image plane of the projection of a given target in the 3-dimensional camera frame. is the vector of the angular rates about the tilt and pan axes of the inner gimbal, respectively. Meanwhile, is the rate of rotation about the roll axis, which cannot be controlled by the two-axis gimbal mechanism. The instantaneous linear velocity of the camera frame origin is given by . is the command vector for the gimbal’s actuators. Additionally, and are the vectors of kinematic and dynamic disturbances, respectively. In detail, represents the effect of the unpredictable movement of the tracking target on the location of its projection. contains external torques acting on the gimbal mechanism due to the motion of the carrying vehicle, the mass imbalance of the gimbal, the nonlinear friction between channels, etc. The time argument is omitted in the equations for the sake of simplicity.

Meanwhile, from the so-called pinhole camera model, the interaction matrices between the system motions and the projected point’s velocity,

,

, and

are written as follows:

with

being the camera’s focal length and

Zc being the distance between the camera center and the tracking target. On the other hand, the system matrices of the gimbal mechanism are given by the following:

with

,

,

, and

being constant values and

being the relative angle between the inner and outer channels.

This relative angle is restricted by mechanical locks so that the gimbal lock phenomenon is avoided.
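The interaction matrices of (2) are not reproduced here. As a stand-in, the following Python sketch uses the classical point-feature interaction matrix from the visual servoing literature, split into a linear-velocity block, a tilt/pan block, and a roll column. The split, the assignment of tilt and pan to the camera’s x and y rotation axes, and all names are assumptions. Signs and axis conventions may differ from those in (2).

```python
import numpy as np


def interaction_matrices(u, v, f, z_c):
    """Classical point-feature interaction matrix, split by motion type.

    (u, v): image-plane coordinates of the projection, same unit as f.
    f: focal length; z_c: depth of the target along the optical axis.
    Returns (L_lin, L_tp, L_roll) such that, for a static target,
        d[u, v]/dt = L_lin @ v_c + L_tp @ [w_x, w_y] + L_roll[:, 0] * w_z,
    where (v_c, w) is the camera velocity expressed in the camera frame.
    Hypothetical axis assignment: w_x ~ tilt, w_y ~ pan, w_z ~ roll.
    """
    # Linear velocity block (depends on the depth z_c).
    L_lin = np.array([[-f / z_c, 0.0, u / z_c],
                      [0.0, -f / z_c, v / z_c]])
    # Tilt/pan block (independent of depth).
    L_tp = np.array([[u * v / f, -(f + u**2 / f)],
                     [f + v**2 / f, -u * v / f]])
    # Roll column (independent of depth).
    L_roll = np.array([[v], [-u]])
    return L_lin, L_tp, L_roll


L_lin, L_tp, L_roll = interaction_matrices(u=1.5, v=-0.7, f=4.0, z_c=3.0)
print(np.linalg.det(L_tp))  # equals f**2 + u**2 + v**2 under this convention
```

Under this convention, the tilt/pan block has determinant f^2 + u^2 + v^2 > 0. It is therefore invertible everywhere in the image plane, which would make such a block a natural candidate for inversion in a control law.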

Moreover, let the system model (1) be rewritten as follows:

with

Oₙ and Iₙ denote the n-by-n zero matrix and identity matrix, respectively. Then, the system has the following properties:

- The system satisfies the observer matching condition [28]:

- The nonlinear term satisfies the local Lipschitz condition in a region Ω containing the origin with respect to X. That is, there exists a positive constant such that, for any X1 and X2 in Ω, the following holds [29]:
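The local Lipschitz condition can be probed numerically. The sketch below samples pairs of points in a box-shaped region and returns the largest observed ratio. The nonlinear term of the model is not reproduced here, so an elementwise sine is used as a hypothetical stand-in. Sampling only yields a lower bound on the true constant: it can reveal that an assumed constant is too small, but it cannot certify one.

```python
import numpy as np


def estimate_lipschitz(func, lower, upper, n_pairs=20000, seed=0):
    """Sampled lower bound on the Lipschitz constant of `func` over a box.

    `func` maps an (N, n) array of points to an (N, m) array, row by row.
    Random pairs X1, X2 are drawn uniformly in the box bounded by `lower`
    and `upper`, and the largest ratio ||func(X1) - func(X2)|| / ||X1 - X2||
    is returned. The true constant on the box is at least this value.
    """
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    x1 = rng.uniform(lower, upper, size=(n_pairs, lower.size))
    x2 = rng.uniform(lower, upper, size=(n_pairs, lower.size))
    num = np.linalg.norm(func(x1) - func(x2), axis=1)
    den = np.linalg.norm(x1 - x2, axis=1)
    keep = den > 1e-12  # ignore (near-)coincident pairs
    return float(np.max(num[keep] / den[keep]))


# Hypothetical stand-in nonlinearity: elementwise sine on the box [-0.5, 0.5]^2.
estimate = estimate_lipschitz(np.sin, [-0.5, -0.5], [0.5, 0.5])
print(f"sampled Lipschitz estimate: {estimate:.4f}")
```

For the elementwise sine, every sampled ratio lies between cos(0.5) ≈ 0.878 and 1. This is because each component’s derivative, cos(·), stays in that range on this box.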

3. State and Input Observer Design

To estimate the value of

, which consists of both unmatched and matched disturbances acting on the system, an observer is proposed whose states track the system states, so that the disturbances are subsequently estimated asymptotically. Assume that the disturbances are bounded and generated by a vector of exogenous signals

such that

where

is a diagonal positive definite matrix. Then, the observer is proposed in the form

where

and

are the estimated states and disturbances, respectively.

is the observer output. The matrices

and

and the positive scalar

are the gains of the observer.

Theorem 1. The gain of the observer is bounded by a positive if there exist the matrices , and and the positive scalar such that

holds.

Proof. A Lyapunov function candidate is considered as follows:

where

and

are the observation errors. Taking the time derivative of

results in

Applying Young’s inequality and the inequality given in (7) to the non-quadratic terms of

yields the following:

Therefore, the derivative in (12) reduces to

, where

Additionally, consider the following inequality:

By substituting (14) into (15) and applying the Schur complement, inequality (10) is derived. Since

, (15) still holds if

is replaced with

. The resulting inequality is equivalent to the boundedness of the

gain of the observer being as follows [

30]:

One can also see from (16) that the smallest upper bound of the gain is obtained by minimizing in (10). Hence, the influence of is also minimized, and the estimated states and disturbances closely follow their actual values. □
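The Schur complement step used to obtain (10) relies on a standard lemma. For a symmetric block matrix M = [[A, B], [B^T, C]], M is positive definite if and only if both C and A − B C^(−1) B^T are positive definite. For negative definiteness, the lemma is applied to −M. The small NumPy check below illustrates this equivalence with hypothetical matrices. It does not reproduce LMI (10) itself.

```python
import numpy as np


def is_pos_def(M, tol=1e-10):
    """True if the symmetric matrix M is positive definite (all eigenvalues > tol)."""
    return bool(np.all(np.linalg.eigvalsh(M) > tol))


def schur_pd_check(A, B, C):
    """Test M = [[A, B], [B^T, C]] > 0 directly and via the Schur complement.

    Returns (direct, via_schur). The lemma states that M > 0 holds if and only if
    C > 0 and A - B C^{-1} B^T > 0, so both entries should always agree.
    """
    M = np.block([[A, B], [B.T, C]])
    direct = is_pos_def(M)
    # `and` short-circuits, so C is only inverted (via solve) when C > 0.
    via_schur = is_pos_def(C) and is_pos_def(A - B @ np.linalg.solve(C, B.T))
    return direct, via_schur


A = np.array([[4.0, 1.0], [1.0, 3.0]])
B = np.array([[1.0], [0.5]])
C = np.array([[2.0]])
print(schur_pd_check(A, B, C))        # (True, True)
print(schur_pd_check(A, 3.0 * B, C))  # (False, False)
```

The lemma lets a quadratic matrix condition with an inverse be rewritten as a single linear matrix inequality, which is how (10) becomes amenable to numerical solvers.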

4. Finite-Time Visual Servoing Controller Design

Recall that the control objective is to bring the target’s projection to the center of the image frame in a finite time regardless of its initial location. This objective is reflected in two control errors defined by

where

and

is a diagonal positive definite matrix such that

is Hurwitz. Hence, the convergence of

leads to the convergence of both

and

.

is the vector of filtered desired angular rates about the tilt and pan axes of the inner gimbal and is designed as follows:

where

is a positive scalar, and

is the inverse of

from (2). The controller gains

so that

is the vector of estimated disturbances that correspond to those in the image plane.

and

are diagonal positive definite matrices.

and the operation

is defined by

with

and

being the signum function of

.
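In finite-time control, the operation defined above is commonly the elementwise map sig^α(x) = |x|^α sign(x), with 0 < α < 1. Assuming that form, because the exact definition is not recoverable from the text, a NumPy sketch is:

```python
import numpy as np


def sig(x, alpha):
    """Elementwise sig^alpha(x) = |x|**alpha * sign(x) (assumed definition).

    For 0 < alpha < 1 the map is continuous at x = 0, unlike sign(x), yet its
    slope grows without bound as x -> 0; finite-time designs exploit this.
    """
    x = np.asarray(x, dtype=float)
    return np.abs(x) ** alpha * np.sign(x)


print(sig([-4.0, 0.0, 9.0], 0.5))
```

With alpha = 1 the map reduces to the identity, and as alpha approaches 0 it approaches sign(x). Intermediate exponents trade off convergence speed against the chattering associated with discontinuous switching.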

The dynamics of the control errors are then written as follows:

From (21), the control law (22) is designed to ensure the convergence of both control errors:

Here,

,

is a positive scalar,

and

are diagonal positive definite matrices.

Theorem 2. When the control law (22) is applied to the gyro-stabilized surveillance system whose dynamics are represented by (1), the finite-time stability of the visual servoing system is guaranteed for a proper choice of the controller gains.

Proof. The finite-time convergence of the proposed system is derived from the following Lyapunov function candidate:

With the dynamics (21) and the proposed control law (22), the time derivative of

is obtained as follows:

Based on Young’s inequality for products,

Thus, the time derivative of the Lyapunov function candidate can be written as follows:

where

One can see that only the errors of the observer and filter remain in the residual term

. The controller gains are then chosen such that

and

in (27) are positive. Based on Theorem 2 in [31], there exists a scalar

such that the control errors converge to the following region:

in a finite time

given by

which depends on

and

. In other words, the finite-time stability of the proposed control system is preserved. □
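The settling-time argument can be illustrated with the classical finite-time lemma, used here as a simplified stand-in for Theorem 2 in [31]. If dV/dt ≤ −cV^α with c > 0 and 0 < α < 1, then V reaches zero no later than T = V(0)^(1−α)/(c(1−α)). This is the special case with no residual term. With a nonzero residual, the errors only converge to a bounded region, as stated above. The sketch compares the bound with a forward-Euler solution of the equality case; the function names and numbers are hypothetical.

```python
def settling_time_bound(v0, c, alpha):
    """Bound T = V0**(1 - alpha) / (c * (1 - alpha)) for dV/dt <= -c * V**alpha."""
    if not (c > 0 and 0 < alpha < 1 and v0 >= 0):
        raise ValueError("need c > 0, 0 < alpha < 1 and V0 >= 0")
    return v0 ** (1 - alpha) / (c * (1 - alpha))


def simulate_reach_time(v0, c, alpha, dt=1e-4, t_max=100.0):
    """Forward-Euler solution of dV/dt = -c * V**alpha; returns when V first hits 0."""
    v, t = float(v0), 0.0
    while v > 0.0 and t < t_max:
        v = max(v - dt * c * v ** alpha, 0.0)  # V is nonnegative, so clip at zero
        t += dt
    return t


T_bound = settling_time_bound(4.0, 2.0, 0.5)
T_sim = simulate_reach_time(4.0, 2.0, 0.5)
print(f"bound {T_bound:.3f} s, simulated {T_sim:.3f} s")
```

For the equality case the bound is attained, so the simulated reach time matches it up to discretization error. The bound shrinks as the gain c grows or as the initial value V(0) decreases.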

On another note, the integral in (17) guarantees a zero steady-state error of the projection. However, its accumulation can lead to a large overshoot and a long settling time. Therefore, an approximate integral

and the corresponding modified control error

are defined as follows [32,33]:

where

is a positive scalar and

denotes the saturation function. One can see that inside the boundary of the saturation,

, above the positive boundary,

, and otherwise

. In other words, the integral action is fully active only inside the boundary of the saturation function.
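One plausible reading of the approximate integral, consistent with the three-case description above, is an integrator driven by phi·sat(e/phi). Such an integrator integrates e fully when |e| ≤ phi and accumulates at the constant rate ±phi otherwise. The exact expressions are not reproduced here, so this form, the names `sat` and `approx_integral`, and the forward-Euler discretization are all assumptions.

```python
import numpy as np


def sat(x):
    """Unit saturation: clip x to [-1, 1]."""
    return np.clip(x, -1.0, 1.0)


def approx_integral(errors, dt, phi):
    """Forward-Euler integral of phi * sat(e / phi) (hypothetical form).

    |e| <= phi : integrand equals e, i.e. full integral action;
    e >  phi   : integrand is +phi;  e < -phi : integrand is -phi.
    Clamping the integrand limits wind-up during large transients.
    """
    z = 0.0
    history = []
    for e in errors:
        z += dt * phi * float(sat(e / phi))
        history.append(z)
    return np.array(history)


# A large constant error accumulates at rate phi instead of rate e.
print(approx_integral([10.0] * 5, dt=0.1, phi=1.0)[-1])
```

Compared with a plain integral of the same error, which would reach 5.0 in this example, the clamped version accumulates much less during the large initial error. This illustrates how overshoot from integral wind-up is reduced.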

5. Experimental Studies

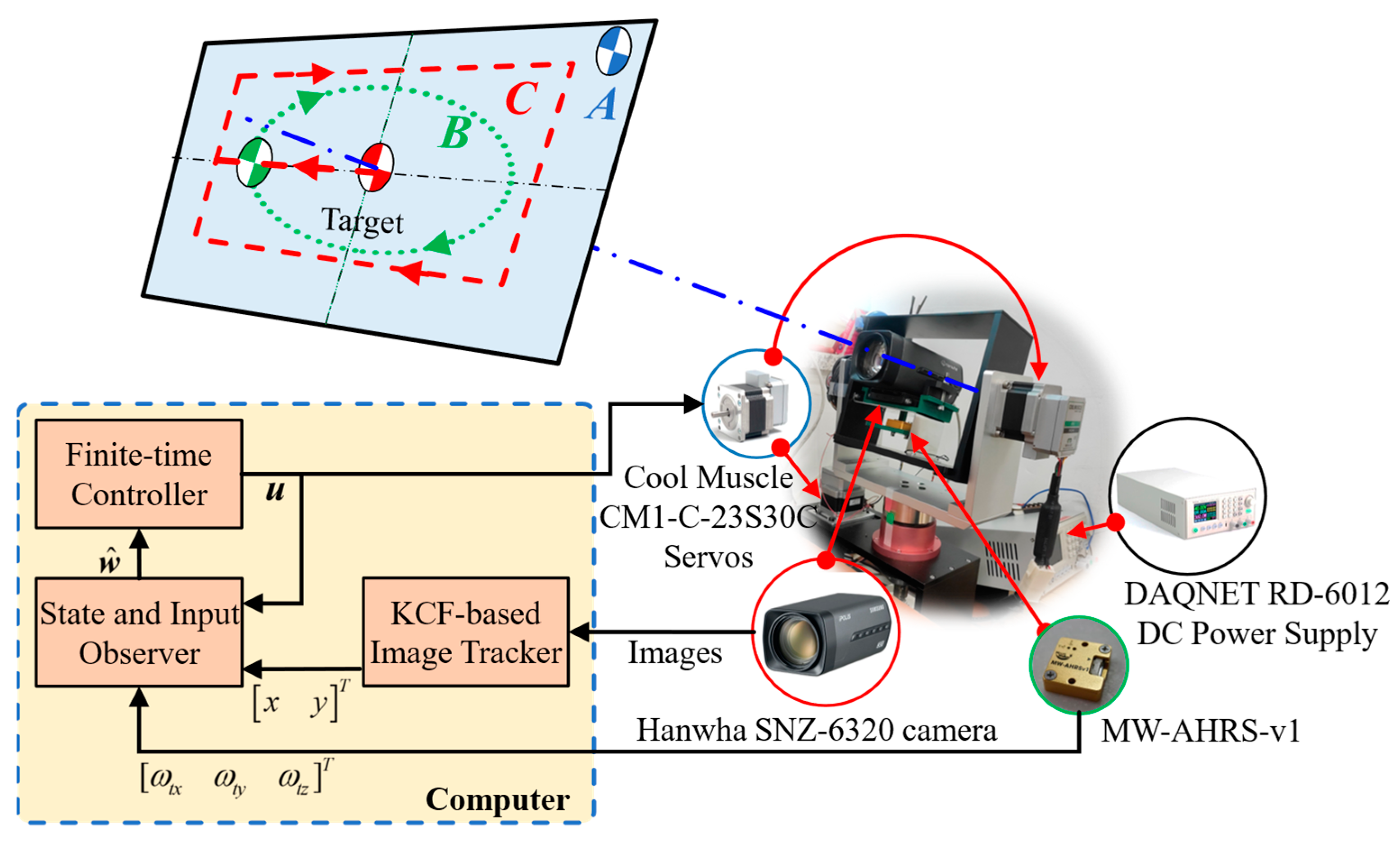

The experiments were conducted to validate the proposed control system. Figure 2 depicts the experimental apparatus used with the proposed control scheme, together with the experiment scenarios. The experimental system consists of a two-channel gimbal prototype that carries a Hanwha SNZ-6320 camera on its inner channel. Given a captured image that contains the tracked object, an image tracker returns the location of the object’s projection on the image. The tracker is based on the kernelized correlation filter (KCF) algorithm [34], working with the histogram of oriented gradients (HOG) descriptor and a Gaussian kernel. The two channels are rotated by two CM1-C-23S30C servo systems from Muscle Corporation. The inner channel is directly driven by its servo, while the outer one uses a belt drive with a gear ratio of 3:1. The orientation of the camera and the inner gimbal is measured by an attitude and heading reference system (AHRS), the MW-AHRS v1. The image tracker, observer, and controller were executed in Matlab/Simulink on a desktop computer that communicated with the experimental apparatus. The observer and controller gains are listed in Table 1.

The experiments involved three scenarios, as illustrated in Figure 2. In scenario A, the surveillance system had to track a stationary target whose projection was initially away from the center of the image plane. In the other two scenarios, the system was required to track a moving target, i.e., a visual servoing problem. The different characteristics of these scenarios help validate different aspects of the proposed control system. In these experiments, unmatched disturbances arise from the unpredictable movement of the tracking target and from the constraints of the tracker, such as low-resolution measurements and long processing and communication times. Matched disturbances come from the mass imbalance of the inner gimbal, nonlinear friction, and the actuators’ dead zone and saturation. For comparison, three other controllers were also tested: the simple proportional controller from [2], the image-based pointing controller proposed by Hurak et al. [8], and the vision-based backstepping control from [27]. The results of scenarios A, B, and C are shown in Figure 3, Figure 4 and Figure 5, respectively. Note that the variables x and y are displayed in [pixel] rather than [m], to correspond to the images displayed in the user interface. Additionally, the control action was activated from the 5th [s] of each test.

5.1. Experiment A

The initial location of the target’s projection was at

[pixel] in the image plane. The tilt angle of the inner channel that carries the camera was initialized at 04 [deg]. The tracking path depicted in Figure 3a shows that the backstepping control system struggled to bring the projection to the image center. The proportional control system was able to do so but had difficulty following a straight path to the image center. The main reason is that all three rotations of the gimbal mechanism interact kinematically with each direction of the projection’s movement and act as additive disturbances, which curves the tracking path. Meanwhile, both the proposed system and Hurak’s system incorporate the interaction matrices into the control law. Thus, their projections were able to follow straight paths, which are the shortest paths from the initial location to the image center. This also indicates that the responses in both coordinates converge smoothly to zero at the same time without any overshoot, as clearly seen in Figure 3b,d. Additionally, the control signals in Figure 3c suggest that the proposed control law requires less effort in the tilt direction than the others. After the settling time, all controllers stabilize the system at a steady state. However, the backstepping controller failed to eliminate the steady-state error, while the others were able to correct it. This is because the backstepping control system is only input-to-state stable, i.e., its state is bounded by a function of the size of the input disturbances acting on the gimbal camera.

5.2. Experiment B

In this experiment, the target’s projection was initially located at

[pixel] in the image plane. From the 5th [s], the target moved continuously along an ellipse in a vertical plane facing the camera at a distance of 600 [mm]. The horizontal and vertical motions of the target were sinusoidal, with a frequency of 0.01 [Hz] and amplitudes of 410 [mm] and 210 [mm], respectively. The tracking paths in Figure 4a show that all the controllers quickly brought the target’s projection to the center of the image plane and then kept it there despite the motion of the target. However, the large variations around the image center in Figure 4a show that the comparison controllers could not track the moving target effectively. In particular, in the x-coordinate, the proportional control system and Hurak’s system show the largest variations. In the y-coordinate, the proportional control and the backstepping control systems have the largest tracking errors, followed by the control system designed by Hurak et al. In contrast, the proposed control system guarantees the boundedness of the control errors in finite time. This results in small variations in the projection’s location in both coordinates during the test, as can easily be seen in Figure 4a,b. The control inputs in Figure 4c show the adjustments made by the proposed controller. Since the target followed an ellipse, the tilt and pan rotations of the inner gimbal also followed sinusoidal trajectories, as seen in Figure 4d, so that the LOS of the carried camera could track the target.
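The observation that the gimbal angles follow sinusoid-like trajectories in Experiment B can be checked with simple geometry. The sketch assumes the ellipse x = 410 sin(2πft), y = 210 cos(2πft) [mm] in a plane 600 [mm] from the rotation center, with pan applied before tilt and all gimbal offsets ignored. The relative phase of the two motions is not specified, so the sine/cosine choice here is hypothetical.

```python
import numpy as np


def pointing_angles(t, dist=600.0, amp_x=410.0, amp_y=210.0, freq=0.01):
    """Pan/tilt angles (deg) needed to point at a target on an ellipse.

    Hypothetical geometry: target at (x, y) in a plane at distance `dist` (mm),
    x = amp_x*sin(2*pi*f*t), y = amp_y*cos(2*pi*f*t); rotation centre at the
    origin; pan (outer) applied before tilt (inner); gimbal offsets ignored.
    """
    w = 2.0 * np.pi * freq
    x = amp_x * np.sin(w * t)
    y = amp_y * np.cos(w * t)
    pan = np.degrees(np.arctan2(x, dist))
    tilt = np.degrees(np.arctan2(y, np.hypot(x, dist)))
    return pan, tilt


t = np.linspace(0.0, 100.0, 10001)  # one full period at 0.01 Hz
pan, tilt = pointing_angles(t)
print(f"peak pan {pan.max():.2f} deg, peak tilt {tilt.max():.2f} deg")
```

Under these assumptions, the peak pan and tilt angles are arctan(410/600) ≈ 34.3° and arctan(210/600) ≈ 19.3°. Because of the arctangent, the angle histories are close to, but not exactly, sinusoidal.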

5.3. Experiment C

For this test, the target moved in the same plane as in the previous scenario. It started from the intersection point of the camera’s LOS and this plane, so its projection was initially at the center of the image. The target followed a rectangular trajectory with a total distance of 480 [mm] horizontally and 250 [mm] vertically. This required the inner gimbal to rotate along trapezoidal trajectories, as seen in Figure 5d. The time response in the x-coordinate of the image plane shows that the backstepping control achieved the smallest tracking error in the first half of the test. However, it then required a lot of adjustment and produced large vibrations in the second half of the test. It also performed the worst in the y-coordinate. The proportional approach also led to significant errors in the image plane. In particular, the camera’s LOS visibly lagged behind the target, since this simple control strategy cannot effectively compensate for nonlinearities and disturbances in the system. Hurak’s system and the proposed one were quite comparable in the x-coordinate, but the latter performed better in the other direction.

For quantitative evaluation, the root-mean-square errors (RMSEs) of the projection were computed and are listed in Table 2. The results of Experiment A suggest that the proposed system is only the second best. However, the time responses in Figure 3b indicate that these larger RMSE values are due to the longer rise time, especially in the x-coordinate, even though the settling times are almost the same. This is caused by the lower peak velocities of the gimbal during the transient period in the proposed system, which is favorable for the image-tracking algorithm. Similarly, in Experiments B and C, the proposed system achieves a significantly better RMSE in the y-coordinate. In the other coordinate, however, it is only the third best because of the long transient period in that coordinate. If the transient period at the beginning of Experiment B is neglected, i.e., in the zoomed-in view in Figure 4b, the RMSE of the proposed system in the x-coordinate is 0.1107 [pixel]. This is only slightly higher than the 0.0934 [pixel] of the backstepping control system. In Experiment C, apart from the transient period, the proposed system achieves the smallest steady-state error. Overall, the proposed controller performs consistently and is expected to outperform the others. On the other hand, the proposed control law is more complicated than the comparative controls, although it does not need more measurements than the others. The results of the three experiments also reveal that the proposed system requires more control adjustments and, accordingly, more control effort. This is especially evident in comparison with the proportional control and the control proposed by Hurak et al. Finally, although the proposed system achieves the smallest steady-state errors in most cases, only finite-time boundedness is guaranteed, not asymptotic stability.
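The RMSE values in Table 2 and the recomputation that neglects the initial transient presumably follow the usual definition RMSE = sqrt(mean(e²)). A minimal sketch follows. The time-window arguments are a hypothetical way of excluding the transient, and the signal is synthetic rather than experimental data.

```python
import numpy as np


def rmse(errors, t=None, t_start=None):
    """Root-mean-square of a tracking-error signal, e.g. projection x in [pixel].

    If both t and t_start are given, only samples with t >= t_start are used,
    which is one way to exclude an initial transient (hypothetical interface).
    """
    e = np.asarray(errors, dtype=float)
    if t is not None and t_start is not None:
        e = e[np.asarray(t) >= t_start]
    if e.size == 0:
        raise ValueError("no samples in the evaluation window")
    return float(np.sqrt(np.mean(e ** 2)))


# Synthetic signal: large initial transient, then small steady-state error.
t = np.arange(6)
err = [50.0, 20.0, 1.0, -1.0, 1.0, -1.0]
print(rmse(err), rmse(err, t, t_start=2))
```

Because the RMSE squares the errors, a short but large transient can dominate the whole-test value. This is why excluding the transient can change the ranking of controllers in one coordinate.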