1. Introduction

We are going to study the consistency of special complexity penalized least squares estimators for noisy observations of finite-dimensional signals on multi-dimensional domains, in particular, of images. The estimators discussed in the present paper are based on partitioning combined with piecewise smooth approximation. In this framework, consistency is proven and convergence rates are derived in

. Finally, the abstract results are applied to a couple of relevant examples, including popular methods, like interval, wedgelet or related partitions, as well as Delaunay triangulations.

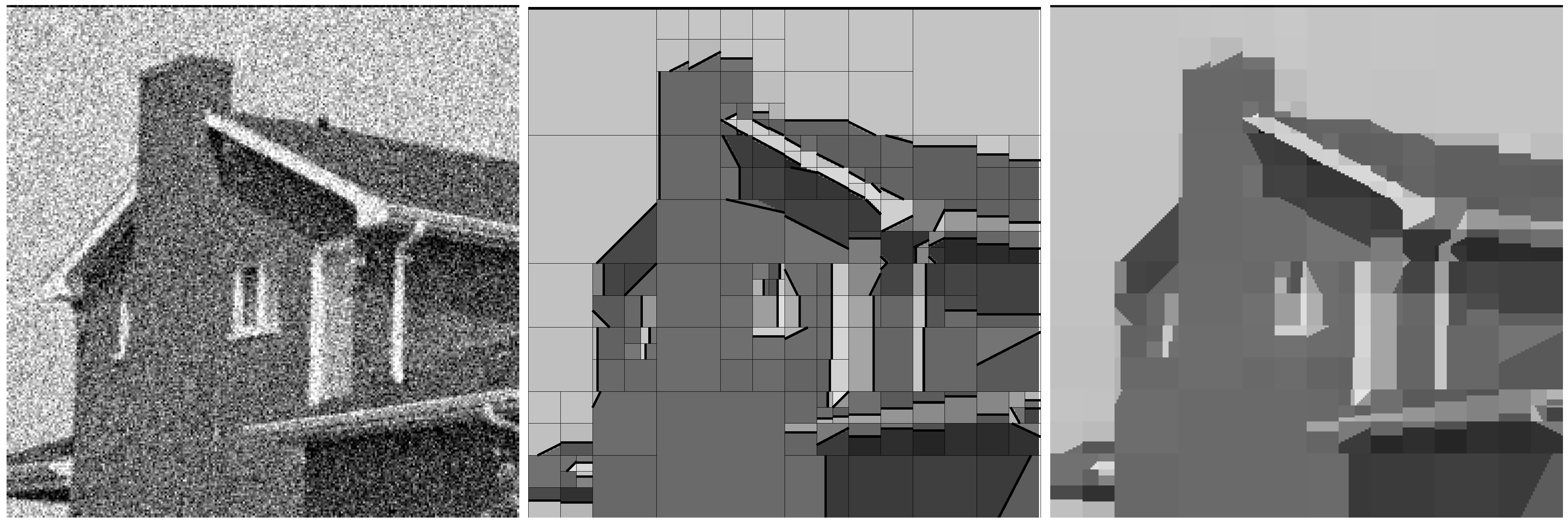

Figure 1 illustrates a typical wedgelet representation of a noisy image.

Figure 1.

A noisy image (left) and (right) a fairly rough wedgelet representation for ; the (middle) picture also shows the boundaries of the smoothness regions.

Figure 1.

A noisy image (left) and (right) a fairly rough wedgelet representation for ; the (middle) picture also shows the boundaries of the smoothness regions.

Consistency is a strong indication that an estimation procedure is meaningful. Moreover, it allows for structural insight, since a sequence of discrete estimation procedures is embedded into a common continuous setting and the quantitative behavior of estimators can be compared. It is frequently used as a substitute or approximation for missing or vague knowledge in the real finite sample situation. Plainly, one must be aware of various shortcomings and should not rely on asymptotics in case of a small sample size. Nevertheless, consistency is a broadly accepted justification of statistical methods. Convergence rates are of particular importance, since they indicate the quality of discrete estimates or approximations and allow for comparison of different methods.

Observations or data will be governed by a simple regression model with additive white noise: Let

be a finite discrete signal domain, interpreted as the discretization of the continuous domain,

. Data,

, are available for the discrete domains and generated by the model:

where

is a discretization of an original or “true” signal,

f, on

and

is white sub-Gaussian noise.

The present approach is based on a partitioning of the discrete signal domain into regions on each of which a smooth approximation of noisy data is performed. The choice of a particular partition is obtained by a complexity penalized least squares estimation, dependent on the data. Between the regions, sharp breaks of intensity may happen, which allow for edge-preserving piecewise smoothing. In one dimension, a natural way to model jumps in signals is to consider piecewise regular functions. This naturally leads to representations based on partitions consisting of intervals. The number of intervals on a discrete line of length, n, is of the polynomial order, .

In more dimensions, however, the definition of elementary fragments is much more involved. For example, in a discrete square of side-length, n, the number of all subregions is of the exponential order . When dealing with images, one of the difficulties consists in constructing reduced sets of fragments, which, at the same time, take into account the geometry of images and lead to computationally feasible algorithms for the computation of estimators.

The estimators adopted here are minimal points of complexity penalized least squares functionals: If

is a sample and

a tentative representation of

y, the functional:

has to be minimized in

x given

y; the penalty,

, is the number of subdomains into which the entire domain is divided and on which

x is smooth in a sense to be made precise by the choice of suitable function spaces (see

Section 2.1 and

Section 5);

γ is a parameter that reflects the tradeoff between the quadratic error and the size of the partition.

Due to the non-convexity of the

-type penalty, one has to solve hard optimization problems in general. If all possible partitions of the signal domain are admitted, such optimization problems are not computationally feasible. A popular attempt to circumvent this nuisance is simulated annealing; see, for instance, the seminal paper [

1]. This paper had a considerable impact on imaging; the authors transferred models from statistical physics to image analysis as prior distributions in the framework of Bayesian statistics. This approach was intimately connected with Markov Chain Monte Carlo methods, like Metropolis Sampling and Simulated Annealing [

2].

On the other hand, transferring spatial complexity to time complexity, like in such metaheuristics, does not remove the basic problem; it rather transforms it. Such algorithms are not guaranteed to find the optimum or even a satisfactory near-optimal solution [

2], Section 6.2. All metaheuristics will eventually encounter problems on which they perform poorly.

Moreover, if the number of partitions grows, at least, exponentially, it is difficult to derive useful uniform bounds on the projections of noise onto the subspaces induced by the partitions. Reducing the search space drastically allows the designing of exact and fast algorithms. Such a reduction basically amounts to restrictions on admissible partitions of the signal domain. There are various suggestions, some of them mentioned initially.

In one dimension, regression onto piecewise constant functions was proposed by the legendary [

3] who called respective representations regressograms. The Functional (2) is by some (including the authors) referred to as the Potts functional. It was introduced in [

4] as a generalization of the well-known Ising model [

5] from statistical physics from two or more spins. It was suggested by [

6] and penalizes the length of contours between regions of constant spins. In fact, in one dimension, a partition,

, into, say,

k intervals on which the signal is constant admits

jumps and, therefore, has contour-length,

.

The one-dimensional Potts model for signals was studied in detail in a series of theses and articles; see [

7,

8,

9,

10,

11,

12,

13,

14] Consistency was first addressed in [

10] and, later on, exhaustively treated in [

15,

16]. Partitions there consist of intervals. Our study of the multi-dimensional case started with the thesis [

8]; see also [

17].

In two or more dimensions, the model (2) differs substantially from the classical Potts model. The latter penalizes the length of contours—locations of intensity breaks—whereas (2) penalizes the number of regions. This allows, for instance, good performance on filamentous structures, albeit having long borders compared to their area.

Let us give an informal introduction into the setting. The aim is to estimate a function, f, on the d-dimensional unit cube, , from discrete data. To this end, and f are discretized to cubic grids, , , and functions, , on . For each n, data, , , is available, i.e., noisy observations of the .

In this paper, we prove almost sure convergence of the quadratic error associated with complexity penalized least squares estimators

, which are minimal points of functionals of the form (2) (see

Section 2.2). Note that the partition,

, is chosen among a suitable class of admissible partitions. Moreover, we derive almost sure convergence rates of quadratic errors, whenever a decay rate of the approximation errors is assumed in addition.

We are faced with three kinds of error: The error caused by noise, the approximation and the discretization error. Noise is essentially controlled regardless of the specific form of f. For the approximation and the discretization error, special assumptions on the function classes in question are needed.

The results presented here are closely related to—but differ from—those obtained by the classical model selection theory developed, for instance, in [

18,

19]. In fact, classical model selection works within the minimax setting, where the quadratic risk,

, is controlled. Let us stress that neither of both results directly implies the other.

Due to the approximation error term, there are deep connections to approximation theory. In particular, when dealing with piecewise regular images, non-linear approximation rates obtained by wavelet shrinkage methods are known to be suboptimal, as discussed in [

20,

21]. In the last decade, the challenging problem to improve upon wavelets has been addressed in very different directions.

The search for a good paradigm for detecting and representing curvilinear discontinuities of bivariate functions remains a fundamental issue in image analysis. Ideally, an efficient representation should use atomic decompositions, which are local in space (like wavelets), but also possess appropriate directional properties (unlike wavelets). One of the most prominent examples is given by curvelet representations, which are based on multiscale directional filtering combined with anisotropic scaling. [

22] proved that thresholding of curvelet coefficients provides estimators, which yield the minimax convergence rate up to a logarithmic factor for piecewise

functions with

boundaries. Another interesting representation is given by bandelets, as proposed in [

23]. Bandelets are based on optimal local warping in the image domain relative to the geometrical flow, and [

24] proved, also, the optimality of the minimax convergence rates of their bandelet-based estimator, for a larger class of functions, including piecewise

functions with

boundaries.

In

Section 5, we apply the abstract framework proposed in

Section 4 to bidimensional examples that rely on explicit geometrical constructions: In particular, the corresponding approaches are aimed at avoiding the pseudo-Gibbs artifacts produced by the above methods.

Wedgelet partitions were introduced by [

21] and belong to the class of shape-preserving image segmentation methods. The decompositions are based on local polynomial approximation on some adaptively selected leaves of a quadtree structure. The use of a suitable data structure allowed for the development of fast algorithms for wedgelet decomposition; see [

17].

An alternative is provided by anisotropic Delaunay triangulations, which have been proposed in the context of image compression in [

25]. The flexible design of the representing system allows for a particularly fine selection of triangles fitting the anisotropic geometrical features of images. In contrast to curvelets, such representations preserve the advantage of wavelets and are still able to approximate point singularities optimally; see [

26].

Both wedgelet representations and anisotropic Delaunay triangulations lead to optimal non-linear approximation rates for some classes of piecewise smooth functions. Note that the classes of (generalized horizon) functions considered in this paper contain and are larger than the above mentioned horizon functions (or boundary fragments). For a brief discussion on the generalization to more general piecewise regular functions, see

Section 6. In the present paper, we use this optimality to derive convergence rates of the estimators. We prove almost sure consistency rates for function classes where the piecewise regularity is controlled by a parameter,

α. More precisely, for these classes, we obtain that, for almost each

ω:

where

is the variance of noise.

In the minimax setting, decay rates similar to those in (3) are known to be optimal for the respective function classes. Note that, using slightly modified penalties, which are not merely proportional to the number of pieces, [

27] were able to show that optimal minimax rates may be achieved, optimally meaning that the rates are of the same order as in (3), but without the log factor. In the present paper, in contrast to [

27], we control the almost sure convergence instead of the

-risk. Moreover, we explicitly restrict our attention to the classical penalty given by the number of pieces (or, equivalently, the dimension of the model) as in (2), noting that this is strongly connected to the sparse ansatz, which is currently popular in the signal community. We refer to [

28] for a comprehensive review on sparsity. The generalization of the results in the present paper to other penalties is straightforward, but would be rather technical and, thus, might obscure the main ideas.

We address, first, noise and its projections to the approximation spaces; see

Section 3. In

Section 4, we derive convergence rates in the general context. Finally, in

Section 5, we illustrate the abstract results by specific applications. Dimension, 1, is included, thus generalizing the results from [

15] to piecewise polynomial regression and piecewise Sobolev classes. Our two-dimensional examples, wedgelets and Delaunay triangulations both rely on a geometric and edge-preserving representation. Our main motivation is the optimal approximation properties of these methods, the key feature to apply the previous framework being an appropriate discretization of these schemes.

3. Noise and Its Projections

For consistency, resolutions at infinitely many levels are considered simultaneously. Frequently, segmentations are not defined for all , but only for a cofinal subset of . Typical examples are all dyadic quad-tree partitions or dyadic wedgelet segmentations, where only indices of the form, , appear. Therefore, we adopt the following convention:

The symbol, , denotes any infinite subset of endowed with the natural order, ≤. is a totally ordered set, and we may consider nets, . For example, , , means that convergences to x along . We deal similarly with notions, like lim sup etc. Plainly, we might resort to subsequences instead, but this would cause a change of indices, which is notationally inconvenient.

3.1. Sub-Gaussian Noise and a Tail Estimate

We introduce now the main hypotheses on noise accompanied by a brief discussion. The core of the arguments in later sections is the tail Estimate Equation (9) below.

As Theorem 2 will show, the appropriate framework are sub-Gaussian random variables. A random variable, ξ, enjoys this property if one of the following conditions is fulfilled:

Theorem 1 The following two conditions on a random variable ξ are equivalent:- (a)

There is , such that: - (b)

ξ is centered and majorized in distribution by some centered Gaussian variable,

η,

i.e.:

This and most other facts about sub-Gaussian variables quoted in this paper are verified in the first few sections of the monograph, [

29]; one may also consult [

30],

Section 3.4.

The definition in (a) was given in the celebrated paper [

31], which uses the term generalized Gaussian variables. The closely related concept of semi-Gaussian variables, which requires symmetry of

ξ, seems to go back to [

32].

The class of all sub-Gaussian random variables living on a common probability space,

, is denoted by

. The sub-Gaussian standard is the number,

The infimum is attained and, hence, is a minimum. is a linear space, τ is a norm on if variables differing on a null-set only are identified. Equipped with the norm, τ, is a Banach space. It is important to note that is strictly contained in all spaces, , , the spaces of all centered variables with finite ordered absolute moments.

Remark 1 The most prominent sub-Gaussians are centered Gaussian variables, η, with standard deviation, σ, and . For them, Inequality (8) is an equality with . The specific characteristic of sub-Gaussian variables are tails lighter than those of Gaussians, as expressed in (b) of Theorem 1.

The following theorem is essential in the present context:

Theorem 2 For each , suppose that the variables, , , are independent. Then:- (a)

Suppose that there is a real number, , such that for each and real numbers, , , and each , the inequality:holds. Then, all variables are sub-Gaussian with a common scale factor, β. - (b)

Let all variables, , be sub-Gaussian. Suppose further that:Then, (a) is fulfilled with this factor, β.

This is probably folklore, and we skip the proof. A detailed proof can be found in the extended version [

33].

Remark 2 For white Gaussian noise, one has and, hence, .

3.2. Noise Projections

In this section, we quantify projections of noise. Choose for each a class, , of admissible segments over and a set, , of admissible partitions. As previously, for each , a linear function space, , is given. We shall denote the orthogonal -projection onto the linear space, , by .

The following result provides almost sure -estimates for the projections of noise to these spaces, as there are more and more admissible segments.

Proposition 1 Suppose that for all and each . Assume in addition that there is a number, , such that for some : Let . Then, we have: Moreover, for and for almost all ω, there is , such that for : This will be proven at a more abstract level. No structure of the finite sets,

, is required. Nevertheless, we adopt all definitions from

Section 1, mutatis mutandis. All Euclidean spaces,

, will be endowed with their natural inner products,

, and respective norms. The projection onto the linear subspace

will be denoted by

.

Theorem 3 Suppose that the noise variables, , fulfill (9) accordingly. Consider finite nonempty collections, , of linear subspaces in , and assume that the dimensions of all subspaces, , , are uniformly bounded by some number, . Assume in addition that there is a number, , such that for some : Let . Then, we have Note that is the Euclidean norm in the spaces, , since each is simply a vector. The assumption in the theorem can be reformulated as .

Proof. Choose

and

with

. Let

,

be some orthonormal basis of

. Observe that for any real number,

:

implies that:

We derive a series of inequalities:

where the first inequality follows from the introductory implication. By (9), we may continue with:

Let

. For

:

and the assertion is proven.

Now, let us prove Proposition 1:

Proof of Proposition 1. We apply Theorem 3 to the collections,

. Then,

. Since for each

, the spaces,

,

, are mutually orthogonal, one has for

that:

Applying Theorem 3 to the latter inequality Proves (11). Moreover, for

, we observe that the right hand side of (11) has a finite sum over

n. Thus, the Borel-Cantelli lemma yields:

This implies (12).

Let us finally illustrate the above concept in the classical case of Gaussian white noise.

Remark 3 Continuing from Remark 2, we illustrate the behavior of the lower bound for the constant,

C, in Proposition 1 and Theorem 3, in the case of white Gaussian noise and polynomially growing number of fragments,

i.e.,

is asymptotically equivalent to

. In this case, the estimate for the norm of noise projections takes the form, for almost all

ω and for

:

This underlines the dependency between the noise projections, the number of fragments, the noise variance, the dimension of the regression spaces and the size of the partitions.

3.3. Discrete and Continuous Functionals

We want to approximate functions,

f, on the continuous domain,

, by estimates on discrete finite grids,

. The connections between the two settings are provided by the maps,

and

, introduced in (5) and (6). Note first that:

where the inner product and norm on the respective left-hand sides are those on

, and on the right-hand sides, one has the Euclidean inner product and norm. Furthermore, one needs appropriate versions of the Functionals (7). Let now

be segmentation classes on the domains,

, and

a segmentation class on

. Set:

The two functionals are compatible.

Proposition 2 Let and and . Then, If, moreover, then: Proof. The identity is an immediate consequence of (13). Hence, let us turn to the equivalence of minimal points. The key is a suitable decomposition of the functional,

. The map,

, is the orthogonal projection of

onto the linear space,

, and for any

, the function,

h, is in

. Hence:

The quantity,

, does not depend on

. Therefore, a pair,

, minimizes

if and only if it minimizes

Setting in (2), this completes the proof.

3.4. Upper Bound for Projective Segmentation Classes

We compute an upper bound for the estimation error in a special setting: choose in advance a finite dimensional linear subspace,

of

. Discretization induces linear spaces,

and

, for any

, of functions on

. Let further for each

, a set,

, of admissible fragments and a family,

, of partitions with fragments in

be given. Set

. The induced segmentation class,

will be called projective

-segmentation class at stage,

n.

The following inequality is at the heart of later arguments since it controls the distance between the discrete M-estimates and the “true” signal. Note that this result is also central in the derivation of the results of the model selection theory.

Lemma 1 Let for a -projective segmentation class, , over be given and choose a signal, , and a vector, . Let furtherand . Then, Proof. Since

is a minimal point of

, the embedded segmentation,

, is a minimal point of

by Proposition 2 and, hence:

Expansion of squares yields that:

and hence:

By definition,

and

, which implies that

and, hence,

. We proceed with:

Since

, we conclude:

Putting this into Inequality (15) results in:

which implies the asserted inequality.