No-Reference Image Blur Assessment Based on Response Function of Singular Values

Abstract

1. Introduction

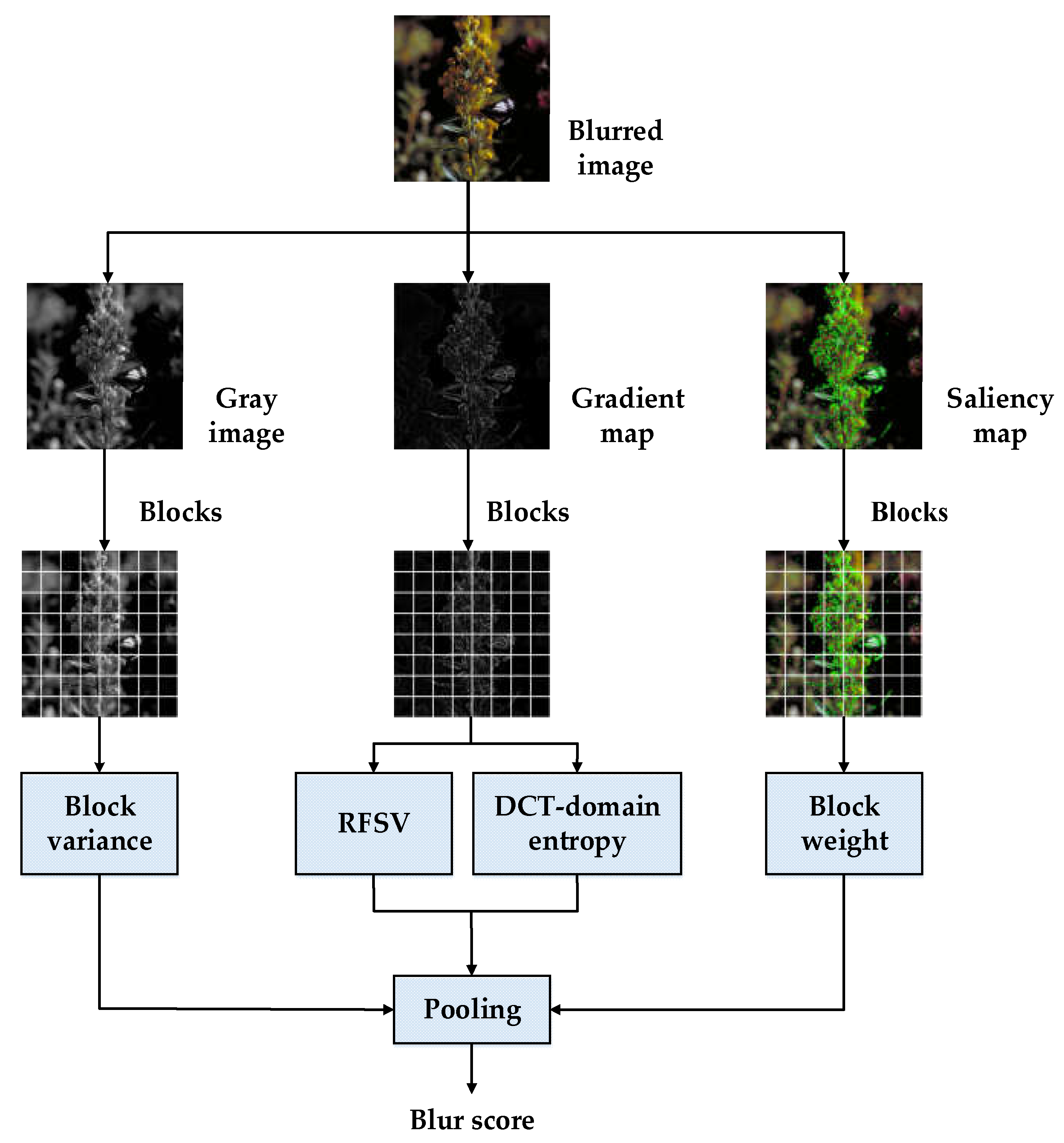

- We design a response function of the singular values (RFSV) in the DCT domain that effectively characterizes image blur.

- We combine the spatial information of the blurred image with the spectral information of its gradient map to reduce the influence of image content.

- We assign SIFT-dependent weights to the image blocks according to the characteristics of the human visual system (HVS).
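The intuition behind the first contribution can be illustrated with a small experiment. Blurring acts as a low-pass filter, so a block's energy tends to concentrate in its leading singular values. The sketch below is a minimal illustration under our own assumptions: the circular box blur and the normalized singular-value profile (`circular_box_blur`, `singular_value_profile`) are hypothetical choices, and the code does not reproduce the authors' response function or their DCT-domain processing.

```python
import numpy as np

def circular_box_blur(block: np.ndarray, width: int) -> np.ndarray:
    """Separable box blur with wrap-around: average of `width` cyclic shifts per axis.

    Hypothetical blur model used only for illustration.
    """
    out = block.astype(float)
    for axis in (0, 1):
        out = sum(np.roll(out, s, axis=axis) for s in range(width)) / width
    return out

def singular_value_profile(block: np.ndarray) -> np.ndarray:
    """Singular values in descending order, normalized so they sum to 1."""
    s = np.linalg.svd(block, compute_uv=False)
    return s / s.sum()

rng = np.random.default_rng(0)
block = rng.random((8, 8))  # stand-in for an 8 x 8 image block
for width in (1, 3, 8):
    share = singular_value_profile(circular_box_blur(block, width))[0]
    print(f"blur width {width}: leading singular value share = {share:.3f}")
```

With `width` equal to the block size the blurred block becomes constant (rank 1), so the leading share reaches 1; intermediate widths typically move the share toward that limit. A blur measure built on singular values exploits this shift in how energy is distributed.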

2. The Proposed Methods

2.1. Computing Gray Image and Gradient Map

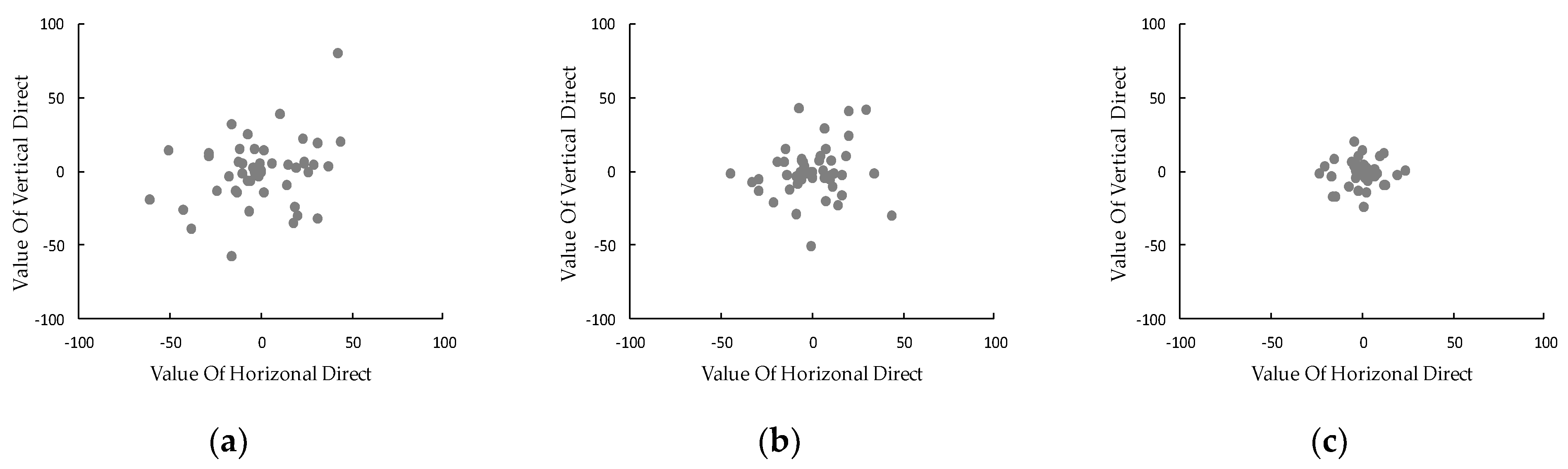

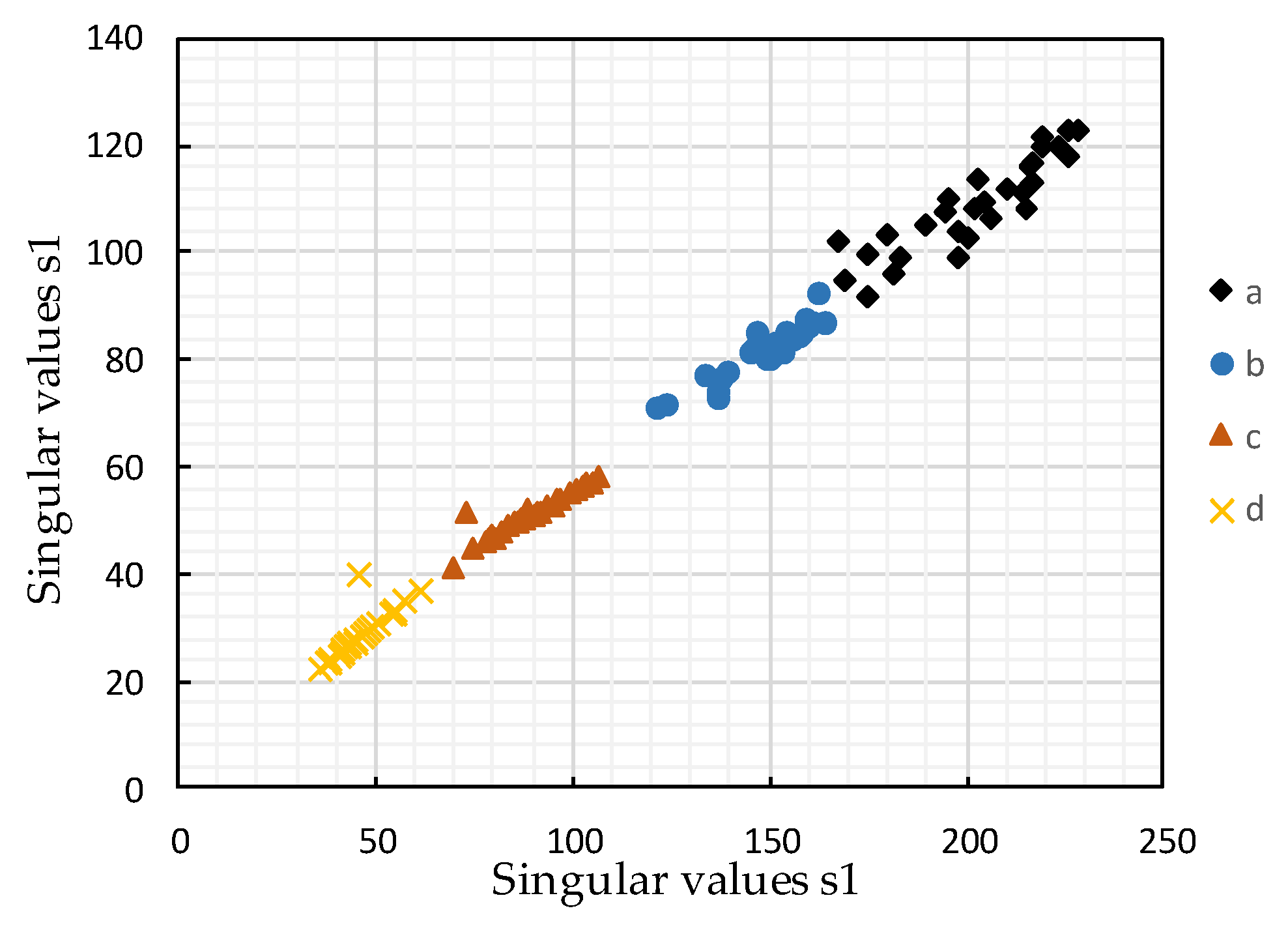

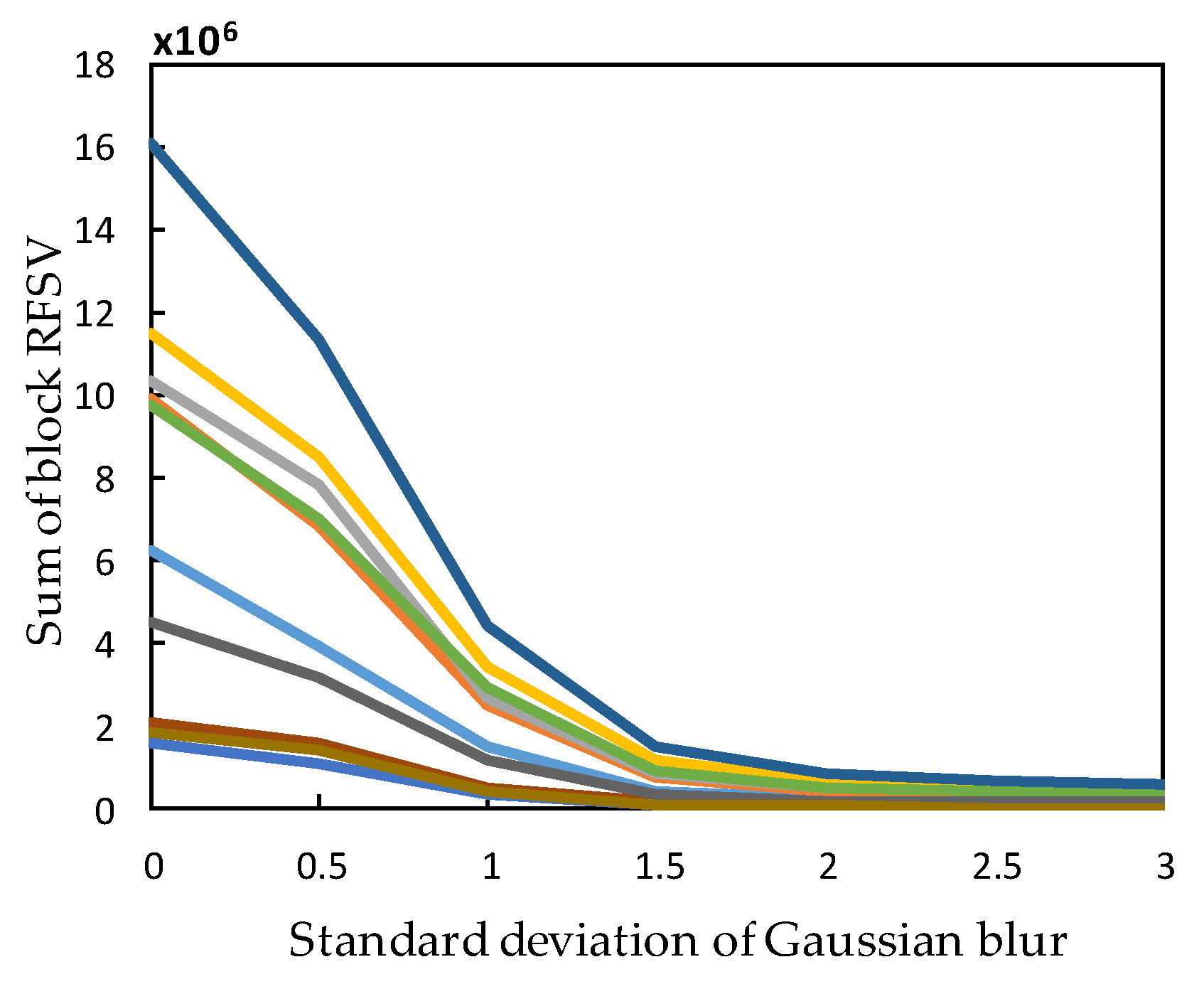
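Section 2.1 derives a gray image and a gradient map from the input. The following is a minimal sketch that assumes ITU-R BT.601 luma weights for the gray conversion and central differences (`np.gradient`) for the gradient magnitude. The paper's exact operators are given in its own equations and may differ (for example, a Sobel filter); `to_gray` and `gradient_map` are hypothetical names.

```python
import numpy as np

# ITU-R BT.601 luma weights: an assumed choice for the gray conversion.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB array to a float gray image."""
    return rgb[..., :3].astype(float) @ LUMA_WEIGHTS

def gradient_map(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude from central differences (one-sided at the borders)."""
    gy, gx = np.gradient(gray)  # derivatives along rows (y) and columns (x)
    return np.hypot(gx, gy)

# Usage on a synthetic image: a horizontal intensity ramp, identical in R, G and B.
rgb = np.repeat(np.tile(np.arange(16.0), (16, 1))[..., None], 3, axis=2)
gray = to_gray(rgb)
grad = gradient_map(gray)
```

Because the three weights sum to 1, a gray ramp keeps its values, and its gradient magnitude is 1 everywhere.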

2.2. Computing RFSV for Every Block

2.3. Computing Variance and the DCT Domain Entropy for Every Block

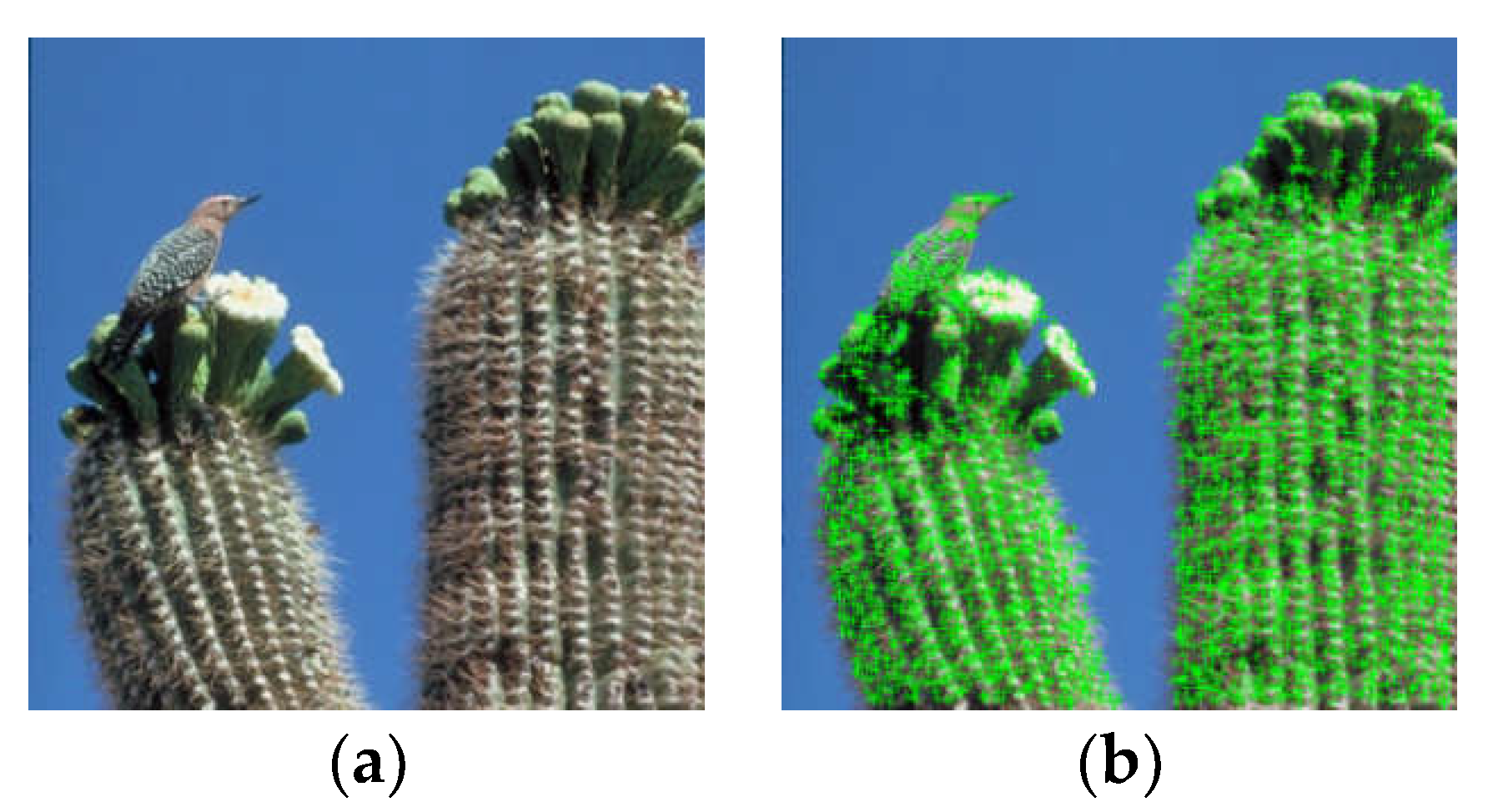
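For Section 2.3, the per-block variance is straightforward. For the DCT-domain entropy, the sketch below follows the spectral-entropy idea of Liu et al. [26] as we understand it: the squared AC coefficients of the block's 2-D DCT are normalized into a probability distribution, and its Shannon entropy is taken in bits. Excluding the DC term, using this normalization, and using the population variance are assumptions about this paper's definitions; `dct_matrix`, `block_variance` and `dct_entropy` are hypothetical names.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix: row k holds the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)  # the DC row uses the smaller scale factor
    return d

def block_variance(block: np.ndarray) -> float:
    """Population variance of the block's pixel values (assumed definition)."""
    return float(np.var(block))

def dct_entropy(block: np.ndarray, eps: float = 1e-12) -> float:
    """Shannon entropy (bits) of the normalized AC energy of a square block's 2-D DCT."""
    d = dct_matrix(block.shape[0])
    power = (d @ block @ d.T) ** 2
    power[0, 0] = 0.0  # exclude the DC coefficient
    total = power.sum()
    if total < eps:  # flat block: no AC energy to distribute
        return 0.0
    p = power[power > 0] / total
    return float(-(p * np.log2(p)).sum())
```

A block whose AC energy sits in a single coefficient has entropy 0, and two equally strong AC coefficients give 1 bit. Blur removes high-frequency coefficients, which tends to concentrate the remaining AC energy and lower this entropy.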

2.4. Computing Block Weight
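Section 2.4 assigns SIFT-dependent weights [33] to the image blocks. The weighting rule is not stated in this section, so the sketch below uses a hypothetical one: each block's weight is its share of the detected keypoints, with uniform weights as a fallback when no keypoints are found. Keypoints are passed in as `(x, y)` pixel coordinates, which could come from any SIFT detector (for example, OpenCV's `cv2.SIFT_create().detect`, whose keypoints expose `.pt`). `block_keypoint_counts`, `sift_weights` and `pooled_score` are hypothetical names, not the authors' code.

```python
import numpy as np

def block_keypoint_counts(keypoints, height: int, width: int, block: int) -> np.ndarray:
    """Count keypoints falling into each non-overlapping block x block tile."""
    rows, cols = height // block, width // block
    counts = np.zeros((rows, cols))
    for x, y in keypoints:
        r, c = int(y) // block, int(x) // block
        if r < rows and c < cols:  # ignore points in the cropped right/bottom border
            counts[r, c] += 1
    return counts

def sift_weights(counts: np.ndarray) -> np.ndarray:
    """Hypothetical rule: weight proportional to keypoint count, uniform if none."""
    total = counts.sum()
    if total == 0:
        return np.full(counts.shape, 1.0 / counts.size)
    return counts / total

def pooled_score(block_scores: np.ndarray, weights: np.ndarray) -> float:
    """Weighted sum of per-block scores (assumed pooling step)."""
    return float((block_scores * weights).sum())

# Usage: three keypoints in a 12 x 12 image split into 6 x 6 blocks.
counts = block_keypoint_counts([(1, 1), (2, 3), (9, 1)], 12, 12, 6)
weights = sift_weights(counts)
score = pooled_score(np.array([[0.9, 0.3], [0.1, 0.1]]), weights)
```

Here the top-left block holds two of the three keypoints and therefore dominates the pooled score. That reflects the HVS motivation in the contributions list: feature-rich regions attract attention, so their blur matters more.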

3. Results

3.1. Experimental Settings

3.2. Results and Analysis

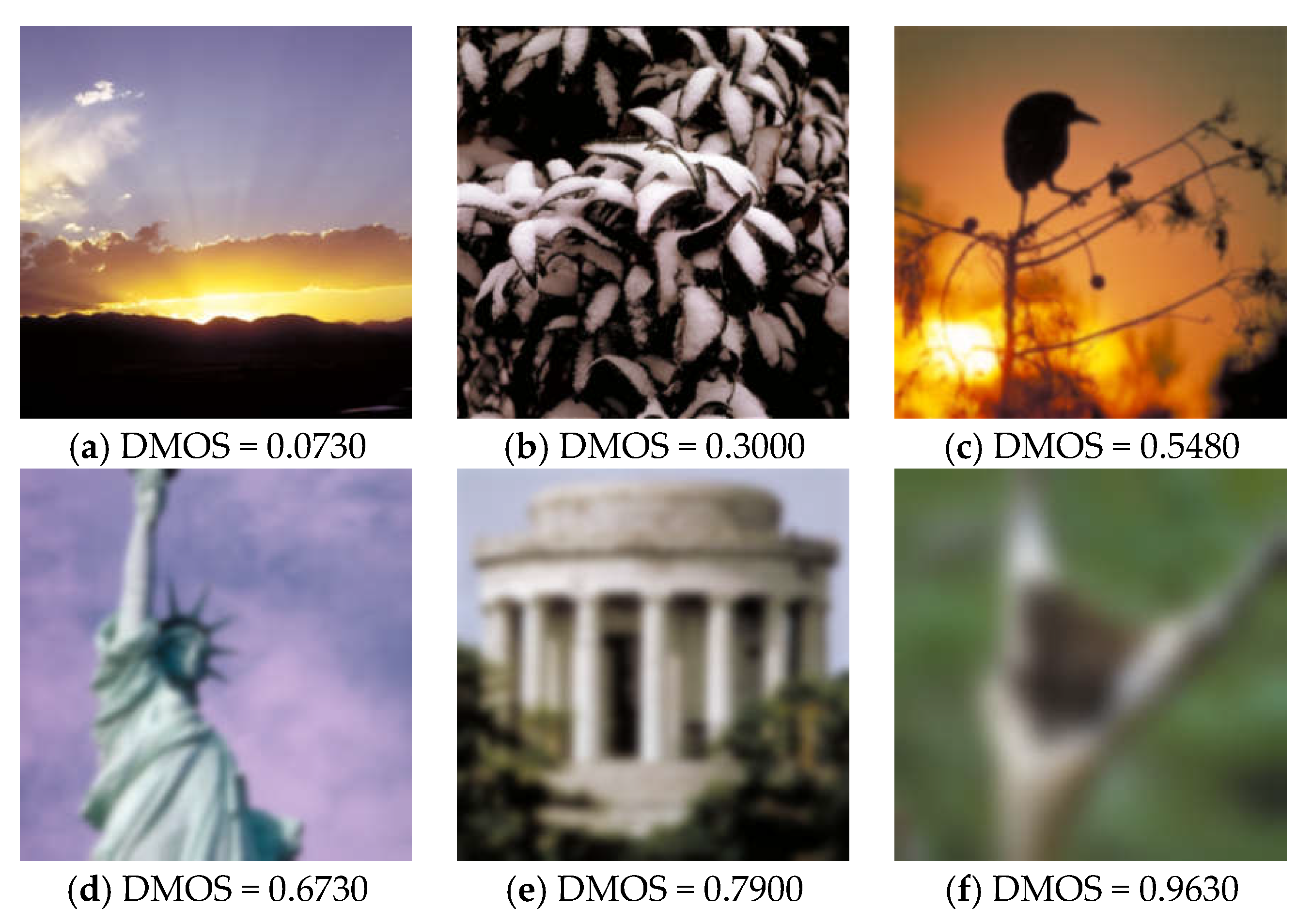

3.2.1. Image-Level Evaluation

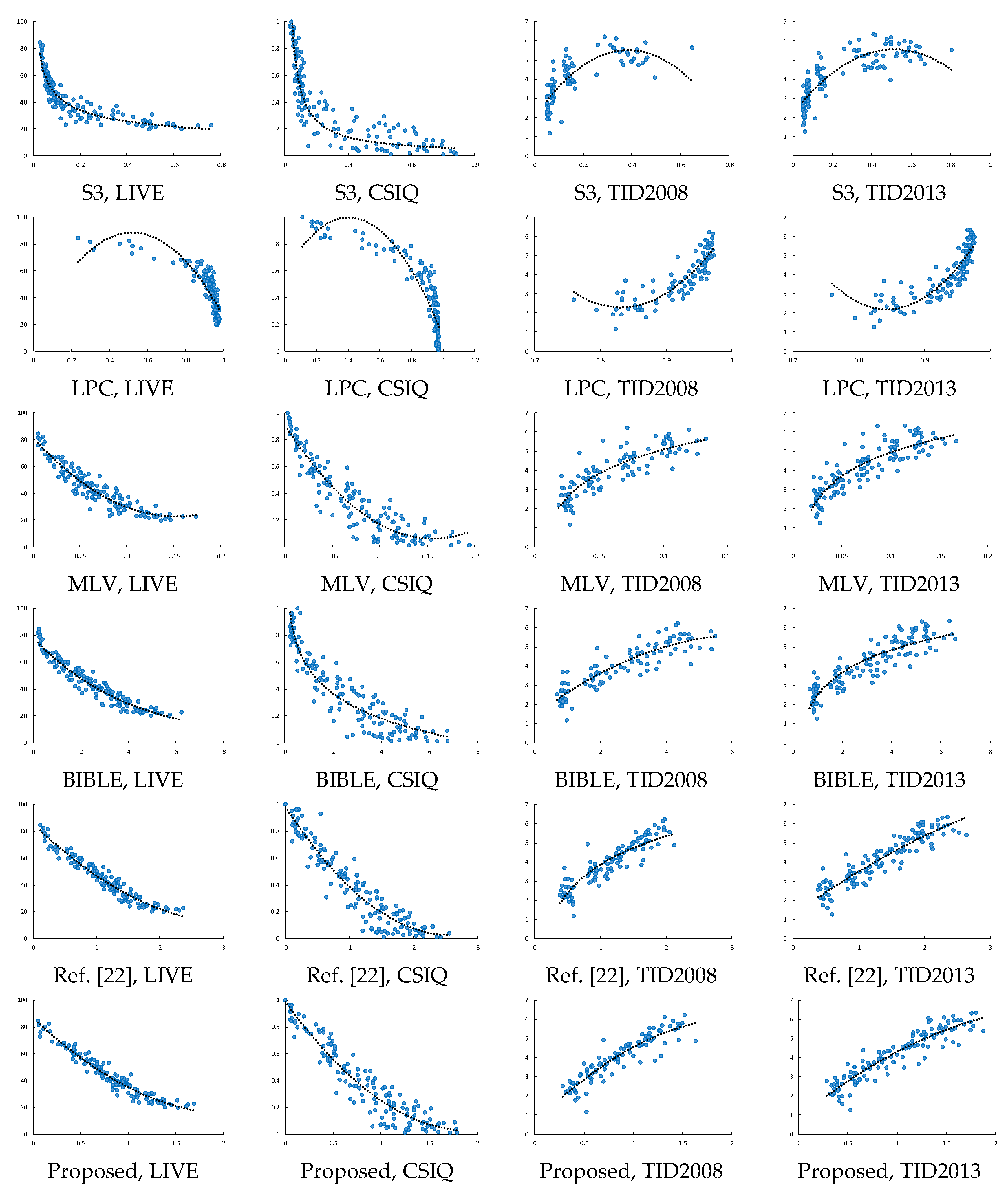

3.2.2. Database-Level Evaluation

3.2.3. Impact of Block Sizes

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sheikh, H.R.; Bovik, A.C.; de Veciana, G. An information fidelity criterion for image quality assessment using natural scene statistics. IEEE Trans. Image Process. 2005, 14, 2117–2128.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- Larson, E.C.; Chandler, D.M. Most apparent distortion: Full-reference image quality assessment and the role of strategy. J. Electron. Imaging 2010, 19, 011006.

- Zhang, L.; Zhang, L.; Mou, X.Q.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Xue, W.F.; Zhang, L.; Mou, X.Q.; Bovik, A.C. Gradient magnitude similarity deviation: A highly efficient perceptual image quality index. IEEE Trans. Image Process. 2014, 23, 684–695.

- Gao, X.; Lu, W.; Tao, D.; Li, X. Image quality assessment based on multiscale geometric analysis. IEEE Trans. Image Process. 2009, 18, 1409–1423.

- Tao, D.; Li, X.; Lu, W.; Gao, X. Reduced-reference IQA in contourlet domain. IEEE Trans. Syst. Man Cybern. Part B 2009, 39, 1623–1627.

- Soundararajan, R.; Bovik, A.C. RRED indices: Reduced reference entropic differencing for image quality assessment. IEEE Trans. Image Process. 2012, 21, 517–526.

- Ma, L.; Li, S.N.; Zhang, F.; Ngan, K.N. Reduced-reference image quality assessment using reorganized DCT-based image representation. IEEE Trans. Multimed. 2011, 13, 824–829.

- Wu, J.J.; Lin, W.S.; Shi, G.M.; Liu, A.M. Reduced-reference image quality assessment with visual information fidelity. IEEE Trans. Multimed. 2013, 15, 1700–1705.

- Sun, T.; Ding, S.; Chen, W. Reduced-reference image quality assessment through SIFT intensity ratio. Int. J. Mach. Learn. Cybern. 2014, 5, 923–931.

- Qi, F.; Zhao, D.B.; Gao, W. Reduced reference stereoscopic image quality assessment based on binocular perceptual information. IEEE Trans. Multimed. 2015, 17, 2338–2344.

- Marziliano, P.; Dufaux, F.; Winkler, S.; Ebrahimi, T. Perceptual blur and ringing metrics: Application to JPEG2000. Signal Process. Image Commun. 2004, 19, 163–172.

- Ferzli, R.; Karam, L.J. A no-reference objective image sharpness metric based on the notion of just noticeable blur (JNB). IEEE Trans. Image Process. 2009, 18, 717–728.

- Narvekar, N.D.; Karam, L.J. A no-reference image blur metric based on the cumulative probability of blur detection (CPBD). IEEE Trans. Image Process. 2011, 20, 2678–2683.

- Vu, C.T.; Phan, T.D.; Chandler, D.M. S3: A spectral and spatial measure of local perceived sharpness in natural images. IEEE Trans. Image Process. 2012, 21, 934–945.

- Hassen, R.; Wang, Z.; Salama, M. Image sharpness assessment based on local phase coherence. IEEE Trans. Image Process. 2013, 22, 2798–2810.

- Bahrami, K.; Kot, A.C. A fast approach for no-reference image sharpness assessment based on maximum local variation. IEEE Signal Process. Lett. 2014, 21, 751–755.

- Kerouh, F.; Serir, A. Perceptual blur detection and assessment in the DCT domain. In Proceedings of the 4th International Conference on Electrical Engineering, Boumerdes, Algeria, 13–15 December 2015; pp. 1–4.

- Li, L.D.; Lin, W.S.; Wang, X.S.; Yang, G.B.; Bahrami, K.; Kot, A.C. No-reference image blur assessment based on discrete orthogonal moments. IEEE Trans. Cybern. 2016, 46, 39–50.

- Zhang, S.Q.; Wu, T.; Xu, X.H.; Cheng, Z.M.; Pu, S.L.; Chang, C.C. No-reference image blur assessment based on SIFT and DCT. J. Inf. Hiding Multimed. Signal Process. 2018, 9, 219–231.

- Cai, H.; Li, L.D.; Qian, J.S. Image blur assessment with feature points. J. Inf. Hiding Multimed. Signal Process. 2015, 6, 2073–4212.

- Mukundan, R.; Ong, S.H.; Lee, P.A. Image analysis by Tchebichef moments. IEEE Trans. Image Process. 2001, 10, 1357–1364.

- Zhang, L.; Gu, Z.Y.; Li, H.Y. SDSP: A novel saliency detection method by combining simple priors. In Proceedings of the 20th IEEE International Conference on Image Processing (ICIP), Melbourne, Australia, 15–18 September 2013; pp. 171–175.

- Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863.

- Li, L.; Xia, W.; Lin, W.; Fang, Y.; Wang, S. No-reference and robust image sharpness evaluation based on multiscale spatial and spectral features. IEEE Trans. Multimed. 2017, 19, 1030–1040.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Ponomarenko, N.; Lukin, V.; Zelensky, A.; Egiazarian, K.; Carli, M.; Battisti, F. TID2008 – A database for evaluation of full-reference visual quality assessment metrics. Adv. Mod. Radioelectron. 2009, 10, 30–45.

- Ponomarenko, N.; Ieremeiev, O.; Lukin, V.; Egiazarian, K.; Jin, L.; Astola, J. Color image database TID2013: Peculiarities and preliminary results. In Proceedings of the European Workshop on Visual Information Processing (EUVIP), Paris, France, 10–12 June 2013; pp. 106–111.

- Virtanen, T.; Nuutinen, M.; Vaahteranoksa, M.; Oittinen, P.; Hakkinen, J. CID2013: A database for evaluating no-reference image quality assessment algorithms. IEEE Trans. Image Process. 2015, 24, 390–402.

- Ciancio, A.; da Costa, A.L.N.T.; da Silva, E.A.B.; Said, A.; Samadani, R.; Obrador, P. No-reference blur assessment of digital pictures based on multifeature classifiers. IEEE Trans. Image Process. 2011, 20, 64–75.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

| Image | (a) | (b) | (c) | (d) | (e) | (f) |
|---|---|---|---|---|---|---|
| DMOS | 0.0730 | 0.3000 | 0.5480 | 0.6730 | 0.7900 | 0.9630 |
| JNB [15] | 2.8646 | 1.5821 | 1.3920 | 1.2821 | 0.8128 | 1.1096 |
| CPBD [16] | 0.1210 | 0.1017 | 0.0033 | 0 | 0 | 0 |
| S3 [17] | 0.1107 | 0.1520 | 0.0637 | 0.0408 | 0.0597 | 0.0257 |
| LPC [18] | 0.9613 | 0.9474 | 0.8607 | 0.6935 | 0.5735 | 0.2031 |
| MLV [19] | 0.0721 | 0.0815 | 0.0239 | 0.0156 | 0.0119 | 0.0044 |
| BIBLE [21] | 3.5098 | 2.6294 | 1.2471 | 0.5666 | 0.2031 | 0.2689 |
| Ref. [22] | 1.4592 | 1.4238 | 0.6613 | 0.4199 | 0.1892 | 0.1638 |
| Ours | 0.9931 | 0.9439 | 0.4674 | 0.4415 | 0.2005 | 0.0669 |

| Image | (a) | (b) | (c) | (d) | (e) | (f) |
|---|---|---|---|---|---|---|
| DMOS | 29.9480 | 30.8705 | 31.0057 | 32.4380 | 34.9790 | 35.0583 |
| JNB [15] | 4.1472 | 3.5025 | 4.5699 | 5.1309 | 3.8593 | 4.1892 |
| CPBD [16] | 0.3273 | 0.5576 | 0.4599 | 0.5438 | 0.3902 | 0.3971 |
| S3 [17] | 0.1586 | 0.2394 | 0.2656 | 0.3621 | 0.1674 | 0.1252 |
| LPC [18] | 0.9571 | 0.9755 | 0.9592 | 0.9605 | 0.9543 | 0.9583 |
| MLV [19] | 0.0913 | 0.0939 | 0.0874 | 0.1035 | 0.0806 | 0.0666 |
| BIBLE [21] | 3.6730 | 3.8631 | 3.5880 | 3.9721 | 3.5388 | 3.3057 |
| Ref. [22] | 1.6156 | 1.6946 | 1.4822 | 1.4156 | 1.3212 | 1.2756 |
| Ours | 1.2174 | 1.1844 | 1.1146 | 1.0966 | 1.0117 | 0.9461 |
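Because DMOS grows as perceived quality drops, a sharpness score should decrease from image (a) to image (f) in the two image-level tables above. The check below encodes that expectation, assuming that a larger score means a sharper image for every listed metric (as the overall trend in the tables suggests). It uses only the values reported in the tables; `strictly_decreasing` and `consistent_metrics` are hypothetical helper names.

```python
def strictly_decreasing(values):
    """True if every score is strictly smaller than the one before it."""
    return all(a > b for a, b in zip(values, values[1:]))

def consistent_metrics(table):
    """Names of metrics whose scores fall strictly as DMOS rises."""
    return [name for name, scores in table.items() if strictly_decreasing(scores)]

# Scores for images (a)-(f), listed in order of increasing DMOS.
first_table = {
    "JNB": [2.8646, 1.5821, 1.3920, 1.2821, 0.8128, 1.1096],
    "CPBD": [0.1210, 0.1017, 0.0033, 0, 0, 0],
    "S3": [0.1107, 0.1520, 0.0637, 0.0408, 0.0597, 0.0257],
    "LPC": [0.9613, 0.9474, 0.8607, 0.6935, 0.5735, 0.2031],
    "MLV": [0.0721, 0.0815, 0.0239, 0.0156, 0.0119, 0.0044],
    "BIBLE": [3.5098, 2.6294, 1.2471, 0.5666, 0.2031, 0.2689],
    "Ref. [22]": [1.4592, 1.4238, 0.6613, 0.4199, 0.1892, 0.1638],
    "Ours": [0.9931, 0.9439, 0.4674, 0.4415, 0.2005, 0.0669],
}
second_table = {
    "JNB": [4.1472, 3.5025, 4.5699, 5.1309, 3.8593, 4.1892],
    "CPBD": [0.3273, 0.5576, 0.4599, 0.5438, 0.3902, 0.3971],
    "S3": [0.1586, 0.2394, 0.2656, 0.3621, 0.1674, 0.1252],
    "LPC": [0.9571, 0.9755, 0.9592, 0.9605, 0.9543, 0.9583],
    "MLV": [0.0913, 0.0939, 0.0874, 0.1035, 0.0806, 0.0666],
    "BIBLE": [3.6730, 3.8631, 3.5880, 3.9721, 3.5388, 3.3057],
    "Ref. [22]": [1.6156, 1.6946, 1.4822, 1.4156, 1.3212, 1.2756],
    "Ours": [1.2174, 1.1844, 1.1146, 1.0966, 1.0117, 0.9461],
}

print(consistent_metrics(first_table))   # ['LPC', 'Ref. [22]', 'Ours']
print(consistent_metrics(second_table))  # ['Ours']
```

Strict monotonicity over six images is a coarse test: it ignores how large each step is, and ties (such as the three zeros reported for CPBD) count as failures. The database-level correlation criteria are the more informative comparison.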

| Database | Criterion | CPBD | S3 | LPC | MLV | BIBLE | Ref. [22] | Ours |
|---|---|---|---|---|---|---|---|---|
| LIVE | PLCC | 0.8956 | 0.9436 | 0.9017 | 0.9429 | 0.9622 | 0.9694 | 0.9739 |
| | KRCC | 0.7652 | 0.8004 | 0.7149 | 0.7776 | 0.8328 | 0.8464 | 0.8561 |
| | SRCC | 0.9190 | 0.9441 | 0.8886 | 0.9316 | 0.9611 | 0.9671 | 0.9712 |
| | RMSE | 6.9929 | 5.2058 | 6.7972 | 5.2366 | 4.2815 | 3.8603 | 3.5713 |
| CSIQ | PLCC | 0.8818 | 0.9107 | 0.9412 | 0.9488 | 0.9403 | 0.9492 | 0.9518 |
| | KRCC | 0.7079 | 0.7294 | 0.7683 | 0.7713 | 0.7439 | 0.7678 | 0.7688 |
| | SRCC | 0.8847 | 0.9059 | 0.9224 | 0.9247 | 0.9132 | 0.9272 | 0.9294 |
| | RMSE | 0.1351 | 0.1184 | 0.0968 | 0.0905 | 0.0975 | 0.0902 | 0.0879 |
| TID2008 | PLCC | 0.8235 | 0.8541 | 0.8903 | 0.8585 | 0.8929 | 0.9101 | 0.9151 |
| | KRCC | 0.6310 | 0.6124 | 0.7155 | 0.6524 | 0.7009 | 0.7381 | 0.7640 |
| | SRCC | 0.8412 | 0.8418 | 0.8959 | 0.8548 | 0.8915 | 0.9075 | 0.9239 |
| | RMSE | 0.6657 | 0.6104 | 0.5344 | 0.6018 | 0.5284 | 0.4862 | 0.4731 |
| TID2013 | PLCC | 0.8552 | 0.8813 | 0.8197 | 0.8827 | 0.9051 | 0.9264 | 0.9276 |
| | KRCC | 0.6467 | 0.6397 | 0.7479 | 0.6810 | 0.7066 | 0.7479 | 0.7660 |
| | SRCC | 0.8518 | 0.8609 | 0.9191 | 0.8787 | 0.8988 | 0.9243 | 0.9327 |
| | RMSE | 0.6467 | 0.5896 | 0.7148 | 0.5865 | 0.5305 | 0.4699 | 0.4660 |
| Weighted average | PLCC | 0.8680 | 0.9019 | 0.8912 | 0.9139 | 0.9288 | 0.9418 | 0.9451 |
| | KRCC | 0.6944 | 0.7051 | 0.7384 | 0.7285 | 0.7515 | 0.7792 | 0.7915 |
| | SRCC | 0.8780 | 0.8943 | 0.9071 | 0.9021 | 0.9189 | 0.9350 | 0.9396 |
| | RMSE | 2.2724 | 1.7449 | 2.1976 | 1.7430 | 1.4509 | 1.3087 | 1.2240 |
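The weighted-average rows appear consistent with weighting each database by its number of blurred images. The sketch below assumes 145 blurred images in LIVE, 150 in CSIQ, 100 in TID2008 and 125 in TID2013; these counts are our assumption and are not stated in this section. Under that assumption it reproduces the PLCC values reported for BIBLE and for the proposed method. `IMAGE_COUNTS`, `PLCC` and `weighted_average` are hypothetical names.

```python
# Assumed number of blurred images per database (not stated in this section).
IMAGE_COUNTS = {"LIVE": 145, "CSIQ": 150, "TID2008": 100, "TID2013": 125}

# PLCC values copied from the database-level table above.
PLCC = {
    "BIBLE": {"LIVE": 0.9622, "CSIQ": 0.9403, "TID2008": 0.8929, "TID2013": 0.9051},
    "Ours": {"LIVE": 0.9739, "CSIQ": 0.9518, "TID2008": 0.9151, "TID2013": 0.9276},
}

def weighted_average(per_db, counts):
    """Average a per-database criterion, weighting each database by its image count."""
    total = sum(counts.values())
    return sum(per_db[db] * n for db, n in counts.items()) / total

for method, per_db in PLCC.items():
    print(method, round(weighted_average(per_db, IMAGE_COUNTS), 4))
# BIBLE 0.9288
# Ours 0.9451
```

The same weights match the other criteria to within rounding. For example, the proposed method's RMSE comes out at about 1.2242, compared with the reported 1.2240. The 6 × 6 column of the block-size table also equals this weighted-average row, which suggests that table reports the same weighted averages.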

| Database | Criterion | CPBD | S3 | LPC | MLV | BIBLE | Ref. [22] | Ours |
|---|---|---|---|---|---|---|---|---|
| CID2013 | PLCC | 0.5254 | 0.6863 | 0.7013 | 0.6890 | 0.6943 | 0.6770 | 0.7104 |
| | SRCC | 0.4448 | 0.6460 | 0.6024 | 0.6206 | 0.6888 | 0.6685 | 0.6843 |
| | RMSE | 19.4530 | 16.6190 | 16.2474 | 16.5594 | 16.4794 | 16.8530 | 16.1160 |
| BID | PLCC | 0.2704 | 0.4271 | 0.3901 | 0.3643 | 0.3606 | 0.3018 | 0.3915 |
| | SRCC | 0.2717 | 0.4253 | 0.3161 | 0.3236 | 0.3165 | 0.2935 | 0.3352 |
| | RMSE | 1.2053 | 0.1320 | 1.1528 | 1.1659 | 1.1876 | 1.1935 | 1.1506 |
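The tables report PLCC, SRCC, KRCC and RMSE between objective scores and subjective ratings. The sketch below computes all four with NumPy only, under stated assumptions. It works on raw scores, whereas IQA studies commonly fit a nonlinear (logistic) mapping before computing PLCC and RMSE; whether the paper does so is not stated in this section. Its KRCC is Kendall's tau-a, with no tie correction. The function names are hypothetical.

```python
import numpy as np

def rankdata(a):
    """1-based ranks; tied values share the average of their positions."""
    a = np.asarray(a, dtype=float)
    order = np.argsort(a, kind="mergesort")
    sorted_a = a[order]
    ranks = np.empty(len(a))
    i = 0
    while i < len(a):
        j = i
        while j + 1 < len(a) and sorted_a[j + 1] == sorted_a[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks

def plcc(x, y):
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(x, y)[0, 1])

def srcc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(rankdata(x), rankdata(y))

def krcc(x, y):
    """Kendall tau-a: (concordant - discordant pairs) / total pairs."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n = len(x)
    s = sum(np.sign(x[i] - x[j]) * np.sign(y[i] - y[j])
            for i in range(n) for j in range(i + 1, n))
    return float(s / (n * (n - 1) / 2))

def rmse(pred, mos):
    """Root-mean-square error, in the units of the subjective scores."""
    pred, mos = np.asarray(pred, dtype=float), np.asarray(mos, dtype=float)
    return float(np.sqrt(np.mean((pred - mos) ** 2)))
```

RMSE is expressed in each database's subjective-score units. It is therefore only comparable within a database, which explains why LIVE and CID2013 show values in the units and tens while CSIQ stays below 0.2.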

| Block size | 4 × 4 | 6 × 6 | 8 × 8 | 10 × 10 | 12 × 12 |
|---|---|---|---|---|---|
| PLCC | 0.9191 | 0.9451 | 0.9407 | 0.9423 | 0.9408 |
| SRCC | 0.9100 | 0.9396 | 0.9335 | 0.9341 | 0.9319 |
| RMSE | 1.5724 | 1.2240 | 1.2279 | 1.2378 | 1.3086 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Li, P.; Xu, X.; Li, L.; Chang, C.-C. No-Reference Image Blur Assessment Based on Response Function of Singular Values. Symmetry 2018, 10, 304. https://doi.org/10.3390/sym10080304
