False Data Injection Attack Based on Hyperplane Migration of Support Vector Machine in Transmission Network of the Smart Grid

Abstract

1. Introduction

- We analyze a vulnerability of the SVM-based detector: the adverse effect of updating the detector's training set.

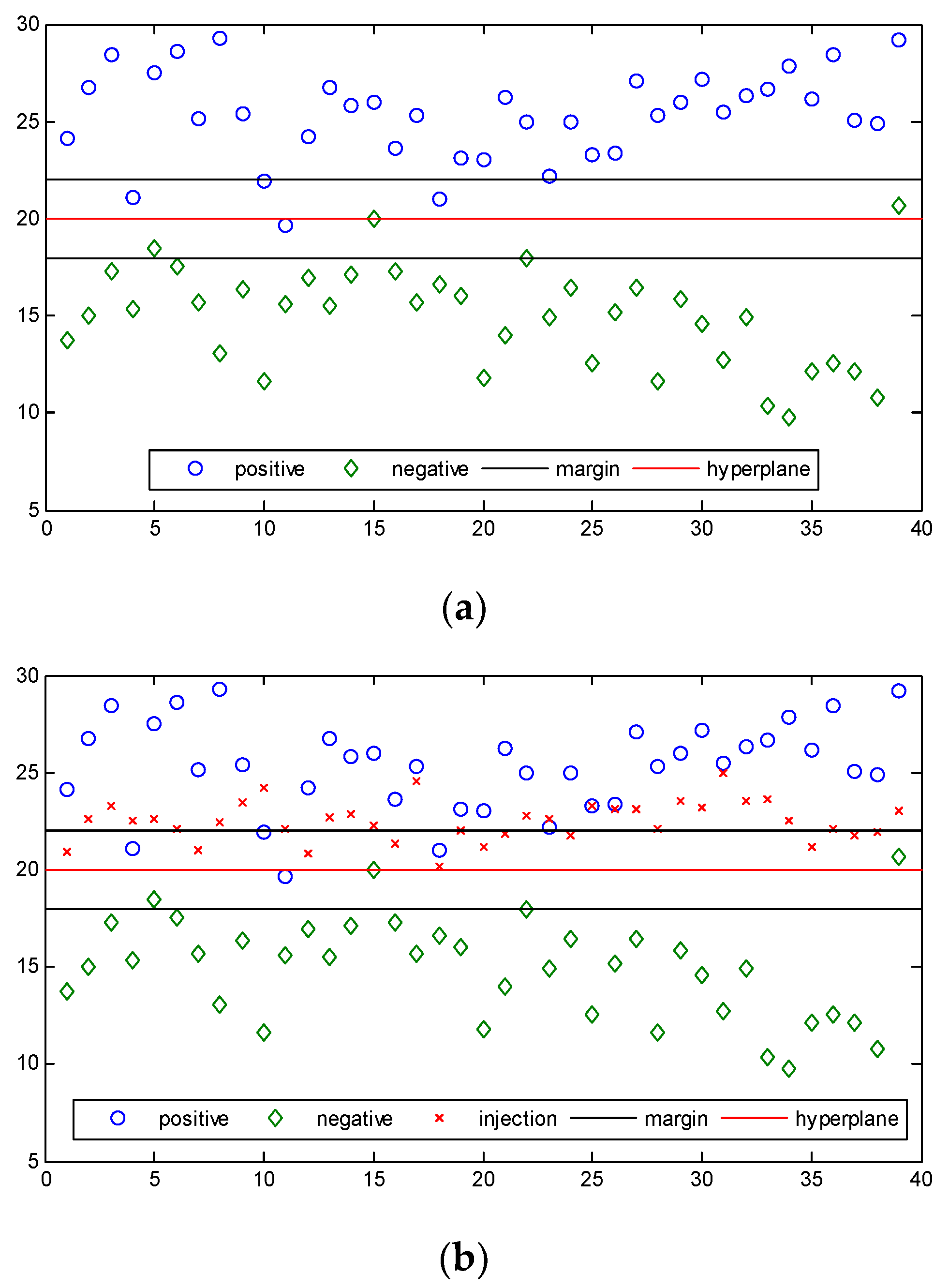

- Exploiting the above vulnerability, we propose an attack method in which attackers inject data into the positive sample space to shift the hyperplane toward the negative sample space.

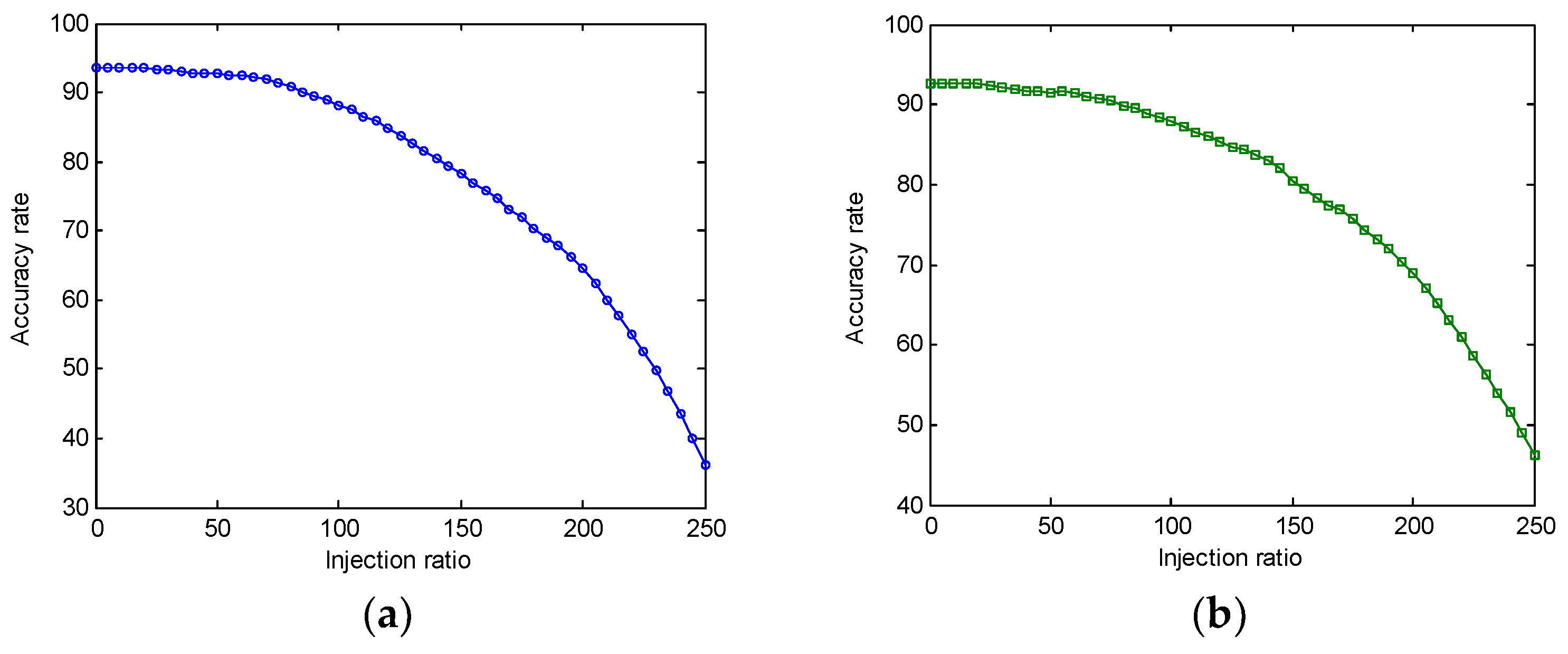

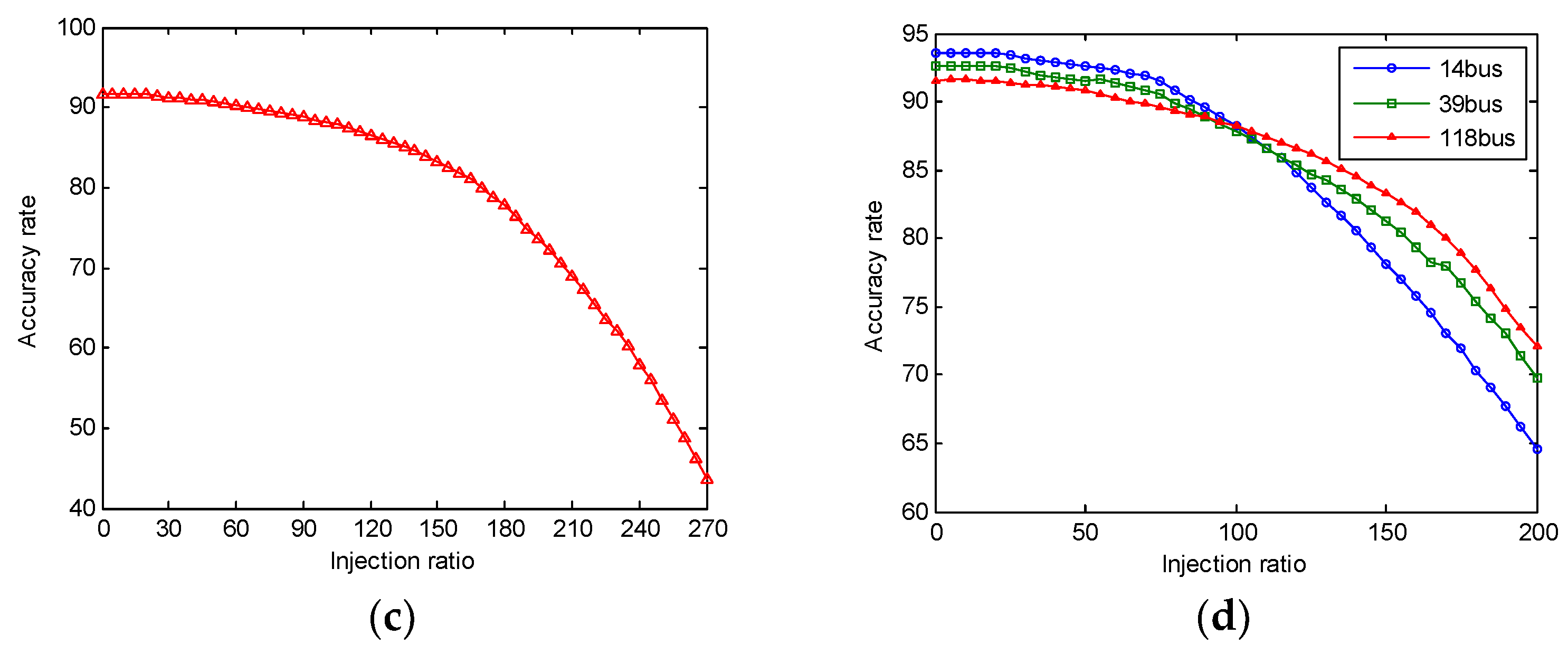

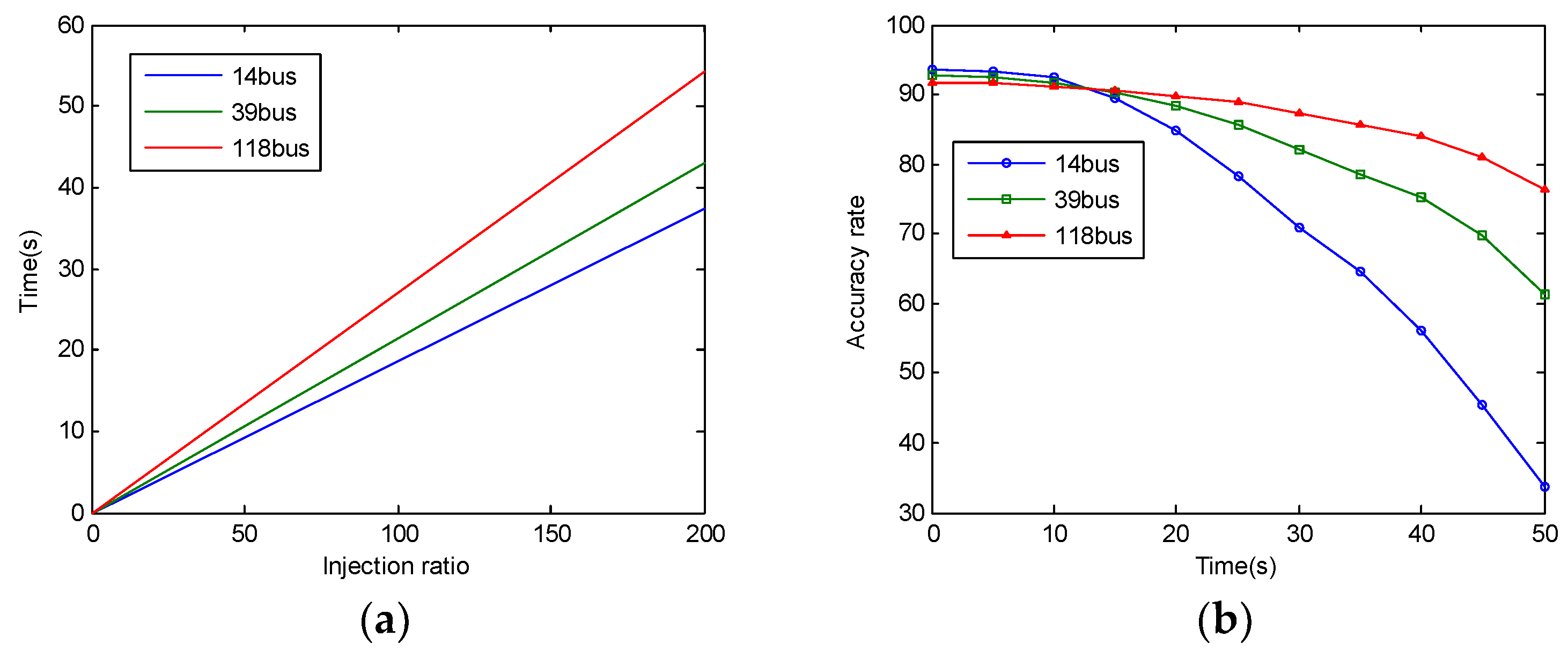

- We investigate two data injection modes, centralized injection and identically distributed injection, and analyze the impact of each mode on the training set and how effectively each can be detected.
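The hyperplane migration described in the contributions above can be illustrated with a minimal toy sketch. It is not the authors' implementation. It assumes a one-dimensional, linearly separable feature, where the maximum-margin boundary reduces to the midpoint between the closest positive and negative samples, and it assumes the detector adds each sample it classifies to its own training set and then retrains. The function names (`fit_threshold`, `classify`, `migrate`), the parameter `eps`, and all data values are hypothetical.

```python
# Toy 1-D illustration of hyperplane migration (hypothetical names and data).
# Assumption: positive samples (+1) lie below negative samples (-1).


def fit_threshold(samples):
    """1-D hard-margin SVM: the boundary is the midpoint between the
    largest positive sample and the smallest negative sample."""
    max_pos = max(x for x, y in samples if y == +1)
    min_neg = min(x for x, y in samples if y == -1)
    if max_pos >= min_neg:
        raise ValueError("classes must be separable, positives below negatives")
    return (max_pos + min_neg) / 2.0


def classify(x, threshold):
    """Label a sample +1 if it falls on the positive side of the boundary."""
    return +1 if x < threshold else -1


def migrate(samples, rounds, eps=0.01):
    """Simulate an attacker who, in each round, injects one sample just
    inside the positive side of the current boundary. The detector labels
    it +1, adds it to the training set, and retrains (assumed update rule).
    Returns the boundary after each retraining, starting with the original."""
    training = list(samples)  # copy so the caller's data is not modified
    history = [fit_threshold(training)]
    for _ in range(rounds):
        injected = history[-1] - eps
        label = classify(injected, history[-1])  # the detector's own label
        training.append((injected, label))
        history.append(fit_threshold(training))
    return history


if __name__ == "__main__":
    data = [(0.0, +1), (1.0, +1), (2.0, +1), (8.0, -1), (9.0, -1), (10.0, -1)]
    boundaries = migrate(data, rounds=3)
    print("boundary per round:", boundaries)
    print("label of x=7.0 before:", classify(7.0, boundaries[0]))
    print("label of x=7.0 after: ", classify(7.0, boundaries[-1]))
```

In this sketch each injected sample becomes the new closest positive sample, so every retraining moves the boundary toward the negative class. A sample that was initially labeled negative can end up on the positive side without any negative training sample being altered.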

2. Preliminary

2.1. System Model

2.2. False Data Injection Attack

2.3. Detector Based on SVM

3. Attack and Detection Model

3.1. The Vulnerability Analysis of the Detector Based on SVM

3.2. Attack Method for the Detector Based on SVM

3.3. The Limitation of the Detector Based on SVM

3.4. The Detection Method

4. Numerical Results

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, B.; Zhu, P.; Chen, Y.; Xun, P.; Zhang, Z. False Data Injection Attack Based on Hyperplane Migration of Support Vector Machine in Transmission Network of the Smart Grid. Symmetry 2018, 10, 165. https://doi.org/10.3390/sym10050165
