Demographic-Assisted Age-Invariant Face Recognition and Retrieval

Abstract

1. Introduction

- (1) Does demographic-estimation-based re-ranking improve face identification and retrieval performance across aging variations?

- (2) What is the individual role of gender, race, and age features in improving face recognition and retrieval performance?

- (3) Do deeply learned asymmetric features perform better or worse than existing handcrafted features?

2. Related Works

2.1. Demographic Estimation

2.2. Recognition and Retrieval of Face Images across Aging Variations

2.3. Recognition of Face Images Based on Demographic Estimates

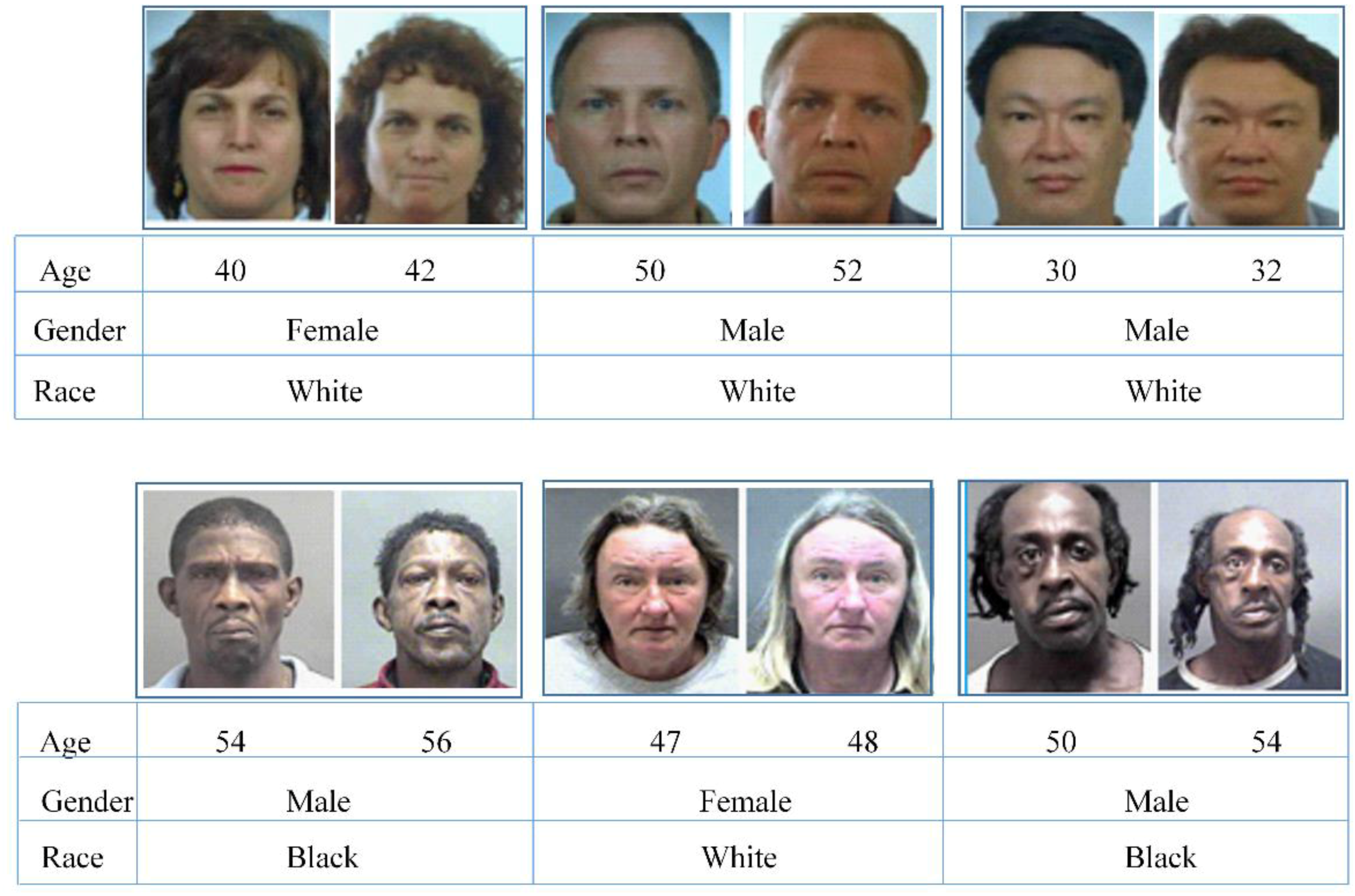

3. Demographic Estimation

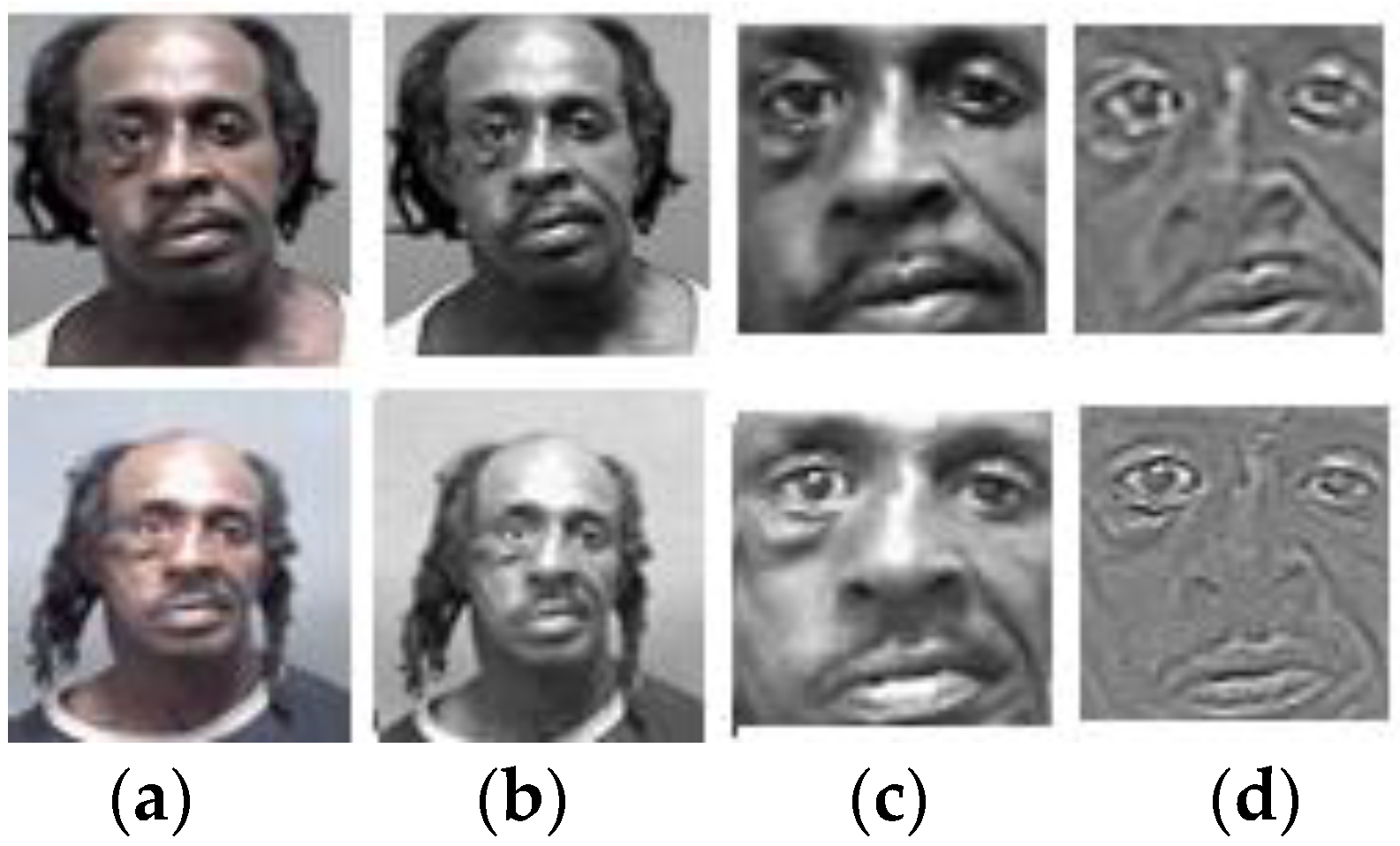

3.1. Preprocessing

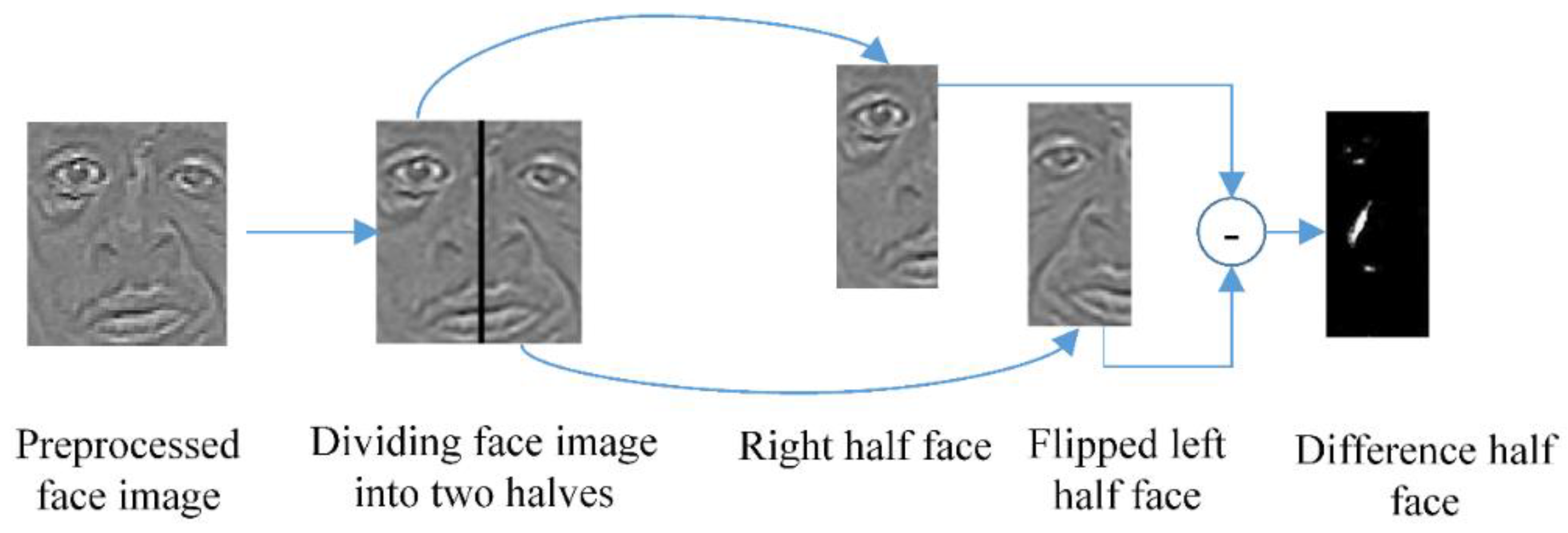

3.2. Demographic Features Extraction

3.3. Experimental Results of Demographic Estimation

3.3.1. Results on MORPH II Dataset

3.3.2. Results on FERET Dataset

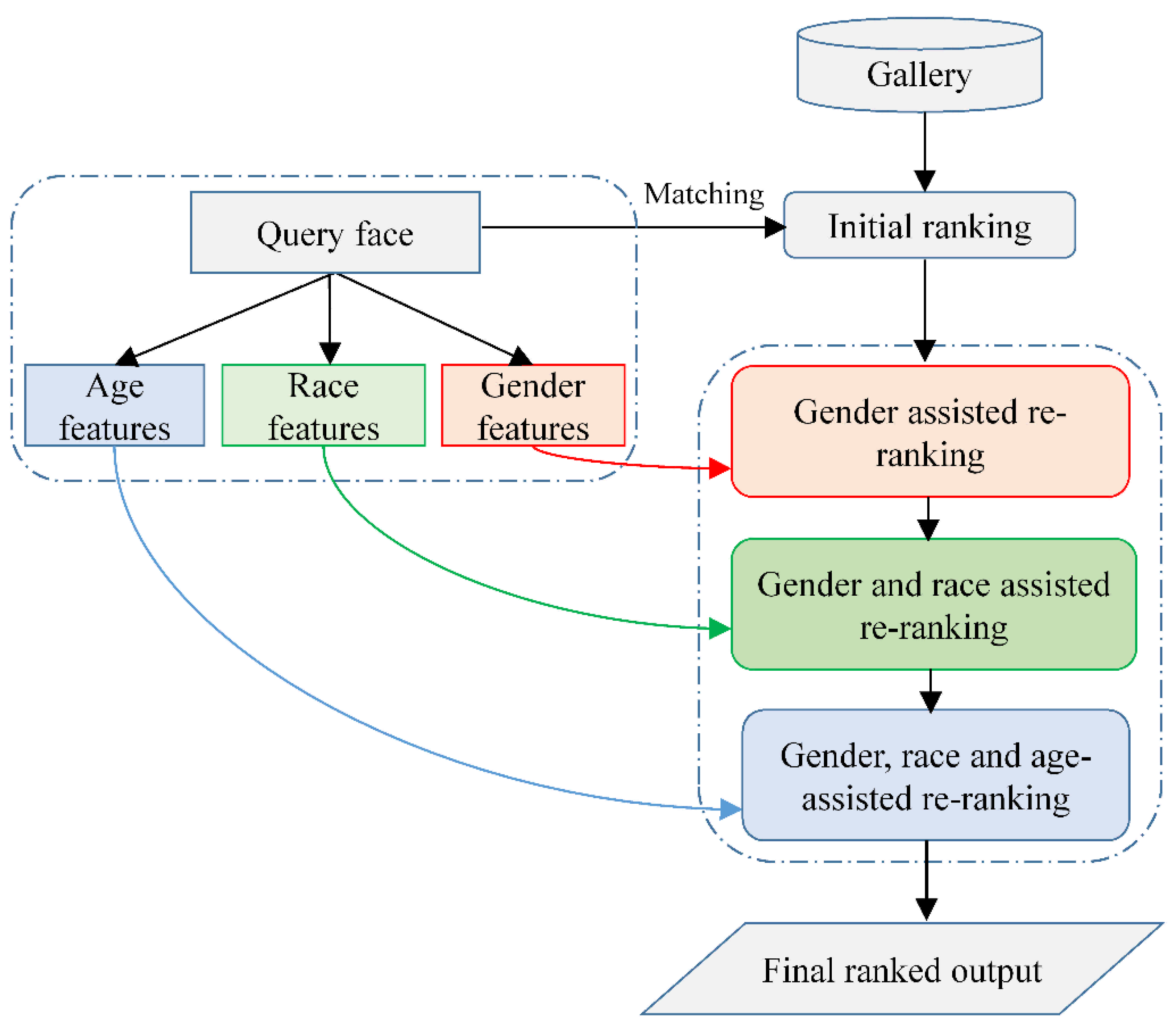

4. Recognition and Retrieval of Face Images across Aging Variations

- (i) A query face image is first matched against the gallery using deep CNN (dCNN) features, which we extracted with VGGNet [51]. Specifically, we used the VGG-16 variant, which has 16 layers with learnable weights: 13 convolutional and 3 fully connected. VGGNet uses filters of size 3 × 3; stacking two 3 × 3 filters gives an effective receptive field of 5 × 5, emulating a larger filter while retaining the benefits of smaller ones. We selected VGGNet because it performs well on this task with a relatively simple architecture. The matching stage returns the top k gallery matches for the query face image.

- (ii) Demographic features (age, gender, and race) are extracted using three CNNs: A, G, and R.

- (iii) The top k matched face images are then re-ranked using gender, race, and age features, as shown in Figure 5.
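The top k matching in step (i) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the text does not state which similarity measure is used, so cosine similarity is an assumption, and `cosine_similarity`, `top_k_matches`, and the toy three-dimensional vectors are hypothetical stand-ins for real VGG-16 descriptors.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k_matches(query_feat, gallery_feats, k):
    """Return the k most similar gallery entries as (gallery_id, score), best first."""
    scored = [
        (gallery_id, cosine_similarity(query_feat, feat))
        for gallery_id, feat in gallery_feats.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy gallery: short vectors stand in for dCNN descriptors (assumption).
gallery = {
    "g1": [1.0, 0.0, 0.0],
    "g2": [0.9, 0.1, 0.0],
    "g3": [0.0, 1.0, 0.0],
}
query = [1.0, 0.05, 0.0]

matches = top_k_matches(query, gallery, k=2)
print([gallery_id for gallery_id, _ in matches])
```

Only the k returned candidates are passed on to the re-ranking stage, so the demographic features never have to be compared against the full gallery.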

- (i) Re-ranking by gender features: The top k matched face images are re-ranked using the gender-specific features of the query face image, refining the initial matches. The result is the gender re-ranked top k matches.

- (ii) Re-ranking by race features: The gender re-ranked face images are re-ranked again using the race-specific features of the query face image, refining the output of the first step. The result is the gender–race re-ranked top k matches.

- (iii) Re-ranking by age features: Finally, the gender–race re-ranked face images are re-ranked using the age-specific features of the query face image. The result is the gender–race–age re-ranked top k matches, which form the final ranked output, as shown in Figure 5.
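The three re-ranking steps above can be read as a cascade, sketched below under stated assumptions. The source re-ranks with gender-, race-, and age-specific features; this sketch simplifies that to discrete estimated labels and, at each stage, promotes candidates that agree with the query on every attribute considered so far while preserving the previous order otherwise. The function names, dictionary keys, and toy candidates are hypothetical.

```python
def rerank_stage(candidates, query_attrs, attributes_so_far):
    """Stable re-ranking: candidates matching the query on all attributes
    considered so far move ahead; relative order is otherwise preserved."""
    def mismatch(candidate):
        return any(candidate[a] != query_attrs[a] for a in attributes_so_far)

    # sorted() is stable, and False sorts before True, so full matches come first.
    return sorted(candidates, key=mismatch)


def demographic_rerank(candidates, query_attrs,
                       order=("gender", "race", "age_group")):
    """Apply gender, then gender-race, then gender-race-age re-ranking."""
    ranked = list(candidates)
    for stage in range(1, len(order) + 1):
        ranked = rerank_stage(ranked, query_attrs, order[:stage])
    return ranked


# Top-k candidates in their initial dCNN rank order (toy labels, assumption).
top_k = [
    {"id": "c1", "gender": "female", "race": "white", "age_group": "21-30"},
    {"id": "c2", "gender": "male", "race": "white", "age_group": "31-45"},
    {"id": "c3", "gender": "male", "race": "other", "age_group": "21-30"},
    {"id": "c4", "gender": "male", "race": "white", "age_group": "21-30"},
]
query = {"gender": "male", "race": "white", "age_group": "21-30"}

final = demographic_rerank(top_k, query)
print([c["id"] for c in final])
```

In this toy run the candidate that agrees with the query on all three attributes ends up first, followed by the gender–race match, the gender-only match, and finally the candidate of a different gender.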

4.1. Evaluation

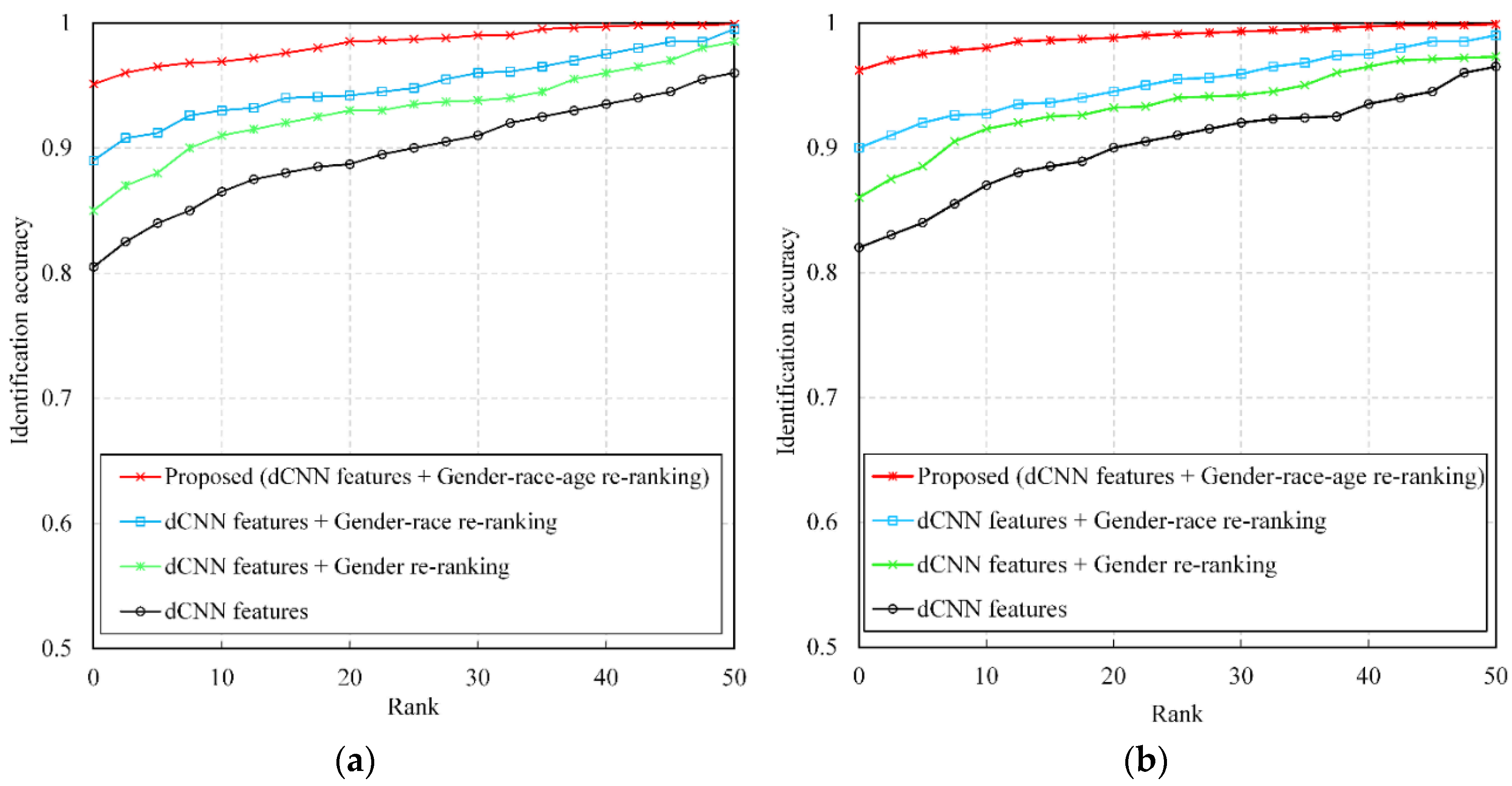

4.1.1. Face Recognition Experiments

4.1.2. Comparison of Face Recognition Results with State-Of-The-Art

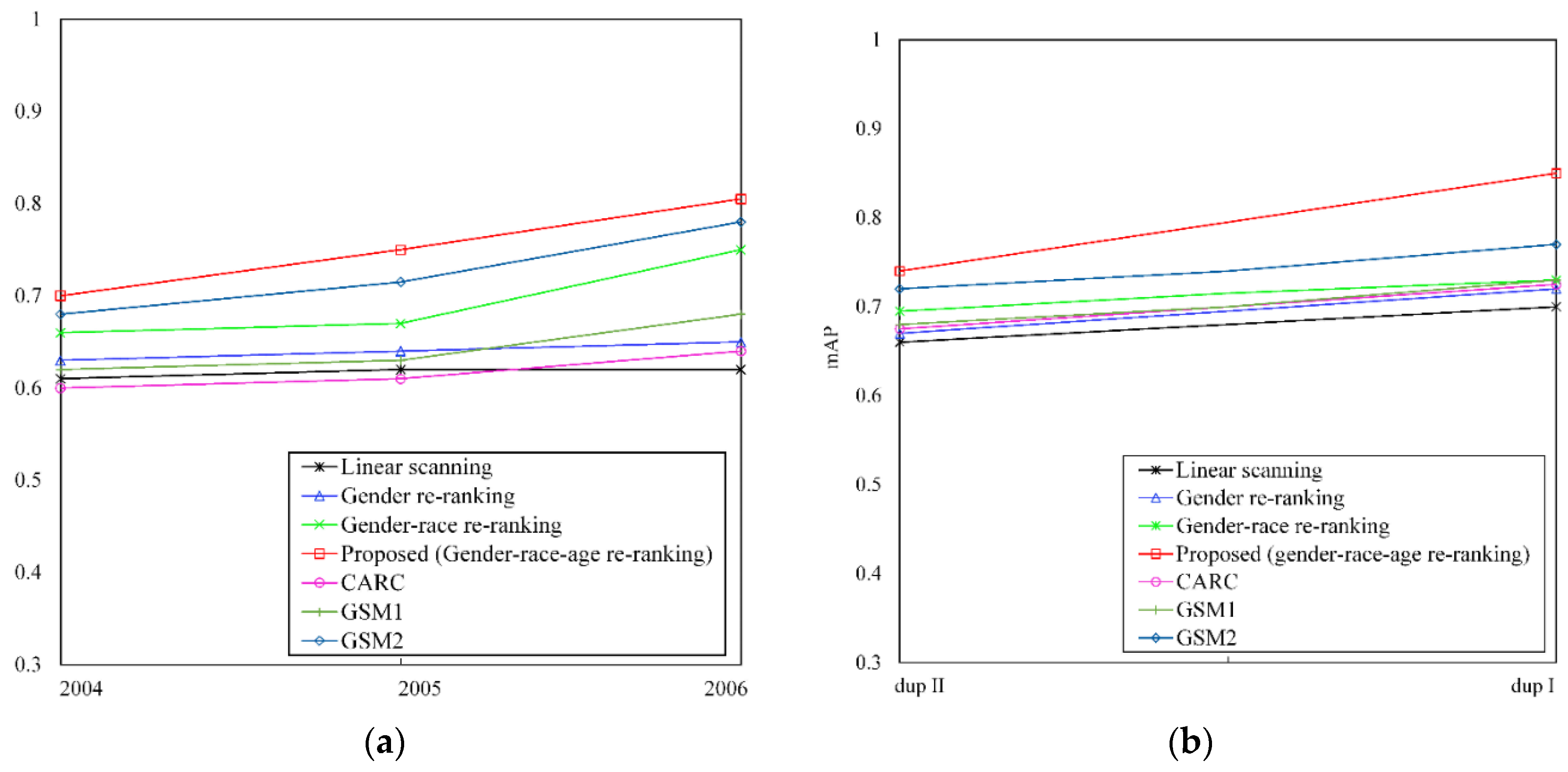

4.1.3. Face Retrieval Experiments

4.1.4. Comparison of Face Retrieval Results with State-Of-The-Art

5. Results Related Discussion

- (i) We have addressed the more complicated task of demographic-assisted face recognition and retrieval, whereas existing methods consider either demographic estimation or face recognition alone.

- (ii)

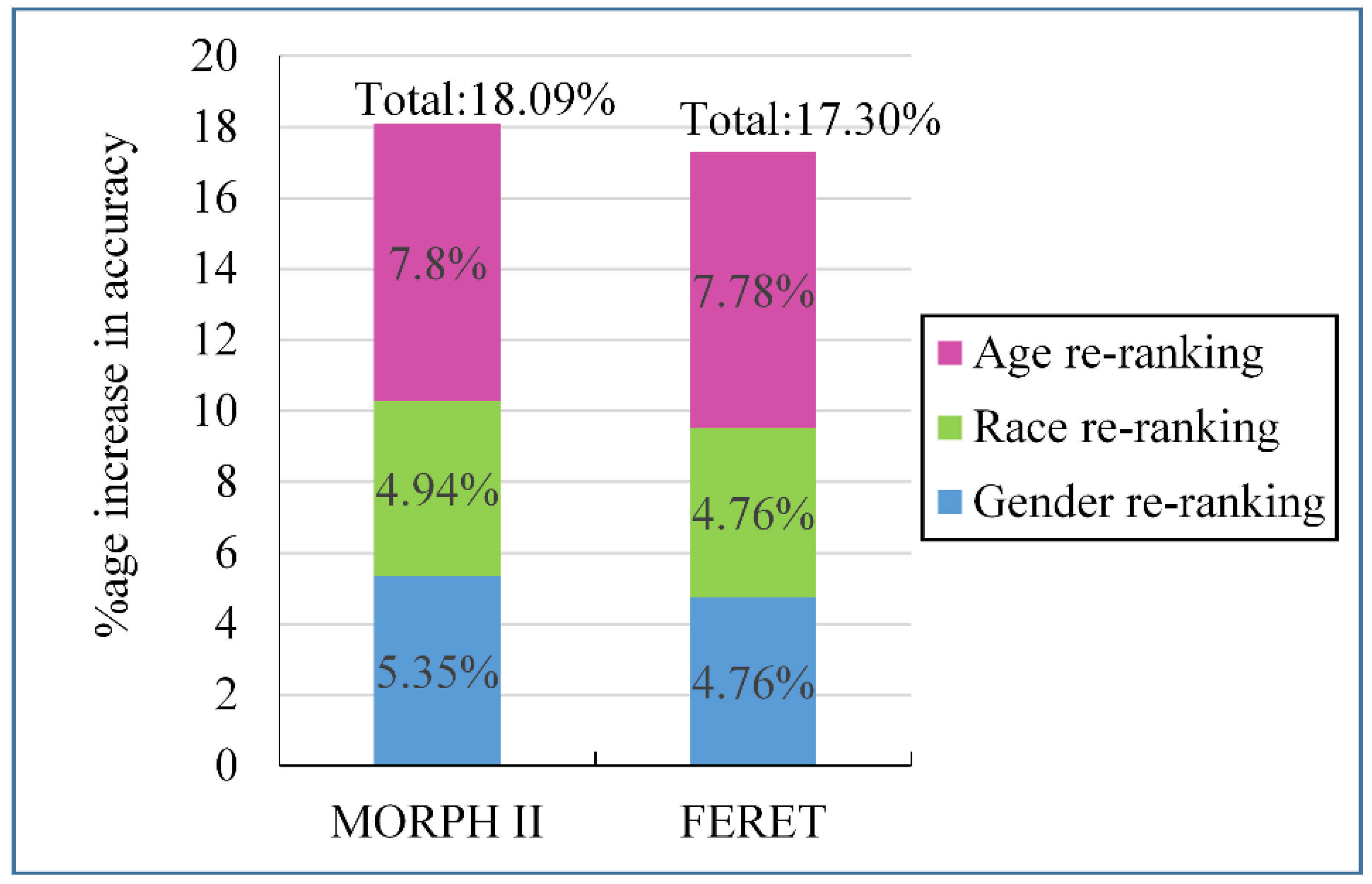

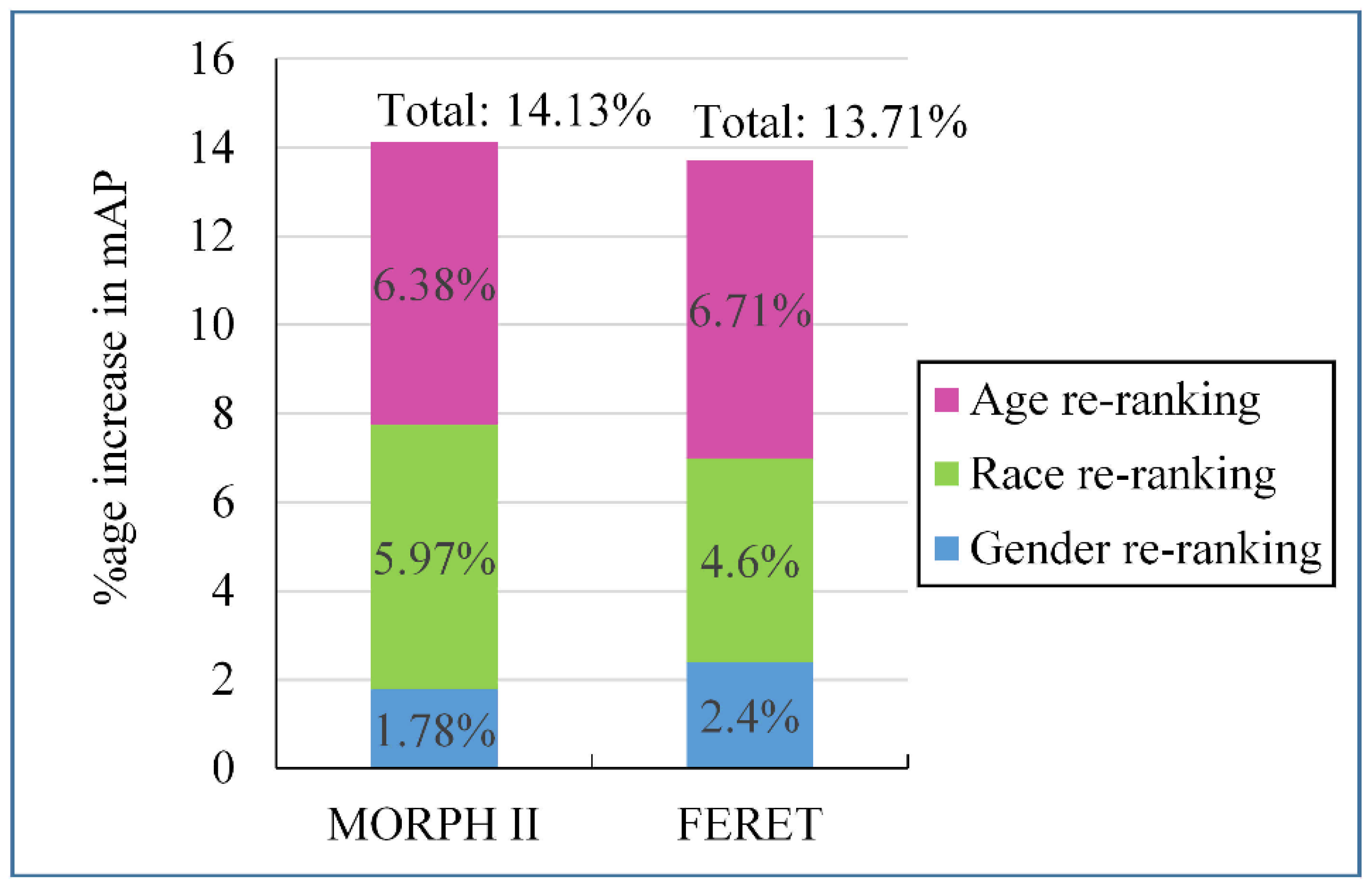

- The existing methods to age-invariant face recognition and retrieval lack the analysis of impact of demographic features on face recognition and retrieval performance. In contrast, the current study is the first of its kind analyzing the impact of demographic information on face recognition and retrieval performance. In Figure 9 and Figure 10, we illustrate the impact of gender-, race-, and aging-features-based re-ranking on recognition and retrieval accuracies, respectively. One can observe that aging features have the most significant impact on both the identification accuracy and mAP compared to the race and gender features for MORPH II and FERET datasets. This is because aging features are heterogeneous in nature, i.e., more person-specific [36] thus helpful in recognizing face images across aging. In contrast, race and gender features are more population-specific and less person specific, as suggested in [3,4].

- (iii) The comparative results for the face recognition and retrieval tasks suggest that demographic re-ranking improves recognition accuracy. In all presented experiments on the MORPH II and FERET datasets, gender–race–age re-ranking yields higher accuracies than gender re-ranking or gender–race re-ranking. This follows from the cascade. Face images are first re-ranked based on gender features, so the query face image is matched with face images of a specific gender only. The gender re-ranked face images are then re-ranked based on race features, so the query is matched with face images of a specific gender and race. Finally, the gender–race re-ranked face images are re-ranked based on age features, so the query is matched with face images of a specific gender, race, and age group, yielding the highest recognition accuracies.

- (iv) The retrieval results suggest that demographic-assisted re-ranking yields higher mAPs than the linear scan approach, in which a query face is matched against the entire gallery. In contrast, the proposed gender–race–age re-ranking matches a query against face images of a specific gender, race, and age group. For example, the linear scan approach gives an mAP of 61.00% for the MORPH II query set acquired in 2004 (see Figure 8a), compared with an mAP of 70.00% obtained using the proposed approach for the same query set.

- (v) Motivated by the fact that facial asymmetry is a strong indicator of age group, gender, and race [7,8,9], we used demographic estimates to enhance recognition and retrieval performance. The demographic-assisted face recognition and retrieval accuracies are benchmarked against the accuracies obtained from a dCNN model. The demographic-assisted accuracies are better than the benchmark results, suggesting the effectiveness of the proposed approach. To the best of our knowledge, the current work is the first of its kind to combine demographic features with a face recognition and retrieval algorithm to achieve better accuracies.

- (vi) Through extensive experiments on the MORPH II and FERET datasets, we have demonstrated the effectiveness of our approach against existing methods including CARC [45], GSM1 [52], and GSM2 [52]. The superior performance of our approach can be attributed to the deeply learned asymmetric facial features and the demographic re-ranking strategy used to recognize and retrieve face images across aging variations. In contrast, the most closely related competitors, CARC, GSM1, and GSM2, consider only aging information.

- (vii) The superior performance of our method compared with the discriminative approach suggested in [10] can be attributed to two key factors. First, the proposed approach employs deeply learned face features, in contrast to the handcrafted features used in [10]. Second, the proposed approach uses gender and race features in addition to aging features. The demographic-assisted face recognition also surpasses the face identification results of the age-assisted face recognition presented in [38], suggesting that gender and race features add discriminative power to aging features when recognizing face images with temporal variations.

- (viii) Face identification and retrieval results suggest that the proposed approach is well suited for age-invariant face recognition, owing to the discriminative power of population-specific gender and race features.

- (ix) To analyze the errors introduced by demographic estimation in the proposed face recognition and retrieval approach, we also report the rank-1 identification accuracies and mAPs for the scenario in which the actual (ground-truth) age group, gender, and race information is used (see row (ix) of Table 4). The small difference between the accuracies obtained with actual and estimated demographic information (96.53% vs. 95.10% rank-1 accuracy on MORPH II and 97.01% vs. 96.21% on FERET) suggests the efficacy of the proposed demographic-estimation-based approach.
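The mAP figures quoted in the discussion above can be made concrete with a small sketch. It assumes the standard definition of average precision (precision averaged over the ranks at which relevant gallery images appear, normalized by the number of relevant images); the source does not spell out its exact formula, and the function names and toy rankings are hypothetical.

```python
def average_precision(ranked_ids, relevant_ids):
    """Average precision of one ranked result list for one query."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, gallery_id in enumerate(ranked_ids, start=1):
        if gallery_id in relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / len(relevant)


def mean_average_precision(results):
    """Mean of the per-query average precisions.

    `results` is a list of (ranked_ids, relevant_ids) pairs, one per query.
    """
    return sum(average_precision(r, rel) for r, rel in results) / len(results)


# Toy query with two relevant gallery images, "a" and "b" (assumption).
relevant = {"a", "b"}
before = ["a", "x", "b"]  # a non-matching image sits between the two hits
after = ["a", "b", "x"]   # re-ranking has pushed the non-matching image down

ap_before = average_precision(before, relevant)
ap_after = average_precision(after, relevant)
print(round(ap_before, 4), round(ap_after, 4))
```

Moving a non-matching image below the relevant ones raises the average precision for that query, which is the mechanism by which re-ranking can lift mAP over a plain similarity ordering.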

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

1. Mayes, E.; Murray, P.G.; Gunn, D.A.; Tomlin, C.C.; Catt, S.D.; Wen, Y.B.; Zhou, L.P.; Wang, H.Q.; Catt, M.; Granger, S.P. Environmental and lifestyle factors associated with perceived age in Chinese women. PLoS ONE 2010, 5, e15273.

2. Balle, D.S. Anatomy of Facial Aging. Available online: https://drballe.com/conditions-treatment/anatomy-of-facial-aging-2 (accessed on 28 January 2017).

3. Zhuang, Z.; Landsittel, D.; Benson, S.; Roberge, R. Facial anthropometric differences among gender, ethnicity, and age groups. Ann. Occup. Hyg. 2010, 54, 391–402.

4. Farkas, L.G.; Katic, M.J.; Forrest, C.R. International anthropometric study of facial morphology in various ethnic groups/races. J. Craniofac. Surg. 2005, 16, 615–646.

5. Ramanathan, N.; Chellappa, R.; Biswas, S. Computational methods for modeling facial aging: A survey. J. Vis. Lang. Comput. 2009, 20, 131–144.

6. Bruce, V.; Burton, A.M.; Hanna, E.; Healey, P.; Mason, O.; Coombs, A. Sex discrimination: How do we tell the difference between male and female faces? Perception 1993, 22, 131–152.

7. Little, A.C.; Jones, B.C.; Waitt, C.; Tiddem, B.P.; Feinberg, D.R.; Perrett, D.I.; Apicella, C.L.; Marlowe, F.W. Symmetry is related to sexual dimorphism in faces: Data across culture and species. PLoS ONE 2008, 3.

8. Steven, W.; Randy, T. Facial masculinity and fluctuating asymmetry. Evol. Hum. Behav. 2003, 24, 231–241.

9. Morrison, C.S.; Phillips, B.Z.; Chang, J.T.; Sullivan, S.R. The Relationship between Age and Facial Asymmetry. 2011. Available online: http://meeting.nesps.org/2011/80.cgi (accessed on 28 April 2017).

10. Sajid, M.; Taj, I.A.; Bajwa, U.I.; Ratyal, N.I. The role of facial asymmetry in recognizing age-separated face images. Comput. Electr. Eng. 2016, 54, 255–270.

11. Fu, Y.; Huang, T.S. Human age estimation with regression on discriminative aging manifold. IEEE Trans. Multimed. 2008, 10, 578–584.

12. Lu, K.; Seshadri, K.; Savvides, M.; Bu, T.; Suen, C. Contourlet Appearance Model for Facial Age Estimation. 2011. Available online: https://pdfs.semanticscholar.org/bc82/a5bfc6e5e8fd77e77e0ffaadedb1c48d6ae4.pdf (accessed on 28 April 2017).

13. Bekios-Calfa, J.; Buenaposada, J.M.; Baumela, L. Revisiting linear discriminant techniques in gender recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 858–864.

14. Wu, T.; Turaga, P.; Chellappa, R. Age estimation and face verification across aging using landmarks. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1780–1788.

15. Hadid, A.; Pietikanen, M. Demographic classification from face videos using manifold learning. Neurocomputing 2013, 100, 197–205.

16. Guo, G.; Mu, G. Joint estimation of age, gender and ethnicity: CCA vs. PLS. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013.

17. Tapia, J.E.; Perez, C.A. Gender classification based on fusion of different spatial scale features selected by mutual information from histogram of LBP, intensity, and shape. IEEE Trans. Inf. Forensics Secur. 2013, 8, 488–499.

18. Choi, S.E.; Lee, Y.J.; Lee, S.J.; Park, K.R.; Kim, J.K. Age estimation using a hierarchical classifier based on global and local facial features. Pattern Recognit. 2011, 44, 1262–1281.

19. Geng, X.; Yin, C.; Zhou, Z.-H. Facial age estimation by learning from label distributions. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 35, 2401–2412.

20. Han, H.; Otto, C.; Liu, X.; Jain, A.K. Demographic estimation from face images: Human vs. machine performance. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1148–1161.

21. Hu, Z.; Wen, Y.; Wang, J.; Wang, M.; Hong, R.; Yan, S. Facial age estimation with age difference. IEEE Trans. Image Process. 2017, 26, 3087–3097.

22. Jadid, M.A.; Sheij, O.S. Facial age estimation under the terms of local latency using weighted local binary pattern and multi-layer perceptron. In Proceedings of the 4th International Conference on Control, Instrumentation, and Automation (ICCIA), Qazvin, Iran, 27–28 January 2016.

23. Liu, K.-H.; Yan, S.; Kuo, C.-C.J. Age estimation via grouping and decision fusion. IEEE Trans. Inf. Forensics Secur. 2015, 10, 2408–2423.

24. Geng, X.; Zhou, Z.; Smith-Miles, K. Automatic age estimation based on facial aging patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 2234–2240.

25. Ling, H.; Soatto, S.; Ramanathan, N.; Jacobs, D. Face verification across age progression using discriminative methods. IEEE Trans. Inf. Forensics Secur. 2010, 5, 82–91.

26. Park, U.; Tong, Y.; Jain, A.K. Age-invariant face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 947–954.

27. Li, Z.; Park, U.; Jain, A.K. A discriminative model for age-invariant face recognition. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1028–1037.

28. Yadav, D.; Vatsa, M.; Singh, R.; Tistarelli, M. Bacteria foraging fusion for face recognition across age progression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013.

29. Sungatullina, D.; Lu, J.; Wang, G.; Moulin, P. Discriminative Learning for Age-Invariant Face Recognition. In Proceedings of the IEEE International Conference and Workshops on Face and Gesture Recognition, Shanghai, China, 22–26 April 2013.

30. Ramanathan, N.; Chellappa, R. Modeling age progression in young faces. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 387–394.

31. Deb, D.; Best-Rowden, L.; Jain, A.K. Face recognition performance under aging. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017.

32. Machado, C.E.P.; Flores, M.R.P.; Lima, L.N.C.; Tinoco, R.L.R.; Franco, A.; Bezerra, A.C.B.; Evison, M.P.; Aure, M. A new approach for the analysis of facial growth and age estimation: Iris ratio. PLoS ONE 2017, 12.

33. Xu, C.; Liu, Q.; Ye, M. Age invariant face recognition and retrieval by coupled auto-encoder networks. Neurocomputing 2017, 222, 62–71.

34. Park, U.; Tong, Y.; Jain, A.K. Face recognition with temporal invariance: A 3D aging model. In Proceedings of the 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008.

35. Yadav, D.; Singh, R.; Vatsa, M.; Noore, A. Recognizing age-separated face images: Humans and machines. PLoS ONE 2014, 9.

36. Best-Rowden, L.L.; Jain, A.K. Longitudinal study of automatic face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 148–162.

37. Cheong, Y.W.; Lo, L.J. Facial asymmetry: Etiology, evaluation and management. Chang Gung Med. J. 2011, 34, 341–351.

38. Sajid, M.; Taj, I.A.; Bajwa, U.I.; Ratyal, N.I. Facial asymmetry-based age group estimation: Role in recognizing age-separated face images. J. Forensic Sci. 2018.

39. Lee, K.W.; Hong, H.G.; Park, K.R. Fuzzy system-based fear estimation based on symmetrical characteristics of face and facial feature points. Symmetry 2017, 9, 102.

40. Zhai, H.; Liu, C.; Dong, H.; Ji, Y.; Guo, Y.; Gong, S. Face verification across aging based on deep convolutional networks and local binary patterns. In International Conference on Intelligent Science and Big Data Engineering; Springer: Cham, Switzerland, 2015.

41. Wen, Y.; Li, Z.; Qiao, Y. Age invariant deep face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

42. El Khiyari, H.; Wechsler, H. Face recognition across time lapse using convolutional neural networks. J. Inf. Secur. 2016, 7, 141–151.

43. Liu, L.; Xiong, C.; Zhang, H.; Niu, Z.; Wang, M.; Yan, S. Deep aging face verification with large gaps. IEEE Trans. Multimed. 2016, 18, 64–75.

44. Lu, J.; Liong, V.E.; Wang, G.; Moulin, P. Joint feature learning for face recognition. IEEE Trans. Inf. Forensics Secur. 2015, 10, 1371–1383.

45. Chen, B.-C.; Chen, C.-S.; Hsu, W.H. Face recognition and retrieval using cross-age reference coding with cross-age celebrity dataset. IEEE Trans. Multimed. 2015, 17, 804–815.

46. Chen, B.-C.; Chen, C.-S.; Hsu, W.H. Cross-Age Reference Coding for Age-Invariant Face Recognition and Retrieval. 2014. Available online: http://bcsiriuschen.github.io/CARC/ (accessed on 28 January 2017).

47. Ricanek, K.; Tesafaye, T. MORPH: A longitudinal image database of normal adult age-progression. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006.

48. FERET Database. Available online: http://www.itl.nist.gov/iad/humanid/feret (accessed on 15 September 2014).

49. Face++ API. Available online: http://www.faceplusplus.com (accessed on 30 January 2017).

50. Ha, H.; Shan, S.; Chen, X.; Gao, W. A comparative study on illumination preprocessing in face recognition. Pattern Recognit. 2013, 46, 1691–1699.

51. Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep face recognition. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015.

52. Lin, L.; Wang, G.; Zuo, W.; Xiangchu, F.; Zhang, L. Cross-domain visual matching via generalized similarity measure and feature learning. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1089–1102.

53. Wu, Z.; Ke, Q.; Sun, J.; Shum, H.-Y. Scalable face image retrieval with identity-based quantization and multireference reranking. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1991–2001.

54. Jegou, H.; Douze, M.; Schmid, C. Product quantization for nearest neighbor search. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 117–128.

| Attributes | MORPH II | FERET Fa set | FERET Dup I set | FERET Dup II set |
|---|---|---|---|---|
| # Subjects | 13,000+ | 1196 | 243 | 75 |
| # Images | 55,134 | 1196 | 722 | 234 |
| Average # images per subject | 4 | 1 | 2.97 | 3.12 |
| Temporal variations | Minimum: 1 day; maximum: 1681 days | N/A | Images were acquired later in time, with a maximum temporal variation of 1031 days relative to the corresponding gallery images | Images were acquired at least 1.5 years later than the corresponding gallery images |
| Age range | 16–77 | 10–60+ | 10–60+ | 10–60+ |
| Gender | Male, Female | Male, Female | Male, Female | Male, Female |
| Race | White, others | White, others | White, others | White, others |

(a) Actual vs. predicted age groups (%)

| Actual age group | Proposed: 16–20 | Proposed: 21–30 | Proposed: 31–45 | Proposed: 46–60+ | Face++: 16–20 | Face++: 21–30 | Face++: 31–45 | Face++: 46–60+ |
|---|---|---|---|---|---|---|---|---|
| 16–20 | 93.45 | 5.58 | 0.49 | 0.48 | 88.80 | 7.54 | 2.43 | 1.21 |
| 21–30 | 1.68 | 95.02 | 2.50 | 0.80 | 3.59 | 92.04 | 2.32 | 2.04 |
| 31–45 | 0.20 | 1.42 | 96.00 | 2.38 | 0.75 | 3.93 | 93.53 | 1.78 |
| 46–60+ | 2.53 | 1.00 | 2.91 | 93.56 | 3.20 | 5.28 | 9.28 | 82.24 |
| Overall accuracy | 94.50 ± 1.1 | | | | 89.15 ± 1.5 | | | |

(b) Actual vs. predicted gender (%)

| Actual gender | Proposed: Male | Proposed: Female | Face++: Male | Face++: Female |
|---|---|---|---|---|
| Male | 86.00 | 14.00 | 80.70 | 19.30 |
| Female | 21.23 | 78.77 | 25.09 | 74.91 |
| Overall accuracy | 82.35 ± 0.6 | | 77.80 ± 0.4 | |

(c) Actual vs. predicted race (%)

| Actual race | Proposed: White | Proposed: Others | Face++: White | Face++: Others |
|---|---|---|---|---|
| White | 89.61 | 10.39 | 84.00 | 16.00 |
| Others | 29.19 | 70.81 | 31.00 | 69.00 |
| Overall accuracy | 80.21 ± 1.18 | | 76.50 ± 1.2 | |

(a) Actual vs. predicted age groups (%)

| Actual age group | Proposed: 10–20 | Proposed: 21–30 | Proposed: 31–45 | Proposed: 46–60+ | Face++: 10–20 | Face++: 21–30 | Face++: 31–45 | Face++: 46–60+ |
|---|---|---|---|---|---|---|---|---|
| 10–20 | 86.00 | 6.01 | 4.93 | 3.06 | 83.33 | 8.54 | 6.40 | 1.73 |
| 21–30 | 3.50 | 85.23 | 6.00 | 5.27 | 3.84 | 83.84 | 12.32 | 0 |
| 31–45 | 0.29 | 6.00 | 81.70 | 12.01 | 3.57 | 3.57 | 80.94 | 11.90 |
| 46–60+ | 0.20 | 5.20 | 12.00 | 82.60 | 0 | 8.67 | 13.33 | 78.00 |
| Overall accuracy | 83.88 ± 1.00 | | | | 81.52 ± 1.1 | | | |

(b) Actual vs. predicted gender (%)

| Actual gender | Proposed: Male | Proposed: Female | Face++: Male | Face++: Female |
|---|---|---|---|---|
| Male | 83.00 | 17.00 | 78.94 | 21.06 |
| Female | 17.56 | 82.44 | 26.39 | 73.61 |
| Overall accuracy | 82.72 ± 0.9 | | 76.28 ± 0.5 | |

(c) Actual vs. predicted race (%)

| Actual race | Proposed: White | Proposed: Others | Face++: White | Face++: Others |
|---|---|---|---|---|
| White | 85.61 | 14.39 | 82.00 | 18.00 |
| Others | 26.00 | 74.00 | 32.88 | 67.12 |
| Overall accuracy | 79.80 ± 0.9 | | 74.56 ± 0.9 | |

| Approach | Rank-1 recognition accuracy (%), MORPH II | Rank-1 recognition accuracy (%), FERET |
|---|---|---|
| (i) CARC [45] | 84.11 | 85.98 |
| (ii) GSM1 [52] | 83.33 | 85.00 |
| (iii) GSM2 [52] | 93.73 | 94.23 |
| (iv) Score-space-based fusion [10] | 72.40 | 66.66 |
| (v) Age-assisted face recognition [38] | 85.00 | 78.60 |
| (vi) dCNN features | 80.50 | 82.00 |
| (vii) dCNN features + gender re-ranking | 84.81 | 85.91 |
| (viii) dCNN features + gender–race re-ranking | 89.00 | 90.00 |
| (ix) Proposed approach with demographic re-ranking (actual age groups, gender, and race) | 96.53 | 97.01 |
| (x) Proposed approach with demographic re-ranking (estimated age groups, gender, and race) | 95.10 | 96.21 |
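The contribution of each re-ranking stage can be read off rows (vi) to (x) of the table above with simple arithmetic. The sketch below only subtracts the reported rank-1 accuracies; the dictionary keys and function names are hypothetical, and no new measurements are introduced.

```python
# Rank-1 accuracies (%) copied from rows (vi)-(x) of the table above.
rank1 = {
    "MORPH II": {
        "dcnn": 80.50, "gender": 84.81, "gender_race": 89.00,
        "full_actual": 96.53, "full_estimated": 95.10,
    },
    "FERET": {
        "dcnn": 82.00, "gender": 85.91, "gender_race": 90.00,
        "full_actual": 97.01, "full_estimated": 96.21,
    },
}

# Order in which the re-ranking stages are applied (estimated demographics).
STAGES = ["dcnn", "gender", "gender_race", "full_estimated"]


def stage_gains(acc):
    """Gain in percentage points contributed by each successive stage."""
    return [round(acc[b] - acc[a], 2) for a, b in zip(STAGES, STAGES[1:])]


def estimation_gap(acc):
    """Accuracy lost by using estimated rather than ground-truth demographics."""
    return round(acc["full_actual"] - acc["full_estimated"], 2)


for dataset, acc in rank1.items():
    print(dataset, stage_gains(acc), estimation_gap(acc))
```

With the reported values, the age stage contributes the largest single gain on both datasets (6.10 points on MORPH II and 6.21 on FERET), and the gap between ground-truth and estimated demographics is 1.43 points on MORPH II and 0.80 on FERET.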

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sajid, M.; Shafique, T.; Manzoor, S.; Iqbal, F.; Talal, H.; Samad Qureshi, U.; Riaz, I. Demographic-Assisted Age-Invariant Face Recognition and Retrieval. Symmetry 2018, 10, 148. https://doi.org/10.3390/sym10050148
