1. Introduction

Intelligent environments have now been envisioned for over 20 years. The original vision of ubiquitous computing (machines that fit the human environment instead of forcing humans to enter theirs), presented by Mark Weiser [1], has been complemented over the years. The concept of Ambient Intelligence (AmI) was proposed by ISTAG, the European Commission’s IST Advisory Group [2]. Ambient Intelligence emphasizes the human viewpoint: user-friendliness, efficient service support, user empowerment, and support for human interactions. In the scenarios presented in the ISTAG report [2], people were surrounded by intelligent intuitive interfaces embedded in the environment and in different objects. The environment was capable of recognizing and responding to the presence of people in a seamless, unobtrusive and often invisible way. The concept of a smart or intelligent environment emphasizes technical solutions such as pervasive or mobile computing, sensor networks, artificial intelligence, robotics, multimedia computing, middleware solutions, and agent-based software [3]. Those technical solutions acquire and apply knowledge about the environment and the people in it in order to improve people’s experience in that environment. Thus, intelligent environments target the same kinds of user experiences as does Ambient Intelligence. Aarts [4] saw a focus shift in AmI visions from productivity in business environments to a consumer- and user-centered design approach. A major challenge remaining is to understand and anticipate what people really want and to build solutions that really impact their lives [5]. Aarts and Grotenhuis [5] propose revisions of the original ideas of Ambient Intelligence, emphasizing the need for meaningful solutions that balance mind and body rather than driving people to maximum efficiency.

The development, concretization and adaptation of large-scale and truly intelligent environments have been slow, and intelligent environments are still rare in actual use. Nevertheless, intelligence is gradually entering our environment, especially with the development of mobile technologies, social media and new interaction tools. As a result of people’s growing expectations of new technology and the ongoing societal development towards an ‘experience economy’ [6], it has become increasingly important to understand the expectations that people have of intelligent environments. Users’ expectations reflect anticipated behavior, direct attention and interpretation [7], exist as a norm against which the actual experience is compared [8], and influence the user’s perceptions of the product [9], thus having an influence on forming the actual user experience and acceptance of the product. For example, Karapanos et al. [10] state that “often, anticipating our experiences with a product becomes even more important, emotional, and memorable than the experiences per se”. We argue that it is important to understand what people expect of intelligent environments in order to gain new insights into the design targets, limitations, needs and other considerations that should be taken into account when designing intelligent environments.

Since the first visions, several research and development activities have been pursued. Many of those activities have included user evaluations, resulting in empirical knowledge of potential users’ expectations of the different facets of intelligent environments. The design focus on human-technology interaction has been extended from mere usability or identifying human factors to embracing a rich user experience [11], hence emphasizing emotional and experiential aspects in addition to rational and instrumental ones. This has allowed the study of users’ expectations in a more comprehensive way, allowing identification of subjective meanings, long-term product attachment and emotional values such as pleasure and playfulness.

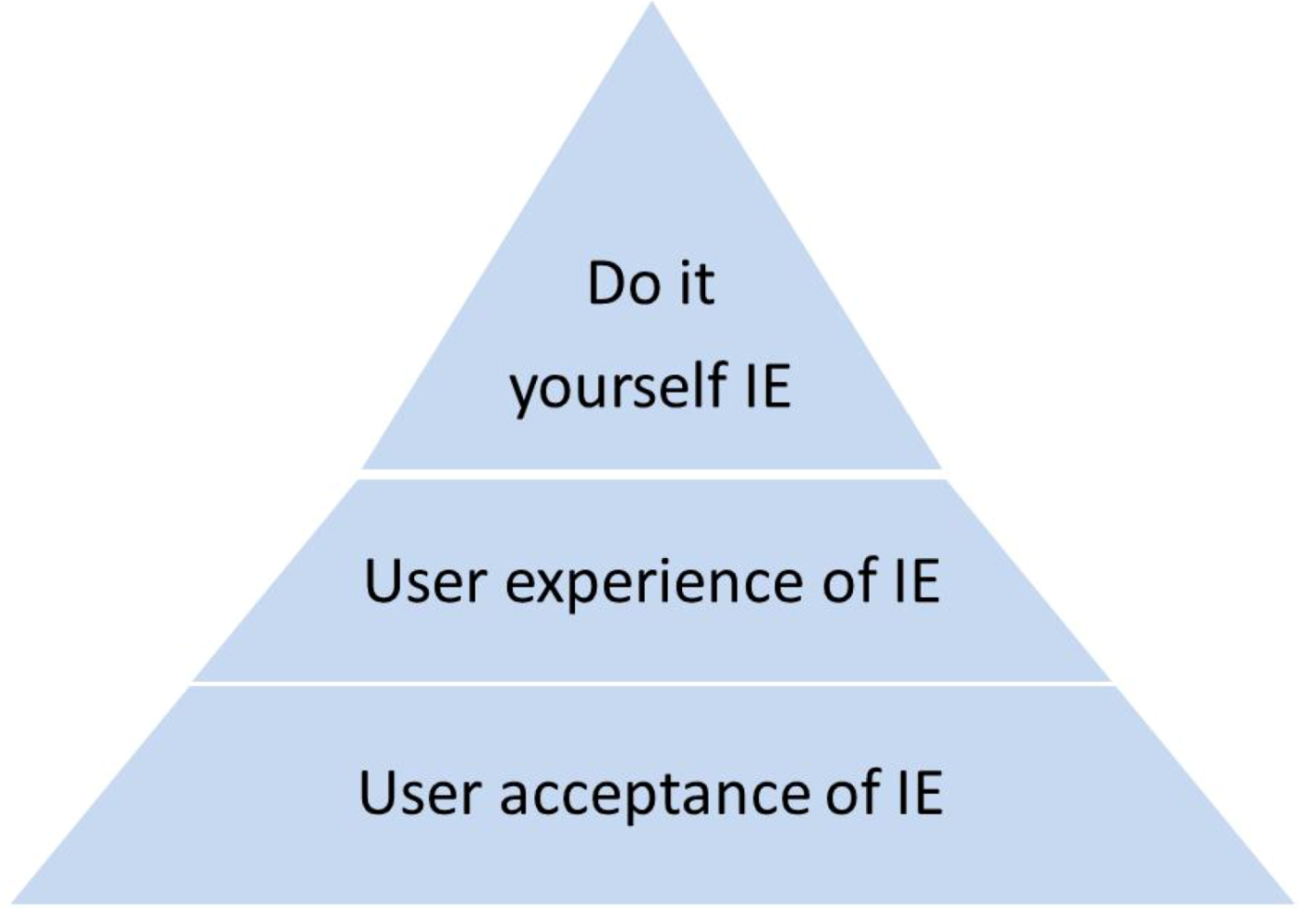

Figure 1. The viewpoints of user expectations of intelligent environments (IE) in the paper.


In this paper we deal with user expectations on three levels, as illustrated in Figure 1. Firstly, in Section 3 we discuss user acceptance of intelligent environments (IE): what do people value, what do they consider to be threats, and what other factors affect user acceptance? We review several user studies to analyze issues that affect the willingness of people to adopt intelligent environments. The second level is user experience when acting in an actual intelligent environment. Section 4 is devoted to understanding experiential aspects of the interaction. Intelligent environments may include different interaction technologies, but in this paper we focus on interaction tools that intertwine physical and virtual elements: tangible and embodied interaction techniques. These interaction tools are studied as examples of the kinds of user experience targets we can set for actual intelligent environments. The third, emerging level is the users’ own role as co-crafters of intelligent environments: users are not just using intelligent environments but are living in them, and at the same time developing the environments themselves. Intelligent environments should allow users to shape the environments gradually as the needs of the users change. In Section 5, we describe our approach to extending the users’ role from merely using the given intelligent environments to actively designing and building their own: do-it-yourself smart environments.

In Section 3, Section 4 and Section 5 of this paper, the review focus narrows from general issues related to all intelligent environments (user acceptance) to specific issues of specific interaction concepts (user experience) and finally to specific issues of individual environments (do-it-yourself (DIY) intelligent environments). The three viewpoints of our study can also be seen as representing different phases of the design process. Before starting to design an intelligent environment, the designer needs to understand what could increase or decrease user acceptance. Once the design has started, concrete targets should be set for the user experience that the environment should provide. When the intelligent environment is in use, it should still provide the user with ways of shaping the environment according to the user’s current needs and ever-changing expectations. Consequently, in the pyramid in Figure 1, each upper level can be seen to be founded on the layers below it: a pleasurable user experience (UX) requires that acceptance issues are well addressed, and the DIY approach assumes that the overall UX already includes other positive experiences in the human-technology interaction.

Regarding user expectations, we will analyze expectations of various facets of intelligent environments in a broad sense, based on published research results and our own experiences from user studies with concepts and prototypes. First, in Section 2 we will discuss potential application fields for intelligent environments, focusing mainly on consumer services. Then in Section 3, Section 4 and Section 5 we will review user expectations of intelligent environments from the three viewpoints presented in Figure 1: user acceptance, user experience and users as designers of their own environments. Finally, based on the presented results of user expectations, we will discuss how user expectations should be dealt with in order to facilitate the take-off of large-scale intelligent environments.

2. Characteristics of intelligent environments

In future visions [2, 12, 13, 14, 15] intelligent environments are characterized by information and communication technology embedded so seamlessly into our physical environments and in various everyday objects that ICT-enabled features will become a natural part of our living and working environments. People are surrounded by intuitive interfaces, and the environment is capable of recognizing and proactively responding to the presence of different individuals in a seamless, unobtrusive and often invisible way. In the visions, people are given situation-aware support in their everyday activities; they gain new possibilities to act, and they are provided with new opportunities to interact with each other, irrespective of time and space.

In the ISTAG report [2], five key technology requirements for Ambient Intelligence were identified: very unobtrusive hardware, a seamless web-based communications infrastructure, dynamic and massively distributed device networks, a natural-feeling human interface, and dependability and security. The technical enablers for intelligent environments can be classified into three groups: information and communication technology (ICT) embedded in our environment; advanced interaction possibilities; and algorithmic intelligence [12] (Table 1). Users experience embedded ICT as everyday objects and environments starting to have new, intelligent features. Advanced interaction possibilities appear to users as novel interaction concepts and as possibilities for interacting using high-level concepts. Advanced interaction possibilities also make it possible for users to shape the environments themselves, instead of just using environments designed by others. Users experience algorithmic intelligence as the environment understanding the user and the context of use and adapting accordingly. In addition, the environment learns from the past behavior of the user, and can proactively prepare for the user’s future needs [12].

Table 1. The three technology development paths required for intelligent environments [12].

| ICT everywhere | Advanced interaction | Algorithmic intelligence |
|---|---|---|
| Embedded information and communication technologies | Natural interaction | Context-awareness |
| Communication networks | High level concepts in interaction | Learning environment |
| Mobile technology | Environment evolving gradually both by design and use | Anticipating environment |

Intelligent environments are still rare as real-life environments. Experimental intelligent environments have been built in enclosed spaces such as laboratories, homes and museums, where the technical arrangements are manageable and affordable, and where there are fewer stakeholders involved. Research results on intelligent environments have been described in the fields of housing [16, 17, 18], health [18, 19], assisted living [20, 21, 22, 23], ambient media [13, 24] as well as security and safety [18, 23, 25, 26]. These application fields are often interconnected as, for instance, home services may include security features.

Kaasinen and Norros [12] classify potential application fields for intelligent environments as living environments, service environments and production environments. The living environment is a personal environment in which an individual is managing his/her life. The environment does not represent a specific physical space, but moves with the user from home to work, to hobbies and so on. The service environment integrates several users and different infrastructures. A typical example is traffic. The production environment integrates several actors working towards a common goal. A typical example is a production process in a factory. Intelligent environments may include an ecosystem of services, where new services have to compete for their share of user attention with already existing services. The designers cannot design new services in a vacuum: in addition to designing the service itself, the designer also has to design how the new service fits into the service ecosystem.

The relationship of people with intelligent environments is not only a relationship of a user and a system. People use the services provided by the environments, but they do not “use” the environments themselves. Instead they are in them, and at the same time live with the smart systems that inhabit the environments. This calls for a holistic understanding of experience, and requires that the services are not only designed for users, but also take account of other people who share the environment [27]. Roto [28] makes a distinction between “user experience” and “experience”. In her definition, user experience requires the possibility of the user manipulating or controlling the system in a two-way interaction. This is what makes the person a user. Experience is a broader concept, including all the other ways people are experiencing the world they are living in. When studying and designing intelligent environments, both types of experience must be taken into account. Furthermore, many of the systems in intelligent environments do not require human interaction, but work autonomously, though still changing the environment and affecting people’s experience.

3. User acceptance of intelligent environments

Originally, user acceptance of technology (the user’s intention to use, and sometimes also the actual usage of, a new technology) was studied in organizational contexts in order to understand how workers adopt new information systems and to develop the means to support the adoption process in the organization [29, 30, 31]. Lately, as personal computers and smart devices have rapidly grown in number and spread to new places of use, research into user acceptance has been extended to include the voluntary contexts of consumer-oriented applications, as well as complex, interrelated systems and ecosystems, which are critical in intelligent environments (e.g., [32, 33]).

The user’s willingness to adopt a new technical system is based on their expectations of what the system would be like in use. These expectations arise from experiences and information the user acquires from the world around him/her: what the user perceives in their physical and social environment and what their possible hands-on experiences are with similar or related systems. In user acceptance theories, the user’s expectations and perceptions are categorized into so-called factors of user acceptance. With regard to intelligent environments, the following factors have been identified as making a significant impact on the user acceptance [32, 33, 34, 35, 36]:

The list highlights the various psychological viewpoints from which people weigh intelligent environments when deciding whether to adopt them. This list is not meant to be an all-inclusive acceptance checklist for developers. Nor is it a framework for user evaluations of intelligent environments (for this purpose, see e.g., [37]). User acceptance depends not just on technical features but also on the social and cultural context of the user as well as his/her individual characteristics. Furthermore, the importance of different factors naturally varies depending on the application field (for instance, usefulness as efficiency may be less critical at home than at work).

Nevertheless, it is useful for technology and application developers to understand which features of intelligent environments actually have been found to affect these factors, and so increase or decrease user acceptance. In the following, we review some representative research results in this domain.

3.1. Usefulness, value and benefit

The anticipated usefulness of a new technology is one of the critical factors in user acceptance of the technology (e.g., [29]). In the context of consumer-oriented applications and voluntary use, where achieving certain results in an effective manner is less important than at work, this factor has been extended beyond rational usefulness, for example to “value” [32] or “benefit” [33]. The former comprises the consumer’s appreciation of and motivation for the technology, and the latter covers not only the user’s current need but also the future benefits the user expects to obtain from using the system.

In an interview study by Lee and Yoon [26], the users expected the value of ubiquitous technology to be in helping them in effective decision-making, increasing personal and family safety, helping personal management, increasing personal freedom, improving ease of use, increasing the portability of services and decreasing the cost of living. For elderly people, the value of intelligent applications is especially in increased safety and more effortless health care activities at home [21].

In a mass survey study of more than a thousand respondents, Allouch and colleagues [38] discovered that people expected the benefits of an intelligent home to be entertainment and facilitation in everyday routines. In addition, intelligent applications would help the users to be “ahead of their time”. The users valued the feeling of being involved in technological development, being modern and acquiring higher social status with the intelligent applications at home.

Outside the home, usefulness or benefit means something different. For instance, efficiency is specifically related to work but less often to home or leisure time. Röcker [39] asked users to evaluate scenarios for several typical smart office applications, including content adaptation, speech interface, ambient displays and personal reminders. Röcker was surprised that the level of expected usefulness and ease of use of the applications was only moderate. This indicates that the users did not expect that the intelligent office would help them a great deal to be more efficient at work, or otherwise support them in their tasks. In a museum environment, the value of an intelligent service was found to be in enriching the visitor’s museum experience with additional and multimodal information, in addition to assisting the user in finding interesting objects [40].

3.2. Ease of use and control

Another significant feature that people expect from intelligent environments is that the applications and services in them are easy to use and control. For instance, Ziefle and Röcker [41] studied the acceptance of smart medical systems among the middle-aged and elderly. The system, presented in scenarios, monitored the biosignals of the user, communicated automatically with health care personnel and if necessary activated an alarm. The most critical characteristics of the system were ease of use, controllability and communication with the device. The users were less interested in the design or appearance of the system, or whether the system could be recognized as medical by outsiders.

Sometimes the assumption is that an automatic system is the easiest to use. However, an automatic system may cause the user to feel that (s)he is not in control. Misker and colleagues [42] experimentally studied different user interaction styles for combining various resources of an environment, i.e., input and output interaction devices, ad hoc. The test subjects preferred a style in which they had control over the device selection, even if it required extra effort, to an automated selection. Zaad and Allouch [22] conducted a study of a motion sensor system for elderly people and compared two versions of the system: a fully automatic one and one in which the system checked with the user whether the information it had detected was correct. It was found that those elderly people who perceived having more control over the system, and thus over their wellbeing, had a greater intention of using it.

The intelligence of a system (its context-adaptive, learning, and proactive behavior) may be a reason for the loss of user control. If the user does not understand the logic by which the system behaves, that is, the system lacks intelligibility, the result will be a loss of user trust, satisfaction and acceptance [43]. For instance, Cheverst and colleagues [44] tested this with a prototype of an intelligent office system capable of learning. The system allowed the user to scrutinize and control the rules that the system followed in action. In the questionnaire study, more than 90% of 30 participants expressed the need for controlling the system. Independent system action was sometimes found useful, as two thirds of the participants would have liked to switch between the two system modes (acting automatically or acting by prompt). The participants who had more expertise in computer knowledge expressed more interest in controlling the system.

Lim and colleagues [43] studied whether different types of explanations about a system’s decision process would make the system more intelligible to the user. They carried out a comparison experiment with over 200 users, and found that explanations clarifying why the system behaved in a certain way resulted in increased user understanding and trust.

Therefore, to prevent the threat of intelligent environments taking control over the user, the user needs to be provided with enough information and tools to control system actions. For this purpose, Bellotti and Edwards [45] propose a design framework for context-aware systems. Their framework declares the following four principles to support intelligibility and accountability in design: 1) Inform the user of current contextual system capabilities and understandings, 2) Provide feedback (both feedforward and confirmation for actions), 3) Enforce identity and action disclosure particularly with sharing nonpublic (restricted) information, and 4) Provide control to the user. Also, the framework by Assad and colleagues [46] for creating ubiquitous applications ensures that the applications will be such that the user has control over what information is modeled (especially about people) and how it is used.
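As a rough illustration of how the four principles could surface in software, the following Python sketch models a single context-aware rule that reports what it currently infers, announces an action before taking it, records who triggered what, and can be vetoed or switched off by the user. All names in it (`ContextRule`, `describe`, `propose`, `apply`, `user_enabled`, the `ambient_lux` context key) are hypothetical and are not taken from Bellotti and Edwards [45] or from any of the cited systems; it is a minimal sketch under those assumptions, not an implementation of their framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ContextRule:
    """Hypothetical context-aware rule that stays intelligible and controllable."""
    name: str
    condition: Callable[[Dict[str, float]], bool]  # what the system infers from context
    action: str                                    # human-readable action label
    user_enabled: bool = True                      # principle 4: user control
    log: List[str] = field(default_factory=list)   # principle 3: action disclosure

    def describe(self, context: Dict[str, float]) -> str:
        # Principle 1: inform the user of what the system currently understands.
        state = "holds" if self.condition(context) else "does not hold"
        return f"Rule '{self.name}': condition {state} for context {context}"

    def propose(self, context: Dict[str, float]) -> Optional[str]:
        # Principle 2 (feedforward): say what would happen before it happens.
        if self.user_enabled and self.condition(context):
            return f"About to: {self.action}"
        return None

    def apply(self, context: Dict[str, float], actor: str, confirmed: bool) -> bool:
        # Act only when the rule is enabled, applicable, and confirmed by the user.
        if self.propose(context) is None or not confirmed:
            self.log.append(f"{actor}: '{self.action}' not executed")
            return False
        # Principle 2 (confirmation) and principle 3: record who did what.
        self.log.append(f"{actor}: '{self.action}' executed")
        return True


rule = ContextRule(
    name="dim lights",
    condition=lambda ctx: ctx["ambient_lux"] > 500,
    action="dim office lights to 40%",
)
context = {"ambient_lux": 650.0}
print(rule.describe(context))
print(rule.propose(context))
executed = rule.apply(context, actor="alice", confirmed=True)
rule.user_enabled = False  # the user switches the rule off
blocked = rule.apply(context, actor="alice", confirmed=True)
print(executed, blocked, rule.log)
```

The design choice in this sketch is that the rule never acts silently: every call to `apply` leaves a log entry, and a disabled rule or an unconfirmed proposal results in no action.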

Sometimes, users are willing to give up control for benefits. In a study by Barkhuus and Dey [47], users felt a lack of control when using fully or partly autonomous mobile interactive services, but still preferred them over applications that required manual personalization. The usefulness of the autonomous applications overrode the diminished sense of control. However, Barkhuus and Dey warn that loss of control may still result in user frustration and turning off services.

Multimodality may increase the usability of intelligent environments. Blumendorf and colleagues [48] found that multimodal interaction (voice, mouse, keyboard, and touchscreen) was preferred and more positively evaluated by users carrying out interactive tasks, compared to single modality interaction. Multimodality enables a more personal use and creates flexibility; for instance, speech-based interaction with office applications is avoided in public places but may be the most preferred interaction method elsewhere [39].

However, multimodal interaction can be implemented in a more or less usable way. Badia and colleagues [49] found that, if the interaction is as hidden and as natural as possible, the results and consequences of the interaction may actually be more difficult to understand.

Furthermore, some user groups such as the elderly may have difficulties in accepting an intelligent environment despite easy and flexible control and interaction. For the elderly, it is important that the intelligent applications and services integrate with and support the existing practices and routines of the user, who may never have used a computer [21].

3.3. Trust and privacy

Trust is related to control, privacy and monitoring, but also to security and the data protection of intelligent systems [32, 50]. Trust is a multi-dimensional, complex issue (for an analytic definition, see e.g., [51]) that may grow slowly but is easily lost [32]. In a long-term experiment, the inhabitants of an intelligent home did not gain complete trust in the system even after six months [17]. People’s lack of trust and belief is a critical shortcoming regarding the acceptance of intelligent environments. One of the main challenges in gaining trust seems to be users’ fear of losing their privacy.

Intelligent environments face a privacy dilemma: the user’s personal data, current and historical, needs to be collected and stored to be able to provide intelligent, contextual and proactive services for the user [52]. Indeed, in Allouch and colleagues’ [38] study of an intelligent home, users did not accept a smart mirror sending private health data to a doctor. Nor did they accept automated home services functioning without the user’s intervention. In other words, user acceptance is low if users cannot trust the system to protect their privacy and to leave them fully in control. Privacy and control are especially important at home. In Ziefle and Röcker’s study [41], a medical system that monitored users and automatically communicated data to health care staff was least accepted when integrated at home, but better accepted when implemented as a mobile or wearable system.

Privacy is also an issue outside the home. Röcker [39] found that users were more willing to use smart office applications that may reveal something personal about the user in private places (a private office room) than in public offices. Because of privacy worries, gaining user acceptance for one of the core functions of intelligent environments, namely continuous monitoring, is a challenge. Moran and Nakata [53] reviewed several studies of the effects of monitoring (as in data collection, with no surveillance) and concluded that in general, awareness of monitoring has a negative effect on user behavior. However, the studies were often conducted under laboratory conditions (“smart home labs”), which may cause different behavior compared to the real world. The researchers analyzed several factors that may impact behavior: the number of devices, how disturbing the devices are in looks and positioning, control functions such as deletion, deactivation, inhibition and avoidance, the frequency of data collection, informing the user, etc. Systematic studies of the effects of these factors are still lacking due to the rarity of real intelligent systems.

3.4. Cultural and individual differences

There are some research results showing that nations or cultures may differ in how people accept intelligent environments. Forest and Arhippainen [54] studied the cultural aspects of the social acceptance of proactive mobile services. They discovered that the concept of labor was different in France and in Finland, which caused Finnish users to more readily accept solutions that organized the entire life of the user in order to improve his work efficiency. French users did not accept the approach where life was devoted to work, as it threatened their identity and the meaning of their life. Also, the roles of women and men in society were different, and this caused differences in acceptance of services for controlling the activities of other people. For Finnish people, it seemed to be more natural to accept living in symbiosis with a device ecosystem, whereas French people would have wanted a device environment where the human being can keep control of what is happening all the time. Röcker [39] found that willingness to use intelligent office applications in private or public places differed more clearly with American users and less with German users. However, the difference was not put to a statistical test.

Individuals clearly differ in their willingness to adopt technologies and intelligent services. For example, gender, region of residence, job and usage of the Internet predict acceptance of digital home care services [18]. Other individual characteristics might be age, education, social status, wealth, etc. Even personality characteristics such as conscientiousness, extraversion, neuroticism and agreeableness may matter [55]. In the field of mobile Internet services, it seems that socially well networked and “personally innovative users” (those having a tendency to take risks) may find the services more useful and easy to use, and are thus also more willing to take them into use [56].

A widely known description of how different types of individuals adopt new technologies is Rogers’ [57] categories of adopters: innovators, early adopters, early majority, late majority and laggards. As Punie [52] notes, it is difficult to believe that intelligent environments would be accepted by everyone. There will always be early adopters as well as late adopters and those who totally resist. However, it is important to develop intelligent technologies for a variety of user groups, not just those who appear to be the most ready and willing, to avoid the growth of a digital divide between those who can and those who cannot use intelligent environments.

4. User experiences of embodied interaction techniques for intelligent environments

In this section, we continue our analysis of user expectations of intelligent environments by analyzing user experience in interaction with intelligent environments. Related research into two novel interaction tools that can be seen as salient in intelligent environments, namely haptic interaction and augmented reality, is summarized and discussed from the perspective of experiential user expectations. Novel interaction tools in particular can embody new metaphors and interaction philosophies for the user, which, along with the novel functionalities and content, play an important role in how the overall user experience of intelligent services is created.

Interaction with intelligent environments in general has been proposed as natural, physical, and tangible [14, 58]. Consequently, new interaction paradigms have been extensively researched and developed over the past few decades, centering especially around ‘embodied’ and ‘tangible’ interaction. Prominent examples of such are augmented reality (AR) and haptic interaction based especially on touch and kinesthetic modalities. In what follows, we discuss the possibilities and users’ expectations of specifically augmented reality and haptic interaction, as well as their potential as solutions for interacting with intelligent environments and services. The main focus in this section is on user experience provided by the interaction tools.

The growing extent of tangible and other related interfaces becomes apparent in the diversity of terminology that is used to describe such technologies: e.g., ‘graspable’, ‘manipulative’, ‘haptic’ and ‘embodied’ interaction (see, e.g., [59]). Despite the complexity, most of these concepts include, first, touch and movement as input and/or output in some form and, second, the principles of physical representations being computationally coupled to underlying digital information and embodying mechanisms for interactive control [14]. Furthermore, to conceptually clarify the terminology, Fishkin [60] developed a taxonomy for tangible user interfaces. It consists of varying degrees of ‘tangibility’, based on two axes: 1) embodiment, i.e., how close the input and output are to each other, and 2) metaphor, i.e., how analogous the user interaction is to similar real-life action. Augmented reality and haptic interaction can be regarded as embodied interaction as well as tangible user interfaces (TUI) in many ways [14]. For example, although AR is inherently based on the visual modality, natural body movements can be utilized to change the point of view and the augmented objects can even allow direct manipulation, hence extensively defining the way of providing user input (cf. [60]).

A focus on this area of interaction techniques is justifiable, as operating, reacting to and controlling intelligent environments in general is often intended to mimic the embodied interactions that are familiar from interactions with other people and the physical world [59]. Tangible interfaces have been most popularly applied in the fields of music and edutainment, but also in other areas such as planning, information visualization and social communication (see, for example, an extensive survey on tangible user interfaces by Shaer and Hornecker [61]). In addition to AR and haptic interaction, other examples of tangible and embodied interaction do exist. For example, speech-based interaction can also be regarded as intuitive and even embodied interaction. However, this section focuses on AR and haptic interaction because (1) they serve as good examples of embodied interaction, involving two different modalities, (2) speech-based interaction has often been envisioned in the early scenarios of intelligent environments, and hence offers less novelty with regard to understanding new user-based requirements and expectations of such technology, (3) interaction with the physical environment is inherently visual and haptic, meaning that AR and haptic interaction embody potential emerging interaction metaphors, and most importantly, (4) in these two fields there is recent related work with a focus on the novel concept of user experience. In addition, ambient and peripheral displays are often discussed in relation to intelligent environments (e.g., [62]), but these are similarly left outside the scope of this paper.

Despite the originally technological focus in building enabling technologies for embodied interaction, the research increasingly includes approaches to specific human factors like perception and cognition issues and user task performance (e.g., [63]). However, user acceptance and user experience, as concepts that look at the human-centric aspects from a slightly more abstract and holistic perspective, have also lately received research interest (e.g., [64,65,66]). Consequently, the concept of user experience (UX) is next specified in more detail.

User experience is regarded as a comprehensive concept describing the subjective experience resulting from the interaction with technology [11]. Although the concept of UX is still rather young, there are a few common key assumptions that are widely accepted [67,68,69]: UX is generally agreed to depend on the person (i.e., to be subjective) and on contextual factors like physical, social and cultural aspects in the situation of use, and to be dynamic and temporally evolving over the instances of use. As a multifaceted concept, user experience has various manifestations, consisting of subjective feelings, behavioral reactions such as approach, avoidance and inaction, expressive reactions like facial, vocal and postural expressions, and physiological reactions [70]. Experience as a novel quality attribute of products and services is considered to be a critical asset in global business: in order to achieve success, companies must orchestrate memorable events for their customers, and that memory itself becomes the product [71]. Consequently, it is also important to research users’ expectations from the perspective of user experience. For example, the services built around AR or haptic interaction can be expected to allow experiences and activities that people inherently desire but that have not been possible with existing technologies and ways of interacting with technology.

4.1. User experience and expectations of haptic, tangible and tactile interaction

First, based on a review of relevant literature, the following highlights various aspects related to the user experience of haptic, tangible and tactile interaction. Because little research literature exists on the user-centric aspects of haptics, various elements of experience are discussed based on papers on both actual experiences and expectations. Covering both is crucial, as the two interplay closely and influence each other: expectations affect the actual experiences, and understanding actual experiences can indicate aspects that would also be central in the users’ expectations.

To clarify the terminology first, in describing touch-based interaction, a widely accepted term is haptic interaction, which pertains to sensory information derived from both tactile (i.e., cutaneous, skin-sensor-based) and kinesthetic (limb-movement-based) receptors [72]. Examples of tactile interaction are vibration-, pressure- or temperature-based output, and smoothing, pressing or squeezing as input. Kinesthetic gestures have been categorized as, for example, manipulative, conversational, iconic, metaphoric, or semaphoric [73,74]. In day-to-day interaction, touch and gestures are essential in a wide range of daily ad hoc tasks, such as providing a feedback loop for manipulating objects, guiding our movements in three-dimensional space, and various social interaction settings. Considering this, and the fact that the haptic modality is underused, TUIs can also be considered a potential technology in the development of new ambient intelligence services. A system with haptic output provides users with heads-up interaction, that is, it lets users visually scan their surroundings instead of reserving their eyes for interaction with a handheld device. Incorporating the sense of touch into remote mobile communication has also been proposed as one solution for enriching multimodality in mobile communication [75].

Touch and gestures could allow more accurate interaction with intelligent environments, for example initiating object-based services, downloading further embedded information, registering the user to a service or location, or selecting augmented information in an AR browsing view (see Section 4.2). In addition, the haptic modality serves well in multimodal interaction, supporting and enriching interaction based mainly on other sensory modalities, such as vision or audition.

To start with, several studies indicate that tangible interaction can enhance creativity, communication and collaboration between people. Africano et al. [76], Ryokai et al. [77] and Zuckerman et al. [78] have tested tangible interaction concepts in the learning environment with children using high-fidelity prototypes. All of the studies report that the tangible interfaces promoted collaboration and communication; the children were engaged in the interaction and considered it fun. Hornecker & Buur [79] present three case studies of tangible interfaces where the same applies to adults.

While tangible interfaces clearly enhance the social aspects of interaction, virtual and augmented reality tend to serve more solitary experiences, since the environment is generally viewed through a personal device such as goggles or a hand-held display device. These technologies have the potential to significantly change people’s perception and experiential relationship with the environment by immersing them in the interaction. Hornecker [80] has compared experiences with two different kinds of exhibition installations in a museum environment. One installation consisted of a multi-touch interactive table and the other provided an augmented reality view of the museum environment with a telescope device. The ethnographic study indicated that, while the telescope installation provided a more immersive experience, the multi-touch table enabled more social and shared experiences.

With regard to tangible interaction, one of the earliest examples is by Ullmer and Ishii [81], where the user’s hands manipulate physical objects via physical gestures; a computer system detects this, alters its state, and provides feedback accordingly. More recently, RFID-based NFC (near-field communication) ‘touching’ has generated greater interest in the research community. For example, Riekki et al. [82] and Välkkynen et al. [83] have built systems that allow the requesting of ubiquitous services by touching RFID tags. In the studies by Välkkynen et al. [83], touching and pointing turned out to be useful methods for selecting an object for interaction with a mobile device. Kaasinen et al. [84] studied physical selection as an interaction tool, both for the user selecting an object for further interaction and for the device scanning the environment and proposing objects for interaction. They found that in an environment with many tags, it is hard to select the correct one from the list presented after scanning. Distance and directionality in tag interaction need to be precise, because imprecision in them can cause a confusing user experience. The results by Kaasinen et al. [84] indicated that the best technique for touching would probably be a ‘touch mode’ that can be activated and deactivated either manually or automatically.
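The ‘touch mode’ idea can be sketched as a small state holder that gates tag reads. This is a minimal Python sketch under stated assumptions, not the design studied in [84]: the class name `TouchMode`, the `timeout_s` parameter, and the interpretation of automatic deactivation as a timeout since the last touch are all hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class TouchMode:
    """Acts on a touched tag only while touch mode is switched on."""
    timeout_s: float = 10.0                      # hypothetical auto-off delay
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    _last_event: Optional[float] = field(default=None, init=False)

    def activate(self) -> None:
        """Manual activation, e.g., from a button on the device."""
        self._last_event = self.clock()

    def deactivate(self) -> None:
        """Manual deactivation."""
        self._last_event = None

    def is_active(self) -> bool:
        """True while the mode is on; switches off after the timeout."""
        if self._last_event is None:
            return False
        if self.clock() - self._last_event >= self.timeout_s:
            self._last_event = None  # automatic deactivation
            return False
        return True

    def on_tag_touched(self, tag_id: str) -> Optional[str]:
        """Return the tag to act on, or None if touch mode is off."""
        if not self.is_active():
            return None  # ignore accidental reads outside touch mode
        self._last_event = self.clock()  # each touch extends the session
        return tag_id
```

Gating reads in this way addresses accidental selections only; it does not by itself solve the problem of choosing among many nearby tags.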

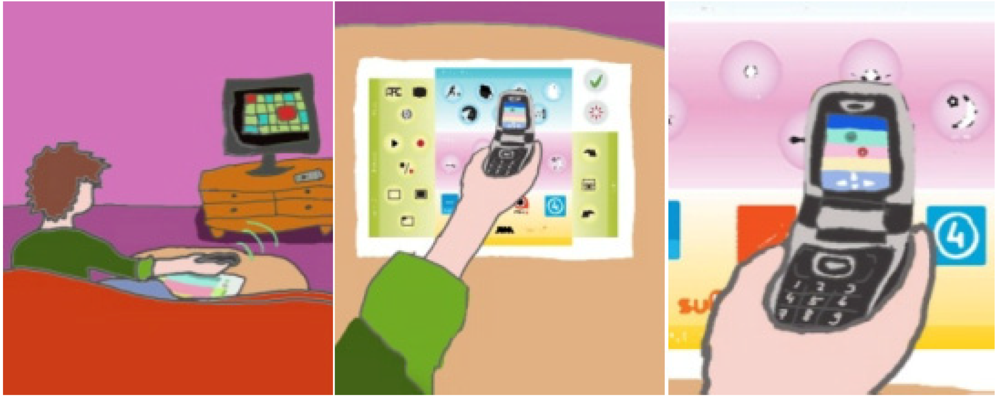

Figure 2. Example scenarios of using near-field communication (NFC) to control a home media center [85].

More recently, Kaasinen et al. [24] have studied the acceptance and user experience of touchable memory tags. Ease of upload and fun interaction were identified as the main strengths of such an interaction metaphor. The main concerns related to control over the interaction between the mobile phone and the memory tag as well as fears regarding security and reliability. Users should have ways to assess and ensure the trustworthiness of memory tag contents, and user-generated content may require moderation. The studies by Kallinen [85] also support these findings: touch-based interaction with NFC-enabled mobile phones was regarded as an efficient, intuitive and novel interaction technique that is suitable for a variety of domains and applications. Such interaction could be considered in, for example, smart home environments (see Figure 2). Touch-based interaction was found to be the most efficient, effective, practical, and simple method compared with speech- and gesture-based interaction in both subjective and objective measures. In addition, it was considered to be socially very acceptable, exciting, and innovative. The biggest user concerns were device dependency and security, especially in use cases with payment and access control features. Furthermore, Isomursu [86] identified a need for tag management, proposing that if NFC technology becomes common and if management practices are not used or available, tags can create “tag litter” that ruins the user experience by eroding trust in tags and tag-based services.

Potential users’ expectations of haptic interaction in interpersonal communication have also been studied [75,87]. In these papers, the main benefits of tactile communication were seen to be the added richness and immersion it can bring to interpersonal communication. The interaction could become more holistic and thus richer information could be conveyed. The haptic modality was seen to add the currently missing contextual nuances and thus ease the use of mobile technology. For example, to convey an emotion, a tactile input could either enrich the multimodal message as tactile output or be transformed into another sense modality (e.g., represented in a certain type of sound). Another clear benefit was expected to be the spontaneity and speed of communication. Haptic communication was seen to be an easy, simple and fun way to convey sudden emotions. Furthermore, the added privacy in the unobtrusiveness of tactile interaction was seen as beneficial for remote communication. On the other hand, touch was regarded as such a private sense that the user must be in control of who can communicate with them via the haptic modality. The authors conclude that, to make it fluent, the interaction itself should resemble or have similar metaphors to our everyday use of touch.

To summarize, the above-mentioned studies clearly confirm even the earliest researcher-originated expectations of touch-based interaction—intuitiveness and efficiency in interaction with real world objects. Publications about user research in this area are diverse and do not currently allow a holistic view of UX and expectations of haptic interaction. Nevertheless, the following provides an early consolidation of the UX-related aspects discussed above, mainly to be used as early guidance in the design of touch- and movement-based interactions in general and especially in AmI services:

The different submodalities in the haptic modality enable extensive ways of inputting information into a system and providing immersive and intuitive output for the user in a multimodal, holistic way. Thus, touch-based interaction also has great potential to help make ambient intelligence a well-accepted technology and a central part of everyday life. Whether such interaction technologies become more common may depend mostly on how the possibilities of touch can be mapped into the input and output for a system in a universally understandable way, and on what the first widely accepted services utilizing these technologies turn out to be.

4.2. Expected user experience of augmented reality

Augmented Reality (AR) is another promising interface and interaction tool for intelligent environments, and it has recently received considerable interest also from the user-centered perspective. Generally, AR can be considered one key technology in catalyzing the paradigm shift towards mobile and ubiquitous computing, happening “anytime, anywhere” [1,66,88]. The general approach of AR is to combine real and computer-generated digital information into the user’s view of the physical and interactive real world in such a way that they appear to be one environment [89,90]. This allows integrating the realities of the physical world and the digital domain in meaningful ways: AR enables a novel and multimodal interface to the digital information in and related to the physical world. This new reality of mixed information can be interacted with in real time, enabling people to take advantage of both their own senses and skills and the power of networked computing, while naturally interacting in the everyday physical world [91]. Therefore, it also serves as a versatile user interface for intelligent environments [92]: for example, for browsing the digital affordances embedded in the real world as well as manipulating the ambient information in a natural and immersive way. This aspect emphasizes that objects that themselves include digital information can create ‘smart’ services, in which AR could be used as a paradigmatic interface metaphor (see, e.g., [93]). On an abstract level, this is closely related to the concept of physical browsing, i.e., accessing information and services by physically selecting common objects or surroundings [94], but approaches the paradigm from a visual perspective.

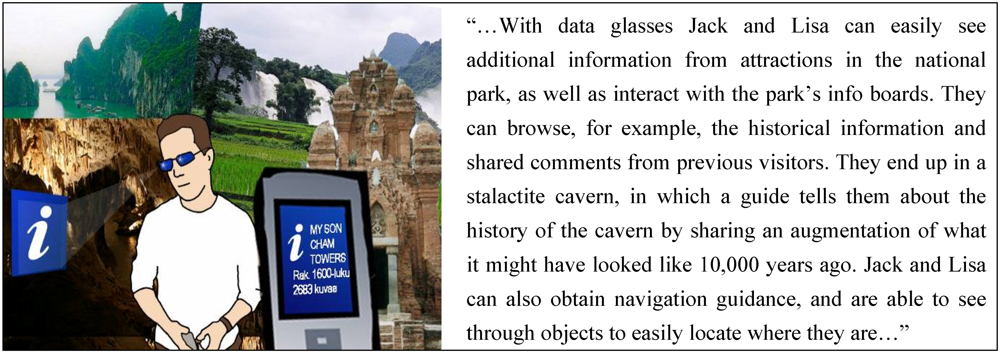

Augmented reality has been applied in diverse practical day-to-day use cases, in entertainment and gaming, as well as in tourism (Figure 3) and navigation. Examples of augmentable targets have varied from surrounding buildings, machinery or other static objects to vehicles, people, and other dynamic objects.

The diversity of AR as a concept provides a powerful toolkit to design new services, but at the same time creates great challenges for the design of pleasurable user experiences. In our earlier research [65,66,95] we have identified various categories of user experience that potential users expect to arise in interaction with mobile AR, considering it not only as an interaction technology but, rather, as a holistic service entity encompassing AR content and various ubicomp- and AmI-related functionalities. The most central experiential expectations reported in the papers are summarized in what follows (see [66] for more detailed descriptions).

Figure 3. An illustration and excerpt of the tourism scenario used as stimulus in focus groups [95].

The expectations can be categorized into six main classes of experiences. First, various instrumental experiences were often expected, demonstrating a sense of accomplishment, a feeling of one’s activities being supported and enhanced by technology, and other pragmatic and utilitarian perceptions of service use. Second, cognitive and epistemic experiences relate to thoughts, conceptualization and rationality. In particular, experiences of increased awareness of the digital content related to the environment and intuitiveness of the interaction were found to be much anticipated. Third, emotional experiences relate to the subjective, primarily emotional responses in the user: for example, amazement, positive surprises and playfulness were often expected from services with AR interaction.

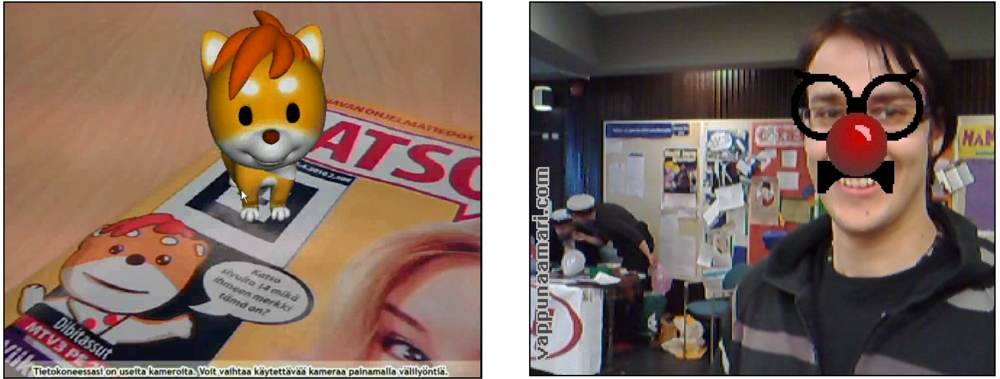

Figure 4 illustrates two examples of AR applications focusing especially on playful experiences [66].

Figure 4. Examples of playful, leisure-oriented, or fun augmented reality (AR) applications. Left: Dibidogs augmented on paper magazines. Right: vappumask mobile application for creating humorous views of people. (VTT archive)

Fourth, sensory experiences relate to sensory-perceptual experiences that are conceptually processed, originating from the service’s multimodal AR stimuli and influences on the user’s perception of the world around them. Fifth, social experiences relate to human-to-human interactions that are intermediated by technology and to features providing a channel for self-expression or otherwise supporting social user values. For example, connectedness and collectivity relate to the expectation of mobile AR offering novel ways for reality-based mediated social interaction and communication. Social experiences would develop from the collective use and creation of the AR content, from utilizing the socially aggregated AR information in personal use, from co-located and collaborative use of the services, as well as from using AR as a tool for communication. Finally, motivational and behavioral experiences are created when the use or ownership of an AR service causes a certain behavior in the users [66]. Overall, the more detailed experiences under each class are summarized in Table 2.

All in all, potential users attributed a diverse range of expectations to services based on mobile AR. Clearly, the end user’s perception of a service entity or an interaction technology is not limited by theoretical or technical definitions, but driven by their own needs and practices. For example, social experiences, community-created content or proactivity are perhaps not specific to mobile AR-based interaction per se but were expected nevertheless. In addition, many expected mobile AR services to include features like context-awareness and proactivity, which could also be seen as characteristics of intelligent environments. Hence, the expectations related to one aspect or embodiment of intelligent environments (here, mobile AR) can easily highlight issues that can be seen as equally important in other technologies. These anticipated experiences can be considered to be positive and satisfying experiences, that is, something for the user to pursue and the designer to target in their design, and something that could also be utilized in design activities around systems that more fundamentally represent intelligent environments.

To summarize, this section has introduced various expectations and experiential aspects that can be considered important in designing tangible and embodied interaction for intelligent environments. The results on augmented reality in particular imply that people’s expectations of new interaction tools and the experiences they can evoke span a very extensive spectrum of facets, each demonstrating an individual aspect of the overall UX, from instrumental experiences to emotional and epistemic ones, for example. The relevance and importance of the various aspects listed here most probably vary from case to case and from context to context. Consequently, the section aims simply to identify and highlight different aspects that should be considered, but the questions of “which of them”, “to what extent”, and “how to incorporate them” should be considered in each development case based on its context and other boundaries.

Overall, user experience includes several dimensions on different levels, from instrumental needs to motivation. Even though the dimensions of user experience were identified with the selected interaction tools, the same framework can serve as a starting point when studying other interaction tools and the entities of intelligent environments. The overall user experience is partly based on the interaction tools and the whole interaction experience when acting in an intelligent environment, which makes this point of view on interaction-based experiences a relevant one.

In the next section, we will broaden our focus from mere interaction to the design of intelligent environments and the role of users as crafters of intelligent environments. The user’s role as a crafter of intelligent environments in particular serves the emotional and motivational user experience goals, such as playfulness, inspiration and creativity.

Table 2. Summary of the main experience classes and the experience categories representing them (see especially [66] for details).

| Experience class | Category of UX characteristic | Short description |
|---|---|---|
| Instrumental | Empowerment | feelings of powerfulness and achievement, being offered new possibilities by technology, and expanding human perception |
| | Efficiency | feeling of performing everyday activities and accomplishing practical goals with less effort and resources |
| | Meaningfulness | mobile AR showing only the content that corresponds to the surrounding visible things in the real world, thus making it feel relevant and worthwhile in the current location |
| Cognitive and epistemic | Awareness | sense of becoming aware of, realizing something about or gaining a new insight into one’s surroundings |
| | Intuitiveness | the interaction with AR feeling natural and human-like |
| | Control | sense of controlling the mixing of the realities and the extent to which the service is proactive and knows about the user |
| | Trust | being able to rely on the acquired AR content (e.g., faultlessness and timeliness of the content), as well as the realism and correspondence of digital when aiming to replace a traditionally physical activity with virtual |
| Emotional | Amazement | feeling of having experienced something extraordinary or novel |
| | Surprise | positive astonishment, ‘wow-effects’ and a service surpassing one’s expectations in general |
| | Playfulness | feelings of amusement and joy at the novel way of interacting with mobile AR |
| | Liveliness | feeling of continuous change and accumulation of the service and the physical environment, hence feeling vivid and dynamic, and reviving pleasing memories |
| Sensory | Captivation | feeling of being immersed and engaged in the interaction with the mixed reality, possibly also creating feelings of presence in the mixed reality and flow in one’s activities |
| | Tangibility | sense of physicality and the content seeming to be an integral part of the environment |
| Social | Connectedness | mobile AR offering novel ways for reality-based mediated social interaction and communication |
| | Collectivity | sense of collective use and creation of the AR content |
| | Privacy | 1) what information about them and their activity will be saved and where?, 2) how public is the interaction with the service, and how publicly the augmentations are delivered?, 3) who can eventually access the content?, and 4) can people be tracked or supervised by others? |
| Motivational and behavioral | Inspiration | feelings of being stimulated, curious about the new reality, and eager to try new things with the help of mobile AR |
| | Motivation | being encouraged and motivated to participate in the service community and contribute to its content |
| | Creativity | self-expressive and artistic feelings that AR could catalyze by triggering the imagination and serving as a fruitful interface to demonstrate artistic creativity by, for example, augmenting one’s appearance |

5. Users as co-crafters of intelligent environments

As the existing living and working environments are gradually acquiring intelligent features, it is clear that the existing users or inhabitants of the intelligent environments have to be taken into account in the change process. If people can themselves influence their environments, it may be easier for them to accept and adopt the changes. In this section we describe our approach to providing people with the means to build their own intelligent environments: a scene in which the inhabitants actively take part in the construction process; people are not merely consumers or users of ready-made artifacts and environments, but active crafters of their own environments. Consequently, the approach leads people to carry out some of the setting up, configuration and maintenance of their smart environments. We call this approach Do-it-yourself-smart-environment (DIYSE).

In terms of user expectations, the Do-it-Yourself (DIY) culture involves a lot of active enthusiasm. According to Kuznetsov and Paulos, the do-it-yourself culture aspires to explore, experiment, create and modify objects in application fields that range from software to music and gadgets [96]. They continue that over the past few decades the integration of social computing, online sharing tools, and other HCI collaboration technologies has facilitated an interest in and a wider adoption of DIY cultures and practices through 1) easy access to and affordability of tools, and 2) the emergence of new sharing mechanisms. The more intelligent technology-driven DIY, which is the topic of this section, refers to approaches in which users themselves install and create the electronics, hardware and software, and share the knowledge and outcomes. In technology-driven DIY as well, doing something yourself allows people to identify with and relate to objects on a much deeper level than the merely functional [97]. Thus the aim of the DIY approach is to allow users to deploy intelligent systems and environments easily in a do-it-yourself fashion. Another aim is to lead developers to write applications and to build augmented artifacts in a more generic way regardless of the constraints of the environments [98].

There are high expectations of empowering users to build intelligent applications and environments by themselves. The advantages of constructing a smart environment in a DIY fashion, according to Beckmann et al., relate to lower cost, greater user-centric control, more acceptance, better personalization and frequent upgrade support [99]. Active participation of users is expected to be particularly essential in domestic environments, as the DIY approach offers simplicity, better control, lower deployment costs and support for ad hoc personalization [98].

The technology-aided DIY experiences were studied in the European research program DiYSE: Do-it-Yourself Smart Experiences (http://diyse.org/), which aimed at enabling ordinary people to easily create, set up and control applications in their smart living environments as well as in public environments [97]. User expectations were studied in more than 20 projects that defined the intelligent environment as the main research objective, but the projects also focused on creating web services and traditional PC applications. Correspondingly, Beckmann, Consolvo and LaMarca, for example, have envisioned that the emergence of interactive ubiquitous environments makes it important that we consider how devices are placed within an environment, how combinations of these devices are practically managed, and how these devices work as an ensemble [99]. In the DiYSE projects, it was presumed that the foremost challenge would lie in the different characteristics of users operating with the systems, and in their competing expectations and needs for the self-crafted smart experiences.

5.1. Home as the smart installation environment

Home was seen to provide the perfect setting to consider the user expectations relating to DIY creation culture. According to Rodden and Benford, the essential property of home is its evolutionary nature and receptiveness to continual change [100]. Beckmann, Consolvo and LaMarca see that the need to support this change is critical to the successful uptake of ubiquitous devices in domestic spaces [99]. They emphasize that the aim of using DIY methodology in the assembly of the ubiquitous devices (which share the environment) is to allow users to have more control in the space they live in.

Kawsar et al. continue that, ideally, the inhabitants should carry out the installing and configuring of the devices [98]. They have in-depth knowledge of the structure of their home and their activities, resulting in a better understanding of where and which physical artifacts and applications to deploy [98]. System issues for involving end users in constructing and enhancing a smart home entail two requirements: 1) a general infrastructure for building plug and play augmented artifacts and pervasive applications (the architecture) and 2) simple, easy to use tools that allow end users to deploy the artifacts and the applications [98]. To study these two approaches and consequent user expectations in the DiYSE program, we developed and carried out user research into a Home Control System [101]. The use and expectations of pervasive applications and augmented artifacts were studied in the Life Story Creation [102] and Music Creation Tool [103] research projects. Figure 5 illustrates these projects.

Figure 5. Left: visualization of the Home Control System. Right: Music Creation Tool and instruments (Kymäläinen 2012, with the contribution of Geneim Oy and Matti Luhtala).

The three projects were studied from August 2010 to January 2012. The Home Control System was developed for supporting elderly people in living more independently in their own homes and in a (smart) nursing home. The system was expected to enable the creation and combination of interactive operations in a sensor network-enabled intelligent environment. The user expectations were considered with a group of nurses (four users: 4 female and 1 male, varying in age between 28 and 42). The Life Story Creation project was about constructing an easy do-it-yourself application for elderly people to create their personal retrospections. The acceptance and user experience factors were studied first with focus groups involving senior citizens (15 users with an average age of 70), and later the application was co-designed with expert-amateur writers (five users: 4 female and 1 male, varying in age between 55 and 69 years). The aim of the Music Creation Tool was to allow disabled people to play music together. The tangible, interactive system was considered to provide an easy means of composing and configuring digital instruments. User expectations were studied with end-user observations (four users: all male, varying in age between 26 and 58) that focused on learning, independent action, supporting creativity and managing confusing situations.

Table 3. Topics formed from the user studies and manifesto statements for constructing an ideal do-it-yourself smart system [104].

| No. | Topic | Manifesto Description |
|---|---|---|
| 1. | Inspire creativity | The system should be a platform that inspires and supports people to be creative, to self-actualize in their projects. It should motivate them to think outside the box. |
| 2. | Characteristics of users | Support a spectrum of expertise in computational thinking by offering different layers of computational abstractions. The system should support at least three different types of users (the amateur, the professional-amateur and the professional). |
| 3. | Useful components | Instead of requiring programming skills from every user, the system enables the users to start from (sets of) useful components. The system helps people to create useful components. |
| 4. | Supporting ecosystem | The system does not teach how to program, but should provide an ecosystem to support people in creating ideas, solutions. It presents the (sets of) useful components in such a way that their purpose is evident and that combining useful components is easy, for example by offering templates. |
| 5. | Variances in purpose | The system equally supports idea generation, material-inspired projects and projects based on other projects. The system takes into account different purposes, from clear purpose to a vague idea, and different personalities of users. |
| 6. | Playground and recycling opportunities | The system offers a playground providing leftovers from other projects and collectables. It allows both finished and unfinished projects to linger and users to tinker with these projects. |
| 7. | Sharing of evolving projects | Support sharing of unfinished or evolving projects. Users are able to share their projects in either the seeding phase, the flourishing phase or the finished phase. |
| 8. | Collaboration between users | Support and facilitate collaboration between users with various roles (creators, debuggers, cleaners, collectors, spectators/fans, etc.) in the creation process. |
| 9. | Subtle tuition | Help users to finish projects by subtle coaching without harassment. |
| 10. | User-preferred terminology | With knowledge of any domain users can employ their own terminology while using the system. The system learns to adapt to this terminology, resulting in a common terminology. |
| 11. | Multimodal systems and haptic interfaces | The system should provide multimodal interfaces to create (sets of) useful components and projects. Users are not restricted to PC-based applications only. Instead, they are stimulated to make use of their everyday interaction patterns with their body or with objects to provide input. |
| 12. | Confusing situations | The system should express and clarify ambiguous situations with the user. |
| 13. | Added value | The system should provide added value for all stakeholders. Hence it is necessary to understand the different expectations of all stakeholders at each step of the system. |

5.2. Emerging user expectations for the do-it-yourself intelligent environments

User research, focusing on user acceptance and user expectations, was considered to help in constructing an ideal DIY smart system. The results from all user studies of the DiYSE program were refined into manifesto statements that were published after the project to open up technology-driven DIY creation to a wider audience [104].

Table 3 presents the 13 topics of the manifesto for constructing an ideal do-it-yourself smart system.

To illustrate these manifesto statements and to consider them in terms of user expectations of intelligent environments, they are discussed below in relation to the three DiYSE projects: Home Control System, Life Story Creation and Music Creation Tool.

At the core of the do-it-yourself culture is the creativity and craftsmanship expected of users. When DIY is positioned in the intelligent environment context, support for self-actualization and creativity is equally essential. Two case projects, Life Story Creation and Music Creation Tool, provided an interactive writing application and tangible musical instruments that were explicitly intended to be creativity-supporting tools. User studies of the Life Story Creation focused on defining experiences relating to writing as a creative activity, but the user expectations that were discovered also led us to study issues around the process: the flexibility to extend and modify the writing process according to the flow of creativity; the different types of writers and how various kinds of creativity should be supported; and the difficult issues relating to sharing an intimate piece of writing.

Expectations differed across the DiYSE case systems, as the users in each case were different and had various levels of computational skills. The extreme case was the Music Creation Tool, in which there were many variations in the intellectual and adaptive skills of the people it was studied with. The Music Creation Tool, designed for a special group, people with a mild or moderate (Diagnosis ICD-10) intellectual learning disability, had to be flexible and provide options according to various skill levels. The anticipated use of the system was first considered with a music therapist; observation studies then confirmed how the instruments were used, and the user expectations were determined by analyzing the results. Life Story Creation and Home Control System focused on finding ‘warm’ experts who would be the support persons for the DIY ecosystems. A ‘warm’ expert is a person who has some degree of emotional ties to the (end-) users of the application they help to design and/or implement [105].

The principal expectations of the Home Control System user research related to component creation: what kind of components users wanted to create and what they expected to be ready-made. The studies revealed that the nurses, the ‘warm’ experts of the ecosystem in question, preferred ready-made templates for constructing the setups, so that their task would only be to fine-tune the templates. Similarly, Mackay [106], studying triggers and barriers to customizing software, found that simply providing a set of customization features does not ensure that users will take advantage of them. The process involves a trade-off: the choice between activities that accomplish work directly and activities that may increase future satisfaction or productivity. This was also an important finding of ours relating to user expectations of component creation.

According to Gershenfeld [107], digital recycling is about digital processes being reversible, based on an assumption that the means to make something are distinct from the thing itself. Further, the construction of digital materials can contain the information needed for their deconstruction. In all DiYSE projects the possibility of recycling templates and projects was presented as an option, but the developed proof-of-concept prototypes concentrated more on speculating about meaningful opportunities for reuse. In the contemporary digital world it should be easy to organize sustainable conditions in which things are not thrown away, but rather (scrap) materials and older projects are kept in store for recycling.

As regards the culture of collaboration, Kuznetsov and Paulos [96] clarify that DIY communities invite individuals across all backgrounds and skill levels to contribute, resulting in (1) rapid interdisciplinary skill building as people contribute and pollinate ideas across communities, and (2) increased participation supported by informal (“anything goes”) contributions such as comments, questions and answers. The study by Fischer et al. [108] also reveals that question asking and answering is the core process behind the propagation of methods and ideas, i.e., participants tend to “learn more by teaching and sharing with others”. Of the three DiYSE projects, Life Story Creation was the only one in which the users actually shared their work with each other during the evaluations, by providing instructions and comments or by just reading each other’s stories. According to our studies, collaboration has a central role when defining the efficiency and usefulness of the DIY system; collaboration provides most value for individual users. According to Kuznetsov and Paulos, DIY is a culture that strives to share together while working alone [96]. Social media was thought to be the entryway for publishing music in the Music Creation Tool and sharing memories in the Life Story Creation, and the people who were studied appreciated this opportunity. However, in both cases, the sharing of unfinished projects was seen to be unpleasant.

An important part of the user experience of the intelligent system is that it should motivate and support users to create the projects by means of subtle, non-harassing coaching. As a result, all users, regardless of their level of expertise, are able to use the system to the fullest [104]. In the Home Control System, several methods of subtle tuition were tried. The attempts comprised, e.g., step-by-step wizards that would guide the users through processes; a puzzle metaphor for connecting the components representing tasks and devices together; a sentence structure to support creating the setups; enlarging and diminishing grids; palette-type selection methods; and ultimately, voice feedback for detecting the objects of the environment [101]. It appeared that the level of expertise set the most critical requirements for the tuition method.

Smith et al. [109] have claimed that any textual computer language represents an inherent barrier to user understanding. According to them, a programming language is an artificial language that deals with the arcane world of algorithms and data structures; people do not want to think like a computer, but they do want to use computers to accomplish tasks they consider meaningful. The numerous tuition methods mentioned above illustrate how, in the development of the Home Control System, special attention was given to how the system would adapt to users’ terminology.

To make better use of what the physical environment offers, DIY environments should be equipped with multimodal system inputs. The Music Creation Tool consisted of tangible instruments that provided musical experiences, and one of the key objectives was to observe how users interacted with them in their environment. Observations surprisingly revealed that there were several (learned) expectations of corresponding analogue, tangible objects. A fine example of the use of physical modality is one of the alternatives considered for configuring the Home Control System. The research group developed the idea of taking a snapshot of the environment by physically selecting devices: a switch could first be turned on, and then its state would become visible in the user interface. In this way the user could better understand the control environment. According to Fischer et al. [108], this responds to the aim of reducing the cognitive burden of learning by shrinking the conceptual distance between actions in the real world and programming.
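The snapshot idea described above can be sketched in a few lines. This is only an illustrative sketch, not the Home Control System implementation: the function name `snapshot_selection`, the device names and the state strings are all hypothetical. It assumes the system can read device states before and after the user physically operates the devices, and treats every device whose state changed as selected.

```python
from typing import Dict

# Hypothetical representation: device name -> current state string.
DeviceStates = Dict[str, str]


def snapshot_selection(before: DeviceStates, after: DeviceStates) -> DeviceStates:
    """Return the devices whose state changed between two readings,
    together with their new state (the devices the user 'selected')."""
    return {
        device: state
        for device, state in after.items()
        if before.get(device) != state
    }


# Assumed readings taken before and after the user flips a physical switch.
before = {"hall_lamp": "off", "radio": "off", "door_lock": "locked"}
after = {"hall_lamp": "on", "radio": "off", "door_lock": "locked"}

selected = snapshot_selection(before, after)
print(selected)
```

In such a design the user never names or addresses a device in the interface; operating it in the real world is the selection step, which is the reduction in conceptual distance that the paragraph above refers to.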

At best, the do-it-yourself approach should respond to the challenge posed by the many different expectations of different kinds of users. The main value of the Home Control System, for the nurses, was the pre-configured tasks that they expected to ease their workload. An unexpected value, not anticipated initially, was that the system could also assist patients who cannot move from their beds. The Music Creation Tool observation period confirmed that the unexpected end-users’ needs were related to publishing music and performing for an audience. In the Life Story Creation, the interviewees anticipated that the system would respond to the need to trace back one’s personal history and learn about lost family ties.

In addition to the topics we identified in our DiYSE studies, other topics may also influence the value of DIY intelligent environments. One potential topic is context-awareness. Context-aware applications are applications that implicitly take their context of use into account by adapting to changes in a user's activities and environments [110]. Context-awareness has been successfully introduced in projects such as iCAP [111] and CAPpella [110]. iCAP is a tool that allows users to quickly define input devices that sense context and output devices that support response, create application rules with them, and test the rules by interacting with the devices in the environment. CAPpella empowers users to build context-aware applications that depend on intelligence, i.e., on making inferences based on sensed information about the environment.
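The rule-based style of end-user programming described above can be illustrated with a minimal sketch. It is not the iCAP or CAPpella API; the `Rule` class, the `evaluate` function, the context keys and the action strings are hypothetical names chosen for illustration. The sketch assumes that sensed context is available as a simple dictionary and that a rule pairs a condition on that context with an output action.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical sensed context, e.g. {"motion": True, "hour": 23}.
Context = Dict[str, object]


@dataclass
class Rule:
    name: str
    condition: Callable[[Context], bool]  # tests the sensed inputs
    action: str                           # hypothetical output command


def evaluate(rules: List[Rule], context: Context) -> List[str]:
    """Return the actions of all rules whose condition holds in the context."""
    return [rule.action for rule in rules if rule.condition(context)]


rules = [
    Rule(
        "night light",
        lambda c: c.get("motion") is True and c.get("hour", 12) >= 22,
        "hall_lamp:on",
    ),
    Rule(
        "stove reminder",
        lambda c: c.get("stove_on") is True and c.get("room") != "kitchen",
        "speaker:remind_stove",
    ),
]

# Testing the rules against a simulated context instead of live sensors.
simulated = {"motion": True, "hour": 23, "stove_on": False, "room": "hall"}
print(evaluate(rules, simulated))
```

Evaluating rules against a simulated context mirrors, in a very reduced form, the define-then-test workflow attributed to iCAP above; a tool that infers context from sensed data, as described for CAPpella, would replace the hand-written conditions with learned ones.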

6. Discussion

In ISTAG's vision of Ambient Intelligence [2], the human viewpoint is central: AmI should pursue user-friendliness, efficient service support, user-empowerment, and support for human interactions. Modifying Weiser [1], entering a properly implemented intelligent environment should, for the user, be as refreshing as taking a walk in the woods. And as Aarts and Grotenhuis [5] propose, intelligent environments should provide meaningful solutions that balance mind and body rather than solutions that drive people to maximum efficiency. The progress of technology can certainly be headed towards these human-driven aims, but we need to know more about specifically what kind of user expectations the technology should meet.