1. Introduction

High-resolution multichannel satellite images that combine high spatial resolution with spectral diversity are required in many image-processing applications, such as change detection, land-cover segmentation, and road extraction. However, because of limits on the signal-to-noise ratio, such images cannot be acquired by a single sensor [1]. Thus, most remote sensing satellites (e.g., WorldView-2, QuickBird, IKONOS) simultaneously provide a panchromatic (PAN) image with high spatial but low spectral resolution, and a multispectral (MS) image with complementary properties [2,3]. A pan-sharpening algorithm is then applied to merge the MS and PAN images into a high-spatial-resolution MS image that preserves the spectral information of the MS image. When the spatial details are obtained from a multispectral/hyperspectral sequence, the pan-sharpening algorithm is called hyper-sharpening [4].

In the last two decades, many pan-sharpening methods have been proposed to fuse MS and PAN images. A detailed comparison of the characteristics and performance of classical and well-known pan-sharpening methods was presented in [5]. Initial efforts mainly focused on component substitution (CS)-based methods, such as the principal component analysis (PCA) method [6], the intensity-hue-saturation (IHS) method [7,8], and the Gram–Schmidt (GS) method [9]. The primary concept of these CS-based methods is to first transform the upsampled MS image to a new space. If one component of the new space contains structures equivalent to those of the PAN image, fusion is performed by totally or partially substituting this component with the PAN image. Finally, the corresponding inverse transform is performed to obtain the high-resolution pan-sharpened image. These CS-based methods are fast and easy to implement, but they are not well suited to the latest generation of high-resolution remote sensing images. A common problem with these methods is serious color change caused by spectral distortion. The main reason for the spectral distortion is that the wavelength range of the PAN images from newer satellites extends from the visible to the near infrared [10]. A possible simple modification of these schemes, also applicable to CS methods, replaces the interpolated MS image with its deblurred version, where the deblurring kernel is matched to the modulation transfer function (MTF) of the MS sensor [11]. Recently, pan-sharpening has also been formulated as a compressive sensing reconstruction problem, but this scheme has high computational complexity [12,13].
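The component-substitution idea described above can be illustrated with a minimal sketch. It assumes the simplest linear intensity model, in which the intensity component is the equal-weight mean of the upsampled MS bands; under that assumption, substituting the intensity with the PAN image and inverting the transform is equivalent to adding the difference (PAN minus intensity) to every band. The function name `cs_pansharpen` and the nested-list image layout are illustrative choices, not part of any method cited here.

```python
def cs_pansharpen(ms_up, pan):
    """Component-substitution sketch with an equal-weight intensity (assumption).

    ms_up: list of bands, each a 2-D list of floats already upsampled to the PAN grid.
    pan:   2-D list of floats with the same height and width.
    """
    n_bands = len(ms_up)
    rows, cols = len(pan), len(pan[0])
    fused = [[[0.0] * cols for _ in range(rows)] for _ in range(n_bands)]
    for r in range(rows):
        for c in range(cols):
            # Intensity component: mean of the MS bands at this pixel.
            intensity = sum(band[r][c] for band in ms_up) / n_bands
            # Substituting the intensity with PAN adds this difference to each band.
            detail = pan[r][c] - intensity
            for b in range(n_bands):
                fused[b][r][c] = ms_up[b][r][c] + detail
    return fused


ms_up = [[[1.0, 2.0]], [[3.0, 4.0]], [[5.0, 6.0]]]  # three 1x2 bands
pan = [[4.0, 4.0]]
fused = cs_pansharpen(ms_up, pan)
```

After fusion, the mean of the fused bands at each pixel equals the PAN value, which is exactly why a PAN image with a different spectral response from the MS intensity leads to the color change discussed above.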

Multiresolution analysis (MRA)-based methods have also attracted interest in the pan-sharpening field [14,15]. These include traditional pyramid-based methods such as the Laplacian pyramid (LP) [16] and the gradient transform [17]; wavelet transform (WT)-based methods such as the discrete wavelet transform (DWT) [18] and the dual-tree complex wavelet transform (DTCWT) [19]; and emerging geometric analysis methods such as the Curvelet transform [20], the Contourlet transform [21], and the Shearlet transform [22]. The MRA approaches extract high-pass spatial detail from the PAN image and inject it into each band of the MS image, which is interpolated to the same resolution as the high-resolution PAN image [23]. Compared to the CS methods, the MRA methods better preserve the spectral characteristics in the sharpened image, but they usually suffer from spatial distortion problems, such as ringing or stair-casing phenomena [24].
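The detail-injection principle of the MRA family can be sketched in a few lines. The example below is a deliberately simplified stand-in: it uses a single box low-pass filter instead of a pyramid, wavelet, or geometric transform, and it injects the PAN detail into each band with unit gain. The names `box_lowpass` and `mra_inject`, the window radius, and the border handling (windows truncated at the image edge) are all assumptions made for illustration.

```python
def box_lowpass(img, radius=1):
    """Mean filter over a (2*radius+1)^2 window, truncated at the borders."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [img[rr][cc]
                      for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                      for cc in range(max(0, c - radius), min(cols, c + radius + 1))]
            out[r][c] = sum(window) / len(window)
    return out


def mra_inject(ms_up, pan, radius=1):
    """Add the high-pass part of PAN to every upsampled MS band (unit gain)."""
    low = box_lowpass(pan, radius)
    # High-pass detail = PAN minus its low-pass approximation.
    detail = [[p - l for p, l in zip(prow, lrow)] for prow, lrow in zip(pan, low)]
    return [[[m + d for m, d in zip(mrow, drow)]
             for mrow, drow in zip(band, detail)]
            for band in ms_up]


flat_pan = [[5.0] * 3 for _ in range(3)]
ms_up = [[[1.0] * 3 for _ in range(3)]]
unchanged = mra_inject(ms_up, flat_pan)          # a flat PAN carries no detail

impulse_pan = [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]]
sharpened = mra_inject(ms_up, impulse_pan)
```

Because only the high-pass part of the PAN image is added, the low-frequency (spectral) content of each MS band is left untouched, which is consistent with the better spectral preservation of MRA methods noted above.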

To overcome the limits of the MRA and CS methods, many researchers have combined the two to fuse MS and PAN images [25]. These hybrid methods are built on a CS framework: they fuse the representative component of the MS image with the PAN image by means of a multiscale transform and some predefined fusion rules. The corresponding inverse transform is then applied to the fused component and the other components of the MS image to obtain the final sharpened image. For example, Cheng et al. [26] jointly adopted IHS and WT to fuse IKONOS and QuickBird satellite images, applying WT to the intensity component of the MS image and to the PAN image; the spectral information of the resultant images was improved through this method. Dong et al. [27] combined IHS with the Curvelet transform to fuse remote sensing images, utilizing the better edge preservation property of the Curvelet transform to enhance the spatial quality of the fused images. Shah et al. [28] proposed an adaptive PCA and Contourlet transform-based pan-sharpening method, in which the adaptive PCA was used to reduce the spectral distortion. Ourabia et al. [29] sought a compromise between spatial resolution enhancement and spectral information preservation by using enhanced PCA and the nonsubsampled Contourlet transform (NSCT).

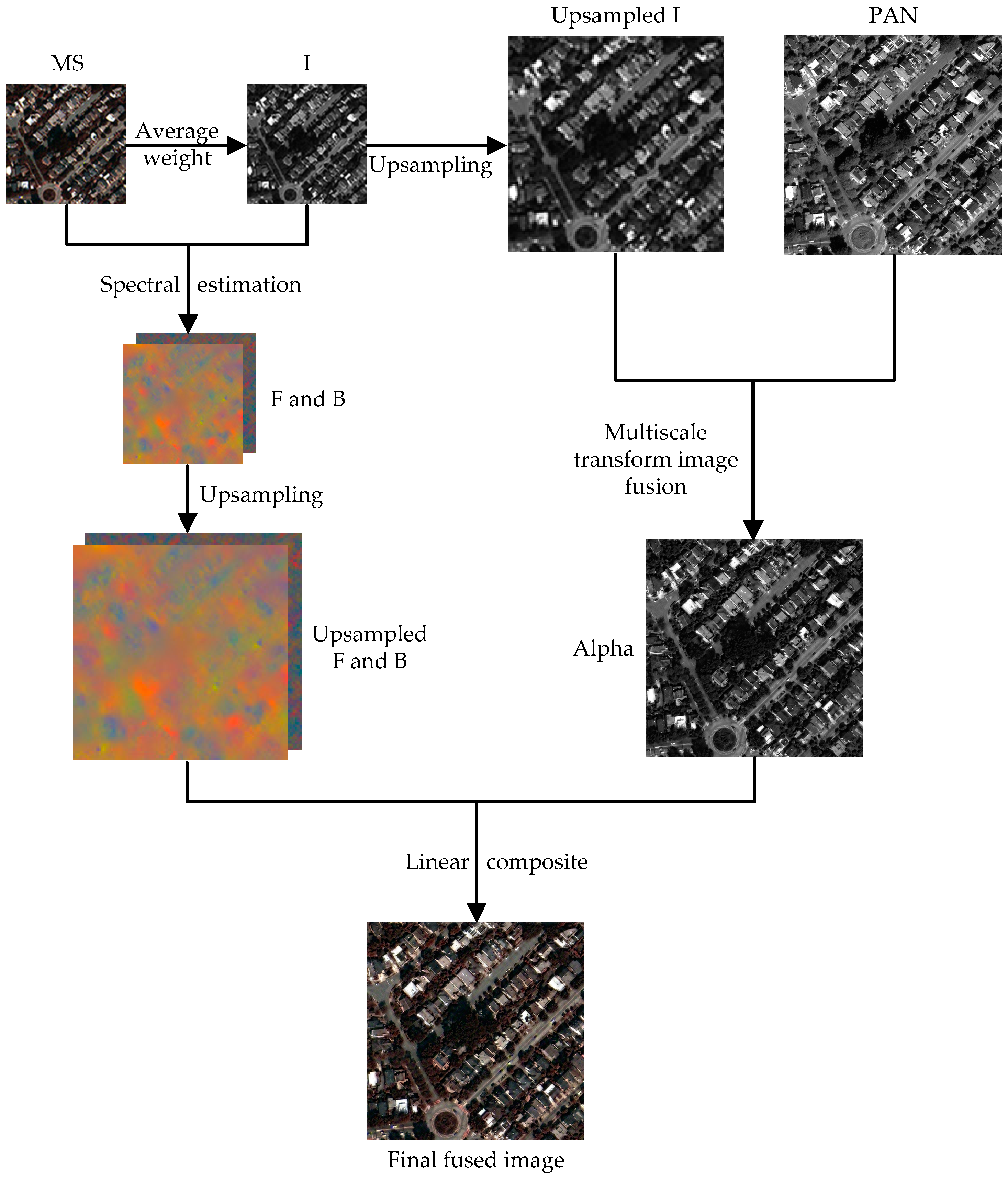

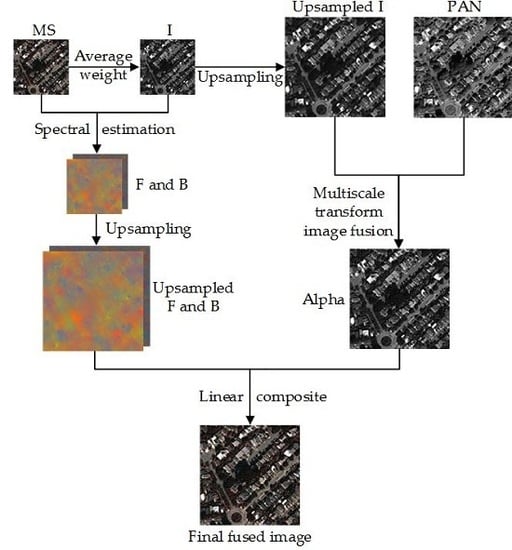

The foregoing hybrid methods improve performance to some extent compared to the earlier CS- or MRA-based pan-sharpening methods, but these frameworks also have drawbacks. For example, the IHS transform-based frameworks are only feasible for three-band images, and the PCA transform-based frameworks result in serious spectral distortion. To improve pan-sharpening performance, this paper proposes a novel pan-sharpening framework based on the matting model and multiscale transform. First, the I component of the MS image is selected as the alpha channel to estimate the foreground and background images under a local linear assumption. Then, the upsampled I component and the PAN image are fused using an arbitrary multiscale transform tool. Finally, a high-resolution sharpened MS image is obtained by a composition operation on the fused image with the upsampled foreground and background images. The proposed framework places no limit on the number of MS image bands; thus, it can be directly applied to MS images with three, four, or even eight bands.
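The final composition step of the framework can be sketched as follows, assuming the standard matting equation in which each band of an image is a per-pixel blend of a foreground and a background, image = alpha * foreground + (1 - alpha) * background, with alpha scaled to [0, 1]. Estimating the foreground and background from the I component (the optimization step) is not shown; the function name `compose` and the nested-list layout are hypothetical.

```python
def compose(alpha, foreground, background):
    """Per-band matting composition: alpha * F + (1 - alpha) * B.

    alpha:      2-D list of floats in [0, 1] (here, the fused image used as new alpha).
    foreground: list of bands (2-D lists), upsampled to the size of alpha.
    background: list of bands (2-D lists), upsampled to the size of alpha.
    """
    return [
        [[a * f + (1.0 - a) * b for a, f, b in zip(arow, frow, brow)]
         for arow, frow, brow in zip(alpha, fband, bband)]
        for fband, bband in zip(foreground, background)
    ]


alpha = [[0.0, 1.0, 0.5]]
foreground = [[[10.0, 10.0, 10.0]]]   # one band, 1x3 pixels
background = [[[2.0, 2.0, 2.0]]]
sharpened = compose(alpha, foreground, background)
```

The same blend is applied independently to every band, which is why a composition of this form does not depend on how many bands the MS image has.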

Furthermore, with regard to the multiscale transform, the classical WT has limited directional selectivity: it captures point-wise singularities well but cannot capture other types of salient features, such as lines. Therefore, WT often causes artifacts and Gibbs effects in the final fused results. To better represent higher-order singular features, researchers have put forward a number of more effective multiresolution geometric analysis tools, such as the aforementioned Curvelet and Contourlet transforms. These transforms are anisotropic and have good directional selectivity, so they can accurately represent image edge information at different scales and in different directions. However, because their decompositions include down-sampling operations, they lack shift invariance, which makes the fused result sensitive to noise or mis-registration of the source images. To overcome this disadvantage, Cunha et al. [30] proposed the NSCT, which is an improved version of the Contourlet transform. However, the computational complexity of NSCT is high, and thus the fusion process is time consuming.

In recent years, a multiresolution analysis tool named the nonsubsampled Shearlet transform (NSST) was put forward and has been applied extensively [31]. The NSST is shift invariant, multiscale, and multidirectional; during the multidirectional decomposition, it maps the standard shearing filters from the pseudo-polar grid directly into the Cartesian coordinate system. This mapping maintains the desirable multiscale analysis properties while drastically reducing computational complexity. Thus, we choose the NSST as the example transform in this paper because of its advantages over other multiscale transforms. The proposed framework can, however, be combined with any other multiscale transform if necessary.

In the multiscale transform image fusion process, the coefficient fusion rules are crucial. For the low-frequency coefficients, a gradient domain-based adaptive weighted averaging rule is proposed. Furthermore, as to the high-frequency coefficients, the simple but effective spatial frequency (SF) fusion rule is designed. Experiments on WorldView-2, QuickBird, and IKONOS satellite images demonstrate that the proposed method outperforms several state-of-the-art pan-sharpening methods in terms of both subjective and objective measures.
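To make the spatial frequency measure concrete, the sketch below uses the commonly cited definition: the square root of the mean squared horizontal and vertical first differences of a block. The selection rule shown (keep the block with the larger SF) is an assumption for illustration only; the rule designed in this paper operates on local windows of high-frequency coefficients and is described in Section 3. The names `spatial_frequency` and `pick_sharper` are hypothetical.

```python
import math


def spatial_frequency(block):
    """SF = sqrt(RF^2 + CF^2), with row/column frequencies from first differences."""
    rows, cols = len(block), len(block[0])
    # Row frequency term: squared differences between horizontal neighbours.
    rf = sum((block[r][c] - block[r][c - 1]) ** 2
             for r in range(rows) for c in range(1, cols))
    # Column frequency term: squared differences between vertical neighbours.
    cf = sum((block[r][c] - block[r - 1][c]) ** 2
             for r in range(1, rows) for c in range(cols))
    return math.sqrt((rf + cf) / (rows * cols))


def pick_sharper(block_a, block_b):
    """Illustrative rule: keep the block whose spatial frequency is larger."""
    if spatial_frequency(block_a) >= spatial_frequency(block_b):
        return block_a
    return block_b


flat = [[3.0, 3.0], [3.0, 3.0]]
edge = [[0.0, 1.0], [0.0, 1.0]]
chosen = pick_sharper(flat, edge)
```

A flat block has zero spatial frequency, so a rule of this kind favors the source whose coefficients carry more local activity, such as edges and texture.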

This paper is organized as follows. Section 2 reviews the matting model and NSST theory, Section 3 introduces the proposed method in detail, and Section 4 presents the evaluation metrics and discusses the fusion results. Conclusions and future work are presented in Section 5.

4. Experiments and Discussion

To evaluate the performance of the proposed pan-sharpening framework, experiments were performed on three different satellite datasets, which are introduced in detail below. Several common evaluation indexes in the pan-sharpening field are then explained. Finally, the proposed framework is compared with IHS- and PCA-based methods and with other state-of-the-art pan-sharpening methods.

4.1. Datasets

In this paper, the proposed method was conceived for very high-resolution datasets, and it has been tested on data acquired by some of the most advanced optical remote sensing systems. These include WorldView-2, QuickBird, and IKONOS; their main characteristics are summarized in Table 1 and Table 2. This selection of sensors allows us to study the robustness of the proposed method with respect to both spectral and spatial resolution [35].

The WorldView-2 dataset used in this paper was obtained from [36], an open data-sharing platform; it was collected by the Beijing Key Laboratory of Digital Media. The sizes of the MS images and PAN images in this dataset are

and

, respectively. Reference images are also provided in this dataset, so we used them directly as the standard for objective evaluation. Although WorldView-2 is an 8-band MS sensor, this database provides only bands 5 (R), 3 (G), and 2 (B) of the MS image as the true-color image. To check the performance of the proposed framework on multiband images (more than three bands), two other datasets, IKONOS [37] and QuickBird [38], were utilized. Both datasets were captured by four-band MS sensors. The sizes of the MS image and PAN image of IKONOS used in this paper are

and

, respectively; those for QuickBird images are

and

, respectively. Because there is no high-resolution MS image in these two datasets, we degraded the original MS and PAN images by a factor of 4 using the protocol in [39] to yield the MS and PAN images of IKONOS with pixel size

and

, and yield the MS and PAN images of QuickBird with pixel size

and

. The corresponding original MS images were then used as the reference images against which the fused images were compared.
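The reduced-resolution evaluation described above can be sketched as follows. This is only an illustration of degrading an image by a factor of 4: it uses plain block averaging as the low-pass and decimation step, which is an assumption, and the protocol in [39] may prescribe a different filter (for example, one matched to the sensor MTF). The function name `degrade` is hypothetical.

```python
def degrade(img, factor=4):
    """Reduce resolution by averaging non-overlapping factor x factor blocks.

    img: 2-D list of floats whose height and width are multiples of `factor`.
    """
    rows, cols = len(img) // factor, len(img[0]) // factor
    return [
        [sum(img[r * factor + i][c * factor + j]
             for i in range(factor) for j in range(factor)) / factor ** 2
         for c in range(cols)]
        for r in range(rows)
    ]


original = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # values 0..15
low_res = degrade(original)        # a single pixel holding the block mean
constant = degrade([[4.0] * 8 for _ in range(8)])
```

After both the MS and PAN images are degraded in this way, fusing them yields an image at the resolution of the original MS image, which can therefore serve as the reference.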

4.2. Quality Assessment of Fusion Results

Generally, we can measure the performance of an image fusion method through subjective and objective evaluations. The clarity of the objects and the proximity of the colors between the fused images and the original MS images are usually taken into account for subjective evaluation. However, it is difficult to compare the fusion quality accurately based solely on subjective evaluation.

To quantitatively evaluate the image fusion methods, several indexes are adopted to assess the performance of different fusion methods. In the experiments, seven well-known indexes are used and introduced in detail as follows:

- (1) Correlation Coefficient (CC): The CC between the fused image and the reference image reflects the similarity of their spectral features. The closer the CC is to 1, the more strongly the two images are correlated.

- (2) Universal Image Quality Index (UIQI) [40]: The UIQI is used to measure the inter-band spectral quality of the fused image, the optimum value of which is 1. A value closer to 1 indicates that the quality of the fused image is better.

- (3) Root Mean Square Error (RMSE) [41]: The RMSE is widely used to assess the difference between the fused image and the reference image by calculating the changes in pixel values. The smaller the RMSE, the closer the fused image is to the reference image.

- (4) Relative Average Spectral Error (RASE) [42]: The RASE reflects the average performance of the fusion method in terms of spectral error. The ideal value of RASE is 0.

- (5) Spectral Angle Mapper (SAM) [43]: The SAM reflects the spectral distortion between the fused image and the reference image. A smaller SAM value denotes less spectral distortion in the fused image.

- (6) Erreur Relative Global Adimensionnelle de Synthèse (ERGAS) [44]: The ERGAS reflects the overall quality of the fused image. It represents the difference between the fused image and the reference image. A small ERGAS value means small spectral distortion.

- (7) Quality with No Reference (QNR) [45]: The QNR, which is composed of a spectral distortion index and a spatial distortion index, reflects the overall quality of the fused image. The best value of QNR is 1. It is typically used when no reference image is available for quantitative evaluation. In this paper, we used the reference image instead of the original MS image for the spectral assessment.
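Four of the reference-based indexes listed above can be written compactly. The sketch below uses their commonly cited forms; the exact variants in the cited references may differ in normalization. The assumptions are: each band is a flat list of pixel values, spectra are non-zero, SAM is averaged over pixels and reported in degrees, and the ERGAS resolution ratio is 1/4 (PAN pixel size over MS pixel size). All function names are illustrative.

```python
import math


def rmse(fused, ref):
    """Root mean square error between two bands (flat lists)."""
    return math.sqrt(sum((f - r) ** 2 for f, r in zip(fused, ref)) / len(ref))


def cc(fused, ref):
    """Pearson correlation coefficient between two bands."""
    mf, mr = sum(fused) / len(fused), sum(ref) / len(ref)
    cov = sum((f - mf) * (r - mr) for f, r in zip(fused, ref))
    var_f = sum((f - mf) ** 2 for f in fused)
    var_r = sum((r - mr) ** 2 for r in ref)
    return cov / math.sqrt(var_f * var_r)


def sam_degrees(fused_bands, ref_bands):
    """Mean angle between fused and reference spectral vectors, in degrees."""
    n_pix = len(ref_bands[0])
    total = 0.0
    for p in range(n_pix):
        v = [band[p] for band in fused_bands]
        w = [band[p] for band in ref_bands]
        dot = sum(a * b for a, b in zip(v, w))
        norm = math.sqrt(sum(a * a for a in v)) * math.sqrt(sum(b * b for b in w))
        # Clamp to [-1, 1] to guard against rounding before acos.
        total += math.acos(max(-1.0, min(1.0, dot / norm)))
    return math.degrees(total / n_pix)


def ergas(fused_bands, ref_bands, ratio=0.25):
    """100 * ratio * sqrt(mean over bands of (RMSE_b / mean_b)^2)."""
    acc = 0.0
    for f, r in zip(fused_bands, ref_bands):
        acc += (rmse(f, r) / (sum(r) / len(r))) ** 2
    return 100.0 * ratio * math.sqrt(acc / len(ref_bands))
```

Note that SAM is insensitive to a uniform scaling of the spectrum (a fused pixel that is twice as bright but has the same band proportions gives an angle of zero), whereas RMSE and ERGAS penalize such a radiometric offset; this is one reason the indexes are reported together.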

4.3. Performance Comparison with IHS- and PCA-Based Pan-Sharpening Methods

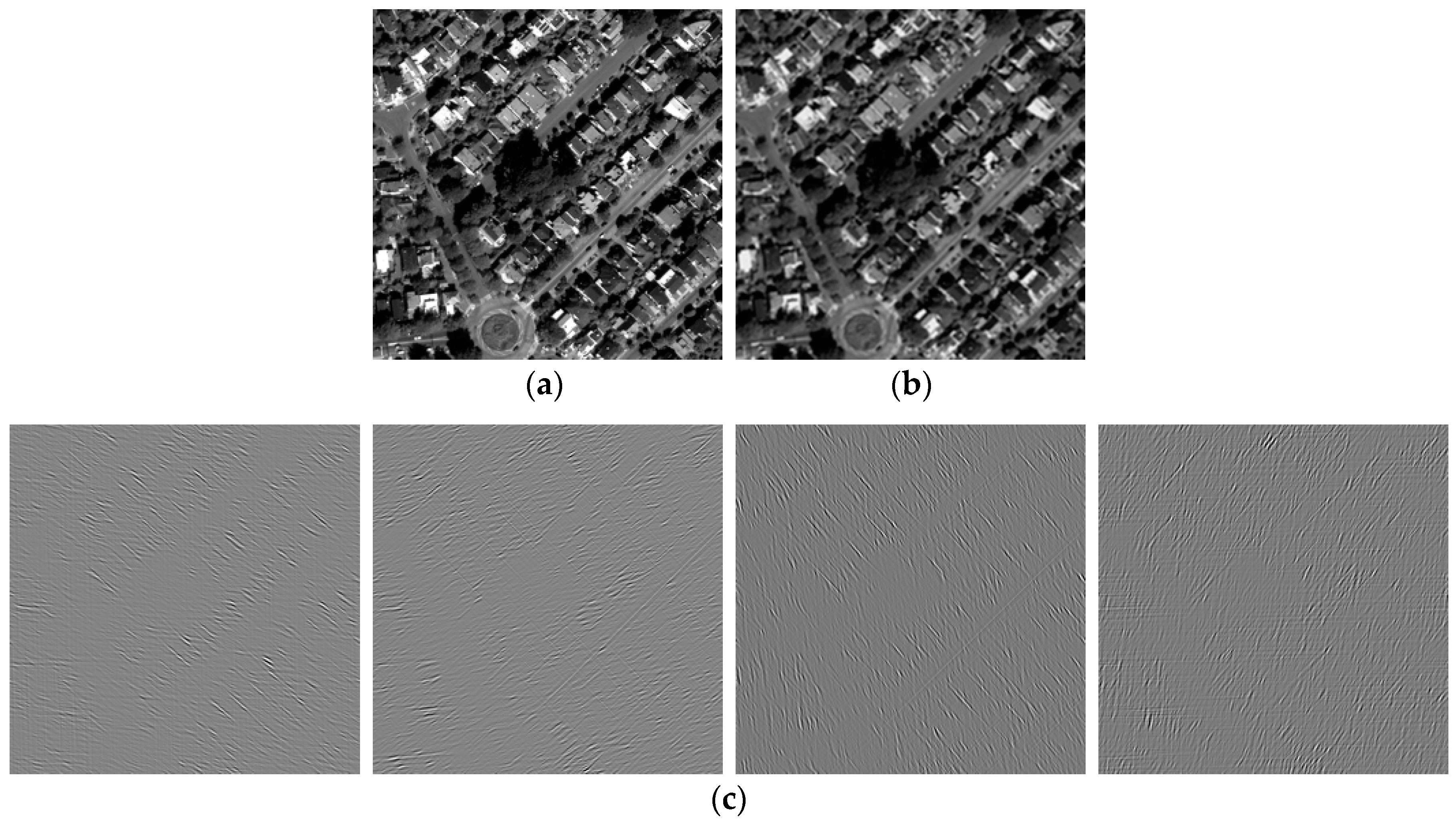

IHS- and PCA-based hybrid methods are classical pan-sharpening methods, and a great number of studies build on these two transforms. To compare the IHS- and PCA-based joint frameworks with the proposed matting model framework, we ran experiments on a WorldView-2 dataset with the multiscale transform fixed: a simple three-level WT served as the multiscale transform tool, and the simple average and max rules were used to fuse the low- and high-frequency coefficients, respectively. The fused results and their corresponding objective evaluation are shown in Figure 6 and Table 3, respectively. The CC, UIQI, RASE, RMSE, SAM, ERGAS, and QNR are used to assess the quality of the fused images. The numbers in brackets refer to the ideal index values.
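The two baseline coefficient rules used in this comparison can be sketched as follows. The low-frequency rule is a plain element-wise average. For the high-frequency rule, the sketch assumes that "max" means keeping the coefficient with the larger absolute value, which is the usual choice for wavelet detail coefficients but is not stated explicitly here. The wavelet decomposition and reconstruction themselves are not shown, and the function names are hypothetical.

```python
def fuse_low(coef_a, coef_b):
    """Average rule for low-frequency (approximation) coefficients."""
    return [[(x + y) / 2.0 for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(coef_a, coef_b)]


def fuse_high(coef_a, coef_b):
    """Max rule for high-frequency coefficients (larger magnitude wins; assumption)."""
    return [[x if abs(x) >= abs(y) else y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(coef_a, coef_b)]


low = fuse_low([[2.0, 4.0]], [[4.0, 0.0]])
high = fuse_high([[1.0, -5.0]], [[-3.0, 2.0]])
```

Comparing magnitudes rather than signed values matters because detail coefficients are signed: a strong edge can produce a large negative coefficient that a signed maximum would discard.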

Figure 6a is the MS image sized

;

Figure 6b is the PAN image sized

;

Figure 6c is the reference image.

Figure 6d–f are the IHS, PCA, and proposed framework fused results. Because all three results are of high quality, it is difficult to rank them by visual inspection alone, except that the IHS-fused image exhibits slight spectral distortion relative to the reference image. However, Table 3 shows that the proposed framework outperforms the IHS- and PCA-based methods in terms of both the spatial and spectral objective quality evaluation indexes. Its implementation efficiency is also high, although it is slower than the IHS and PCA methods because of the optimization algorithm in the matting model; this gap is expected to narrow with the development of graphics processing unit (GPU) technology. Thus, the proposed framework is feasible and effective in the pan-sharpening field.

4.4. Comparison with State-of-the-Art Methods

Several experiments were carried out to compare the pan-sharpening performance of the proposed framework with that of existing state-of-the-art approaches. Source images from different satellite datasets and with different types of land cover were used to test the robustness of the proposed framework. In this part, the proposed framework is combined with DWT (with the experimental settings noted previously) and with NSST, and is compared with the following pan-sharpening approaches, most of which have appeared in recent years.

- (1) DWT–SR: DWT and Sparse Representation-based method [26];

- (2) Curvelet: Curvelet transform-based method [27];

- (3) NSST–SR: NSST and Sparse Representation-based method [46];

- (4) GF: Guided Filter-based method [47];

- (5) AWLP: Additive Wavelet Luminance Proportional method, a generalization of AWL [48];

- (6) BFLP: Bilateral Filter Luminance Proportional method [49];

- (7) MM: Matting model and component substitution-based method [33];

- (8) MM–DWT: The proposed framework and DWT-based method;

- (9) MM–NSST: The proposed framework and NSST-based method.

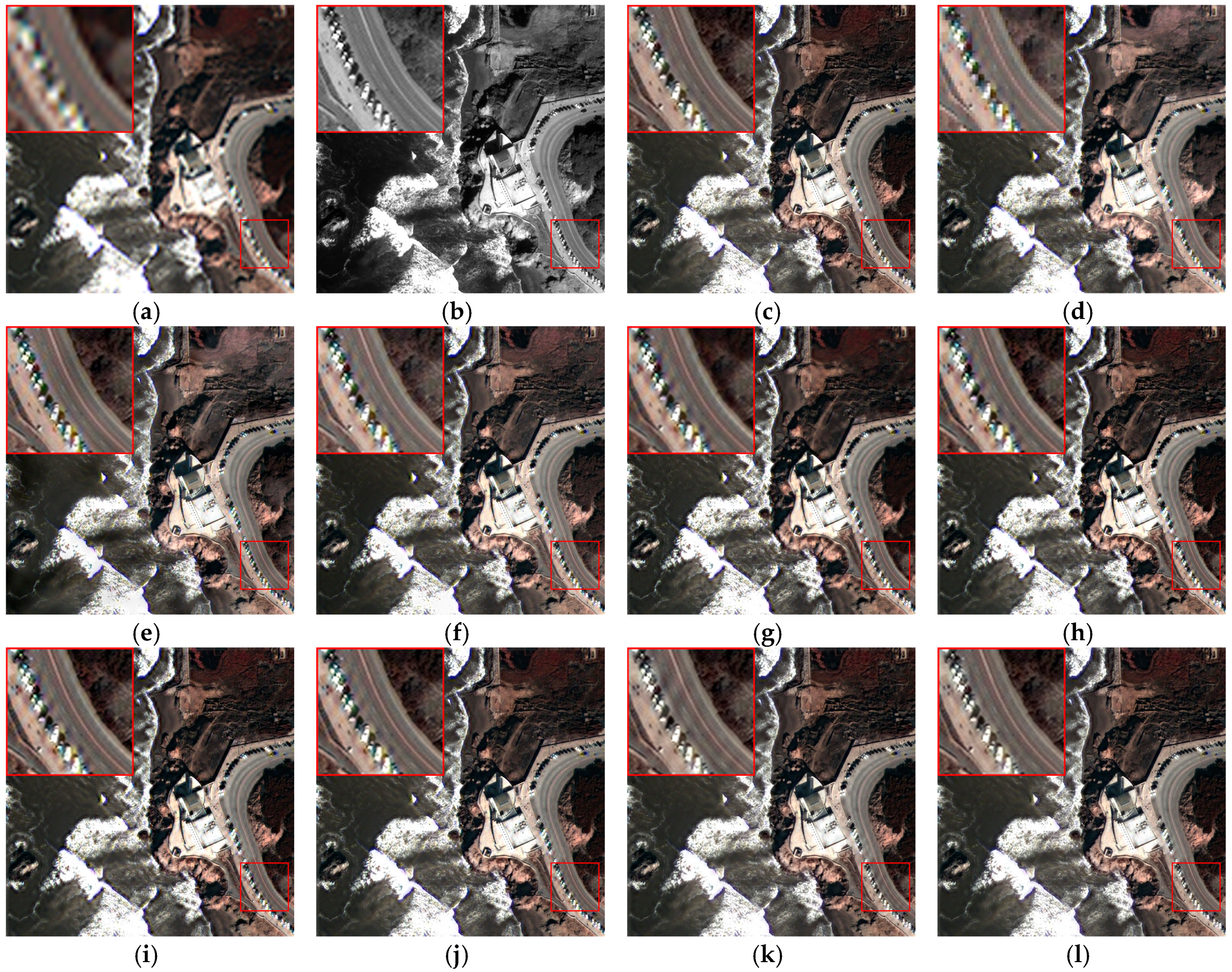

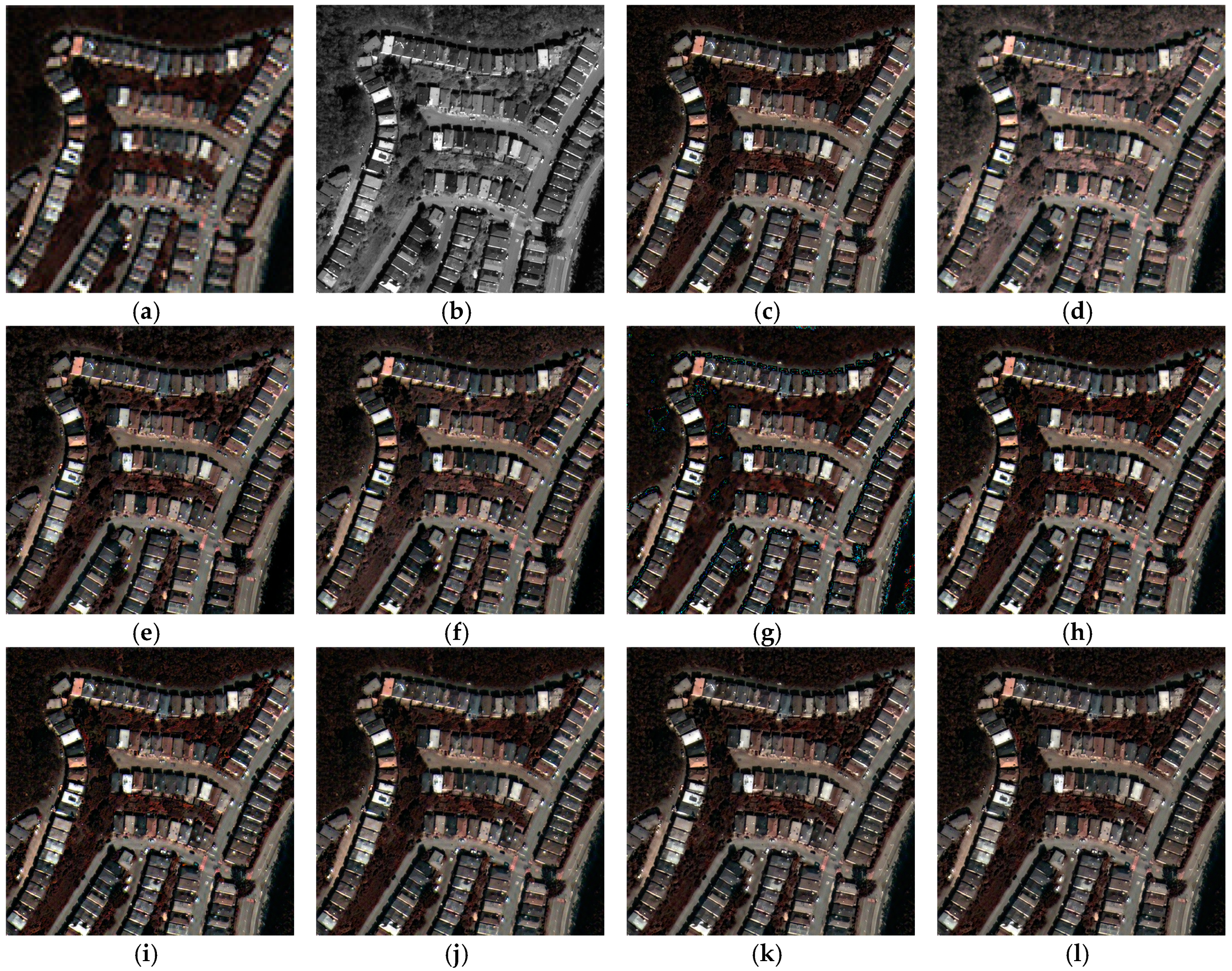

The first group of experiments was performed on a coastal area from the WorldView-2 dataset. Figure 7 shows the source images and their fusion results: Figure 7a,b are the MS image and PAN image; Figure 7c is the ground truth image; and Figure 7d–l are the fusion results of the different pan-sharpening methods. To show the fusion results more clearly, a cropped sub-image is placed at the top-left corner of each fused image. A visual comparison indicates that the DWT–SR and Curvelet results exhibit spectral distortion; the result of the NSST–SR method shows some improvement, but not a remarkable one. The GF, AWLP, and BFLP methods preserve spectral information better overall. However, the sub-images reveal serious artifacts around the cars. The fusion results of the MM, MM–DWT, and MM–NSST methods are all close to the reference image, particularly in the spectral aspect, which indicates the superiority of the matting model in spectral preservation. Admittedly, the MM–DWT result has spatial distortion in the road area. Owing to the shift invariance of NSST, the proposed MM–NSST method suppresses these artifacts effectively; thus, in addition to its outstanding spectral information, this method also achieves high spatial quality.

Table 4 presents the objective evaluation results for Figure 7. Although the best SAM value is achieved by the MM method, the proposed MM–NSST method obtains the best values in the other indexes as well as the second-best SAM value. In summary, the proposed pan-sharpening framework achieves outstanding performance in terms of both spatial detail acquisition and spectral preservation.

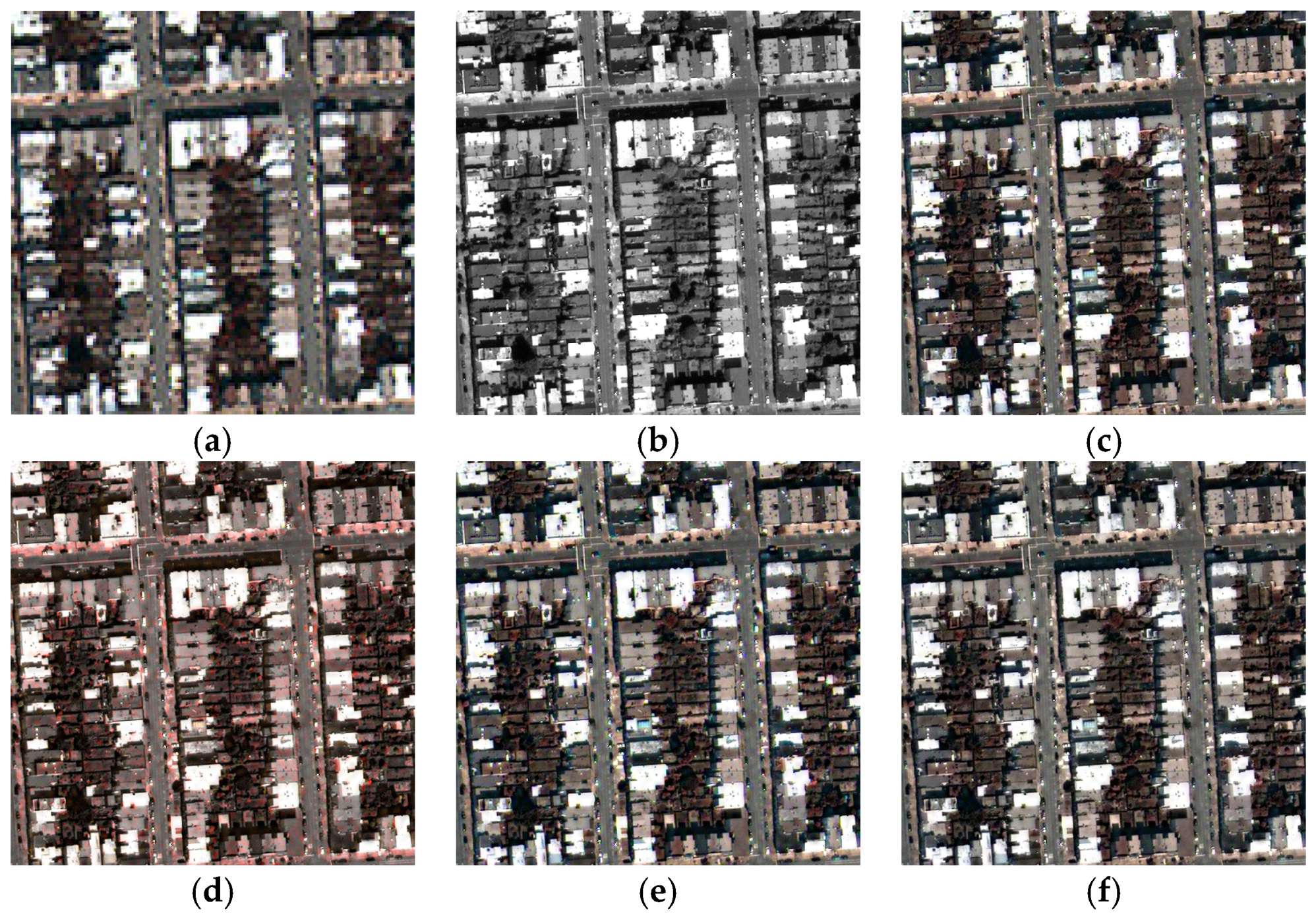

To assess performance on different land cover types, the second group of experiments was conducted on the WorldView-2 dataset with urban land cover. Figure 8a–c are the source MS image, the PAN image, and the reference image, respectively. Figure 8d–l and Table 5 display the fused images and the objective evaluation results of the different methods. Both spatial and spectral information were analyzed. Owing to the characteristics of the objects in this area, most methods produce fused images with good visual quality that approximate the reference image; the exceptions are the DWT–SR result, which suffers from slight spectral distortion, and the AWLP and BFLP results, which show some degree of spatial blurring. Therefore, subjective evaluation does not clearly separate the methods. However, the objective quality comparison in Table 5 indicates that the proposed method obtained the best values for all evaluation indexes; thus, the proposed method achieves the highest spatial quality while maintaining the best spectral information.

The third group of experiments was performed on a suburban area from the WorldView-2 dataset. Suburban areas usually contain abundant vegetation cover, which is commonly used for spectral comparison. Figure 9a is the low-resolution MS image, and Figure 9c is the high-resolution MS reference image. Using these two MS images as spectral references, we can visually compare the spectral preservation performance of the different pan-sharpening approaches. The spectral distortion of the DWT–SR method is the most serious, particularly in the vegetation area, although this method obtains good spatial quality. The Curvelet and NSST–SR methods show some improvement. The GF method exhibits varying degrees of spectral distortion and introduces some spectral information that does not exist in the source MS image. The AWLP, BFLP, MM, and proposed methods preserve spectral information better. Table 6 shows the objective quality assessments for this group of experiments, from which we can see that the proposed MM–NSST method obtains the best results compared with the other methods.

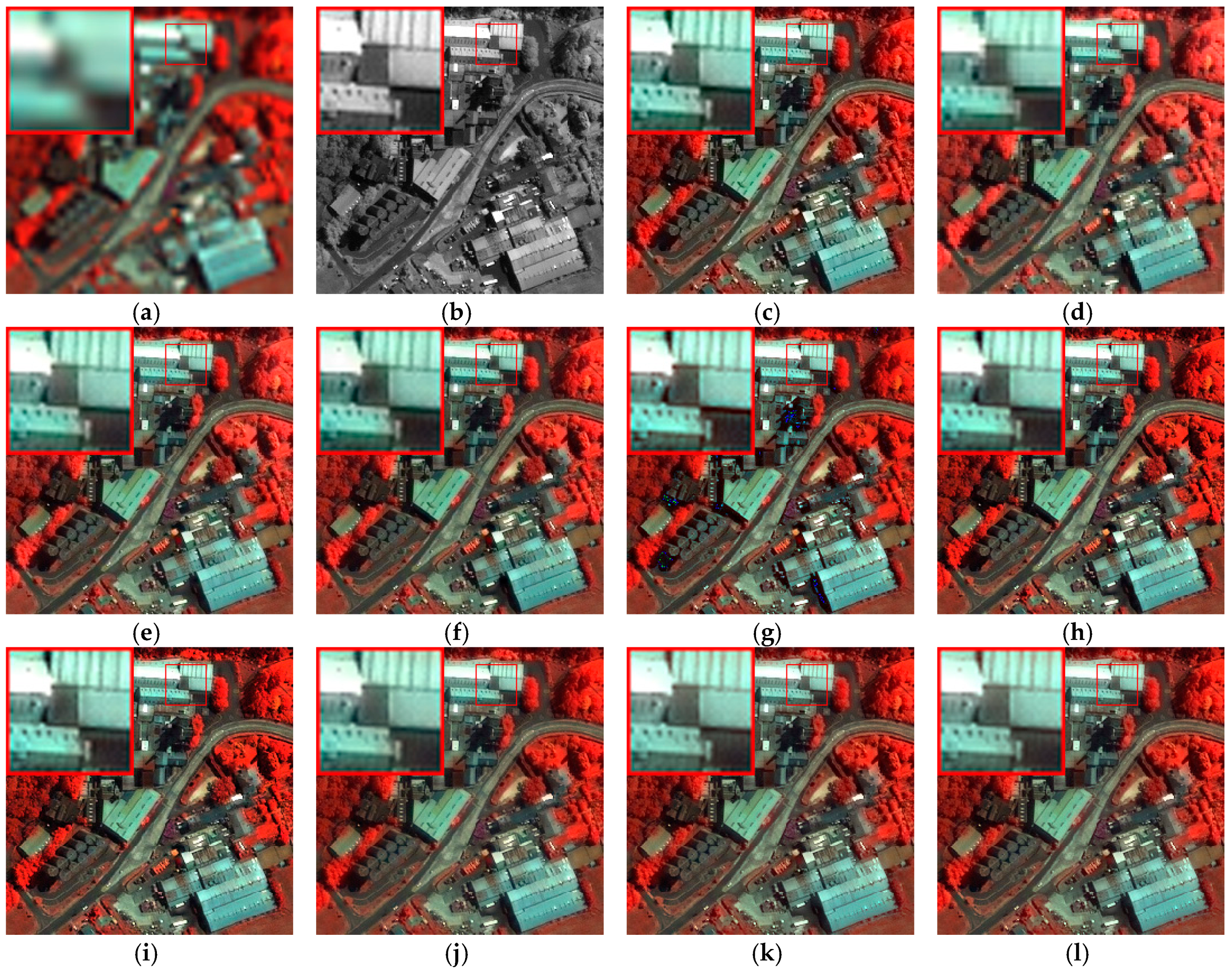

The IKONOS dataset was used in the fourth group of experiments to compare the performance of the proposed framework on images from a different satellite. The MS image of this dataset includes four bands (near-infrared (NIR), red, green, and blue). We used the NIR, green, and blue bands for display. Figure 10a,b are the degraded MS image and PAN image; Figure 10c is the original MS image, which is used as the reference image. As before, cropped sub-images are placed at the top-left corner of the fused images. Compared with the reference image, the fusion performance of the DWT–SR method is unsatisfactory in both the spatial and spectral aspects. The Curvelet and NSST–SR methods improve the spatial aspect, but their spectral quality needs further improvement. The GF method result still suffers from spectral distortion. The other resultant images look similar to the original MS image. The objective evaluation results in Table 7 indicate that the proposed MM–NSST method obtains the best values in six indexes and the second-best QNR value, which demonstrates the superiority of our framework on the IKONOS dataset.

The dataset used in the last group of experiments was obtained from the QuickBird satellite; its MS image also consists of four bands. We displayed the same three-band color composite as for the IKONOS dataset. These experimental data cover suburban regions with a great deal of vegetation, which makes spectral comparison more convenient. Figure 11 shows that all the pan-sharpening methods achieved decent spatial quality. The DWT–SR and Curvelet methods show slight spectral distortion compared to the reference image, and the spectral distortion of the GF method result is much less pronounced on this dataset. The other methods preserve the spectral information of the MS image effectively. Table 8 shows that the proposed MM–DWT method obtains the best performance in the QNR index and that the proposed MM–NSST method obtains the best values in the other indexes. Thus, the superiority of the proposed pan-sharpening framework is demonstrated again.

4.5. Contrast on Running Time of Multiscale Transform-Based Methods

In this part, the operating efficiency of the proposed method is analyzed. Because the proposed pan-sharpening framework is based on the multiscale transform, comparison with non-multiscale transform methods is not meaningful. Thus, only the multiscale transform-based methods are compared. Table 9 compares the average running times, over the preceding five groups of experiments, of the multiscale transform-based methods in Section 4.4. The running time of the multiscale transform-based pan-sharpening approaches is mainly determined by the transform method and the fusion rules. The SR-based fusion rule is clearly time consuming. Because of its more complex decomposition and reconstruction procedures, the NSST requires more time in multiscale transform image fusion than the DWT and Curvelet approaches, but its effectiveness was demonstrated in the foregoing part. In addition, the proposed framework combined with DWT is the fastest of the multiscale transform-based methods. It should also be noted that, in the previous five groups of experiments, this method achieved the desired performance (particularly on the IKONOS and QuickBird datasets), although the simplest fusion rules were used. Thus, the proposed framework can satisfy the requirements of both efficiency and effectiveness if an appropriate multiscale transform method and fusion rules are used.

4.6. Implementation Details

In this section, we provide some implementation details for the proposed method and the comparative methods. All code was implemented in MATLAB 8.3 (running on a Windows 10 PC with an Intel Core i5-350 3.30-GHz processor and 8 GB of memory). All resampling operations in this paper use bicubic interpolation. In our method, a three-level NSST decomposition with a (30, 40, 60) shearing filter matrix and (2, 3, 4) direction parameters is applied to the upsampled I component and to the matched PAN image. The pyramid filter is the ‘pyrexc’ wavelet. The parameter in the NSST low-frequency coefficient fusion is 99, and the window size of the local SF in high-frequency coefficient fusion is . The other pan-sharpening techniques used for performance comparison are well known; the experimental parameters of these methods are set as in the corresponding references.

5. Conclusions

Spectral image pan-sharpening is an important process in remote sensing research and applications. Addressing existing problems in the pan-sharpening area, this paper presented a new pan-sharpening framework that exploits the matting model and multiscale transform. The proposed framework first obtains the spectral foreground and spectral background of the low-resolution MS image with a matting model, in which the I component of the MS image is used as the alpha channel. Then, the PAN image and the upsampled I component are fused using a proposed multiscale image fusion method. The fused image is used as a new alpha channel to obtain a high-resolution MS image through a linear combination with the upsampled spectral foreground and spectral background images. In this paper, the NSST was introduced in detail as an example of a multiscale transform. To improve the performance of our method, an adaptive weighted averaging rule in the gradient field and a local SF rule were proposed to fuse the low-frequency and high-frequency sub-band coefficients, respectively. Experimental results on different satellite datasets and different land cover types show that the proposed method captures more spatial detail from the PAN image while preserving more spectral information from the MS image than several existing pan-sharpening methods. Therefore, the results produced by the proposed method could be better used in remote sensing applications such as image interpretation, water quality evaluation, and vegetation research. Importantly, the proposed framework can be combined with any multiscale transform tool for pan-sharpening; thus, this framework has bright prospects.

In future work, we would like to improve the effectiveness of the proposed framework by constructing more powerful multiresolution analysis tools with different wavelet basis functions. We would also like to extend the range of application to diverse sensor data, such as optical and radar remote sensing images, or thermal infrared and PAN images. Moreover, as in most of the pan-sharpening literature, we used completely registered satellite images for the experiments. We plan to collect datasets with temporal and instrumental changes and to analyze the robustness of the proposed framework on them.