Performance Evaluation of Downscaling Sentinel-2 Imagery for Land Use and Land Cover Classification by Spectral-Spatial Features

Abstract

1. Introduction

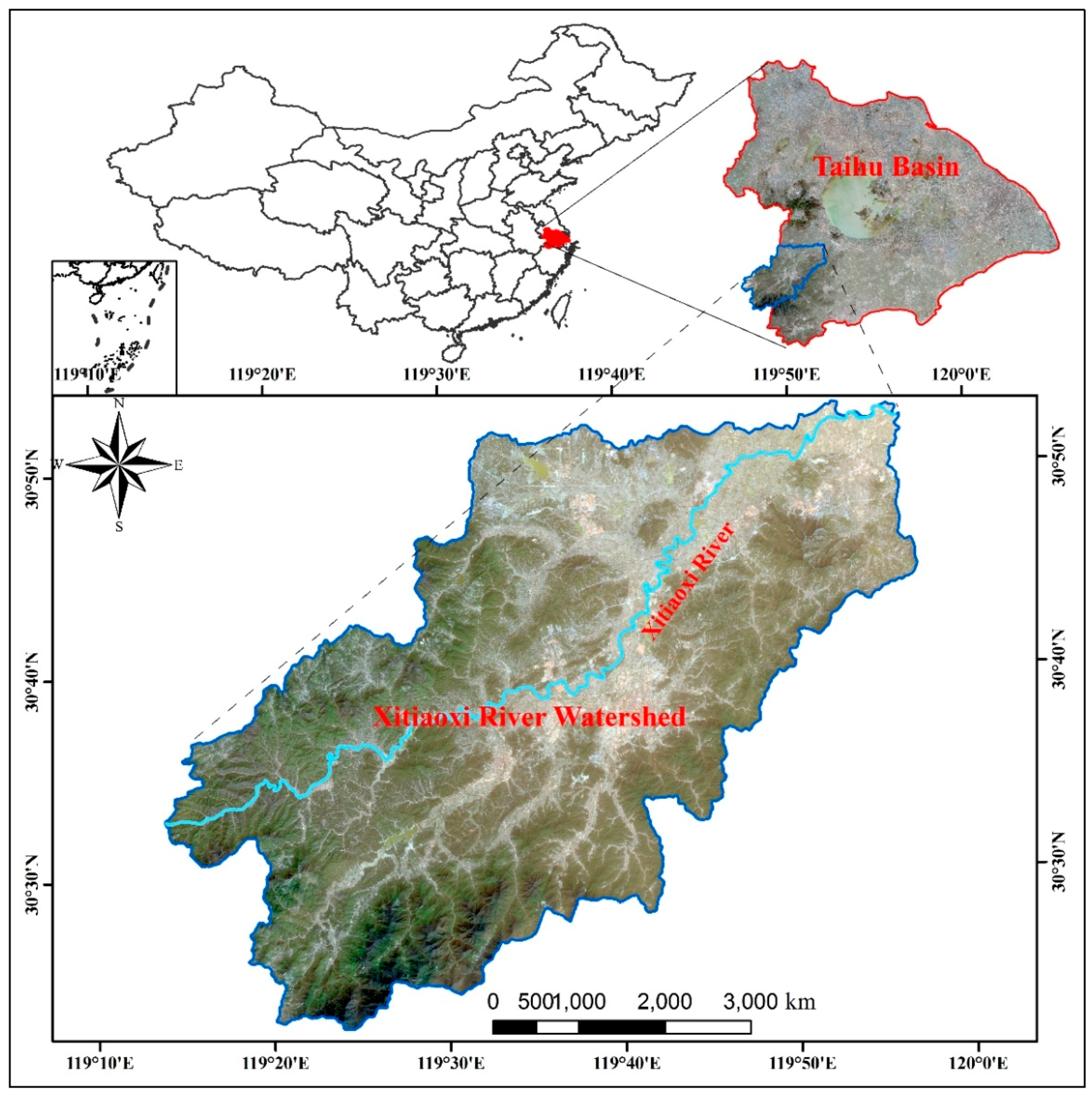

2. Study Area and Data

2.1. Study Area

2.2. Data

3. Methods

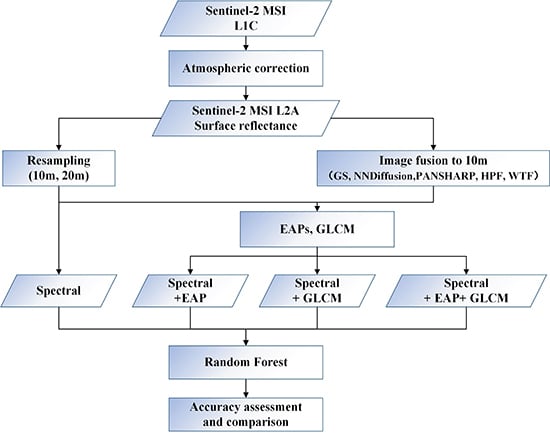

3.1. LUCC Classification Procedure

3.2. Geometric Unification Schemes by Upscaling and Downscaling

3.3. Feature Sets

3.4. Random Forest Classifier

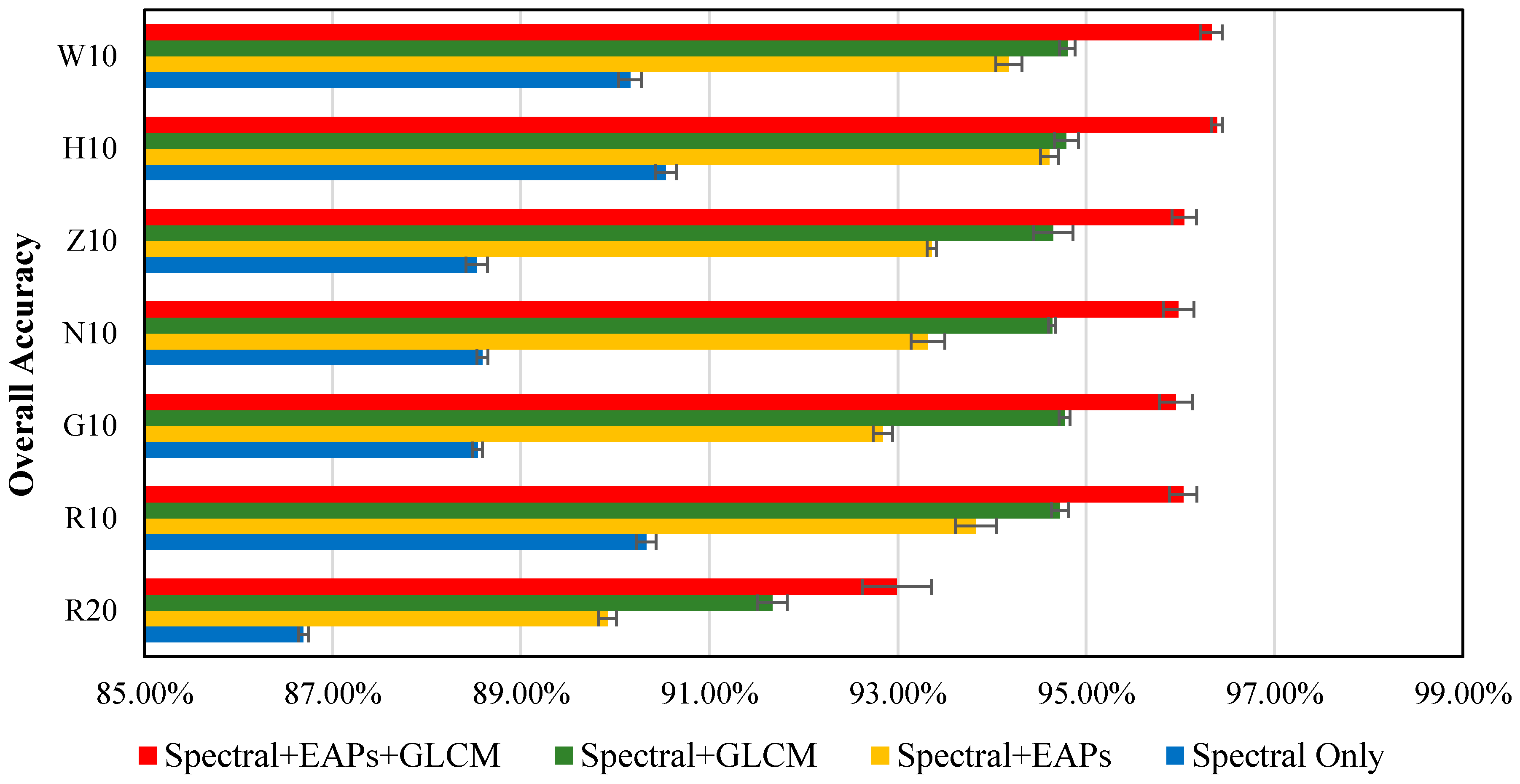

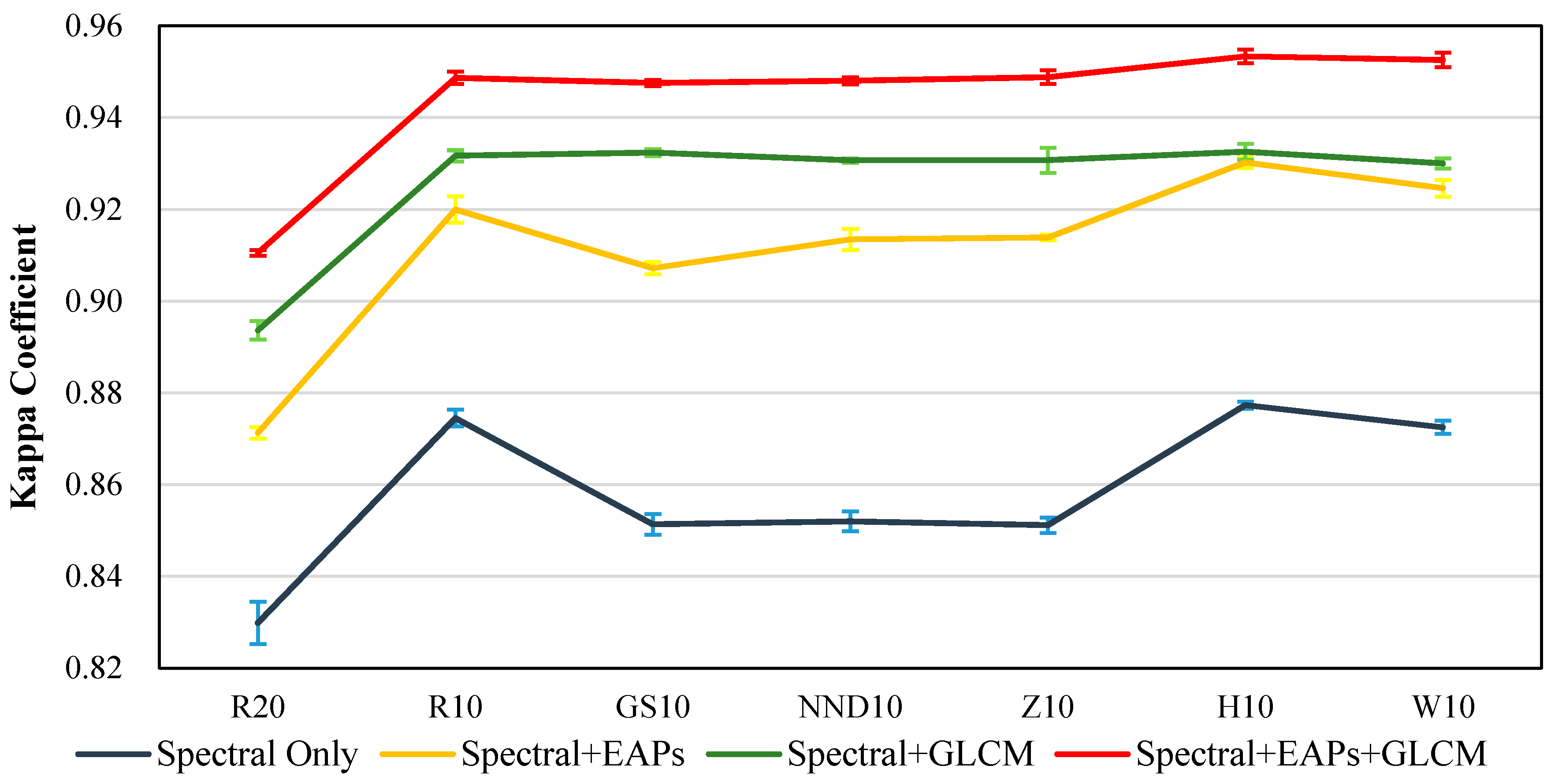

4. Classification Results and Discussion

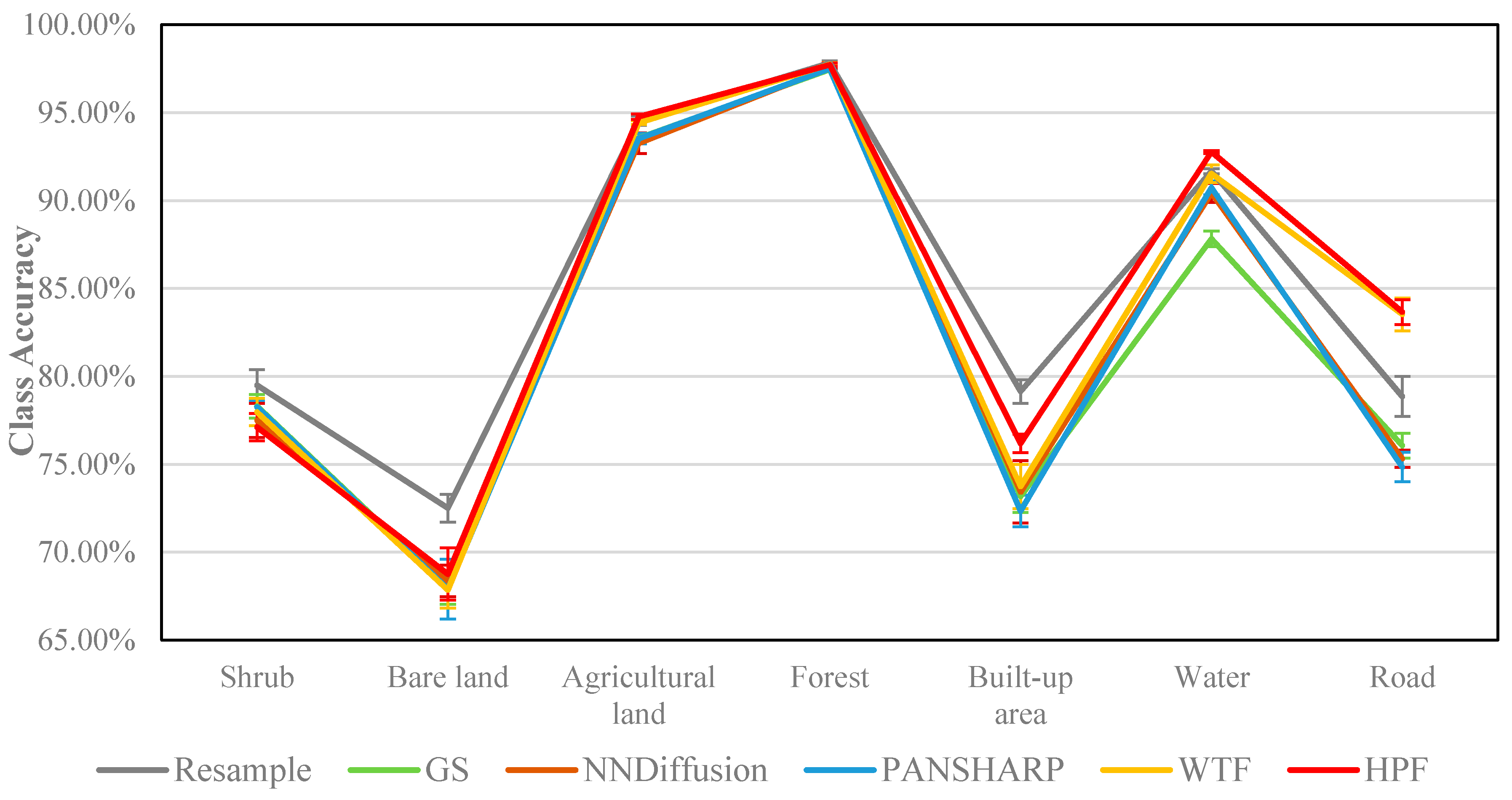

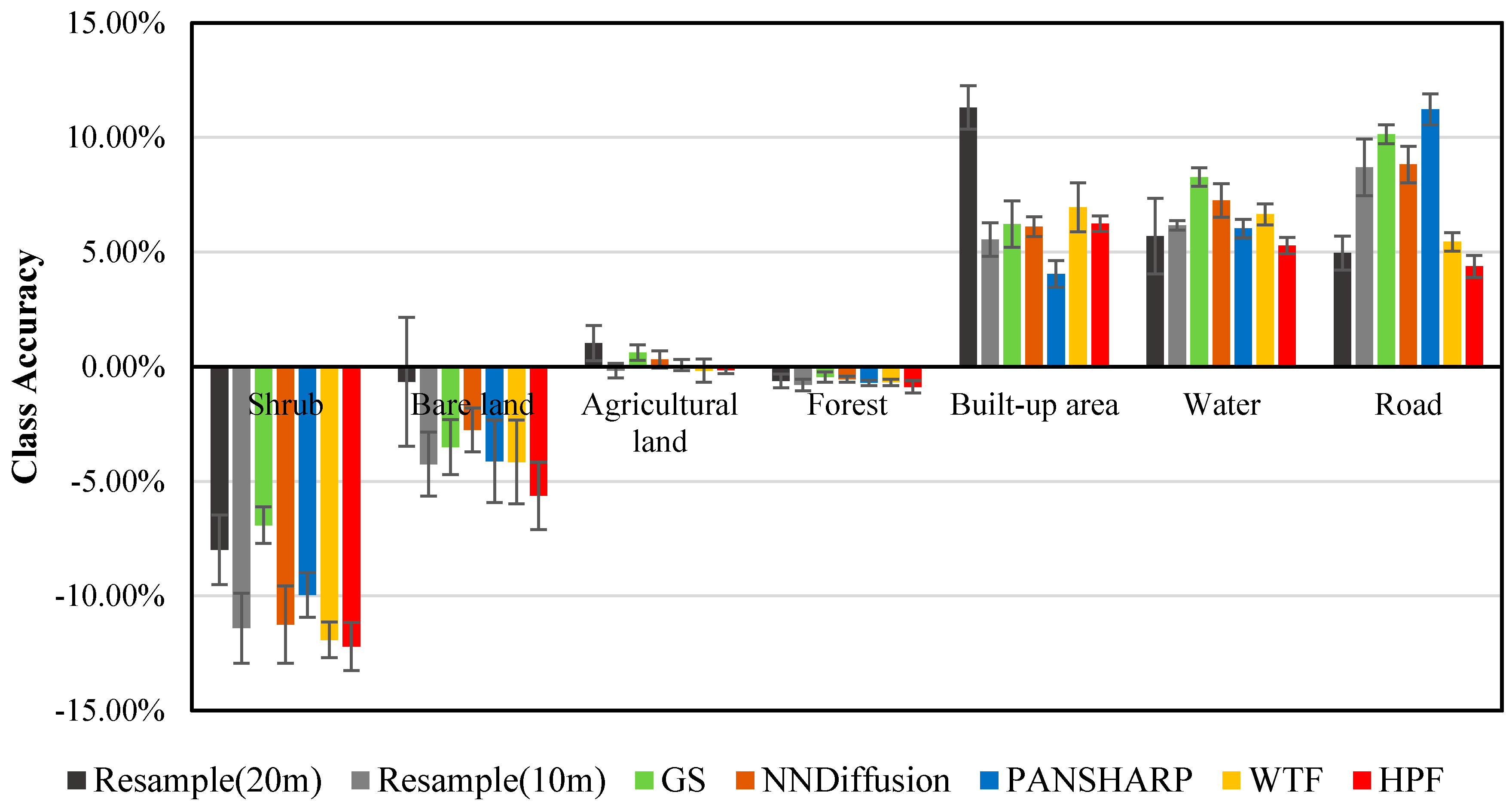

4.1. Differences between Upscaling and Downscaling

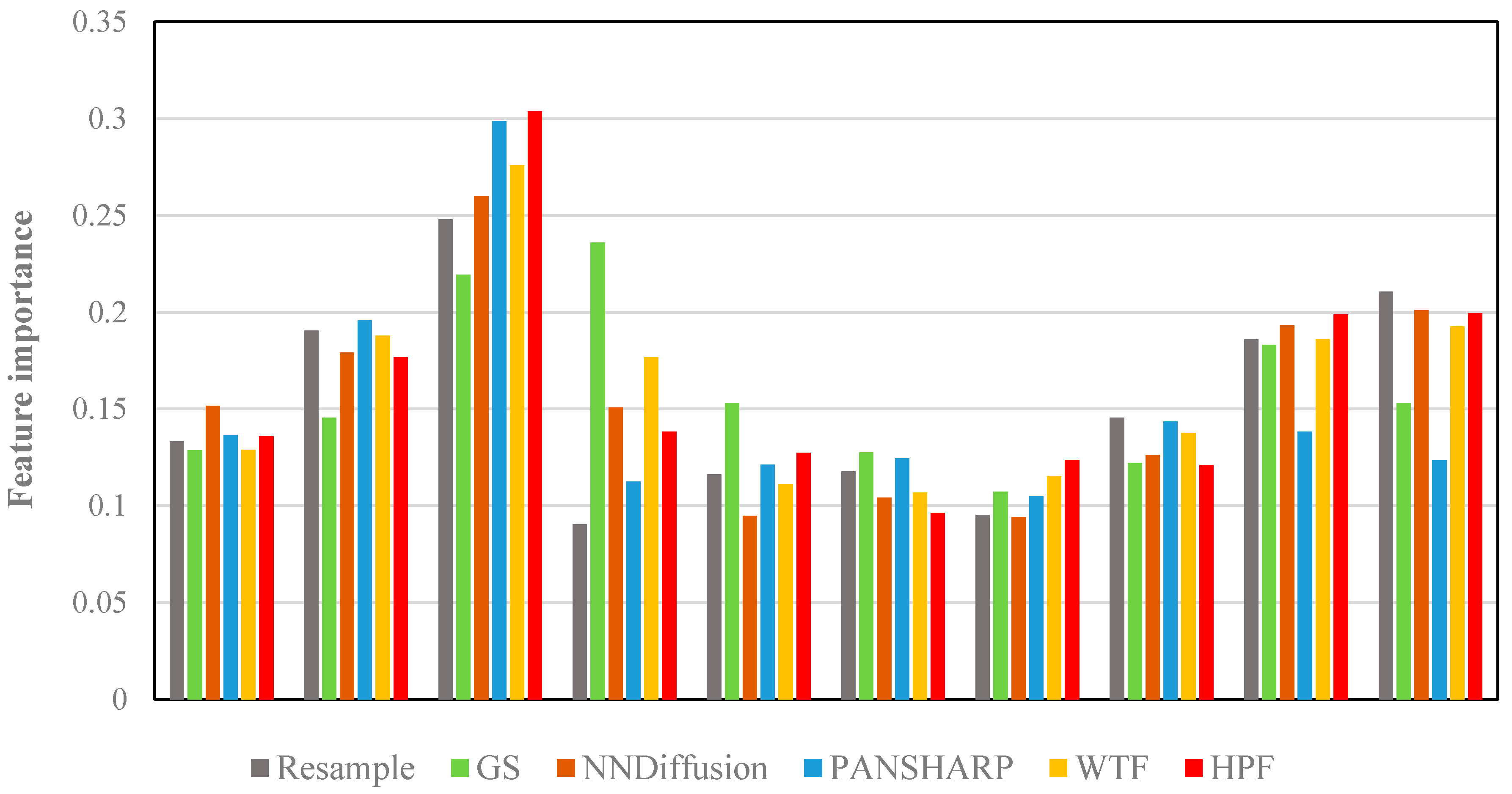

4.2. Effects of Downscaling Algorithms

4.3. Effects of Spatial Features

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Geometric Unification Schemes | Methods | Resolution | Abbreviation |
|---|---|---|---|
| Spatial interpolation | Nearest neighbor resample | 20 m | R20 |
| Spatial interpolation | Nearest neighbor resample | 10 m | R10 |
| Pan-sharpening | GS | 10 m | G10 |
| Pan-sharpening | NNDiffusion | 10 m | N10 |
| Pan-sharpening | PANSHARP | 10 m | P10 |
| Pan-sharpening | HPF fusion | 10 m | H10 |
| Pan-sharpening | Wavelet fusion | 10 m | W10 |
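
The R20 and R10 schemes listed above both use nearest neighbor resampling to bring the 10 m and 20 m Sentinel-2 bands onto a common grid. The following is a minimal sketch of that idea in plain Python on nested lists. The function names `nn_downscale` and `nn_upscale`, the integer factor of 2, and the choice of keeping the top-left pixel of each block when coarsening are illustrative assumptions, not the implementation used in the study.

```python
def nn_downscale(band, factor=2):
    """Nearest neighbor resample to a finer grid (e.g., 20 m -> 10 m).

    Each input pixel is repeated factor x factor times, so no new
    radiometric values are created.
    """
    out = []
    for row in band:
        # Repeat every value along the row, then repeat the row itself.
        fine_row = [value for value in row for _ in range(factor)]
        out.extend([list(fine_row) for _ in range(factor)])
    return out


def nn_upscale(band, factor=2):
    """Nearest neighbor resample to a coarser grid (e.g., 10 m -> 20 m).

    Assumption: the top-left pixel of each factor x factor block is kept.
    Real resamplers may pick a different pixel depending on grid alignment.
    """
    return [row[::factor] for row in band[::factor]]


# Toy 2 x 2 "20 m" band, purely illustrative values.
band_20m = [[1, 2], [3, 4]]
band_10m = nn_downscale(band_20m)
print(band_10m)
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

Because nearest neighbor resampling only copies existing values, the downscaled band carries no additional spatial detail; the pan-sharpening schemes in the table differ in that they inject detail from a 10 m band.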

| Low-Resolution Band | Like-PAN Band Based on Center Wavelength Proximity | Like-PAN Band Based on Band Correlation | | |
|---|---|---|---|---|
| Band5 | Band4 | Band4 | Band4 | Band8 |
| Band6 | Band8 | | | |
| Band7 | Band8 | Band8 | | |
| Band8a | | | | |
| Band11 | | | | |
| Band12 | Band4 | | | |

| Input Variable | R20 | R10 | G10 | N10 | P10 | H10 | W10 | | | | | | | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | F1 | F2 | F3 | F4 | |
| Spectral | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● |
| EAPs | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | | | | | | | | | | | | | | |
| GLCM | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | ● | | | | | | | | | | | | | | |

| Classes | Training Pixels (Number) | Validation Pixels (Number) | Percentage (%) |
|---|---|---|---|
| Shrub | 288 | 2587 | 6.20 |
| Bare land | 183 | 1646 | 3.94 |
| Agricultural land | 1182 | 10,636 | 25.47 |
| Forest | 1520 | 13,675 | 32.75 |
| Built-up area | 425 | 3825 | 9.16 |
| Water | 344 | 3094 | 7.41 |
| Road | 699 | 6293 | 15.06 |
| Total | 4641 | 41,756 | 100 |

| Feature Set | Scheme | OA (%) | Kappa | Shrub | Bare Land | Agricultural Land | Forest | Built-up Area | Water | Road |
|---|---|---|---|---|---|---|---|---|---|---|
| F1 | R20 | 86.69 | 0.83 | 73.26 | 58.43 | 92.30 | 96.71 | 70.65 | 90.76 | 76.13 |
| F1 | R10 | 90.33 | 0.87 | 79.49 | 72.50 | 94.53 | 97.82 | 79.14 | 91.67 | 79.85 |
| F1 | G10 | 88.54 | 0.85 | 78.31 | 68.00 | 93.54 | 97.45 | 73.17 | 87.81 | 76.06 |
| F1 | N10 | 88.59 | 0.85 | 77.50 | 68.37 | 93.26 | 97.57 | 73.43 | 90.33 | 75.32 |
| F1 | P10 | 88.53 | 0.85 | 78.26 | 67.90 | 93.52 | 97.50 | 72.35 | 90.73 | 74.83 |
| F1 | W10 | 90.16 | 0.87 | 77.97 | 67.85 | 94.43 | 97.74 | 73.73 | 91.53 | 83.52 |
| F1 | H10 | 90.54 | 0.88 | 77.11 | 68.75 | 94.77 | 97.72 | 76.18 | 92.77 | 83.65 |
| F2 | R20 | 89.92 | 0.87 | 84.15 | 71.62 | 94.04 | 97.43 | 74.84 | 90.28 | 82.92 |
| F2 | R10 | 93.82 | 0.92 | 92.15 | 83.69 | 96.92 | 98.63 | 84.13 | 92.02 | 85.97 |
| F2 | G10 | 92.84 | 0.91 | 88.84 | 83.99 | 96.54 | 98.32 | 82.85 | 90.24 | 83.39 |
| F2 | N10 | 93.32 | 0.91 | 91.33 | 82.41 | 96.69 | 98.50 | 83.17 | 91.13 | 84.86 |
| F2 | P10 | 93.36 | 0.91 | 90.77 | 85.01 | 96.74 | 98.61 | 83.26 | 92.35 | 83.49 |
| F2 | W10 | 94.18 | 0.92 | 92.04 | 83.90 | 97.24 | 98.69 | 82.60 | 91.83 | 88.90 |
| F2 | H10 | 94.61 | 0.93 | 92.68 | 83.52 | 97.29 | 98.82 | 84.15 | 93.52 | 89.62 |
| F3 | R20 | 91.67 | 0.89 | 76.16 | 70.96 | 95.07 | 96.81 | 86.15 | 95.98 | 87.75 |
| F3 | R10 | 94.72 | 0.93 | 80.75 | 79.44 | 96.75 | 97.84 | 89.68 | 98.18 | 94.67 |
| F3 | G10 | 94.77 | 0.93 | 81.93 | 80.48 | 97.15 | 97.88 | 89.07 | 98.52 | 93.53 |
| F3 | N10 | 94.64 | 0.93 | 80.08 | 79.66 | 97.00 | 97.96 | 89.27 | 98.38 | 93.68 |
| F3 | P10 | 94.65 | 0.93 | 80.82 | 80.89 | 96.82 | 97.91 | 87.31 | 98.38 | 94.71 |
| F3 | W10 | 94.80 | 0.93 | 80.12 | 79.74 | 97.07 | 98.01 | 89.55 | 98.47 | 94.35 |
| F3 | H10 | 94.79 | 0.93 | 80.47 | 77.88 | 97.14 | 97.94 | 90.39 | 98.79 | 94.00 |
| F4 | R20 | 92.99 | 0.91 | 82.29 | 73.54 | 96.32 | 97.01 | 86.95 | 95.38 | 90.60 |
| F4 | R10 | 96.03 | 0.95 | 89.16 | 82.78 | 97.83 | 98.32 | 91.91 | 97.79 | 95.14 |
| F4 | G10 | 95.95 | 0.95 | 86.81 | 85.55 | 97.97 | 98.25 | 91.17 | 98.26 | 94.91 |
| F4 | N10 | 95.98 | 0.95 | 88.33 | 83.06 | 97.87 | 98.33 | 91.50 | 97.99 | 95.15 |
| F4 | P10 | 96.04 | 0.95 | 87.07 | 85.93 | 97.89 | 98.39 | 91.35 | 98.02 | 95.19 |
| F4 | W10 | 96.33 | 0.95 | 89.32 | 83.68 | 98.17 | 98.48 | 92.94 | 98.11 | 95.55 |
| F4 | H10 | 96.39 | 0.95 | 88.46 | 83.33 | 98.33 | 98.49 | 93.22 | 98.29 | 95.55 |
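
The OA and Kappa columns above are two standard summary statistics that can be derived from a confusion matrix. As a hedged illustration of how such values are typically obtained, the sketch below computes overall accuracy and Cohen's kappa in plain Python. The function name `overall_accuracy_and_kappa` and the 2 × 2 matrix are hypothetical and chosen only for demonstration; they are not taken from the study.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i][j] is the number of samples of reference class i
    that were assigned to class j.
    """
    total = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    # Chance agreement: sum over classes of (row total * column total) / total^2.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Made-up two-class example (not data from the article).
example = [[45, 5],
           [10, 40]]
oa, kappa = overall_accuracy_and_kappa(example)
print(round(oa, 2), round(kappa, 2))  # 0.85 0.7
```

Kappa discounts the agreement expected by chance, which is why it is lower than the overall accuracy in this example and in every row of the table.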

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zheng, H.; Du, P.; Chen, J.; Xia, J.; Li, E.; Xu, Z.; Li, X.; Yokoya, N. Performance Evaluation of Downscaling Sentinel-2 Imagery for Land Use and Land Cover Classification by Spectral-Spatial Features. Remote Sens. 2017, 9, 1274. https://doi.org/10.3390/rs9121274
