A Workflow for Automated Satellite Image Processing: from Raw VHSR Data to Object-Based Spectral Information for Smallholder Agriculture

Abstract

1. Introduction

- Produce higher-level processing products that can support various scientific purposes; the surface reflectance products are aimed specifically at vegetation studies.

- Prepare the data for object-specific statistical information extraction to derive on-demand results and feed a spectral library.

- Demonstrate the feasibility of an automated workflow through a case study of monitoring smallholder farms from space.

2. Materials and Methods

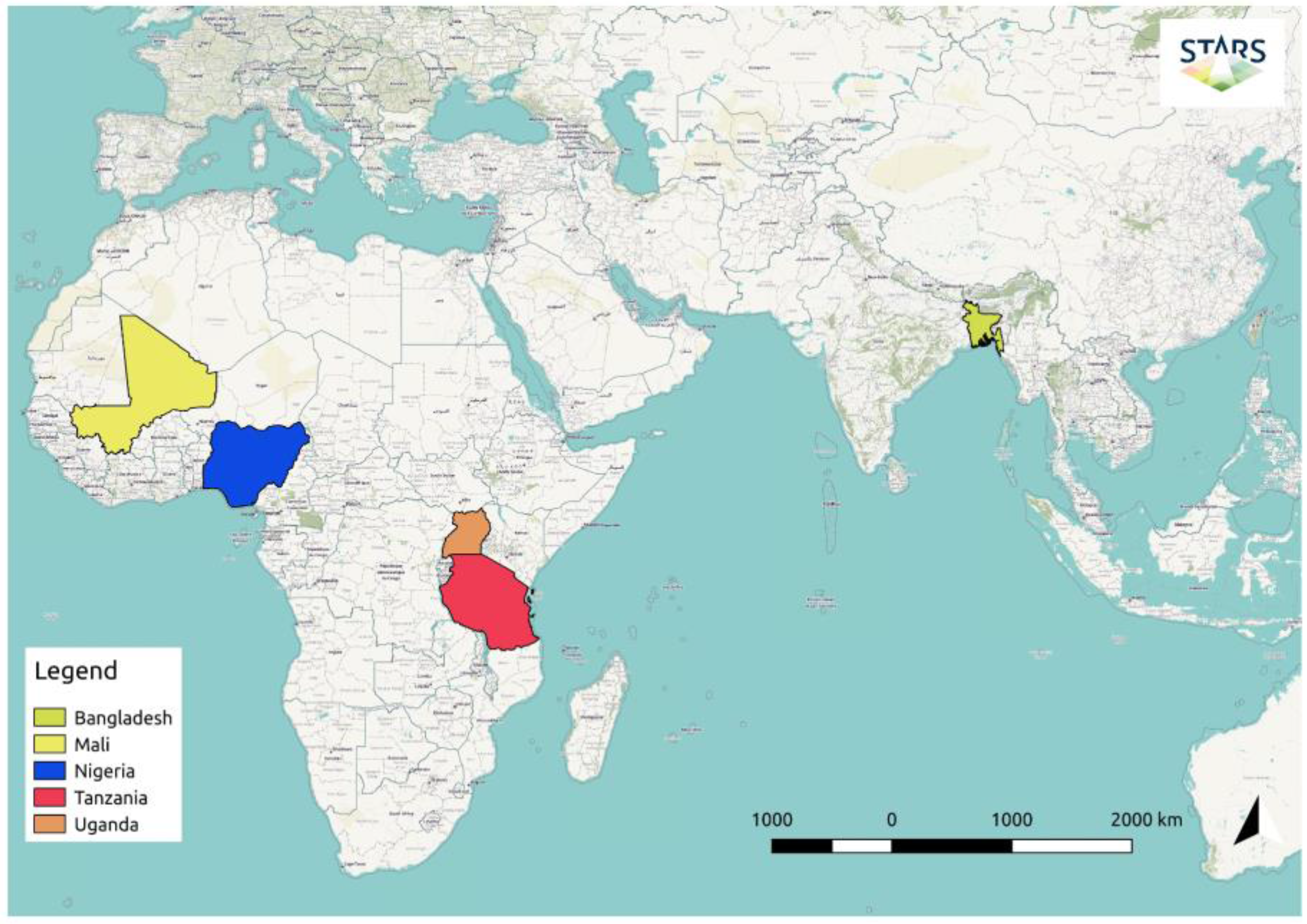

2.1. Data

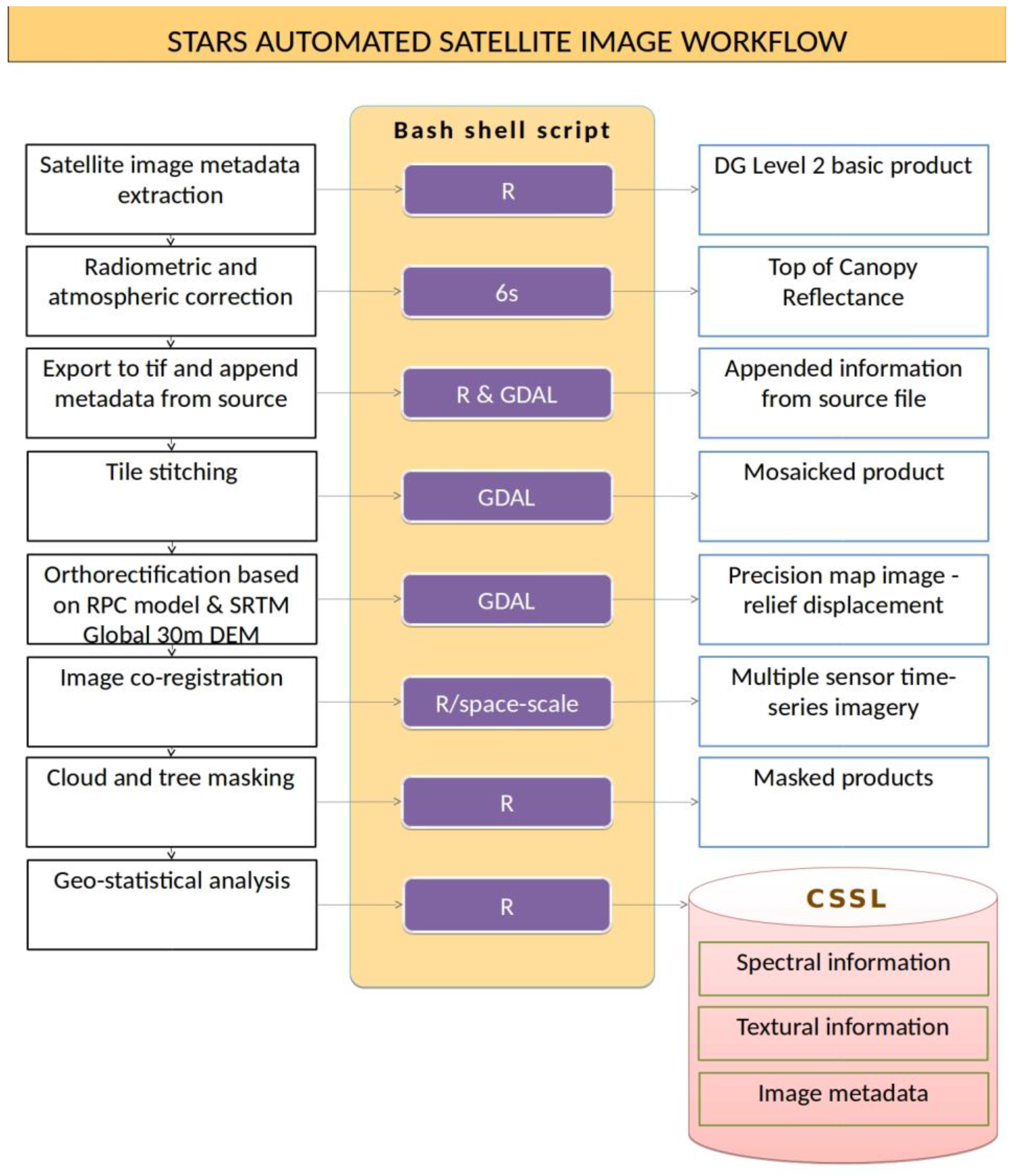

2.2. The STARS Image Processing Workflow

- is founded on free and open-source software,

- requires minimal user interaction,

- supports VHSR satellite image processing, and

- is tailored to smallholder farming applications.

2.3. Module Description

- High spatial resolution satellite images of the study area. When an image time series is available, one image is designated as the master image, onto which all other images are geometrically registered.

- A digital elevation model (DEM) covering the satellite image footprint, used in the orthorectification step when that step is necessary.

2.3.1. Atmospheric Correction

2.3.2. Tile Stitching

2.3.3. Orthorectification

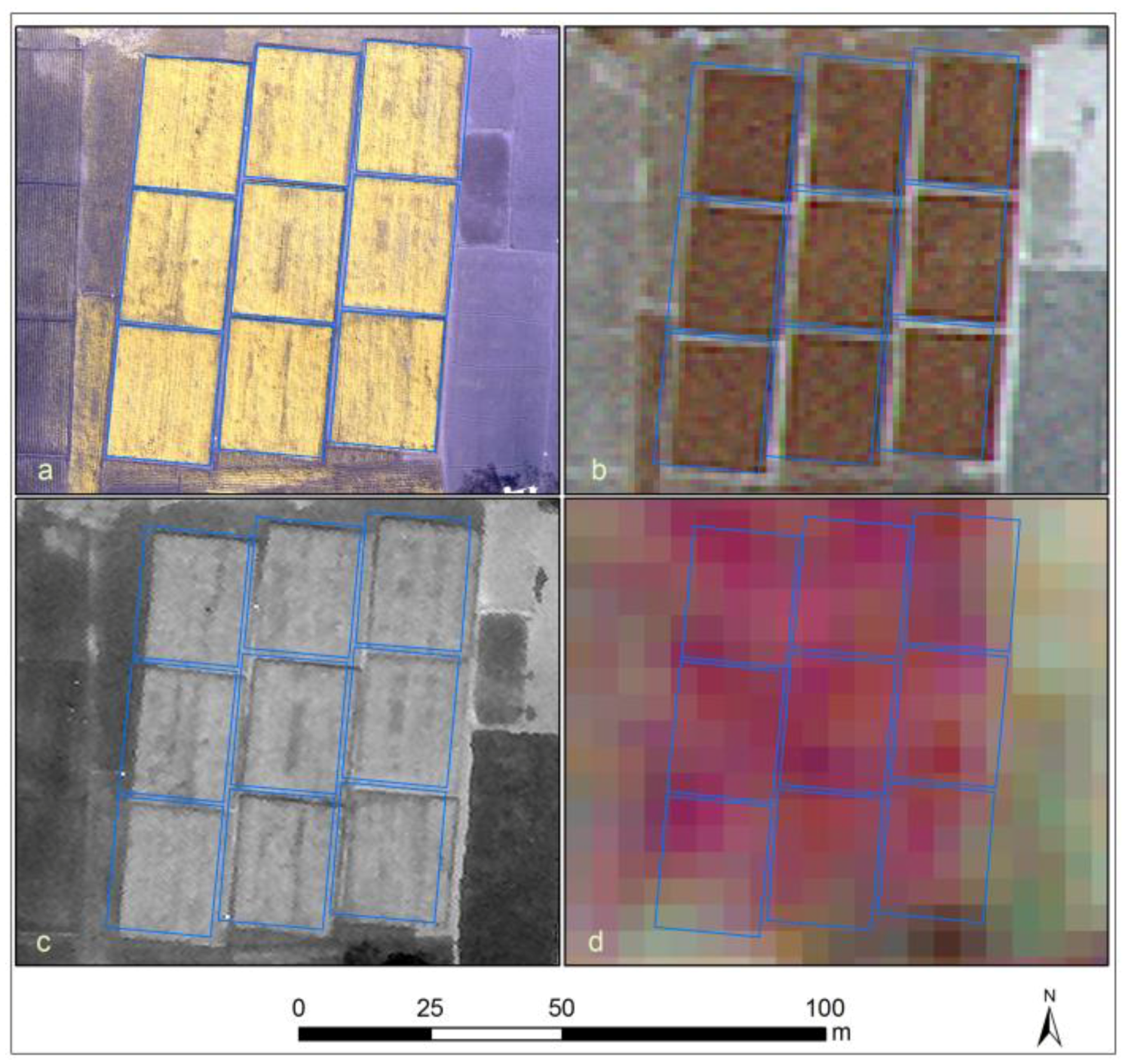

2.3.4. Image Co-Registration

2.3.5. Cloud Masking

2.3.6. Tree Masking

3. Results and Discussion

3.1. Field and Crop Statistics

3.2. Crop Spectrotemporal Signature Library (CSSL)

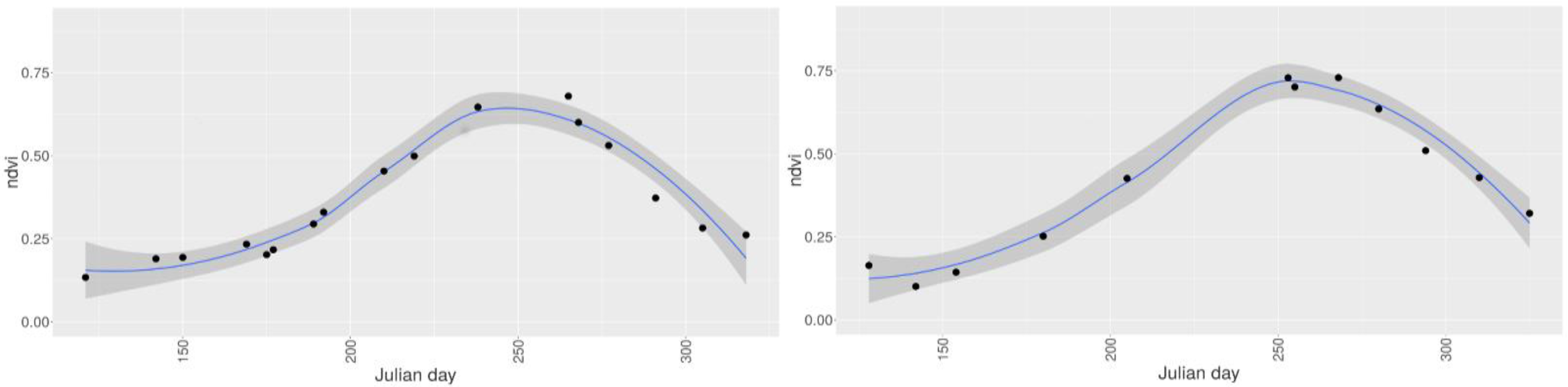

3.3. Monitoring Crop Phenology

3.4. Alternative Workflow Implementation through GNU Makefile

- the addition of new image sources to the collection, with the workflow automatically processing only these new images,

- the replacement of a workflow step, which triggers reprocessing of the affected intermediate products so that the end-products are kept up to date, and

- the parallel execution of the workflow through intelligent rules.

```make
../,$(atcor_list_src))))),$(addprefix /,$(notdir $(atcor_list_src))))
```
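The behaviour listed above follows from GNU Make's timestamp rule: a target is rebuilt when it does not exist, when any prerequisite is newer than it, or when a prerequisite is itself rebuilt. The Python sketch below imitates that decision for a hypothetical two-step chain (raw image, then atmospherically corrected image, then orthorectified image). The file names, the `steps/...` entries standing in for the step scripts, and the helper names `stale_targets` and `pipeline` are illustrative assumptions, not the STARS Makefile.

```python
def stale_targets(rules, mtime):
    """Return the targets that Make-style timestamp logic would rebuild.

    rules: mapping target -> list of prerequisites (assumed acyclic).
    mtime: mapping existing file -> modification time; absent means missing.
    """
    memo = {}

    def needs_rebuild(target):
        if target in memo:
            return memo[target]
        prereqs = rules.get(target, [])
        # Visit every prerequisite first; a rebuilt prerequisite forces
        # everything that depends on it to be rebuilt as well.
        rebuilt = [needs_rebuild(p) for p in prereqs]
        if target not in rules:
            result = False  # a plain source file: nothing to rebuild
        elif target not in mtime or any(rebuilt):
            result = True
        else:
            # Out of date if any prerequisite is newer (or missing).
            result = any(mtime.get(p, float("inf")) > mtime[target] for p in prereqs)
        memo[target] = result
        return result

    return {t for t in rules if needs_rebuild(t)}


def pipeline(image_ids):
    """Hypothetical chain per image: raw -> atcor -> ortho (file names are illustrative)."""
    rules = {}
    for i in image_ids:
        rules[f"atcor/{i}.tif"] = [f"raw/{i}.tif", "steps/atcor"]
        rules[f"ortho/{i}.tif"] = [f"atcor/{i}.tif", "steps/ortho", "dem.tif"]
    return rules


rules = pipeline(["img1", "img2"])
mtime = {"steps/atcor": 0, "steps/ortho": 0, "dem.tif": 0,
         "raw/img1.tif": 1, "atcor/img1.tif": 2, "ortho/img1.tif": 3,
         "raw/img2.tif": 4}  # img2 was just added and not yet processed
print(sorted(stale_targets(rules, mtime)))  # only the img2 chain

mtime["steps/atcor"] = 5  # the atmospheric-correction step was replaced
print(sorted(stale_targets(rules, mtime)))  # every downstream product
```

In GNU Make itself, targets that do not depend on one another can be executed concurrently with the `-j` option, which is presumably what the parallelization point above relies on.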

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. United Nations, Department of Economic and Social Affairs, Population Division. World Population Prospects: The 2015 Revision, Key Findings & Advance Tables; Working Paper No. ESA/WP.241; United Nations: New York, NY, USA, 2015.

2. Gerland, P.; Raftery, A.E.; Ševčíková, H.; Li, N.; Gu, D.; Spoorenberg, T.; Alkema, L.; Fosdick, B.K.; Chunn, J.; Lalic, N.; et al. World population stabilization unlikely this century. Science 2014, 346, 234–237.

3. Tilman, D.; Balzer, C.; Hill, J.; Befort, B.L. Global food demand and the sustainable intensification of agriculture. Proc. Natl. Acad. Sci. USA 2011, 108, 20260–20264.

4. Seelan, S.K.; Laguette, S.; Casady, G.M.; Seielstad, G.A. Remote sensing applications for precision agriculture: A learning community approach. Remote Sens. Environ. 2003, 88, 157–169.

5. Jackson, R.D. Remote sensing of vegetation characteristics for farm management. In Proceedings of the 1984 Technical Symposium East, Arlington, VA, USA, 16 October 1984; pp. 81–97.

6. Sandau, R.; Paxton, L.; Esper, J. Trends and visions for small satellite missions. In Small Satellites for Earth Observation; Springer: Dordrecht, The Netherlands, 2008; pp. 27–39.

7. Idso, S.B.; Jackson, R.D.; Reginato, R.J. Remote sensing of crop yields. Science 1977, 196, 19–25.

8. Tenkorang, F.; Lowenberg-DeBoer, J. On-farm profitability of remote sensing on agriculture. J. Terr. Obs. 2008, 1, 50–59.

9. Lowder, S.K.; Skoet, J.; Raney, T. The Number, Size and Distribution of Farms, Smallholder Farms, and Family Farms Worldwide. World Dev. 2016, 87, 16–29.

10. Löw, F.; Duveiller, G. Defining the Spatial Resolution Requirements for Crop Identification Using Optical Remote Sensing. Remote Sens. 2014, 6, 9034–9063.

11. Whitcraft, A.K.; Becker-Reshef, I.; Killough, B.D.; Justice, C.O. Meeting Earth Observation requirements for global agricultural monitoring: An evaluation of the revisit capabilities of current and planned moderate resolution optical observing missions. Remote Sens. 2015, 7, 1482–1503.

12. Oštir, K.; Čotar, K.; Marsetič, A.; Pehani, P.; Perše, M.; Zakšek, K.; Zaletelj, J.; Rodič, T. Automatic Near-Real-Time Image Processing Chain for Very High Resolution Optical Satellite Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-7/W3, 669–676.

13. Scheffler, D.; Sips, M.; Behling, R.; Dransch, D.; Eggert, D.; Fajerski, J.; Freytag, J.C.; Griffiths, P.; Hollstein, A.; Hostert, P.; et al. Geomultisens—A common automatic processing and analysis system for multi-sensor satellite data. In Proceedings of the Second joint Workshop of the EARSeL Special Interest Group on Land Use & Land Cover and the NASA LCLUC Program: “Advancing Horizons for Land Cover Services Entering the Big Data Era”, Prague, Czech Republic, 6–7 May 2016.

14. Morris, D.E.; Boyd, D.S.; Crowe, J.A.; Johnson, C.S.; Smith, K.L. Exploring the potential for automatic extraction of vegetation phenological metrics from traffic webcams. Remote Sens. 2013, 5, 2200–2218.

15. Inglada, J.; Arias, M.; Tardy, B.; Hagolle, O.; Valero, S.; Morin, D.; Dedieu, G.; Sepulcre, G.; Bontemps, S.; Defourny, P.; Koetz, B. Assessment of an Operational System for Crop Type Map Production Using High Temporal and Spatial Resolution Satellite Optical Imagery. Remote Sens. 2015, 7, 12356–12379.

16. Clewley, D.; Bunting, P.; Shepherd, J.; Gillingham, S.; Flood, N.; Dymond, J.; Lucas, R.; Armston, J.; Moghaddam, M. A Python-Based Open Source System for Geographic Object-Based Image Analysis (GEOBIA) Utilizing Raster Attribute Tables. Remote Sens. 2014, 6, 6111–6135.

17. Grippa, T.; Lennert, M.; Beaumont, B.; Vanhuysse, S.; Stephenne, N.; Wolff, E. An Open-Source Semi-Automated Processing Chain for Urban Object-Based Classification. Remote Sens. 2017, 9, 358.

18. Google Earth Engine: A Planetary-Scale Platform for Earth Science Data & Analysis—Powered by Google’s Cloud Infrastructure. Available online: https://earthengine.google.com (accessed on 14 July 2017).

19. DigitalGlobe Platform—Actionable Insights. Global Scale. Available online: https://platform.digitalglobe.com/gbdx (accessed on 14 July 2017).

20. Tiede, D.; Baraldi, A.; Sudmanns, M.; Belgiu, M.; Lang, S. ImageQuerying—Automatic real-time information extraction and content-based image retrieval in big EO image databases. In Proceedings of the Second joint Workshop of the EARSeL Special Interest Group on Land Use & Land Cover and the NASA LCLUC Program: “Advancing Horizons for Land Cover Services Entering the Big Data Era”, Prague, Czech Republic, 6–7 May 2016.

21. Amazon EC2—Secure and Resizable Compute Capacity in the Cloud. Launch Applications When Needed without Upfront Commitments. Available online: https://aws.amazon.com/ec2 (accessed on 14 July 2017).

22. Microsoft Azure—Global. Trusted. Hybrid. Available online: https://azure.microsoft.com/en-us (accessed on 14 July 2017).

23. STARS. Available online: http://www.stars-project.org/en (accessed on 14 July 2017).

24. Delrue, J.; Bydekerke, L.; Eerens, H.; Gilliams, S.; Piccard, I.; Swinnen, E. Crop mapping in countries with small-scale farming: A case study for West Shewa, Ethiopia. Int. J. Remote Sens. 2013, 34, 2566–2582.

25. Collier, P.; Dercon, S. African agriculture in 50 years: Smallholders in a rapidly changing world? World Dev. 2014, 63, 92–101.

26. Chand, R.; Prasanna, P.A.L.; Singh, A. Farm size and productivity: Understanding the strengths of smallholders and improving their livelihoods. Econ. Political Wkly Suppl. Rev. Agric. 2011, 46, 5–11.

27. Aplin, P.; Boyd, D.S. Innovative technologies for terrestrial remote sensing. Remote Sens. 2015, 7, 4968–4972.

28. R Foundation for Statistical Computing. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2014.

29. Warren, M.A.; Taylor, B.H.; Grant, A.G.; Shutler, J.D. Data processing of remotely sensed airborne hyperspectral data using the Airborne Processing Library (APL): Geocorrection algorithm descriptions and spatial accuracy assessment. Comput. Geosci. 2013, 54, 24–34.

30. Pehani, P.; Čotar, K.; Marsetič, A.; Zaletelj, J.; Oštir, K. Automatic Geometric Processing for Very High Resolution Optical Satellite Data Based on Vector Roads and Orthophotos. Remote Sens. 2016, 8, 343.

31. Ahern, F.J.; Brown, R.J.; Cihlar, J.; Gauthier, R.; Murphy, J.; Neville, R.A.; Teillet, P.M. Review article radiometric correction of visible and infrared remote sensing data at the Canada Centre for remote sensing. Int. J. Remote Sens. 1987, 8, 1349–1376.

32. Vermote, E.F.; Tanré, D.; Deuzé, J.L.; Herman, M.; Morcrette, J.-J. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: An Overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686.

33. Vermote, E.; Justice, C.; Claverie, M.; Franch, B. Preliminary analysis of the performance of the Landsat 8/OLI land surface reflectance product. Remote Sens. Environ. 2016, 185, 46–56.

34. Main-Knorn, M.; Pflug, B.; Debaecker, V.; Louis, J. Calibration and validation plan for the L2A processor and products of the Sentinel-2 mission. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-7/W3, 1249–1255.

35. Hagolle, O.; Huc, M.; Pascual, D.V.; Dedieu, G. A multi-temporal method for cloud detection, applied to FORMOSAT-2, VENµS, LANDSAT and SENTINEL-2 images. Remote Sens. Environ. 2010, 114, 1747–1755.

36. Aguilar, M.A.; del Mar Saldaña, M.; Aguilar, F.J. Assessing geometric accuracy of the orthorectification process from GeoEye-1 and WorldView-2 panchromatic images. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 427–435.

37. Hoja, D.; Schneider, M.; Muller, R.; Lehner, M.; Reinartz, P. Comparison of orthorectification methods suitable for rapid mapping using direct georeferencing and RPC for optical satellite data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 1617–1623.

38. Willneff, J.; Poon, J. Georeferencing from orthorectified and non-orthorectified high-resolution satellite imagery. In Proceedings of the 13th Australasian Remote Sensing and Photogrammetry Conference: Earth Observation from Science to Solutions, Canberra, Australia, 21–24 November 2006.

39. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999.

40. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

41. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006.

42. Lindeberg, T. Feature Detection with Automatic Scale Selection. Int. J. Comput. Vis. 1998, 30, 79–106.

43. Le Moigne, J.; Netanyahu, N.S.; Eastman, R.D. (Eds.) Image Registration for Remote Sensing; Cambridge University Press: New York, NY, USA, 2011.

44. Zaletelj, J.; Burnik, U.; Tasic, J.F. Registration of satellite images based on road network map. In Proceedings of the 8th International Symposium on Image and Signal Processing and Analysis, Trieste, Italy, 4–6 September 2013.

45. Ardila, J.P.; Bijker, W.; Tolpekin, V.A.; Stein, A. Quantification of crown changes and change uncertainty of trees in an urban environment. ISPRS J. Photogramm. Remote Sens. 2012, 74, 41–55.

46. Tolpekin, V.; Bijker, W.; Zurita Milla, R.; Stratoulias, D.; de By, R.A. Automatic co-registration of very high resolution satellite images of smallholder farms using a 3D tree model. Manuscript in preparation, 2017.

47. Asner, G.P. Cloud cover in Landsat observations of the Brazilian Amazon. Int. J. Remote Sens. 2001, 22, 3855–3862.

48. Loveland, T.R.; Irons, J.R. Landsat 8: The plans, the reality, and the legacy. Remote Sens. Environ. 2016, 185, 1–6.

49. Le Hégarat-Mascle, S.; André, C. Use of Markov random fields for automatic cloud/shadow detection on high resolution optical images. ISPRS J. Photogramm. Remote Sens. 2009, 64, 351–366.

50. Tseng, D.C.; Tseng, H.T.; Chien, C.L. Automatic cloud removal from multi-temporal SPOT images. Appl. Math. Comput. 2008, 205, 584–600.

51. Sedano, F.; Kempeneers, P.; Strobl, P.; Kucera, J.; Vogt, P.; Seebach, L.; San-Miguel-Ayanz, J. A cloud mask methodology for high resolution remote sensing data combining information from high and medium resolution optical sensors. ISPRS J. Photogramm. Remote Sens. 2011, 66, 588–596.

52. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

53. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

54. Huete, A.R.; Liu, H.Q.; Batchily, K.; van Leeuwen, W. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451.

55. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

56. Jordan, C.F. Derivation of leaf area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

57. Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

58. Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

59. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

60. Haralick, R.; Shanmugam, K.; Dinstein, I. Texture features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

61. Herold, M.; Liu, X.; Clarke, K.C. Spatial metrics and image texture for mapping urban land use. Photogramm. Eng. Remote Sens. 2003, 69, 991–1001.

62. Zhang, X.; Friedl, M.A.; Schaaf, C.B.; Strahler, A.H.; Hodges, J.C.F.; Gao, F.; Reed, B.C.; Huete, A. Monitoring vegetation phenology using MODIS. Remote Sens. Environ. 2003, 84, 471–475.

63. Stallman, R.M.; McGrath, R.; Smith, P. GNU Make: A Program for Directing Recompilation, GNU make Version 3.80; Free Software Foundation: Boston, MA, USA, 2016.

| | QuickBird | GeoEye | WV-2 | WV-3 | RapidEye |
|---|---|---|---|---|---|
| Provider | DigitalGlobe | DigitalGlobe | DigitalGlobe | DigitalGlobe | RapidEye/BlackBridge |
| Dynamic range | 11 bit | 11 bit | 11 bit | 11 bit (14 bit for SWIR) | 12 bit |
| Panchromatic resolution (nominal) | 0.65 m | 0.46 m | 0.46 m | 0.31 m | - |
| Multispectral bands | 4 | 4 | 8 | 8 (+8 SWIR) | 5 |
| Multispectral resolution (nominal) | 2.62 m | 1.84 m | 1.84 m | 1.24 m (3.70 m SWIR) | 6.50 m |
| Blue, Green, Red, Near-IR 1 | • | • | • | • | • |
| Cyan (coastal), Yellow, Near-IR 2 | | | • | • | |

| Image Sample | Satellite Sensor | Number of Bands | Ground Sampling Distance (m) | Number of Pixels | Delivery Size (MB) | Processing Time (min) | Atmospheric Correction | Subset and Mosaic | Tie Point Detection | Image Matching | Tree Mask | Spectral Statistics | Textural Statistics (64 grey levels) | Textural Statistics (256 grey levels) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | WV-2/3 | 8 | 2 | 16,777,216 | 400 | 60 | 42% | 2% | 51% | 2% | 2% | 0% | - | - |
| 1b | WV-2/3 pan | 1 (pan) | 0.5 | 2,016,020,167 | 750 | 140 | - | 1% | - | 0% | 6% | - | 27% | 65% |
| 2 | RapidEye | 5 | 6.5 | 116,967,735 | 960 | 30 | 0% | 8% | 62% | 5% | 21% | 0% | - | - |
| 3 | QuickBird | 4 | 2.4 | 41,933,143 | 106 | 40 | 54% | 2% | 37% | 2% | 2% | 0% | - | - |
| 3b | QuickBird pan | 1 (pan) | 0.6 | 210,857,584 | 405 | 60 | - | 0% | - | 1% | 14% | - | 22% | 59% |
| 4 | GeoEye | 4 | 2 | 50,405,041 | 200 | 52 | 36% | 2% | 58% | 1% | 2% | 0% | - | - |
| 4b | GeoEye pan | 1 (pan) | 0.5 | 406,566,720 | 780 | 68 | - | 1% | - | 0% | 5% | - | 23% | 71% |
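The step columns appear to give each step's share of the total processing time, since they sum to roughly 100% per row (99% for sample 1; the remainder is presumably rounding or unlisted overhead). Under that reading, the minutes spent per step follow directly. The helper `step_minutes` below is a hypothetical illustration, applied to sample 1.

```python
def step_minutes(total_minutes, shares_percent):
    """Convert per-step shares (% of total processing time) into minutes."""
    return {step: total_minutes * pct / 100 for step, pct in shares_percent.items()}


# Image sample 1 (WV-2/3, 8 bands, 60 min in total), shares taken from the table.
sample_1 = {"Atmospheric correction": 42, "Subset and mosaic": 2,
            "Tie point detection": 51, "Image matching": 2,
            "Tree mask": 2, "Spectral statistics": 0}

for step, minutes in step_minutes(60, sample_1).items():
    print(f"{step}: {minutes:.1f} min")
```

Read this way, tie point detection and atmospheric correction together account for more than 55 of the 60 minutes for this sample.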

| Statistical Attribute | Image Provider | Orthorectified | Co-Registered |
|---|---|---|---|
| Mean of absolute X residuals (m) | 5.288 | 11.375 | 1.319 |
| Standard deviation of absolute X residuals (m) | 4.026 | 1.888 | 0.82 |
| Mean of absolute Y residuals (m) | 1.875 | 1.511 | 0.324 |
| Standard deviation of absolute Y residuals (m) | 0.205 | 0.169 | 0.162 |
| Minimum X residual (m) | −0.136 | −1.084 | 0.046 |
| Maximum X residual (m) | 17.1 | −13.527 | 2.872 |
| Minimum Y residual (m) | 1.416 | 1.086 | −0.004 |
| Maximum Y residual (m) | 2.318 | 1.785 | 0.635 |
| RMSE in X (m) | 6.616 | 11.527 | 1.547 |
| RMSE in Y (m) | 1.886 | 1.521 | 0.361 |

| Statistical Moment | Explanation |
|---|---|
| 0 | Number of pixels within the polygon |
| 0 | Number of pixels contributing to the statistics (masked pixels excluded) |
| 1 | Mean |
| 2 | Variance |
| 3 | Skewness |
| mixed | Band-to-band correlation (i,j) |
| mixed | Band-to-band covariance (i,j) |
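The per-object statistics listed above can be illustrated for a single field polygon once masked pixels (for example cloud or tree pixels) are excluded. The pure-Python sketch below is an illustration only: the function name `polygon_stats`, the input layout (a list of per-pixel band tuples plus a boolean mask), and the population (divide-by-n) form of the moments are assumptions, since the estimator used in the workflow is not stated here.

```python
import math


def polygon_stats(pixels, masked):
    """Per-polygon statistics over unmasked pixels.

    pixels: list of per-pixel band tuples for every pixel inside the polygon.
    masked: list of booleans, True where a pixel is excluded (e.g. cloud, tree).
    Moments use the population (divide-by-n) form, which is an assumption.
    """
    valid = [p for p, m in zip(pixels, masked) if not m]
    stats = {"n_in_polygon": len(pixels), "n_valid": len(valid)}
    if not valid:
        return stats  # nothing left to summarize
    n = len(valid)
    bands = list(zip(*valid))  # one tuple of values per band
    mean = [sum(b) / n for b in bands]
    dev = [[x - mu for x in b] for b, mu in zip(bands, mean)]
    # Band-to-band covariance matrix; its diagonal is the per-band variance.
    cov = [[sum(a * c for a, c in zip(di, dj)) / n for dj in dev] for di in dev]
    var = [cov[i][i] for i in range(len(bands))]
    skew = [(sum(d ** 3 for d in di) / n) / v ** 1.5 if v > 0 else 0.0
            for di, v in zip(dev, var)]
    corr = [[cov[i][j] / math.sqrt(var[i] * var[j])
             if var[i] > 0 and var[j] > 0 else float("nan")
             for j in range(len(bands))]
            for i in range(len(bands))]
    stats.update(mean=mean, variance=var, skewness=skew,
                 covariance=cov, correlation=corr)
    return stats


# Two-band toy polygon with four pixels, the last one masked out.
print(polygon_stats([(1, 2), (2, 4), (3, 6), (100, 100)],
                    [False, False, False, True]))
```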

| Index | Reference | Formula |
|---|---|---|
| NDVI (Normalized Difference Vegetation Index) | [52] | |
| Green NDVI (Green Normalized Difference Vegetation Index) | [53] | |
| EVI (Enhanced Vegetation Index) | [54] | |
| TCARI (Transformed Chlorophyll Absorption Ratio Index) | [55] | |
| Simple ratio (NIR/Red) | [56] | |
| SARVI (Soil and Atmospherically Resistant Vegetation Index) | [57] | |
| SAVI (Soil Adjusted Vegetation Index) | [58] | |
| MSAVI2 (Modified Soil Adjusted Vegetation Index) | [59] | |
| NDVI (Normalized Difference Vegetation Index) based on NIR2 | [52] | |
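The formulas for these indices did not survive in this version of the table. For orientation, the sketch below implements the commonly published forms of each index from the cited sources; it is not necessarily the exact parameterization used in the workflow. Assumptions: inputs are surface reflectances in [0, 1]; the EVI coefficients (2.5, 6, 7.5, 1), the soil factor L = 0.5 and the SARVI weight γ = 1 are the usual defaults; and TCARI, defined on narrow bands at 700, 670 and 550 nm, is approximated with red-edge, red and green broad bands.

```python
import math

# All inputs are surface reflectances in [0, 1].


def ndvi(nir, red):
    # Also used for the NIR2-based variant: ndvi(nir2, red)
    return (nir - red) / (nir + red)


def gndvi(nir, green):
    return (nir - green) / (nir + green)


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, l=1.0):
    # Standard MODIS-style coefficients (assumed defaults)
    return gain * (nir - red) / (nir + c1 * red - c2 * blue + l)


def tcari(red_edge, red, green):
    # Narrow-band form uses R700, R670, R550; the broad-band mapping is an assumption
    return 3 * ((red_edge - red) - 0.2 * (red_edge - green) * (red_edge / red))


def simple_ratio(nir, red):
    return nir / red


def savi(nir, red, l=0.5):
    return (1 + l) * (nir - red) / (nir + red + l)


def sarvi(nir, red, blue, gamma=1.0, l=0.5):
    # Red-blue term corrects red for atmospheric effects using the blue band
    rb = red - gamma * (blue - red)
    return (1 + l) * (nir - rb) / (nir + rb + l)


def msavi2(nir, red):
    return (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2


print(round(ndvi(0.5, 0.1), 3), round(savi(0.5, 0.1), 3), round(msavi2(0.5, 0.1), 3))
```

Writing each index as a plain function of band reflectances keeps the choice of bands explicit, so the same code serves, for example, both the NIR1- and NIR2-based NDVI rows.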

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Stratoulias, D.; Tolpekin, V.; De By, R.A.; Zurita-Milla, R.; Retsios, V.; Bijker, W.; Hasan, M.A.; Vermote, E. A Workflow for Automated Satellite Image Processing: from Raw VHSR Data to Object-Based Spectral Information for Smallholder Agriculture. Remote Sens. 2017, 9, 1048. https://doi.org/10.3390/rs9101048
