Multi-Feature Object-Based Change Detection Using Self-Adaptive Weight Change Vector Analysis

Abstract

1. Introduction

2. Theory of Self-Adaptive Weight Change Vector Analysis

2.1. Magnitude and Direction of Change Vectors

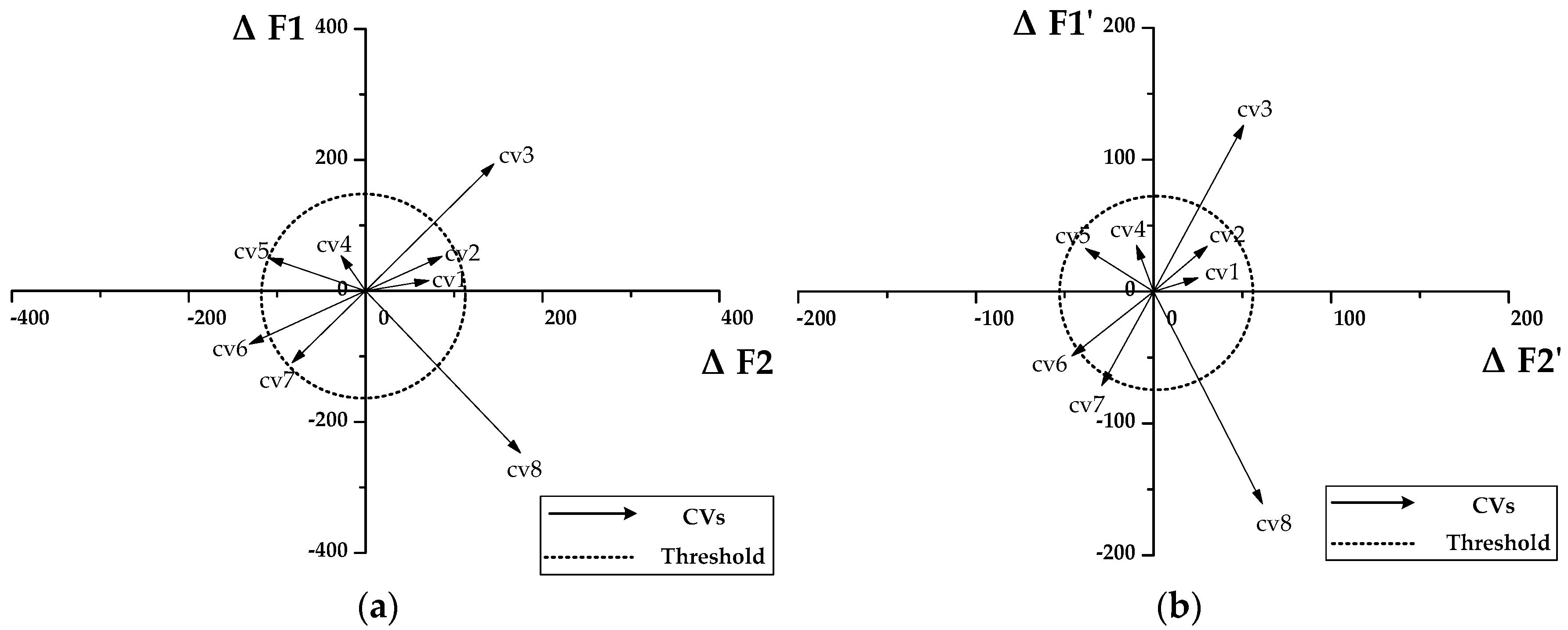
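As a minimal sketch of conventional CVA, not of the self-adaptive variant proposed here, the quantities named in this subsection can be computed as follows. For a feature vector x1 at the first date and x2 at the second, the change vector is d = x2 - x1. Its magnitude is the Euclidean norm of d. Its direction can be summarised by the direction cosines d_k / |d| or by a sign code that records, for each feature, whether it increased. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def change_vector(x1: Sequence[float], x2: Sequence[float]) -> List[float]:
    """Change vector d = x2 - x1 between two dates for one pixel or object."""
    if len(x1) != len(x2):
        raise ValueError("feature vectors must have the same length")
    return [b - a for a, b in zip(x1, x2)]


def magnitude(d: Sequence[float]) -> float:
    """Change magnitude: Euclidean norm of the change vector."""
    return math.sqrt(sum(x * x for x in d))


def direction_cosines(d: Sequence[float]) -> List[float]:
    """Cosine of the angle between d and each feature axis (all zeros if d = 0)."""
    m = magnitude(d)
    return [x / m for x in d] if m > 0 else [0.0] * len(d)


def sign_code(d: Sequence[float]) -> str:
    """Sector code: '+' where a feature increased, '-' otherwise (2**n sectors)."""
    return "".join("+" if x > 0 else "-" for x in d)


# Two features observed at two dates.
d = change_vector([1.0, 2.0], [4.0, 6.0])
print(d, magnitude(d), direction_cosines(d), sign_code(d))
# [3.0, 4.0] 5.0 [0.6, 0.8] ++
```

The magnitude indicates how strongly an element changed, while the direction cosines or the sign code indicate what kind of change occurred.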

2.2. Proposed Self-Adaptive Weight

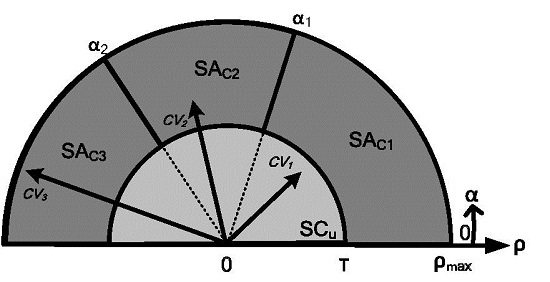
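The specific self-adaptive weighting belongs to the proposed method. The sketch below only shows where per-feature weights enter a weighted change magnitude, |d|_w = sqrt(sum_k w_k d_k^2), when features with different scales (for example, spectral bands and texture measures) are combined. The inverse-variance rule in `inverse_variance_weights` is a hypothetical stand-in chosen purely for illustration. It is an assumption and is not the paper's self-adaptive weight.

```python
import math
from statistics import pvariance
from typing import List, Sequence


def weighted_magnitude(d: Sequence[float], w: Sequence[float]) -> float:
    """Weighted change magnitude sqrt(sum_k w_k * d_k**2)."""
    if len(d) != len(w):
        raise ValueError("one weight per feature is required")
    return math.sqrt(sum(wk * dk * dk for wk, dk in zip(w, d)))


def inverse_variance_weights(diffs: Sequence[Sequence[float]]) -> List[float]:
    """HYPOTHETICAL weighting (not the paper's): w_k proportional to 1 / var(d_k).

    `diffs` holds one change vector per pixel or object. Weights sum to 1;
    a feature whose difference never varies gets weight 0.
    """
    if not diffs:
        raise ValueError("no change vectors given")
    n_features = len(diffs[0])
    inv = []
    for k in range(n_features):
        var = pvariance([row[k] for row in diffs])
        inv.append(1.0 / var if var > 0 else 0.0)
    total = sum(inv)
    if total == 0:
        return [1.0 / n_features] * n_features  # no information: equal weights
    return [v / total for v in inv]


diffs = [[1.0, 2.0], [-1.0, -2.0]]  # feature 2 has twice the spread of feature 1
w = inverse_variance_weights(diffs)
print(w, weighted_magnitude([3.0, 4.0], w))
```

With equal weights, the weighted magnitude reduces to the ordinary CVA magnitude. Any weighting rule only rescales each feature's contribution before the norm is taken.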

2.3. Representation of Proposed SAW-CVA

2.4. Proposed Technique for Multi-Feature Object-Based Change Detection

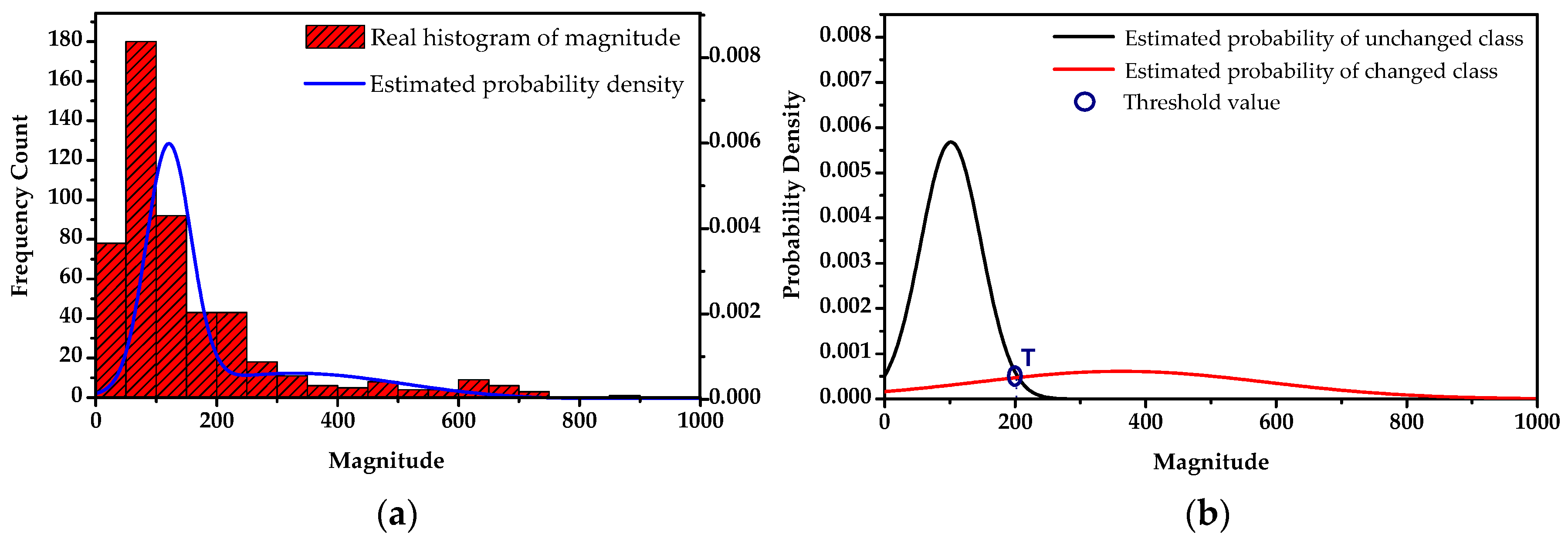
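Object-based CVA operates on segments rather than individual pixels. The following minimal sketch assumes a single segmentation label map shared by both dates, with per-pixel feature vectors (spectral bands and, for example, texture measures) already computed. It averages each object's features separately for each date and then takes the CVA magnitude of the two object means. The data layout and function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# image[row][col] is the feature vector of one pixel (e.g. bands plus texture).
Image = Sequence[Sequence[Sequence[float]]]
Labels = Sequence[Sequence[int]]


def object_means(labels: Labels, image: Image) -> Dict[int, List[float]]:
    """Mean feature vector of every object (segment id) in the label map."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for label_row, pixel_row in zip(labels, image):
        for obj, pixel in zip(label_row, pixel_row):
            acc = sums.setdefault(obj, [0.0] * len(pixel))
            for k, value in enumerate(pixel):
                acc[k] += value
            counts[obj] = counts.get(obj, 0) + 1
    return {obj: [s / counts[obj] for s in acc] for obj, acc in sums.items()}


def object_change_magnitudes(
    labels: Labels, image_t1: Image, image_t2: Image
) -> Dict[int, float]:
    """CVA magnitude of each object, computed from its mean features at both dates."""
    m1 = object_means(labels, image_t1)
    m2 = object_means(labels, image_t2)
    return {
        obj: math.sqrt(sum((b - a) ** 2 for a, b in zip(m1[obj], m2[obj])))
        for obj in m1
    }


labels = [[1, 1], [2, 2]]  # two objects, one per row
t1 = [[[0.0], [2.0]], [[5.0], [5.0]]]  # one feature per pixel
t2 = [[[3.0], [5.0]], [[5.0], [5.0]]]
print(object_change_magnitudes(labels, t1, t2))  # {1: 3.0, 2: 0.0}
```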

2.4.1. Extraction of Changed Objects

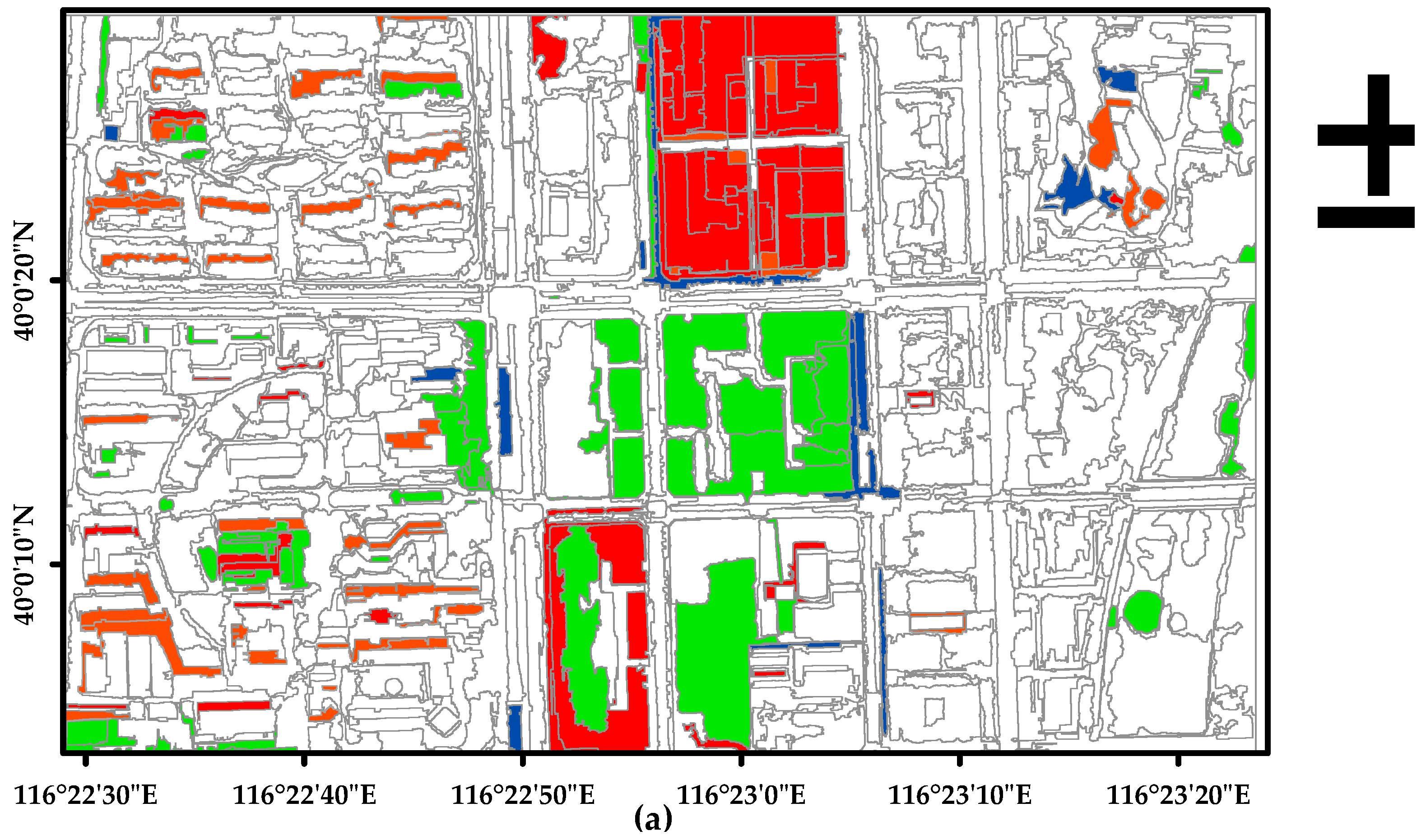
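Changed objects are typically separated from unchanged ones by thresholding the change magnitude. The reference list cites k-means and EM-based threshold selection. As one assumed choice, the sketch below runs a one-dimensional two-cluster k-means on the object magnitudes and uses the midpoint between the two cluster centres as the threshold. This is a substituted technique and may differ from the threshold rule used in the paper. The function names are hypothetical.

```python
from typing import Dict, Sequence, Set


def two_means_threshold(values: Sequence[float], max_iter: int = 100) -> float:
    """1-D two-cluster k-means; returns the midpoint between the two centres."""
    if not values:
        raise ValueError("no magnitudes given")
    c_low, c_high = min(values), max(values)
    if c_low == c_high:
        return c_high  # all magnitudes equal: nothing exceeds the threshold
    for _ in range(max_iter):
        t = (c_low + c_high) / 2
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break  # numerically degenerate split; keep the previous centres
        new_low, new_high = sum(low) / len(low), sum(high) / len(high)
        if (new_low, new_high) == (c_low, c_high):
            break  # partition is stable
        c_low, c_high = new_low, new_high
    return (c_low + c_high) / 2


def changed_objects(magnitudes: Dict[int, float]) -> Set[int]:
    """Objects whose change magnitude exceeds the two-means threshold."""
    t = two_means_threshold(list(magnitudes.values()))
    return {obj for obj, m in magnitudes.items() if m > t}


mags = {1: 0.1, 2: 0.2, 3: 0.15, 4: 3.0, 5: 3.2}
print(sorted(changed_objects(mags)))  # [4, 5]
```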

2.4.2. Identification of Different Types of Change
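The direction of a change vector reflects which features increased or decreased. Changed objects can therefore be grouped into change types by clustering their directions. The following minimal sketch assumes that the number of change types k is known in advance. It applies plain k-means with deterministic farthest-point seeding to unit change vectors. This is a substituted technique and not necessarily the procedure of the paper. All names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def unit(v: Sequence[float]) -> List[float]:
    """Scale a change vector to unit length so only its direction remains."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else [0.0] * len(v)


def sqdist(p: Sequence[float], q: Sequence[float]) -> float:
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points: List[List[float]], k: int, max_iter: int = 100) -> List[int]:
    """Lloyd's k-means with deterministic farthest-point seeding; returns labels."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centres = [list(points[0])]
    while len(centres) < k:
        # next seed: the point farthest from its nearest existing centre
        far = max(points, key=lambda p: min(sqdist(p, c) for c in centres))
        centres.append(list(far))
    labels: List[int] = []
    for _ in range(max_iter):
        new = [min(range(k), key=lambda j: sqdist(p, centres[j])) for p in points]
        if new == labels:
            break  # assignments unchanged: converged
        labels = new
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centre
                centres[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def change_types(vectors: Dict[int, Sequence[float]], k: int) -> Dict[int, int]:
    """Group changed objects into k change types by change-vector direction."""
    ids = list(vectors)
    labels = kmeans([unit(vectors[i]) for i in ids], k)
    return dict(zip(ids, labels))


changed = {10: [2.0, 0.1], 11: [3.0, 0.0], 12: [0.0, -2.0], 13: [0.1, -3.0]}
print(change_types(changed, k=2))  # {10: 0, 11: 0, 12: 1, 13: 1}
```

Because each vector is normalised first, two objects with the same kind of change but different intensities land in the same cluster.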

3. Experimental Results and Discussion

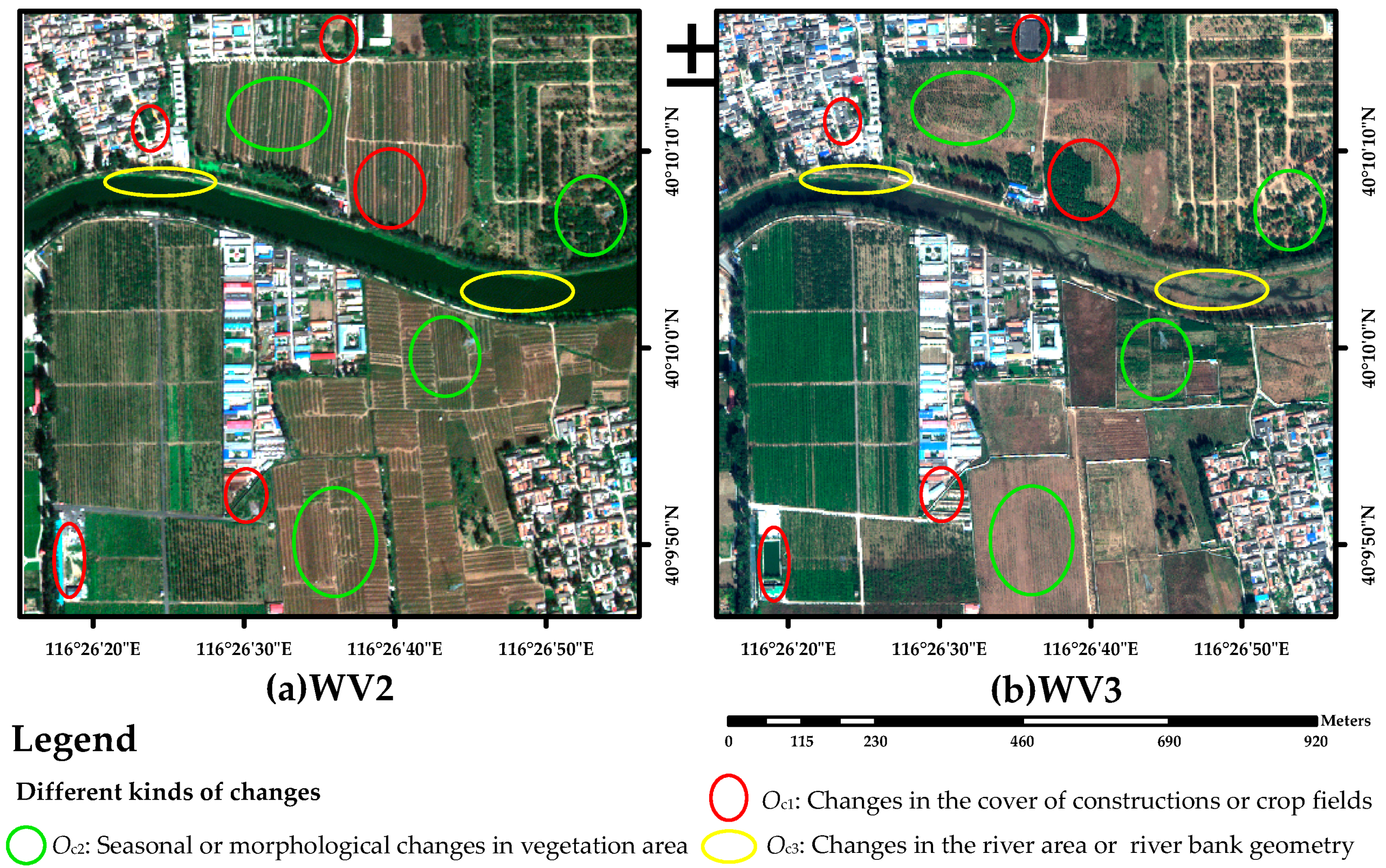

3.1. Case 1: Change Detection of Farmlands Using WV-3 VHR Multispectral Images

3.1.1. Material and Study Area

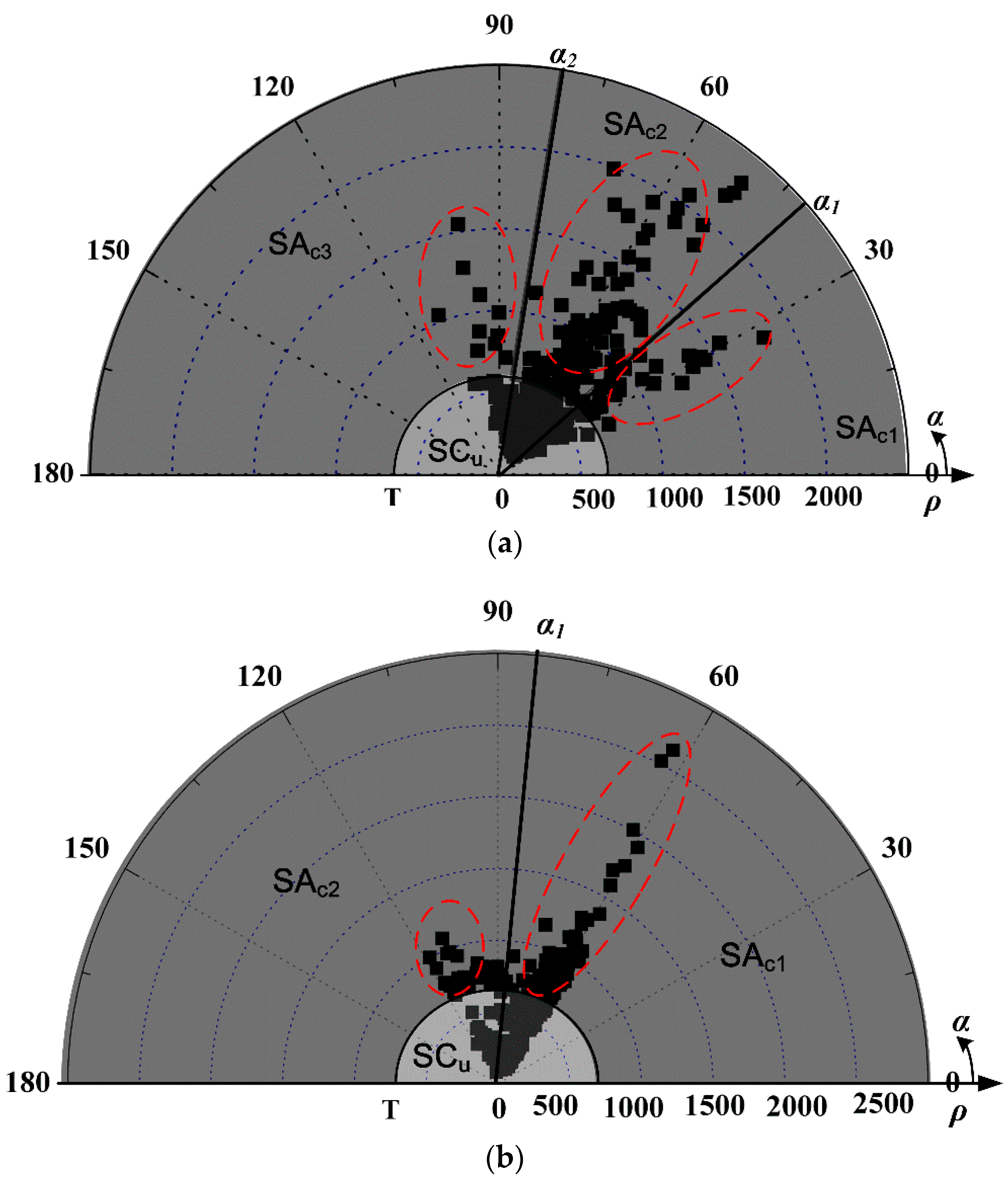

3.1.2. Procedures and Results

3.1.3. Analysis and Discussion

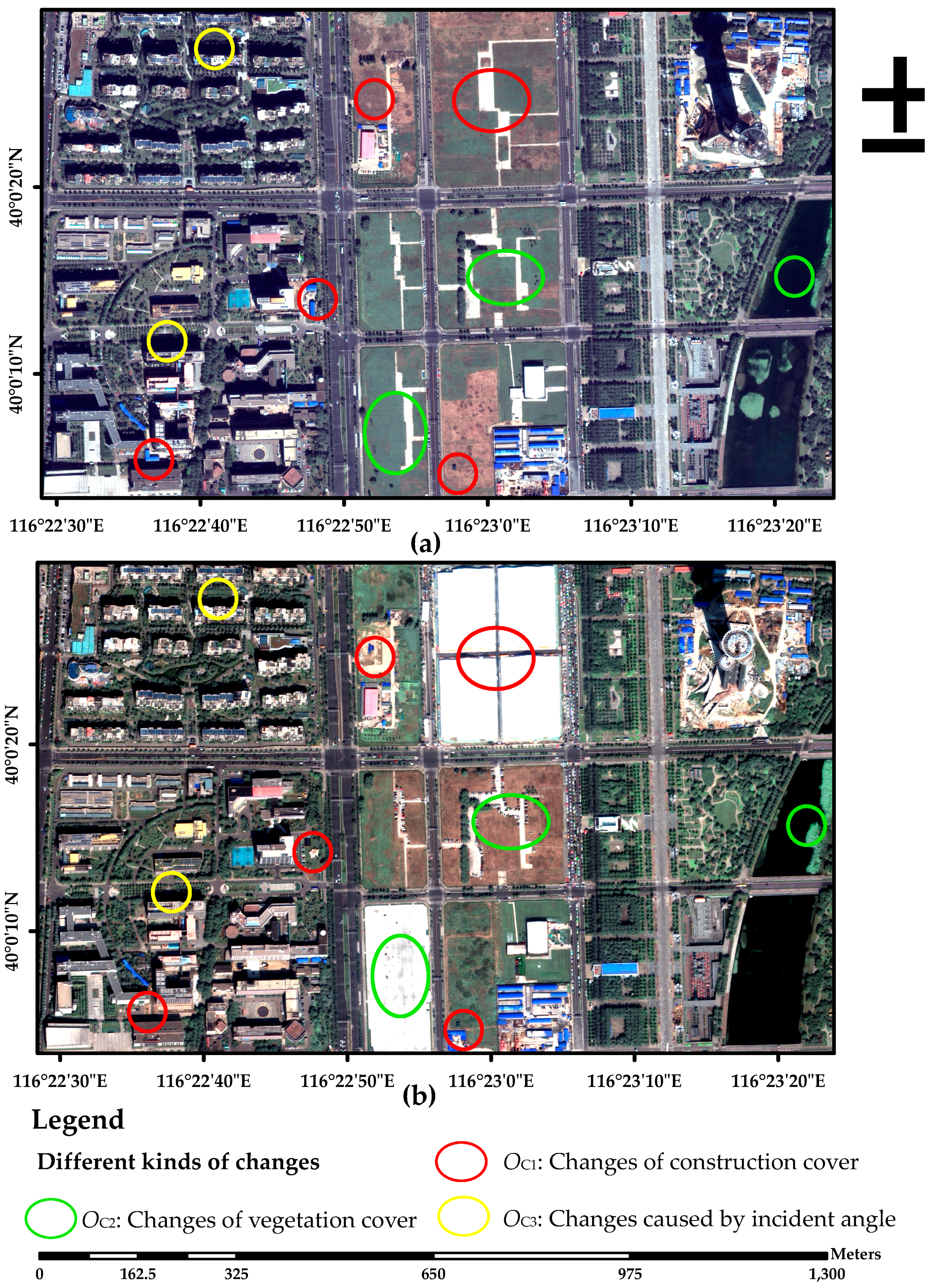

3.2. Case 2: Change Detection for Construction Sites Using WV-2 VHR Panchromatic Fusion Images

3.2.1. Material and Study Area

3.2.2. Procedures and Results

3.2.3. Analysis and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic object-based image analysis – Towards a new paradigm. ISPRS J. Photogramm. 2014, 87, 180–191.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. 2010, 65, 2–16.

- Malila, W.A. Change vector analysis: An approach for detecting forest changes with Landsat. In Proceedings of the 6th Annual Symposium on Machine Processing of Remotely Sensed Data Soil Information Systems and Remote Sensing and Soil Survey, West Lafayette, IN, USA, 3–6 June 1980.

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

- Zhuang, H.; Deng, K.; Fan, H.; Yu, M. Strategies combining spectral angle mapper and change vector analysis to unsupervised change detection in multispectral images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 681–685.

- Nackaerts, K.; Vaesen, K.; Muys, B.; Coppin, P. Comparative performance of a modified change vector analysis in forest change detection. Int. J. Remote Sens. 2005, 26, 839–852.

- Chen, J.; Gong, P.; He, C.; Pu, R.; Shi, P. Land-use/land-cover change detection using improved change-vector analysis. Photogramm. Eng. Remote Sens. 2003, 69, 369–379.

- Johnson, R.D.; Kasischke, E.S. Change vector analysis: A technique for the multispectral monitoring of land cover and condition. Int. J. Remote Sens. 1998, 19, 411–426.

- Maeda, E.E.; Arcoverde, G.F.B.; Pellikka, P.K.E.; Shimabukuro, Y.E. Fire risk assessment in the Brazilian Amazon using MODIS imagery and change vector analysis. Appl. Geogr. 2011, 31, 76–84.

- Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote 2007, 45, 218–236.

- Bovolo, F.; Marchesi, S.; Bruzzone, L. A framework for automatic and unsupervised detection of multiple changes in multitemporal images. IEEE Trans. Geosci. Remote 2012, 50, 2196–2212.

- Xiaolu, S.; Bo, C. Change detection using change vector analysis from Landsat TM images in Wuhan. Procedia Environ. Sci. 2011, 11, 238–244.

- Chen, Y.H.; Xiao bing, L.I.; Chen, J.; Shi, P.J. The change of NDVI time series based on change vector analysis in China, 1983–1992. J. Remote Sens. 2002, 6, 12–18.

- Allen, T.R.; Kupfer, J.A. Application of spherical statistics to change vector analysis of Landsat data: Southern Appalachian spruce–fir forests. Remote Sens. Environ. 2000, 74, 482–493.

- Warner, T. Hyperspherical direction cosine change vector analysis. Int. J. Remote Sens. 2005, 26, 1201–1215.

- Bovolo, F.; Bruzzone, L.; Marconcini, M. A novel approach to unsupervised change detection based on a semisupervised SVM and a similarity measure. IEEE Trans. Geosci. Remote 2008, 46, 2070–2082.

- He, C.; Wei, A.; Shi, P.; Zhang, Q.; Zhao, Y. Detecting land-use/land-cover change in rural–urban fringe areas using extended change-vector analysis. Int. J. Appl. Earth Obs. Geoinform. 2011, 13, 572–585.

- Thonfeld, F.; Feilhauer, H.; Braun, M.; Menz, G. Robust change vector analysis (RCVA) for multi-sensor very high resolution optical satellite data. Int. J. Appl. Earth Obs. Geoinform. 2016, 50, 131–140.

- Xian, G.; Homer, C. Updating the 2001 National Land Cover Database impervious surface products to 2006 using Landsat imagery change detection methods. Remote Sens. Environ. 2010, 114, 1676–1686.

- Carvalho Júnior, O.A.; Guimarães, R.F.; Gillespie, A.R.; Silva, N.C.; Gomes, R.A.T. A new approach to change vector analysis using distance and similarity measures. Remote Sens. 2011, 3, 2473–2493.

- Singh, A. Review article digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

- Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote 2000, 38, 1171–1182.

- Prieto, L.B.; Fernandez, D. A minimum-cost thresholding technique for unsupervised change detection. Int. J. Remote Sens. 2000, 21, 3539–3544.

- Celik, T.; Ma, K.K. Unsupervised change detection for satellite images using dual-tree complex wavelet transform. IEEE Trans. Geosci. Remote 2010, 48, 1199–1210.

- Radke, R.J.; Srinivas, A.; Omar, A.K.; Badrinath, R. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307.

- Wang, A.; Wang, S.; Lucieer, A. Segmentation of multispectral high-resolution satellite imagery based on integrated feature distributions. Int. J. Remote Sens. 2010, 31, 1471–1483.

- Hu, X.; Tao, C.V.; Prenzel, B. Automatic segmentation of high-resolution satellite imagery by integrating texture, intensity, and color features. Photogramm. Eng. Remote Sens. 2005, 71, 1399–1406.

- Liang, L.I.; Ning, S.; Kai, W.; Yan, G. Change detection method for remote sensing images based on multi-features fusion. Acta Geod. Cart. Sin. 2014.

- Chen, K.M.; Chen, S.Y. Color texture segmentation using feature distributions. Pattern Recognit. Lett. 2002, 23, 755–771.

- Canty, M.J.; Nielsen, A.A. Visualization and unsupervised classification of changes in multispectral satellite imagery. Int. J. Remote Sens. 2006, 27, 3961–3975.

- Chen, J.; Chun yang, H.E.; Shi, P.J.; Chen, Y.H.; Ma, N. Land use/cover change detection with change vector analysis (CVA): Change magnitude threshold determination. J. Remote Sens. 2001, 16, 307–318.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1965.

- Jin, Y.Q.; Chen, F.; Luo, L. Automatic detection of change direction of multi-temporal ERS-2 SAR images using two-threshold EM and MRF algorithms. Imaging Sci. J. 2004, 52, 234–241.

- Wang, G.T.; Liang, W.Y.; Cheng, J.L. Change detection method of multiband remote sensing images based on fast expectation-maximization algorithm and fuzzy fusion. J. Infrared Millim. Waves 2010, 29, 383–388.

- Ya-Ping, L.I.; Yang, H.; Chen, X. Determination of threshold in change detection based on histogram approximation using expectation maximization algorithm and Bayes information criterion. J. Remote Sens. 2008, 12, 85–91.

- Rahman, M.R.; Saha, S.K. Multi-resolution segmentation for object-based classification and accuracy assessment of land use/land cover classification using remotely sensed data. J. Indian Soc. Remote Sens. 2008, 36, 189–201.

- Drăguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. 2014, 88, 119–127.

- Drăguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Honeycutt, C.E.; Plotnick, R. Image analysis techniques and gray-level co-occurrence matrices (GLCM) for calculating bioturbation indices and characterizing biogenic sedimentary structures. Comput. Geosci. 2008, 34, 1461–1472.

- Palubinskas, G. Fast, simple, and good pan-sharpening method. J. Appl. Remote Sens. 2013, 7, 1–12.

| SAW-CVA (rows: resulting class; columns: true class) | Ou | Oc1 | Oc2 | Oc3 | User's Accuracy |
|---|---|---|---|---|---|
| Ou | 136 | 3 | 3 | 2 | 94.44% |
| Oc1 | 3 | 78 | 2 | 2 | 91.76% |
| Oc2 | 8 | 21 | 67 | 2 | 68.37% |
| Oc3 | 0 | 2 | 1 | 20 | 86.96% |
| Producer's Accuracy | 92.52% | 75.00% | 91.78% | 76.92% | |

Overall Accuracy: 86.03%; Kappa: 0.7976.

| Standard CVA (rows: resulting class; columns: true class) | Ou | Oc1 | Oc2 | Oc3 | User's Accuracy |
|---|---|---|---|---|---|
| Ou | 130 | 2 | 25 | 0 | 82.80% |
| Oc1 | 10 | 100 | 38 | 1 | 67.11% |
| Oc2 | 0 | 0 | 0 | 0 | 0.00% |
| Oc3 | 7 | 2 | 10 | 25 | 56.82% |
| Producer's Accuracy | 88.44% | 96.15% | 0.00% | 96.15% | |

Overall Accuracy: 75.86%; Kappa: 0.6283.

| SAW-CVA (rows: resulting class; columns: true class) | Ou | Oc1 | Oc2 | Oc3 | Oc4 | User's Accuracy |
|---|---|---|---|---|---|---|
| Ou | 346 | 4 | 3 | 2 | 0 | 97.46% |
| Oc1 | 2 | 38 | 6 | 3 | 0 | 77.55% |
| Oc2 | 6 | 5 | 36 | 5 | 0 | 69.23% |
| Oc3 | 8 | 2 | 2 | 32 | 0 | 72.73% |
| Oc4 | 10 | 1 | 0 | 1 | 0 | 0.00% |
| Producer's Accuracy | 93.01% | 76.00% | 76.60% | 74.42% | 0.00% | |

Overall Accuracy: 88.28%; Kappa: 0.7508.

| CVA (rows: resulting class; columns: true class) | Ou | Oc1 | Oc2 | Oc3 | User's Accuracy |
|---|---|---|---|---|---|
| Ou | 356 | 6 | 4 | 9 | 94.93% |
| Oc1 | 3 | 38 | 2 | 24 | 56.72% |
| Oc2 | 13 | 6 | 41 | 10 | 58.57% |
| Oc3 | 0 | 0 | 0 | 0 | 0.00% |
| Producer's Accuracy | 95.70% | 76.00% | 87.23% | 0.00% | |

Overall Accuracy: 84.96%; Kappa: 0.6601.
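The accuracy figures in the confusion matrices above follow the usual definitions:

- User's accuracy is the diagonal count divided by its row (resulting-class) total.
- Producer's accuracy is the diagonal count divided by its column (true-class) total.
- Overall accuracy p_o is the sum of the diagonal divided by the total count N.
- Cohen's kappa is (p_o - p_e) / (1 - p_e), where p_e = sum_i (row_i * col_i) / N^2.

The minimal sketch below recomputes these quantities. The helper name `accuracy_report` is hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def accuracy_report(
    cm: Sequence[Sequence[int]],
) -> Tuple[List[Optional[float]], List[Optional[float]], float, float]:
    """User's/producer's accuracy, overall accuracy and kappa.

    Rows of `cm` are resulting (mapped) classes, columns are true classes,
    matching the layout of the tables above.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    diag = [cm[i][i] for i in range(k)]
    # None marks an undefined ratio (empty row or column).
    users = [d / r if r else None for d, r in zip(diag, row_tot)]
    producers = [d / c if c else None for d, c in zip(diag, col_tot)]
    p_o = sum(diag) / n  # overall accuracy
    p_e = sum(r * c for r, c in zip(row_tot, col_tot)) / (n * n)  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else float("nan")
    return users, producers, p_o, kappa


saw_cva_5 = [
    [346, 4, 3, 2, 0],
    [2, 38, 6, 3, 0],
    [6, 5, 36, 5, 0],
    [8, 2, 2, 32, 0],
    [10, 1, 0, 1, 0],
]
users, producers, oa, kappa = accuracy_report(saw_cva_5)
print(f"overall accuracy {oa:.2%}, kappa {kappa:.4f}")  # overall accuracy 88.28%, kappa 0.7508
```

Applied to the five-class SAW-CVA matrix, the sketch reproduces the reported overall accuracy of 88.28% and kappa of 0.7508. Where a class has no reference objects (the Oc4 column), producer's accuracy is undefined. The sketch returns None in that case, whereas the table prints 0.00%.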

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, Q.; Chen, Y. Multi-Feature Object-Based Change Detection Using Self-Adaptive Weight Change Vector Analysis. Remote Sens. 2016, 8, 549. https://doi.org/10.3390/rs8070549
