1. Introduction

Higher agricultural yields will be required in the future in order to fulfill food supply needs [1]. Added to this, bio-fuel production and urban growth are also increasing the pressure on agricultural lands [2,3,4]. All these factors will also have consequences on natural ecosystems [5,6].

In this context, crop area extent estimates and crop type maps provide crucial information for agricultural monitoring and management. Remote sensing imagery in general and, in particular, high temporal and high spatial resolution data such as those that will be available from recently launched systems such as Sentinel-1 [7] and Sentinel-2 [8] constitute a major asset for this kind of application. We will use the term dense time series to refer to revisit rates which make it possible to capture the evolution of the signal of interest. In the case of crop type mapping, this corresponds to at least two images per month.
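The two-images-per-month criterion can be checked mechanically for a given list of acquisition dates. The following is a minimal sketch: the function name `is_dense` and the choice of counting images per calendar month between the first and the last acquisition are assumptions made for illustration, not a definition taken from the cited works.

```python
from collections import Counter
from datetime import date


def is_dense(acquisitions, min_per_month=2):
    """Return True if every calendar month between the first and last
    acquisition contains at least `min_per_month` images (assumed criterion)."""
    if not acquisitions:
        return False
    counts = Counter((d.year, d.month) for d in acquisitions)
    first, last = min(acquisitions), max(acquisitions)
    year, month = first.year, first.month
    # Walk month by month from the first to the last acquisition.
    while (year, month) <= (last.year, last.month):
        if counts[(year, month)] < min_per_month:
            return False
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return True


# Hypothetical acquisition dates, for illustration only.
dense = [date(2015, 1, 5), date(2015, 1, 20), date(2015, 2, 3), date(2015, 2, 18)]
sparse = [date(2015, 1, 5), date(2015, 2, 3), date(2015, 2, 18)]
print(is_dense(dense), is_dense(sparse))
```

In practice the count would be applied to cloud-free observations per pixel rather than to acquisitions, since cloudy optical images do not contribute usable samples.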

Recent works have shown that dense multi-temporal optical imagery such as that which will be provided by the Sentinel-2 satellites is able to provide accurate crop type mapping over different climates and diverse crop systems [9]. However, since optical imagery is affected by cloud cover, the performance of the crop type mapping system can be hindered in some cases, even with a 5-day revisit cycle. Furthermore, SAR data may improve the discrimination of some crop types which are difficult to distinguish with optical imagery alone.

In addition to annual mapping such as that presented in [9], early crop type detection before the end of the season is needed for yield forecasting and irrigation management. Except for tropical or very arid areas, most crop systems in the world have an annual cycle with possibly 2 sub-cycles (winter and summer crops). By early in the season we refer to the possibility of providing an annual crop type map between months 6 and 9 of the annual cycle. In this case, image availability and multi-sensor information are even more crucial.

Optical data is usually preferred to SAR imagery because of the better understanding of the link between the observations and vegetation phenology. However, the all-weather acquisitions provided by SAR and recent advances in the understanding of the underlying physical phenomena make multi-temporal SAR imagery an interesting candidate for crop type mapping.

For instance, Skriver et al. [10] showed the interest of multi-temporal polarimetric signatures of crops. Schotten et al. [11] used ERS-1 multi-temporal data in order to perform field level classification to discriminate 12 crop types, achieving 80% accuracy. Their approach needed the selection of the best image subset among the 14 images available during the agricultural season.

Recently, Balzter et al. [12] showed that Sentinel-1 could be used to recognise some land cover classes of the Corine Land Cover nomenclature, although their study used only two dates and the results seemed to depend to a great extent on topography information.

The selection of optimal dates was also used by Skriver et al. [13] with the EMISAR airborne system, which provided monthly polarimetric acquisitions, achieving a 20% error rate for their particular study site.

From the perspective of an operational crop type map production system such as the one presented in [9], date selection is not possible and all available data has to be used.

The literature shows that in order to achieve high quality mapping results, full polarimetric SAR data is needed, although different authors came to different conclusions depending on their experiments. For instance, Moran et al. [14] concluded that full polarimetry with a revisit of 3 to 6 days is needed for crop identification and that with single or dual polarimetry, phenology is accessible, but crop type is not. On the other hand, Schotten et al. [11] found that the cross-polarised channel is correlated with NDVI for some crops, allowing for their identification.

Nowadays, the only satellite system providing full polarimetric SAR data is Radarsat-2, but due to its wide range of acquisition modes, it is difficult to obtain dense time series with global coverage.

Skriver et al. [15] found that multi-temporal SAR data provided a better trade-off than polarimetric imagery. Sentinel-1 does not provide full-polarimetric imagery. On the other hand, it will make available dense time series (6-day revisit cycle with two satellites), and it is therefore a good candidate for operational crop type mapping. McNairn et al. [16] investigated the possibility of early season monitoring for two classes (soybean and corn) using TerraSAR-X and Radarsat-2 time series. They highlighted the usefulness of multi-temporal speckle filtering.

Multi-sensor data fusion [17] approaches that combine SAR and high resolution optical sensors have clearly demonstrated an increased mapping accuracy.

SAR imagery for crop type mapping has been used together with optical data. For instance, McNairn et al. [18] used 2 SAR (Envisat-ASAR) images and one optical (SPOT4) image to achieve acceptable accuracies. Zhu et al. [19] proposed a Bayesian formulation for the fusion of Landsat TM and ERS SAR (two images of each type). Their approach was based on building a specific statistical link between the two types of data for a particular set of acquisition dates, which is not general enough to be implemented in operational settings. However, their results showed the complementarity of the two types of data. The two works cited above used a small number of images. Sentinel-1 and Sentinel-2, with their short revisit cycle, will offer improved possibilities.

Some works in the literature have already explored the use of long time series, but they used a dense time series of one of the sensors (either optical or SAR) and a few images coming from the other modality.

For instance, Blaes et al. [20] used 15 SAR images (ERS and Radarsat) and three optical ones (Landsat TM). They performed a field level classification together with photo-interpretation schemes. They showed an improvement of the accuracy thanks to the use of SAR data, with respect to the accuracy achieved with three optical images. As in most approaches in the literature, a date selection was used, which is incompatible with fully automatic operational systems.

Le Hégarat et al. [21] reached conclusions similar to those of [20] using other methods (Markov Random Fields and Dempster-Shafer fusion). Their setup also used a SAR time series and only three Landsat images. They concluded that SAR SITS alone yields lower accuracies than three optical images alone, but that the synergy between the two types of data gives the best accuracy. Similar conclusions are reported by Chust et al. [22] using ERS and SPOT imagery and similar fusion methods.

Unlike the literature cited above, in this paper we assess the joint use of dense SITS of both SAR and optical imagery in order to devise a strategy for the operational exploitation of both Sentinel-1 and Sentinel-2 data in the frame of crop type mapping at early stages of the agricultural season. Several similar contributions have recently appeared in the literature. Forkuor et al. [23] investigated the contribution of X band polarimetric SAR images to crop mapping when used as a complement to high resolution optical SITS. Skakun et al. [24] investigated the use of C band Radarsat-2 dual polarimetric images together with Landsat8 time series as a preparation for the upcoming availability of Sentinel-1 images. However, they used two different incidence angles and did not investigate the extraction of local features such as textures or local statistics. Villa et al. [25] proposed an expert-based decision tree which is able to combine Landsat8 and X-band COSMO-SkyMed SAR image time series for in-season crop mapping.

Although these recent contributions give interesting and useful insights for the problem of crop mapping using optical and SAR SITS together, they do not address some key issues for the implementation of operational processing chains for early crop mapping. Indeed, the global availability of dense image time series makes Sentinel-1 data much more interesting than existing counterparts (Radarsat-2, TerraSAR-X, COSMO-SkyMed) for 2 main reasons:

the images are available free of charge under an open license;

the definition of a main acquisition mode (Interferometric Wide Swath) with constant viewing angles for the same point on the ground provides consistent time series with the same characteristics.

It is therefore interesting to investigate how to integrate these time series together with well known and used optical time series for crop mapping. In particular, it will be interesting to select the best image features and analyze the classification accuracy along the season in order to be able to provide early crop maps.

Similar approaches have recently been applied to forest monitoring, mainly for tropical areas [26,27,28,29,30], but these approaches work in multi-year configurations which cannot be applied to croplands, since the same field can change from one crop type to a different one in successive years.

The work on grasslands by Schuster et al. [31] showed the potential and complementarity of optical and SAR data for intra-year phenology monitoring, but the joint use of both sensors was not attempted.

The work presented here builds upon the results reported in [9], where the optical image exploitation work-flow was assessed. Therefore, the focus of the present work is on the integration of multi-temporal SAR data into the existing optical processing chain. In order to do that, the SAR image processing and feature extraction will be investigated. Since at the time of this writing Sentinel-2 has not reached its full acquisition capabilities, no time series covering a full crop season are available. In this work, we will use Landsat8 time series instead.

The paper is organized as follows. Section 2 introduces the study site and the data sets used. Section 3 describes the methodology applied and the different configurations used to assess the contribution of SAR time series to the crop type mapping quality. Section 4 presents the detailed results and discusses them. Finally, Section 5 draws final conclusions and suggests further research to improve the approach.

4. Results and Discussion

In this section, we report the results of the experiments described in Section 3.

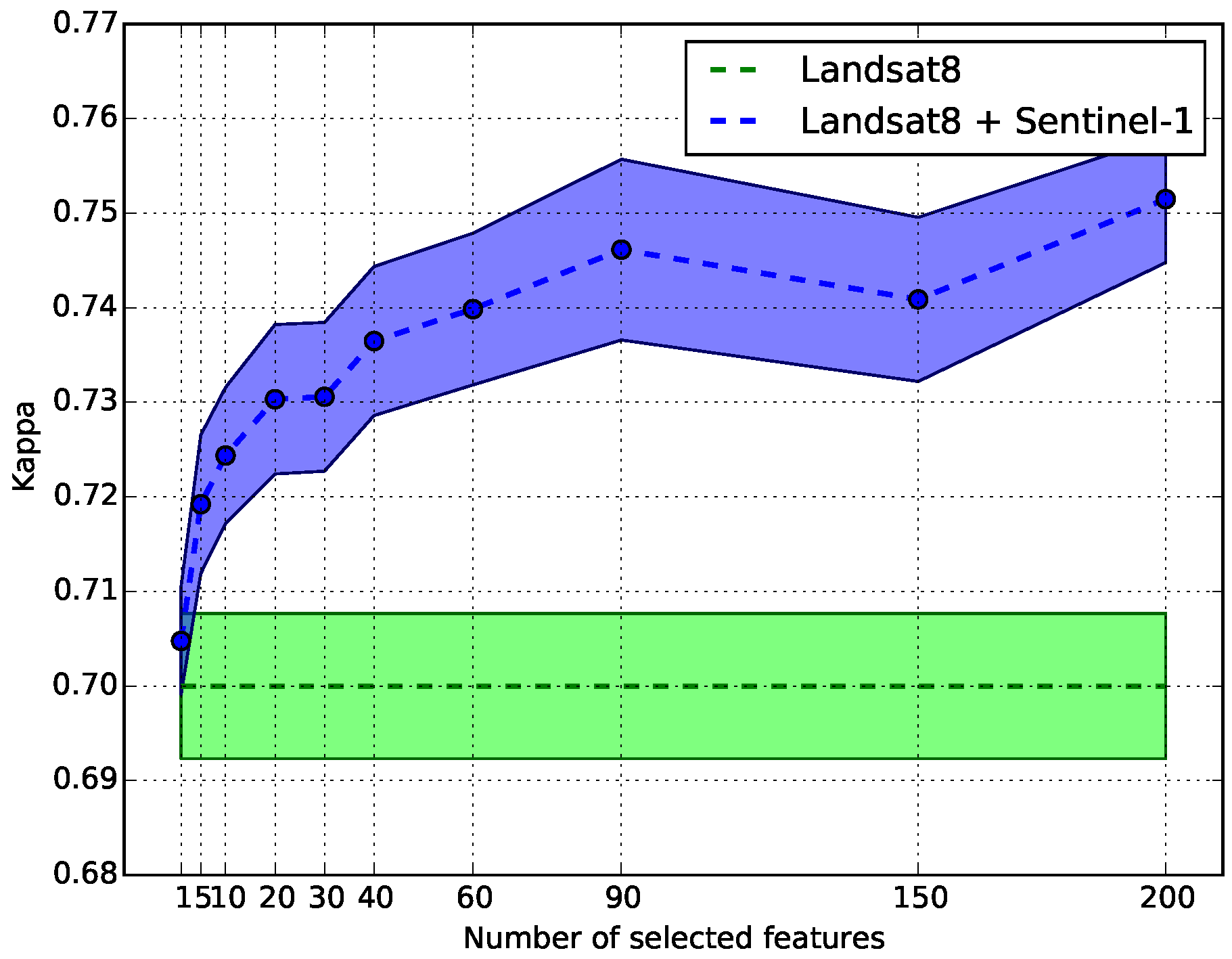

4.1. Feature Selection

The feature selection approach described in Section 3.2 was applied in order to sort the different SAR features by importance. The reader must bear in mind that no feature selection is applied on the features extracted from the optical images, but that all features (optical and SAR) are used in the importance computation. This allows the SAR features to be selected as a complement to the optical SITS. The use of SAR features alone might yield different results.

Figure 2 shows the evolution of the classification accuracy as a function of the number of SAR features selected. For this experiment, the complete time series was used and features were added in decreasing order of importance. One can observe that the 20 most important features yield an increase of about 3 percentage points of κ. The improvement continues up to 60 features, although slowly, and stabilizes afterwards.

As a trade-off between accuracy and computational cost, we choose to keep the 40 most important features in the following experiments.
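The experiment behind Figure 2 can be sketched as a loop that ranks features by importance and scores growing subsets. This is only an illustrative outline: `evaluate` is a hypothetical callable standing in for training and validating the classifier, and the tolerance rule in `smallest_subset` is an assumption, since the choice of 40 features above was made by inspection of the curve rather than by a stated rule.

```python
def accuracy_curve(importances, evaluate, step=10):
    """Rank features by decreasing importance and score growing subsets.

    importances: dict mapping feature name -> importance score
    evaluate: hypothetical callable taking a list of feature names and
              returning an accuracy score such as kappa
    Returns a list of (number_of_features, score) pairs. Features beyond the
    last full multiple of `step` are not evaluated.
    """
    ranked = sorted(importances, key=importances.get, reverse=True)
    curve = []
    for k in range(step, len(ranked) + 1, step):
        curve.append((k, evaluate(ranked[:k])))
    return curve


def smallest_subset(curve, tolerance):
    """Smallest subset size whose score is within `tolerance` of the best one."""
    best = max(score for _, score in curve)
    for k, score in curve:
        if best - score <= tolerance:
            return k


# Toy data: six features and a score that saturates after four of them.
toy_importances = {f"f{i}": 1.0 / (i + 1) for i in range(6)}
toy_curve = accuracy_curve(toy_importances, lambda feats: min(len(feats), 4) / 10, step=2)
print(toy_curve, smallest_subset(toy_curve, tolerance=0.0))
```

A larger tolerance trades a small loss of accuracy for fewer features, which mirrors the accuracy versus computational cost compromise described above.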

In order to study the most pertinent features for the classification, feature importance is computed for each time point in the time series using all the available images up to the given time point. The incremental classification setup described in Section 3.1 is used.
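The incremental setup amounts to evaluating, at each acquisition date, only the features derived from images acquired up to that date. A minimal sketch follows; the data layout (a dict from acquisition date to feature names) and the function name `incremental_subsets` are assumptions for illustration, and the feature names are placeholders.

```python
from datetime import date


def incremental_subsets(features_by_date):
    """Yield (time_point, feature_names) where feature_names contains the
    features of all images acquired up to and including time_point."""
    seen = []
    for d in sorted(features_by_date):
        seen.extend(features_by_date[d])
        yield d, list(seen)


# Placeholder feature names attached to three acquisition dates.
example = {
    date(2015, 3, 24): ["vv_mean_0324"],
    date(2015, 1, 29): ["vv_mean_0129", "vh_mean_0129"],
    date(2015, 4, 23): ["vv_mean_0423"],
}
steps = list(incremental_subsets(example))
for time_point, names in steps:
    print(time_point.isoformat(), len(names))
```

Each yielded subset would then feed one importance computation and one classification, simulating what is available at that point of the season.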

Figure 3 presents the first 40 features sorted by decreasing importance when the complete time series is used. A color coding is used in order to group features by family: raw images in red, polarimetry ratios in cyan, Haralick textures in green and local statistics in light brown; SFS textures do not appear among the first 40 features. The horizontal axis is labelled with a key indicating whether the image was in intensity (I, the squared modulus of the complex pixel) or amplitude (A, the square root of I), its polarization (VV or VH), the acquisition date and the name of the texture computed.
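The relation between the two raw representations mentioned in the axis key can be written down directly. The sketch below only restates the definitions given above (intensity as the squared modulus of the complex pixel, amplitude as its square root); the function names are illustrative.

```python
import math


def intensity(z):
    """Intensity I: squared modulus of the complex pixel z."""
    return z.real ** 2 + z.imag ** 2


def amplitude(z):
    """Amplitude A: square root of the intensity, i.e. the modulus of z."""
    return math.sqrt(intensity(z))


pixel = complex(3.0, 4.0)  # arbitrary complex sample
print(intensity(pixel), amplitude(pixel))  # 25.0 5.0
```

Since one quantity is a monotonic function of the other, both carry the same ordering of pixel values and differ only in their dynamic range.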

It can be seen that local statistics and Haralick textures are the most frequently present and that SFS textures do not appear among the first 40 features. Among Haralick textures, the most frequent are Energy, Entropy and Inverse Difference Moment. In terms of local statistics, none of the higher moments appear and only the local mean is present. Finally, there seems to be no significant difference between amplitude and intensity for the raw images. In terms of polarimetric information, VV polarization appears as more important and VH polarization has only 8 occurrences out of 37. This result may seem surprising. One could have expected that VH polarization, which contains volume scattering information, would be selected as more important than VV polarization. However, features are selected as a complement to the optical ones, and VH polarization and NDVI can be correlated [11]. Three occurrences of the polarimetric ratio are present among the 40 most important features.
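For readers unfamiliar with the three Haralick textures named above, the following sketch computes them from a grey-level co-occurrence matrix (GLCM) using their common textbook definitions. The quantization into a small number of grey levels, the single horizontal offset, the symmetric GLCM and the base-2 logarithm are assumptions of this example; the exact settings used for the experiments are those of Section 3 and are not reproduced here.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Normalized, symmetric co-occurrence matrix of a quantized image
    (list of rows of integers in [0, levels)) for one pixel offset.
    Assumes the image is large enough to contain at least one pixel pair."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[a][b] += 1  # pair (a, b)
                counts[b][a] += 1  # and its mirror, to make the matrix symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def haralick(p):
    """Energy, entropy and inverse difference moment of a normalized GLCM."""
    energy = sum(v * v for row in p for v in row)
    entropy = -sum(v * math.log2(v) for row in p for v in row if v > 0)
    idm = sum(v / (1 + (i - j) ** 2)
              for i, row in enumerate(p) for j, v in enumerate(row))
    return {"energy": energy, "entropy": entropy, "idm": idm}


flat = haralick(glcm([[0, 0], [0, 0]], levels=2))       # homogeneous patch
checker = haralick(glcm([[0, 1], [1, 0]], levels=2))    # alternating patch
print(flat, checker)
```

On the homogeneous patch, energy and inverse difference moment are maximal and entropy is zero, while the alternating patch lowers the first two and raises entropy, which is the kind of contrast between smooth and rough fields that these textures capture.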

In terms of dates, it is not surprising to see that spring dates are the most present, since most of the evolution of the vegetation happens in this period when winter crops are mature and summer crops are just emerging, but this may be correlated with cloud cover. There is also more cloud cover in February, but this is a vegetation dormancy period in the study area. Some occurrences of dates in November appear when soil work for winter crops takes place in the area.

The analysis of feature importance for other time points (using the incremental classification approach) yields the same results in terms of importance for the different families of features.

In the following experiments, the 40 most important SAR features are kept for every classification performed.

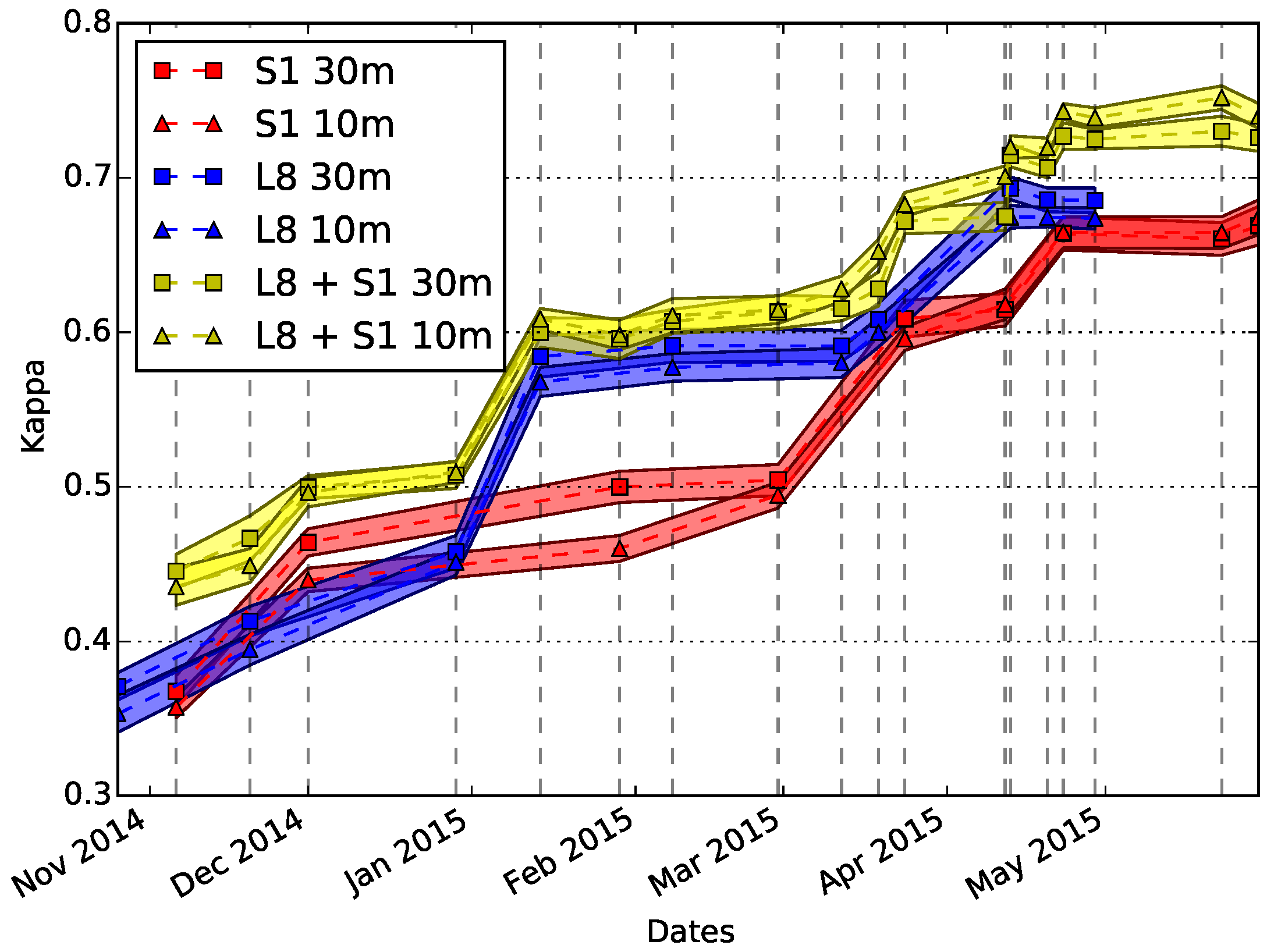

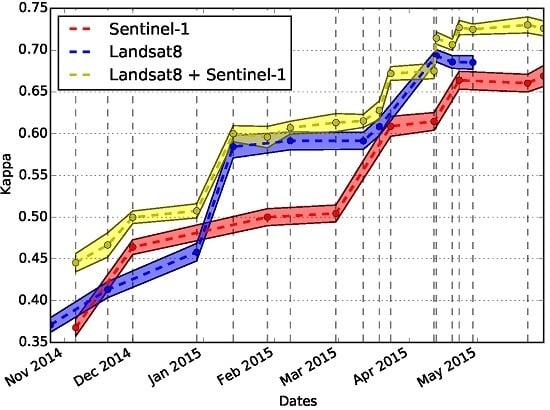

4.2. Impact of SAR Images in the Classification

Figure 4 summarizes the accuracy results of the real-time classification simulation for 3 scenarios:

use of optical imagery alone (blue);

use of SAR imagery alone (red);

joint use of optical and SAR imagery (yellow).

The plots show the mean value of κ over 10 random trials together with 95% confidence intervals.
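As an illustration of how such a summary can be obtained, the sketch below computes the mean of κ over 10 trials and a 95% confidence interval for the mean based on the Student t distribution. The use of a t-based interval is an assumption of this example (the procedure used for the figures is not detailed here), and the κ values are made up for the demonstration.

```python
import statistics

# Two-sided 95% critical value of Student's t for 9 degrees of freedom (10 trials).
T_CRIT_95_DF9 = 2.262


def mean_with_ci(values, t_crit=T_CRIT_95_DF9):
    """Return (mean, lower, upper) for a t-based confidence interval of the mean."""
    mean = statistics.mean(values)
    half_width = t_crit * statistics.stdev(values) / len(values) ** 0.5
    return mean, mean - half_width, mean + half_width


def intervals_overlap(a, b):
    """True if the closed intervals a=(lo, hi) and b=(lo, hi) intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


# Made-up kappa values for 10 random trials.
kappas = [0.80, 0.82, 0.78, 0.81, 0.79, 0.80, 0.83, 0.77, 0.80, 0.80]
mean, low, high = mean_with_ci(kappas)
print(round(mean, 3), round(low, 3), round(high, 3))
```

Comparing two configurations by checking whether their intervals overlap, as done later in this section, is a conservative visual criterion rather than a formal test.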

First of all, we observe an expected pattern of increasing accuracy as the season progresses and more data is available. This behavior is the same for the three scenarios. As one can observe, optical data outperforms SAR time series for crop mapping. This is expected, since crop growth is mainly characterized by the time profile of the vegetative activity for which NDVI is a good proxy. There is a short interval in which SAR imagery yields better results during late autumn when ploughing occurs before sowing winter crops. Nevertheless, the red curve shows that SAR image time series alone are able to catch up with optical time series performances at some key dates of the season (April and May).

The joint use of the two types of imagery always yields better results than optical imagery alone (except for one data point in mid April which will be discussed below). It is also remarkable that at the beginning of April, the fusion of the two modalities achieves results which are equivalent to those obtained by the optical imagery alone 1 month later. This is an important gain for early crop mapping.

Finally, when the last optical data is available, the gain achieved by the joint use of optical and SAR time series is higher than 0.04 in κ coefficient, with very narrow confidence intervals.

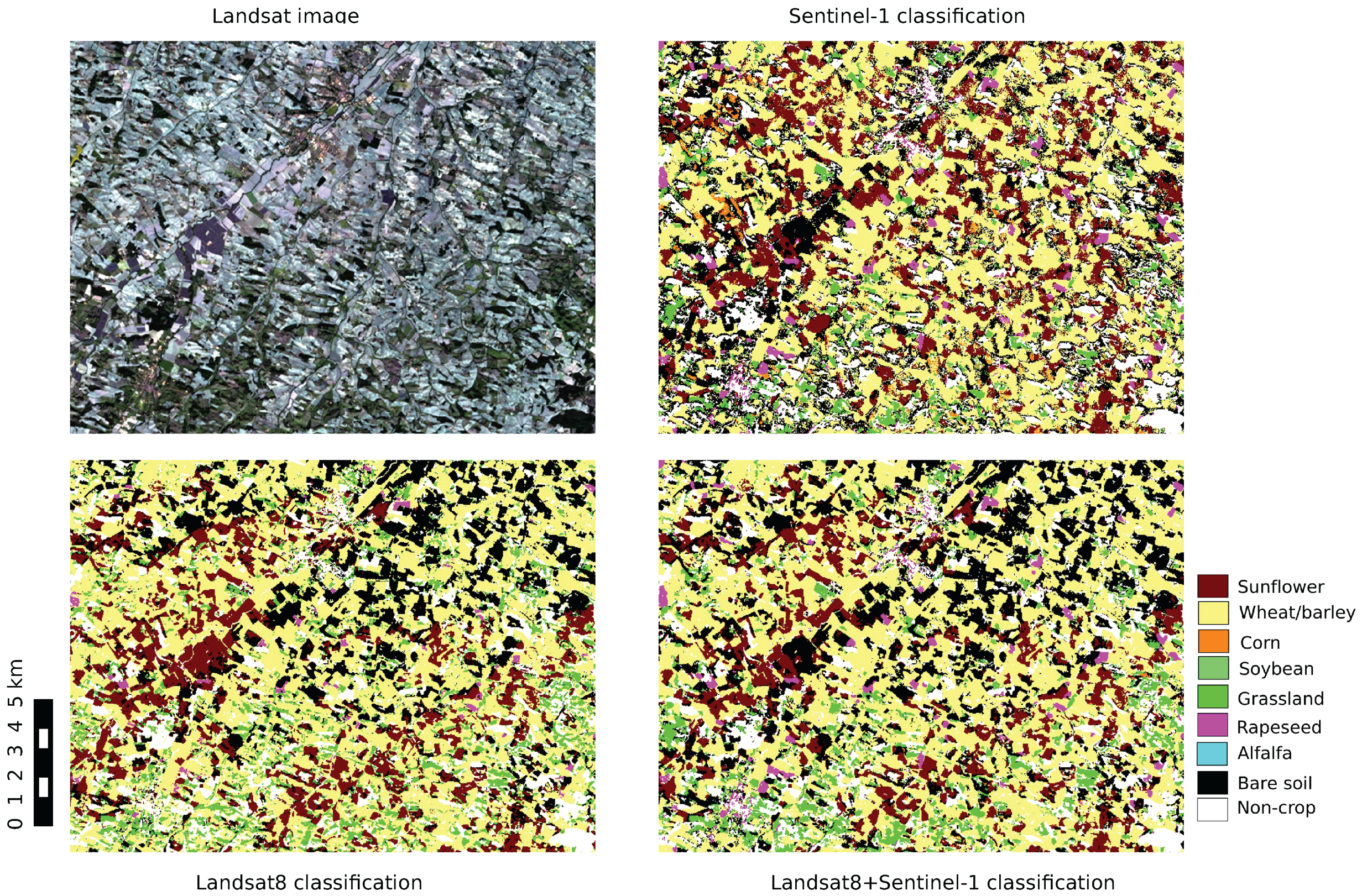

Figure 5 shows an extract of the land cover maps obtained at the end of each time series (Sentinel-1 only, Landsat8 only and both). One can observe that the Sentinel-1 map is noisier than the maps produced using Landsat8 images. Globally, the maps are consistent, but they do not allow a precise interpretation of the differences of behavior between the different configurations.

We analyze the results in detail by looking at the confusion matrices. These present the reference class labels in rows and the labels predicted by the classifier in columns. The results are expressed in percentages with respect to the reference labels, and therefore, values in the diagonal represent Producer's Accuracy (PA). User's Accuracy (UA) values are presented in the bottom row of each matrix. The κ coefficient is given in the legend of each matrix.
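The quantities reported with each matrix follow from the raw counts as sketched below, using the standard definitions: producer's accuracy is the diagonal divided by the row (reference) total, user's accuracy is the diagonal divided by the column (prediction) total, and κ compares observed agreement with chance agreement. The two-class matrix is invented for the example.

```python
def accuracy_metrics(cm):
    """Producer's accuracy, user's accuracy and kappa from a confusion matrix
    of counts with reference labels in rows and predicted labels in columns."""
    n = len(cm)
    total = sum(map(sum, cm))
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    producers = [cm[i][i] / row_sums[i] if row_sums[i] else 0.0 for i in range(n)]
    users = [cm[j][j] / col_sums[j] if col_sums[j] else 0.0 for j in range(n)]
    observed = sum(cm[i][i] for i in range(n)) / total
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return producers, users, kappa


# Invented counts: rows are reference classes, columns are predictions.
toy_cm = [[40, 10],
          [5, 45]]
pa, ua, kappa = accuracy_metrics(toy_cm)
print(pa, ua, round(kappa, 3))
```

Here the observed agreement is 0.85 and the chance agreement is 0.5, giving κ = 0.7.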

Table 6, Table 7 and Table 8 show respectively the confusion matrices using Sentinel-1 alone, Landsat8 alone and the two time series together up to 29-01-2015. At this point in time, there is a very small improvement brought by the use of radar imagery, which is mainly concentrated in the better discrimination of sunflower by decreasing the confusion with wheat/barley. Since sunflower is a summer crop, it is not yet present, but SAR imagery makes it possible to characterize the soil work which precedes the seeding of the summer crops. However, the use of SAR data introduces a slight decrease in the accuracy for rapeseed, which is confused with bare soil. As expected, soybean and alfalfa are not recognized by any of the classifications. Soybean is predicted as sunflower (another summer crop) or wheat/barley (in the cases where biomass residues from the previous crop are present in the fields). Alfalfa is mostly predicted as grass, which is expected because of the similarity between these two classes.

Table 9, Table 10 and Table 11 show the confusion matrices for 24-03-2015 using Sentinel-1 alone, Landsat8 alone and both time series together respectively. This date is particularly interesting since the use of SAR data allows a significant increase of κ.

Again, there is an important improvement for sunflower, with a strong decrease of the confusion with wheat/barley which is still present in the case of Landsat8. The other noticeable improvement appears on the grass class, which has the same accuracy for each of the two sensors used independently, and whose accuracy increases by about 8% when the two sensors are used together.

It is interesting to note the behavior of the soybean class. While the optical classification makes the same confusions as in the previous studied date, the SAR classification assigns many pixels to the bare soil class. This is due to the fact that, in March, soil work for the summer crops has started. This behavior also appears when the two sensors are used together.

Finally, we analyse the confusion matrices for 23-04-2015 (Table 12, Table 13 and Table 14 for Sentinel-1, Landsat8 and both sensors together respectively). For this case, we observe a degradation of the accuracy in sunflower with respect to the previous period, which is mainly due to an increasing confusion in the SAR case with bare soil, since at this point in time sunflower has emerged in some fields and not in others. There is also a decrease in the Landsat8 case, where the confusion with corn increases (both are summer crops starting to emerge at this point in time).

The increase in κ observed for this date in Figure 4 is distributed among all the classes and it is difficult to point out a particular class.

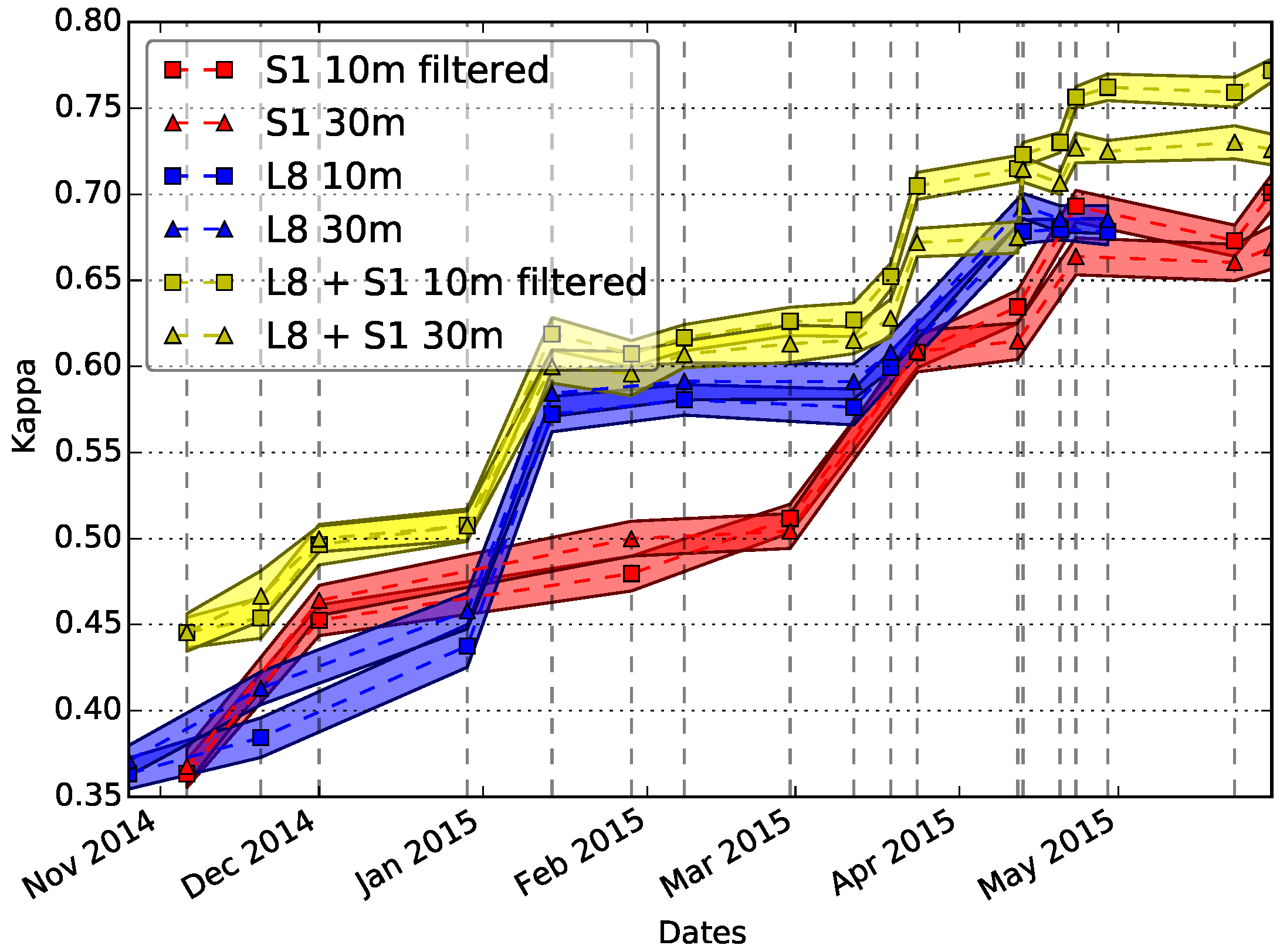

4.3. Impact of Image Resolution and Speckle Filtering

In this section, the influence of the spatial resolution (30 m or 10 m) is investigated.

Figure 6 compares the two resolution scenarios: SAR imagery resampled to the Landsat8 pixel size, and optical imagery resampled to the Sentinel-1 10 m pixel size. We see that there is no statistically significant difference (the confidence intervals overlap) between the two resolutions, except for the winter period in the case where only SAR data is used. In this case, the 30 m resolution seems more appropriate, probably due to the despeckling effect of the averaging performed by the resampling at 30 m. This result can be explained by the field size in the study area which, as shown in Table 1, is larger than 2 ha for most of the classes.
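The despeckling effect of resampling can be illustrated with a plain block average: going from a 10 m to a 30 m grid replaces each 3 × 3 block of intensity samples by its mean, and averaging 9 independent samples divides the variance of the noise by 9. The sketch below is a simplified stand-in; the actual resampling filter used in the processing chain may differ, and neighbouring SAR pixels are in general not fully independent.

```python
def block_mean(image, factor=3):
    """Downsample a 2-D list of intensities by averaging non-overlapping
    factor x factor blocks. Trailing rows/columns that do not fill a
    complete block are dropped."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [image[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# One 3 x 3 block of arbitrary intensities collapses to a single 30 m value.
patch_10m = [[1.0, 2.0, 3.0],
             [4.0, 5.0, 6.0],
             [7.0, 8.0, 9.0]]
print(block_mean(patch_10m))  # [[5.0]]
```

With fields larger than 2 ha, a 30 m pixel (0.09 ha) still falls well inside most parcels, so the loss of spatial detail costs little while the averaging reduces speckle.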

Figure 7 shows the classification accuracy for the data resampled to 10 m and shows the influence of speckle filtering. One can observe that, when SAR and optical data are used together, the speckle filtering introduces a statistically significant small improvement towards the end of the season.

Resampling all the data to a 10 m grid involves high computational times due to the resampling itself, but also to the larger volumes of data to be processed.

Figure 8 shows the plots of classification accuracy for the case of data at 10 m and speckle filtering and data at 30 m without speckle filtering. Better performances are obtained by working at the finer resolution and using the speckle filtering. As in the previous case, the improvement is observed even in the case where optical and SAR data are jointly used. The largest improvement is observed at the end of the season.

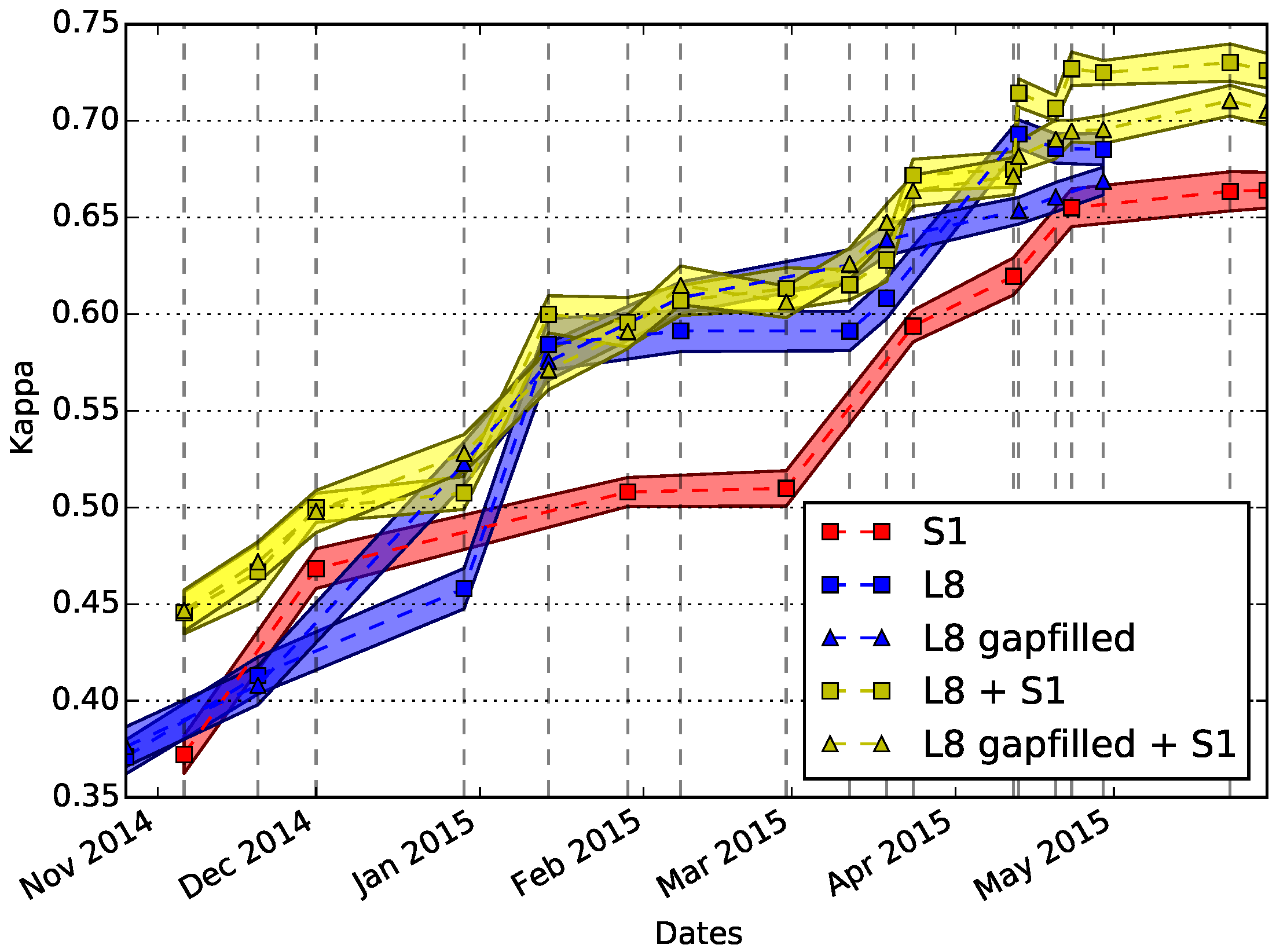

4.4. Impact of Gap-Filling

This section presents the evaluation of the gap-filling applied to the optical SITS to clean cloudy pixels as described in Section 3.4.

Figure 9 summarizes the results of the experiment. First of all, it is interesting to note that for the optical data alone, the gap-filled time series yields better results than the series without gap-filling until the image acquired on April 13th. An investigation of the data set showed that errors in the cloud screening procedure masked a large extent of the reference data for this date and missed some clouds in the following dates, producing incorrect interpolated values which strongly affected the quality of the classifier. The trend of the curves for the optical data indicates that this error may be compensated by the additional images acquired later. However, this kind of error can have an important impact on the classification. Before this date, one can observe that gap-filled optical data yields performances statistically equivalent to those of the joint use of optical and SAR data. Nevertheless, at the beginning of the season, using the two time series together still has a positive impact on the crop type identification. In the case of the joint use of the two time series, one can observe that the gap-filling does not bring any improvement, even for the periods in which the gap-filling was useful for the optical data alone. After the dates where the gap-filling artifact is present, as in the case of the optical SITS alone, the non gap-filled data achieves better performances.

Therefore, we can conclude that the joint use of SAR and optical time series does not benefit from a temporal gap-filling processing. This simplifies the processing chain and reduces the computational cost, which is a key element of an operational land cover map production system.
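To make the failure mode discussed above concrete, the sketch below fills masked samples of a single pixel's time series by linear interpolation between the nearest valid dates. Linear interpolation is an assumption of this example (the actual procedure is the one described in Section 3.4), and the reflectance-like values are invented.

```python
def gap_fill(days, values, valid):
    """Replace samples flagged invalid (e.g. cloudy) by linear interpolation
    in time between the nearest valid neighbours. Invalid samples before the
    first or after the last valid one take the nearest valid value."""
    good = [i for i, ok in enumerate(valid) if ok]
    filled = list(values)
    if not good:
        return filled  # nothing to interpolate from
    for i in range(len(values)):
        if valid[i]:
            continue
        before = [g for g in good if g < i]
        after = [g for g in good if g > i]
        if before and after:
            a, b = before[-1], after[0]
            w = (days[i] - days[a]) / (days[b] - days[a])
            filled[i] = values[a] + w * (values[b] - values[a])
        elif before:
            filled[i] = values[before[-1]]
        else:
            filled[i] = values[after[0]]
    return filled


days = [0, 16, 32]             # acquisition days
series = [0.2, 0.9, 0.6]       # middle sample is a detected cloud
correct = gap_fill(days, series, [True, False, True])
# Same pixel, but the last sample is an undetected cloud (value 0.9 kept as valid).
corrupted = gap_fill(days, [0.2, 0.9, 0.9], [True, False, True])
print(correct, corrupted)
```

In the second call the undetected cloud at the last date propagates into the interpolated value of the middle date, which is how a cloud screening error can contaminate more samples than the one it directly affects.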

5. Conclusions

In this paper, the impact of high temporal resolution SAR satellite image time series (SITS) as a complement to high temporal and spatial resolution optical imagery for early crop type mapping has been studied.

Sentinel-1 SAR image time series were used with Landsat8 SITS, showing that a significant improvement in classification accuracy can be achieved, which makes it possible to obtain land cover maps earlier in the season with respect to the case where optical imagery is used alone.

The most pertinent features derived from SAR imagery were analyzed, showing that Haralick textures (Entropy, Inertia), the polarization ratio and the local mean together with the VV imagery contain most of the information needed for an accurate classification.

The influence of image resolution and speckle filtering was also investigated, leading to the conclusion that working at 10 m resolution and using speckle filtering improves the results.

Finally, the effect of temporal gap-filling of the optical SITS was investigated, revealing that errors in the cloud screening procedure can have a high impact on the classification accuracy if they coincide with ground reference data used for the classifier training. The use of SAR imagery makes it possible to use optical data without gap-filling, yielding results which are equivalent to the use of gap-filling in the case of perfect cloud screening, and better results in the case of cloud screening errors.

These results are relevant for the upcoming availability of Sentinel-1 and Sentinel-2 dense image time series which will provide respectively a 6-day and 5-day revisit cycle during their full capacity operational phase.