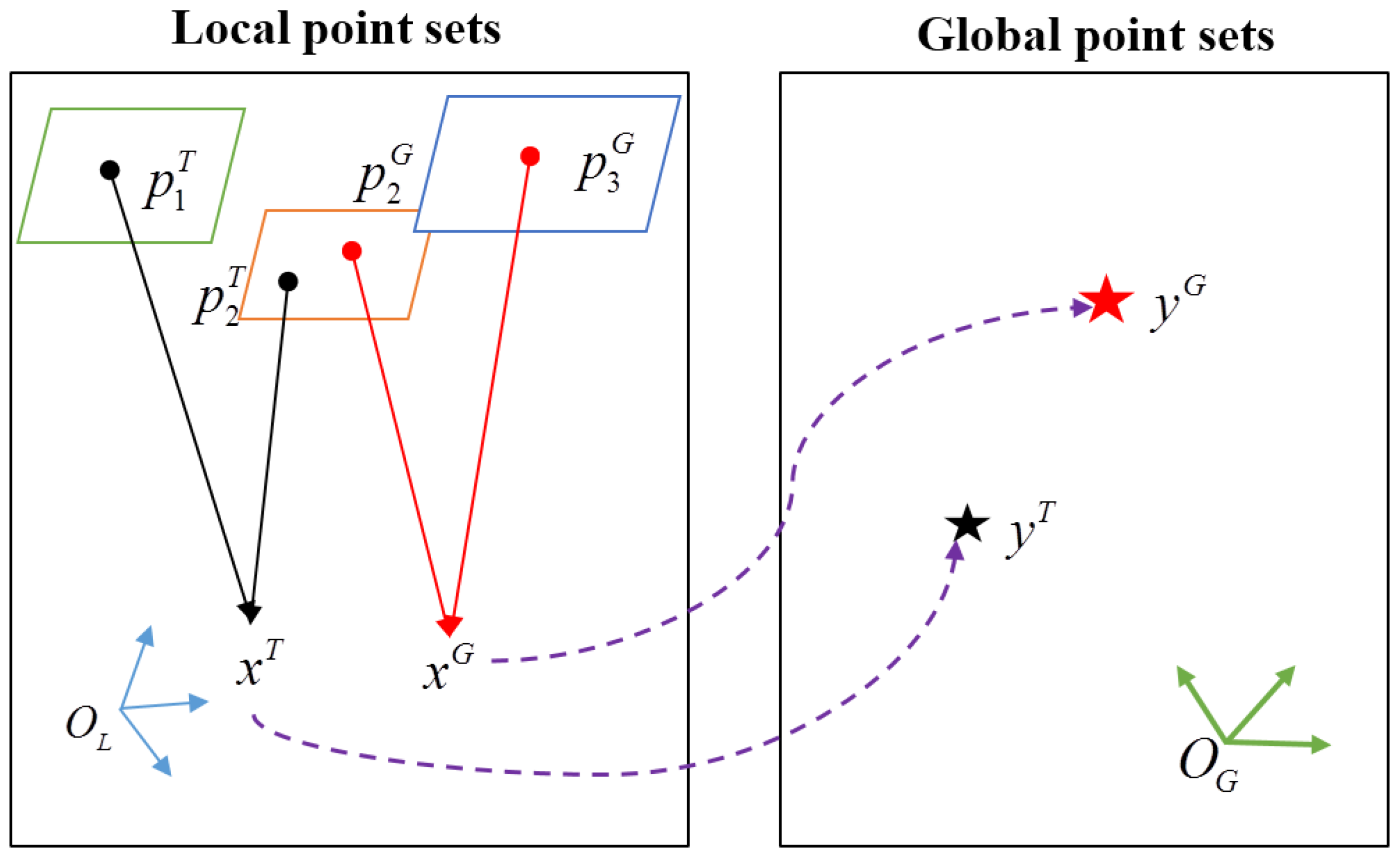

3.1. Absolute Orientation Based on Various Sets of Similarity Transformation Parameters

In the classical method, the set of seven similarity transformation parameters, which uniquely describes the transformation between two point sets in two coordinate systems, can be estimated when the GCPs are available in both the local and global coordinate systems. In this section, for each TP $X_k^{l}$, we introduce a new absolute orientation method to obtain the transformed point $X_k^{g}$ using an individual set of similarity transformation parameters $\{s_k, R_k, T_k\}$, as shown here:

$$X_k^{g} = s_k R_k X_k^{l} + T_k \qquad (7)$$

where $s_k$, $R_k$ and $T_k$ represent the computed scaling factor, rotation matrix and translation vector used to transform TP $X_k^{l}$ into $X_k^{g}$, respectively. The calculated transformation parameters are significantly different for different TPs. For the pair of points $(X_k^{l}, X_k^{g})$, the set of similarity transformation parameters is expressed by $\{s_k, R_k, T_k\}$ instead of $\{s, R, T\}$, and $\{s_k, R_k, T_k\}$ should be different for different pairs of points. Supposing that there are $n$ TPs to be transformed, the number of sets of transformation parameters calculated should be $n$. In other words, $k$ in $\{s_k, R_k, T_k\}$ ranges from one to $n$.
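As a minimal sketch of this per-point idea, the following Python snippet applies a separate parameter set to each TP. The function names (`apply_similarity`, `rotation_z`) and all numeric values are illustrative assumptions, not taken from the paper; in practice each parameter set would come from the weighted estimation described in this section.

```python
import numpy as np

def apply_similarity(point, scale, rotation, translation):
    """Apply a similarity transformation: scale * rotation @ point + translation."""
    return scale * rotation @ np.asarray(point, dtype=float) + translation

def rotation_z(angle_rad):
    """Rotation matrix about the z-axis (used only to build example data)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Two hypothetical TPs in the local system (n = 2).
local_tps = np.array([[1.0, 0.0, 0.0],
                      [0.0, 2.0, 0.0]])

# One hypothetical parameter set (scale, rotation, translation) per TP.
params = [
    (2.0, rotation_z(np.pi / 2), np.array([1.0, 1.0, 1.0])),
    (1.0, np.eye(3), np.array([0.0, 0.0, 5.0])),
]

# Each TP is transformed with its own parameter set.
global_tps = np.array([apply_similarity(p, *prm)
                       for p, prm in zip(local_tps, params)])
```

The only structural difference from the classical method is that `params` holds one entry per TP rather than a single shared set.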

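To accurately transform a TP $X_k^{l}$ in the local coordinate system to the global coordinate system, we should consider the influence of the distances between $X_k^{l}$ and the GCPs. Thus, we first exchange the local and global points in Equation (7) to obtain the new observation function for each GCP pair $(X_i^{l}, X_i^{g})$ as follows:

$$X_i^{l} = s'_k R'_k X_i^{g} + T'_k \qquad (8)$$

where $s'_k$, $R'_k$ and $T'_k$ are the scaling factor, rotation matrix and translation vector used to transform $X_i^{g}$ into $X_i^{l}$. The GCPs close to $X_k^{l}$ should play a more important role in determining the global point of $X_k^{l}$. Thus, in contrast to the least squares problem in (3), a least squares problem with weighting matrices is constructed to find the optimal set of similarity transformation parameters as follows:

$$\{s'_k, R'_k, T'_k\} = \arg\min \sum_{i=1}^{m} e_i^{T} W_{ik}\, e_i, \quad e_i = X_i^{l} - \left(s'_k R'_k X_i^{g} + T'_k\right) \qquad (9)$$

where $m$ is the number of GCP pairs and $W_{ik}$ is the weighting matrix of the $i$-th GCP with respect to the $k$-th TP.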

When the set of transformation parameters in Equation (8) is computed, $\{s_k, R_k, T_k\}$ for $X_k^{l}$ can be calculated using:

$$s_k = \frac{1}{s'_k}, \quad R_k = {R'_k}^{T}, \quad T_k = -\frac{1}{s'_k}\, {R'_k}^{T}\, T'_k \qquad (10)$$

The weighting matrix $W_{ik}$ would normally be used to describe the uncertainty of the measurement $X_i^{l}$. In the classical method, this weighting matrix is set as the identity matrix, namely $W_{ik} = I$ ($I$ denotes the identity matrix). For the least squares problem in Equation (9), the weighting matrix is instead exploited to describe the relevance between the points $X_i^{l}$ and $X_k^{l}$ in space, rather than the uncertainty of $X_i^{l}$. The weighting matrix is calculated from distance kernel functions, which are functions of the distance between $X_i^{l}$ and $X_k^{l}$ in the local coordinate system.
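The two ingredients of this paragraph, inverting a similarity transformation and deriving weights from distances, can be sketched as follows. The Gaussian kernel and its `bandwidth` parameter are assumptions chosen for illustration (the text only states that the weights come from distance kernel functions), and scalar weights stand in for the weighting matrices.

```python
import numpy as np

def invert_similarity(scale, rotation, translation):
    """Invert y = scale * rotation @ x + translation.

    Returns (1/scale, rotation^T, -(1/scale) * rotation^T @ translation).
    """
    inv_scale = 1.0 / scale
    inv_rotation = rotation.T
    inv_translation = -inv_scale * inv_rotation @ translation
    return inv_scale, inv_rotation, inv_translation

def gaussian_weights(tp_local, gcps_local, bandwidth):
    """Assumed Gaussian distance kernel: w_i = exp(-d_i^2 / (2 * bandwidth^2))."""
    d = np.linalg.norm(gcps_local - tp_local, axis=1)
    return np.exp(-d**2 / (2.0 * bandwidth**2))

# Round trip: transform a point, then map it back with the inverted parameters.
s = 2.0
R = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
T = np.array([1.0, 2.0, 3.0])
x = np.array([1.0, 1.0, 1.0])
y = s * R @ x + T
s_i, R_i, T_i = invert_similarity(s, R, T)
x_back = s_i * R_i @ y + T_i

# GCPs farther from the TP receive smaller weights.
gcps = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
weights = gaussian_weights(np.zeros(3), gcps, bandwidth=1.0)
```

With a kernel of this kind, a GCP coinciding with the TP gets weight one, and the weight decays smoothly as the distance grows.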

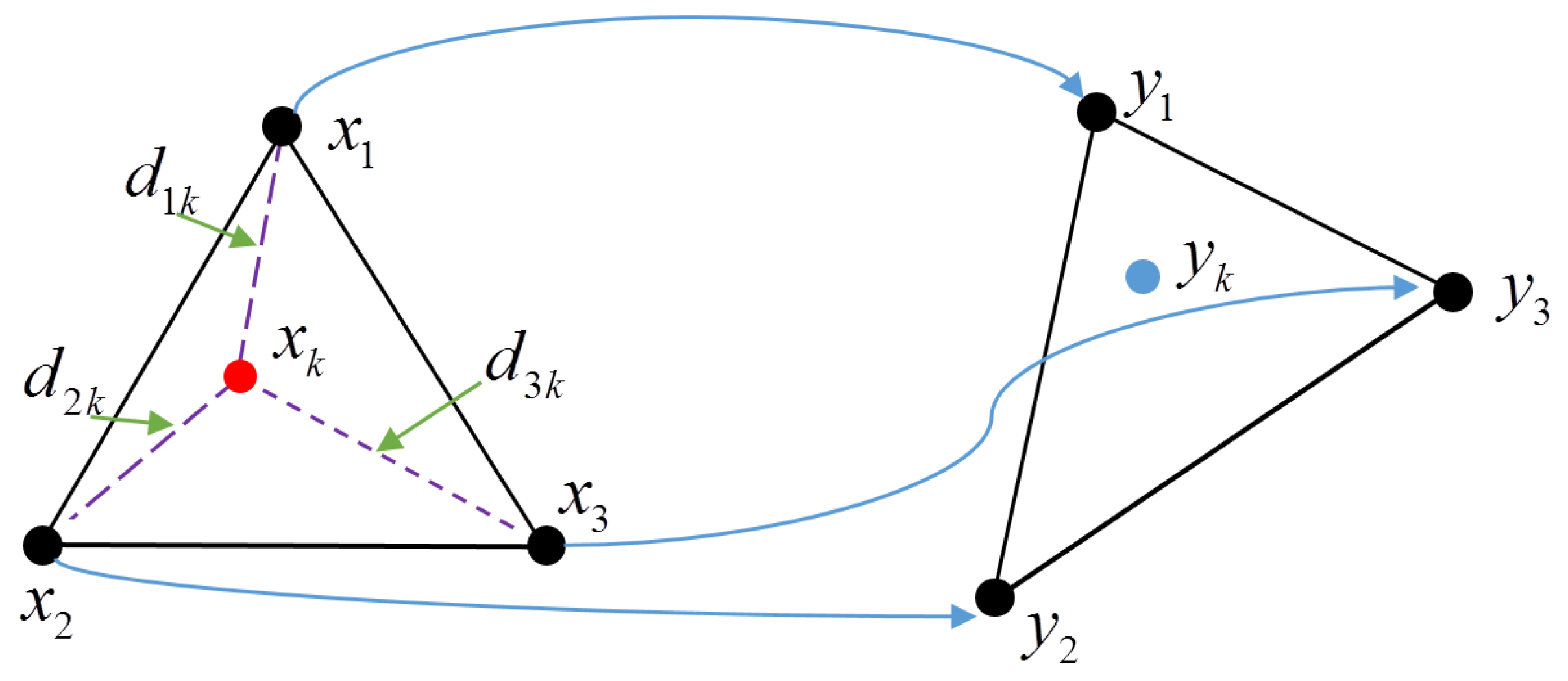

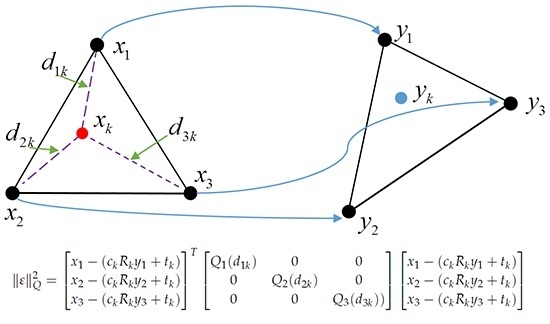

In the simple example illustrated in Figure 1, the red local point $X_k^{l}$ should be transformed to obtain the projected blue global point $X_k^{g}$ using Equation (7), given three pairs of GCPs drawn as black points in the two coordinate systems. The distances between the red point and the local points of the GCPs are $d_1$, $d_2$ and $d_3$. These distances make varying contributions to determining the global point $X_k^{g}$.

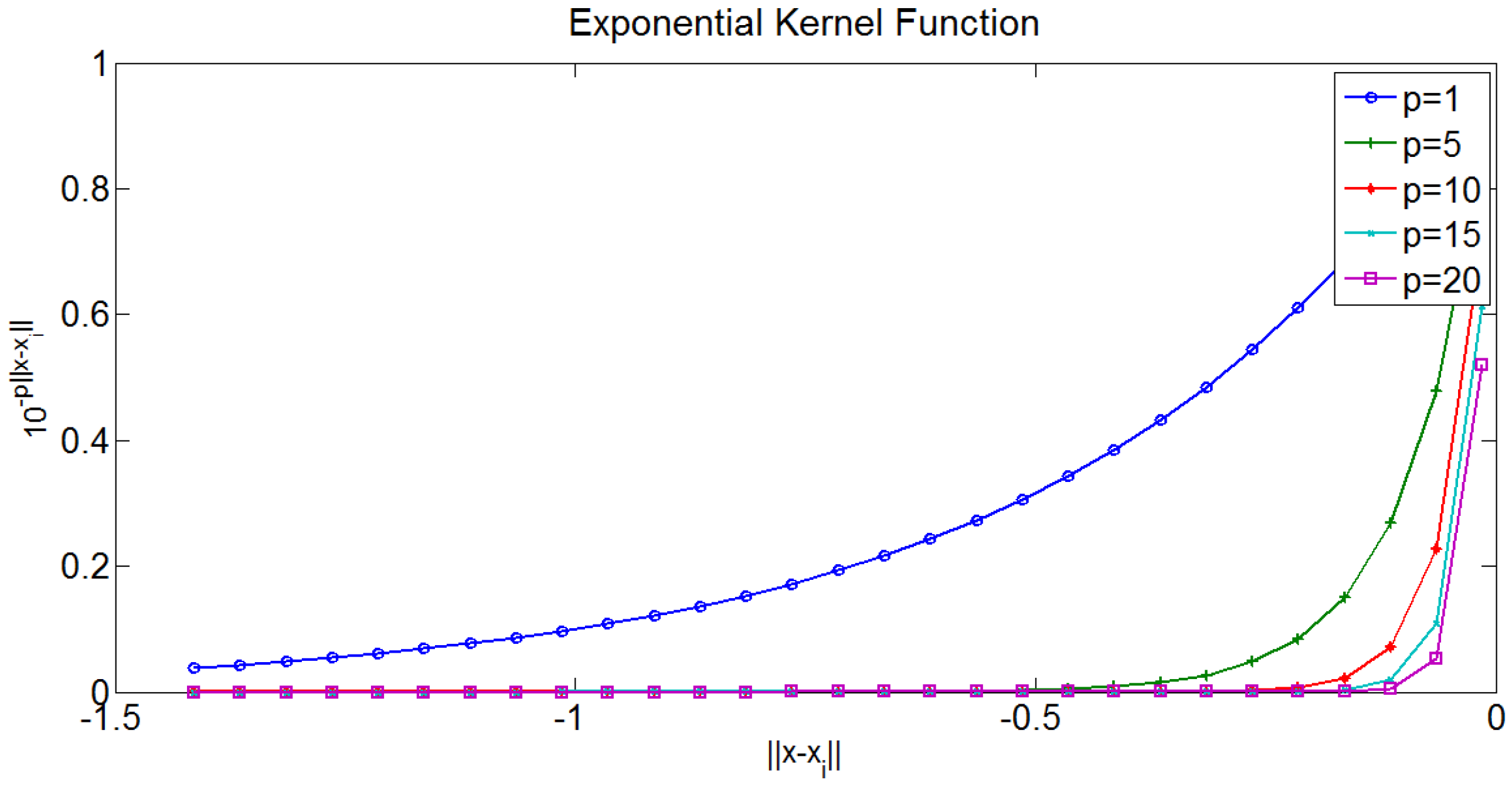

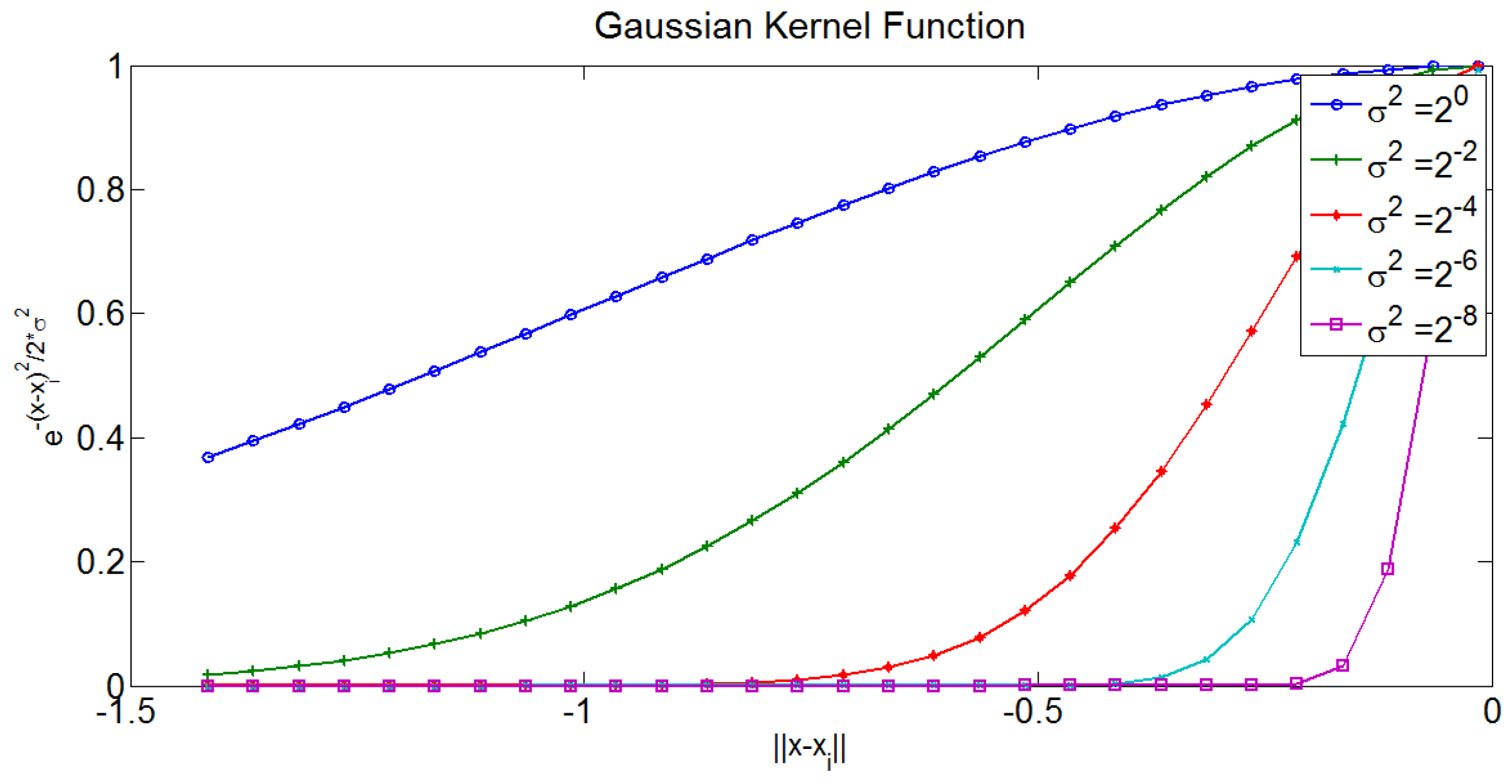

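The objective function of the least squares problem is:

$$F = \sum_{i=1}^{3} e_i^{T}\, W(d_i)\, e_i, \quad e_i = X_i^{l} - \left(s'_k R'_k X_i^{g} + T'_k\right) \qquad (11)$$

where $W(d_i)$, a $3 \times 3$ matrix, is a weighting matrix that is a function of the distance $d_i$, which serves as the variable of the distance kernel functions. After finding the best similarity transformation parameters $\{s'_k, R'_k, T'_k\}$, we can perform a similarity transformation to obtain $X_k^{g}$ using Equations (7) and (10).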
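To make the weighted estimation concrete, here is a hedged sketch for a single TP. It is not the iterative solver described in the text: for simplicity it assumes scalar weights (a weighting matrix of the form $w_i I$) and a Gaussian kernel, in which case a closed-form weighted Umeyama-style solution exists. The names `weighted_similarity`, `transform_tp` and `bandwidth` are hypothetical.

```python
import numpy as np

def weighted_similarity(src, dst, weights):
    """Closed-form weighted fit of dst ~ s * R @ src + t (Umeyama-style)."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    mu_src, mu_dst = w @ src, w @ dst            # weighted centroids
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = (w[:, None] * dst_c).T @ src_c         # 3x3 weighted cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0                           # guard against a reflection
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / np.sum(w * np.sum(src_c**2, axis=1))
    t = mu_dst - s * R @ mu_src
    return s, R, t

def transform_tp(tp_local, gcps_local, gcps_global, bandwidth):
    """Transform one TP with its own, distance-weighted parameter set."""
    d = np.linalg.norm(gcps_local - tp_local, axis=1)
    w = np.exp(-d**2 / (2.0 * bandwidth**2))     # assumed Gaussian kernel
    # Fit global -> local, so the residuals live in the local system.
    s_inv, R_inv, t_inv = weighted_similarity(gcps_global, gcps_local, w)
    # Invert the fitted parameters to obtain the local -> global set.
    s, R = 1.0 / s_inv, R_inv.T
    t = -s * R @ t_inv
    return s * R @ tp_local + t

# Synthetic, noise-free example data (illustrative values only).
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
s_true, t_true = 2.0, np.array([10.0, -5.0, 3.0])
gcps_local = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
gcps_global = s_true * gcps_local @ R_true.T + t_true
tp_local = np.array([0.5, 0.5, 0.5])
tp_global = transform_tp(tp_local, gcps_local, gcps_global, bandwidth=1.0)
```

On noise-free data the weights do not change the result; they only matter when the GCPs are inconsistent with a single global similarity, which is the situation the per-point parameter sets are meant to address.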

To avoid numerical instability when this non-linear problem is solved iteratively, data normalization is required. The normalization matrices of the two point sets in the two coordinate systems can be expressed as follows:

where

and

are the centres of two point sets and

and

are the variances of the two point sets. Furthermore, the centres and variances can be computed using the following:

After the original points are subject to data normalization, the maximum distance between a point and the centre is

. The normalization matrices are applied to two sets of points, and the normalized points are treated as new measurements to estimate the optimal similarity transformation parameters

as described by:

Now, the final similarity transformation parameters

, with which we can transform

, can be computed via multiplication between the normalization matrices and

, so that:
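The normalization and the final composition step can be sketched as follows. This is an illustration under stated assumptions, not the paper's exact formulation: it assumes isotropic normalization (shift to the centroid, then divide by the RMS distance to the centre) and represents each transformation as a 4 x 4 homogeneous matrix. The function names are hypothetical.

```python
import numpy as np

def normalization_matrix(points):
    """4x4 matrix that centres the points and scales them isotropically."""
    centre = points.mean(axis=0)
    # Assumed spread measure: RMS distance of the points to their centre.
    sigma = np.sqrt(np.mean(np.sum((points - centre)**2, axis=1)))
    N = np.eye(4)
    N[:3, :3] /= sigma
    N[:3, 3] = -centre / sigma
    return N

def similarity_matrix(scale, rotation, translation):
    """Pack (scale, rotation, translation) into a 4x4 homogeneous matrix."""
    M = np.eye(4)
    M[:3, :3] = scale * rotation
    M[:3, 3] = translation
    return M

def denormalize(M_norm, N_src, N_dst):
    """Undo the normalization: M = N_dst^{-1} @ M_norm @ N_src."""
    return np.linalg.inv(N_dst) @ M_norm @ N_src

# Example: dst = 3 * src + t. After normalization both sets coincide,
# so the transformation between the normalized sets is the identity.
src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
t = np.array([100.0, 200.0, 300.0])
dst = 3.0 * src + t

N_src, N_dst = normalization_matrix(src), normalization_matrix(dst)
M_final = denormalize(np.eye(4), N_src, N_dst)
```

Working on normalized coordinates keeps all quantities near unit magnitude, which is why large offsets such as the translation in this example do not affect the conditioning of the estimation.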

3.2. Initialize Similarity Transformation Parameters Using Affine Transformation

To solve the non-linear problem in Equation (9), an affine transformation, which approximates the similarity transformation, is used to provide the initialization. The affine transformation between a pair of points $(X_i^{g}, X_i^{l})$ can be defined in homogeneous form:

$$\begin{bmatrix} X_i^{l} \\ 1 \end{bmatrix} = \begin{bmatrix} A & a \\ 0^{T} & 1 \end{bmatrix} \begin{bmatrix} X_i^{g} \\ 1 \end{bmatrix} \qquad (16)$$

where the $3 \times 3$ matrix $A$ and the $3 \times 1$ vector $a$ consist of twelve affine transformation parameters. To determine the best unknown parameters, Equation (16) can be expressed with another linear equation as a function of affine transformation parameters:

where

refers to the elements of the $i$-th row of matrix $A$. If more than four pairs of GCPs are available, a linear system can be constructed as follows:

to find the solution of Equation (17), where:

Then, the SVD algorithm can be adopted to solve the linear system:

and the affine transformation parameters can be obtained as:

where:

When the affine transformation parameters are known, the similarity transformation parameters can be calculated via:
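The initialization can be sketched in Python as follows. This is an illustration under assumptions rather than the paper's exact procedure: the affine parameters are estimated with `numpy.linalg.lstsq` (an SVD-based least squares solver), and the similarity parameters are then taken as the rotation nearest to $A$ (via SVD), the mean singular value as the scale, and $a$ as the translation. The function names are hypothetical.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares fit of dst ~ A @ src + a from at least four non-coplanar pairs."""
    src_h = np.hstack([src, np.ones((len(src), 1))])       # n x 4 homogeneous points
    params, *_ = np.linalg.lstsq(src_h, dst, rcond=None)   # 4 x 3 solution
    A = params[:3].T                                       # 3 x 3 linear part
    a = params[3]                                          # translation part
    return A, a

def similarity_from_affine(A, a):
    """Approximate an affine map by a similarity (assumed extraction rule)."""
    U, S, Vt = np.linalg.svd(A)
    D = np.eye(3)
    if np.linalg.det(U @ Vt) < 0:
        D[2, 2] = -1.0                  # keep a proper rotation (det = +1)
    R = U @ D @ Vt                      # rotation nearest to A
    s = np.trace(np.diag(S) @ D) / 3.0  # mean (signed) singular value
    return s, R, a

# Synthetic, noise-free example (illustrative values only).
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
s_true, t_true = 2.0, np.array([10.0, -5.0, 3.0])
src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]])
dst = s_true * src @ R_true.T + t_true

A, a = fit_affine(src, dst)
s0, R0, T0 = similarity_from_affine(A, a)
```

The resulting `s0`, `R0` and `T0` would serve only as starting values; the weighted non-linear problem is still refined afterwards.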