1. Introduction

Vegetation is a vital component of wetland ecosystems and plays an important role in their environmental function [1]. The mapping and monitoring of vegetation status are key technical tasks required for the sustainable management of wetland ecosystems. In addition, detectable changes in vegetation structure and distribution in wetlands may be indicators of system change, which may be natural or the result of degradation. Satellite multispectral imagery can be used to detect the spectral characteristics of features on the Earth’s surface, which in turn can be linked to biophysical parameters of the region under investigation. Remote sensing has been a popular tool for mapping wetlands [2,3] and has advantages over field-based techniques, which can be resource intensive and problematic when the study area is remote and hazardous.

There have been a number of recent reviews of remote sensing of wetlands [2,4,5,6]. Remote sensing examples show that Landsat imagery has been used to successfully map many wetlands across the planet [7,8,9]. Aerial photography has been used to map wetlands with success and, despite the limited spectral information, is the preferred option for some researchers over moderate resolution satellite imagery such as Landsat. The finer spatial resolution of aerial photography enables the detection of features and classification of a large number of classes [5,10,11]. Synthetic aperture radar (SAR) data has also been used to map wetland vegetation and is often preferred due to the sensitivity of microwave energy to soil moisture and its ability to penetrate vegetative canopies [4]. L-band SAR data has been successful in discriminating inundated areas that are vegetated, such as Melaleuca swamps [12]. In addition, the fusion of optical and radar data has been shown to be effective in mapping long term vegetation and inundation dynamics of tropical floodplains [12].

The optical remote sensing of wetland vegetation can be problematic. For example, medium spatial resolution (MSR) imagery (with pixels with a ground sample distance (GSD) of 10–30 m), such as Landsat TM data, has proven insufficient for discriminating vegetation species in detailed wetland environments [10,13,14]. According to Adam et al. [2], MSR imagery is spatially and spectrally too coarse to distinguish the fine ecological divisions and gradients between vegetation units in wetland ecosystems. Boyden et al. [9] identified a number of challenges for remote sensing of monsoonal wetland environments, mostly associated with the highly variable annual rainfall and subsequent variation in water extent and levels within the floodplain. The use of high spatial resolution (HSR) multispectral satellite data (GSD < 5 m) should address the issues raised by Adam et al. [2]. However, HSR data displays greater within-class spectral variability than MSR data and, consequently, it is more difficult to discriminate spectrally mixed land covers using per-pixel classifications.

To reduce the spurious classification of pixels in HSR imagery, geographical object-based image analysis (GEOBIA) methods are widely used [15,16], whereby imagery is initially segmented into homogeneous image objects that reflect spatial patterns in the imagery prior to classification. While the concepts of image segmentation and object classification have been around for several decades [17], the emergence of robust object-oriented approaches to the automated classification of satellite imagery appears to be one of the major advances in image processing in the last ten years [18]. GEOBIA has recently been identified as a paradigm [15] and is finding increasing popularity, particularly when applied to HSR satellite imagery [19]. Within HSR imagery, pixels more closely approximate these landscape objects or their components [20].

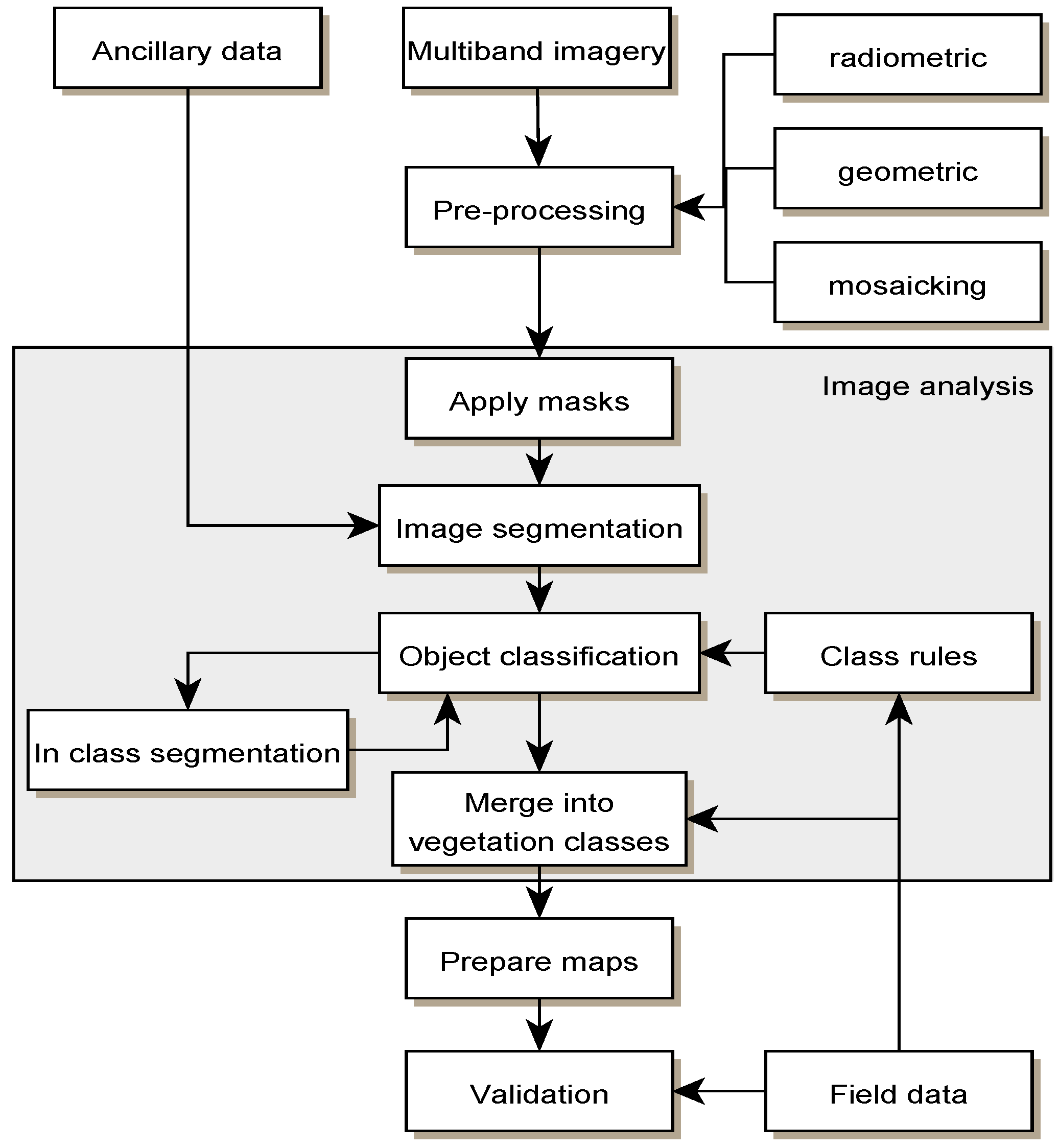

GEOBIA involves the partitioning of remotely sensed imagery into spectrally homogeneous image objects and using the spectral, spatial and topological features of the objects to assist with image classification. The classification of the image objects then uses not only the spectral features of the objects but also the topological and hierarchical relationships between the image objects [18,21]. Ancillary data, such as topographic information, may also be included to improve classification accuracies. The steps of segmentation and classification are typically iterated throughout an analysis, creating a step-wise approach to classification.
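The idea of describing image objects by spectral and topological features can be illustrated with a minimal sketch. It assumes a segmentation has already produced an integer label for each pixel; the function names, the tiny 3 × 3 label grid and the band values are all hypothetical and are not taken from the study.

```python
from collections import defaultdict

def object_mean_features(labels, bands):
    """Return {object_id: [mean value of each band]} for a segmented image.

    labels: 2-D list of integer object ids, one per pixel (hypothetical
            output of a segmentation step).
    bands:  list of 2-D lists, one per spectral band, same shape as labels.
    """
    sums = defaultdict(lambda: [0.0] * len(bands))
    counts = defaultdict(int)
    for r, row in enumerate(labels):
        for c, obj in enumerate(row):
            counts[obj] += 1
            for b, band in enumerate(bands):
                sums[obj][b] += band[r][c]
    return {obj: [s / counts[obj] for s in sums[obj]] for obj in counts}

def object_neighbours(labels):
    """Return {object_id: set of object ids sharing a pixel edge with it}."""
    nbrs = defaultdict(set)
    n_rows, n_cols = len(labels), len(labels[0])
    for r in range(n_rows):
        for c in range(n_cols):
            a = labels[r][c]
            nbrs[a]  # make sure isolated objects still get an entry
            # Only look down and right; adjacency is recorded both ways.
            for rr, cc in ((r + 1, c), (r, c + 1)):
                if rr < n_rows and cc < n_cols and labels[rr][cc] != a:
                    nbrs[a].add(labels[rr][cc])
                    nbrs[labels[rr][cc]].add(a)
    return dict(nbrs)

# Invented example: three objects, two bands.
labels = [[1, 1, 2],
          [1, 2, 2],
          [3, 3, 2]]
red = [[10, 12, 40],
       [14, 42, 44],
       [5, 7, 46]]
nir = [[50, 54, 20],
       [52, 22, 24],
       [90, 94, 26]]

features = object_mean_features(labels, [red, nir])
neighbours = object_neighbours(labels)
```

Here each object carries a spectral feature vector (mean red and near-infrared values) and a simple topological feature (its adjacent objects), which is the kind of information an object-based rule can draw on.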

Coincident with the increasing use of GEOBIA applications for classifying HSR imagery has been the utilisation of sophisticated algorithms from machine learning, such as Random Forests (RF), as methods of interpreting HSR data [22]. RF is an ensemble classifier that builds a forest of classification trees, using a different bootstrapped training sample and randomly selected set of predictor variables for each tree. Unweighted voting is then used to produce an overall prediction for each site in the sample [22,23]. RF has previously been used for high accuracy vegetation classification in a number of mapping applications, including forest communities [24], invasive species [25,26,27], and the estimation of the distribution of rare species [25]. It has also been shown to perform well in comparison to decision trees and other ensemble classifiers [28] and can capture complex, non-linear interactions among noisy, non-normal predictor variables [24,25]. In addition, RF provides measures of variable importance that can be used for further interpretation [24,25,28].
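The three ingredients described above (a bootstrapped training sample per tree, a random subset of predictors per tree, and unweighted voting) can be shown in a toy sketch. This is not a full Random Forests implementation: each "tree" is reduced to a single-split stump to keep the example short, and the training values, feature meanings and function names are invented for illustration.

```python
import random
from collections import Counter

def majority(labels):
    """Most frequent label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def fit_stump(X, y, features):
    """Pick the (feature, threshold) split with the fewest training errors."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            l_lab, r_lab = majority(left), majority(right)
            errors = (sum(lab != l_lab for lab in left)
                      + sum(lab != r_lab for lab in right))
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no usable split: always predict the majority class
        lab = majority(y)
        return (features[0], float("inf"), lab, lab)
    return best[1:]

def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    """Train n_trees stumps, each on its own bootstrap sample and feature subset."""
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]        # bootstrap sample
        feats = rng.sample(range(len(X[0])), n_features)  # random predictors
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest

def predict(forest, row):
    """Unweighted vote across all stumps."""
    return majority([l if row[f] <= t else r for f, t, l, r in forest])

# Invented training objects: (near-infrared reflectance, canopy height in m).
X = [(0.30, 0.2), (0.32, 0.4), (0.35, 0.3), (0.33, 0.5),
     (0.50, 8.0), (0.55, 10.0), (0.52, 12.0), (0.58, 9.0)]
y = ["grass"] * 4 + ["tree"] * 4

forest = fit_forest(X, y)
```

In practice a library implementation with fully grown trees would be used; the sketch only makes the sampling and voting steps explicit.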

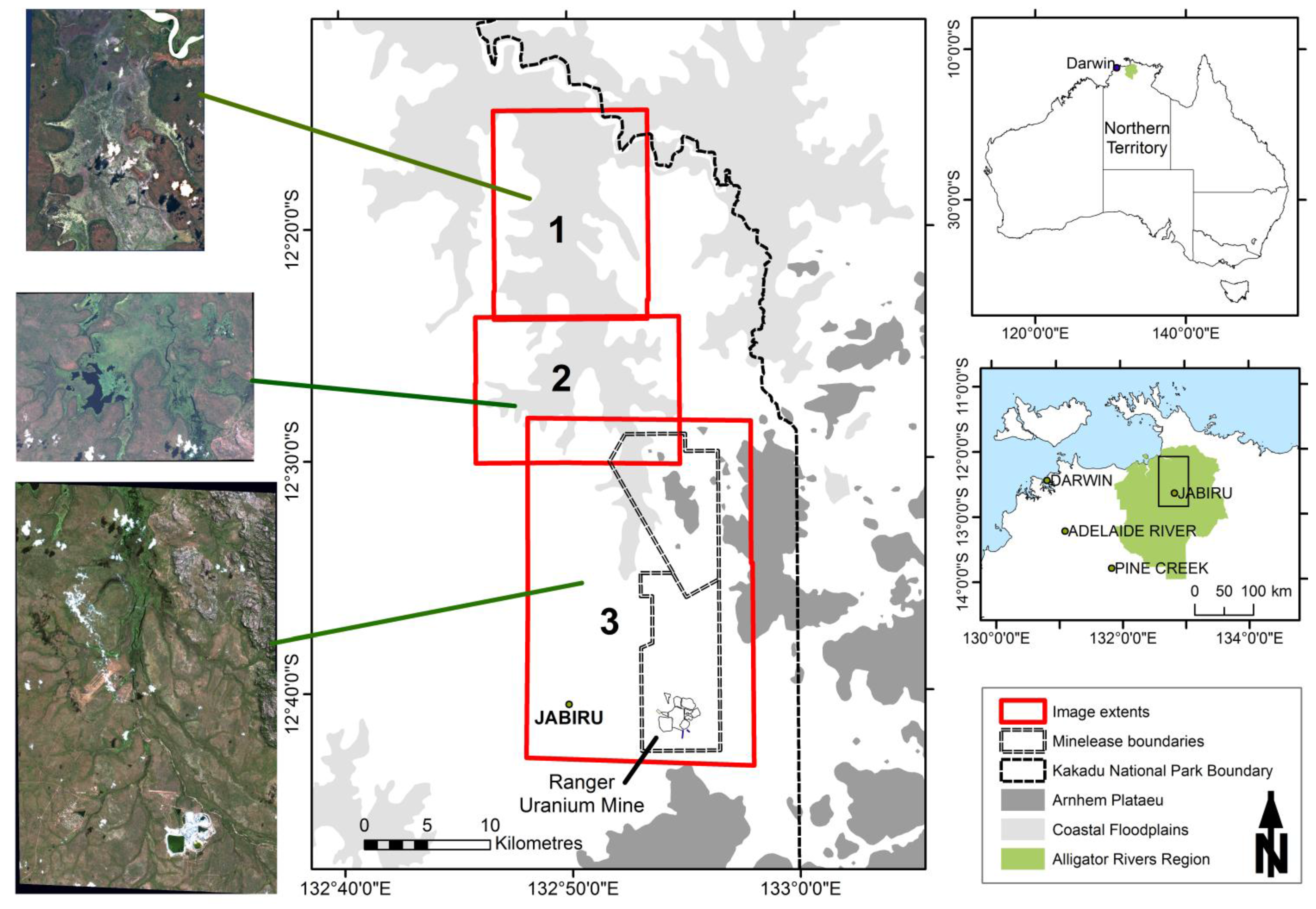

According to the criteria of the Ramsar Wetlands Convention, the wetlands within Kakadu National Park (KNP), Northern Australia, have been designated as internationally important [29]. The wetlands, including the floodplain within the Magela Creek catchment, are significant not only in their biogeographical context, but also for the diversity of plant communities [29] and as habitat refuges for abundant and diverse waterbird populations [30,31]. KNP’s World Heritage listing in part refers to the natural heritage value of the diversity and endemism of the wetland vegetation [29]. Current landscape level ecological risks within the region are identified as weeds, feral animals and unmanaged wildfire [32]. In addition, the floodplain is a downstream receiving environment for Ranger Uranium Mine.

The spatial distribution of a number of the vegetation communities is annually dynamic, although the reasons for the dynamics are not fully understood. The annual change has been observed as a naturally occurring phenomenon and, despite the importance of these wetlands, there has been little research into the dynamics [29]. Finlayson et al. [33] have postulated that the major determinant in the composition of flora was the duration and period of inundation, with lesser contributions from other factors such as water flow velocity and depth.

Mapping of the communities within the floodplain at the appropriate spatial and temporal scale can provide information to determine the drivers of the dynamics and establish a baseline for natural variability, which will be useful for monitoring this offsite environment when rehabilitation of Ranger Uranium Mine takes place. Vegetation community mapping of the floodplain will also inform ecological risk assessment that forms a basis for park management [32].

The aim of this project is to develop and test the utility of a decision-based methodology to accurately classify the vegetation communities of the Magela Creek floodplain using high spatial resolution multispectral satellite imagery and LiDAR-derived ancillary data. The procedure involves the application of GEOBIA techniques to segment and classify the imagery and data. To test the performance of the decision-based method, the results are compared against a Random Forests classification of the same data.

4. Discussion

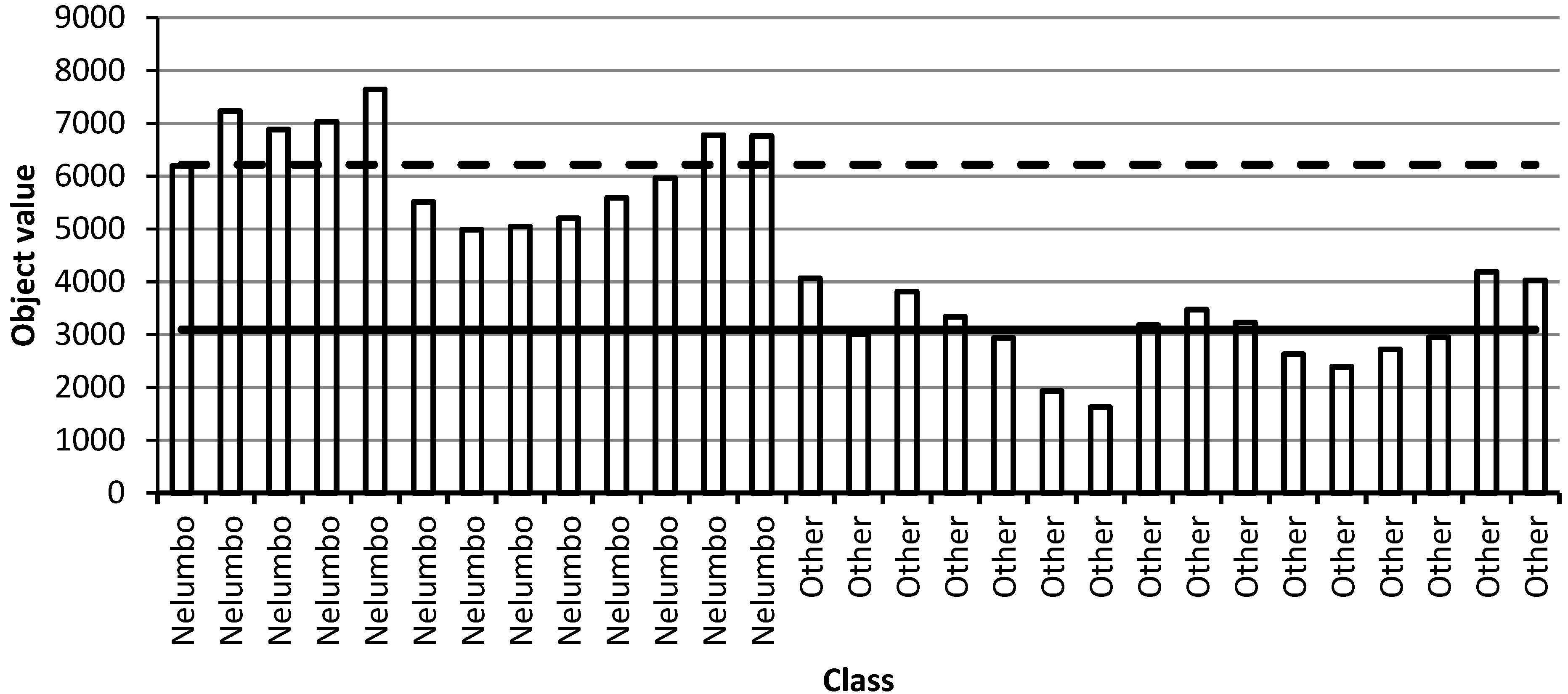

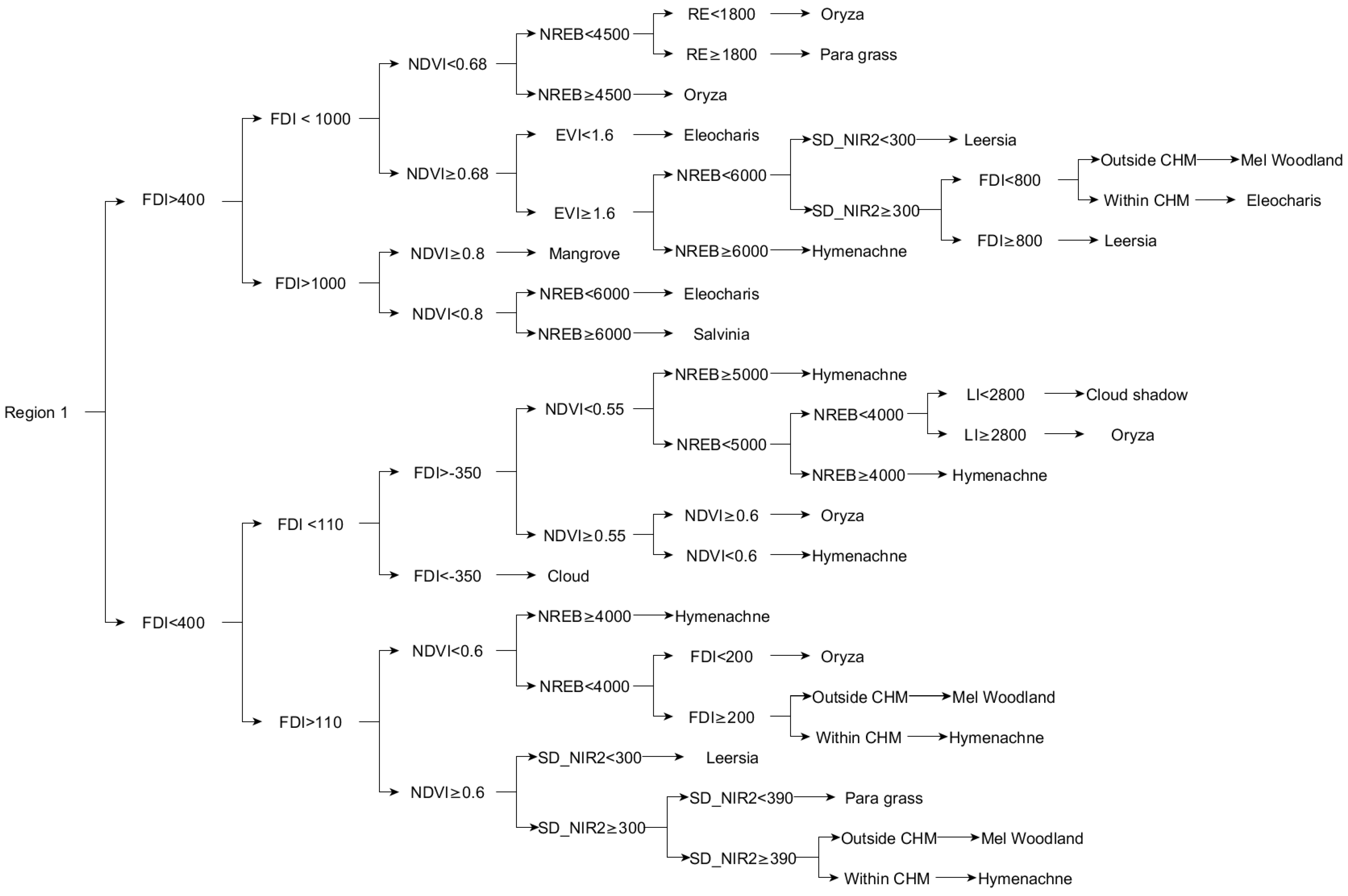

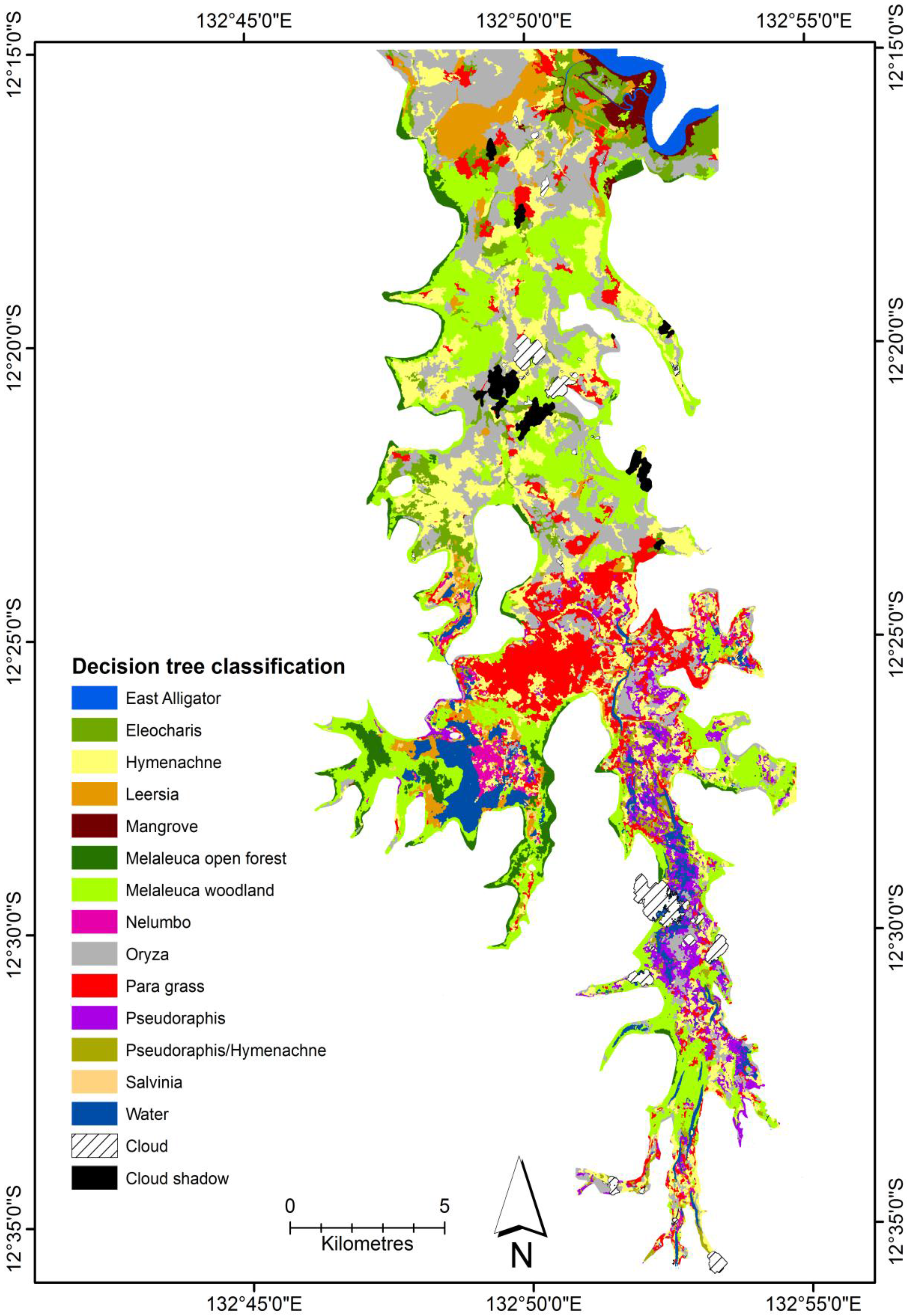

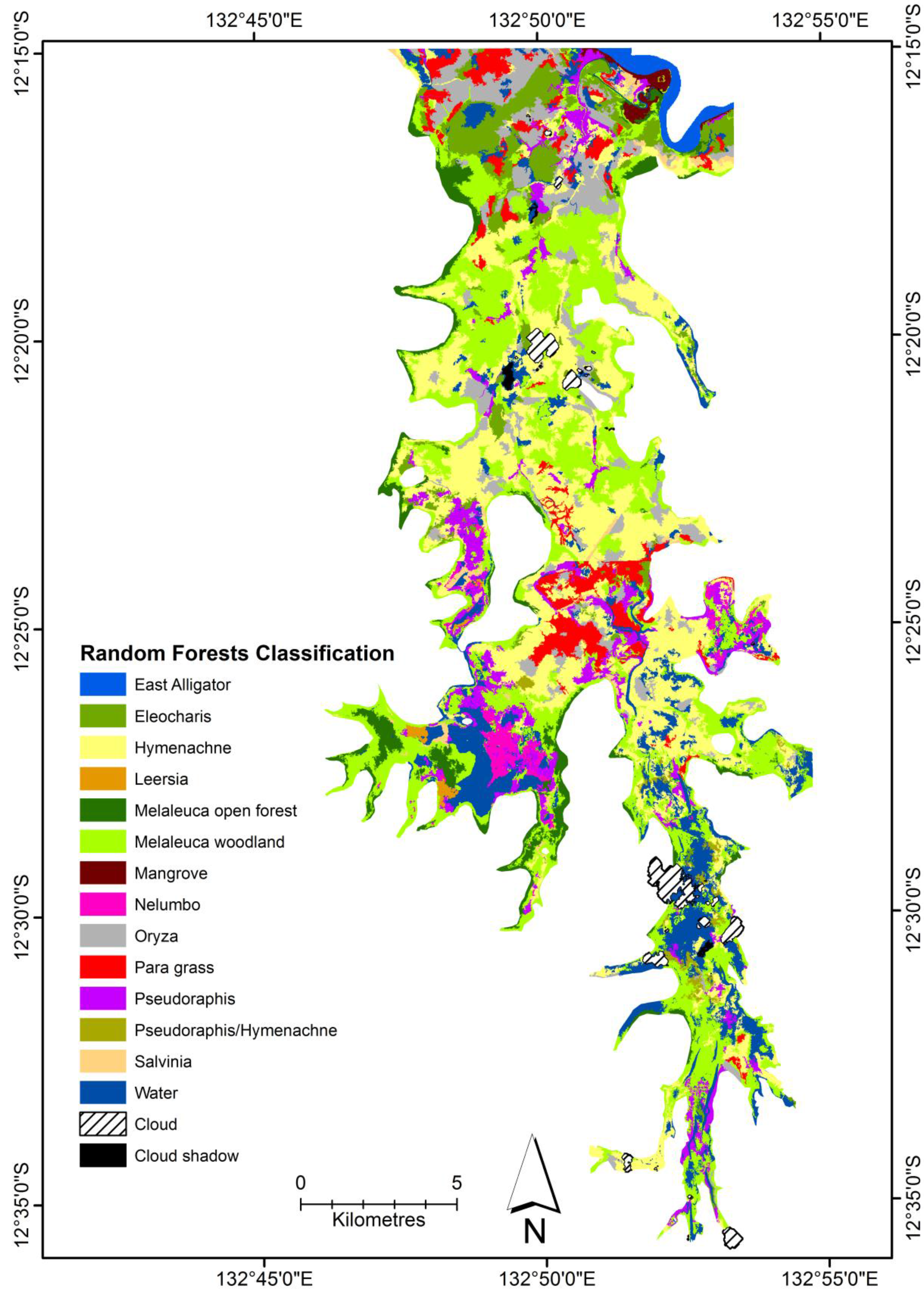

For this study, a decision tree (DT) classification was developed for classifying WorldView-2 imagery to map the vegetation on the Magela Creek floodplain. In addition, the results were compared to an RF classification of the same data. Overall, the DT classification clearly outperformed the RF classification, as shown in the statistical tests undertaken. In addition, most classes were more accurately classified in the DT classification. This is most likely because the RF classification was reliant purely on the statistical information from the samples selected, whereas in the DT classification the operator was able to select which feature to use and specify the threshold value to apply. Therefore, a manually derived decision tree does have merit, especially where there are classes that are difficult to map using purely statistical methods. The performance of the rule set relative to the state-of-the-art supervised classification method, Random Forests, suggests that the DT method may be a useful alternative for areas where wetland maps created using a supervised classification have less than satisfactory accuracies. The method is temporally transferable, having been used to map vegetation on the Magela Creek floodplain for the subsequent years 2011–2013 with only minor adjustments to thresholds to account for spectral variation due to slight differences in view angle and seasonality [47]. One way to test whether the method is spatially transferable would be to apply it to other wetlands in the region, which are quite different in composition [29].
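The difference between an operator-defined rule set and a statistically trained classifier can be made concrete with a small sketch. Everything here is hypothetical: the feature names (`ndvi`, `canopy_height_m`), the class names and the threshold values are placeholders chosen for illustration and are not the rules used in this study.

```python
# Hypothetical thresholds; an operator would tune these for each image date.
DEFAULT_THRESHOLDS = {
    "water_ndvi_max": 0.1,     # below this index value, treat as open water
    "tree_height_min_m": 3.0,  # at or above this canopy height, treat as trees
    "dense_ndvi_min": 0.6,     # at or above this index value, dense grass
}

def classify_object(features, thresholds=DEFAULT_THRESHOLDS):
    """Assign a class to one image object by walking hand-written rules in order."""
    if features["ndvi"] < thresholds["water_ndvi_max"]:
        return "open water"
    if features["canopy_height_m"] >= thresholds["tree_height_min_m"]:
        return "trees"
    if features["ndvi"] >= thresholds["dense_ndvi_min"]:
        return "dense grass"
    return "sparse cover"

# Reusing the same rules for another date with one threshold adjusted.
adjusted = dict(DEFAULT_THRESHOLDS, dense_ndvi_min=0.25)
example = {"ndvi": 0.3, "canopy_height_m": 0.5}
label_default = classify_object(example)
label_adjusted = classify_object(example, adjusted)
```

Keeping the thresholds separate from the rule logic mirrors the point made above: the structure of the tree can be carried over to another year while only the threshold values are adjusted.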

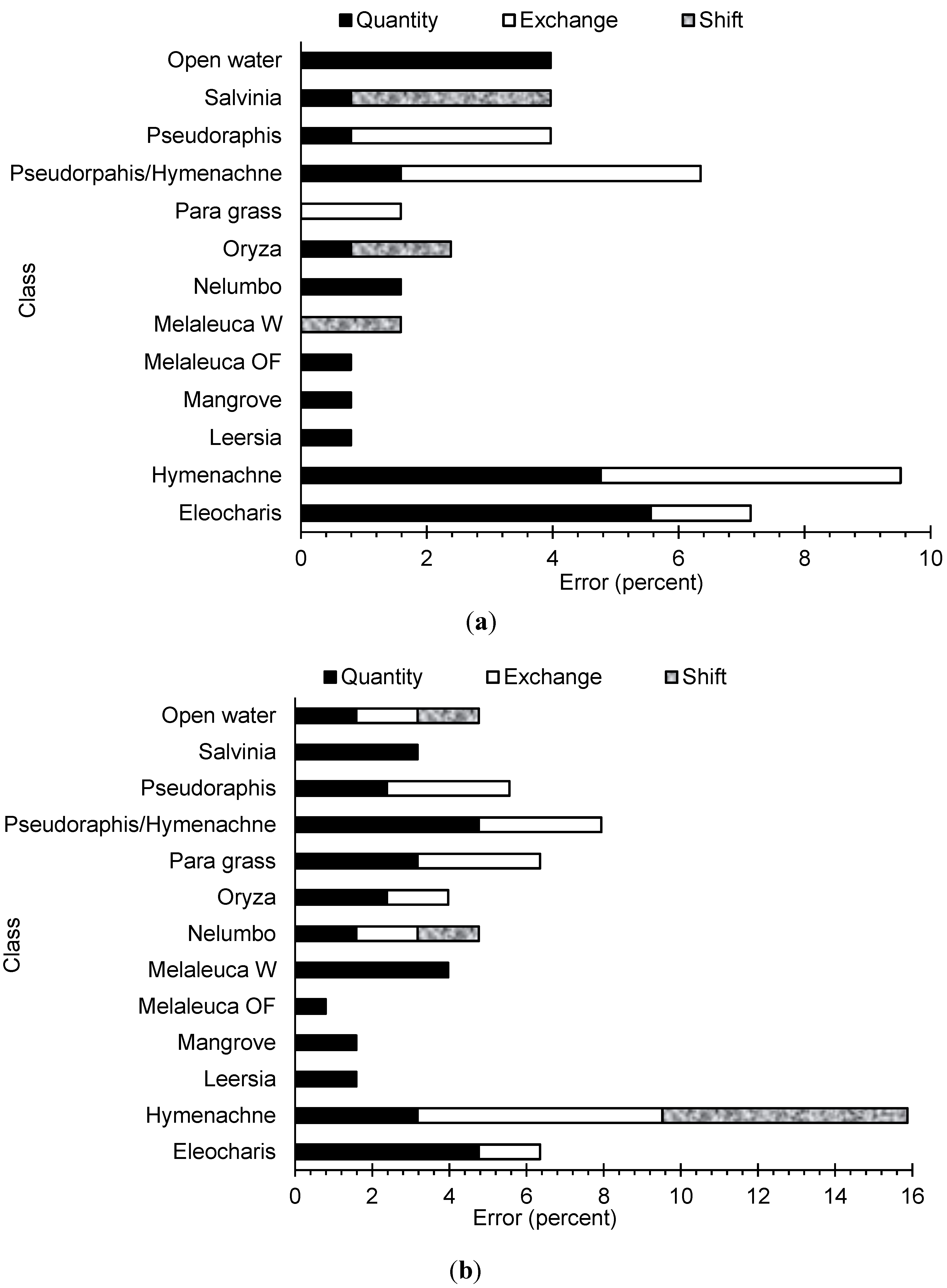

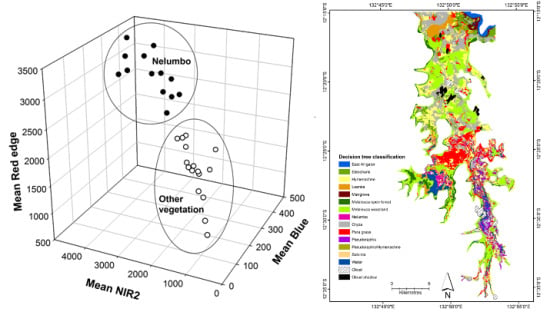

Both vegetation classification processes were able to distinguish between the spectrally and structurally distinct vegetation communities within the floodplain. The use of multiple indices and ratios made it possible to differentiate between classes that appeared spectrally similar. From the summaries of the confusion matrix, there were a number of instances where objects were either not detected or misclassified. There was some difficulty in distinguishing between vegetation classes that are spectrally similar, most notably between the classes dominated by grasses. Spectral similarity between different covers, namely Oryza and Para grass, has been noted previously [36]. Most of the uncertainty in the maps is due to the confusion between grass classes. By merging the three grass classes (Pseudoraphis, Pseudoraphis/Hymenachne and Hymenachne), the overall accuracies of both classifications were increased by over 7%. In addition, there were objects of the same class of vegetation cover that were spectrally different. This is more than likely due to differences in growth phases as a result of water availability. For example, the floodplain margin will dry out quicker, and grasses there will senesce or die earlier than those in the central floodplain. In addition, the inclusion and analysis of the CHM successfully differentiated between spectrally similar but structurally different communities. Initial analysis, not presented here, based on the decision tree without the CHM yielded overall accuracies 10% lower, due to confusion particularly between trees and patches of Eleocharis.
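The effect of merging confused classes on overall accuracy can be sketched as follows. The confusion matrix counts and class names below are invented purely to show the mechanics; they are not the study's results.

```python
def overall_accuracy(matrix):
    """Proportion of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total

def merge_classes(matrix, names, to_merge, merged_name):
    """Collapse the rows and columns of the classes in to_merge into one class."""
    new_names = [n for n in names if n not in to_merge] + [merged_name]
    index = {n: new_names.index(merged_name if n in to_merge else n) for n in names}
    k = len(new_names)
    merged = [[0] * k for _ in range(k)]
    for i, row_name in enumerate(names):
        for j, col_name in enumerate(names):
            merged[index[row_name]][index[col_name]] += matrix[i][j]
    return merged, new_names

# Invented counts: rows are mapped classes, columns are reference classes.
names = ["grass A", "grass B", "grass C", "trees"]
matrix = [[10, 3, 2, 0],
          [4, 8, 3, 0],
          [1, 2, 9, 1],
          [0, 0, 1, 16]]

merged, merged_names = merge_classes(
    matrix, names, {"grass A", "grass B", "grass C"}, "grasses")
before = overall_accuracy(matrix)   # 43 of 60 samples correct
after = overall_accuracy(merged)    # 58 of 60 samples correct
```

Confusion among the merged classes moves onto the diagonal, so overall accuracy can only stay the same or rise; the cost is a thematically coarser map.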

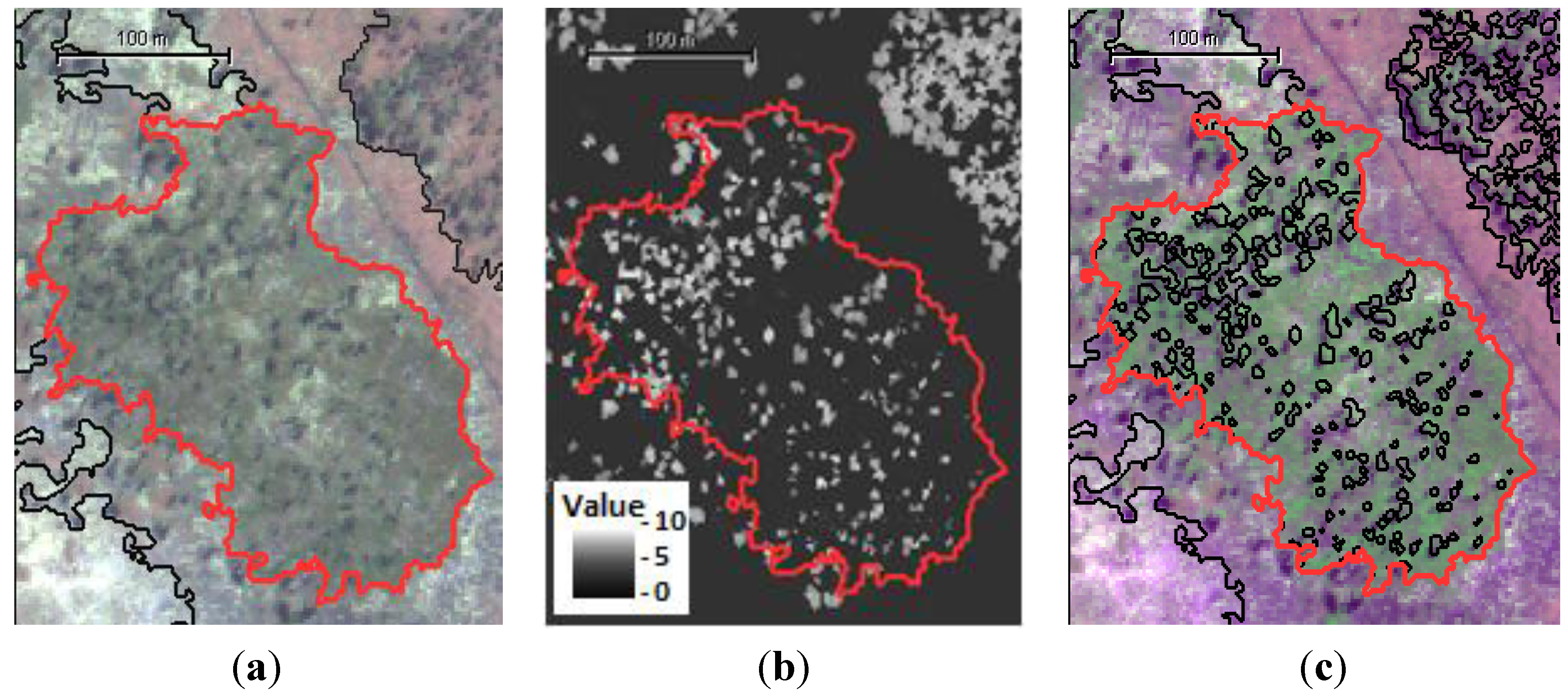

One outcome of interest from this research is the potential utility of the band ratio, NREB, for the classification of stands of emergent vegetation with floating leaves such as lilies. The use of the NREB ratio has been successful for mapping these stands on the floodplain over multiple dates using WV-2 imagery [47]. Further testing of this ratio could be conducted to assess its potential in this regard for wetlands in other regions.

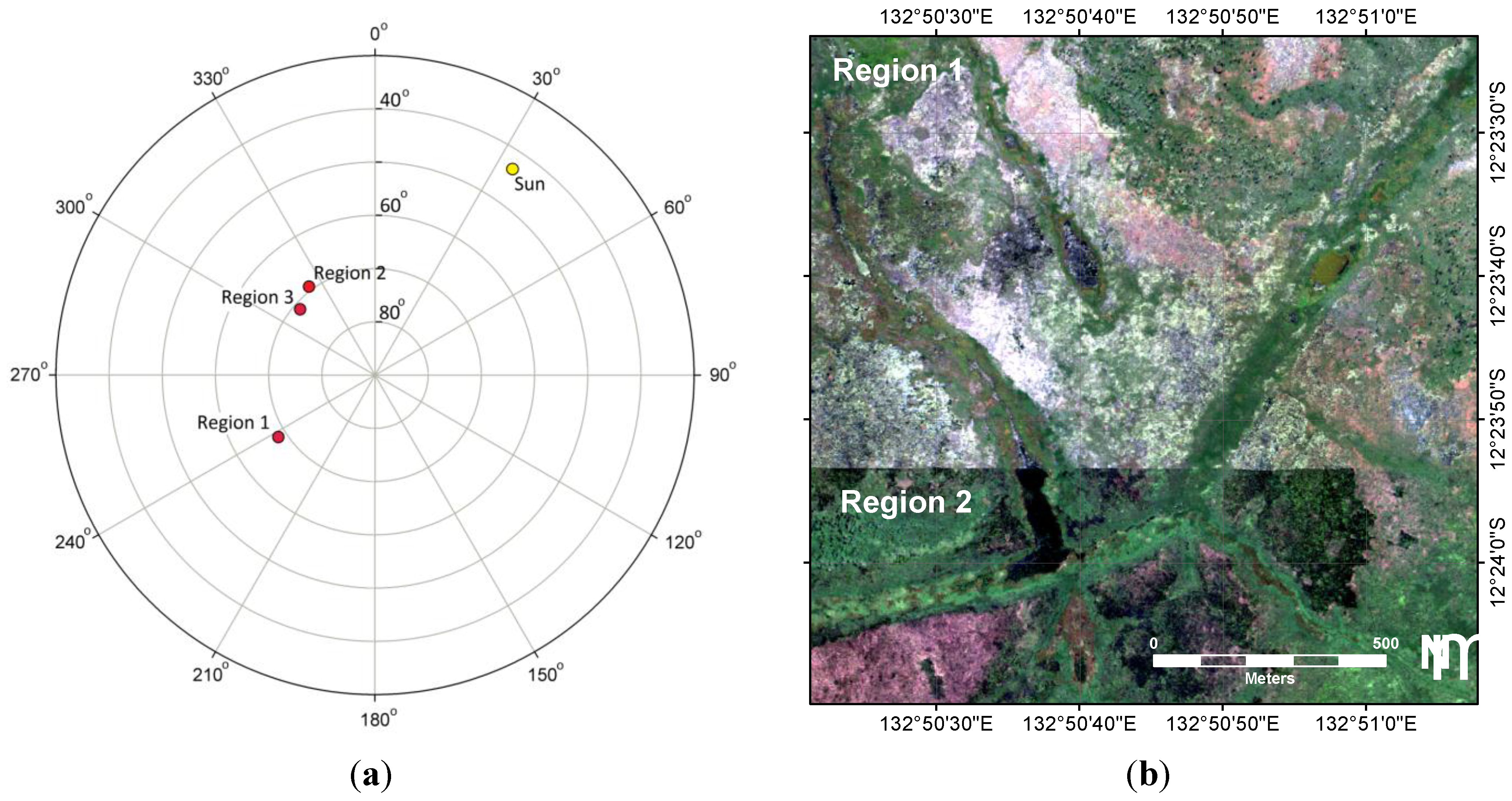

Due to sun glint, it was difficult to discern open water and floating vegetation within Region 1. In addition, due to changes in reflectance associated with the view angle, the class rulesets developed for Regions 2 and 3 did not satisfactorily detect the classes in Region 1. This required a modified set of rules and threshold values for Region 1. Although the FLAASH atmospheric correction algorithm accounts for view angle, it is difficult to correct for sun glint on floodplains. Ensuring the imagery is captured along a single path will prevent this issue from recurring.

Floodplain boundary delineation was useful to limit classification to the relevant vegetation communities and would not have been possible using only WV-2 imagery due to spectral similarities between floodplain and non-floodplain surfaces. The inclusion of DEM data was useful in providing an initial delineation of the floodplain boundary that could easily be manually adjusted. Both DEMs contain uncertainty in elevation, with the 30 m resolution DEM being too coarse and the 10 m DEM suffering from vegetation effects in the northern section. Consequently, a manual modification of the boundary was necessary based upon visual interpretation of the multispectral imagery.

The classification of HSR imagery does lead to an interesting problem associated with scale and resolution. The high resolution (GSD = 2 m) of the WV-2 imagery produced a map with a level of detail at which some small objects may contain only one individual of a species. For example, an object of 25 pixels classified as Melaleuca open forest might be a single tree. Objects of that scale may not be suitable for broader landscape analysis where community level classification is needed; to describe an individual organism of a single species, classes would need to be more specific. The hierarchical grouping of objects within GEOBIA would be one means to address this issue.
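The scale issue can be put in numbers with a short sketch. The 2 m GSD and the 25-pixel object come from the text above; the minimum mapping unit of 400 m² is a hypothetical value used only to show how small objects might be flagged for grouping.

```python
def object_area_m2(n_pixels, gsd_m):
    """Ground area of an image object: pixel count times the area of one pixel."""
    return n_pixels * gsd_m ** 2

def below_minimum_unit(n_pixels, gsd_m, min_area_m2):
    """True if the object is smaller than a chosen minimum mapping unit."""
    return object_area_m2(n_pixels, gsd_m) < min_area_m2

area = object_area_m2(25, 2.0)                   # 25 pixels at 2 m GSD
too_small = below_minimum_unit(25, 2.0, 400.0)   # 400 m^2 is a made-up threshold
```

A 25-pixel object at this resolution covers 100 m², an area consistent with a single tree crown rather than a vegetation community.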

The vegetation maps were representative of the vegetation that existed on the floodplain in May 2010. The seasonal variation that is known to occur [9,33], such as changes in community composition associated with the varying water level and soil moisture in the floodplain, has not been captured within this map. The amount and periodicity of rainfall vary annually, leading to different water levels and soil moisture availability, which means community distributions can vary greatly within and between years. Although previously undertaken for the 2006 dry season using a time-series of four sets of Landsat imagery [9], mapping vegetation at this temporal scale can be problematic. For a large interval of the year, optical satellite data of a suitable quality is unavailable due to either cloud cover or smoke haze and, as the year progresses, the area of fire-affected land cover increases. In addition, using HSR data from a commercial satellite means data collection of such temporal intensity would be cost prohibitive. Although it was not possible to directly compare the results of this study to the map of Boyden et al. [9] due to temporal, spatial and methodological differences, a couple of observations can be made. Due to the higher spatial resolution of the imagery, the map produced for this study had better delineation of class boundaries. This map, however, had less success at discriminating between the various grass classes because, unlike Boyden et al. [9], this study was not able to analyse the temporal changes in spectral responses of grass dominated land cover.

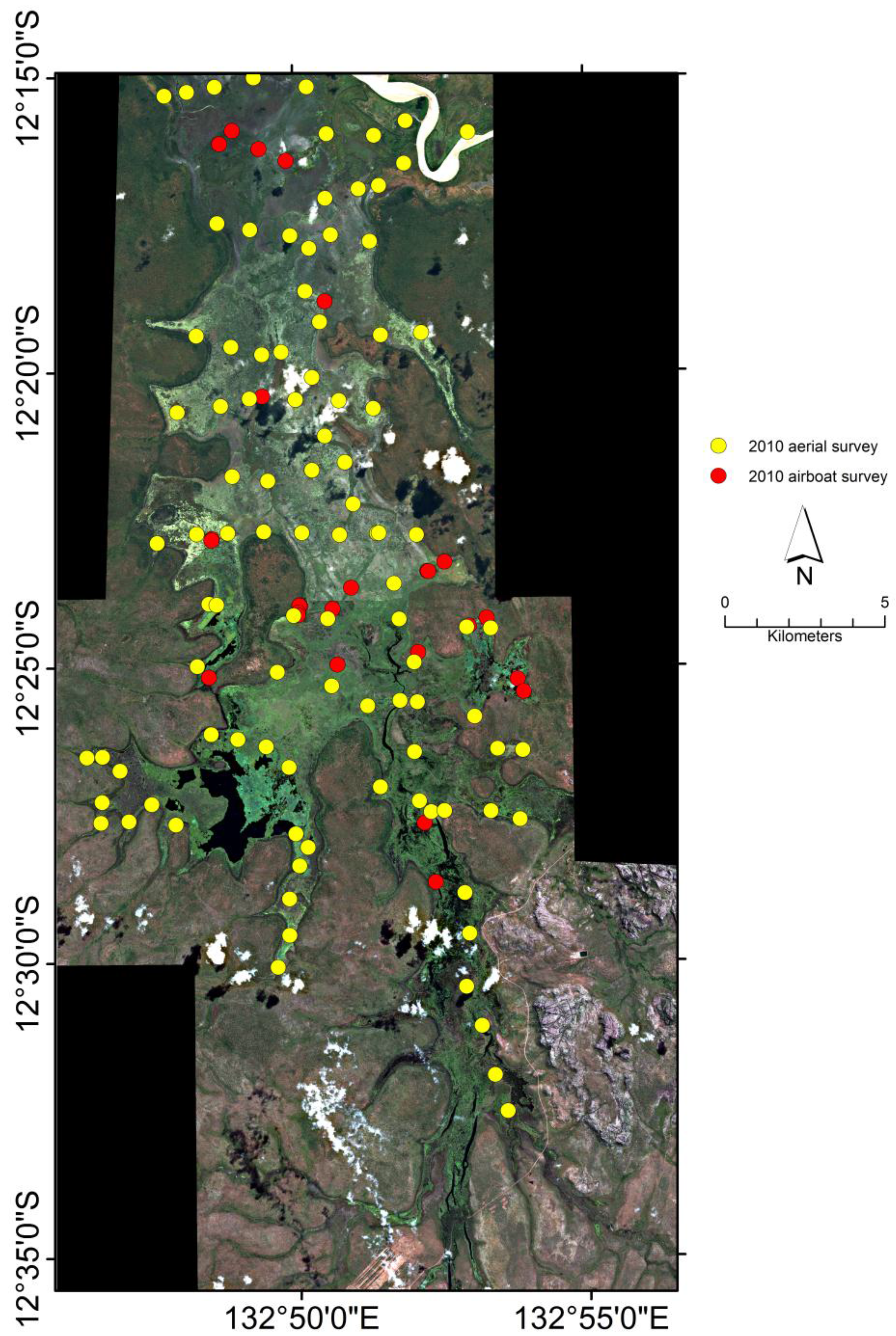

For reference data, it would be preferable to have more points to increase the rigour of the accuracy assessment, as several of the classes have limited reference data. However, gaining sufficient reference data is difficult using standard observation techniques due to accessibility and hazard issues associated with the remote environment, and resource limitations. For HSR image analysis, a number of studies have used visual analysis of the base imagery to provide sufficient reference data for ground truthing, providing what has been referred to as a pseudo accuracy assessment [53]. While this may be possible for easily discernible land covers, spectrally and texturally similar vegetation may again be difficult to differentiate, resulting in error, and bias may also be introduced by user influence [59]. New techniques for reference data collection using helicopter-based GPS-enabled videography and still photography at higher spatial resolutions than the satellite imagery have been trialled for subsequent data captures to enable an increased number of reference sites relative to field sampling effort.

5. Conclusions

This research tested the application of a decision tree-based GEOBIA for mapping floodplain vegetation using WorldView-2 high spatial resolution imagery and ancillary data, and produced a vegetation map of the Magela Creek floodplain for May 2010. Based on the confusion matrix built using the field reference data, the overall accuracy of the decision tree classification was 78%. Half the overall disagreement was quantity disagreement, with the remainder mostly being exchange; the majority of error was associated with confusion between grass-dominant classes that were spectrally similar but differed in species composition. The overall accuracy of the RF classification was 64%, with half the overall disagreement being quantity disagreement and the remainder mostly exchange. Again, most of the confusion was between the grass classes that were spectrally similar. Results of the z-test and McNemar’s test showed that there was a significant difference between the results of the DT and RF classifications and that, for this project, the DT method clearly outperformed the RF. These results suggest that the method may be useful for classifying wetlands that are difficult to map using supervised classification methods, such as RF.
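The accuracy measures named above can be sketched from first principles. The example assumes a square confusion matrix of raw sample counts (rows as mapped classes, columns as reference classes) and uses the common definition of quantity disagreement as half the summed absolute differences between row and column totals, with allocation disagreement as the remainder; the further split of allocation into exchange and shift is not shown. The McNemar statistic is given in its uncorrected z form, which is an assumption about the variant used. All counts are invented.

```python
import math

def disagreement(matrix):
    """Return (overall, quantity, allocation) disagreement as proportions."""
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    overall = 1 - sum(matrix[g][g] for g in range(k)) / total
    # Quantity: mismatch between how much of each class is mapped vs. referenced.
    quantity = sum(
        abs(sum(matrix[i][g] for i in range(k)) - sum(matrix[g]))
        for g in range(k)
    ) / (2 * total)
    return overall, quantity, overall - quantity

def mcnemar_z(only_a_correct, only_b_correct):
    """Uncorrected McNemar z statistic from the two discordant counts.

    only_a_correct: samples classifier A got right and B got wrong.
    only_b_correct: samples classifier B got right and A got wrong.
    """
    return (only_a_correct - only_b_correct) / math.sqrt(
        only_a_correct + only_b_correct)

# Invented three-class confusion matrix with 100 samples.
matrix = [[30, 5, 0],
          [10, 20, 5],
          [0, 5, 25]]
overall, quantity, allocation = disagreement(matrix)

# Invented discordant counts for two classifiers assessed on the same samples.
z = mcnemar_z(30, 10)
significant = abs(z) > 1.96  # two-sided test at the 5% level
```

For this invented matrix the overall disagreement is 0.25, of which 0.05 is quantity disagreement and 0.20 is allocation disagreement.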

The maps, however, are representative of a point in time and do not account for any temporal variability (either seasonal or annual) in the extent and distribution of the vegetation communities on the floodplain.

Due to the performance of the DT approach, the method described here (with the Pseudoraphis, Hymenachne and Pseudoraphis/Hymenachne classes merged into a single class) has been applied to mapping the floodplain vegetation over a time-series (2010–2013) to monitor the annual variation in distribution and extent of the communities and changes in open water [48]. This work forms an integral component of an operational landscape scale off-site monitoring program for the rehabilitation of Ranger Uranium Mine.