1. Introduction

Fractional vegetation cover (FVC) is widely used to describe vegetation quality and ecosystem changes and is a controlling factor in transpiration, photosynthesis and other terrestrial processes [1,2,3]. Estimating FVC through field measurements is critical because it provides a baseline for improving remote sensing algorithms and validating products.

Visual estimation, sampling [4], photography [5] and other techniques are commonly used in field measurements. Among these, photography with digital cameras is one of the most important methods. Analyzing digital images to calculate FVC is efficient and accurate in most circumstances [3,5,6,7,8,9,10,11]. The vegetated parts of an image can be identified from their physical, shape and color characteristics, among other features [6,7,8,12]. In general, these methods can be grouped into two classes: (1) classification and clustering methods, such as supervised and unsupervised classification [13,14] and object-based image analysis (OBIA) [15,16]; and (2) threshold-based methods that rely on a vegetation index, such as the color index of vegetation extraction (CIVE) [17], the excess green index (ExG) [18,19], excess green minus excess red (ExG−ExR) [20], and many other indices [11,21]. Both types of methods usually operate in specific color spaces. For example, the red-green-blue (RGB) and hue-saturation-intensity (HSI) color spaces are used in the mean-shift-based color segmentation method [12]. The Commission Internationale d'Eclairage L*a*b* (LAB) color space is used in the documented FVC estimation algorithms LABFVC [22] and LAB2 [23].
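The Python sketch below makes the threshold-based class concrete by computing three of the indices named above from an RGB image, using their commonly published forms:

- ExG = 2g − r − b and ExR = 1.4r − g, both on chromatic coordinates (r = R/(R+G+B), and so on).
- CIVE = 0.441R − 0.811G + 0.385B + 18.78745, on raw channels.

The sketch labels pixels with ExG − ExR > 0 as vegetation and returns FVC as the fraction of vegetation pixels. These formulas and the zero threshold are assumptions taken from the general literature, not necessarily the exact variants used in [17,18,19,20]. All function names are hypothetical. CIVE is only computed here, because it needs a separately chosen threshold.

```python
import numpy as np


def chromatic_coordinates(rgb):
    """Split an (H, W, 3) RGB array into chromatic coordinates r, g, b (r + g + b = 1)."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=2)
    total[total == 0] = 1.0  # pure-black pixels: avoid division by zero (r = g = b = 0)
    return rgb[..., 0] / total, rgb[..., 1] / total, rgb[..., 2] / total


def excess_green(rgb):
    """ExG = 2g - r - b on chromatic coordinates (assumed common definition)."""
    r, g, b = chromatic_coordinates(rgb)
    return 2.0 * g - r - b


def excess_red(rgb):
    """ExR = 1.4r - g on chromatic coordinates (assumed common definition)."""
    r, g, _ = chromatic_coordinates(rgb)
    return 1.4 * r - g


def cive(rgb):
    """CIVE = 0.441R - 0.811G + 0.385B + 18.78745 on raw channels; lower values are greener."""
    rgb = rgb.astype(np.float64)
    return 0.441 * rgb[..., 0] - 0.811 * rgb[..., 1] + 0.385 * rgb[..., 2] + 18.78745


def fvc_from_exg_minus_exr(rgb):
    """Label pixels with ExG - ExR > 0 as vegetation; FVC is the vegetation pixel fraction."""
    mask = (excess_green(rgb) - excess_red(rgb)) > 0.0
    return float(mask.mean()), mask


if __name__ == "__main__":
    green, soil = [40, 160, 40], [120, 90, 60]
    image = np.array([[green, soil], [soil, green]], dtype=np.uint8)
    fvc, mask = fvc_from_exg_minus_exr(image)
    print(fvc)  # 0.5: two of the four pixels are classified as vegetation
```

Chromatic coordinates divide out brightness, so a uniformly darkened green pixel keeps its index value in this idealized example. In real deep shadows, low signal and sensor noise can distort these ratios, which is one reason fixed thresholds struggle there.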

However, shadows must be addressed when FVC is extracted from a digital image. Shadows cast by vegetation increase the contrast in an image, alter the color in shaded areas and affect image analysis. Shadows occur not only on the soil but also inside the vegetation canopy [15].

Although a series of methods have been proposed, the shadow problem has not been fully solved. Visual interpretation using the supervised classification tool of commercial image processing software can accurately distinguish vegetation in shadows through human-computer interaction. However, this approach requires many manual steps and is therefore less automatic and efficient [13,14,16]. Manual steps can also introduce bias and inconsistency between observers [24]. Methods based on physical characteristics [15,16] and feature space analysis [12] have been proposed to solve the shadow problem, but they are time-consuming and unsuitable for real-time applications. Using artificial shelters to change the illumination conditions and reduce the contrast between sunlit and shaded areas can avoid the shadow effect in small areas [21,25]; however, shading a large area is difficult. Shadows that are extremely dark compared with sunlit leaves complicate the classification of shaded leaves by threshold-based methods and by certain methods based on physical characteristics [15,23,26]. Such extremely dark shadows are most likely to occur when photos of dense vegetation are taken on sunny days.

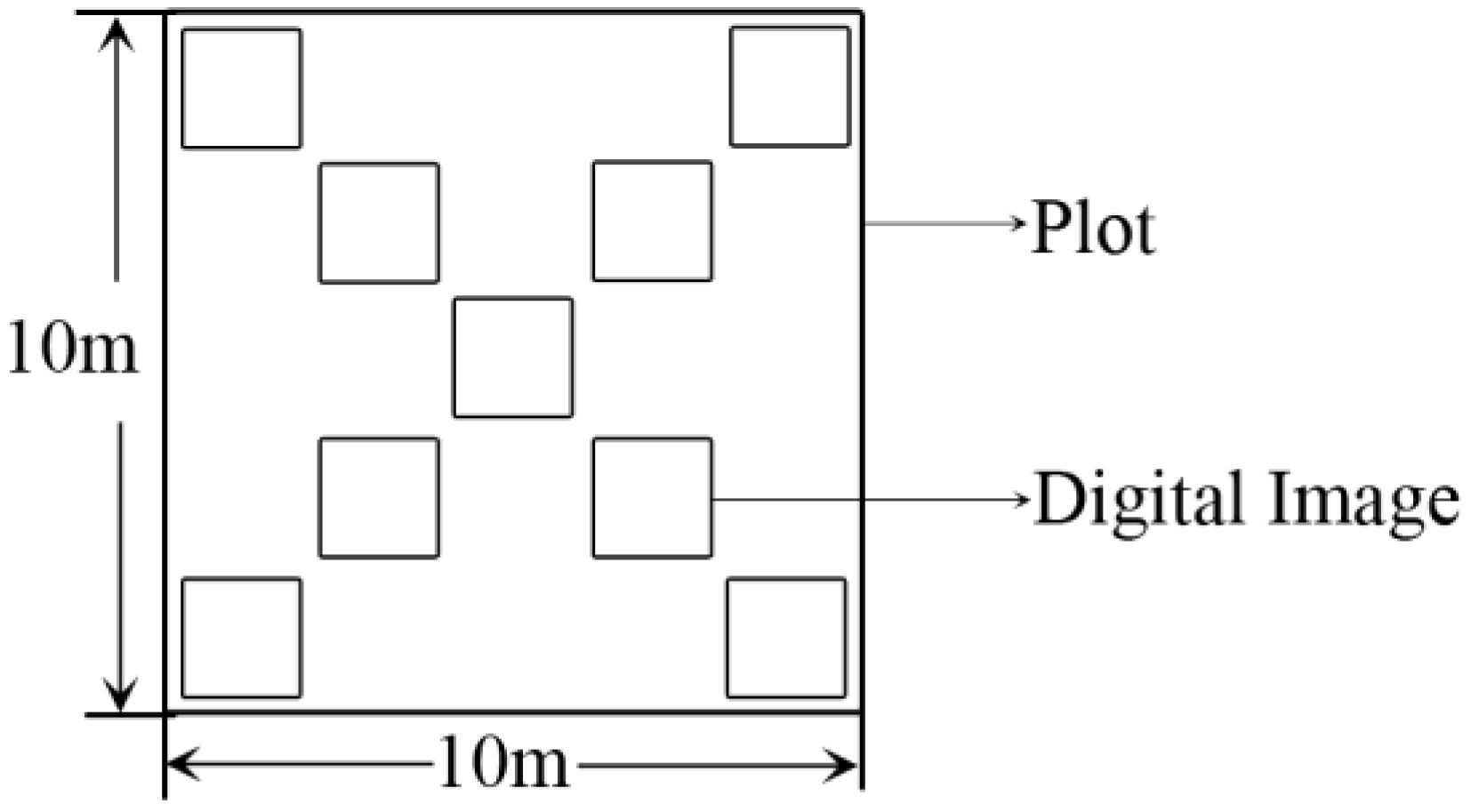

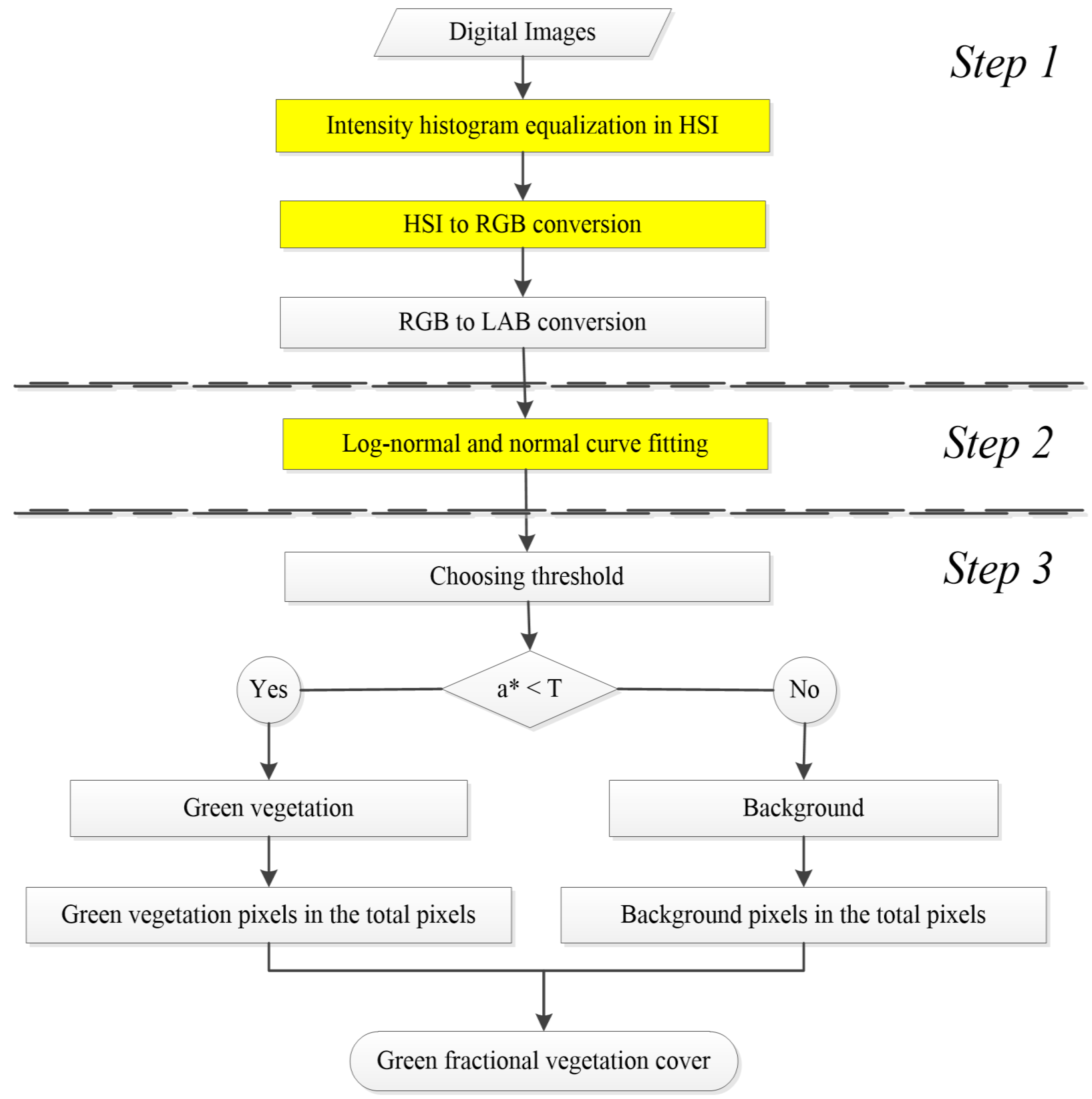

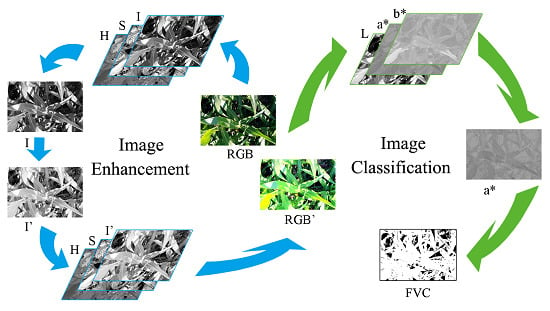

In this study, we propose a modified LABFVC algorithm that is shadow-resistant and can classify green vegetation with reasonable accuracy. The entire method is implemented in MATLAB (Matrix Laboratory; The MathWorks, USA) and extracts FVC automatically and efficiently. The accuracy of our method was evaluated using real and synthesized images.

5. Discussion

Shadows occur due to obstructions from terrain topography, cloud cover or dense vegetation and can cause errors in image classification. In this study, we proposed an automatic shadow-resistant FVC extraction method (SHAR-LABFVC) that can classify green vegetation efficiently and achieve stable results with reasonable accuracy.

SHAR-LABFVC was developed based on LABFVC, which was chosen because of its automaticity and efficiency [26]. SHAR-LABFVC automatically determines the threshold for classifying green vegetation and computes FVC from digital images. In contrast to supervised classification [13,14] and other image analysis approaches based on physical characteristics [15,16], neither LABFVC nor SHAR-LABFVC requires manual steps to process images.

Extracting FVC with LABFVC takes approximately 12 seconds per image. This is much faster than other automated image classification methods based on physical characteristics (PC-based) [16] and on feature space analysis (FSA-based) [12], although the timings were obtained on different hardware (Table 8). SHAR-LABFVC takes approximately five seconds per image and is therefore sufficiently efficient for real-time application.

Table 8.

Average computing time of the different methods.


| Approach | Time per image | Processor (clock speed) | Random-access memory (RAM) | Program/software |
|---|---|---|---|---|
| PC-based * | 15 min | - | - | eCognition 8.7 (Trimble Navigation Ltd., USA) |
| FSA-based * | 91.9 s | 1.2 GHz | 512 MB | VC++ |
| LABFVC | 12.0 s | 3.1 GHz | 4 GB | MATLAB |
| SHAR-LABFVC | 5.0 s | 3.1 GHz | 4 GB | MATLAB |

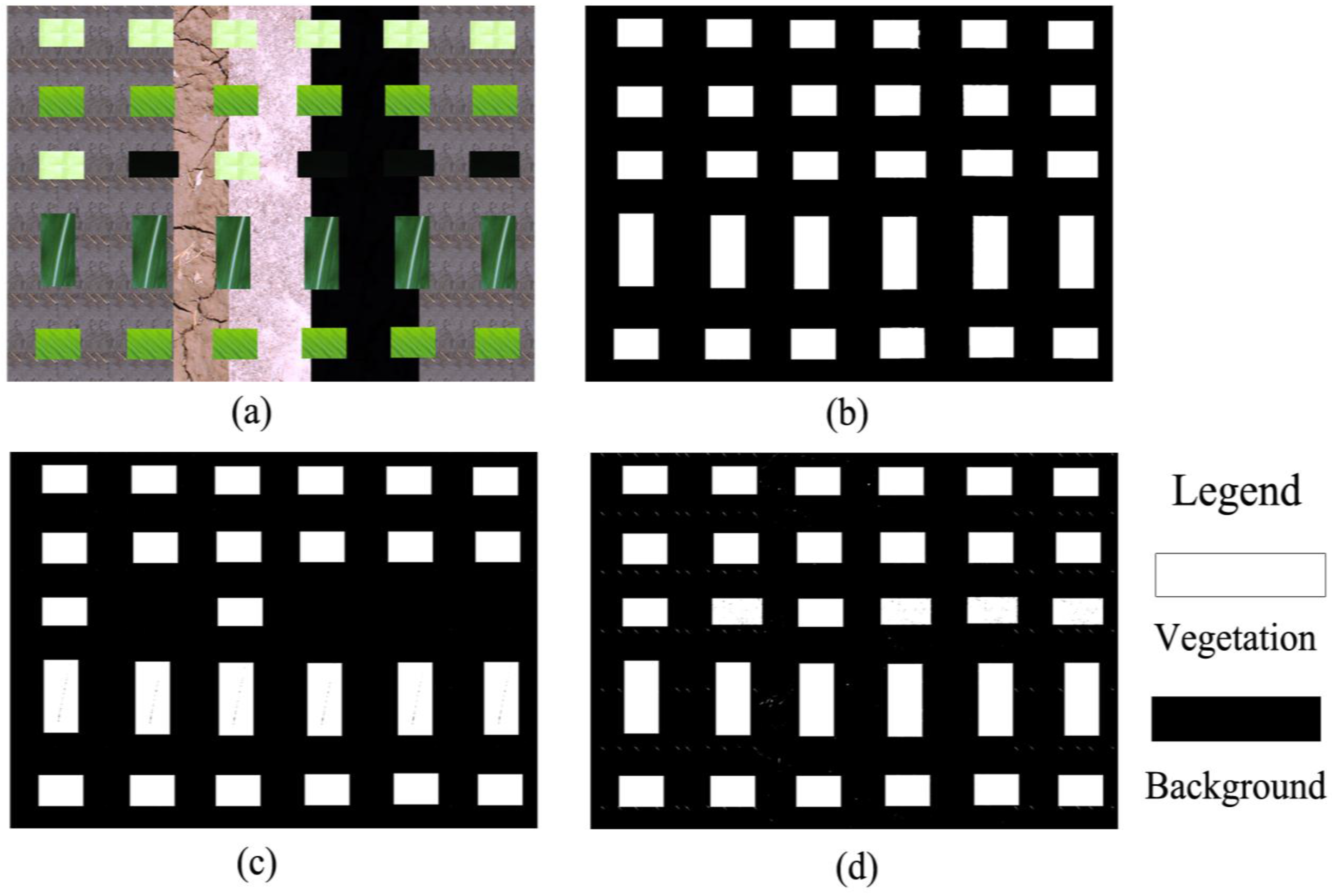

The evaluation results for real and synthesized images are presented in Section 4.2 and Section 4.3. The classification accuracy of the real images (Table 4) and the process of image synthesis (Section 2.2.1) indicate that the reference values are highly reliable.

The LABFVC algorithm works well when the vegetation is sparse. However, LABFVC is sensitive to the contrast between sunlit and shaded parts, which causes systematic errors in classification. SHAR-LABFVC uses image enhancement and models the vegetation with a lognormal distribution, which makes it much less sensitive to the shadow effect than LABFVC. Image enhancement was introduced to reduce the shadow effect and is more convenient to implement than artificial shelters that change the illumination conditions [21,25]. In contrast to other image analysis approaches [15,16], SHAR-LABFVC brightens the shaded vegetation and background and separates them, rather than treating shadow as a separate class. This process also improves the accuracy of the resulting green FVC.
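The enhancement idea (brightening shaded parts by equalizing the intensity histogram) can be sketched as follows. The sketch assumes the HSI intensity I = (R + G + B)/3 of an 8-bit image is equalized with the classic CDF mapping over 256 bins. Each pixel's R, G and B are then multiplied by the same factor I_eq/I. Because HSI hue and saturation are both invariant to a common scaling of R, G and B, only brightness changes, except where a channel clips at 255. The function name, bin count and clipping rule are illustrative assumptions, not the authors' MATLAB implementation.

```python
import numpy as np


def equalize_hsi_intensity(rgb):
    """Histogram-equalize the HSI intensity I = (R + G + B) / 3 of an 8-bit RGB image.

    Every pixel's R, G and B are multiplied by the same factor I_eq / I, so HSI hue and
    saturation are unchanged except where a channel clips at 255.
    """
    rgb = rgb.astype(np.float64)
    intensity = rgb.mean(axis=2)                      # continuous intensity in [0, 255]
    levels = intensity.astype(np.int64)               # 256 integer bins (floor)
    hist = np.bincount(levels.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[cdf > 0][0]                         # count in the lowest occupied bin
    if cdf[-1] == cdf_min:                            # a single intensity level: nothing to do
        return rgb.astype(np.uint8)
    # Classic equalization lookup table mapping each level into [0, 255].
    lut = np.clip(np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255.0), 0.0, 255.0)
    eq_intensity = lut[levels]
    # Per-pixel gain; black pixels (I = 0) stay black.
    gain = np.where(intensity > 0, eq_intensity / np.maximum(intensity, 1e-12), 0.0)
    out = np.clip(rgb * gain[..., None], 0.0, 255.0)
    return np.round(out).astype(np.uint8)


if __name__ == "__main__":
    image = np.array([[[10, 30, 10], [20, 60, 20]],
                      [[120, 90, 60], [100, 200, 100]]], dtype=np.uint8)
    print(equalize_hsi_intensity(image)[0, 1])  # shaded leaf pixel, brightened, same chromaticity
```

Classic equalization maps the lowest occupied level to 0, so the very darkest pixels are pushed to black. A real implementation might instead use an adaptive or offset mapping; the sketch does not claim which variant SHAR-LABFVC uses.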

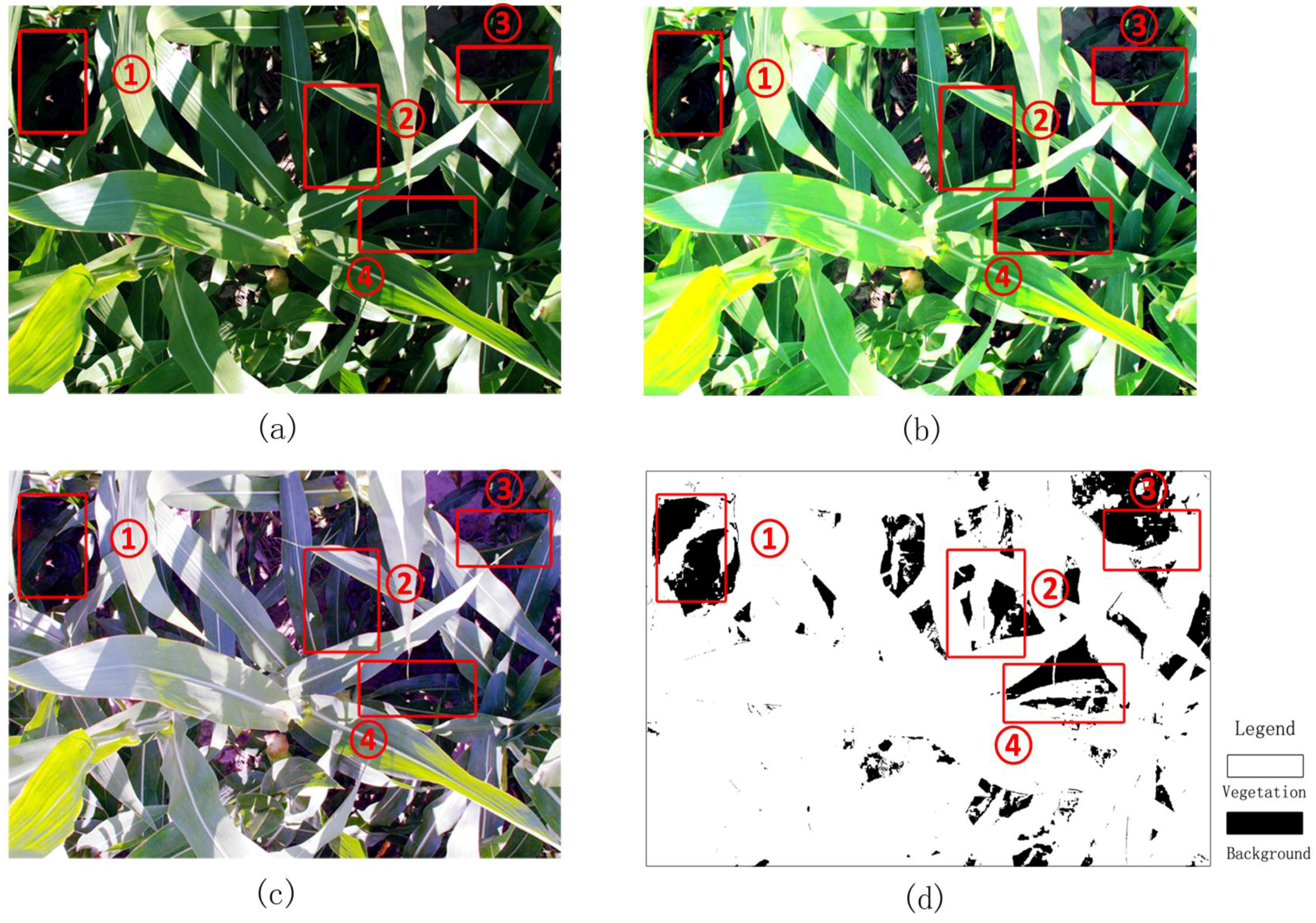

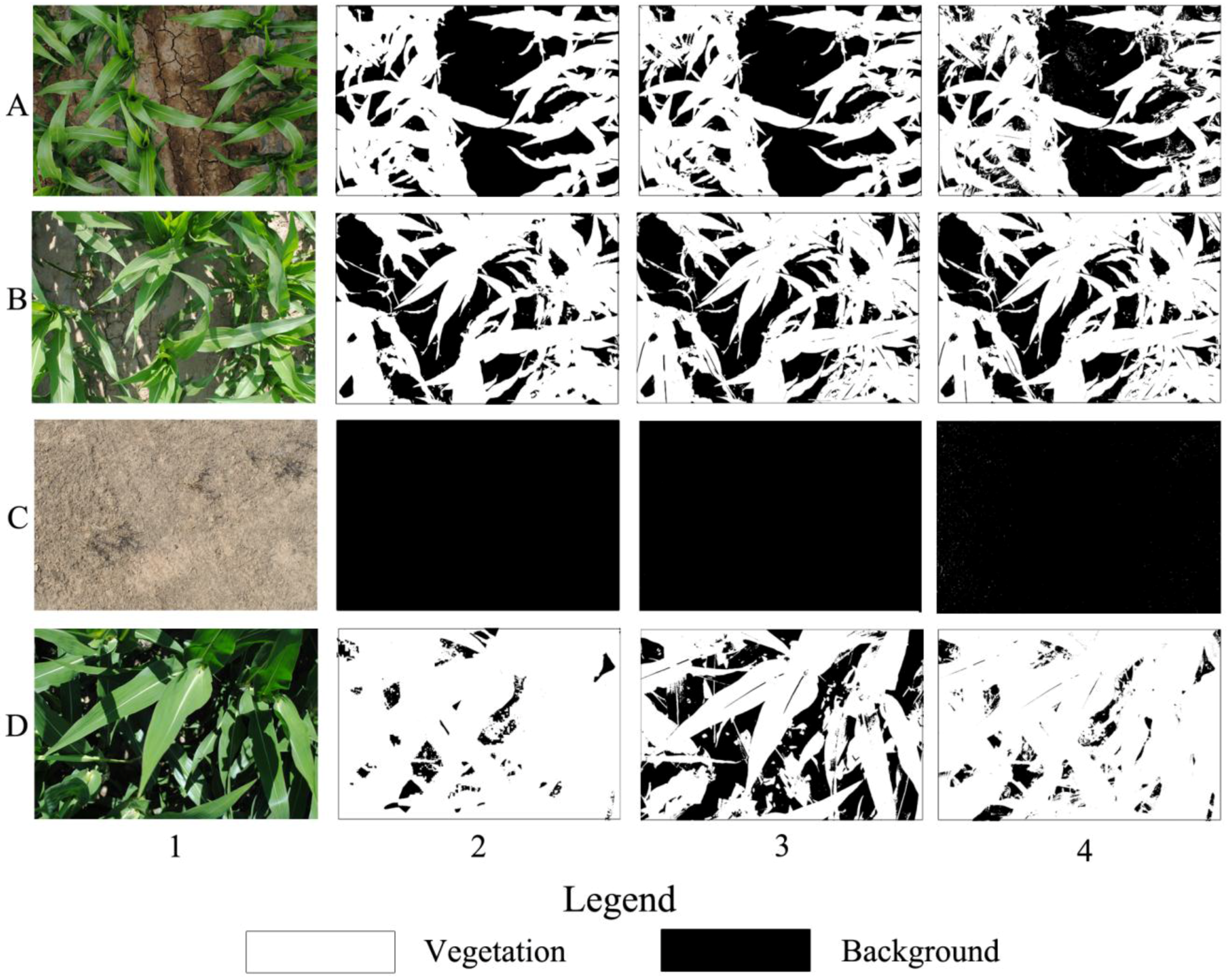

SHAR-LABFVC was designed to extract FVC from images taken with a digital camera. Photography is a commonly used method for obtaining FVC in cropland, grassland, low shrubland and the understory of sparse forests. When a digital image contains heavily shaded parts, LABFVC cannot reliably identify the vegetation. Compared with LABFVC, SHAR-LABFVC produces results closer to the reference FVC, particularly when heavily shaded components are present.

Figure 9 shows the classification results for images representing extreme situations that may occur in these areas: no shadow, many shadows, no leaves, and many leaves with deep shadows. When no shadows or only a few shadows cover the vegetation or background (Figure 9A1, B1 and C1), the differences among the results of LABFVC, SHAR-LABFVC and visual interpretation are small. When the leaves heavily shade one another (Figure 9D1), the shadows tend to be dark and LABFVC significantly underestimates the vegetation (Figure 9D3).

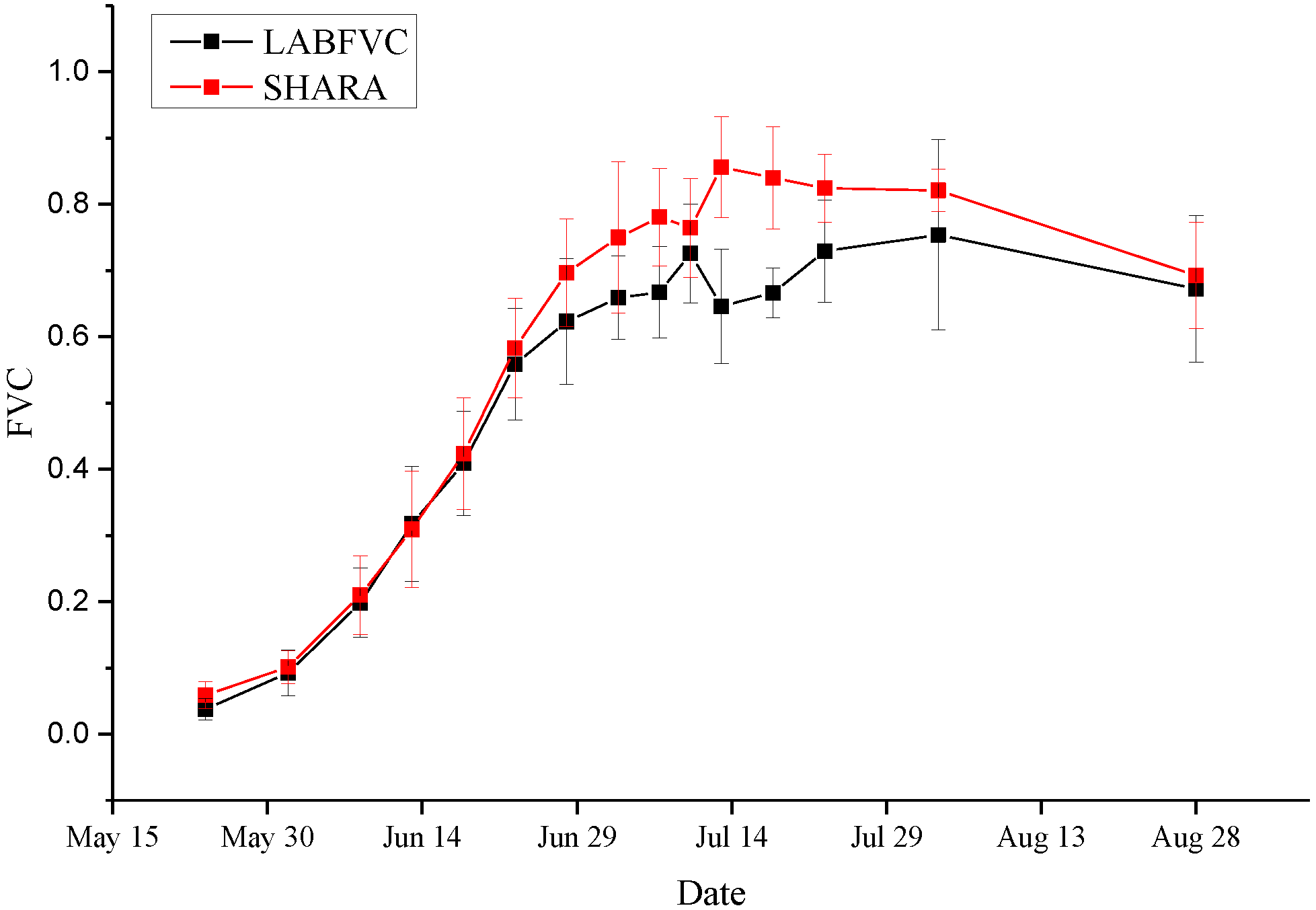

Time series images of corn were processed to evaluate the accuracy of SHAR-LABFVC (Figure 10). The results of SHAR-LABFVC and LABFVC are quite similar during both the budding period and the period when the leaves have withered. When the crops mature, the leaves intercept much of the incoming sunlight and skylight. Deep shadows occur during this period, and the advantages of SHAR-LABFVC become apparent.

The evaluations show that SHAR-LABFVC is shadow-resistant and reliable. However, it still has some limitations.

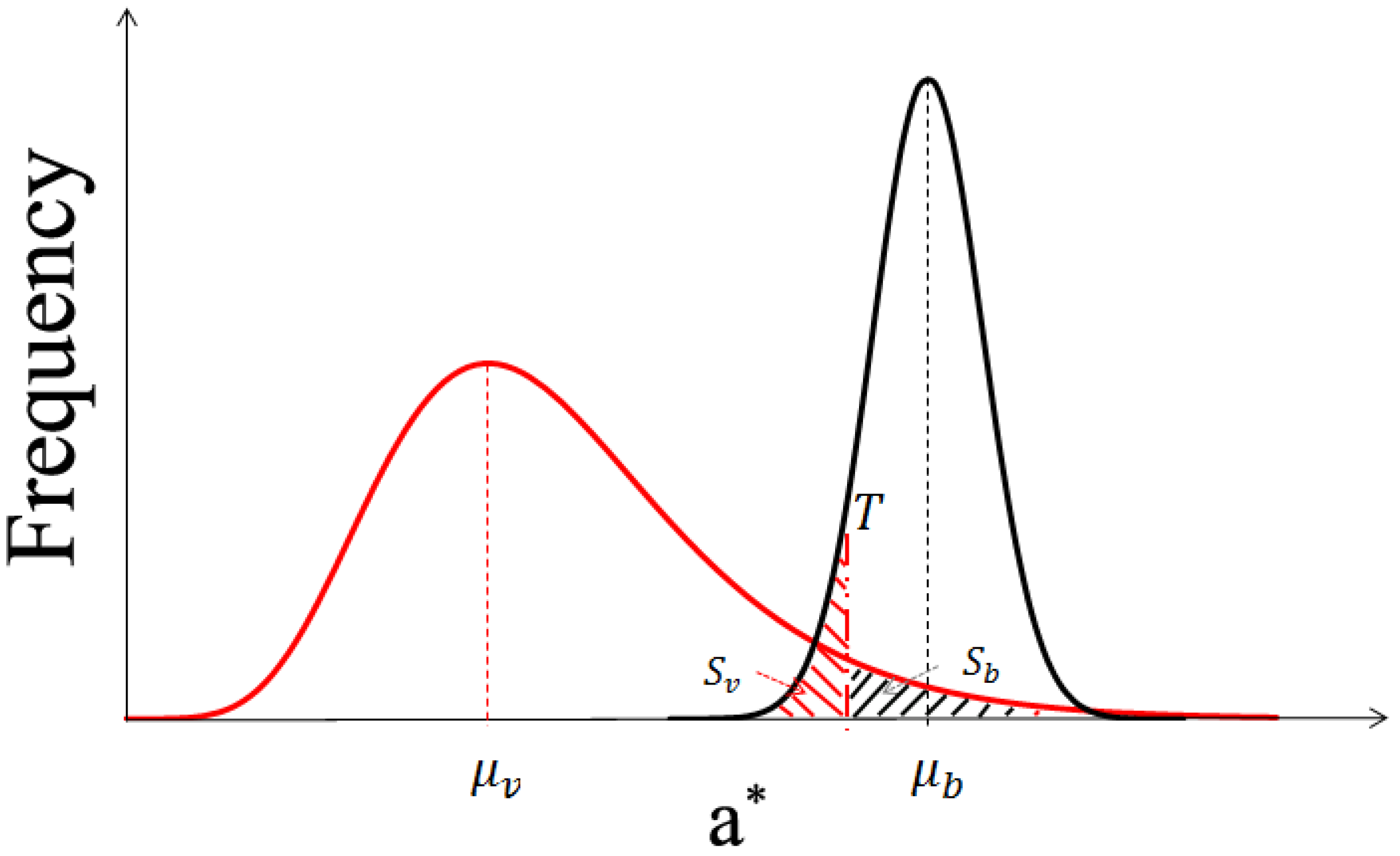

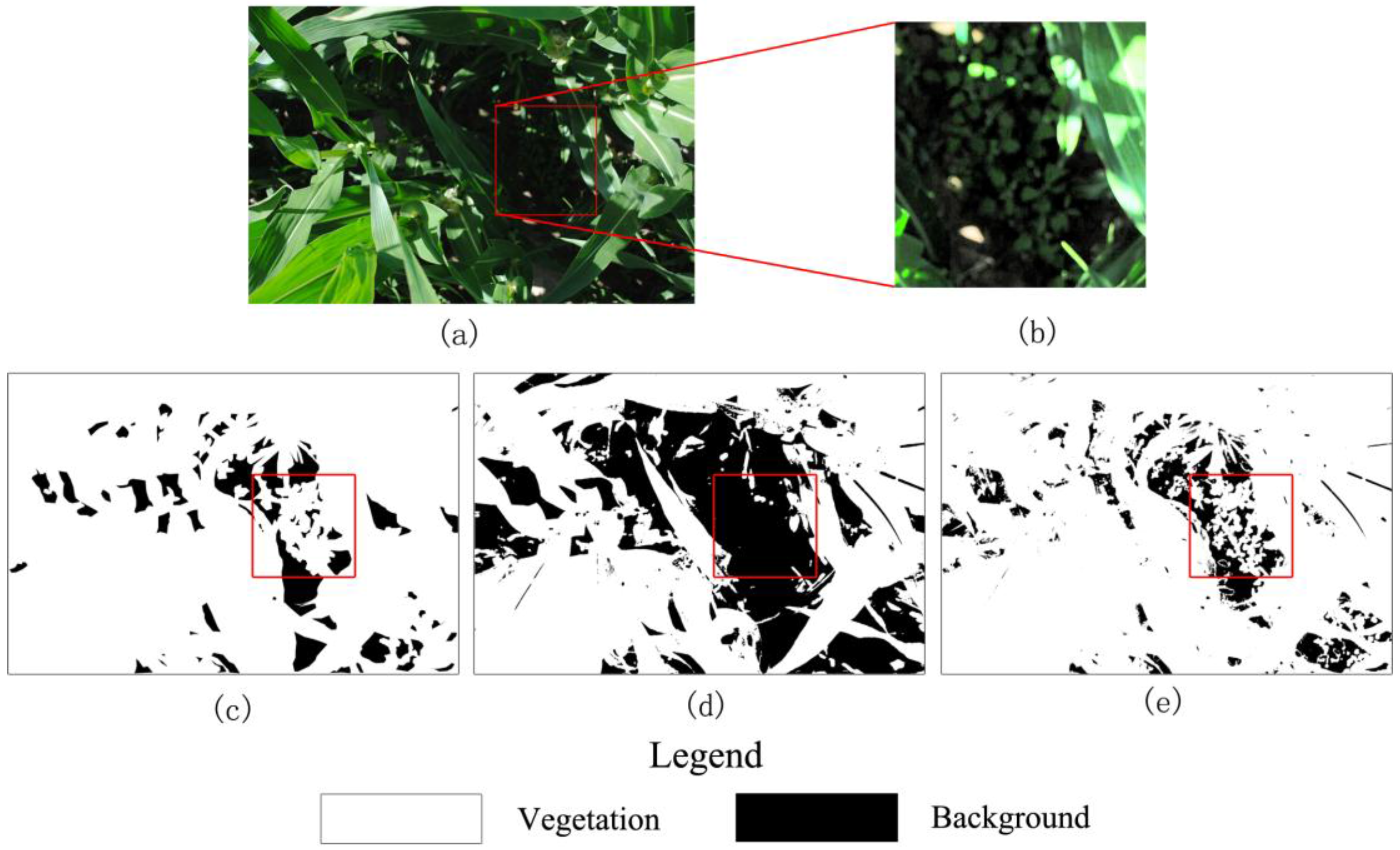

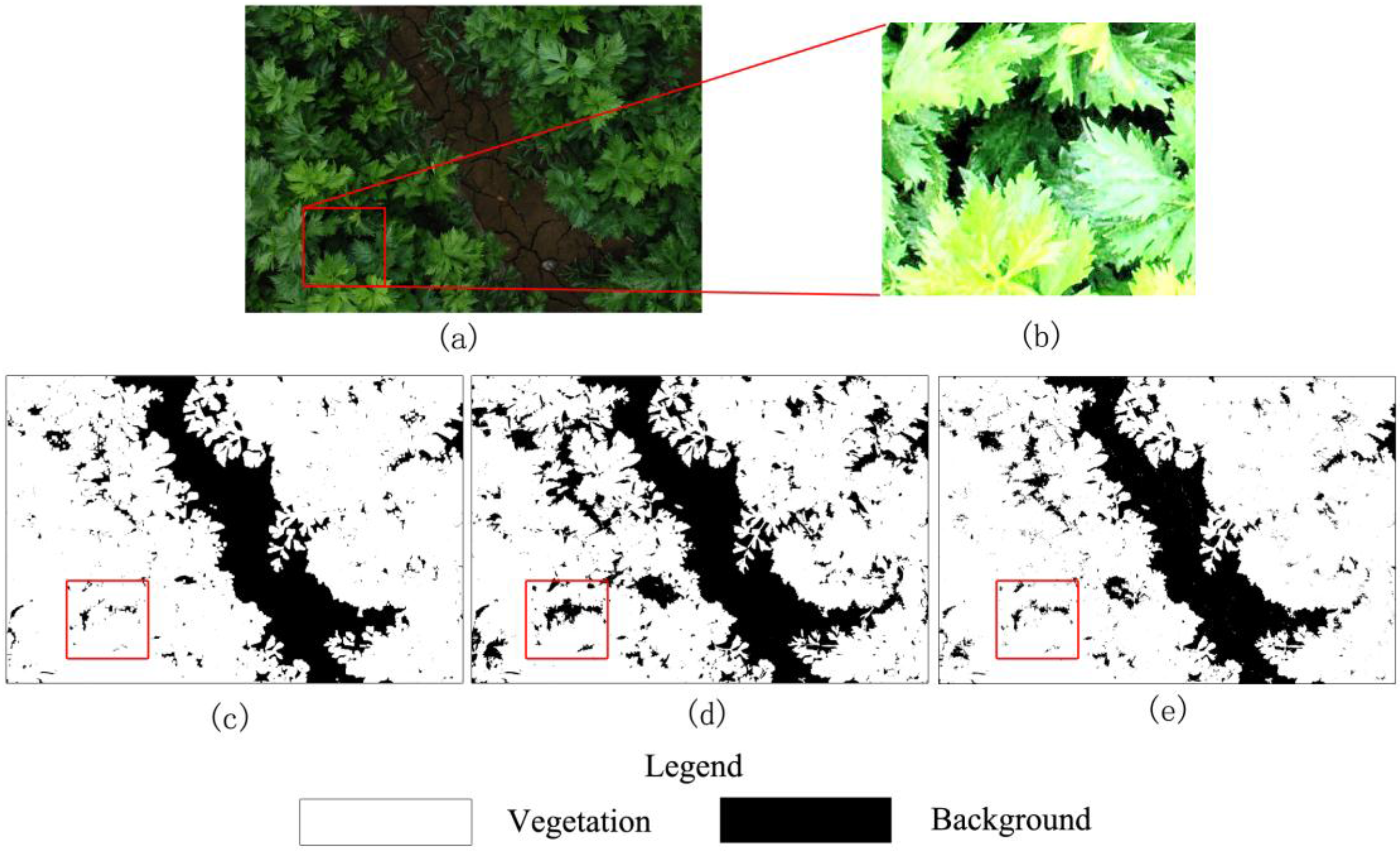

Figure 7 presents a case in which the corn plants are tall and cast many deep shadows that cover more than half of the image.

Figure 7c shows that visual interpretation produces a smooth classification image. The main differences between the classification methods appear in areas where the leaves are crimped or overlap one another and generate dark shadows. Some shaded leaves are too dark to be brightened effectively by the intensity stretching in SHAR-LABFVC. Leaf veins and solar glints also cause classification errors because they are extremely bright and nearly white. Even after the intensity is adjusted, these pixels remain saturated and far from green. These situations correspond to the overlapping parts of the soil and vegetation histograms in the image's a* component (areas sv and sb in Figure 3), which results in misclassification. In general, the FVC estimates provided by SHAR-LABFVC are fairly close to those of visual interpretation under all the illumination conditions examined.
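The effect of overlapping a* histograms can be illustrated with a parametric model. The sketch below is hypothetical and rests on four assumptions that are not the SHAR-LABFVC settings:

- Background a* follows a normal distribution.
- Vegetation a* follows a shifted lognormal whose long right tail (mimicking shaded leaves) reaches toward the background.
- The threshold T is placed where the class-weighted densities are equal.
- All parameter values and function names are made up for illustration.

Under these assumptions, the code reports the probability mass of each class that falls on the wrong side of T. These masses are analogous to, but not necessarily defined identically to, the mixed areas sv and sb in Figure 3. The code also shows how the resulting FVC estimate is biased.

```python
import math


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def normal_cdf(x, mean, sd):
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def shifted_lognormal_pdf(x, shift, mu, sigma):
    """Density of a* = shift + Y with Y ~ Lognormal(mu, sigma); zero for x <= shift."""
    y = x - shift
    if y <= 0.0:
        return 0.0
    z = (math.log(y) - mu) / sigma
    return math.exp(-0.5 * z * z) / (y * sigma * math.sqrt(2.0 * math.pi))


def shifted_lognormal_cdf(x, shift, mu, sigma):
    y = x - shift
    return 0.0 if y <= 0.0 else normal_cdf(math.log(y), mu, sigma)


def crossing_threshold(veg, bg, p_veg, lo, hi, iters=100):
    """Bisection for the a* value where p_veg * f_veg equals (1 - p_veg) * f_bg.

    Assumes the weighted vegetation density dominates at `lo` and the background at `hi`.
    """
    def diff(x):
        return p_veg * shifted_lognormal_pdf(x, *veg) - (1.0 - p_veg) * normal_pdf(x, *bg)

    if diff(lo) <= 0.0 or diff(hi) >= 0.0:
        raise ValueError("weighted densities do not cross between lo and hi")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if diff(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical parameters, chosen only for illustration:
# vegetation a* = -40 + Lognormal(ln 15, 0.5); background a* ~ Normal(5, 4); true FVC = 0.6.
veg = (-40.0, math.log(15.0), 0.5)
bg = (5.0, 4.0)
p_veg = 0.6

t = crossing_threshold(veg, bg, p_veg, lo=-25.0, hi=5.0)
veg_missed = 1.0 - shifted_lognormal_cdf(t, *veg)  # vegetation with a* > T, labeled background
bg_included = normal_cdf(t, *bg)                   # background with a* <= T, labeled vegetation
fvc_est = p_veg * (1.0 - veg_missed) + (1.0 - p_veg) * bg_included
print(f"T = {t:.2f}, missed vegetation = {veg_missed:.3f}, "
      f"included background = {bg_included:.3f}, estimated FVC = {fvc_est:.3f}")
```

With these made-up parameters, the vegetation tail that crosses T is larger than the background mass that crosses it, so the estimated FVC falls below the true 0.6. This mirrors the underestimation caused by dark shaded leaves.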

6. Conclusions

Deep shadows severely affect the results of digital image classification, particularly when vegetation growth is at its peak. In this study, we developed a shadow-resistant LABFVC algorithm (SHAR-LABFVC) to extract FVC from digital images. SHAR-LABFVC improves on the previously documented LABFVC method. It resists shadows by equalizing the histogram of the image's intensity component in the HSI color space, which brightens the shaded parts of the leaves and background. Because of the shaded leaves, the histogram of the vegetation's green-red (a*) component generally follows a lognormal distribution. This property is exploited when dividing the images into vegetation and background regions in the LAB (Commission Internationale d'Eclairage L*a*b*) color space.

The evaluation of SHAR-LABFVC showed high accuracy, similar to that of visual interpretation. Visual interpretation is assumed to be an accurate classification method that provides a reference FVC from a digital image. The RMSE of SHAR-LABFVC relative to visual interpretation is 0.025, indicating that the two perform similarly. However, SHAR-LABFVC is fully automatic and therefore more practical. Compared with other automatic classification methods based on physical characteristics or feature space analysis, SHAR-LABFVC is less time-consuming. Thus, SHAR-LABFVC can be used to obtain FVC from large numbers of images.
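For reference, the sketch below computes the RMSE, assuming it is the standard root-mean-square difference over paired images, RMSE = sqrt((1/n) Σ (F̂_i − F_i)²), with visual interpretation as the reference F_i. It also returns the mean difference (bias), which separates a systematic offset, such as shadow-induced underestimation, from random scatter. The function name is hypothetical, and the four FVC pairs are invented for illustration; they are not data from this study.

```python
import math


def rmse_and_bias(estimates, references):
    """Root-mean-square difference and mean difference (estimate - reference)."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [e - r for e, r in zip(estimates, references)]
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    bias = sum(diffs) / len(diffs)
    return rmse, bias


# Invented FVC values for four images (not the study's data).
shar_fvc = [0.32, 0.55, 0.78, 0.91]
visual_fvc = [0.30, 0.58, 0.80, 0.90]
rmse, bias = rmse_and_bias(shar_fvc, visual_fvc)
print(f"RMSE = {rmse:.4f}, bias = {bias:+.4f}")  # RMSE = 0.0212, bias = -0.0050
```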

Shadows caused errors of up to 0.2 when estimating FVC at moderate resolution (e.g., the scale of ASTER) during the flourishing vegetation period. This shadow-induced underestimation of FVC will also affect the evaluation of current global FVC products at coarse resolutions (e.g., 1 km), because the systematic errors of moderate-spatial-resolution FVC cannot be eliminated in the up-scaling process. Therefore, shadow-resistant algorithms for field measurements are needed. Given the uncertainties in our algorithm, further research on additional vegetation types and data sources is needed. Nevertheless, owing to its efficiency, SHAR-LABFVC is expected to facilitate the validation of satellite-based products in this context.
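The up-scaling argument can be made explicit with a simple averaging model. This model is a simplifying assumption, not the aggregation scheme of any particular product. Let the coarse-pixel FVC be the mean of N moderate-resolution values, F_c = (1/N) Σ F_i. Suppose each moderate-resolution estimate carries a shared shadow-induced bias δ (negative for underestimation) plus an independent zero-mean random error ε_i with variance σ²:

$$
\hat{F}_{\mathrm{c}} = \frac{1}{N}\sum_{i=1}^{N}\hat{F}_i
= \frac{1}{N}\sum_{i=1}^{N}\left(F_i + \delta + \varepsilon_i\right)
= F_{\mathrm{c}} + \delta + \frac{1}{N}\sum_{i=1}^{N}\varepsilon_i,
$$

$$
\mathbb{E}\left[\hat{F}_{\mathrm{c}} - F_{\mathrm{c}}\right] = \delta,
\qquad
\operatorname{Var}\left[\hat{F}_{\mathrm{c}} - F_{\mathrm{c}}\right] = \frac{\sigma^{2}}{N}.
$$

Averaging suppresses the random part as N grows, but the bias δ passes unchanged to the coarse pixel. This is why shadow-induced errors in moderate-resolution reference FVC carry over to the validation of 1 km products.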