Using RapidEye and MODIS Data Fusion to Monitor Vegetation Dynamics in Semi-Arid Rangelands in South Africa

Abstract

1. Introduction

- Is the ESTARFM algorithm applicable for generating time series using the combination of RapidEye and MODIS?

- Is a time series combining real RapidEye with ESTARFM-computed synthetic images appropriate for detecting highly dynamic vegetation changes at different small scale bush density classes in semi-arid rangelands in South Africa?

2. Material and Methods

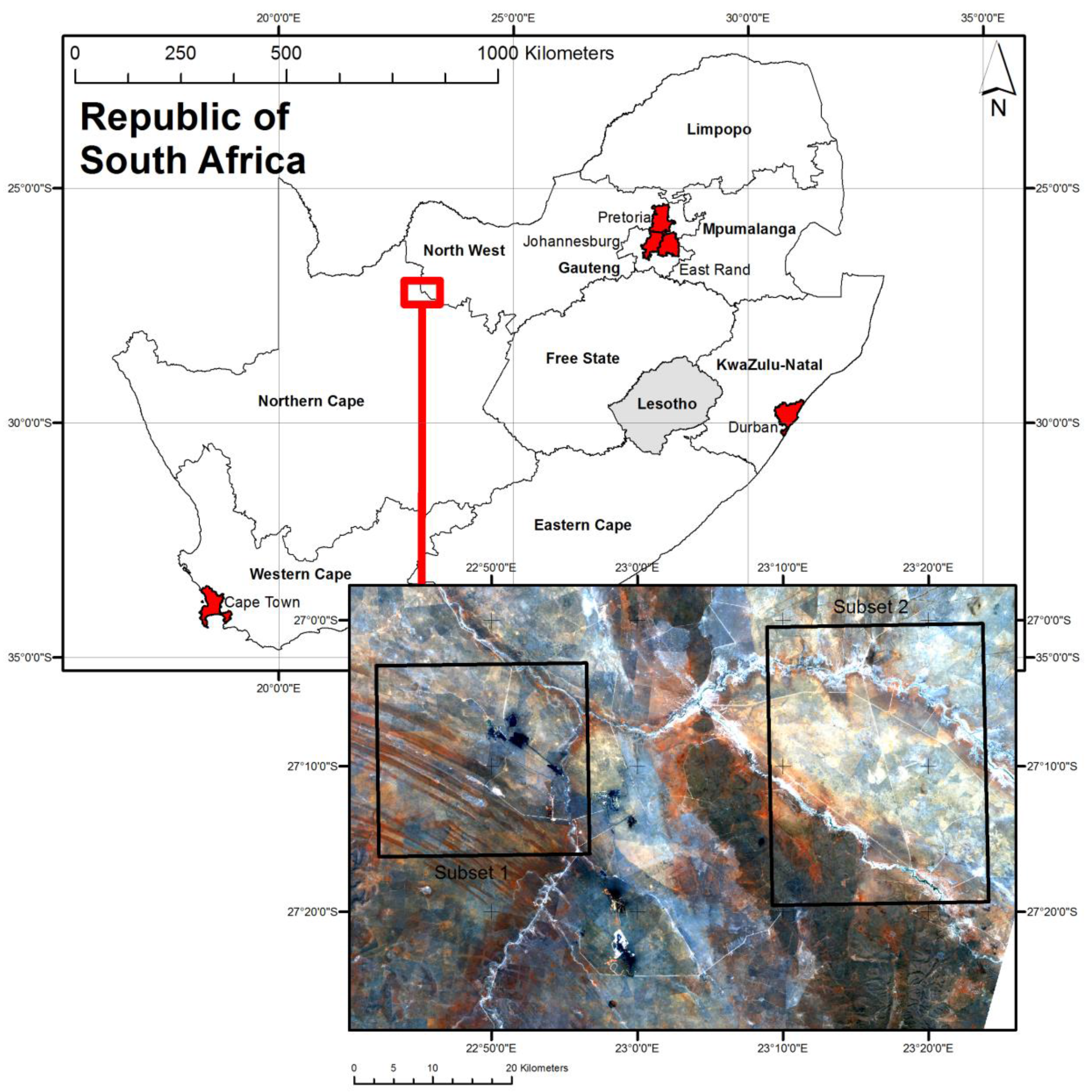

2.1. The Study Area

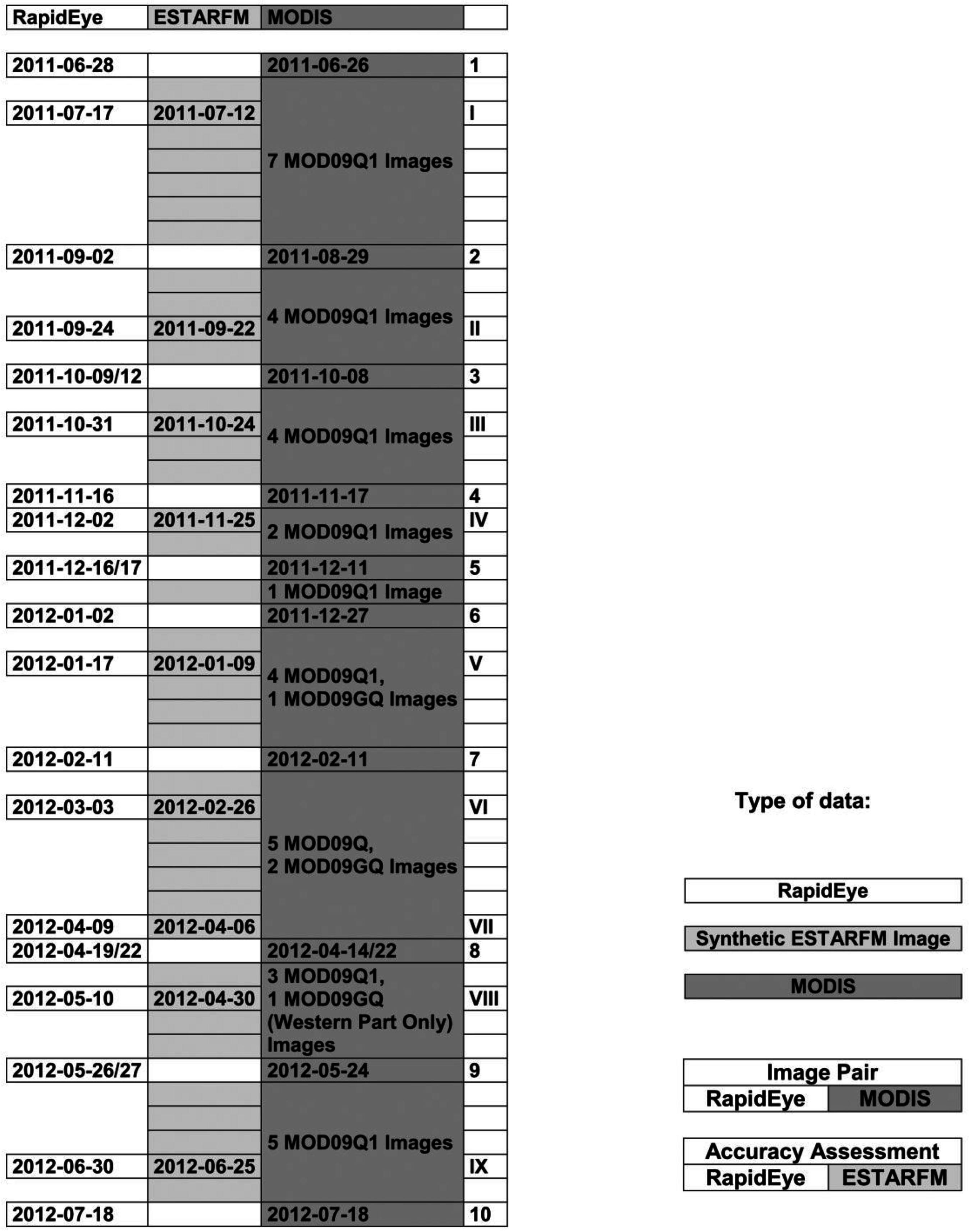

2.2. Data

2.2.1. The RapidEye Data

| Band Name | Band No (RapidEye) | Band No (MODIS) | Spectral Range [nm] (RapidEye) | Spectral Range [nm] (MODIS) |
|---|---|---|---|---|
| Blue | 1 | 3 | 440–510 | 459–479 |
| Green | 2 | 4 | 520–590 | 545–565 |
| Red | 3 | 1 | 630–685 | 620–670 |
| Red Edge | 4 | - | 690–730 | - |
| NIR | 5 | 2 | 760–850 | 841–876 |
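The band pairing in the table above can be made concrete by computing how much each RapidEye band overlaps spectrally with its MODIS counterpart. The following is a minimal sketch for illustration only; the dictionary `BANDS` and the helper `overlap_nm` are hypothetical names, and the ranges are copied from the table rather than from any sensor library.

```python
# Spectral ranges in nm, copied from the band table above.
# Red Edge is omitted because it has no MODIS counterpart.
BANDS = {
    "Blue": {"rapideye": (440, 510), "modis": (459, 479)},
    "Green": {"rapideye": (520, 590), "modis": (545, 565)},
    "Red": {"rapideye": (630, 685), "modis": (620, 670)},
    "NIR": {"rapideye": (760, 850), "modis": (841, 876)},
}


def overlap_nm(a, b):
    """Width in nm of the intersection of two (low, high) ranges; 0 if disjoint."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


# Overlap width per band pair (hypothetical summary, not a result from the paper).
overlaps = {
    name: overlap_nm(ranges["rapideye"], ranges["modis"])
    for name, ranges in BANDS.items()
}

for name, width in overlaps.items():
    print(f"{name}: {width} nm shared between RapidEye and MODIS")
```

Under these ranges the NIR pair shares only a narrow 9 nm window, whereas the red pair shares 40 nm, which is one simple way to see why the two sensors may respond differently in the NIR.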

2.2.2. The MODIS Data

2.3. The ESTARFM Algorithm

2.4. ESTARFM Implementation

2.5. Accuracy Assessment of ESTARFM Images

- The bias, as well as its value relative to the mean of the observed image, should ideally be 0. The bias is the difference between the mean value of the observed RapidEye image and that of the predicted ESTARFM image.

- The standard deviation of the difference image, expressed relative to the mean of the reference image (i.e., divided by that mean), should ideally be 0. This measure indicates the level of error at any pixel throughout the entire image and is hereafter referred to as the per-pixel level of error.

- On a band-by-band basis, the coefficient of determination (R²) between the observed RapidEye image and the synthetic ESTARFM image should be as close as possible to 1. This measures the pixel-wise similarity between the observed and the predicted image.
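The three criteria above can be sketched for a single band with NumPy. This is an illustrative sketch, not the authors' implementation. The function name `fusion_accuracy`, the sign convention (observed minus predicted), the masking of non-finite pixels, and the use of the squared Pearson correlation as R² are all assumptions.

```python
import numpy as np


def fusion_accuracy(observed, predicted):
    """Per-band accuracy measures for a predicted image against an observed one.

    Both inputs are arrays of the same shape holding one band.
    """
    obs = np.asarray(observed, dtype=float).ravel()
    pred = np.asarray(predicted, dtype=float).ravel()

    # Assumption: pixels that are NaN or inf in either image are ignored.
    valid = np.isfinite(obs) & np.isfinite(pred)
    obs, pred = obs[valid], pred[valid]

    # Assumption: the difference image is observed minus predicted.
    diff = obs - pred
    mean_obs = obs.mean()

    bias = diff.mean()                        # difference of the two image means
    relative_bias = bias / mean_obs           # bias relative to the observed mean
    per_pixel_error = diff.std() / mean_obs   # relative std of the difference image

    # Assumption: R² is taken as the squared Pearson correlation coefficient.
    r = np.corrcoef(obs, pred)[0, 1]

    return {
        "bias": bias,
        "relative_bias": relative_bias,
        "per_pixel_error": per_pixel_error,
        "r2": r ** 2,
    }


# Tiny synthetic example: the prediction overestimates every pixel by 10 units.
observed_band = np.array([[100.0, 200.0], [300.0, 400.0]])
predicted_band = observed_band + 10.0
metrics = fusion_accuracy(observed_band, predicted_band)
print(metrics)
```

In this toy case the bias is non-zero while the per-pixel level of error is zero and R² is 1, which illustrates why the measures need to be read together: a constant offset leaves the spatial pattern intact but shifts the image mean.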

2.6. Bush Density Information

2.7. Monitoring Vegetation Dynamics

3. Results

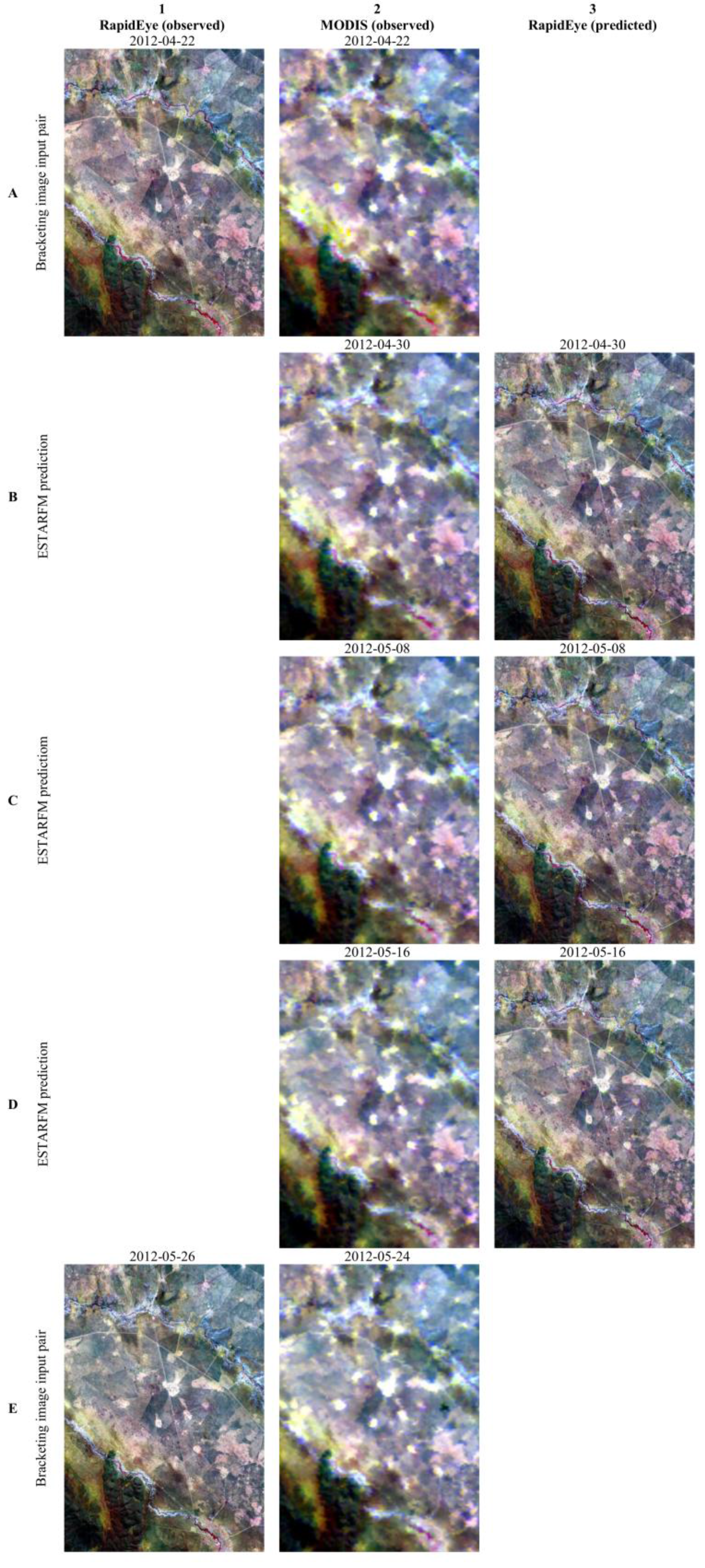

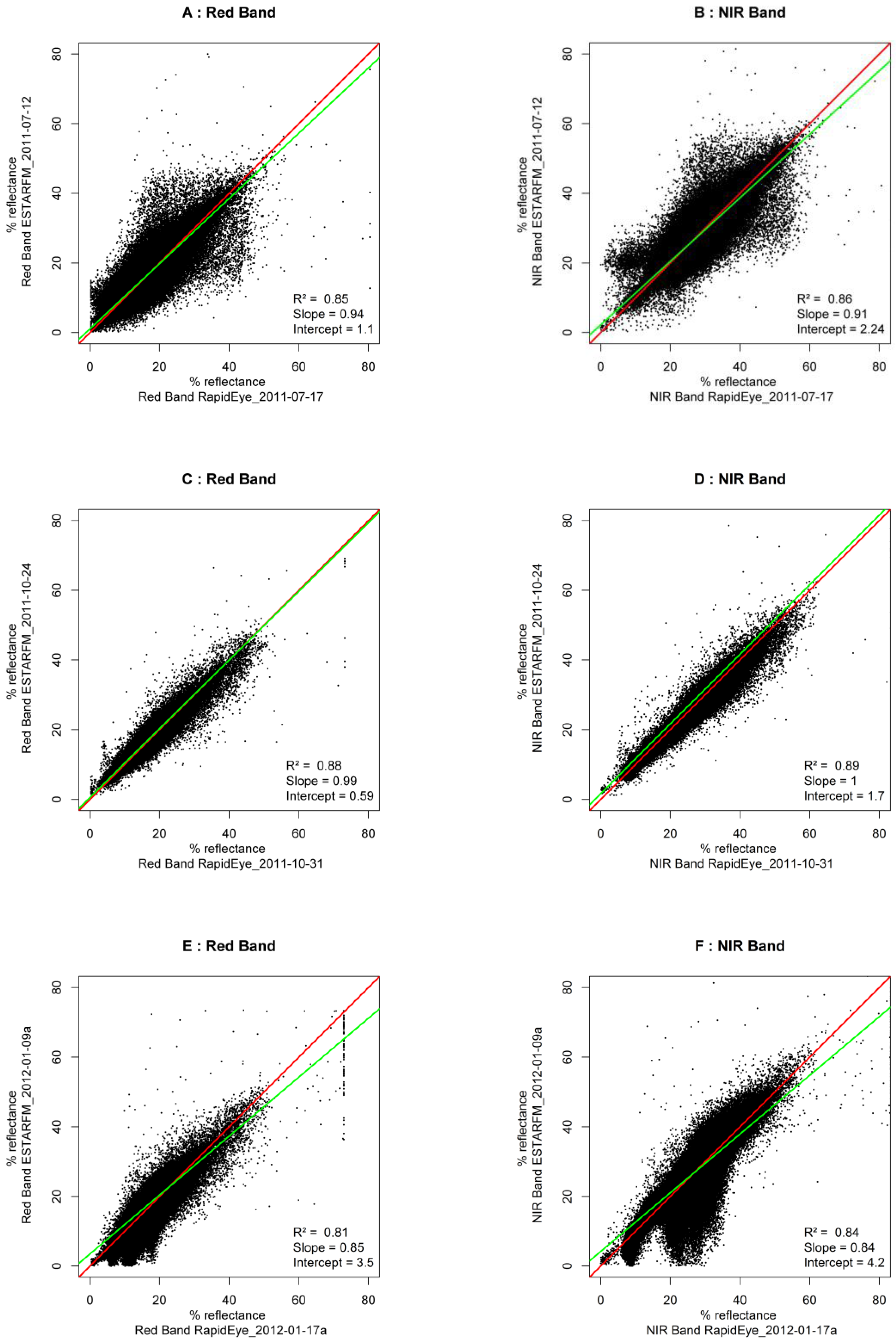

3.1. ESTARFM Prediction Results

| RapidEye Date | MODIS Date | Subset | Band | Absolute Mean Bias | Relative Mean Bias | Per-Pixel Level of Error | R² |
|---|---|---|---|---|---|---|---|
| 17-07-2011 | 12-07-2011 | 2 | Red | −14.18 | −0.01 | 0.12 | 0.85 |
| | | | NIR | −2.15 | 0.00 | 0.08 | 0.86 |
| 24-09-2011 | 22-09-2011 | 2 | Red | 43.82 | 0.03 | 0.07 | 0.91 |
| | | | NIR | 19.05 | 0.01 | 0.04 | 0.93 |
| 31-10-2011 | 24-10-2011 | 1 | Red | −656.42 | −0.39 | 0.06 | 0.88 |
| | | | NIR | −1102.68 | −0.40 | 0.05 | 0.89 |
| 02-12-2011 | 25-11-2011 | 1 | Red | 118.9 | 0.07 | 0.07 | 0.87 |
| | | | NIR | 161.42 | 0.06 | 0.05 | 0.92 |
| 17-01-2012a | 09-01-2012a | 1 | Red | −115.52 | −0.07 | 0.09 | 0.80 |
| | | | NIR | 52.47 | 0.02 | 0.07 | 0.84 |
| 17-01-2012b | 09-01-2012b | 2 | Red | −295.36 | −0.15 | 0.10 | 0.81 |
| | | | NIR | −31.98 | −0.01 | 0.07 | 0.83 |
| 03-03-2012 | 26-02-2012 | 2 | Red | −240.98 | −0.16 | 0.09 | 0.89 |
| | | | NIR | −173.41 | −0.06 | 0.05 | 0.92 |
| 09-04-2012 | 06-04-2012 | 2 | Red | −139.35 | −0.10 | 0.10 | 0.89 |
| | | | NIR | −190.03 | −0.07 | 0.05 | 0.92 |
| 10-05-2012 | 30-04-2012 | 1 | Red | −40.25 | −0.04 | 0.11 | 0.82 |
| | | | NIR | −9.63 | 0.00 | 0.06 | 0.90 |
| 30-06-2012 | 25-06-2012 | 2 | Red | 21.6 | 0.01 | 0.09 | 0.91 |
| | | | NIR | 92.57 | 0.04 | 0.05 | 0.92 |

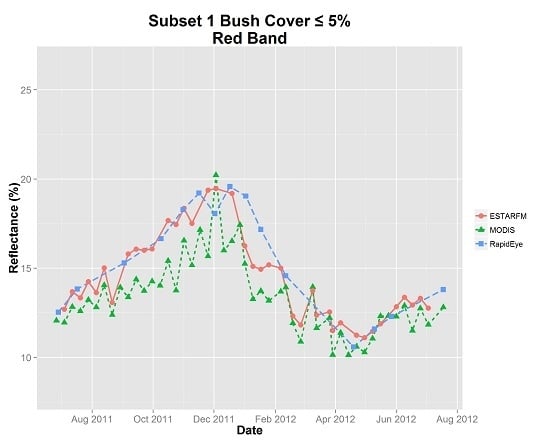

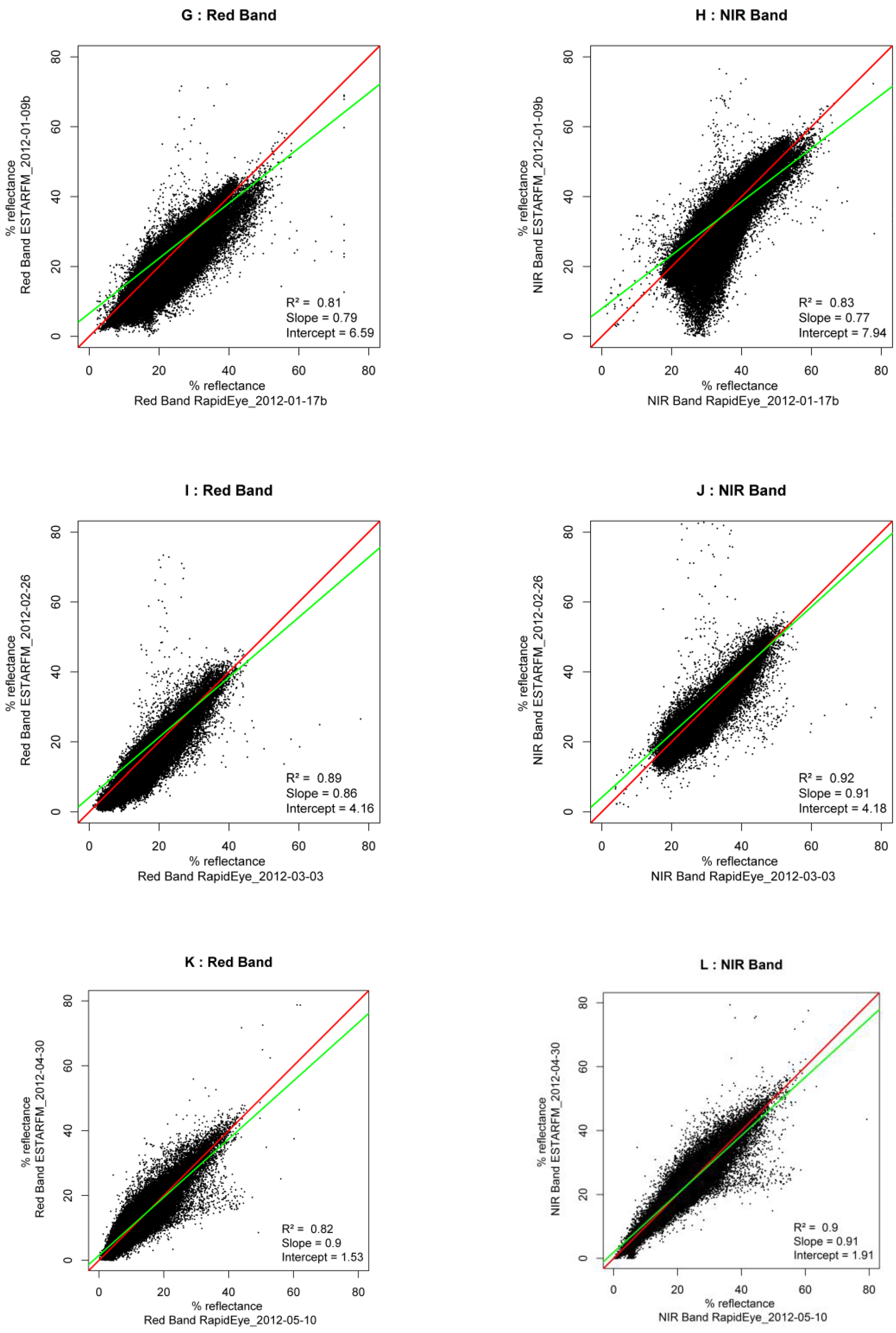

3.2. Analysis of Reflectances Time Series

| Bush Density | Band | 31-10-2011 R² | 31-10-2011 Bias | 02-12-2011 R² | 02-12-2011 Bias | 17-01-2012 R² | 17-01-2012 Bias | 10-05-2012 R² | 10-05-2012 Bias |
|---|---|---|---|---|---|---|---|---|---|
| ≤5% (79,354) | Red | 0.70 | −0.05 | 0.79 | 0.07 | 0.83 | −0.12 | 0.71 | −0.04 |
| | NIR | 0.75 | −0.04 | 0.87 | 0.08 | 0.83 | −0.03 | 0.88 | 0.00 |
| >5%, ≤20% (356,336) | Red | 0.74 | −0.04 | 0.83 | 0.06 | 0.69 | −0.08 | 0.73 | −0.08 |
| | NIR | 0.82 | −0.07 | 0.90 | 0.06 | 0.74 | −0.01 | 0.87 | 0.01 |
| >20%, ≤35% (569,186) | Red | 0.76 | −0.03 | 0.85 | 0.06 | 0.60 | −0.07 | 0.77 | −0.08 |
| | NIR | 0.83 | −0.06 | 0.91 | 0.05 | 0.70 | 0.01 | 0.88 | 0.01 |
| >35%, ≤50% (553,589) | Red | 0.77 | −0.03 | 0.85 | 0.07 | 0.62 | −0.09 | 0.80 | −0.07 |
| | NIR | 0.79 | −0.07 | 0.89 | 0.05 | 0.74 | 0.01 | 0.90 | 0.00 |
| >50%, ≤65% (634,875) | Red | 0.82 | −0.03 | 0.85 | 0.07 | 0.70 | −0.09 | 0.77 | −0.06 |
| | NIR | 0.86 | −0.07 | 0.90 | 0.05 | 0.82 | 0.01 | 0.89 | 0.00 |
| >65%, ≤80% (862,093) | Red | 0.84 | −0.03 | 0.86 | 0.07 | 0.72 | −0.10 | 0.78 | −0.06 |
| | NIR | 0.86 | −0.07 | 0.91 | 0.05 | 0.82 | 0.01 | 0.89 | 0.00 |
| >80%, ≤95% (1,620,701) | Red | 0.80 | −0.02 | 0.83 | 0.08 | 0.73 | −0.09 | 0.78 | −0.05 |
| | NIR | 0.82 | −0.06 | 0.90 | 0.05 | 0.83 | 0.02 | 0.87 | 0.00 |
| >95% (27,787) | Red | 0.80 | −0.02 | 0.79 | 0.08 | 0.69 | −0.08 | 0.80 | −0.04 |
| | NIR | 0.86 | −0.07 | 0.90 | 0.05 | 0.86 | 0.03 | 0.86 | 0.00 |

| Bush Density | Band | 17-07-2011 R² | 17-07-2011 Bias | 24-09-2011 R² | 24-09-2011 Bias | 17-01-2012 R² | 17-01-2012 Bias |
|---|---|---|---|---|---|---|---|
| ≤5% (375,469) | Red | 0.58 | −0.02 | 0.73 | 0.06 | 0.47 | −0.10 |
| | NIR | 0.68 | 0.00 | 0.79 | 0.02 | 0.53 | 0.01 |
| >5%, ≤20% (903,438) | Red | 0.67 | −0.02 | 0.81 | 0.05 | 0.55 | −0.10 |
| | NIR | 0.75 | 0.00 | 0.86 | 0.01 | 0.55 | 0.01 |
| >20%, ≤35% (884,540) | Red | 0.66 | −0.01 | 0.81 | 0.04 | 0.59 | −0.10 |
| | NIR | 0.74 | 0.00 | 0.85 | 0.01 | 0.60 | 0.01 |
| >35%, ≤50% (725,780) | Red | 0.66 | −0.01 | 0.81 | 0.04 | 0.59 | −0.11 |
| | NIR | 0.73 | 0.00 | 0.85 | 0.01 | 0.53 | 0.00 |
| >50%, ≤65% (838,079) | Red | 0.64 | −0.01 | 0.79 | 0.03 | 0.64 | −0.12 |
| | NIR | 0.73 | 0.00 | 0.84 | 0.01 | 0.58 | 0.00 |
| >65%, ≤80% (963,657) | Red | 0.62 | −0.01 | 0.77 | 0.03 | 0.60 | −0.13 |
| | NIR | 0.71 | 0.00 | 0.82 | 0.00 | 0.53 | 0.00 |
| >80%, ≤95% (1,098,853) | Red | 0.57 | −0.01 | 0.75 | 0.02 | 0.59 | −0.14 |
| | NIR | 0.67 | 0.00 | 0.79 | 0.00 | 0.53 | 0.00 |
| >95% (453,262) | Red | 0.57 | −0.01 | 0.63 | 0.02 | 0.56 | −0.19 |
| | NIR | 0.63 | 0.00 | 0.62 | 0.01 | 0.48 | −0.04 |

| Bush Density | Band | 03-03-2012 R² | 03-03-2012 Bias | 09-04-2012 R² | 09-04-2012 Bias | 30-06-2012 R² | 30-06-2012 Bias |
|---|---|---|---|---|---|---|---|
| ≤5% | Red | 0.77 | −0.14 | 0.83 | −0.09 | 0.77 | 0.01 |
| | NIR | 0.89 | −0.05 | 0.88 | −0.06 | 0.88 | 0.04 |
| >5%, ≤20% | Red | 0.73 | 0.14 | 0.81 | −0.09 | 0.77 | 0.01 |
| | NIR | 0.86 | −0.05 | 0.87 | −0.06 | 0.87 | 0.04 |
| >20%, ≤35% | Red | 0.75 | −0.15 | 0.80 | −0.10 | 0.79 | 0.01 |
| | NIR | 0.87 | −0.06 | 0.87 | −0.07 | 0.88 | 0.04 |
| >35%, ≤50% | Red | 0.68 | −0.16 | 0.80 | −0.10 | 0.77 | 0.01 |
| | NIR | 0.83 | −0.06 | 0.87 | −0.07 | 0.86 | 0.04 |
| >50%, ≤65% | Red | 0.71 | −0.16 | 0.78 | −0.10 | 0.78 | 0.01 |
| | NIR | 0.85 | −0.07 | 0.87 | −0.07 | 0.87 | 0.04 |
| >65%, ≤80% | Red | 0.70 | −0.17 | 0.78 | −0.11 | 0.76 | 0.02 |
| | NIR | 0.85 | −0.07 | 0.87 | −0.07 | 0.85 | 0.04 |
| >80%, ≤95% | Red | 0.71 | −0.18 | 0.76 | −0.11 | 0.74 | 0.02 |
| | NIR | 0.84 | −0.08 | 0.86 | −0.07 | 0.84 | 0.04 |
| >95% | Red | 0.71 | −0.24 | 0.74 | −0.11 | 0.72 | 0.03 |
| | NIR | 0.84 | −0.09 | 0.83 | −0.08 | 0.80 | 0.04 |

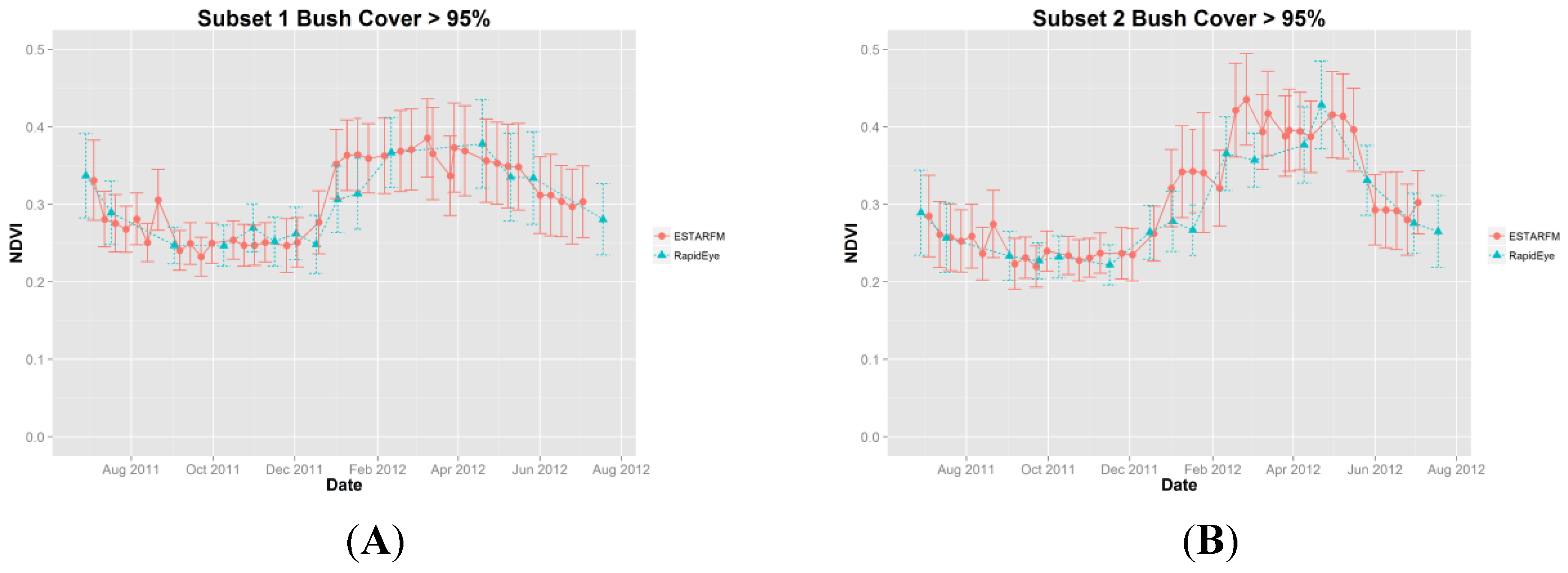

3.3. Vegetation Index Time Series Analysis

4. Discussion

4.1. Band Differences

4.2. Evaluation of NDVI Time Series

4.3. BRDF Effects

4.4. Co-Registration of MODIS and RapidEye Images

5. Conclusions and Outlook

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Tewes, A.; Thonfeld, F.; Schmidt, M.; Oomen, R.J.; Zhu, X.; Dubovyk, O.; Menz, G.; Schellberg, J. Using RapidEye and MODIS Data Fusion to Monitor Vegetation Dynamics in Semi-Arid Rangelands in South Africa. Remote Sens. 2015, 7, 6510-6534. https://doi.org/10.3390/rs70606510
