An Observation Capability Metadata Model for EO Sensor Discovery in Sensor Web Enablement Environments

Abstract

1. Introduction

2. Observation Capability Information Description Requirements

2.1. Satisfying the Collaborative Planning Scenarios

| Observation capability set | Observation capability elements |
|---|---|
| Observation principle | Measures; band types; isActive; isMobile |
| Observation range | Temporal/spectral/ground resolution range; swath; FOV |
| Observation cycle | Sample interval; revisit period |
| Observation quality | Temporal/ground/spectral/radiation accuracy |
| Observation coverage | Coverage rates |
| Observation application | Sensor designed application; band main application |
| Observation adjustment | CanSideSwing; SideSwingAngle; IFOV |

| Step | Example |
|---|---|
| Sensor Filtration | Existing DB: SrawCollection; query operation: Measures = “Remote Sensing”; isActive = “Yes”; SpectralResolutionRange = “0.1 µm–0.8 µm”…; output: Soutput1 |
| Sensor Optimization | Input DB: Soutput1; query operation: Radiationaccuracy = “”; bandMainApplication = “Multipurpose imaging \| water surface”…; output: Soutput2 |
| Sensor Dispatch | Input DB: Soutput2; query operation: CanSideSwing = “Yes”; SideSwingAngle = “15”…; output: Slastoutput |
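The three-step example above (filtration, optimization, dispatch) is essentially a chain of successive queries, each narrowing the previous result set. The following is a minimal Python sketch of that chain, not the authors' implementation. The record type, field names, units and sensor data are hypothetical; the matching rules are also assumptions: the spectral range is treated as an overlap test, “Multipurpose imaging | water surface” as “either application”, and SideSwingAngle = “15” as “at least 15 degrees”.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SensorRecord:
    """Hypothetical flattened capability record; fields mirror elements in the table above."""
    name: str
    measures: str                           # observation principle: Measures
    is_active: bool                         # observation principle: isActive
    spectral_range_um: tuple[float, float]  # observation range, (min, max) in micrometres
    band_main_applications: set[str] = field(default_factory=set)  # observation application
    can_side_swing: bool = False            # observation adjustment: CanSideSwing
    side_swing_angle_deg: float = 0.0       # observation adjustment: SideSwingAngle


def sensor_filtration(sensors, measures, is_active, spectral_range_um):
    """Step 1: keep sensors whose principle matches and whose spectral range overlaps the request."""
    lo, hi = spectral_range_um
    return [
        s for s in sensors
        if s.measures == measures
        and s.is_active == is_active
        and s.spectral_range_um[0] <= hi
        and s.spectral_range_um[1] >= lo
    ]


def sensor_optimization(sensors, wanted_applications):
    """Step 2: keep sensors listing at least one of the wanted band applications."""
    return [s for s in sensors if s.band_main_applications & wanted_applications]


def sensor_dispatch(sensors, min_side_swing_deg):
    """Step 3: keep sensors that can side-swing by at least the requested angle."""
    return [
        s for s in sensors
        if s.can_side_swing and s.side_swing_angle_deg >= min_side_swing_deg
    ]


# Hypothetical sensor collection standing in for SrawCollection.
s_raw_collection = [
    SensorRecord("S1", "Remote Sensing", True, (0.4, 0.9), {"water surface"}, True, 30.0),
    SensorRecord("S2", "Remote Sensing", False, (0.4, 0.9), {"water surface"}, True, 30.0),
    SensorRecord("S3", "Remote Sensing", True, (0.45, 0.9), {"vegetation"}, True, 30.0),
    SensorRecord("S4", "Remote Sensing", True, (0.5, 0.7), {"water surface"}, False),
]

s_output1 = sensor_filtration(s_raw_collection, "Remote Sensing", True, (0.1, 0.8))
s_output2 = sensor_optimization(s_output1, {"Multipurpose imaging", "water surface"})
s_last_output = sensor_dispatch(s_output2, 15.0)

print([s.name for s in s_last_output])  # ['S1']
```

S2 drops out at filtration (passive), S3 at optimization (no matching application) and S4 at dispatch (no side swing). Keeping each step as a separate function mirrors the way each step's output becomes the next step's input DB.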

2.2. Based on Existing Related Metadata

| Features | ISO 19130 | NGA CSM | SSNO | SensorML 1.0 Discovery Profile | StarFL |
|---|---|---|---|---|---|
| Main aspects | | | | | |
| Observation principle | ✕ | ✕ | ○ | ○ | ○ |
| Observation range | ○ | ○ | √ | ○ | ○ |
| Observation cycle | ✕ | ✕ | ✕ | ✕ | ✕ |
| Observation quality | ○ | ○ | √ | ✕ | ○ |
| Observation application | ✕ | ✕ | ○ | ✕ | ○ |
| Observation adjustment | √ | √ | ✕ | ✕ | ✕ |
| Focus | Imagery Sensor Model | Community Sensor Model | Semantic Sensor Web | Restricting the sensor description | Restricting the sensor description |
| Usage | Geopositioning of imagery data | Geopositioning implementation for each imagery sensor | Linked sensor data | Sensor discovery | Sensor discovery |
| Encoding schema | N/A | N/A | OWL | XML | UML |

2.3. Use of EO Sensor Observation Discovery

- Sensor observation information representation elements

- Sensor observation information description model

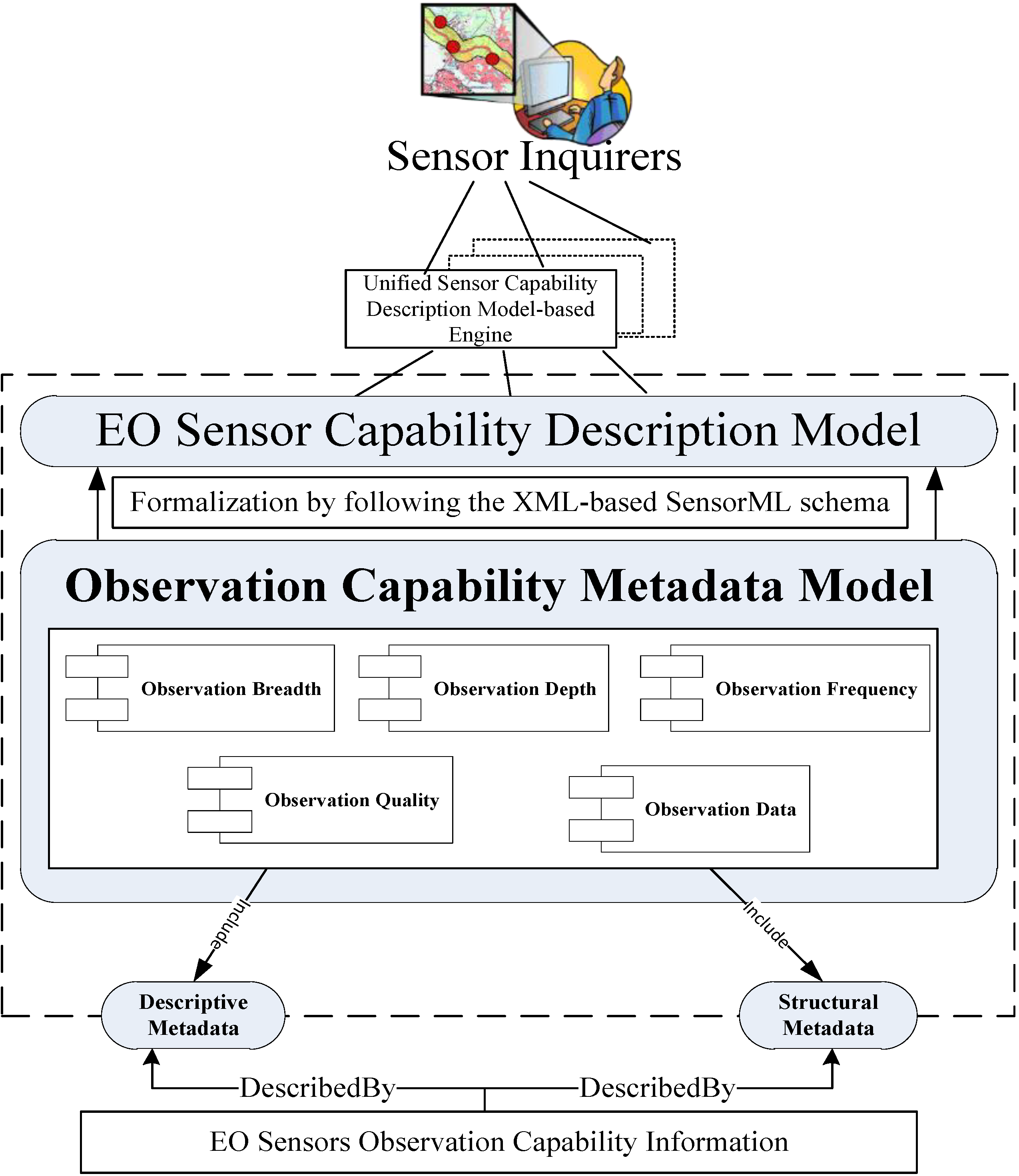

3. Metadata Model Framework

3.1. Architecture and Sub-Modules

- (1) Comprehensive and non-redundant representation: This principle aims to describe the observation capability information of EO sensors with the least possible complexity. The representation does not attempt to capture every detail of an EO sensor's observation capability; it covers only the common and important facets required for accurate discovery and collaborative observation.

- (2) Geo-event-centric reflection: This principle requires that the temporal, spatial and thematic facets of observation be included, because these facets are the key elements that reflect the observation requirements of a geo-event.

- (3) Extensibility: This principle maximizes reusability while allowing extensions that satisfy the more specific requirements of individual communities.

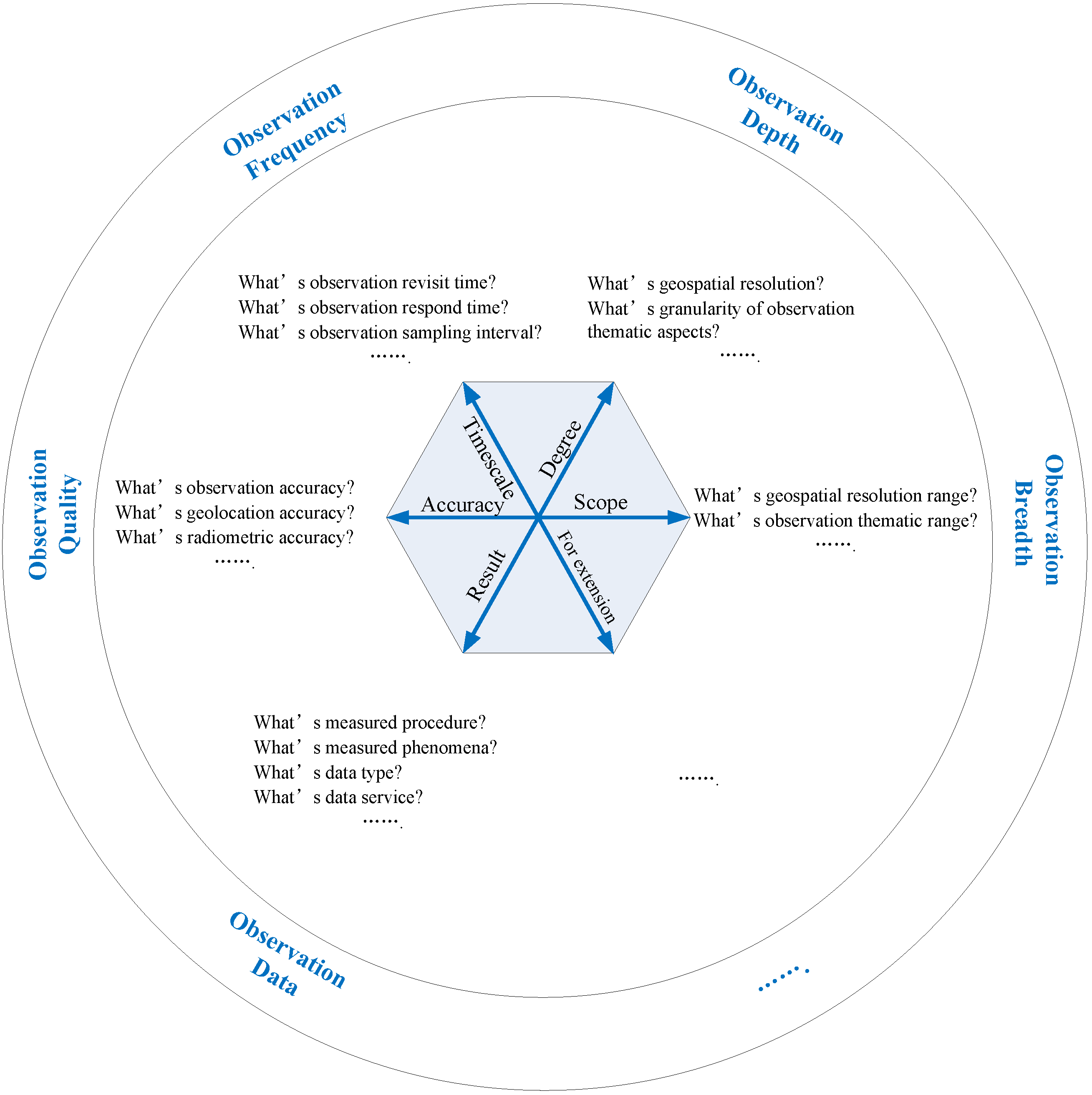

- (1) ObservationBreadth is derived from the scope dimension, which addresses the horizontal scale of observation. This module contains the geospatial and thematic observation range parameters (e.g., ground resolution range, band categories and spectral range); together, its elements determine the observation range.

- (2) ObservationDepth is derived from the degree dimension, which addresses the vertical scale of sensor observation. All elements that represent the depth of observation can be included in this module. Whereas the elements in ObservationBreadth describe the observation range, the elements in ObservationDepth capture the fine-grained spatial and thematic aspects of observation (e.g., ground resolution, specific band type and band-associated application) and thus determine the observation degree.

- (3) ObservationFrequency is derived from the timescale dimension, because evaluating the time efficiency of sensor observation is vital. This module focuses on observation time.

- (4) ObservationQuality is derived from the accuracy dimension. Elements that represent the quality of observation belong in this module, which determines the quality of sensor observation.

- (5) ObservationData is derived from the observation result dimension. EO sensors are used to perform particular observation tasks, and the observation data they deliver are used in subsequent warning, emergency analysis or decision-making; ObservationData is therefore an essential module.
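To make the five-module decomposition concrete, here is a minimal Python sketch that models each module as a small dataclass and composes them into one capability record. It is illustrative only: the field names are drawn loosely from the elements named in this paper (e.g., ground resolution range, revisit period, GeolocationAccuracy, ObservedProperty), while the units, the two helper methods and the sample values are hypothetical assumptions rather than part of the proposed model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ObservationBreadth:
    """Scope dimension: observation range (horizontal scale)."""
    ground_resolution_range_m: tuple[float, float]  # (finest, coarsest), metres assumed
    band_categories: list[str] = field(default_factory=list)
    spectral_range_um: Optional[tuple[float, float]] = None


@dataclass
class ObservationDepth:
    """Degree dimension: fine-grained spatial and thematic detail (vertical scale)."""
    ground_resolution_m: float
    band_applications: dict[str, str] = field(default_factory=dict)  # band type -> application


@dataclass
class ObservationFrequency:
    """Timescale dimension: observation time."""
    revisit_period_days: Optional[float] = None
    sample_interval_s: Optional[float] = None


@dataclass
class ObservationQuality:
    """Accuracy dimension."""
    geolocation_accuracy_m: Optional[float] = None
    radiometric_accuracy: Optional[float] = None


@dataclass
class ObservationData:
    """Observation result dimension."""
    observed_property: str
    observed_feature: Optional[str] = None


@dataclass
class ObservationCapability:
    """One capability description assembled from the five modules."""
    sensor_id: str
    breadth: ObservationBreadth
    depth: ObservationDepth
    frequency: ObservationFrequency
    quality: ObservationQuality
    data: ObservationData

    def covers_resolution(self, required_m: float) -> bool:
        """Breadth question: does the requested ground resolution fall inside the range?"""
        finest, coarsest = self.breadth.ground_resolution_range_m
        return finest <= required_m <= coarsest

    def revisits_within(self, days: float) -> bool:
        """Frequency question: is the revisit period known and no longer than `days`?"""
        period = self.frequency.revisit_period_days
        return period is not None and period <= days


cap = ObservationCapability(
    sensor_id="hypothetical-sensor-1",
    breadth=ObservationBreadth((15.0, 90.0), ["visible", "thermal infrared"], (0.45, 12.5)),
    depth=ObservationDepth(30.0, {"near infrared": "vegetation"}),
    frequency=ObservationFrequency(revisit_period_days=16.0),
    quality=ObservationQuality(geolocation_accuracy_m=50.0),
    data=ObservationData(observed_property="surface reflectance"),
)
print(cap.covers_resolution(30.0), cap.revisits_within(10.0))  # True False
```

Keeping each module as its own type means a discovery query can be routed to exactly the module that answers it (range questions to breadth, timing questions to frequency) without touching the others.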

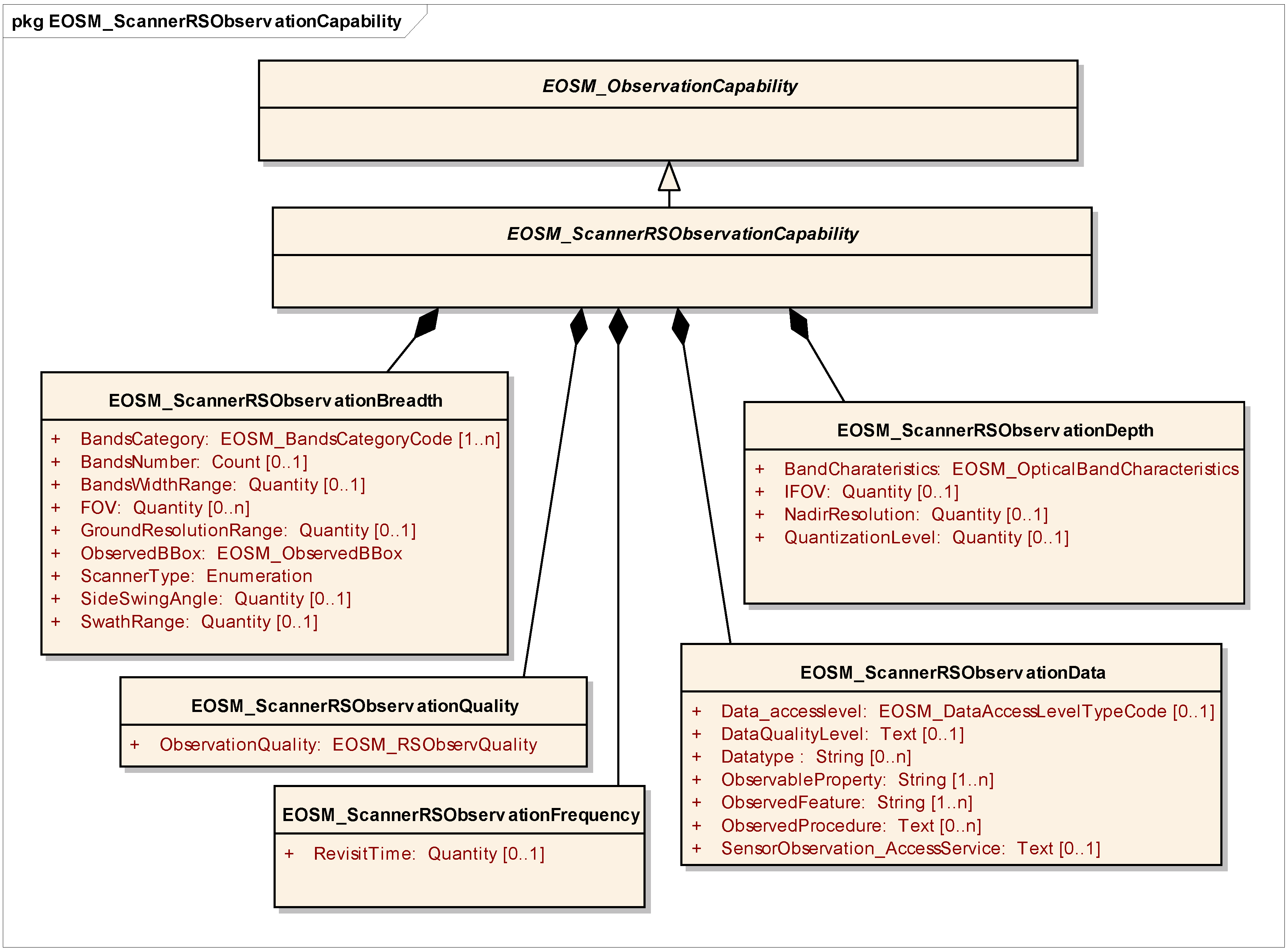

3.2. Contents of the Proposed Metadata Model

- EOSM_RSObservationCapability,

- EOSM_in-situObservationCapability.

- EOSM_RSObservationBreadth,

- EOSM_RSObservationDepth,

- EOSM_RSObservationFrequency,

- EOSM_RSObservationQuality,

- EOSM_RSObservationData.

- EOSM_in-situObservationBreadth,

- EOSM_in-situObservationDepth,

- EOSM_in-situObservationFrequency,

- EOSM_in-situObservationQuality,

- EOSM_in-situObservationData.

| Metadata Sub-Modules | Metadata Fields | Existing Metadata Standards Reused |
|---|---|---|
| EOSM_RSObservationBreadth | SwathRange | ISO 19130 |
| EOSM_RSObservationDepth | IFOV | |
| EOSM_RSObservationQuality | GeolocationAccuracy, RadiometricAccuracy | |
| Common observation capability (EOSM_ObservationCapability) | SensorIsMobile, SensorIsActive | SensorML profile for discovery |
| EOSM_RSObservationBreadth | ObservedBBox | |
| EOSM_ScannerRSObservationDepth | NadirResolution | StarFL |
| EOSM_OpticalBandCharateristic | GroundResolution, RadiationResolution | |
| EOSM_in-situObservationBreadth | ObservationResolution | |
| EOSM_in-situObservationDepth | ObservationRange | |
| EOSM_AllObservData | ObservedProcedure, ObservedFeature, ObservedProperty | ISO 19156 |
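The reuse table can also be read as a lookup from metadata field to source standard, which is handy when checking which parts of an instance document follow an existing specification. The Python sketch below encodes only the rows whose reuse cell is explicitly filled in; rows with a blank cell are deliberately left out rather than guessed, so a `None` result means "not stated in this table", not "not reused". The helper names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Optional

# Field -> reused standard, copied from the rows of the table above whose
# "Existing Metadata Standards Reused" cell is filled in; blank rows are omitted.
REUSED_FIELDS = {
    "SwathRange": "ISO 19130",
    "SensorIsMobile": "SensorML profile for discovery",
    "SensorIsActive": "SensorML profile for discovery",
    "NadirResolution": "StarFL",
    "ObservedProcedure": "ISO 19156",
    "ObservedFeature": "ISO 19156",
    "ObservedProperty": "ISO 19156",
}


def source_standard(field_name: str) -> Optional[str]:
    """Return the standard a field is listed as reused from, or None if not listed here."""
    return REUSED_FIELDS.get(field_name)


def fields_by_standard() -> dict[str, list[str]]:
    """Invert the mapping: standard -> fields, in table order."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for name, standard in REUSED_FIELDS.items():
        grouped[standard].append(name)
    return dict(grouped)


print(source_standard("NadirResolution"))  # StarFL
```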

- EOSM_FrameRSObservationDepth,

- EOSM_ScannerRSObservationDepth,

- EOSM_RadarRSObservationDepth.
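The three depth specializations above extend a common RS depth description by imaging type. A minimal Python sketch of that extension pattern follows, assuming simple class inheritance and a hypothetical `make_depth` factory keyed by sensor type. Only IFOV (listed for EOSM_RSObservationDepth) and NadirResolution (listed for EOSM_ScannerRSObservationDepth) come from the reuse table; the units are assumptions, and the frame and radar subclasses carry no extra fields because none are listed in this section.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class RSObservationDepth:
    """Common depth fields for remote sensing (RS) sensors."""
    ifov_urad: Optional[float] = None  # IFOV; microradians assumed


@dataclass
class FrameRSObservationDepth(RSObservationDepth):
    """Frame sensors; type-specific fields are not listed in this section."""


@dataclass
class ScannerRSObservationDepth(RSObservationDepth):
    """Scanner sensors add a nadir resolution."""
    nadir_resolution_m: Optional[float] = None  # NadirResolution (reused from StarFL)


@dataclass
class RadarRSObservationDepth(RSObservationDepth):
    """Radar sensors; type-specific fields are not listed in this section."""


_DEPTH_CLASSES = {
    "frame": FrameRSObservationDepth,
    "scanner": ScannerRSObservationDepth,
    "radar": RadarRSObservationDepth,
}


def make_depth(sensor_type: str, **fields) -> RSObservationDepth:
    """Pick the depth specialization for an RS sensor type (hypothetical helper)."""
    try:
        cls = _DEPTH_CLASSES[sensor_type.lower()]
    except KeyError:
        raise ValueError(f"unknown RS sensor type: {sensor_type!r}") from None
    return cls(**fields)


depth = make_depth("Scanner", ifov_urad=42.5, nadir_resolution_m=30.0)
print(type(depth).__name__, depth.nadir_resolution_m)  # ScannerRSObservationDepth 30.0
```

Because every specialization is still an `RSObservationDepth`, code that only needs the shared fields can treat all three uniformly, which is the reuse-plus-extension behavior the extensibility principle asks for.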

4. Instances and Applications

4.1. Metadata Instances for Diverse EO Sensors

- EOSM_ScannerRSObservationCapability,

- EOSM_RadarRSObservationCapability,

- EOSM_in-situObservationCapability.

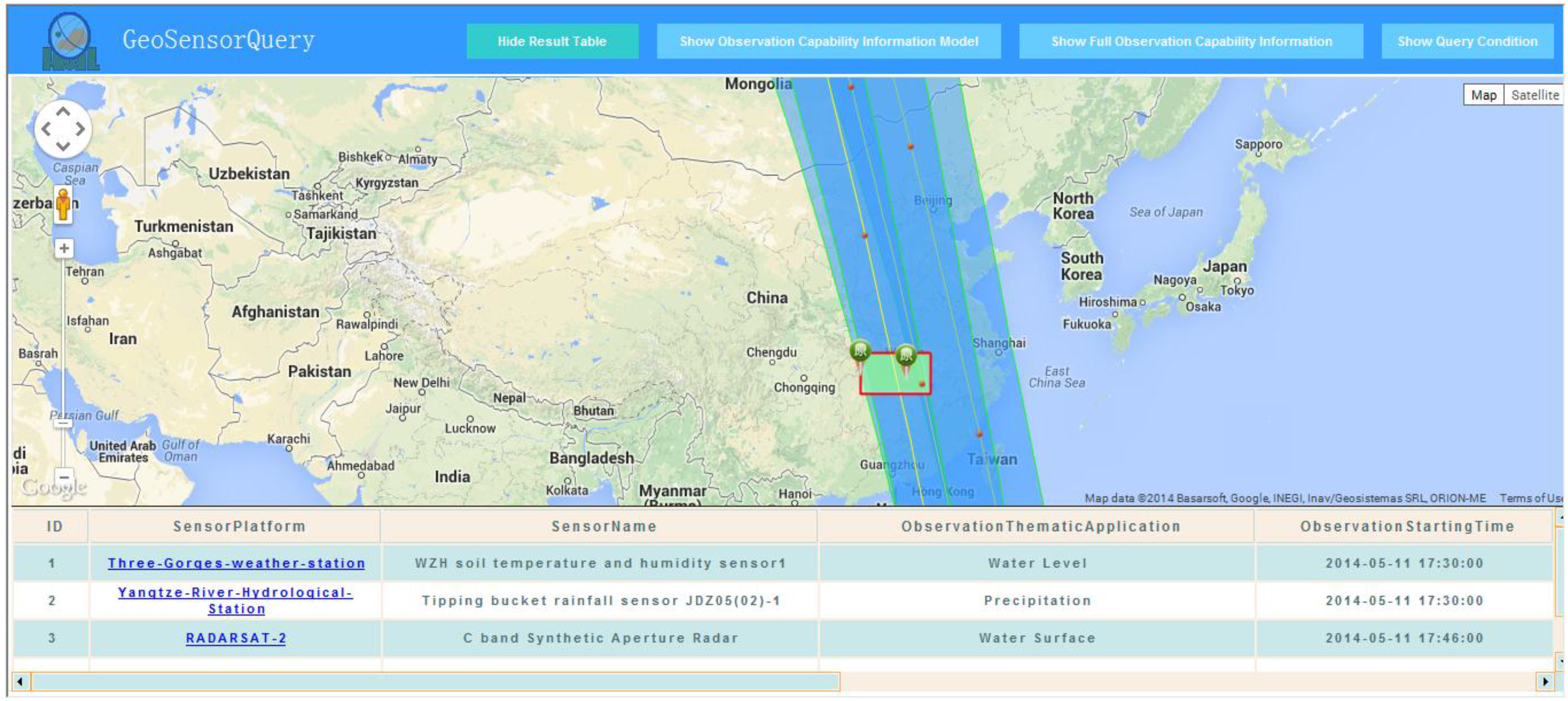

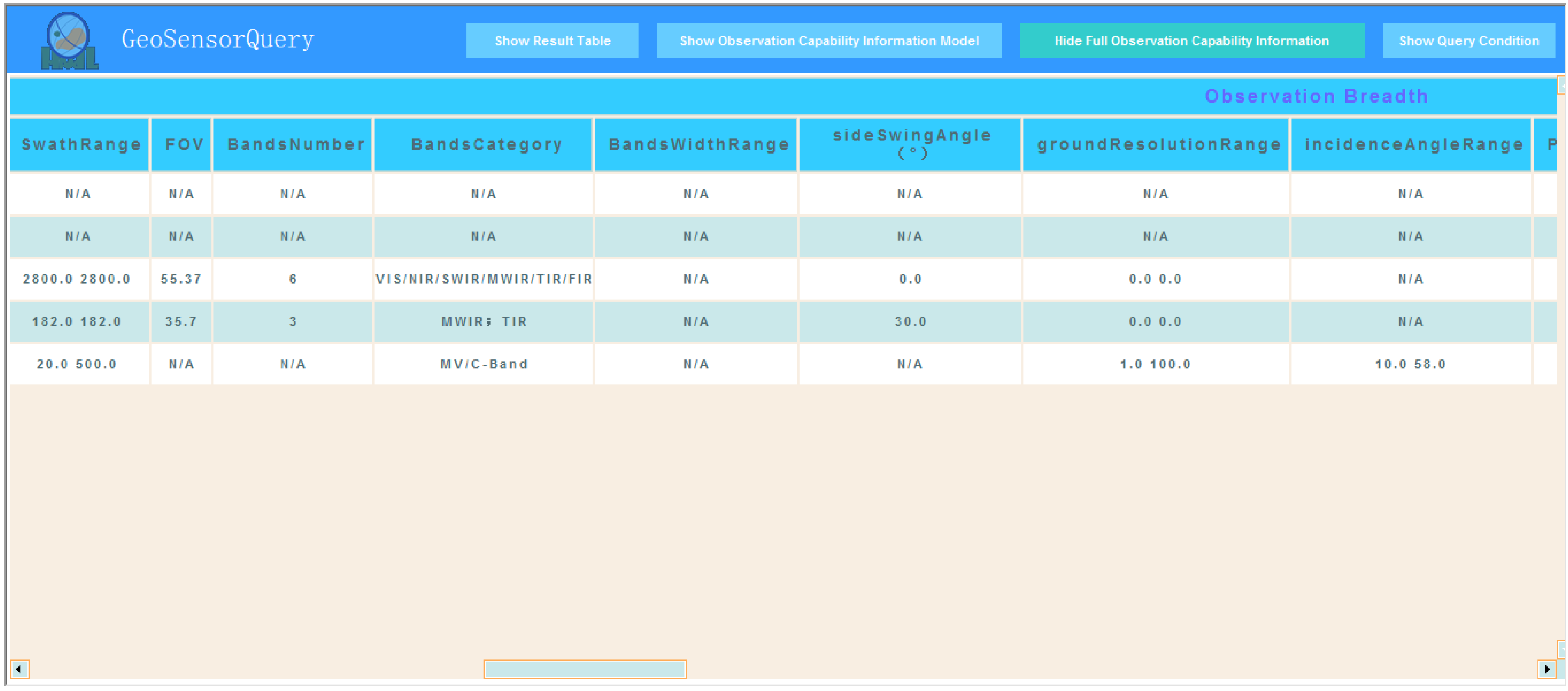

4.2. Applications in Discovery

5. Discussions

5.1. Comprehensive and Extensible Metadata Model

5.2. Support for the Current Sensor Registry/Discovery Service

5.3. Satisfaction of Efficient Discovery and Collaborative Planning Scenarios

5.4. Support for the Formulation of the SensorML 2.0 Profile for Describing Observation Capabilities

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Hu, C.; Guan, Q.; Chen, N.; Li, J.; Zhong, X.; Han, Y. An Observation Capability Metadata Model for EO Sensor Discovery in Sensor Web Enablement Environments. Remote Sens. 2014, 6, 10546-10570. https://doi.org/10.3390/rs61110546