In this section, we first present two novel definitions, namely the semantic geospatial graph and the skeleton graph. The semantic geospatial graph helps us address the challenge of sparsely connected nodes by injecting semantic edges. The skeleton graph makes the model tolerant of errors introduced by positioning devices and allows us to focus on the primary structure of the geospatial graph. Next, we propose a graph contrastive learning method that learns a representation for each of the two graphs. Finally, we aggregate the two representations to obtain the final representation.

4.1. Data Preparation

The geospatial graph alone does not exploit the rich semantic information contained in the node features and the user activity set. To incorporate such semantic information, we construct a semantic geospatial graph as follows.

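Definition 3 (Semantic Relationship). Given two nodes $v_i$ and $v_j$ from the geospatial graph, we say $v_i$ is semantically related to $v_j$ if either (1) the similarity between their features $x_i$ and $x_j$ is larger than a threshold, i.e., $\mathrm{sim}(x_i, x_j) > \gamma$, where $\gamma$ is the threshold, or (2) there exists a user $o$ who has visited both $v_i$ and $v_j$, i.e., both nodes appear in the activity record of $o$ in the user activity set.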

With the semantic relationship defined, we are ready to introduce the semantic geospatial graph.

Definition 4 (Semantic Geospatial Graph). Given a geospatial graph $G$ with node set $V$ and edge set $E$, a semantic geospatial graph is denoted as $G_s = (V, E, E_s)$, where $V$ and $E$ are the same sets of nodes and edges as in $G$, and $E_s$ is the set of semantic edges: two nodes $v_i$ and $v_j$ are connected by a semantic edge, i.e., $(v_i, v_j) \in E_s$, if $v_i$ and $v_j$ are semantically related. We refer to the edges in $E$ as structural edges and the edges in $E_s$ as semantic edges.

Compared with a geospatial graph, a semantic geospatial graph contains many additional semantic edges. As a result, a node that is sparsely connected in the geospatial graph is likely to be connected to other nodes through semantic edges, which addresses the challenge of sparse connectivity.
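Definitions 3 and 4 translate directly into an edge-construction routine. The Python sketch below is illustrative only: it assumes cosine similarity for $\mathrm{sim}(\cdot, \cdot)$, node features stored in a dictionary, and the user activity set given as a mapping from each user to the set of nodes that user visited. The function names are hypothetical.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def semantic_edges(features, visits, gamma):
    """Return the semantic edge set E_s as pairs (i, j) with i < j.

    features: dict node -> feature vector
    visits:   dict user -> set of visited nodes (the user activity set)
    gamma:    similarity threshold of Definition 3
    """
    edges = set()
    # Condition (1): feature similarity larger than gamma.
    for i, j in combinations(sorted(features), 2):
        if cosine(features[i], features[j]) > gamma:
            edges.add((i, j))
    # Condition (2): at least one user visited both nodes.
    for nodes in visits.values():
        for i, j in combinations(sorted(nodes), 2):
            edges.add((i, j))
    return edges
```

For example, with features `{0: [1.0, 0.0], 1: [0.9, 0.1], 2: [0.0, 1.0], 3: [0.1, 0.9]}`, a single user who visited nodes 0 and 3, and $\gamma = 0.95$, the sketch returns `{(0, 1), (0, 3), (2, 3)}`: two edges come from feature similarity and one from co-visitation. The pairwise similarity loop is quadratic in the number of nodes, so large graphs would call for a nearest-neighbor index instead.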

As positioning devices may introduce errors, we next propose the skeleton graph, which preserves only the primary structure of the graph and disregards fine-grained details.

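Definition 5 (Skeleton Graph). Given a semantic geospatial graph $G_s = (V, E, E_s)$, a skeleton graph is denoted as $\hat{G} = (\hat{V}, \hat{E}, \hat{E}_s)$, where each node $\hat{v}_k \in \hat{V}$ corresponds to a cluster $C_k \subseteq V$ in which the distance between each node pair $v_i, v_j \in C_k$ is less than a given threshold $\delta$, $\hat{E}$ is the set of structural edges and $\hat{E}_s$ is the set of semantic edges. Two nodes $\hat{v}_k$ and $\hat{v}_l$ are connected by a structural (respectively, semantic) edge if there exist $v_i \in C_k$ and $v_j \in C_l$ such that $v_i$ and $v_j$ are connected by a structural (respectively, semantic) edge, i.e., $(v_i, v_j) \in E$ (respectively, $(v_i, v_j) \in E_s$).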

To construct the skeleton graph efficiently, we adopt the following strategy. First, we impose a grid on the space in which each cell is a square of side length $\delta/\sqrt{2}$, where $\delta$ is the distance threshold of Definition 5. This guarantees that the distance between any two nodes in a cell is no larger than $\delta$, the length of the cell diagonal. Second, for each cell, we merge the set $C_k$ of nodes inside it into a new node $\hat{v}_k$ of the skeleton graph. Finally, given two nodes $\hat{v}_k$ and $\hat{v}_l$ of the skeleton graph with corresponding node sets $C_k$ and $C_l$, if there exist $v_i \in C_k$ and $v_j \in C_l$ such that $(v_i, v_j)$ is a structural (respectively, semantic) edge in the semantic geospatial graph, we add a structural (respectively, semantic) edge between $\hat{v}_k$ and $\hat{v}_l$ in the skeleton graph. This completes the construction of the skeleton graph.
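The grid strategy avoids pairwise distance checks, because each node is assigned to its cell in constant time. A minimal Python sketch follows. It assumes planar coordinates in the same unit as $\delta$ (latitude/longitude data would need to be projected first) and assumes that edges whose endpoints fall into the same cell are dropped rather than kept as self-loops; `build_skeleton` is a hypothetical name.

```python
import math


def build_skeleton(coords, edges, semantic_edges, delta):
    """Grid-based skeleton graph construction (sketch).

    coords: dict node -> (x, y) planar coordinates (assumed metric)
    edges, semantic_edges: iterables of (i, j) node pairs
    delta: distance threshold; a cell of side delta / sqrt(2) has diagonal delta
    """
    side = delta / math.sqrt(2)
    # Assign every node to the grid cell containing it; each cell is a cluster C_k.
    cell_of = {v: (math.floor(x / side), math.floor(y / side))
               for v, (x, y) in coords.items()}
    clusters = {}
    for v, cell in cell_of.items():
        clusters.setdefault(cell, set()).add(v)

    def lift(pairs):
        # Two skeleton nodes are linked if any of their member nodes are linked.
        out = set()
        for i, j in pairs:
            a, b = cell_of[i], cell_of[j]
            if a != b:  # assumption: intra-cell edges become no self-loops
                out.add((min(a, b), max(a, b)))
        return out

    return clusters, lift(edges), lift(semantic_edges)
```

With $\delta = 1$, two nodes at $(0.1, 0.1)$ and $(0.2, 0.3)$ share cell `(0, 0)` and merge into one skeleton node, while a structural edge from that cell to a node at $(5.0, 0.1)$ becomes the skeleton edge `((0, 0), (7, 0))`.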

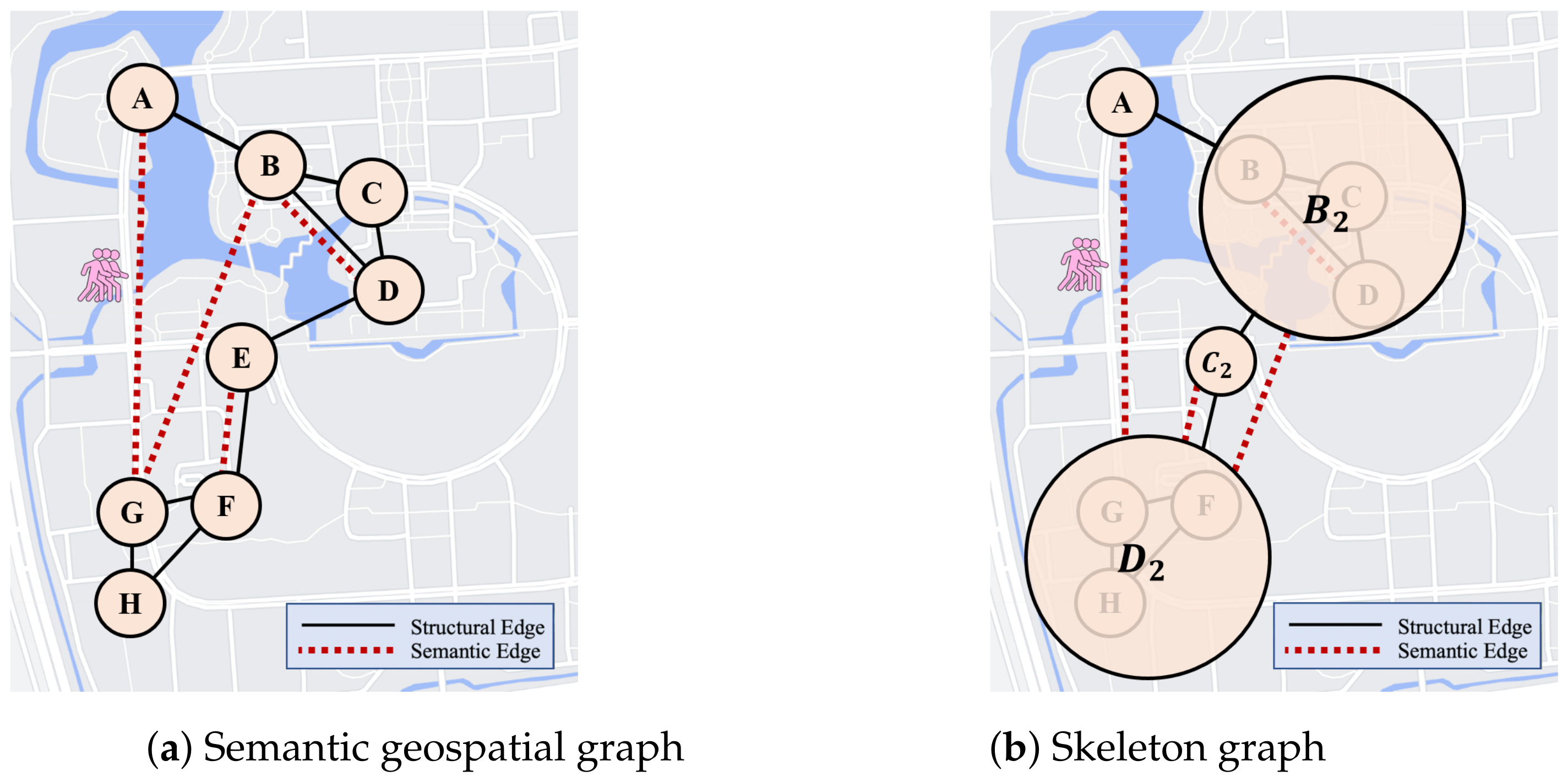

Figure 2 shows an example of the semantic geospatial graph and the skeleton graph. Given a geospatial graph $G$, we inject semantic edges (red dashed lines) into $G$ to generate a semantic geospatial graph $G_s$ (Figure 2a). To construct the skeleton graph $\hat{G}$, we group the nodes in Figure 2a into four clusters based on their location distance, and each cluster corresponds to a node in $\hat{G}$. Following the definition of the skeleton graph, three pairs of skeleton nodes are connected by structural edges and three pairs are connected by semantic edges. Given a node $v$, we refer to the set of nodes connected to $v$ via structural edges as its structural neighbors, and the set of nodes connected to $v$ via semantic edges as its semantic neighbors.

In summary, the semantic geospatial graph addresses the challenge of sparsely connected nodes by injecting semantic relations, while the skeleton graph tolerates errors introduced by positioning devices and allows us to focus on the primary structure of the geospatial graph.

4.2. Solution Overview

The high-level idea of our solution is as follows. For a given geospatial graph, we construct a semantic geospatial graph $G_s$ and a skeleton graph $\hat{G}$. Then, we use a graph contrastive learning method named SE-GCL to learn the representation matrices $H$ and $\hat{H}$ for $G_s$ and $\hat{G}$, respectively. Once we have learned the two representations, we aggregate them to obtain the final node representations.

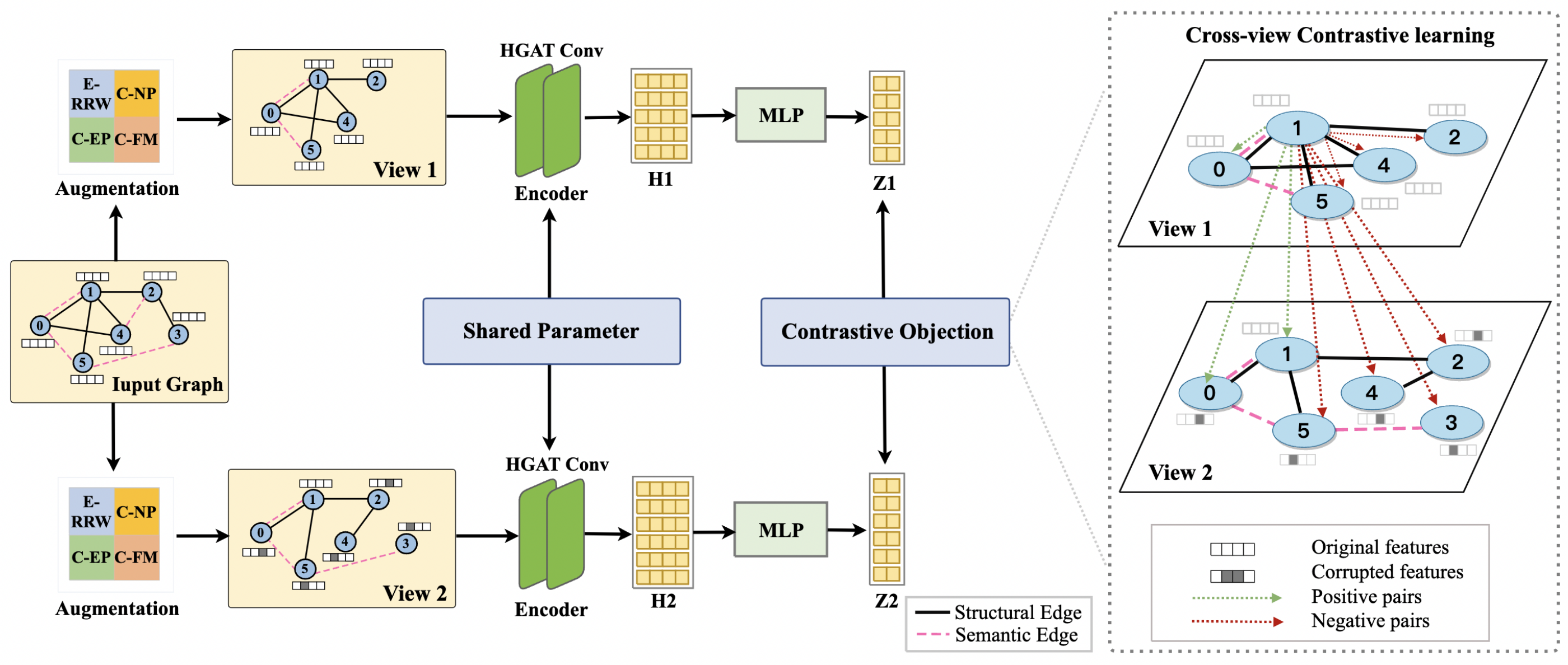

The framework of the graph contrastive learning model SE-GCL is shown in Figure 3. For a given graph (either the semantic geospatial graph or the skeleton graph), SE-GCL generates two views using data augmentation functions. The generated views are then fed into HGAT, our heterogeneous graph attention network, which captures their structural and semantic information. The outputs of HGAT are passed to a multi-layer perceptron (MLP) to generate node representations. We use $h_i$ to denote the representation of node $v_i$ in the semantic geospatial graph, and $\hat{h}_k$ to denote the representation of node $\hat{v}_k$ in the skeleton graph. For a node $v_i$ in the geospatial graph, its final representation is obtained by aggregating $h_i$ and $\hat{h}_k$, where $\hat{v}_k$ is the node in the skeleton graph whose node cluster $C_k$ contains $v_i$.

The learning process is depicted on the right side of Figure 3. For each node in the views, SE-GCL aims to pull its positive samples closer and push its negative samples away. In this paper, we define the same node in the other view and the node's semantic neighbors as positive samples. All other nodes are regarded as negative samples.

In the remainder of this section, we introduce the graph contrastive learning method by elaborating on data augmentation (Section 4.3), HGAT (Section 4.4) and the learning process (Section 4.5) in turn. As the learning processes of the semantic geospatial graph and the skeleton graph are independent, for ease of illustration, we abuse the notation $G = (V, E, E_s)$ to denote both graphs, where $V$ is the set of nodes, $E$ is the set of structural edges and $E_s$ is the set of semantic edges.

4.3. Data Augmentation

Randomly perturbing nodes and edges may destroy critical information in the graph. Therefore, we propose to augment data by considering the importance of each node. A natural way to measure a node's importance is to calculate its centrality [11,28,29]. However, most existing centrality measures are designed for homogeneous graphs. Since there are two types of edges in the semantic geospatial graph, it is inappropriate to adopt existing centrality measures directly. To address this problem, we define a novel mixed node centrality measure and propose four data augmentation methods based on it.

4.3.1. Mixed Centrality Measure

To design a good mixed centrality measure, we propose three semantic-aware measures, namely D-ClusterRank, D-DIL and D-CC, which we elaborate on in turn.

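D-ClusterRank measure. ClusterRank [31] is a centrality measure based on the local aggregation (clustering) coefficient:

$$s_i = f(c_i) \sum_{v_j \in \Gamma_i} \left(k_j^{\mathrm{out}} + 1\right), \tag{2}$$

where $c_i$ represents the aggregation coefficient of the target node $v_i$, $k_j^{\mathrm{out}}$ represents the out-degree of the neighbor $v_j$ and $\Gamma_i$ represents the neighborhood of $v_i$. $f(\cdot)$ is a nonlinear, negatively correlated function. Equation (3) gives the aggregation coefficient $c_i$ of node $v_i$:

$$c_i = \frac{t_i}{k_i (k_i - 1)/2}, \tag{3}$$

where $t_i$ represents the number of triangles that $v_i$ forms with its neighbors, $k_i$ represents the degree of $v_i$, and $k_i(k_i - 1)/2$ represents the maximum possible number of such triangles, attained when $v_i$ and its neighbors form a complete graph.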
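For reference, Equations (2) and (3) can be computed as below. This sketch treats the graph as undirected, so the out-degree equals the degree, and uses $f(c) = 10^{-c}$, the choice made in the original ClusterRank paper; any other decreasing function would also match the description above. `clusterrank` is a hypothetical name.

```python
from itertools import combinations


def clusterrank(adj):
    """ClusterRank for an undirected graph, where out-degree equals degree.

    adj: dict node -> set of neighbours.
    Uses f(c) = 10 ** (-c) as the negatively correlated function.
    """
    scores = {}
    for i, nbrs in adj.items():
        k = len(nbrs)
        # t_i: number of linked neighbour pairs, i.e. triangles through v_i.
        t = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
        c = t / (k * (k - 1) / 2) if k > 1 else 0.0
        scores[i] = 10 ** (-c) * sum(len(adj[j]) + 1 for j in nbrs)
    return scores
```

On a triangle 0-1-2 with a leaf 3 attached to node 0, node 1 scores $10^{-1} \cdot 7 = 0.7$ because its two neighbors are linked ($c_1 = 1$), whereas the leaf keeps the full sum $4$ since $c_3 = 0$.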

ClusterRank uses degree centrality to measure the influence of each neighbor, treating all neighbor nodes equally. However, different nodes in the graph have different significance. Moreover, we need to consider two types of edges. Hence, it is inappropriate to use ClusterRank directly. To tackle this problem, we improve ClusterRank as follows.

First, a well-known approach for capturing the significance of different nodes is PageRank [32], which ranks each node by the probability that a random walk reaches it. To distinguish semantic and structural edges, we propose an edge-type-aware variant of PageRank, Equation (4), in which $n_{ij}$ represents the number of edges connecting $v_i$ and $v_j$, $m_{ij}$ is the number of edge types between $v_i$ and $v_j$, $d$ is the damping factor, $N$ is the total number of nodes, $PR(v_j)$ represents the probability that a random walk reaches $v_j$ and $k_j$ is the degree of node $v_j$.

Equation (4) evaluates the significance of nodes while taking into account the difference between semantic and structural edges. We next propose the improved D-ClusterRank. Specifically, we replace the node centrality term in Equation (2) with the significance measure in Equation (4), with the sum taken over $\Gamma_i^{\mathrm{st}}$, the structural neighborhood of $v_i$.
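Equation (4) and the D-ClusterRank formula are not reproduced in this text, so the following is only a hedged illustration of the idea. It assumes that the random-walk transition weight between two nodes equals the number of edge types linking them (a pair joined by both a structural and a semantic edge is twice as likely to be traversed), and it computes D-ClusterRank as $f(c_i)$ times the sum of PageRank values over the structural neighbors, with $f(c) = 10^{-c}$ and $c_i$ computed on structural edges only. Both graphs are dictionaries over the same node set; all names are hypothetical.

```python
from itertools import combinations


def typed_pagerank(structural, semantic, d=0.85, iters=100):
    """PageRank on the union of both edge types (illustrative sketch).

    structural, semantic: dict node -> set of neighbours (undirected), same node set.
    ASSUMPTION: the transition weight between v_i and v_j is the number of edge
    types linking them (1 or 2); this is not Equation (4) itself.
    """
    nodes = list(structural)
    n = len(nodes)
    weight = {i: {} for i in nodes}
    for layer in (structural, semantic):
        for i in nodes:
            for j in layer.get(i, ()):
                weight[i][j] = weight[i].get(j, 0) + 1
    pr = {i: 1.0 / n for i in nodes}
    for _ in range(iters):
        new = {i: (1 - d) / n for i in nodes}
        for j in nodes:
            out = sum(weight[j].values())
            if out == 0:
                continue  # dangling node: its rank mass is dropped in this sketch
            for i, w_ji in weight[j].items():
                new[i] += d * pr[j] * w_ji / out
        pr = new
    return pr


def d_clusterrank(structural, semantic, d=0.85):
    """f(c_i) times the summed PageRank of the structural neighbours (sketch)."""
    pr = typed_pagerank(structural, semantic, d)
    scores = {}
    for i, nbrs in structural.items():
        k = len(nbrs)
        # Clustering coefficient on structural edges only (assumption).
        t = sum(1 for a, b in combinations(nbrs, 2) if b in structural[a])
        c = t / (k * (k - 1) / 2) if k > 1 else 0.0
        scores[i] = 10 ** (-c) * sum(pr[j] for j in nbrs)
    return scores
```

On a three-node path 0-1-2 with one extra semantic edge between 0 and 2, the weighted walk is symmetric, every PageRank value is $1/3$, and the middle node's D-ClusterRank is $2/3$.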

D-DIL measure. DIL [33] suggests that nodes connected to important edges are likely to be important nodes. It computes the weighted sum of a node's degree and the importance of all its connected edges, where $k_i$ is the degree of node $v_i$, $W_{ij}$ is the weight of the edge importance and $I(e_{ij})$ is the importance of edge $e_{ij}$. The importance $I(e_{ij})$ is computed from three quantities: $p$, the number of triangles that the edge $e_{ij}$ participates in; $\lambda$, a weight coefficient; and $U$, which reflects the connectivity of edge $e_{ij}$. The more triangles $e_{ij}$ forms, the less important $e_{ij}$ is.
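A sketch of the original DIL score, following our reading of the formulation in [33]: $L_i = k_i + \sum_{v_j \in \Gamma_i} I(e_{ij}) \cdot W_{ij}$ with $I(e_{ij}) = U/\lambda$, $U = (k_i - p - 1)(k_j - p - 1)$, $\lambda = p/2 + 1$ and $W_{ij} = (k_i - 1)/(k_i + k_j - 2)$. These exact expressions are assumptions to be checked against [33]; D-DIL would additionally split the degree and triangle counts by edge type. `dil` is a hypothetical name.

```python
def dil(adj):
    """DIL centrality (sketch of our reading of [33]).

    adj: dict node -> set of neighbours (undirected, simple graph).
    """
    scores = {}
    for i, nbrs in adj.items():
        k_i = len(nbrs)
        total = float(k_i)  # degree term
        for j in nbrs:
            k_j = len(adj[j])
            p = len(nbrs & adj[j])  # common neighbours = triangles containing e_ij
            u = (k_i - p - 1) * (k_j - p - 1)  # connectivity of the edge
            lam = p / 2 + 1  # more triangles -> smaller importance
            denom = k_i + k_j - 2
            weight = (k_i - 1) / denom if denom else 0.0
            total += (u / lam) * weight
        scores[i] = total
    return scores
```

On the path 0-1-2-3, the inner nodes score 2.5 and the end nodes 1.0: an edge ending at a leaf contributes nothing because $U = 0$.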

Similar to ClusterRank, DIL does not distinguish semantic and structural edges, which makes it inappropriate for the semantic geospatial graph and the skeleton graph. To tackle this problem, we propose D-DIL, which considers both types of edges. In D-DIL, $k_i^{\mathrm{st}}$ and $k_i^{\mathrm{se}}$ are the numbers of structural and semantic edges connected to $v_i$, $n_{ij}$ represents the number of edges between $v_i$ and $v_j$ and $p_{ij}$ is the number of triangles formed by edges of the same type as $e_{ij}$.

D-CC measure. Closeness Centrality (CC) [34] is based on the average shortest distance from a node to all other nodes:

$$CC(v_i) = \frac{N - 1}{\sum_{v_j \neq v_i} \mathrm{dist}(v_i, v_j)},$$

where $\mathrm{dist}(v_i, v_j)$ is the shortest distance between node $v_i$ and node $v_j$. Note that to compute the shortest distance, each edge on the shortest path has unit weight. As discussed for D-ClusterRank and D-DIL, the CC measure considers neither the types of edges nor the importance of each edge, making it inappropriate for our problem. To address this problem, we assign each edge $(v_i, v_j)$ a weight $w_{ij}$ that depends on $n_{ij}$, the total number of edges of all types between $v_i$ and $v_j$. Intuitively, if two nodes are connected by both structural and semantic edges, their connection matters more when computing shortest distances. We then define D-CC by replacing $\mathrm{dist}(v_i, v_j)$ with w-dist$(v_i, v_j)$, the weighted distance between $v_i$ and $v_j$.
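D-CC needs shortest paths on a weighted graph, which Dijkstra's algorithm provides. The sketch below assumes $w_{ij} = 1/n_{ij}$, so a pair joined by both edge types is half as far apart, uses the standard closeness form $(N-1)/\sum_j \text{w-dist}(v_i, v_j)$ and, as a simplification, sums only over reachable nodes. `weighted_closeness` is a hypothetical name.

```python
import heapq


def weighted_closeness(adj_counts):
    """Closeness centrality on edge-type-weighted shortest paths (D-CC sketch).

    adj_counts: dict node -> dict neighbour -> n_ij, the number of edges of all
    types between the two nodes. ASSUMPTION: w_ij = 1 / n_ij.
    """
    n = len(adj_counts)
    scores = {}
    for src in adj_counts:
        dist = {src: 0.0}
        heap = [(0.0, src)]
        while heap:  # Dijkstra from src
            d, u = heapq.heappop(heap)
            if d > dist[u]:
                continue  # stale heap entry
            for v, n_uv in adj_counts[u].items():
                nd = d + 1.0 / n_uv
                if nd < dist.get(v, float("inf")):
                    dist[v] = nd
                    heapq.heappush(heap, (nd, v))
        total = sum(dist.values())  # reachable nodes only
        scores[src] = (n - 1) / total if total > 0 else 0.0
    return scores
```

In a triangle where only the pair (0, 1) is linked by both edge types, nodes 0 and 1 obtain $2/1.5 \approx 1.33$ and node 2 obtains $1.0$.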

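Mixed centrality measure. We are now ready to present the mixed centrality measure. To guarantee that the value of each of the three measures falls into the range $[0, 1]$, we apply min-max normalization to each measure $x$:

$$\tilde{x}_i = \frac{x_i - x_{\min}}{x_{\max} - x_{\min}},$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the measure, respectively. The mixed centrality measure $\mathrm{MC}_i$ of node $v_i$ is then computed from the normalized D-ClusterRank, D-DIL and D-CC values, where $\sigma(\cdot)$ is the sigmoid function and $T$ is the temperature parameter that adjusts the distribution.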
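The normalization is standard min-max scaling. How the three normalized scores enter the sigmoid is not spelled out in this text, so this sketch assumes their sum divided by the temperature $T$. Under that assumption every mixed centrality lies in $[0.5, 1)$, and a smaller $T$ spreads nodes further apart within that range. The function names are hypothetical.

```python
import math


def min_max(scores):
    """Rescale a dict of scores to [0, 1]; constant scores map to 0."""
    lo, hi = min(scores.values()), max(scores.values())
    span = hi - lo
    return {k: (v - lo) / span if span else 0.0 for k, v in scores.items()}


def mixed_centrality(cr, dil, cc, temperature=1.0):
    """ASSUMPTION: the normalised D-ClusterRank, D-DIL and D-CC are summed."""
    cr, dil, cc = min_max(cr), min_max(dil), min_max(cc)
    return {
        i: 1.0 / (1.0 + math.exp(-(cr[i] + dil[i] + cc[i]) / temperature))
        for i in cr
    }
```

A node that is minimal in all three measures gets $\sigma(0) = 0.5$, and one that is maximal in all three gets $\sigma(3) \approx 0.953$ when $T = 1$.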

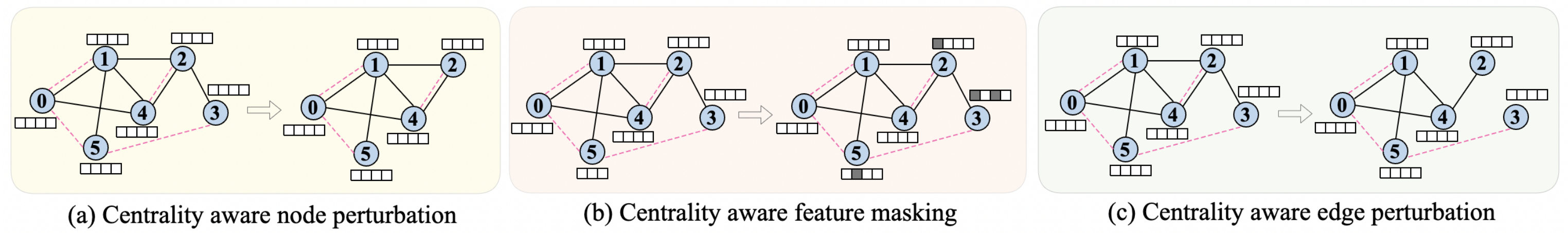

4.3.2. Augmentation Methods

The mixed centrality measure evaluates the significance of each node in the graph. Based on it, we propose four data augmentation methods that preserve important information in the graph: Enhanced Ripple Random Walker (E-RRW), Centrality-aware Node Perturbation (C-NP), Centrality-aware Feature Masking (C-FM) and Centrality-aware Edge Perturbation (C-EP). We next elaborate on the four methods in turn.

Enhanced Ripple Random Walker (E-RRW). Ripple Random Walker (RRW) [35] is a sub-graph sampling method. It solves the problems of neighbor explosion and node dependence in random walks, and further reduces resource usage and computational cost. Motivated by these advantages, we propose a novel data augmentation method, namely E-RRW. Specifically, we select the initial starting nodes based on the mixed centrality measure. Then, we generate augmented views by constructing sub-graphs with RRW.

Figure 4 shows the procedure of the E-RRW method. E-RRW generates two augmented sub-graphs from the original graph as follows. First, E-RRW selects the node with the largest mixed centrality as the initial node of the first sub-graph, denoted by $v_a$. After that, E-RRW collects the $k$-hop neighborhood of $v_a$ and calculates a score for each node $v_j$ in it from the mixed centrality $\mathrm{MC}_j$ of $v_j$ and a constant $b$. We select the node with the largest score as the initial node $v_b$ of the second sub-graph. Starting from each initial node, E-RRW randomly samples a fraction $r$ of the nodes from the unselected neighbors of the already selected nodes, where the expansion ratio $r$ is the proportion of nodes sampled from the neighbors. When $r$ is close to 0, the ripple random walk behaves like random sampling. When $r$ is close to 1, it behaves like breadth-first search. We repeat the sampling process until the number of nodes in each sub-graph reaches a predefined threshold.

Figure 4. E-RRW data augmentation method. The red nodes represent the initial nodes. The yellow nodes represent the first step. The green nodes represent the second step. The orange nodes represent the third step.


The detailed process of E-RRW is shown in Algorithm 1. First, E-RRW selects the initial nodes of the two sub-graphs (lines 1–2). Starting from the initial nodes, E-RRW expands the node sets of the two sub-graphs from the original graph $G$ (lines 3–4 and 7–12) by RRW sampling (lines 13–18). Finally, E-RRW constructs the sub-graphs from the extracted nodes (lines 5–6).

Algorithm 1: E-RRW
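The E-RRW procedure can be sketched in Python as follows. The score used to pick the second initial node combines mixed centrality with the constant $b$, but its exact form is not given in this text, so the sketch takes it as a caller-supplied function `second_score`. Rounding the sample size up with `ceil`, the fixed random seed and all function names are likewise assumptions.

```python
import math
import random


def ripple_walk(adj, start, target_size, ratio, rng):
    """Grow a node set outward from `start` (ripple random walk, sketch)."""
    selected = {start}
    while len(selected) < target_size:
        # Unselected neighbours of the already selected nodes.
        frontier = sorted({u for v in selected for u in adj[v]} - selected)
        if not frontier:
            break  # the connected component is exhausted
        k = min(max(1, math.ceil(ratio * len(frontier))),
                len(frontier), target_size - len(selected))
        selected.update(rng.sample(frontier, k))
    return selected


def e_rrw(adj, centrality, second_score, target_size, ratio=0.5, hops=2, seed=0):
    """Generate two views with E-RRW (sketch).

    second_score: hypothetical callable (node, centrality) -> score standing in
    for the score that combines mixed centrality with a constant b.
    """
    rng = random.Random(seed)
    v_a = max(adj, key=lambda v: centrality[v])  # most central node
    # k-hop neighbourhood of v_a via breadth-first expansion.
    seen, layer = {v_a}, {v_a}
    for _ in range(hops):
        layer = {u for v in layer for u in adj[v]} - seen
        seen |= layer
    candidates = (seen - {v_a}) or {v_a}
    v_b = max(candidates, key=lambda v: second_score(v, centrality))
    return (ripple_walk(adj, v_a, target_size, ratio, rng),
            ripple_walk(adj, v_b, target_size, ratio, rng))
```

On a five-node graph where node 1 has the highest centrality and node 3 is the best-scoring node within two hops, `e_rrw(adj, mc, lambda v, c: c[v], target_size=3)` returns two 3-node views seeded at nodes 1 and 3.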

E-RRW has the following advantages: (1) It preserves the important nodes of the graph after sampling. (2) With a size constraint, E-RRW generates small sub-graphs, which greatly reduces the memory and computation burden during training. (3) E-RRW ensures that the two generated sub-graphs are highly similar, which makes the learning model easier to optimize.

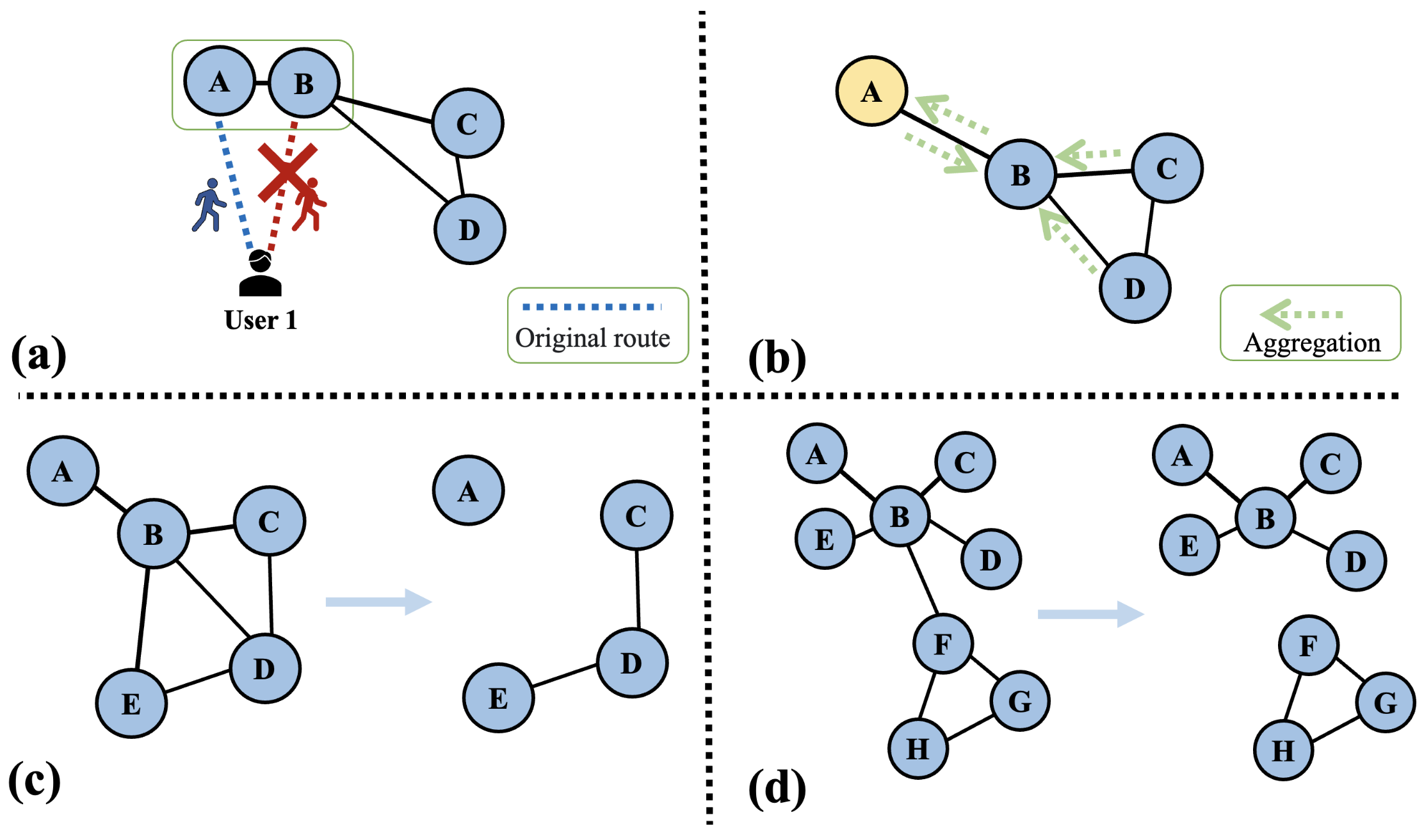

Centrality-aware node perturbation (C-NP). As shown in Figure 5a, the C-NP augmentation method deletes a fraction of the nodes in the input graph based on their mixed centrality. As nodes with a higher mixed centrality are more important, we retain them with a higher probability. Formally, we define a perturbing vector $\mathbf{m} \in \{0, 1\}^N$ whose entries follow Bernoulli distributions, where the probability that $m_i = 1$ is determined by the mixed centrality $\mathrm{MC}_i$ of node $v_i$. The C-NP augmentation method then deletes node $v_i$ if $m_i = 0$.
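A minimal C-NP sketch, assuming that node $v_i$ is kept with probability exactly equal to its mixed centrality $\mathrm{MC}_i$ (a value in $(0, 1)$ after the sigmoid) and that edges incident to a deleted node are removed with it. `c_np` is a hypothetical name.

```python
import random


def c_np(nodes, edges, centrality, seed=0):
    """Centrality-aware node perturbation (sketch).

    ASSUMPTION: m_i ~ Bernoulli(MC_i), i.e. node v_i is kept with probability
    MC_i and deleted with probability 1 - MC_i.
    """
    rng = random.Random(seed)
    kept = {v for v in nodes if rng.random() < centrality[v]}
    # An edge survives only if both of its endpoints survive.
    kept_edges = {(i, j) for i, j in edges if i in kept and j in kept}
    return kept, kept_edges
```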

Centrality-aware feature masking (C-FM). As shown in Figure 5b, the C-FM augmentation method masks a fraction of the node feature dimensions with zeros. We assume that the features of nodes with a large mixed centrality are important, and define the masking probability of features based on the mixed centrality measure. Formally, we sample a random matrix $\mathbf{R} \in \{0, 1\}^{N \times M}$, where $M$ is the feature dimension and $N$ is the number of nodes. Each element $r_{ij}$ of $\mathbf{R}$ is drawn from a Bernoulli distribution whose parameter is determined by the mixed centrality $\mathrm{MC}_i$ of node $v_i$. The C-FM augmentation method masks the feature matrix $\mathbf{X}$ by

$$\tilde{\mathbf{X}} = \mathbf{X} \circ \mathbf{R},$$

where $\circ$ denotes the element-wise (Hadamard) product.
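C-FM can be sketched in NumPy, assuming that every feature of node $v_i$ is kept with probability $\mathrm{MC}_i$, i.e., $r_{ij} \sim \mathrm{Bernoulli}(\mathrm{MC}_i)$. Broadcasting a column of keep probabilities against an $N \times M$ matrix of uniform draws produces all masks at once. `c_fm` is a hypothetical name.

```python
import numpy as np


def c_fm(x, centrality, seed=0):
    """Centrality-aware feature masking (sketch).

    x: (N, M) feature matrix; centrality: length-N mixed centralities.
    ASSUMPTION: R[i, j] ~ Bernoulli(MC_i).
    """
    rng = np.random.default_rng(seed)
    keep_prob = np.asarray(centrality, dtype=float)[:, None]  # shape (N, 1)
    r = (rng.random(x.shape) < keep_prob).astype(x.dtype)     # shape (N, M)
    return x * r  # element-wise (Hadamard) product X o R
```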

Centrality-aware edge perturbation (C-EP). As shown in Figure 5c, C-EP augmentation adds or removes edges in the graph in two steps: (1) For each edge $(v_i, v_j)$, we delete it according to a Bernoulli trial whose probability depends on $\mathrm{MC}_i$ and $\mathrm{MC}_j$, the mixed centralities of $v_i$ and $v_j$, respectively. (2) For each pair of unconnected nodes $v_i$ and $v_j$, we add an edge $(v_i, v_j)$ according to a Bernoulli trial whose probability likewise depends on $\mathrm{MC}_i$ and $\mathrm{MC}_j$.
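A C-EP sketch follows. The exact Bernoulli parameters are not given in this text, so the sketch assumes that an edge is deleted with probability $1 - (\mathrm{MC}_i + \mathrm{MC}_j)/2$ and that a missing edge is added with probability `add_scale` $\cdot (\mathrm{MC}_i + \mathrm{MC}_j)/2$, where `add_scale` is a hypothetical knob that keeps the number of added edges small. Enumerating all unconnected pairs is quadratic, which is only practical for small sub-graphs such as those produced by E-RRW.

```python
import random
from itertools import combinations


def c_ep(nodes, edges, centrality, add_scale=0.01, seed=0):
    """Centrality-aware edge perturbation (sketch with hypothetical probabilities)."""
    rng = random.Random(seed)
    edge_set = {tuple(sorted(e)) for e in edges}
    out = set()
    # Step (1): keep edge (i, j) with probability (MC_i + MC_j) / 2.
    for i, j in edge_set:
        if rng.random() >= 1 - (centrality[i] + centrality[j]) / 2:
            out.add((i, j))
    # Step (2): add a missing edge with probability add_scale * (MC_i + MC_j) / 2.
    for i, j in combinations(sorted(nodes), 2):
        if (i, j) not in edge_set and rng.random() < add_scale * (centrality[i] + centrality[j]) / 2:
            out.add((i, j))
    return out
```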

4.4. Heterogeneous Graph Attention Network (HGAT)

We have presented data augmentation methods that generate sub-graphs as views for contrastive learning. In this subsection, we propose HGAT to capture rich structural and semantic information from the generated views. Heterogeneous graphs are composed of different types of nodes and edges, whose features may differ in type and dimensionality. Compared with general heterogeneous graphs, the semantic geospatial graph and the skeleton graph are special cases: each contains only one type of node, and we only need to aggregate the direct neighbors connected through the two types of edges. Therefore, compared with traditional heterogeneous graph attention networks [36], HGAT is a lightweight model that has fewer parameters and trains faster.

Before presenting HGAT, we first introduce semantic edge feature vectors. The feature vector of a semantic edge $(v_i, v_j)$ is a two-dimensional vector $\mathbf{f}_{ij}$. Recall that a semantic edge connects two nodes $v_i$ and $v_j$ if either they share similar features, i.e., $\mathrm{sim}(x_i, x_j) > \gamma$, or they have been visited by the same user $o$. Thus, if $v_i$ and $v_j$ share similar features, the first dimension of $\mathbf{f}_{ij}$ is the similarity between their features, i.e., $\mathrm{sim}(x_i, x_j)$. Otherwise, the first dimension is the number of users who have visited both $v_i$ and $v_j$. In both cases, the second dimension is the shortest distance between $v_i$ and $v_j$ in the original geospatial graph.
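The two-dimensional semantic edge feature can be computed as below. This sketch assumes that the feature similarity has already been computed, that the shortest distance is the hop count in the geospatial graph (a geographic or weighted path length would fit the description equally well) and that `None` marks unreachable pairs. The function names are hypothetical.

```python
from collections import deque


def hop_distance(adj, src, dst):
    """Unweighted shortest-path length via BFS (None if dst is unreachable)."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        if u == dst:
            return dist[u]
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return None


def semantic_edge_feature(i, j, sim, gamma, visits, adj):
    """Feature f_ij of the semantic edge (v_i, v_j).

    sim: precomputed feature similarity of v_i and v_j
    visits: dict user -> set of visited nodes
    adj: structural adjacency of the geospatial graph
    """
    if sim > gamma:
        first = sim  # edge created by feature similarity
    else:
        first = sum(1 for nodes in visits.values() if i in nodes and j in nodes)
    return (first, hop_distance(adj, i, j))
```

On the path 0-1-2 with two users who both visited nodes 0 and 2, a co-visitation edge between 0 and 2 gets the feature `(2, 2)`.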

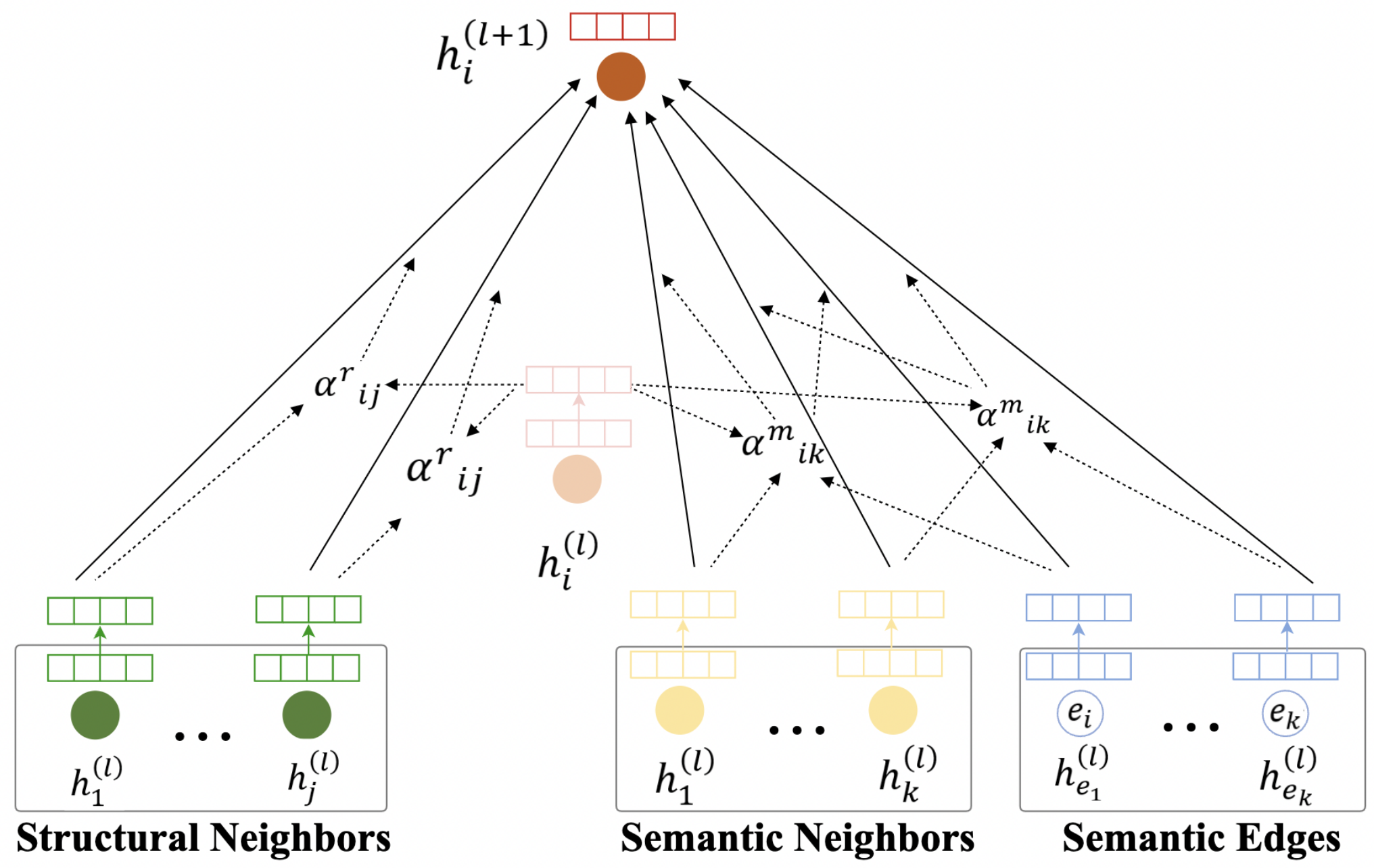

As shown in Figure 6, HGAT calculates the representation of each node by aggregating information from its structural neighbors, its semantic neighbors and the corresponding semantic edges. In this update, $\mathbf{s}_i^{(l)}$ is the weighted sum of the structural neighbor representations of the target node $v_i$ at layer $l$, $\mathbf{g}_i^{(l)}$ is the aggregation of the semantic neighbor representations and the corresponding semantic edge representations at layer $l$, $\mathbf{h}_i^{(l)}$ is the representation of $v_i$ at the $l$-th layer, $\mathbf{x}_i$ is the feature vector of node $v_i$, $\mathbf{f}_{ij}$ is the feature vector of the semantic edge $(v_i, v_j)$, $\sigma(\cdot)$ is the activation function, and $\mathbf{W}_1$ and $\mathbf{W}_2$ are parameters to be learned. The attention weight $\alpha_{ij}$ of the structural neighbor $v_j$ and the attention weight $\beta_{ij}$ of the semantic neighbor $v_j$ together with the corresponding semantic edge are computed over $\mathcal{N}_i^{\mathrm{st}}$ and $\mathcal{N}_i^{\mathrm{se}}$, the structural and semantic neighbors of node $v_i$, respectively, where $\mathbf{W}$ and $\mathbf{a}$ are parameters to be learned.

Finally, the outputs of HGAT are encoded by an MLP layer with activation function $\sigma(\cdot)$ and learnable parameters $\mathbf{W}_z$ and $\mathbf{b}_z$.
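The HGAT equations are not reproduced in this text, so the following NumPy sketch only illustrates the general pattern described above: GAT-style attention (LeakyReLU scores followed by softmax normalization) over structural neighbors, and a second attention over semantic neighbors whose messages concatenate the neighbor representation with the edge feature $\mathbf{f}_{ij}$. The parameter names, the tanh activation, the added self term and the ReLU in the MLP head are assumptions, not the paper's definitions.

```python
import numpy as np


def softmax(scores):
    e = np.exp(scores - scores.max())
    return e / e.sum()


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def hgat_layer(h, struct_nbrs, sem_nbrs, edge_feat, params):
    """One HGAT-style layer (illustrative sketch).

    h: (N, D) node representations; struct_nbrs, sem_nbrs: dict i -> list of j;
    edge_feat: dict (i, j) -> length-2 semantic edge feature f_ij;
    params: hypothetical W_s (D, K), W_e (D + 2, K), a_s (2K,), a_e (2K,).
    """
    W_s, W_e, a_s, a_e = (params[k] for k in ("W_s", "W_e", "a_s", "a_e"))
    hs = h @ W_s  # projected node states, (N, K)
    out = np.zeros_like(hs)
    for i in range(h.shape[0]):
        agg = hs[i].copy()  # self term (assumption)
        js = struct_nbrs.get(i, [])
        if js:
            # alpha_ij: attention over structural neighbours.
            alpha = softmax(np.array([leaky_relu(a_s @ np.concatenate([hs[i], hs[j]])) for j in js]))
            agg += sum(a * hs[j] for a, j in zip(alpha, js))
        js = sem_nbrs.get(i, [])
        if js:
            # beta_ij: attention over semantic neighbours plus their edge features.
            msgs = [np.concatenate([h[j], edge_feat[(i, j)]]) @ W_e for j in js]
            beta = softmax(np.array([leaky_relu(a_e @ np.concatenate([hs[i], m])) for m in msgs]))
            agg += sum(b * m for b, m in zip(beta, msgs))
        out[i] = np.tanh(agg)
    return out


def mlp_head(h, W, b):
    """Projection applied to the HGAT outputs (ReLU assumed)."""
    return np.maximum(0.0, h @ W + b)
```

Stacking several such layers and applying `mlp_head` to the last output yields the encoded representations used by the contrastive objective.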

4.5. Contrastive Learning

Most existing contrastive learning methods [9,10,11] take the same node under different views as a positive pair. However, these methods suffer from an insufficient number of positive examples. To solve this problem, we also take semantic neighbors as positive examples, which expands the set of positive examples.

Recall that SE-GCL generates two views from the input graph by data augmentation and then maximizes the mutual information between the encoded representations (i.e., the outputs of the MLP layer) of the two views. Let $\mathbf{z}_i$ and $\mathcal{S}_i$ denote the encoded representation and the semantic neighbors of node $v_i$ in one view, and let $\mathbf{z}'_i$ and $\mathcal{S}'_i$ denote the encoded representation and the semantic neighbors of $v_i$ in the other view. Given a representation $\mathbf{z}_i$, its positive examples include $\mathbf{z}'_i$, $\{\mathbf{z}_j : v_j \in \mathcal{S}_i\}$ and $\{\mathbf{z}'_j : v_j \in \mathcal{S}'_i\}$. The negative examples are all nodes except $v_i$ and its semantic neighbors, and consist of two parts: those in the same view and those in the other view. The objective function for positive examples in different views compares the similarity between the representations of the same (or semantically related) nodes in different views with the similarities between $\mathbf{z}_i$ and its negative examples in the same view and in the other view. All similarities are computed with the cosine similarity function $\theta(\cdot, \cdot)$ and scaled by a temperature parameter $\tau$. The objective function for each positive sample in the same view is defined analogously, with the similarity between the representations of semantically related nodes in the same view as the positive term. The objective function of a view combines these two objectives.

The total objective function of SE-GCL is the sum of the objective functions of both views.
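Because the loss equations are not reproduced in this text, the sketch below implements a generic multi-positive InfoNCE objective that follows the description: for an anchor $\mathbf{z}_i$, the positives are $\mathbf{z}'_i$ and the semantic neighbors in both views, and the negatives are all remaining nodes of both views. It assumes that the semantic neighbor set is shared by both views ($\mathcal{S}_i = \mathcal{S}'_i$) and averages the per-positive terms; the paper's exact weighting may differ. The function names are hypothetical.

```python
import numpy as np


def cosine_matrix(a, b):
    """Pairwise cosine similarities between the rows of a and b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def view_loss(z, z2, sem_nbrs, tau=0.5):
    """Multi-positive InfoNCE for the anchors of view z (sketch).

    z, z2: (N, K) encoded representations of the two views.
    sem_nbrs: dict i -> list of semantic neighbours (assumed shared by both views).
    """
    n = z.shape[0]
    same = np.exp(cosine_matrix(z, z) / tau)    # anchor vs. same view
    cross = np.exp(cosine_matrix(z, z2) / tau)  # anchor vs. other view
    losses = []
    for i in range(n):
        pos = sorted(set(sem_nbrs.get(i, [])) - {i})
        neg = np.array([j for j in range(n) if j != i and j not in pos], dtype=int)
        neg_sum = same[i, neg].sum() + cross[i, neg].sum()
        # Cross-view self pair, then semantic neighbours in both views.
        positives = [cross[i, i]] + [same[i, j] for j in pos] + [cross[i, j] for j in pos]
        losses.append(-np.mean([np.log(p / (p + neg_sum)) for p in positives]))
    return float(np.mean(losses))


def se_gcl_loss(z, z2, sem_nbrs, tau=0.5):
    """Total objective: the sum of the two per-view objectives."""
    return view_loss(z, z2, sem_nbrs, tau) + view_loss(z2, z, sem_nbrs, tau)
```

Summing `view_loss` over both view orders mirrors the statement that the total objective is the sum of the objectives of both views.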

Given a semantic geospatial graph or a skeleton graph, SE-GCL generates two views by applying E-RRW, C-NP, C-FM and C-EP in turn. The views are then fed into the HGAT network, and the outputs of HGAT are passed to an MLP to generate the final node representations. Finally, the contrastive training process iteratively adjusts the parameters to reduce the distance between positive sample pairs while increasing the distance between negative sample pairs.