A Long Time Span-Specific Emitter Identification Method Based on Unsupervised Domain Adaptation

Abstract

1. Introduction

- (1)

- The mathematical model and solution of the long time span SEI problem are presented for the first time. In this study, the problem is transformed into a domain adaptation problem, and extensive experiments confirm that this formulation is feasible. To our knowledge, this is the first work to solve the long time span SEI problem.

- (2)

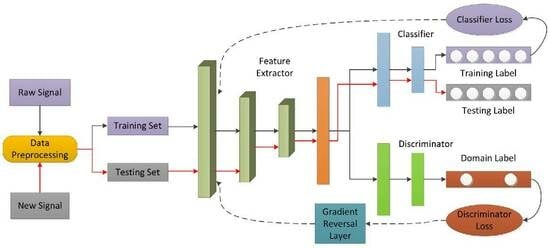

- A novel long time span SEI method, called LTS-SEI, is proposed in this study. Its framework comprises four modules: a data-preprocessing module, a feature extractor, a classifier, and a domain discriminator. It learns features with domain invariance, interclass separability, and intraclass compactness, and these features are shown to be effective in identifying signals collected across different time spans.

- (3)

- A large number of satellite navigation signals are collected using 13 m and 40 m large-aperture antennas, and these signals are used to construct two real datasets. Dataset A contains data from 10 navigation satellites divided into three data subsets; consecutive subsets are 15 min apart, giving a total time span of 30 min. Dataset B contains data from two navigation satellites divided into 14 data subsets; consecutive subsets are 1–2 months apart, giving a total time span of nearly 2 years.

- (4)

- Extensive experiments confirm that the proposed LTS-SEI method performs well on the long time span SEI problem and outperforms existing methods. To our knowledge, apart from our previous work [29], this is also the first work to study SEI using such real, long time span signals.

2. Problem Formulation and Solution

3. Methodology

3.1. Framework of LTS-SEI

3.2. Data-Preprocessing Module

- (1)

- We divide the source domain signal and the target domain signal into slices of a fixed length, taken at a fixed slice step size. The number of slices is obtained by rounding down, and the resulting slices form the sample matrix.

- (2)

- We standard-normalize the source domain samples and target domain samples as $\tilde{\mathbf{x}} = (\mathbf{x} - \mu(\mathbf{x}))/\sigma(\mathbf{x})$, where $\mu(\cdot)$ and $\sigma(\cdot)$ compute the mean and standard deviation, respectively.

- (3)

- We label all source domain samples according to the source domain label set.
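The three preprocessing steps above can be sketched in Python with NumPy as follows. This is a minimal sketch, not the authors' implementation: it assumes a real-valued 1-D signal (for I/Q data the same steps could be applied to each channel), assumes that only complete slices are kept so that the slice count is floor((N − L)/S) + 1, and assumes that standard normalization is applied per slice. The function names are hypothetical.

```python
import numpy as np


def slice_signal(x: np.ndarray, slice_len: int, step: int) -> np.ndarray:
    """Cut a 1-D signal into slices of `slice_len` samples taken every `step` samples.

    Only complete slices are kept, so the slice count is
    floor((len(x) - slice_len) / step) + 1 (the "rounding down" step).
    """
    n_slices = (len(x) - slice_len) // step + 1
    if n_slices <= 0:
        raise ValueError("signal is shorter than one slice")
    # Each row of the returned sample matrix is one slice.
    return np.stack([x[m * step : m * step + slice_len] for m in range(n_slices)])


def standardize(samples: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Z-score every slice (row): subtract its mean, divide by its standard deviation."""
    mu = samples.mean(axis=1, keepdims=True)
    sigma = samples.std(axis=1, keepdims=True)
    return (samples - mu) / (sigma + eps)  # eps guards against constant slices


def label_samples(samples: np.ndarray, emitter_id: int) -> np.ndarray:
    """Attach the same integer class label to every slice of one source emitter."""
    return np.full(samples.shape[0], emitter_id, dtype=int)


# Usage: a toy 10-sample signal, slice length 4, step 3 -> 3 slices.
x = np.arange(10, dtype=float)
X = standardize(slice_signal(x, slice_len=4, step=3))
y = label_samples(X, emitter_id=0)
```

When the step is smaller than the slice length, consecutive slices overlap, which yields more samples from the same recording; whether the paper uses overlapping slices is not stated here.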

3.3. Feature Extractor

3.4. Classifier

- (1)

- Softmax Loss: Softmax Loss is widely used for classification and recognition tasks in signal processing because it encourages interclass separation of features, which helps distinguish different individuals. Following this common practice, we minimize the Softmax Loss to improve the recognition of source domain samples. The Softmax Loss can be expressed as
$$\mathcal{L}_s = -\frac{1}{n_s}\sum_{i=1}^{n_s} \mathbf{y}_i^{\top}\log \hat{\mathbf{y}}_i,$$
where $n_s$ represents the number of source domain samples, $\mathbf{y}_i$ denotes the true label of the $i$-th source domain sample represented as a one-hot vector, and $\hat{\mathbf{y}}_i$ is the corresponding predicted probability vector.

- (2)

- Center Loss: Center Loss was first proposed for face recognition, where it maps samples of the same class, despite their intraclass diversity, to nearby points in the feature space. It has since been applied to image classification and modulation recognition to learn discriminative features. By continuously minimizing the distance between features and their class centers, samples of the same class become more compact after being mapped to the feature space. For the classifier we designed (shown in Figure 4), the source domain sample features output by the last fully connected layer are used to calculate the Center Loss. Notably, the Center Loss comprises two parts. The first part reduces the distance between sample features and their class centers to enhance the intraclass compactness of features. The second part controls the distance between different class centers to enhance the interclass separation of features. The loss is computed over a mini-batch from the feature vector that the last fully connected layer extracts from each source domain sample and the class center corresponding to that sample's true label; distances are measured with the squared Euclidean distance, and a variable margin parameter controls the distance between different class centers. In principle, the class centers of the entire training set should be updated after each iteration, which requires recomputing the features of all training samples. This is cumbersome and impractical because each training step uses only a mini-batch of samples. Therefore, we use the mean of the mini-batch features of each class to approximate its global class center: the batch class center of class $j$ is $\mathbf{c}_j^{B} = \frac{1}{n_j}\sum_{i:\,y_i=j}\mathbf{f}_i$, where $\mathbf{f}_i$ are the mini-batch features whose label is $j$ and $n_j$ is their number.
Because the class centers are not trained together with the network parameters, we introduce a center learning rate to control how quickly the centers are updated, which prevents outlier samples in the training set from causing large fluctuations of the class centers in the feature space. The update rule relates the center of each class at iteration $t+1$ to its center at iteration $t$ through the impulse function $\delta(\cdot)$, where $\delta(\text{condition}) = 1$ if the condition in the parentheses holds and $\delta(\text{condition}) = 0$ otherwise.

- (3)

- HOMM3 Loss: We add a further constraint on the deep features output by the classifier to enhance the domain adaptation ability of the model. By minimizing a feature-matching loss, the source and target domains are forced to align in the deep-feature space. High-order statistics (such as high-order moments and high-order cumulants) describe the intrinsic distribution of signals and are commonly used as features for recognizing different signals. A Gaussian random signal is fully characterized by its mathematical expectation (first-order statistic) and autocorrelation function (second-order statistic). For non-Gaussian signals, however, low-order statistics capture only part of the distribution, which may limit the effectiveness of domain matching. We therefore reduce the distance between the high-order moments of the source and target domain sample features by minimizing a high-order moment matching loss. Computing higher-order tensors, however, increases time and memory costs: when the bottleneck layer has $d$ neurons, the $p$-th order statistic has $d^p$ entries. To reduce complexity while achieving fine-grained domain alignment, we select the last fully connected layer of the classifier as the bottleneck layer and compute the third-order statistics of its output features. Because this layer outputs $K$-dimensional features, where $K$ is the number of specific emitter categories, the spatial complexity of the feature-matching loss is $O(K^3)$, which is acceptable for learning domain-invariant features. The third-order moment matching loss can be written as
$$\mathcal{L}_h = \frac{1}{K^3}\left\| \frac{1}{n_s}\sum_{i=1}^{n_s} h_s(\mathbf{x}_i^s;\theta_s)^{\otimes 3} - \frac{1}{n_t}\sum_{j=1}^{n_t} h_t(\mathbf{x}_j^t;\theta_t)^{\otimes 3}\right\|_F^2,$$
where $n_s$ and $n_t$ represent the numbers of mini-batch samples in the source and target domains, respectively; $h_s(\cdot)$ and $h_t(\cdot)$ represent the bottleneck layers of the source classifier and the target classifier, both of which output $K$-dimensional deep features; $\theta_s$ and $\theta_t$ are the parameters of these bottleneck layers; and $\mathbf{x}^s$ and $\mathbf{x}^t$ are the source and target domain samples. The operator $\otimes$ denotes the outer (tensor) product, and $\mathbf{u}^{\otimes 3} = \mathbf{u}\otimes\mathbf{u}\otimes\mathbf{u}$ is the third-order tensor power of a vector $\mathbf{u}$. Since the source domain classifier and target domain classifier share network parameters, $\theta_s = \theta_t = \theta$ and $h_s = h_t = h$, so the loss compares the third-order moments of a single mapping $h(\cdot;\theta)$ applied to both domains. Additionally, because the bottleneck layer is the last fully connected layer of the classifier, $h(\mathbf{x};\theta)$ is simply the deep feature vector that the classifier outputs for sample $\mathbf{x}$. Finally, the hybrid loss of the classifier can be represented as
$$\mathcal{L}_{cls} = \mathcal{L}_s + \lambda_c\,\mathcal{L}_c + \lambda_h\,\mathcal{L}_h,$$
where $\mathcal{L}_s$, $\mathcal{L}_c$, and $\mathcal{L}_h$ denote the Softmax Loss, Center Loss, and HOMM3 Loss, and $\lambda_c$ and $\lambda_h$ are the weights that control the Center Loss and HOMM3 Loss, respectively.
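The classifier losses described above can be illustrated with the NumPy sketch below. It is not the authors' code. The softmax cross-entropy and the third-order moment matching loss follow their standard definitions (the latter as in the HoMM formulation of Chen et al.); the inter-center hinge term in `center_loss` is an assumed form of the "second part" of the Center Loss, since its exact expression is not given here; and `update_centers` uses the mini-batch update rule of the original Center Loss for face recognition, which is also an assumption. All function names are hypothetical.

```python
import numpy as np


def softmax_loss(logits: np.ndarray, labels: np.ndarray) -> float:
    """Mean cross-entropy between softmax(logits) and integer class labels."""
    z = logits - logits.max(axis=1, keepdims=True)  # shift for numerical stability
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_p[np.arange(len(labels)), labels].mean())


def center_loss(feats, labels, centers, margin):
    """Part 1: pull features toward their class centers (squared Euclidean).
    Part 2 (ASSUMED form): hinge penalty when two centers are closer than
    `margin` in squared Euclidean distance."""
    intra = 0.5 * np.sum((feats - centers[labels]) ** 2, axis=1).mean()
    d2 = np.sum((centers[:, None, :] - centers[None, :, :]) ** 2, axis=-1)
    off_diag = ~np.eye(len(centers), dtype=bool)
    inter = np.maximum(0.0, margin - d2[off_diag]).mean()
    return float(intra + inter)


def update_centers(feats, labels, centers, alpha):
    """ASSUMED mini-batch center update from the original Center Loss.
    `alpha` is the center learning rate; `mask` plays the impulse function 1[y_i == j]."""
    new_centers = centers.copy()
    for j in range(len(centers)):
        mask = labels == j
        delta = (centers[j] - feats[mask]).sum(axis=0) / (1.0 + mask.sum())
        new_centers[j] = centers[j] - alpha * delta
    return new_centers


def third_moment(h: np.ndarray) -> np.ndarray:
    """Batch mean of the third-order tensor power h ⊗ h ⊗ h (shape K x K x K)."""
    return np.einsum("ni,nj,nk->ijk", h, h, h) / len(h)


def homm3_loss(h_src: np.ndarray, h_tgt: np.ndarray) -> float:
    """Squared Frobenius distance between third-order moments, scaled by 1/K^3."""
    k = h_src.shape[1]
    return float(np.sum((third_moment(h_src) - third_moment(h_tgt)) ** 2) / k**3)


def hybrid_loss(h_src, y_src, h_tgt, centers, w_center, w_homm, margin):
    """Softmax + weighted Center + weighted HOMM3 loss.
    ASSUMPTION: the K-dim outputs of the last fully connected layer are fed to
    softmax directly, so they serve both as logits and as deep features."""
    return (softmax_loss(h_src, y_src)
            + w_center * center_loss(h_src, y_src, centers, margin)
            + w_homm * homm3_loss(h_src, h_tgt))


# Usage: random K = 2 deep features with the Model B weights from the parameter table.
rng = np.random.default_rng(0)
h_s, h_t = rng.normal(size=(64, 2)), rng.normal(size=(64, 2))
y_s = rng.integers(0, 2, size=64)
centers = np.zeros((2, 2))
loss = hybrid_loss(h_s, y_s, h_t, centers, w_center=0.01, w_homm=0.01, margin=100.0)
centers = update_centers(h_s, y_s, centers, alpha=0.5)
```

Because the bottleneck has only $K$ outputs, the third-order moment tensor has $K^3$ entries (8 for Dataset B and 1000 for Dataset A), which is why the sketch can form it explicitly with `einsum`.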

3.5. Domain Discriminator and Gradient Reversal Layer

3.6. Optimization Problem of LTS-SEI

3.7. Optimization Method of LTS-SEI

Algorithm 1. The optimization and testing process of the LTS-SEI framework.

Input: source domain signal, source domain signal labels, target domain signal, source domain label set, target domain label set.
Output: optimal network parameters, target domain sample labels.

1. Obtain the source domain samples, target domain samples, and source domain sample labels (see the data-preprocessing module).
2. Forward propagation:
   - (1) From the feature extractor to the classifier: input the source and target domain samples into the feature extractor to obtain their shallow features (see Equation (4)). Input the shallow features into the classifier to obtain the deep features, prediction labels, and prediction probabilities (see Equations (5)–(7)). Compute the Softmax Loss from the prediction probabilities and the true labels (see Equation (8)), the Center Loss from the source deep features and their labels (see Equation (9)), and the HOMM3 Loss from the source and target deep features (see Equation (16)). Compute the hybrid loss of the classifier (see Equation (17)).
   - (2) From the feature extractor to the domain discriminator: input the source and target domain samples into the feature extractor to obtain their shallow features (see Equation (4)), and compute the domain discrimination loss from these features and their domain labels (see Equation (18)).
3. Back propagation:
   - (1) Calculate the gradients of the losses with respect to the parameters of the feature extractor, classifier, and domain discriminator.
   - (2) Update these parameters through stochastic gradient descent (see Equations (22)–(24)).
4. Repeat Steps 2 and 3 until the maximum number of iterations is reached.
5. Save the optimal parameters after LTS-SEI training is complete.
6. Input the target domain samples into the trained feature extractor and classifier.
7. Output the target domain sample labels.
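Step 3 of Algorithm 1 can be illustrated with the following NumPy sketch of a gradient-reversal parameter update. It assumes the update rule of Ganin and Lempitsky's unsupervised domain adaptation by backpropagation, in which the gradient reversal layer is the identity in the forward pass and multiplies the domain-loss gradient by the negative reversal scalar in the backward pass. Whether Equations (22)–(24) take exactly this form is an assumption, and all names are hypothetical; in practice the gradients would come from automatic differentiation rather than being passed in by hand.

```python
import numpy as np


def grl_forward(features: np.ndarray) -> np.ndarray:
    """Gradient reversal layer, forward pass: identity."""
    return features


def grl_backward(grad: np.ndarray, lam: float) -> np.ndarray:
    """Gradient reversal layer, backward pass: multiply the gradient by -lam."""
    return -lam * grad


def sgd_step(theta_f, theta_y, theta_d, g_f_cls, g_f_dom, g_y, g_d, lr, lam):
    """One stochastic gradient descent step for the three modules.

    g_f_cls: gradient of the classifier hybrid loss w.r.t. feature-extractor params
    g_f_dom: gradient of the domain loss w.r.t. feature-extractor params (before reversal)
    g_y, g_d: gradients w.r.t. classifier and domain-discriminator params
    """
    # Feature extractor: descends the classifier loss, ascends the domain loss.
    theta_f = theta_f - lr * (g_f_cls + grl_backward(g_f_dom, lam))
    # Classifier and domain discriminator: ordinary descent on their own losses.
    theta_y = theta_y - lr * g_y
    theta_d = theta_d - lr * g_d
    return theta_f, theta_y, theta_d


f, y, d = sgd_step(np.array([1.0]), np.array([1.0]), np.array([1.0]),
                   g_f_cls=np.array([0.2]), g_f_dom=np.array([0.5]),
                   g_y=np.array([0.2]), g_d=np.array([0.5]), lr=0.1, lam=1.0)
```

The sign flip is the whole point of the design: the domain discriminator is trained to separate the domains, while the feature extractor receives the opposite signal and is pushed toward features the discriminator cannot separate.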

4. Dataset

4.1. Dataset A

4.2. Dataset B

5. Experimental Results and Discussion

5.1. Experimental Data

5.2. Identification Model

5.3. Parameters Setting

- (1)

- Learning rate: As is conventional, the learning rate controls the update step of the feature extractor, classifier, and domain discriminator. Dataset A has a short time span, so Model A is easy to train, and its learning rate is set to the commonly used value of 0.01. Dataset B has a long time span, so the learning rate of Model B decreases gradually as the number of iterations increases, to achieve fast convergence. It is defined by Equation (25) as a function of the current iteration number and the total number of iterations of the model.

- (2)

- Reversal Scalar: The reversal scalar weights the negative (reversed) gradient that is passed from the domain discriminator back to the feature extractor. As with the learning rate, the reversal scalar of Model A is set to a constant. For Model B, as the learning rate decreases, the reversal scalar is set as an increasing function of the number of iterations (Equation (26)) to encourage the feature extractor to keep learning domain-invariant features.

- (3)

- Epoch: After multiple experiments, we found that Model B achieves nearly 100% accuracy on Data Subsets B3, B6, and B12 within only 100 iterations. Therefore, the number of iterations is set to 100 for these three data subsets and to 300 for the other data subsets.

- (4)

- Center Loss weight and HOMM3 Loss weight: To determine suitable values quickly, we set the weights of the two loss functions to the same value and search the range [0, 1] with a step size of 0.001. Experiments show that the domain adaptation ability of the model decreases when the two weights are small and that the model may overfit when they are large. Model A maintains high recognition accuracy on Dataset A when both weights are 0.1, and Model B maintains high recognition accuracy on Dataset B when both weights are 0.01.
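The two iteration-dependent settings for Model B can be sketched as below. The exact forms of Equations (25) and (26) are not reproduced here, so this sketch assumes the annealing schedules popularized by Ganin and Lempitsky for gradient-reversal training, with their default constants alpha = 10, beta = 0.75, and gamma = 10; the initial learning rate of 0.001 is taken from the parameter table for Model B. The function names are hypothetical.

```python
import math


def progress(iteration: int, total_iterations: int) -> float:
    """Training progress p in [0, 1]."""
    return iteration / total_iterations


def learning_rate(iteration, total_iterations, lr0=1e-3, alpha=10.0, beta=0.75):
    """ASSUMED annealing schedule lr0 / (1 + alpha * p) ** beta, decreasing in p."""
    p = progress(iteration, total_iterations)
    return lr0 / (1.0 + alpha * p) ** beta


def reversal_scalar(iteration, total_iterations, gamma=10.0):
    """ASSUMED schedule 2 / (1 + exp(-gamma * p)) - 1, rising from 0 toward 1."""
    p = progress(iteration, total_iterations)
    return 2.0 / (1.0 + math.exp(-gamma * p)) - 1.0


# Model B on a 300-iteration subset: the learning rate falls while the scalar rises.
schedule = [(learning_rate(t, 300), reversal_scalar(t, 300)) for t in (0, 150, 300)]
```

Starting the reversal scalar at zero keeps the noisy early domain-discriminator gradients from disturbing the feature extractor, and the scalar then approaches 1 as training stabilizes.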

5.4. Evaluation Criteria

5.5. Performance Comparison with Domain Adaptation Methods

5.6. Feature Visualization

5.7. Specific Emitter Identification Results

5.8. Training Accuracy and Loss of LTS-SEI

5.9. Ablation Experiment

5.10. Expansion to Small Training Samples

6. Conclusions

- (1)

- In this study, we used 13 m and 40 m large-aperture antennas to receive space signals, which improved signal quality. However, new methods are needed for long time span identification of low-SNR specific emitter signals.

- (2)

- The experimental data in this study are high-orbit satellite signals acquired with a sampling rate of 250 MHz, and these abundant data samples ensure effective training of deep models. However, when training samples are scarce, small-sample long time span SEI methods must be developed.

- (3)

- In practice, the model must learn its predictive ability from real training data that lack labels. Therefore, new unsupervised learning algorithms must be designed to further enhance the practicality of long time span SEI.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xie, C.; Zhang, L.; Zhong, Z. Few-Shot Unsupervised Specific Emitter Identification Based on Density Peak Clustering Algorithm and Meta-Learning. IEEE Sens. J. 2022, 22, 18008–18020.

- Xu, T.; Wei, Z. Waveform Defence Against Deep Learning Generative Adversarial Network Attacks. In Proceedings of the 2022 13th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Porto, Portugal, 20–22 July 2022; pp. 503–508.

- Zhang, X.; Li, T.; Gong, P.; Zha, X.; Liu, R. Variable-Modulation Specific Emitter Identification With Domain Adaptation. IEEE Trans. Inf. Forensics Secur. 2023, 18, 380–395.

- Ibnkahla, M.; Cao, Y. A pilot-aided neural network for modeling and identification of nonlinear satellite mobile channels. In Proceedings of the 2008 Canadian Conference on Electrical and Computer Engineering, Niagara Falls, ON, Canada, 4–7 May 2008; pp. 1539–1542.

- Roy, D.; Mukherjee, T.; Chatterjee, M.; Blasch, E.; Pasiliao, E. RFAL: Adversarial Learning for RF Transmitter Identification and Classification. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 783–801.

- Niu, Y.; Xiong, W.; Li, Z.; Dong, B.; Fu, X. On the Identification Accuracy of the I/Q Imbalance-Based Specific Emitter Identification. IEEE Access 2023, 11, 75462–75473.

- Shi, M.; Huang, Y.; Wang, G. Carrier Leakage Estimation Method for Cross-Receiver Specific Emitter Identification. IEEE Access 2021, 9, 26301–26312.

- Zhang, L.; Huang, X.; Chai, Z.; Shen, Z.; Yang, X. Physical-Layer Hardware Authentication of Constellation Impairments Using Deep Learning. In Proceedings of the 2022 20th International Conference on Optical Communications and Networks (ICOCN), Shenzhen, China, 12–15 August 2022; pp. 1–3.

- Yu, J.; Hu, A.; Peng, L. Blind DCTF-based estimation of carrier frequency offset for RF fingerprint extraction. In Proceedings of the 2016 8th International Conference on Wireless Communications & Signal Processing (WCSP), Yangzhou, China, 13–15 October 2016; pp. 1–6.

- Zhang, J.; Wang, F.; Dobre, O.A.; Zhong, Z. Specific Emitter Identification via Hilbert–Huang Transform in Single-Hop and Relaying Scenarios. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1192–1205.

- Satija, U.; Trivedi, N.; Biswal, G.; Ramkumar, B. Specific Emitter Identification Based on Variational Mode Decomposition and Spectral Features in Single Hop and Relaying Scenarios. IEEE Trans. Inf. Forensics Secur. 2019, 14, 581–591.

- Tan, K.; Yan, W.; Zhang, L.; Tang, M.; Zhang, Y. Specific Emitter Identification Based on Software-Defined Radio and Decision Fusion. IEEE Access 2021, 9, 86217–86229.

- Sun, L.; Wang, X.; Yang, A.; Huang, Z. Radio Frequency Fingerprint Extraction Based on Multi-Dimension Approximate Entropy. IEEE Signal Process. Lett. 2020, 27, 471–475.

- Zhao, Z.; Chen, J.; Xu, W.; Li, H.; Yang, L. Specific Emitter Identification Based on Joint Wavelet Packet Analysis. In Proceedings of the 2022 IEEE 10th International Conference on Information, Communication and Networks (ICICN), Zhangye, China, 23–24 August 2022; pp. 369–374.

- Ramasubramanian, M.; Banerjee, C.; Roy, D.; Pasiliao, E.; Mukherjee, T. Exploiting Spatio-Temporal Properties of I/Q Signal Data Using 3D Convolution for RF Transmitter Identification. IEEE J. Radio Freq. Identif. 2021, 5, 113–127.

- Yang, H.; Zhang, H.; Wang, H.; Guo, Z. A novel approach for unlabeled samples in radiation source identification. J. Syst. Eng. Electron. 2022, 33, 354–359.

- Wang, Y.; Gui, G.; Lin, Y.; Wu, H.-C.; Yuen, C.; Adachi, F. Few-Shot Specific Emitter Identification via Deep Metric Ensemble Learning. IEEE Internet Things J. 2022, 9, 24980–24994.

- Chen, S.; Zheng, S.; Yang, L.; Yang, X. Deep Learning for Large-Scale Real-World ACARS and ADS-B Radio Signal Classification. IEEE Access 2019, 7, 89256–89264.

- Yu, J.; Hu, A.; Li, G.; Peng, L. A Robust RF Fingerprinting Approach Using Multisampling Convolutional Neural Network. IEEE Internet Things J. 2019, 6, 6786–6799.

- Ding, L.; Wang, S.; Wang, F.; Zhang, W. Specific Emitter Identification via Convolutional Neural Networks. IEEE Commun. Lett. 2018, 22, 2591–2594.

- Wang, Y.; Gui, G.; Gacanin, H.; Ohtsuki, T.; Dobre, O.A.; Poor, H.V. An Efficient Specific Emitter Identification Method Based on Complex-Valued Neural Networks and Network Compression. IEEE J. Sel. Areas Commun. 2021, 39, 2305–2317.

- Li, H.; Liao, Y.; Wang, W.; Hui, J.; Liu, J.; Liu, X. A Novel Time-Domain Graph Tensor Attention Network for Specific Emitter Identification. IEEE Trans. Instrum. Meas. 2023, 72, 5501414.

- Xie, C.; Zhang, L.; Zhong, Z. A Novel Method for Few-Shot Specific Emitter Identification in Non-Cooperative Scenarios. IEEE Access 2023, 11, 11934–11946.

- Zhang, Z.; Yang, Q.; He, S.; Shi, Z. A Robust Few-Shot SEI Method Using Class-Reconstruction and Adversarial Training. In Proceedings of the 2022 IEEE 96th Vehicular Technology Conference (VTC2022-Fall), London, UK, 26–29 September 2022; pp. 1–5.

- Bu, K.; He, Y.; Jing, X.; Han, J. Adversarial Transfer Learning for Deep Learning Based Automatic Modulation Classification. IEEE Signal Process. Lett. 2020, 27, 880–884.

- Gong, J.; Xu, X.; Lei, Y. Unsupervised Specific Emitter Identification Method Using Radio-Frequency Fingerprint Embedded InfoGAN. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2898–2913.

- Fu, X.; Peng, Y.; Liu, Y.; Lin, Y.; Gui, G.; Gacanin, H.; Adachi, F. Semi-Supervised Specific Emitter Identification Method Using Metric-Adversarial Training. IEEE Internet Things J. 2023, 10, 10778–10789.

- Tan, K.; Yan, W.; Zhang, L.; Ling, Q.; Xu, C. Semi-Supervised Specific Emitter Identification Based on Bispectrum Feature Extraction CGAN in Multiple Communication Scenarios. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 292–310.

- Liu, P.; Guo, L.; Zhao, H.; Shang, P.; Chu, Z.; Lu, X. A Novel Method for Recognizing Space Radiation Sources Based on Multi-Scale Residual Prototype Learning Network. Sensors 2023, 23, 4708.

- Hanna, S.; Karunaratne, S.; Cabric, D. Open Set Wireless Transmitter Authorization: Deep Learning Approaches and Dataset Considerations. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 59–72.

- Wang, Z.; Liu, W.; Wang, H.-M. GAN Against Adversarial Attacks in Radio Signal Classification. IEEE Commun. Lett. 2022, 26, 2851–2854.

- Wang, C.; Fu, X.; Wang, Y.; Gui, G.; Gacanin, H.; Sari, H.; Adachi, F. Interpolative Metric Learning for Few-Shot Specific Emitter Identification. IEEE Trans. Veh. Technol. 2023, 1–5.

- Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep Domain Confusion: Maximizing for Domain Invariance. Comput. Sci. 2014.

- Zeng, M.; Liu, Z.; Wang, Z.; Liu, H.; Li, Y.; Yang, H. An Adaptive Specific Emitter Identification System for Dynamic Noise Domain. IEEE Internet Things J. 2022, 9, 25117–25135.

- Zhang, R.; Yin, Z.; Yang, Z.; Wu, Z.; Zhao, Y. Unsupervised Domain Adaptation based Modulation Classification for Overlapped Signals. In Proceedings of the 2022 9th International Conference on Dependable Systems and Their Applications (DSA), Wulumuqi, China, 4–5 August 2022; pp. 1014–1015.

- Wang, J.; Zhang, B.; Zhang, J.; Yang, N.; Wei, G.; Guo, D. Specific Emitter Identification Based on Deep Adversarial Domain Adaptation. In Proceedings of the 2021 4th International Conference on Information Communication and Signal Processing (ICICSP), Shanghai, China, 24–26 September 2021; pp. 104–109.

- Zhang, B.; Fang, J.; Ma, Z. Underwater Target Recognition Method Based on Domain Adaptation. In Proceedings of the 2021 4th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Yibin, China, 20–22 August 2021; pp. 452–455.

- Zellinger, W.; Moser, B.A.; Grubinger, T.; Lughofer, E.; Natschläger, T.; Saminger-Platz, S. Robust unsupervised domain adaptation for neural networks via moment alignment. Inf. Sci. Int. J. 2019, 483, 174–191.

- Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153.

- Chen, C.; Fu, Z.; Chen, Z.; Jin, S.; Cheng, Z.; Jin, X.; Hua, X.-S. HoMM: Higher-order Moment Matching for Unsupervised Domain Adaptation. arXiv 2019, arXiv:1912.11976.

- Ganin, Y.; Lempitsky, V. Unsupervised Domain Adaptation by Backpropagation. arXiv 2014, arXiv:1409.7495.

- Gong, B.; Grauman, K.; Sha, F. Connecting the Dots with Landmarks: Discriminatively Learning Domain-Invariant Features for Unsupervised Domain Adaptation. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014.

- Long, M.; Cao, Y.; Wang, J.; Jordan, M. Learning Transferable Features with Deep Adaptation Networks. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015.

- Chen, C.; Chen, Z.; Jiang, B.; Jin, X. Joint Domain Alignment and Discriminative Feature Learning for Unsupervised Deep Domain Adaptation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial Discriminative Domain Adaptation. arXiv 2017, arXiv:1702.05464.

- Zhang, H.; Zhou, F.; Wu, Q.; Wu, W.; Hu, R.Q. A Novel Automatic Modulation Classification Scheme Based on Multi-Scale Networks. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 97–110.

| RFFs Extraction Method | Work | Extracted RFFs/Models | Performance | Advantages | Disadvantages |
|---|---|---|---|---|---|
| Direct Extraction | [6] | I/Q Imbalance | SNR 28 dB, Pcc 100% | Lower sample size; no complex operations | Need to carefully design features and classifiers; require prior information on signal parameters |
| | [7] | I/Q Imbalance | Unknown SNR, Pcc 100% | | |
| | [8] | I/Q Constellation Impairments | SNR 15 dB, Pcc 98% | | |
| | [9] | CFO | Only RFFs extraction, no SEI | | |
| Domain Transformation | [10] | Hilbert–Huang Transform | SNR 0 dB, Pcc 80% | Lower sample size; more domain transformation RFFs | Need to carefully design RFFs and classifiers; high computational complexity |
| | [11] | VMD-SF | SNR −2 dB, Pcc 80% | | |
| | [12] | tvf-EMD | SNR 16 dB, Pcc 85% | | |
| | [13] | MApEn | SNR 15 dB, Pcc 95.65% | | |
| | [14] | Joint Wavelet Packet Analysis | SNR 0 dB, Pcc 83% | | |
| Deep Learning | [18] | Inception-Residual Neural Network | SNR 9 dB, Pcc 92% | Strong RFFs extraction ability; end-to-end recognition; enhanced recognition effect | Large number of training samples; label annotations; longer training time |
| | [19] | MSCNN | SNR 30 dB, Pcc 97% | | |
| | [20] | CNN | Achieves a gain of about 3 dB | | |
| | [21] | CVNN + Network Compression | At high SNR, Pcc 100% | | |
| | [22] | TDGTAN | Unknown SNR, Pcc 100% | | |

| Data Subset | Specific Emitter 1 | Specific Emitter 2 |
|---|---|---|
| B1 | 8 May 2021 | 8 May 2021 |
| B2 | 24 May 2021 | 22 May 2021 |
| B3 | 9 June 2021 | 24 June 2021 |
| B4 | 10 July 2021 | 6 July 2021 |
| B5 | 19 August 2021 | 16 August 2021 |
| B6 | 8 September 2021 | 5 September 2021 |
| B7 | 14 October 2021 | 23 October 2021 |
| B8 | 6 December 2021 | 3 December 2021 |
| B9 | 11 February 2022 | 13 February 2022 |
| B10 | 8 March 2022 | 11 March 2022 |
| B11 | 10 May 2022 | 14 May 2022 |
| B12 | 15 June 2022 | 16 June 2022 |
| B13 | 7 August 2022 | 3 August 2022 |
| B14 | 21 September 2022 | 28 September 2022 |

| Parameter | Model A | Model B |
|---|---|---|
| Center Loss weight | 0.1 | 0.01 |
| HOMM3 Loss weight | 0.1 | 0.01 |
| Initial learning rate | 0.0007 | 0.001 |
| Learning rate | 0.01 | Equation (25) |
| Reversal Scalar | 0.01 | Equation (26) |
| Epoch | 100 | 100/300 |
| Batch Size | 64 | 64 |
| Center learning rate | 0.5 | 0.5 |
| Marginal factor | 100 | 100 |

| Method (average recognition accuracy) | A1->A2 | A1->A3 | B1->B2 | B1->B3 | B1->B4 | B1->B5 |
|---|---|---|---|---|---|---|
| I/Q | 0.8958 | 0.8592 | 0.5200 | 0.8650 | 0.4792 | 0.4692 |
| MMD | 0.9108 | 0.8933 | 0.5400 | 0.8458 | 0.4692 | 0.5008 |
| DAN | 0.9367 | 0.8883 | 0.5456 | 0.8708 | 0.4667 | 0.4883 |
| JDA | 0.9425 | 0.8867 | 0.5233 | 0.8542 | 0.4975 | 0.4933 |
| CMD | 0.9833 | 0.9417 | 0.5308 | 0.8467 | 0.4625 | 0.4700 |
| HOMM | 0.9842 | 0.9492 | 0.5367 | 0.8433 | 0.4842 | 0.4967 |
| UDAB | 0.9975 | 0.9925 | 0.5550 | 0.9425 | 0.5208 | 0.5283 |
| LTS-SEI | 0.9995 | 0.9971 | 0.9867 | 0.9942 | 0.9650 | 0.9642 |
| Upper Limit | 0.9996 | 0.9992 | 0.9987 | 0.9983 | 0.9992 | 0.9929 |

| Method (average recognition accuracy) | A1->A2 | A1->A3 | B1->B6 | B1->B7 | B1->B8 | B1->B9 |
|---|---|---|---|---|---|---|
| I/Q | 0.8958 | 0.8592 | 0.9450 | 0.4842 | 0.5758 | 0.6817 |
| Center | 0.9550 | 0.9150 | 0.9533 | 0.4800 | 0.5750 | 0.6808 |
| MMD | 0.9542 | 0.9342 | 0.9467 | 0.5008 | 0.5742 | 0.6883 |
| DAN | 0.9758 | 0.9258 | 0.9517 | 0.4875 | 0.5850 | 0.6617 |
| JDA | 0.9517 | 0.9425 | 0.9483 | 0.4917 | 0.5850 | 0.6483 |
| CMD | 0.9283 | 0.8925 | 0.9575 | 0.4625 | 0.5633 | 0.6500 |
| HOMM | 0.9875 | 0.9575 | 0.9617 | 0.4850 | 0.6242 | 0.7150 |
| LTS-SEI | 0.9995 | 0.9971 | 0.9892 | 0.9567 | 0.9458 | 0.9850 |
| Upper Limit | 0.9996 | 0.9992 | 0.9962 | 0.9983 | 0.9983 | 0.9975 |

| Method (average recognition accuracy) | A1->A2 | A1->A3 | B1->B10 | B1->B11 | B1->B12 | B1->B13 | B1->B14 |
|---|---|---|---|---|---|---|---|
| I/Q | 0.8958 | 0.8592 | 0.5083 | 0.4917 | 0.7308 | 0.5367 | 0.5150 |
| MMD | 0.9358 | 0.8467 | 0.5108 | 0.5200 | 0.7567 | 0.5558 | 0.5167 |
| DAN | 0.9117 | 0.8742 | 0.5550 | 0.4958 | 0.7892 | 0.5658 | 0.5233 |
| JDA | 0.9133 | 0.8425 | 0.5393 | 0.5092 | 0.7850 | 0.5283 | 0.5067 |
| CMD | 0.9058 | 0.8842 | 0.5358 | 0.5025 | 0.7208 | 0.5433 | 0.4858 |
| HOMM | 0.9533 | 0.9242 | 0.5383 | 0.5208 | 0.7417 | 0.5500 | 0.5025 |
| LTS-SEI | 0.9995 | 0.9971 | 0.9733 | 0.9392 | 0.9942 | 0.9533 | 0.9633 |
| Upper Limit | 0.9996 | 0.9992 | 0.9992 | 0.9979 | 0.9967 | 0.9933 | 0.9971 |

| Dataset | Original I/Q | Shallow Confrontation | Deep Alignment | LTS-SEI (No Center Loss) | LTS-SEI (No HOMM3 Loss) | LTS-SEI |
|---|---|---|---|---|---|---|
| A1->A2 | 0.8958 | 0.9975 | 0.9375 | 0.9975 | 0.9992 | 0.9995 |
| A1->A3 | 0.8592 | 0.9925 | 0.8667 | 0.9946 | 0.9942 | 0.9971 |
| B1->B2 | 0.5200 | 0.5550 | 0.5708 | 0.9658 | 0.5742 | 0.9867 |
| B1->B3 | 0.8650 | 0.9425 | 0.8392 | 0.9992 | 0.9217 | 0.9942 |
| B1->B9 | 0.6817 | 0.6942 | 0.6800 | 0.9800 | 0.6958 | 0.9850 |
| B1->B14 | 0.5150 | 0.4950 | 0.4908 | 0.9425 | 0.5492 | 0.9633 |

| Dataset | Original I/Q | Shallow Confrontation | Deep Alignment | LTS-SEI (No Center Loss) | LTS-SEI (No HOMM3 Loss) | LTS-SEI |
|---|---|---|---|---|---|---|
| A1->A2 | 0.9410 | 0.9976 | 0.9450 | 0.9975 | 0.9992 | 0.9995 |
| A1->A3 | 0.8905 | 0.9931 | 0.8961 | 0.9946 | 0.9942 | 0.9971 |
| B1->B2 | 0.5190 | 0.5546 | 0.5710 | 0.9658 | 0.5757 | 0.9867 |
| B1->B3 | 0.8909 | 0.9446 | 0.8732 | 0.9992 | 0.9277 | 0.9942 |
| B1->B9 | 0.7097 | 0.7069 | 0.6969 | 0.9806 | 0.6976 | 0.9851 |
| B1->B14 | 0.5167 | 0.4956 | 0.4898 | 0.9441 | 0.5491 | 0.9633 |

| Dataset | Original I/Q | Shallow Confrontation | Deep Alignment | LTS-SEI (No Center Loss) | LTS-SEI (No HOMM3 Loss) | LTS-SEI |
|---|---|---|---|---|---|---|
| A1->A2 | 0.8958 | 0.9975 | 0.9375 | 0.9975 | 0.9992 | 0.9995 |
| A1->A3 | 0.8592 | 0.9925 | 0.8667 | 0.9946 | 0.9942 | 0.9971 |
| B1->B2 | 0.5200 | 0.5550 | 0.5708 | 0.9658 | 0.5742 | 0.9867 |
| B1->B3 | 0.8650 | 0.9425 | 0.8392 | 0.9992 | 0.9217 | 0.9942 |
| B1->B9 | 0.6817 | 0.6942 | 0.6800 | 0.9800 | 0.6958 | 0.9850 |
| B1->B14 | 0.5150 | 0.4950 | 0.4808 | 0.9425 | 0.5492 | 0.9633 |

| Dataset | Original I/Q | Shallow Confrontation | Deep Alignment | LTS-SEI (No Center Loss) | LTS-SEI (No HOMM3 Loss) | LTS-SEI |
|---|---|---|---|---|---|---|
| A1->A2 | 0.8703 | 0.9975 | 0.9370 | 0.9975 | 0.9992 | 0.9995 |
| A1->A3 | 0.8490 | 0.9925 | 0.8495 | 0.9946 | 0.9942 | 0.9971 |
| B1->B2 | 0.5190 | 0.5538 | 0.5660 | 0.9658 | 0.5686 | 0.9867 |
| B1->B3 | 0.8634 | 0.9425 | 0.8362 | 0.9992 | 0.9215 | 0.9942 |
| B1->B9 | 0.6742 | 0.6909 | 0.6752 | 0.9800 | 0.6957 | 0.9850 |
| B1->B14 | 0.5148 | 0.4950 | 0.4898 | 0.9424 | 0.5491 | 0.9633 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, P.; Guo, L.; Zhao, H.; Shang, P.; Chu, Z.; Lu, X. A Long Time Span-Specific Emitter Identification Method Based on Unsupervised Domain Adaptation. Remote Sens. 2023, 15, 5214. https://doi.org/10.3390/rs15215214


Chicago/Turabian style: Liu, Pengfei, Lishu Guo, Hang Zhao, Peng Shang, Ziyue Chu, and Xiaochun Lu. 2023. "A Long Time Span-Specific Emitter Identification Method Based on Unsupervised Domain Adaptation" Remote Sensing 15, no. 21: 5214. https://doi.org/10.3390/rs15215214