CURI-YOLOv7: A Lightweight YOLOv7tiny Target Detector for Citrus Trees from UAV Remote Sensing Imagery Based on Embedded Device

Abstract

1. Introduction

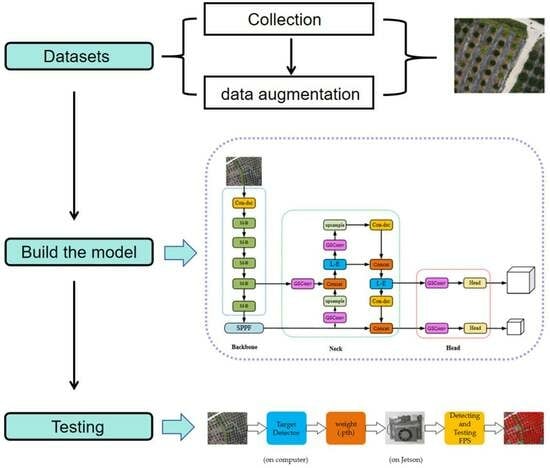

2. Materials and Methods

2.1. Collection and Production of Datasets

2.2. CURI-YOLOv7 Structure Detail

- (1) Redesigned the backbone based on the MobileOne-block structure.
- (2) Replaced the original spatial pyramid pooling structure with a faster structure named SPPF.
- (3) Removed the large-object detection layer and redesigned the input and output structures of PANet.
- (4) Replaced the ELAN structure in YOLOv7tiny with Lightweight-ELAN (L-E).
- (5) Replaced the convolutional layers in the neck network with GSConv and depthwise separable convolution.
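Item (5) relies on depthwise separable convolution, the factorization popularized by MobileNets. It replaces one dense k×k convolution with two steps: a k×k depthwise convolution that uses one filter per input channel, followed by a 1×1 pointwise convolution that mixes channels. The short sketch below counts weights for both forms. It ignores bias and BatchNorm terms, and the 128→128-channel, 3×3 layer is a hypothetical example, not a layer taken from CURI-YOLOv7.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k standard convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k depthwise conv followed by a 1 x 1 pointwise conv (bias omitted)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixes the channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 128 -> 128 channels with a 3 x 3 kernel.
    std = standard_conv_params(128, 128, 3)
    dws = depthwise_separable_params(128, 128, 3)
    print(std, dws, f"{dws / std:.3f}")
```

For this layer the separable version needs about 12% of the weights. In general the ratio is 1/C_out + 1/k², so the saving grows with the kernel size and the channel count.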

2.2.1. Construction of Backbone
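MobileOne blocks use structural re-parameterization. During training, a block keeps several parallel branches: convolution + BatchNorm branches and a BatchNorm-only skip. For inference, these branches are folded into a single convolution with a bias.

The sketch below illustrates that folding for a single channel in plain Python. It assumes one 3×3 branch, one 1×1 branch and an identity BatchNorm branch. The real MobileOne block uses several over-parameterized depthwise and pointwise branches. The kernels and BatchNorm statistics are hypothetical values, not parameters from CURI-YOLOv7.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
BN = Tuple[float, float, float, float]  # (gamma, beta, running_mean, running_var)


def conv3x3(x: Matrix, k: Matrix, bias: float = 0.0) -> Matrix:
    """Single-channel 3x3 cross-correlation, stride 1, zero padding 1."""
    h, w = len(x), len(x[0])
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(3):
                for dj in range(3):
                    r, c = i + di - 1, j + dj - 1
                    if 0 <= r < h and 0 <= c < w:
                        out[i][j] += k[di][dj] * x[r][c]
    return out


def bn_scale_shift(gamma: float, beta: float, mean: float, var: float, eps: float = 1e-5):
    """Return (scale, shift) such that BatchNorm(z) == scale * z + shift at inference."""
    scale = gamma / math.sqrt(var + eps)
    return scale, beta - mean * scale


def train_time_block(x: Matrix, k3: Matrix, k1: float, bn3: BN, bn1: BN, bn_id: BN) -> Matrix:
    """Sum of three branches: 3x3 conv + BN, 1x1 conv + BN, and BN on the identity."""
    s3, t3 = bn_scale_shift(*bn3)
    s1, t1 = bn_scale_shift(*bn1)
    si, ti = bn_scale_shift(*bn_id)
    y3 = conv3x3(x, k3)
    return [[s3 * y3[i][j] + t3 + s1 * (k1 * x[i][j]) + t1 + si * x[i][j] + ti
             for j in range(len(x[0]))] for i in range(len(x))]


def reparameterize(k3: Matrix, k1: float, bn3: BN, bn1: BN, bn_id: BN):
    """Fold all branches into one 3x3 kernel plus a scalar bias."""
    s3, t3 = bn_scale_shift(*bn3)
    s1, t1 = bn_scale_shift(*bn1)
    si, ti = bn_scale_shift(*bn_id)
    fused = [[s3 * k3[a][b] for b in range(3)] for a in range(3)]
    fused[1][1] += s1 * k1 + si  # the 1x1 kernel and the identity both act on the centre tap
    return fused, t3 + t1 + ti


if __name__ == "__main__":
    x = [[1.0, 2.0, 0.5, -1.0], [0.0, 3.0, 1.5, 2.0], [-2.0, 1.0, 0.0, 4.0]]
    k3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.2, -0.3]]
    bn3, bn1, bn_id = (1.2, 0.1, 0.3, 0.9), (0.8, -0.2, -0.1, 1.5), (1.0, 0.05, 0.2, 0.4)
    y_train = train_time_block(x, k3, 0.7, bn3, bn1, bn_id)
    k, b = reparameterize(k3, 0.7, bn3, bn1, bn_id)
    y_infer = conv3x3(x, k, b)
    print(max(abs(a - c) for ra, rc in zip(y_train, y_infer) for a, c in zip(ra, rc)))
```

The printed difference should be at floating-point rounding level. This shows that the multi-branch training block and the single fused convolution compute the same function, which is why MobileOne-style backbones can be cheap at inference time.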

2.2.2. Spatial Pyramid Pooling and ELAN Improvements
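SPPF, as introduced in the YOLOv5 code base, replaces parallel max-pooling with kernels 5, 9 and 13 by a single 5×5 max pool applied three times in sequence. This works because two stacked stride-1 5×5 pools cover a 9×9 window, and three cover a 13×13 window.

The plain-Python sketch below checks that equivalence on a single-channel grid. It omits the 1×1 convolutions and the channel concatenation that surround the pooling in the real module. Out-of-bounds cells are simply ignored, which matches max pooling with negative-infinity padding.

```python
from typing import List

Matrix = List[List[float]]


def max_pool_same(x: Matrix, k: int) -> Matrix:
    """k x k max pooling, stride 1, 'same' output size; cells outside the grid are ignored."""
    r = k // 2
    h, w = len(x), len(x[0])
    return [[max(x[a][b]
                 for a in range(max(0, i - r), min(h, i + r + 1))
                 for b in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)] for i in range(h)]


def spp(x: Matrix) -> List[Matrix]:
    """SPP-style: parallel pools with kernels 5, 9 and 13 on the same input."""
    return [x, max_pool_same(x, 5), max_pool_same(x, 9), max_pool_same(x, 13)]


def sppf(x: Matrix) -> List[Matrix]:
    """SPPF-style: one 5x5 pool applied three times in sequence, keeping each intermediate."""
    p1 = max_pool_same(x, 5)
    p2 = max_pool_same(p1, 5)
    p3 = max_pool_same(p2, 5)
    return [x, p1, p2, p3]


if __name__ == "__main__":
    import random

    random.seed(0)
    grid = [[random.random() for _ in range(17)] for _ in range(15)]
    print(spp(grid) == sppf(grid))
```

Both functions produce identical feature maps. SPPF reuses each intermediate result, so every pooling step only scans a 5×5 window, which explains why it is faster than SPP.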

2.2.3. Construction of the Input and Output Layers of the Neck
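YOLOv7tiny predicts on three feature maps with strides 8, 16 and 32 and uses three anchors per grid cell. By YOLO convention, the stride-32 map is the large-object ("big target") layer.

The sketch below shows how many candidate boxes the head emits with and without that layer. It assumes a 640×640 input, which is YOLOv7tiny's default; this section does not state CURI-YOLOv7's actual input size or exactly which strides it keeps. The larger saving presumably comes from the neck layers that fed the removed output, which this count does not capture.

```python
from typing import Dict, Sequence


def grid_sizes(img_size: int, strides: Sequence[int]) -> Dict[int, int]:
    """Side length of the square output grid for each detection stride."""
    return {s: img_size // s for s in strides}


def num_predictions(img_size: int, strides: Sequence[int], anchors_per_cell: int = 3) -> int:
    """Candidate boxes produced by anchor-based heads (one set of anchors per grid cell)."""
    return sum((img_size // s) ** 2 * anchors_per_cell for s in strides)


if __name__ == "__main__":
    IMG = 640                   # assumed input size
    THREE_HEADS = (8, 16, 32)   # small-, medium- and large-object heads
    TWO_HEADS = (8, 16)         # stride-32 (large-object) head removed
    print(grid_sizes(IMG, THREE_HEADS))
    print(num_predictions(IMG, THREE_HEADS), num_predictions(IMG, TWO_HEADS))
```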

2.2.4. Fusion of GSConv and Depthwise Separable Convolution into the Neck
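GSConv, from the slim-neck design, produces half of its output channels with a dense convolution and the other half with a cheap depthwise convolution applied to that first half. It then concatenates the two halves and shuffles the channels so that information from both paths is mixed.

The sketch below models the two ideas in plain Python: a two-group ShuffleNet-style channel shuffle on a list of channel labels, and a weight count. The 5×5 depthwise kernel is an assumption about the reference design, and the exact permutation used in the official GSConv code may differ from this interleaving. Bias and BatchNorm weights are ignored.

```python
from typing import List, Sequence


def channel_shuffle(channels: Sequence, groups: int = 2) -> List:
    """ShuffleNet-style shuffle: view as (groups, C/groups), transpose, flatten."""
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


def gsconv_params(c_in: int, c_out: int, k: int = 3, dw_k: int = 5) -> int:
    """Weights of a GSConv-like block: dense k x k conv to c_out/2, then depthwise dw_k x dw_k on that half."""
    half = c_out // 2
    dense = k * k * c_in * half
    depthwise = dw_k * dw_k * half
    return dense + depthwise


if __name__ == "__main__":
    dense_out = [f"d{i}" for i in range(4)]  # channels from the dense convolution
    dw_out = [f"w{i}" for i in range(4)]     # channels from the depthwise convolution
    print(channel_shuffle(dense_out + dw_out))  # interleaves the two halves
    print(gsconv_params(128, 128), 3 * 3 * 128 * 128)
```

For the hypothetical 128→128-channel 3×3 layer, the GSConv-like block needs roughly half the weights of a standard convolution. It also keeps more cross-channel mixing than a purely depthwise separable layer.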

2.3. Embedded Device

2.4. Evaluation Indicators and Training Environment Setting
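The comparison table reports precision, recall, F1 and mAP@0.5. This section's formulas are not reproduced here, so the sketch below assumes the standard definitions:

- P = TP/(TP+FP)
- R = TP/(TP+FN)
- F1 is the harmonic mean of P and R.
- For mAP@0.5, a prediction counts as a true positive when its IoU with a ground-truth box is at least 0.5.

The sketch does not compute mAP itself, which additionally requires ranking detections by confidence.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Precision and recall (%) as reported in the model comparison table.
    for name, p, r in [("YOLOv7tiny", 90.83, 93.40), ("CURI-YOLOv7", 89.02, 83.70)]:
        print(name, round(f1_score(p / 100, r / 100), 2))
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
```

Plugging in the reported precision and recall reproduces the F1 column of the comparison table: 0.92 for YOLOv7tiny and 0.86 for CURI-YOLOv7.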

3. Results

3.1. Ablation Study

3.2. Comparison of CURI-YOLOv7 on the Embedded Device

3.3. Comparison of CURI-YOLOv7 on a Computer

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Stage | Block-Type | Stride | Kernel Size | Input Channels | Output Channels |
|---|---|---|---|---|---|
| 1 | Con des | 1 | 3 | 16 | |
| 2 | MobileOne-block | 2 | 1 | 16 | 32 |
| 3 | MobileOne-block | 2 | 1 | 32 | 64 |
| 4 | MobileOne-block | 2 | 1 | 64 | 128 |
| 5 | MobileOne-block | 2 | 1 | 128 | 256 |
| 6 | MobileOne-block | 2 | 1 | 256 | 512 |

| CPU | GPU | Size | DLA | Vision Accelerator |
|---|---|---|---|---|
| 6-core NVIDIA Carmel Arm v8.2 64-bit CPU | 384-core NVIDIA Volta™ architecture GPU with 48 Tensor Cores | 69.6 mm × 45 mm | 2 × NVDLA | 2 × PVA |

| CPU | GPU | CUDA | PyCharm | PyTorch | NumPy | Torchvision |
|---|---|---|---|---|---|---|
| i9-10900KF | RTX 3090 | 11.0 | 2020.1 x64 | 1.7.1 | 1.21.5 | 0.8.2 |

| Backbone | SPPF&L-E | Neck | GFLOPs | Paras (M) | Weights (MB) | FPS (on Computer) |
|---|---|---|---|---|---|---|
| | | | 13.181 | 6.014 | 23.1 | 83.07 |
| √ | | | 6.669 | 3.726 | 14.3 | 95.12 |
| √ | √ | | 5.877 | 3.181 | 12.2 | 104.29 |
| √ | √ | √ | 1.976 | 1.018 | 3.98 | 128.83 |
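The ablation numbers can be expressed as relative changes with simple arithmetic. The sketch below uses only the values in the table above. It compares the baseline row, whose GFLOPs, parameters and weight size match YOLOv7tiny in the model comparison table, with the row where all three modifications are applied. The FPS figures were measured on the computer, so the speed-up on the embedded device may differ.

```python
from typing import Dict

# Values copied from the ablation table above.
BASELINE: Dict[str, float] = {"GFLOPs": 13.181, "Paras (M)": 6.014, "Weights (MB)": 23.1, "FPS": 83.07}
FULL: Dict[str, float] = {"GFLOPs": 1.976, "Paras (M)": 1.018, "Weights (MB)": 3.98, "FPS": 128.83}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means a reduction)."""
    return (new - old) / old * 100.0


for key in BASELINE:
    print(f"{key}: {percent_change(BASELINE[key], FULL[key]):+.2f}%")
```

This prints about -85.01% for GFLOPs, -83.07% for parameters, -82.77% for weight size and +55.09% for FPS.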

| Models | GFLOPs | Paras (M) | Weights (MB) | F1 | Recall (%) | Precision (%) | mAP@0.5 (%) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 948.122 | 28.275 | 108 | 0.82 | 82.37 | 81.38 | 82.33 |
| SSD | 273.174 | 23.612 | 90.6 | 0.90 | 97.03 | 83.19 | 93.86 |
| YOLOv5s | 16.477 | 7.064 | 27.1 | 0.92 | 94.51 | 89.62 | 96.93 |
| YOLOv7tiny | 13.181 | 6.014 | 23.1 | 0.92 | 93.40 | 90.83 | 96.97 |
| YOLOv8n | 8.194 | 3.011 | 11.6 | 0.92 | 93.26 | 91.53 | 97.46 |
| CURI-YOLOv7 | 1.976 | 1.018 | 3.98 | 0.86 | 83.70 | 89.02 | 90.34 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Fang, X.; Guo, J.; Wang, L.; Tian, H.; Yan, K.; Lan, Y. CURI-YOLOv7: A Lightweight YOLOv7tiny Target Detector for Citrus Trees from UAV Remote Sensing Imagery Based on Embedded Device. Remote Sens. 2023, 15, 4647. https://doi.org/10.3390/rs15194647


Chicago/Turabian Style: Zhang, Yali, Xipeng Fang, Jun Guo, Linlin Wang, Haoxin Tian, Kangting Yan, and Yubin Lan. 2023. "CURI-YOLOv7: A Lightweight YOLOv7tiny Target Detector for Citrus Trees from UAV Remote Sensing Imagery Based on Embedded Device" Remote Sensing 15, no. 19: 4647. https://doi.org/10.3390/rs15194647