Surveillance Video Georeference Method Based on Real Scene Model with Geometry Priors

Abstract

1. Introduction

2. Related Works

- In traditional monitoring, once a surveillance system grows beyond what human operators can watch, security operators must mentally map each surveillance monitor image to the corresponding area in the real world [9];

- This method relies on manual operation, so it leaves considerable room for automation;

- This method cannot accurately register images to the actual ground.

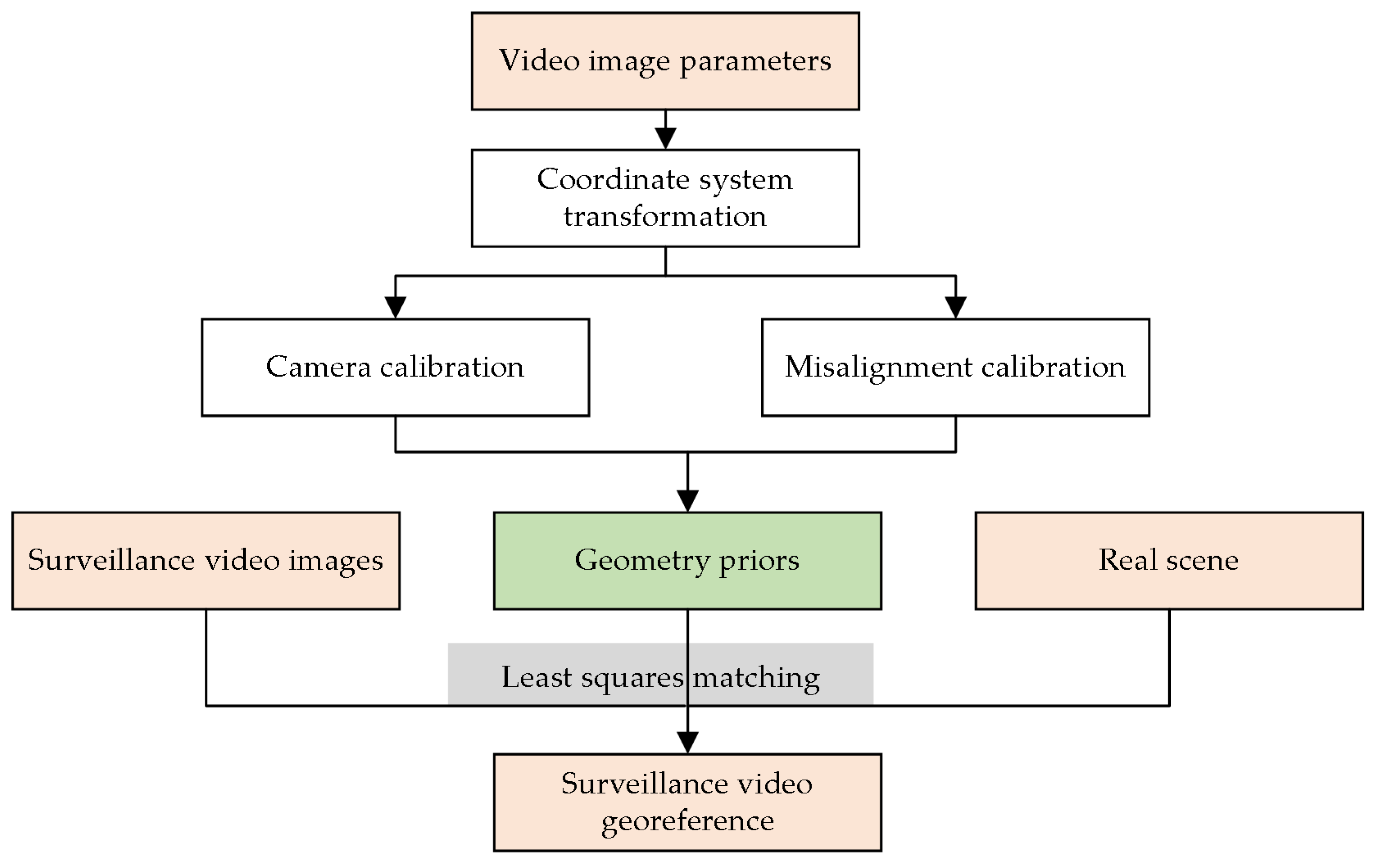

3. Methods

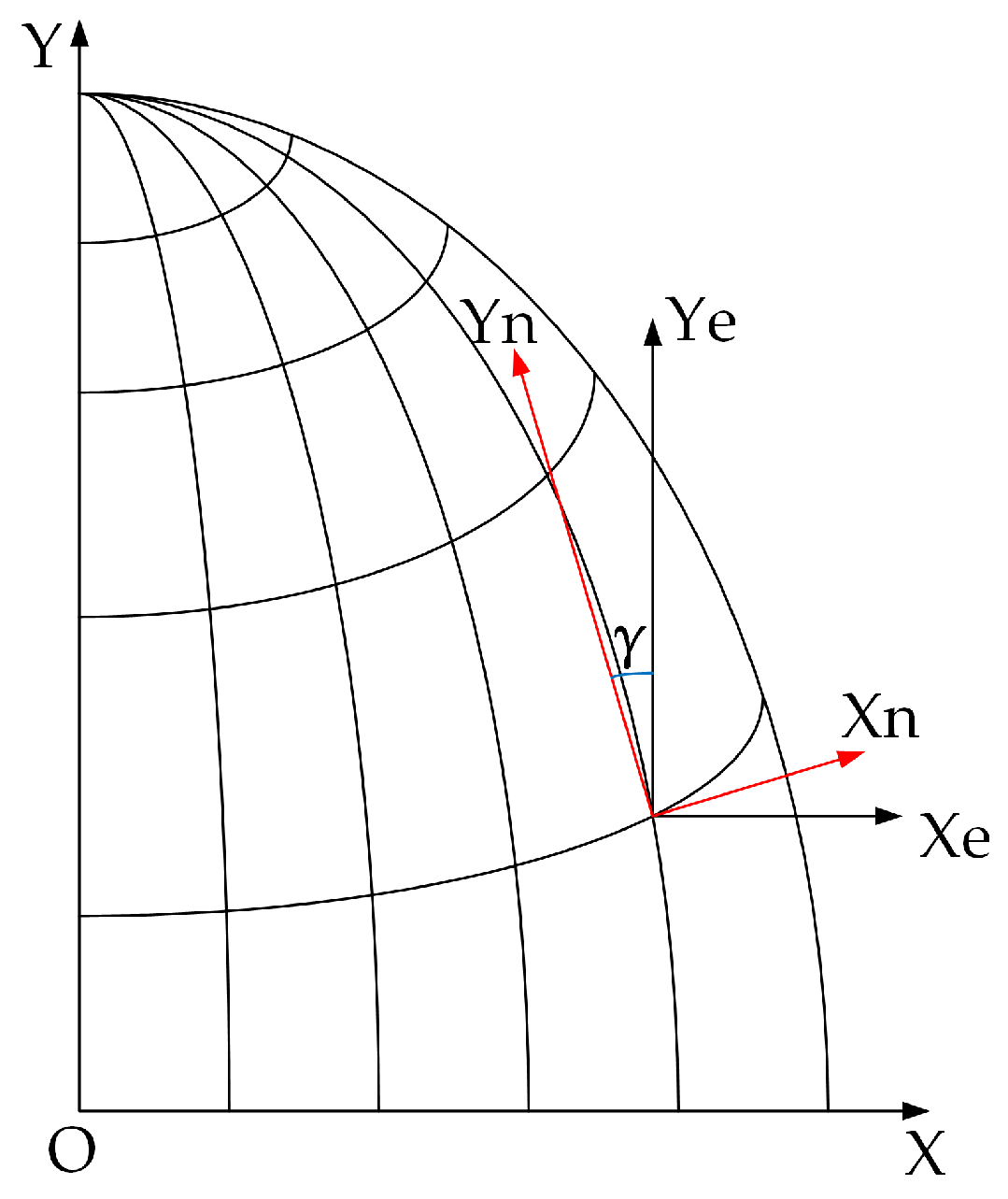

3.1. Coordinate System Transformation for Surveillance Video

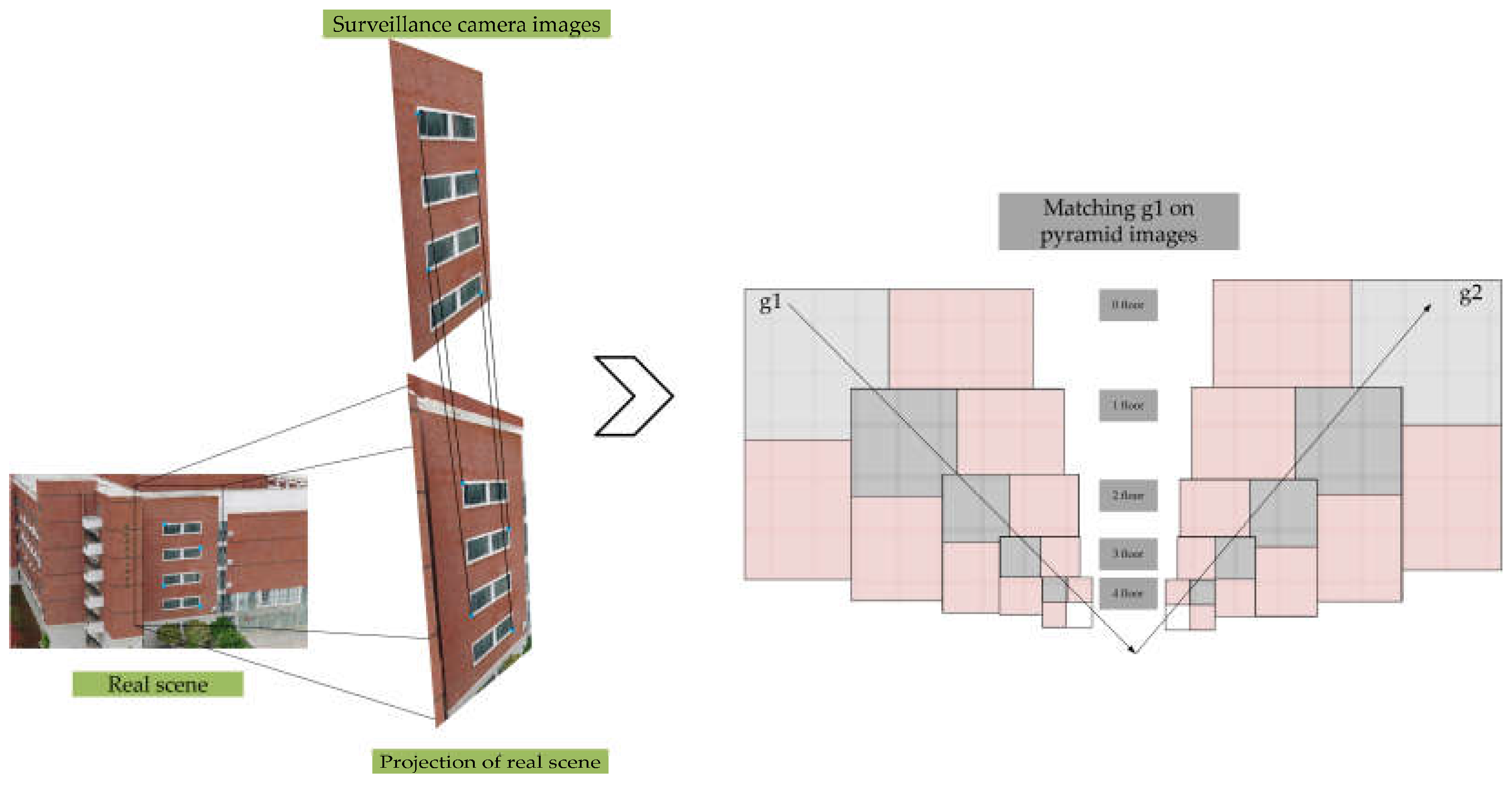

3.2. Surveillance Video Georeference Method

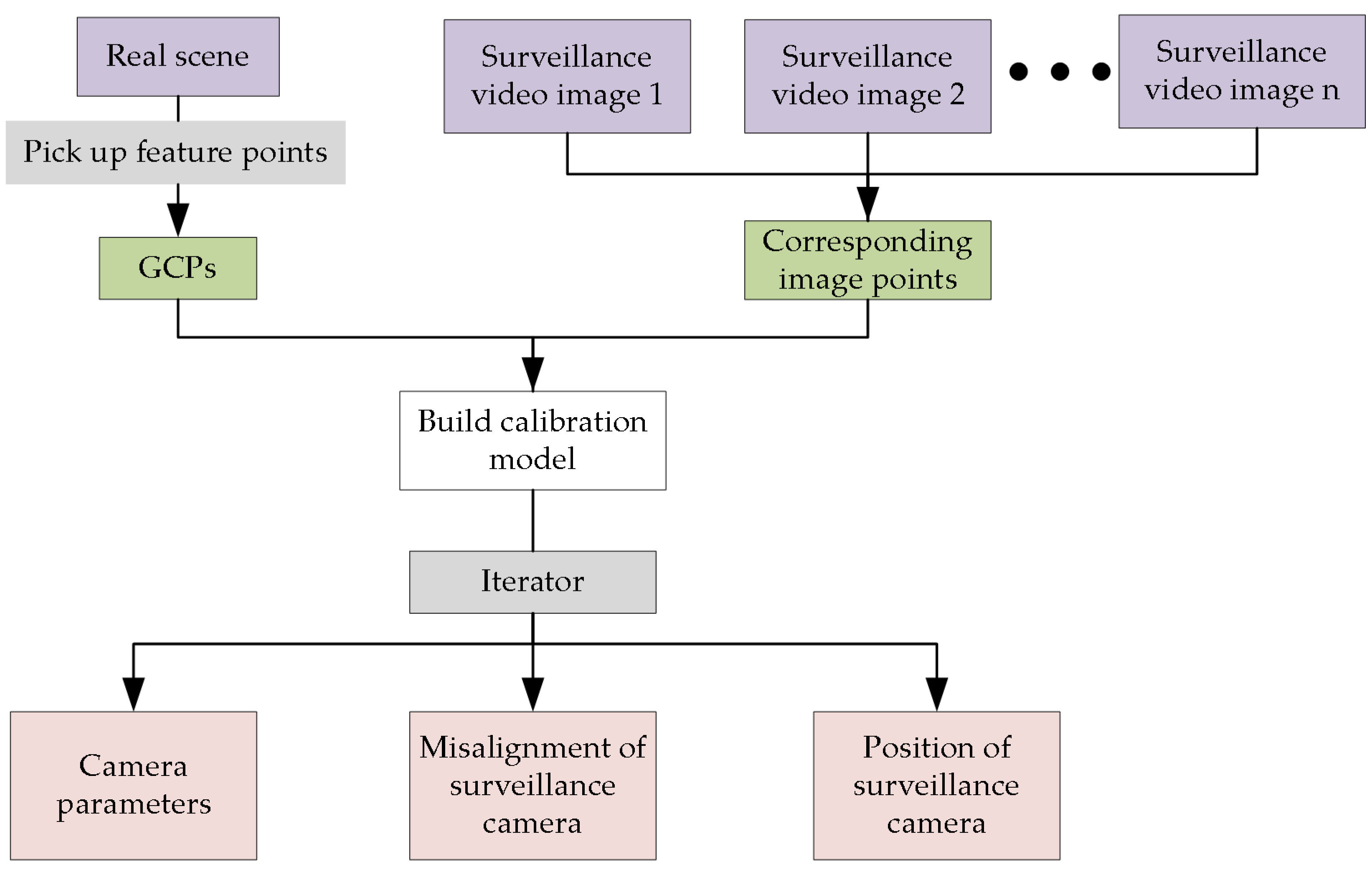

3.2.1. Camera and Misalignment Calibration for Surveillance Video

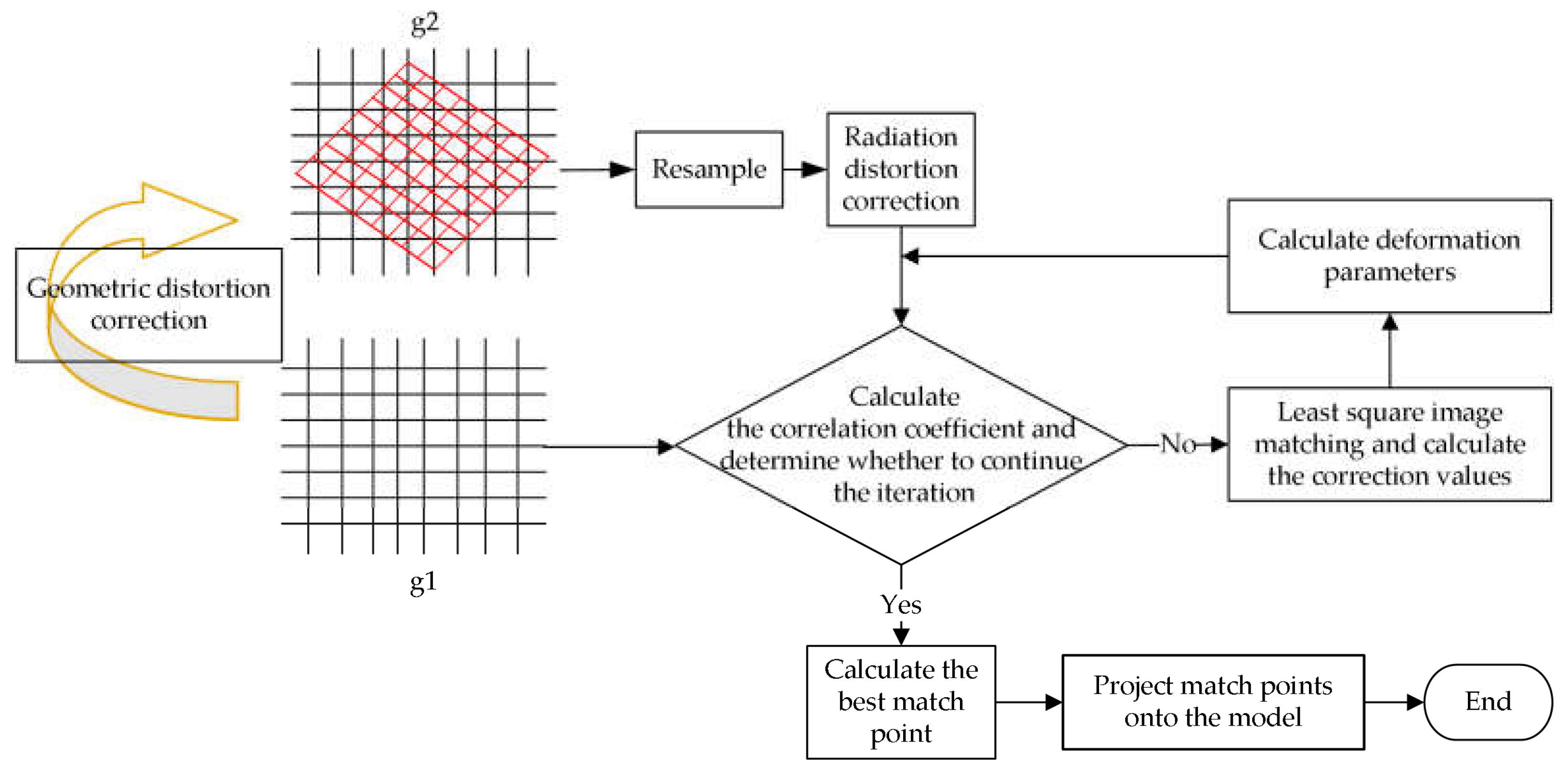

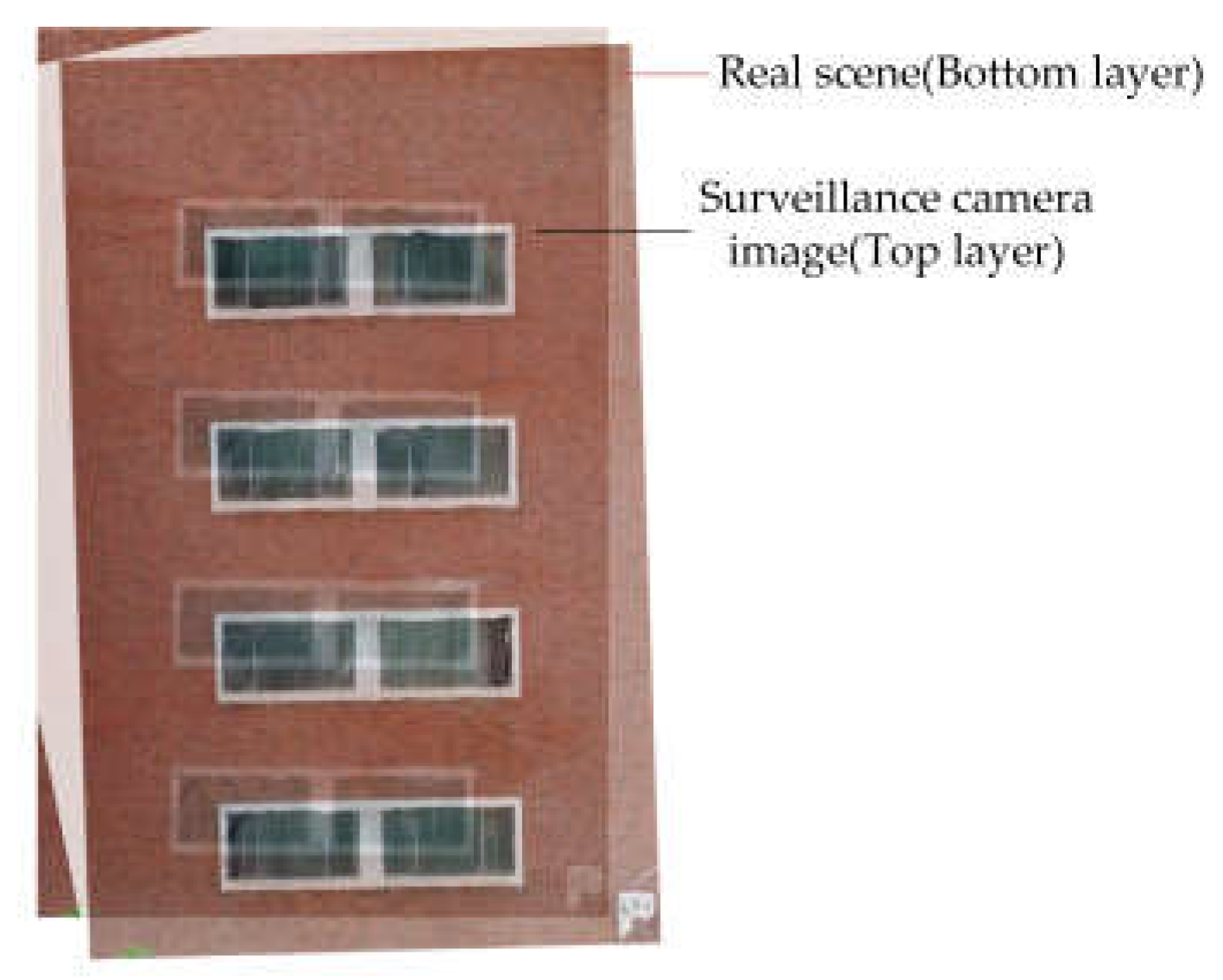

3.2.2. Accurate Registration Method with Geometry Priors

4. Results and Discussion

4.1. Description of Experimental Equipment

4.2. Result and Analysis of Camera Calibration

4.3. Result and Analysis of Position and Attitude Conversion Parameters

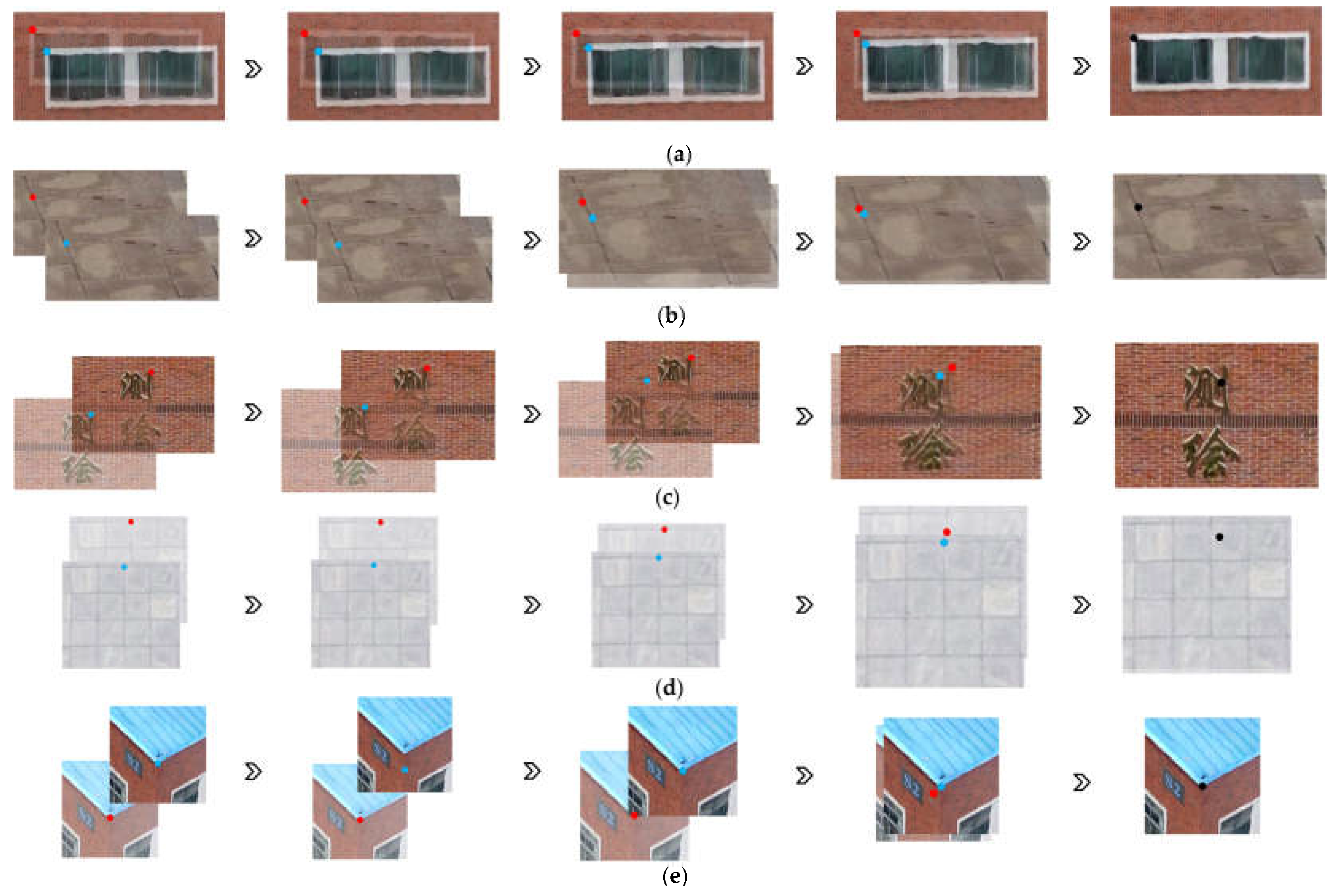

4.4. Result and Analysis of Registration Accuracy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yaagoubi, R.; El Yarmani, M.; Kamel, A.; Khemiri, W. HybVOR: A Voronoi-based 3D GIS approach for camera surveillance network placement. ISPRS Int. J. Geo-Inf. 2015, 4, 754–782.

2. Eugster, H.; Nebiker, S. UAV-based augmented monitoring-real-time georeferencing and integration of video imagery with virtual globes. IAPRSSIS 2008, 37, 1229–1235.

3. Wang, X. Intelligent multi-camera video surveillance: A review. Pattern Recognit. Lett. 2013, 34, 3–19.

4. Collins, R.T.; Lipton, A.J.; Kanade, T.; Fujiyoshi, H.; Duggins, D.; Tsin, Y.; Tolliver, D.; Enomoto, N.; Hasegawa, O.; Burt, P. A system for video surveillance and monitoring. VSAM Final Rep. 2000, 2000, 1.

5. Eugster, H.; Nebiker, S. Real-time georegistration of video streams from mini or micro UAS using digital 3D city models. In Proceedings of the 6th International Symposium on Mobile Mapping Technology, Presidente Prudente, São Paulo, Brazil, 21–24 July 2009.

6. Milosavljević, A.; Rančić, D.; Dimitrijević, A.; Predić, B.; Mihajlović, V. A method for estimating surveillance video georeferences. ISPRS Int. J. Geo-Inf. 2017, 6, 211.

7. Mostafa, M.M.; Schwarz, K.-P. Digital image georeferencing from a multiple camera system by GPS/INS. ISPRS J. Photogramm. Remote Sens. 2001, 56, 1–12.

8. Nagalakshmi, T. A Study on Usage of CCTV Surveillance System with Special Reference to Business Outlets in Hyderabad. Tactful Manag. Res. J. 2012, 1, 1–12.

9. Milosavljević, A.; Rančić, D.; Dimitrijević, A.; Predić, B.; Mihajlović, V. Integration of GIS and video surveillance. Int. J. Geogr. Inf. Sci. 2016, 30, 2089–2107.

10. Keller, M.; Lefloch, D.; Lambers, M.; Izadi, S.; Weyrich, T.; Kolb, A. Real-time 3D reconstruction in dynamic scenes using point-based fusion. In Proceedings of the 2013 International Conference on 3D Vision (3DV 2013), Seattle, WA, USA, 29 June–1 July 2013; pp. 1–8.

11. Schall, G.; Zollmann, S.; Reitmayr, G. Smart Vidente: Advances in mobile augmented reality for interactive visualization of underground infrastructure. Pers. Ubiquitous Comput. 2013, 17, 1533–1549.

12. Lewis, P. Linking Spatial Video and GIS; National University of Ireland Maynooth: Maynooth, Ireland, 2009.

13. Xie, Y.J.; Wang, M.Z.; Liu, X.J.; Wu, Y.G. Integration of GIS and Moving Objects in Surveillance Video. ISPRS Int. J. Geo-Inf. 2017, 6, 94.

14. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

15. Lee, S.C.; Nevatia, R. Robust camera calibration tool for video surveillance camera in urban environment. In Proceedings of the CVPR 2011 Workshops, Colorado Springs, CO, USA, 20–25 June 2011; pp. 62–67.

16. Munoz, J.C.P.; Alarcon, C.A.O.; Osorio, A.F.; Mejia, C.E.; Medina, R. Environmental applications of camera images calibrated by means of the Levenberg-Marquardt method. Comput. Geosci. 2013, 51, 74–82.

17. Mukherjee, D.; Jonathan Wu, Q.; Wang, G. A comparative experimental study of image feature detectors and descriptors. Mach. Vis. Appl. 2015, 26, 443–466.

18. Sharma, S.K.; Jain, K.; Shukla, A.K. A Comparative Analysis of Feature Detectors and Descriptors for Image Stitching. Appl. Sci. 2023, 13, 6015.

19. Forero, M.G.; Mambuscay, C.L.; Monroy, M.F.; Miranda, S.L.; Méndez, D.; Valencia, M.O.; Gomez Selvaraj, M. Comparative analysis of detectors and feature descriptors for multispectral image matching in rice crops. Plants 2021, 10, 1791.

20. Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

21. Saleem, S.; Bais, A.; Sablatnig, R. Towards feature points based image matching between satellite imagery and aerial photographs of agriculture land. Comput. Electron. Agric. 2016, 126, 12–20.

22. Yuan, X.; Kong, L.; Feng, D.; Wei, Z. Automatic feature point detection and tracking of human actions in time-of-flight videos. IEEE/CAA J. Autom. Sin. 2017, 4, 677–685.

23. Liu, J.C.; Xu, W.; Jiang, T.; Han, X.F. Development of an Attitude Transformation Method From the Navigation Coordinate System to the Projection Coordinate System. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1318–1322.

24. Redfearn, J. Transverse Mercator formulae. Emp. Surv. Rev. 1948, 9, 318–322.

25. Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166.

| Frames | Abbreviation |
|---|---|
| Navigation frame | g |
| Body frame | u |
| Camera frame | c |
| Map projection frame | s |

| Parameter | Attribute |
|---|---|
| Model | DH-SD-8A1440XA-HNR |
| Minimum focal length | 5.5 mm |
| Maximum focal length | 220 mm |
| Sensor | 1/1.8-inch CMOS |
| Image size | 2560 × 1440 pixels |
| Pixel size | 1.97 μm |
| FOV | Horizontal: 61.4° to 2.27°; vertical: 35.99° to 1.3° |
| Heading angle | 0° to 360° |
| Pitch angle | −30° to 90° |

| Number | (Pixel) | (Pixel) | (Pixel) |
|---|---|---|---|
| Camera 1 | 1286.47 | 717.86 | 2448.52 |
| Camera 2 | 1282.55 | 722.37 | 2453.71 |
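The column symbols of the calibration table did not survive extraction. Their magnitudes suggest that the first two columns are the principal point $(c_x, c_y)$, which sits close to the centre of the 2560 × 1440 image, and that the third is a focal length expressed in pixels. The sketch below assumes exactly that reading, plus square pixels and zero skew, and shows how such values would enter a standard pinhole projection. The names `CALIBRATION`, `intrinsic_matrix`, and `project` are illustrative only.

```python
import numpy as np

# Assumed reading of the calibration table: (c_x, c_y, f), all in pixels.
CALIBRATION = {
    "Camera 1": (1286.47, 717.86, 2448.52),
    "Camera 2": (1282.55, 722.37, 2453.71),
}


def intrinsic_matrix(cx, cy, f):
    """Pinhole intrinsic matrix K, assuming square pixels and zero skew."""
    return np.array([[f, 0.0, cx],
                     [0.0, f, cy],
                     [0.0, 0.0, 1.0]])


def project(K, point_cam):
    """Project a camera-frame point (Z along the optical axis) to pixel (u, v)."""
    p = np.asarray(point_cam, dtype=float)
    if p[2] <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    uvw = K @ p
    return uvw[:2] / uvw[2]


K1 = intrinsic_matrix(*CALIBRATION["Camera 1"])
uv_axis = project(K1, (0.0, 0.0, 10.0))  # a point on the optical axis lands on (c_x, c_y)
uv_off = project(K1, (1.0, 0.5, 10.0))   # 1 m right, 0.5 m down, 10 m ahead
```

A point 1 m off-axis at 10 m range moves by $f \cdot 0.1 \approx 245$ pixels, which illustrates why small errors in the calibrated focal length matter for registration at long range.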

| Number | Longitude (°E) | Latitude (°N) | Altitude (m) | (Degree) | (Degree) | (Degree) |
|---|---|---|---|---|---|---|
| Camera 1 | 120.1246358 | 36.0009723 | 33.859 | −1.2 | 2.6 | 3.5 |
| Camera 2 | 120.1249309 | 35.9999611 | 35.454 | 0.8 | −3.4 | 2.7 |
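The three unlabeled angle columns are small (a few degrees), which is what one would expect from a camera misalignment (boresight) calibration. Assuming they are rotations about the x, y, and z axes composed as $R = R_z R_y R_x$ (a common convention; the paper's exact order and sign convention are not recoverable from this text), the sketch below builds the rotation for Camera 1 and measures how far it tilts the optical axis. The helper names are hypothetical.

```python
import numpy as np


def rot_x(a):
    """Rotation about the x axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    """Rotation about the y axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    """Rotation about the z axis by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def misalignment_matrix(ax_deg, ay_deg, az_deg):
    """Assumed convention: R = Rz(az) @ Ry(ay) @ Rx(ax), angles in degrees."""
    ax, ay, az = np.radians([ax_deg, ay_deg, az_deg])
    return rot_z(az) @ rot_y(ay) @ rot_x(ax)


def boresight_deviation_deg(R):
    """Angle between the nominal optical axis (0, 0, 1) and its rotated image."""
    z = np.array([0.0, 0.0, 1.0])
    cos_angle = np.clip(z @ (R @ z), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle)))


R1 = misalignment_matrix(-1.2, 2.6, 3.5)         # Camera 1 angles from the table
dev1 = boresight_deviation_deg(R1)               # about 2.86 degrees
offset_100m = 100.0 * np.tan(np.radians(dev1))   # lateral ray offset at 100 m range
```

Under this convention the rotation about z does not move the optical axis, so the deviation depends only on the first two angles ($\cos\theta = \cos a_x \cos a_y$). Left uncorrected, a deviation of roughly 2.9° would displace the viewing ray by about 5 m at a range of 100 m.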

| Number | P (pan, °) | T (tilt, °) | Z (zoom) |
|---|---|---|---|
| Image 1 | 20.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 2 | 40.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 3 | 60.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 4 | 80.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 5 | 100.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 6 | 120.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 7 | 140.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 8 | 160.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 9 | 180.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 10 | 200.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 11 | 220.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 12 | 240.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 13 | 260.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 14 | 280.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 15 | 300.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 16 | 320.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 17 | 340.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
| Image 18 | 360.0 | 45.0/55.0/65.0/75.0/85.0 | 1.0 |
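Read row by row, this acquisition plan is an 18 × 5 grid: pan angles from 20° to 360° in 20° steps, each combined with five tilt angles from 45° to 85°, all at zoom 1.0, for 90 PTZ presets in total. A minimal sketch that enumerates the same grid (the names `ptz_schedule` and `presets` are illustrative):

```python
# Pan angles 20, 40, ..., 360 degrees (one per "Image" row) and five tilt angles.
PAN_DEG = [20.0 * k for k in range(1, 19)]
TILT_DEG = [45.0, 55.0, 65.0, 75.0, 85.0]
ZOOM = 1.0


def ptz_schedule():
    """List (image_no, pan, tilt, zoom) presets, pan-major as in the table."""
    schedule = []
    for image_no, pan in enumerate(PAN_DEG, start=1):
        for tilt in TILT_DEG:
            schedule.append((image_no, pan, tilt, ZOOM))
    return schedule


presets = ptz_schedule()  # 90 presets in total
```

A pan of 360° points in the same direction as 0°, so the 18 pan steps cover the full circle exactly once.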

| Number | Easting (m) | Northing (m) | Altitude (m) | (Degree) | (Degree) | (Degree) |
|---|---|---|---|---|---|---|
| Image 1 | 240819.543391 | 3987880.875025 | 33.859000 | 31.745092666 | 50.954142676 | −34.226973360 |
| Image 2 | 240819.543391 | 3987880.875025 | 33.859000 | 47.129444042 | 38.057075507 | −55.904693437 |
| Image 3 | 240819.543391 | 3987880.875025 | 33.859000 | 54.575139746 | 22.451284505 | −70.487256792 |
| Image 4 | 240819.543391 | 3987880.875025 | 33.859000 | 57.420430967 | 5.813279602 | −81.981622930 |
| Image 5 | 240819.543391 | 3987880.875025 | 33.859000 | 56.920176724 | −11.042711394 | −92.796826270 |
| Image 6 | 240819.543391 | 3987880.875025 | 33.859000 | 52.859705079 | −27.468807568 | −104.942423737 |
| Image 7 | 240819.543391 | 3987880.875025 | 33.859000 | 43.416094145 | −42.481735575 | −121.202882060 |
| Image 8 | 240819.543391 | 3987880.875025 | 33.859000 | 24.604964601 | −53.899705132 | −146.141765924 |
| Image 9 | 240819.543391 | 3987880.875025 | 33.859000 | −4.889093366 | −57.475691796 | 178.522277674 |
| Image 10 | 240819.543391 | 3987880.875025 | 33.859000 | −31.745092666 | −50.954142676 | 145.773026640 |
| Image 11 | 240819.543391 | 3987880.875025 | 33.859000 | −47.129444042 | −38.057075507 | 124.095306563 |
| Image 12 | 240819.543391 | 3987880.875025 | 33.859000 | −54.575139746 | −22.451284505 | 109.512743208 |
| Image 13 | 240819.543391 | 3987880.875025 | 33.859000 | −57.420430967 | −5.813279602 | 98.018377070 |
| Image 14 | 240819.543391 | 3987880.875025 | 33.859000 | −56.920176724 | 11.042711394 | 87.203173730 |
| Image 15 | 240819.543391 | 3987880.875025 | 33.859000 | −52.859705079 | 27.468807568 | 75.057576263 |
| Image 16 | 240819.543391 | 3987880.875025 | 33.859000 | −43.416094145 | 42.481735575 | 58.797117940 |
| Image 17 | 240819.543391 | 3987880.875025 | 33.859000 | −24.604964601 | 53.899705132 | 33.858234076 |
| Image 18 | 240819.543391 | 3987880.875025 | 33.859000 | 4.889093366 | 57.475691796 | −1.477722326 |
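Comparing Camera 1's geodetic position (120.1246358° E, 36.0009723° N) with the projected position in this table suggests that the map projection frame is UTM zone 51N (central meridian 123° E, scale factor 0.9996) on the WGS 84 ellipsoid. In that projection, 240819.54 m is the easting and 3987880.88 m the northing. This is an inference from the numbers, not something stated in the surviving text; CGCS2000 would give practically identical values. Under that assumption, a minimal forward transverse Mercator sketch, using the standard Snyder series (closely related to the Redfearn formulae listed in the references), should reproduce both coordinates to well within a metre. The function names are hypothetical.

```python
import math

# Assumed ellipsoid and projection: WGS 84, UTM (k0 = 0.9996), northern hemisphere.
A_AXIS = 6378137.0
FLATTENING = 1.0 / 298.257223563
K0 = 0.9996


def utm_zone(lon_deg):
    """Standard 6-degree UTM zone number for a longitude in degrees."""
    return int((lon_deg + 180.0) // 6.0) + 1


def latlon_to_utm(lat_deg, lon_deg):
    """Forward transverse Mercator (Snyder series); returns (zone, easting, northing)."""
    zone = utm_zone(lon_deg)
    lon0 = 6.0 * zone - 183.0                        # central meridian of the zone
    e2 = FLATTENING * (2.0 - FLATTENING)             # first eccentricity squared
    ep2 = e2 / (1.0 - e2)                            # second eccentricity squared
    phi = math.radians(lat_deg)
    sin_p, cos_p, tan_p = math.sin(phi), math.cos(phi), math.tan(phi)
    nu = A_AXIS / math.sqrt(1.0 - e2 * sin_p ** 2)   # prime-vertical radius of curvature
    T, C = tan_p ** 2, ep2 * cos_p ** 2
    A = math.radians(lon_deg - lon0) * cos_p
    e4, e6 = e2 ** 2, e2 ** 3
    M = A_AXIS * (                                   # meridian arc length from the equator
        (1 - e2 / 4 - 3 * e4 / 64 - 5 * e6 / 256) * phi
        - (3 * e2 / 8 + 3 * e4 / 32 + 45 * e6 / 1024) * math.sin(2 * phi)
        + (15 * e4 / 256 + 45 * e6 / 1024) * math.sin(4 * phi)
        - (35 * e6 / 3072) * math.sin(6 * phi))
    easting = 500000.0 + K0 * nu * (
        A + (1 - T + C) * A ** 3 / 6
        + (5 - 18 * T + T ** 2 + 72 * C - 58 * ep2) * A ** 5 / 120)
    northing = K0 * (M + nu * tan_p * (
        A ** 2 / 2 + (5 - T + 9 * C + 4 * C ** 2) * A ** 4 / 24
        + (61 - 58 * T + T ** 2 + 600 * C - 330 * ep2) * A ** 6 / 720))
    return zone, easting, northing


zone, easting, northing = latlon_to_utm(36.0009723, 120.1246358)  # Camera 1
```

The altitude column (33.859 m) repeats the camera altitude unchanged, since a horizontal map projection does not alter heights.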

| Number | Selected Cases | Successful Cases | Right Cases | Success Rate | Accuracy Rate |
|---|---|---|---|---|---|
| 1 | 55 | 55 | 54 | 100% | 98.18% |
| 2 | 52 | 52 | 51 | 100% | 98.08% |
| 3 | 64 | 62 | 60 | 96.88% | 96.77% |
| 4 | 44 | 44 | 42 | 100% | 95.45% |
| 5 | 52 | 50 | 49 | 96.15% | 98% |
| 6 | 66 | 65 | 63 | 98.48% | 96.92% |
| 7 | 58 | 58 | 57 | 100% | 98.28% |
| 8 | 72 | 69 | 69 | 95.83% | 100% |
| 9 | 49 | 47 | 44 | 95.92% | 93.62% |
| 10 | 48 | 47 | 47 | 97.92% | 100% |
| 11 | 66 | 66 | 63 | 100% | 95.45% |
| 12 | 63 | 62 | 60 | 98.41% | 96.77% |
| 13 | 59 | 57 | 56 | 96.61% | 98.25% |
| 14 | 46 | 45 | 45 | 97.83% | 100% |
| 15 | 55 | 55 | 52 | 100% | 94.55% |
| 16 | 42 | 40 | 37 | 95.24% | 92.5% |
| 17 | 65 | 63 | 59 | 96.92% | 93.65% |
| 18 | 58 | 58 | 58 | 100% | 100% |
| 19 | 47 | 46 | 44 | 97.87% | 95.65% |
| 20 | 47 | 46 | 45 | 97.87% | 97.83% |
| Total | 1108 | 1087 | 1055 | 98.1% | 97.06% |
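Every row of the registration table is consistent with two definitions: Success Rate = Successful Cases / Selected Cases, and Accuracy Rate = Right Cases / Successful Cases. The short sketch below recomputes the rates from the tabulated counts under those assumed definitions, which makes it easy to check the table or to extend it with new test cases.

```python
# (selected, successful, right) for test cases 1-20, copied from the table above.
CASES = [
    (55, 55, 54), (52, 52, 51), (64, 62, 60), (44, 44, 42), (52, 50, 49),
    (66, 65, 63), (58, 58, 57), (72, 69, 69), (49, 47, 44), (48, 47, 47),
    (66, 66, 63), (63, 62, 60), (59, 57, 56), (46, 45, 45), (55, 55, 52),
    (42, 40, 37), (65, 63, 59), (58, 58, 58), (47, 46, 44), (47, 46, 45),
]


def rates(selected, successful, right):
    """Return (success rate %, accuracy rate %) under the assumed definitions."""
    return 100.0 * successful / selected, 100.0 * right / successful


per_case = [rates(*case) for case in CASES]
totals = tuple(sum(column) for column in zip(*CASES))  # (selected, successful, right)
overall_success, overall_accuracy = rates(*totals)     # pooled over all 20 cases
```

The Total row corresponds to rates computed on the pooled counts (1087/1108 and 1055/1087), not to an average of the 20 per-case percentages.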

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, Z.; Liu, J.; Feng, M.; Cong, Y. Surveillance Video Georeference Method Based on Real Scene Model with Geometry Priors. Remote Sens. 2023, 15, 4217. https://doi.org/10.3390/rs15174217
