High-Resolution and Wide-Swath 3D Imaging for Urban Areas Based on Distributed Spaceborne SAR

Abstract

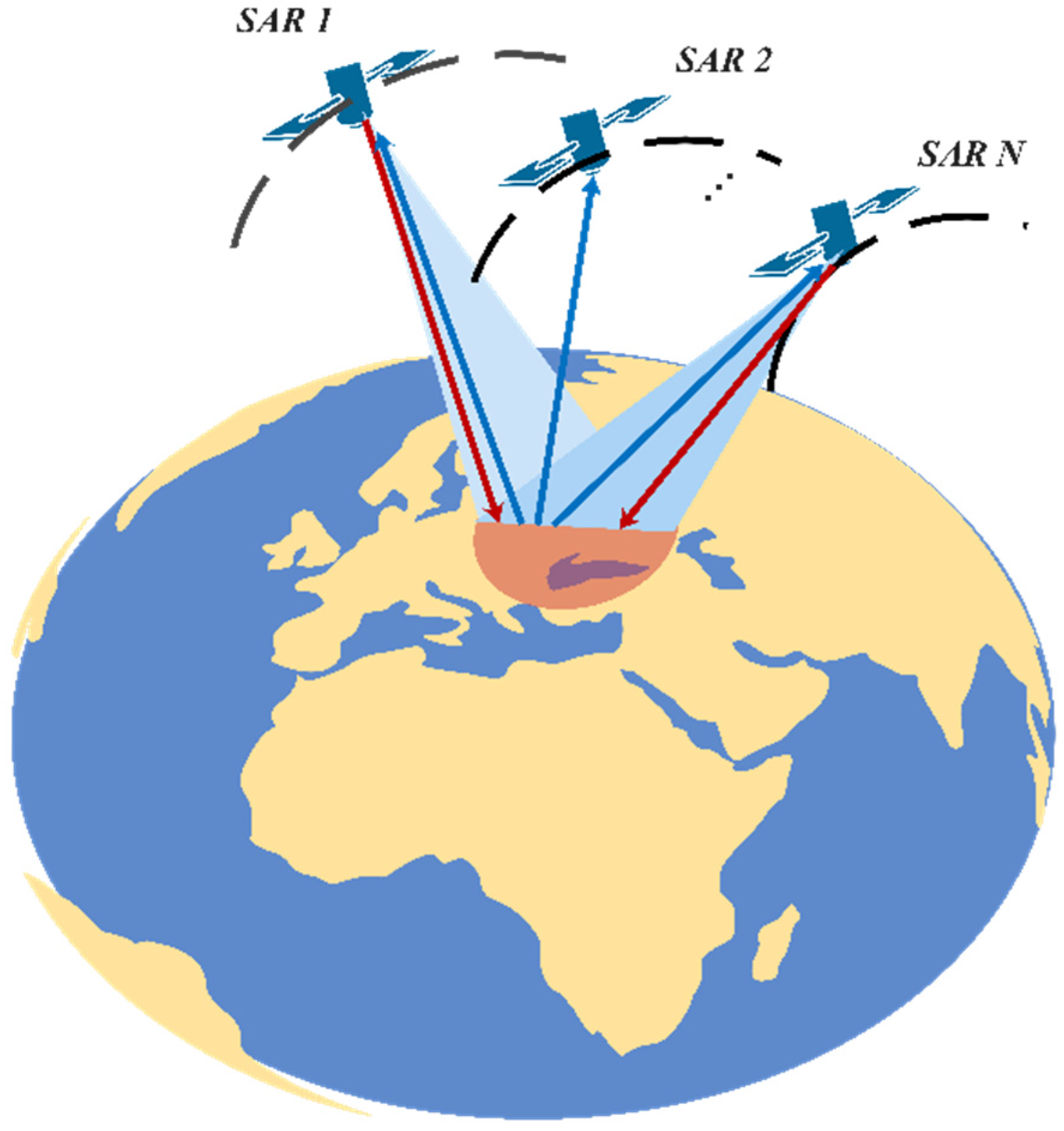

1. Introduction

- (1) A distributed SAR HRWS 3D imaging scheme with a multi-channel arrangement is proposed. It addresses the long imaging time, low efficiency, and data redundancy of traditional 3D imaging.

- (2) A range ambiguity resolution method based on multi-beam forming is proposed. It resolves range ambiguity for overlapping targets whose echoes have non-unique directions of arrival.

- (3) The feasibility of the proposed distributed SAR HRWS system and the effectiveness of the range ambiguity resolution method are verified using airborne array tomographic SAR data, and HRWS 3D imaging results are obtained.
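The idea behind contribution (2), separating echoes that overlap in the data but arrive from different directions, can be illustrated with a generic null-steering beamformer. This is a minimal sketch and not the authors' algorithm: the uniform linear array, the half-wavelength spacing, the two arrival angles, and all function names are hypothetical, and the arrival directions are assumed to be known in advance.

```python
import numpy as np


def steering_vector(n_channels, spacing_wavelengths, angle_rad):
    """Far-field narrowband steering vector of a uniform linear array (assumed model)."""
    n = np.arange(n_channels)
    return np.exp(2j * np.pi * spacing_wavelengths * n * np.sin(angle_rad))


def steering_matrix(n_channels, spacing_wavelengths, angles_rad):
    """Stack one steering vector per arrival direction as columns."""
    return np.stack(
        [steering_vector(n_channels, spacing_wavelengths, t) for t in angles_rad],
        axis=1,
    )


def null_steering_weights(a):
    """Weights W with W^H A = I: unit gain on one direction, nulls on the others."""
    return a @ np.linalg.inv(a.conj().T @ a)


def separate(channel_data, angles_rad, spacing_wavelengths):
    """Form one beam per assumed direction and apply it to the channel data."""
    a = steering_matrix(channel_data.shape[0], spacing_wavelengths, angles_rad)
    return null_steering_weights(a).conj().T @ channel_data


def demo():
    # Hypothetical values chosen only for illustration.
    rng = np.random.default_rng(0)
    n_channels, n_samples, spacing = 8, 256, 0.5
    angles = np.deg2rad([10.0, 25.0])  # desired echo and range-ambiguous echo
    echoes = rng.standard_normal((2, n_samples)) + 1j * rng.standard_normal((2, n_samples))
    mixed = steering_matrix(n_channels, spacing, angles) @ echoes  # noise-free mixture
    recovered = separate(mixed, angles, spacing)
    return echoes, mixed, recovered


if __name__ == "__main__":
    echoes, mixed, recovered = demo()
    print("max separation error:", np.max(np.abs(recovered - echoes)))
```

In this noise-free toy case each beam returns one echo and cancels the other. With real data the arrival directions would have to be estimated, and noise and channel errors would limit the suppression that can be achieved.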

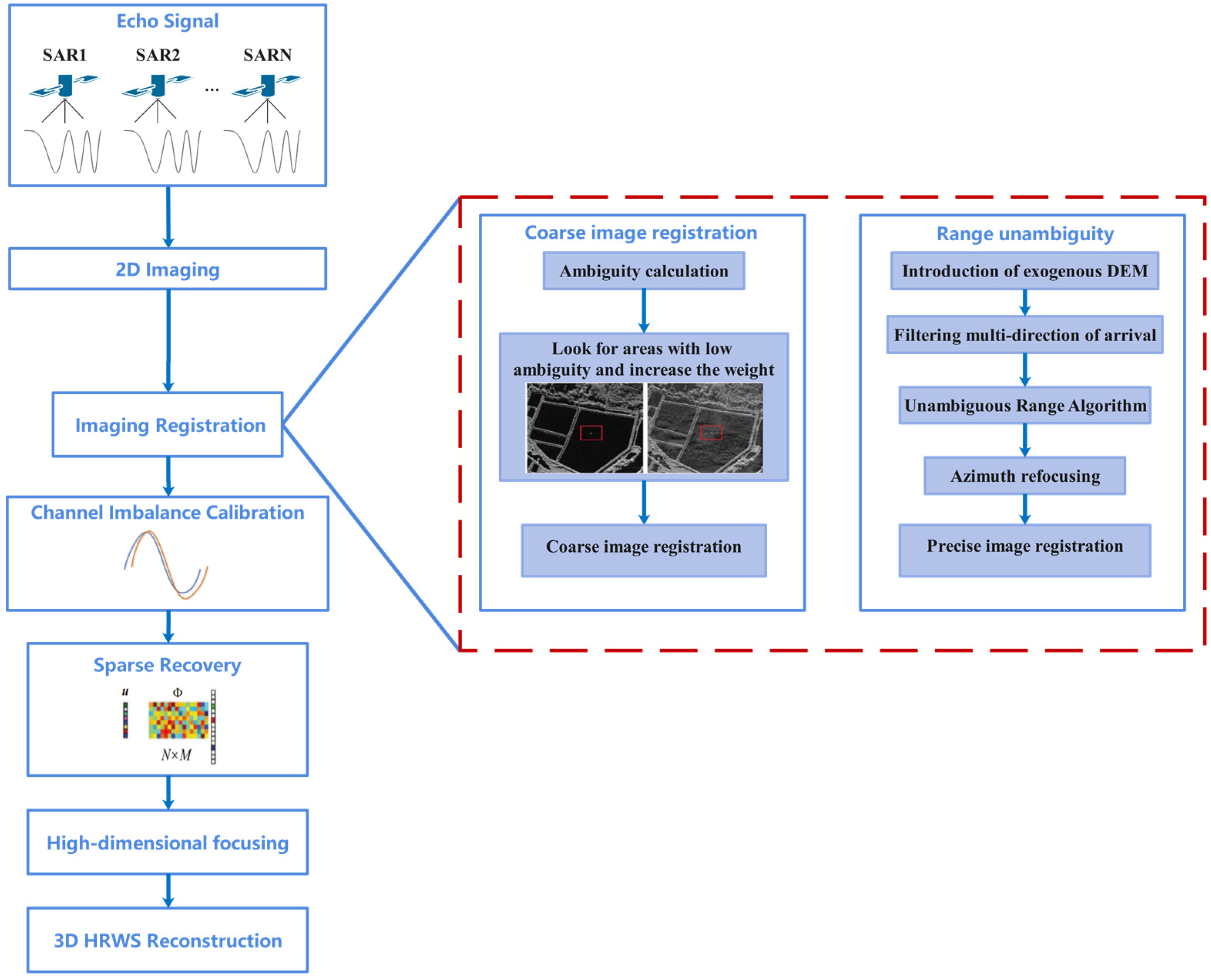

2. Materials and Methods

2.1. Overall Workflow

2.2. Distributed SAR 3D Imaging Geometry and Theory

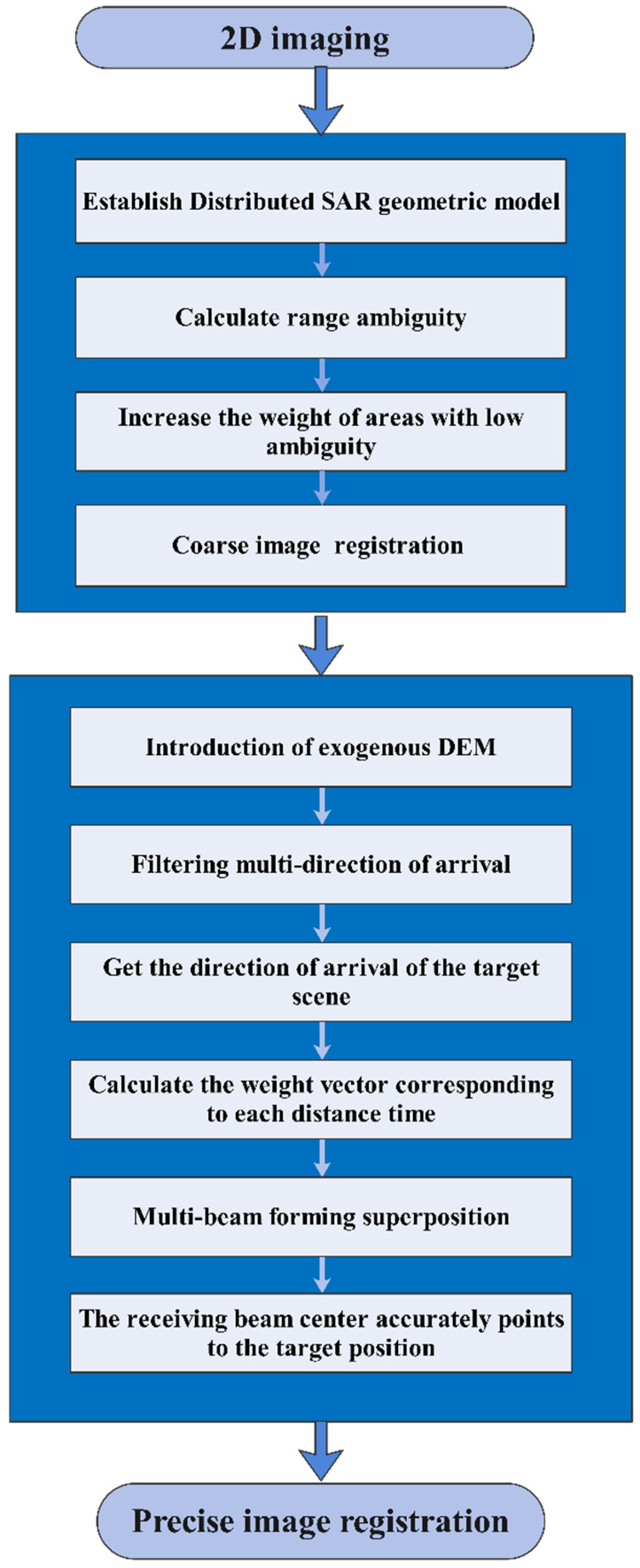

2.3. Range Ambiguity Resolution Algorithm

2.3.1. Range Ambiguity Theory

2.3.2. Range Ambiguity Calculation

2.3.3. Obtaining Range Ambiguity Resolution Based on Multi-Beam Forming

3. Experiments and Results

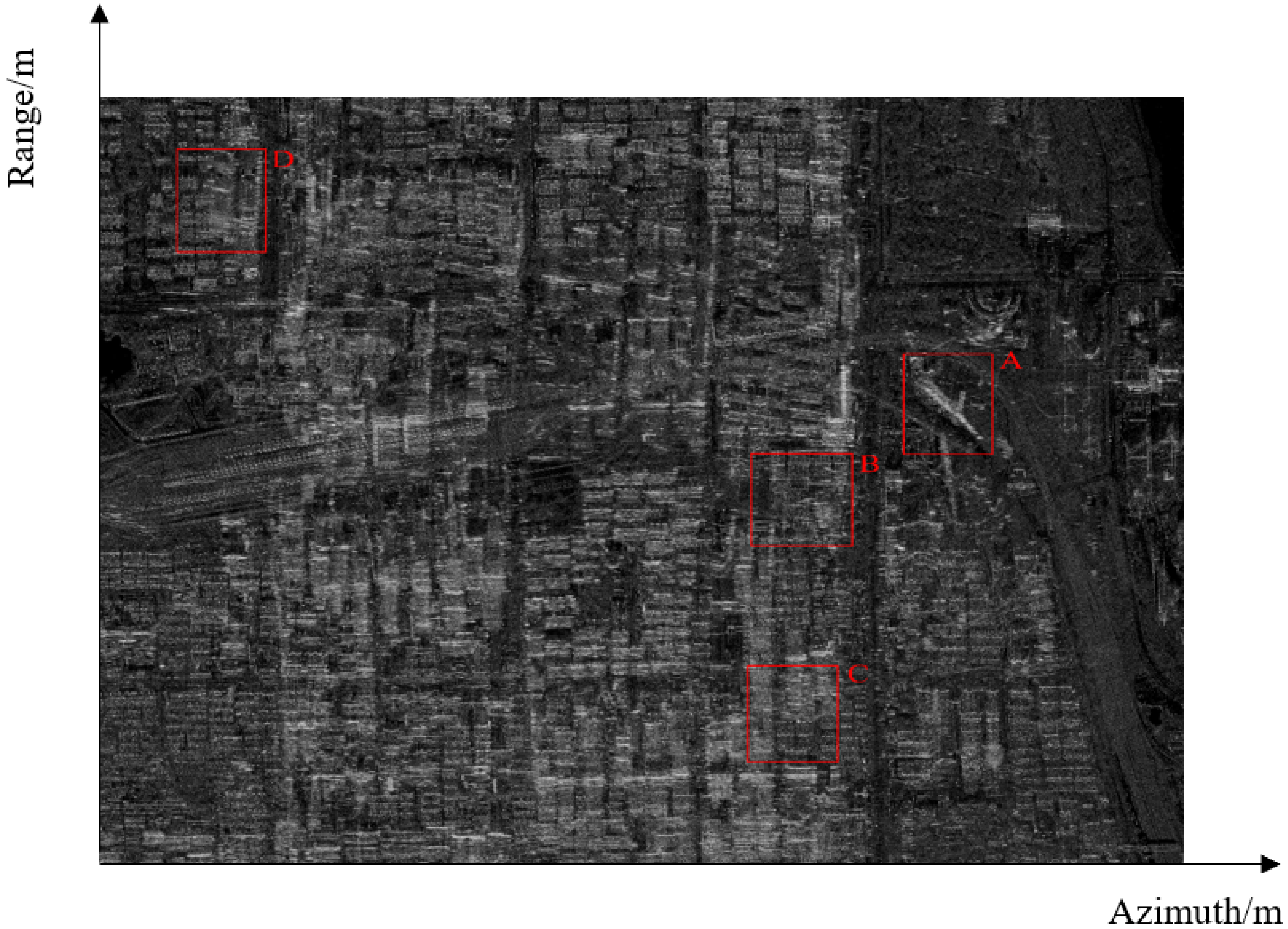

3.1. Study Area and Data

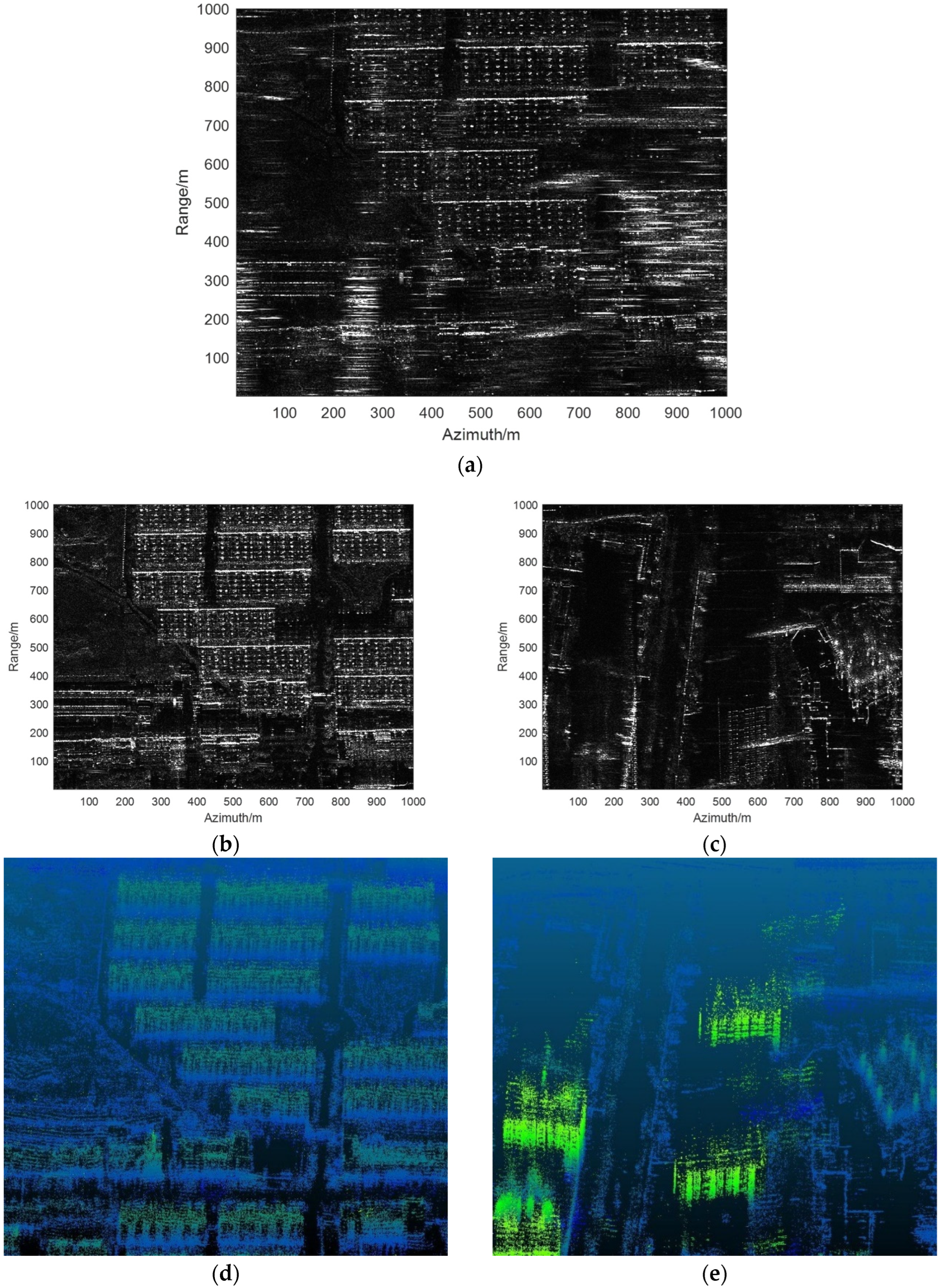

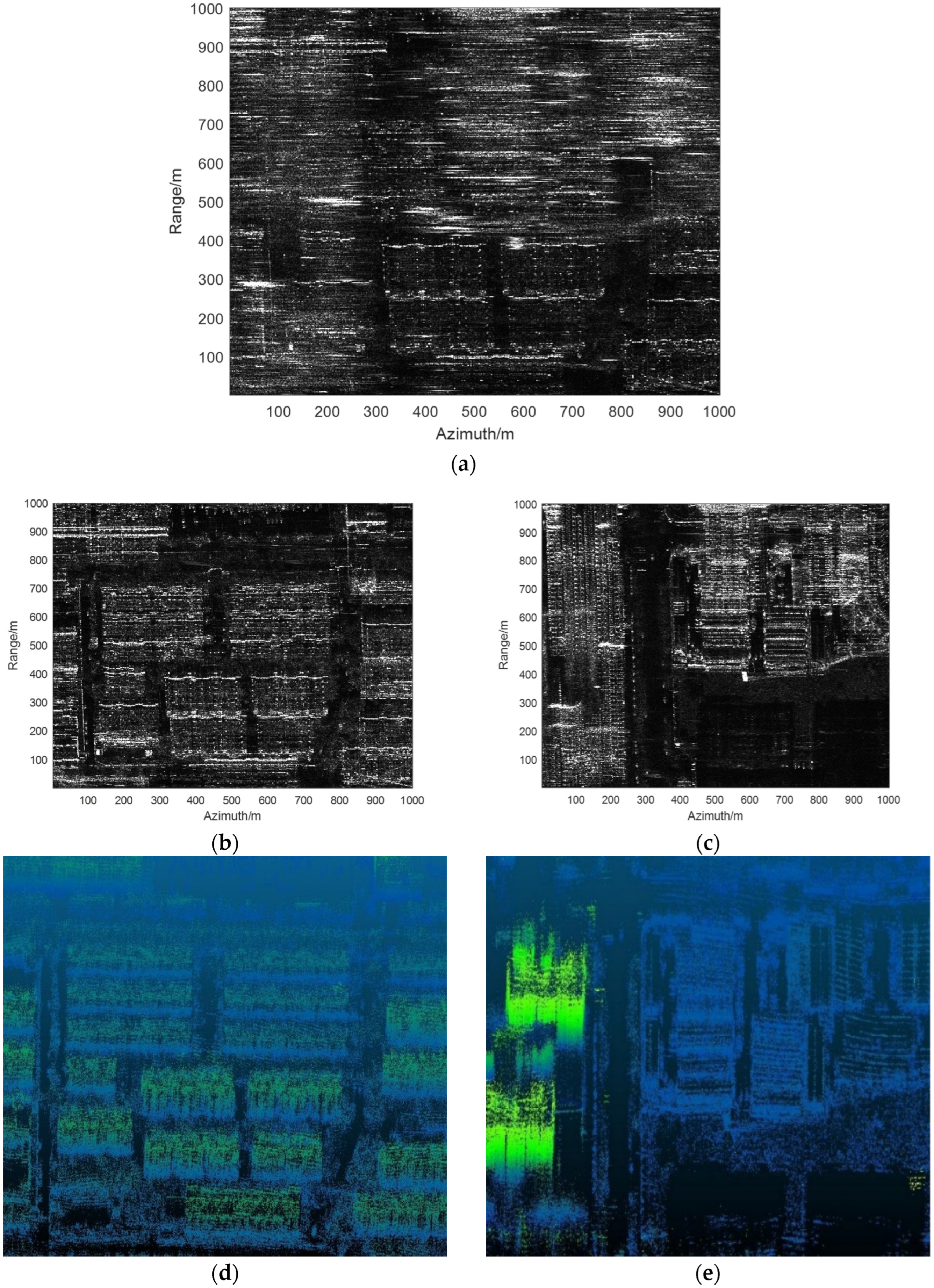

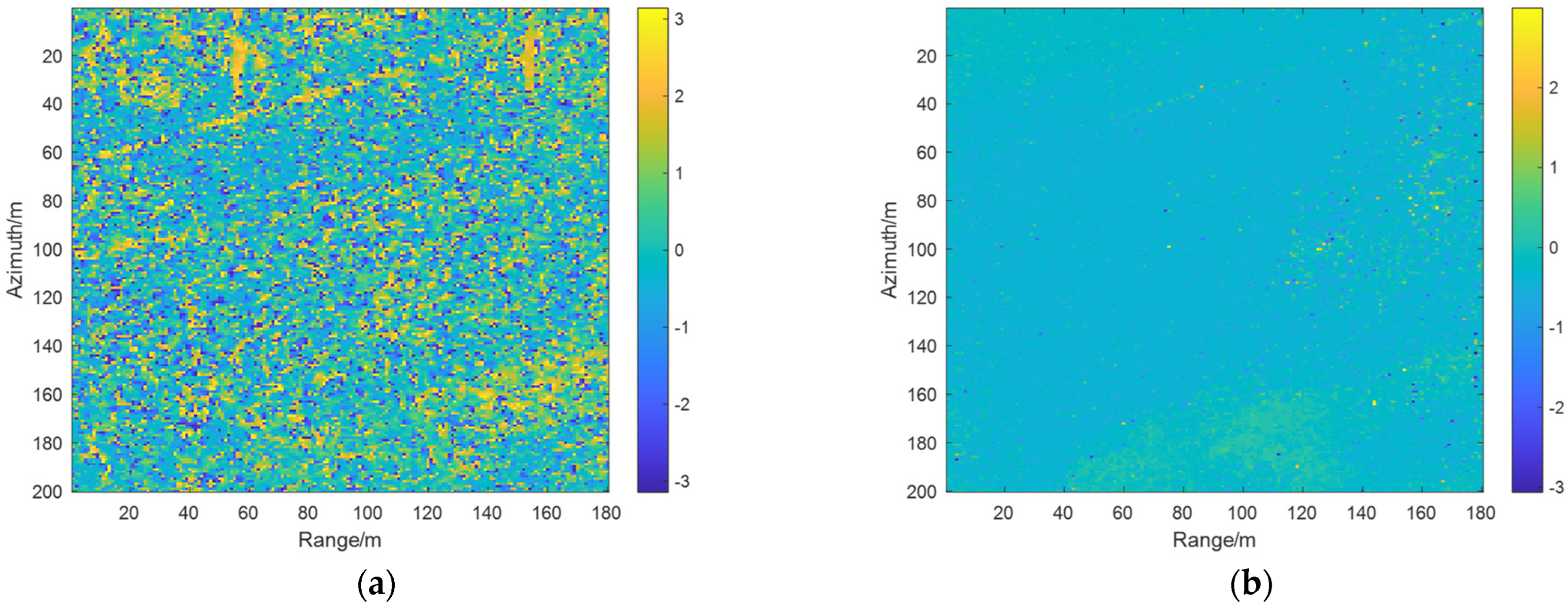

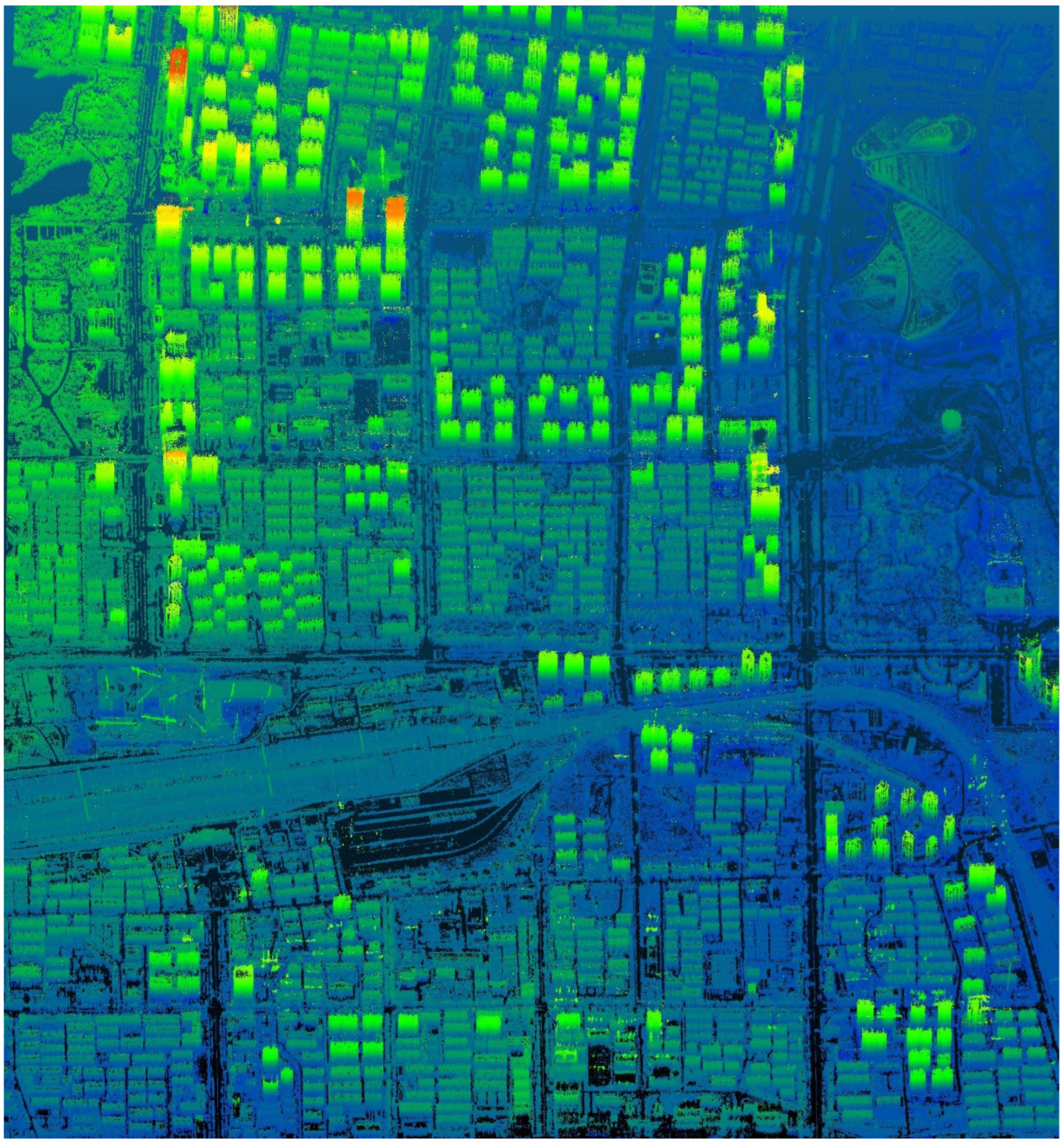

3.2. The Results of Range Ambiguity Resolution and HRWS 3D Imaging

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TomoSAR | tomographic synthetic aperture radar |
| 3D | three-dimensional |
| HRWS | high-resolution and wide-swath |
| PRF | pulse repetition frequency |
| SAR | synthetic aperture radar |
| SCORE | scan-on-receive |
| DBF | digital beamforming |
| DOA | direction of arrival |
| ADBF | adaptive digital beamforming |
| DEM | digital elevation model |
| AIRCAS | Aerospace Information Research Institute, Chinese Academy of Sciences |


| Parameter | Symbol | Value |
|---|---|---|
| Center frequency | | 10 GHz |
| Bandwidth | | 500 MHz |
| Channel number | | 14 |
| Baseline interval | | 0.2 m |
| Horizontal inclination of the baseline | | 0 deg |
| Flight height | | 3.5 km |
| Central incidence angle | | 35 deg |
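A few standard quantities follow directly from the parameters in the table above. The sketch below is a back-of-the-envelope calculation and not part of the authors' processing chain. It assumes a flat scene at zero height, equally spaced channels, and the textbook Rayleigh expression for elevation resolution. The `two_way` flag is a hypothetical switch for whether the phase across the array accumulates over one-way or two-way path differences, which the table does not specify.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def derived_quantities(
    center_freq_hz=10e9,
    bandwidth_hz=500e6,
    n_channels=14,
    baseline_interval_m=0.2,
    baseline_tilt_deg=0.0,
    flight_height_m=3500.0,
    incidence_deg=35.0,
    two_way=False,  # assumption: one-way path difference across the array
):
    """Textbook quantities derived from the tabulated system parameters."""
    theta = math.radians(incidence_deg)
    tilt = math.radians(baseline_tilt_deg)

    wavelength = C / center_freq_hz
    slant_range_res = C / (2.0 * bandwidth_hz)
    total_baseline = (n_channels - 1) * baseline_interval_m
    # Baseline component perpendicular to the line of sight.
    perp_baseline = total_baseline * math.cos(theta - tilt)
    # Flat-earth slant range to the scene centre (assumes terrain at zero height).
    slant_range = flight_height_m / math.cos(theta)
    # Rayleigh elevation resolution; the factor 2 applies only in the two-way case.
    factor = 2.0 if two_way else 1.0
    elevation_res = wavelength * slant_range / (factor * perp_baseline)

    return {
        "wavelength_m": wavelength,
        "slant_range_resolution_m": slant_range_res,
        "total_baseline_m": total_baseline,
        "perpendicular_baseline_m": perp_baseline,
        "slant_range_m": slant_range,
        "elevation_resolution_m": elevation_res,
    }


if __name__ == "__main__":
    for name, value in derived_quantities().items():
        print(f"{name}: {value:.4f}")
```

The elevation resolution obtained this way is tens of metres, which is much coarser than the slant-range resolution. This is the usual reason array TomoSAR processing relies on super-resolution spectral estimation in the elevation direction.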

| Channel pair | Before Range Ambiguity Resolution | After Range Ambiguity Resolution |
|---|---|---|
| 1–2 | 0.5357 | 0.9861 |
| 2–3 | 0.4751 | 0.9860 |
| 3–4 | 0.5692 | 0.9861 |
| 4–5 | 0.5596 | 0.9861 |
| 5–6 | 0.6005 | 0.9860 |
| 6–7 | 0.6290 | 0.9860 |
| 7–8 | 0.5897 | 0.9864 |
| 8–9 | 0.5695 | 0.9867 |
| 9–10 | 0.5887 | 0.9867 |
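The per-pair values in the table above can be condensed into averages to make the before and after comparison easier to read. The snippet below is only a convenience sketch: the numbers are copied from the table, while the variable and function names are hypothetical.

```python
from statistics import mean

# Values copied from the table: channel pair -> (before, after).
TABLE = {
    "1-2": (0.5357, 0.9861),
    "2-3": (0.4751, 0.9860),
    "3-4": (0.5692, 0.9861),
    "4-5": (0.5596, 0.9861),
    "5-6": (0.6005, 0.9860),
    "6-7": (0.6290, 0.9860),
    "7-8": (0.5897, 0.9864),
    "8-9": (0.5695, 0.9867),
    "9-10": (0.5887, 0.9867),
}


def summarize(table=TABLE):
    """Return mean before, mean after, and the smallest per-pair gain."""
    before = [b for b, _ in table.values()]
    after = [a for _, a in table.values()]
    gains = [a - b for b, a in table.values()]
    return mean(before), mean(after), min(gains)


if __name__ == "__main__":
    mean_before, mean_after, min_gain = summarize()
    print(f"mean before: {mean_before:.4f}")
    print(f"mean after:  {mean_after:.4f}")
    print(f"smallest per-pair gain: {min_gain:.4f}")
```

Every channel pair improves, and the values after range ambiguity resolution are nearly identical across pairs.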


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Y.; Zhang, F.; Tian, Y.; Chen, L.; Wang, R.; Wu, Y. High-Resolution and Wide-Swath 3D Imaging for Urban Areas Based on Distributed Spaceborne SAR. Remote Sens. 2023, 15, 3938. https://doi.org/10.3390/rs15163938
