Exploring Multisource Feature Fusion and Stacking Ensemble Learning for Accurate Estimation of Maize Chlorophyll Content Using Unmanned Aerial Vehicle Remote Sensing

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Experimental Design

2.2. Data Acquisition

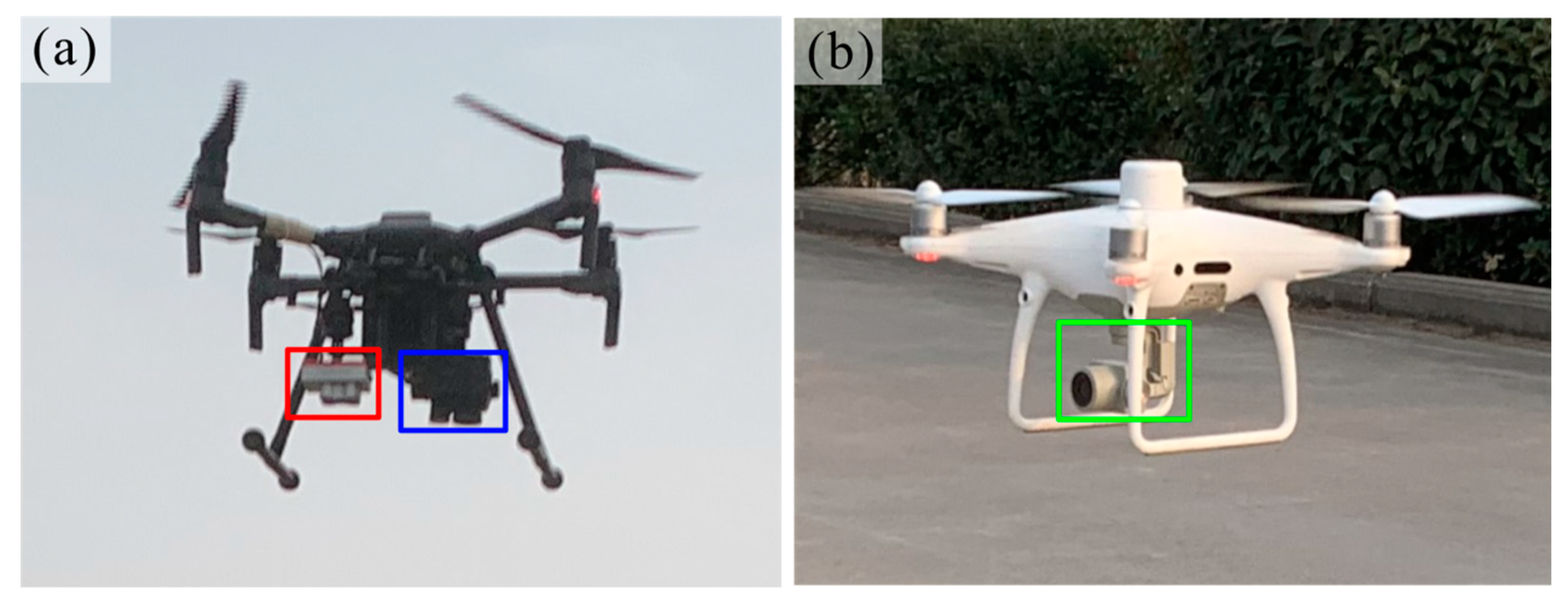

2.2.1. UAV Data Acquisition

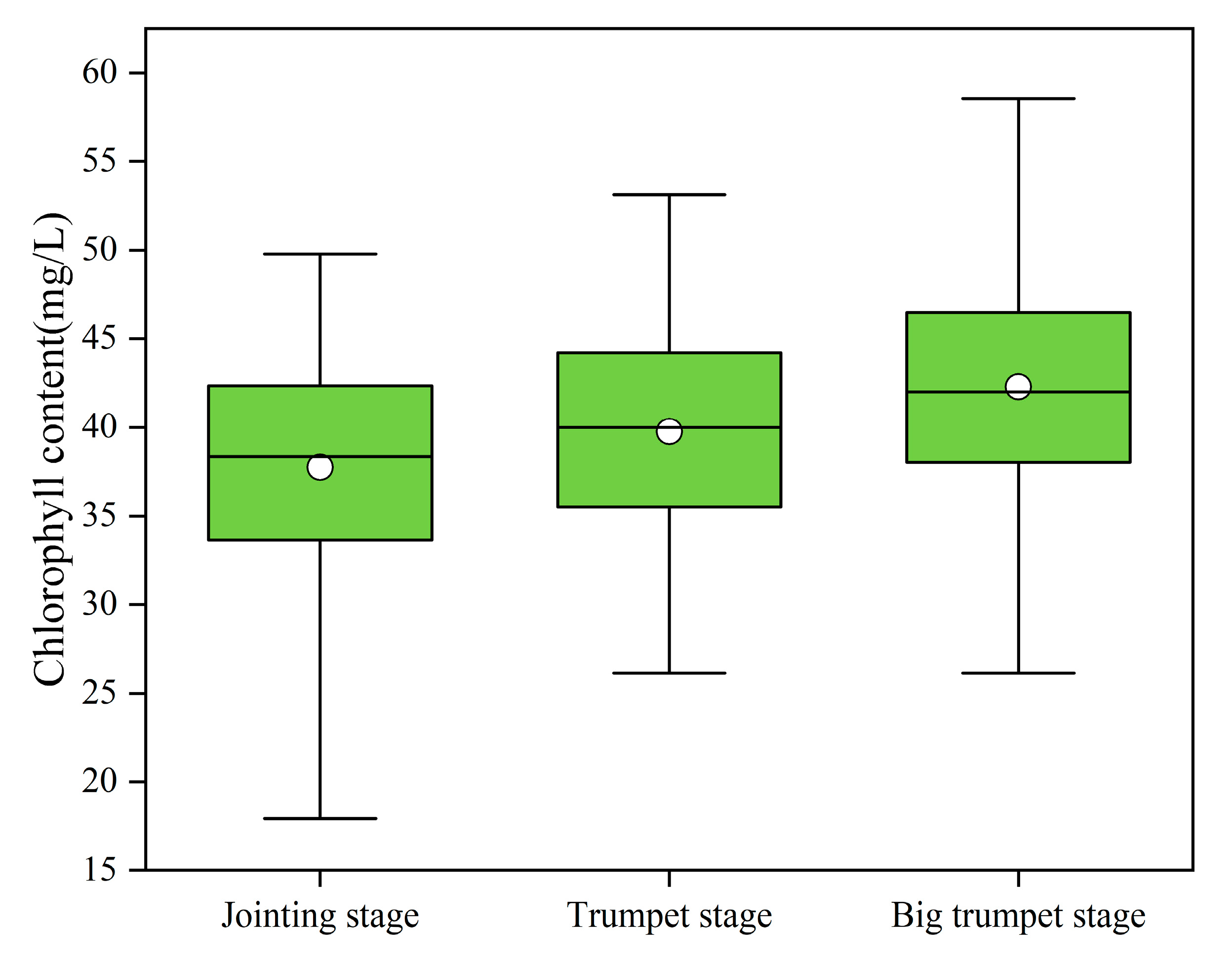

2.2.2. Maize Chlorophyll Content Acquisition

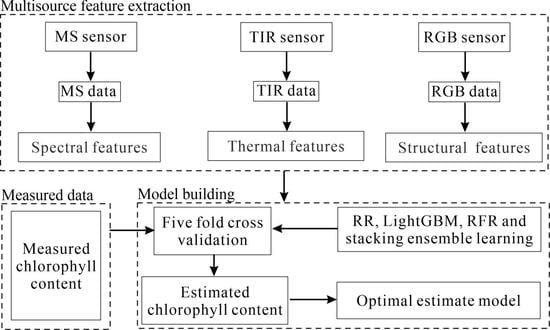

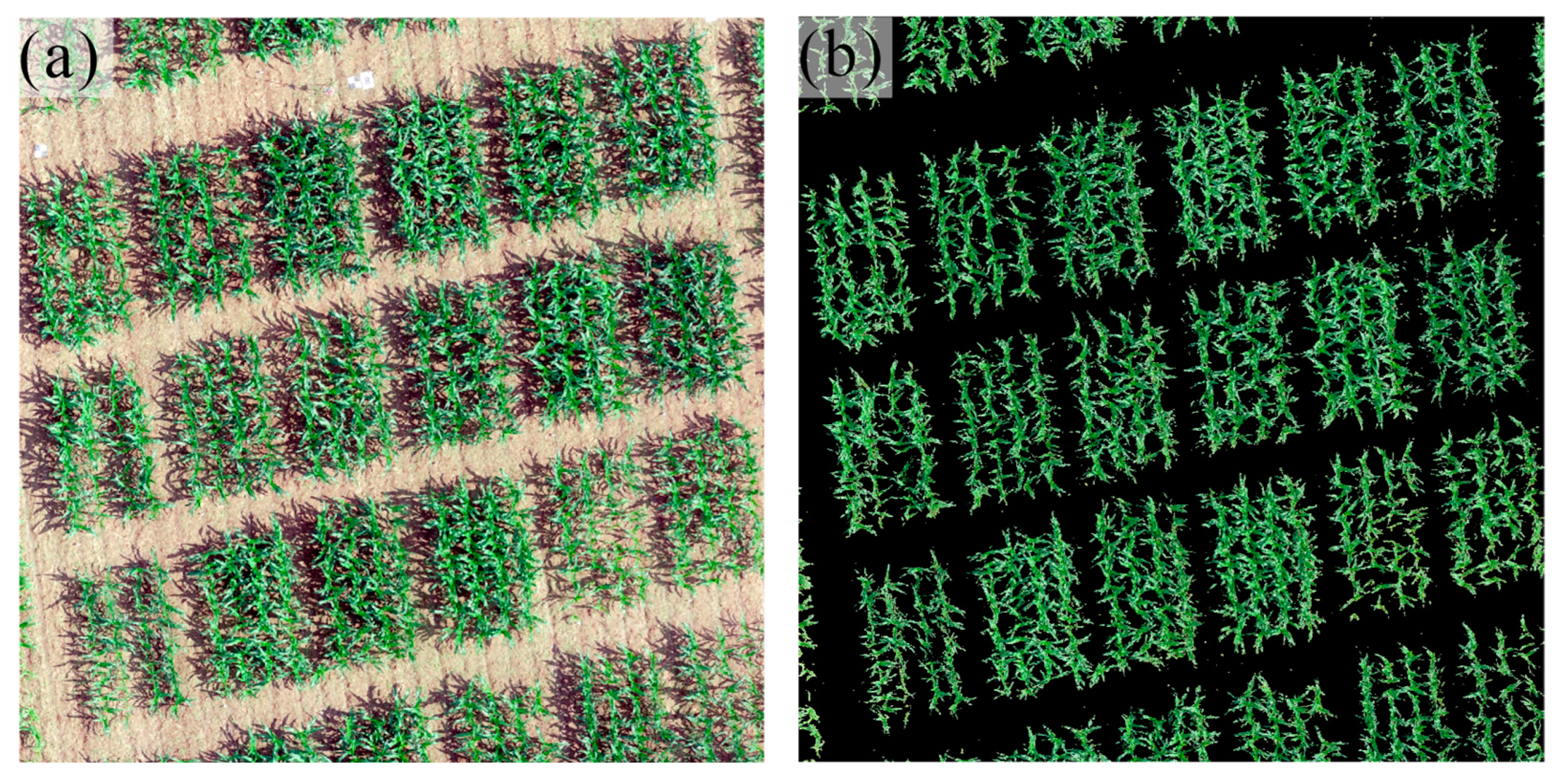

2.3. Multisource Features Extraction

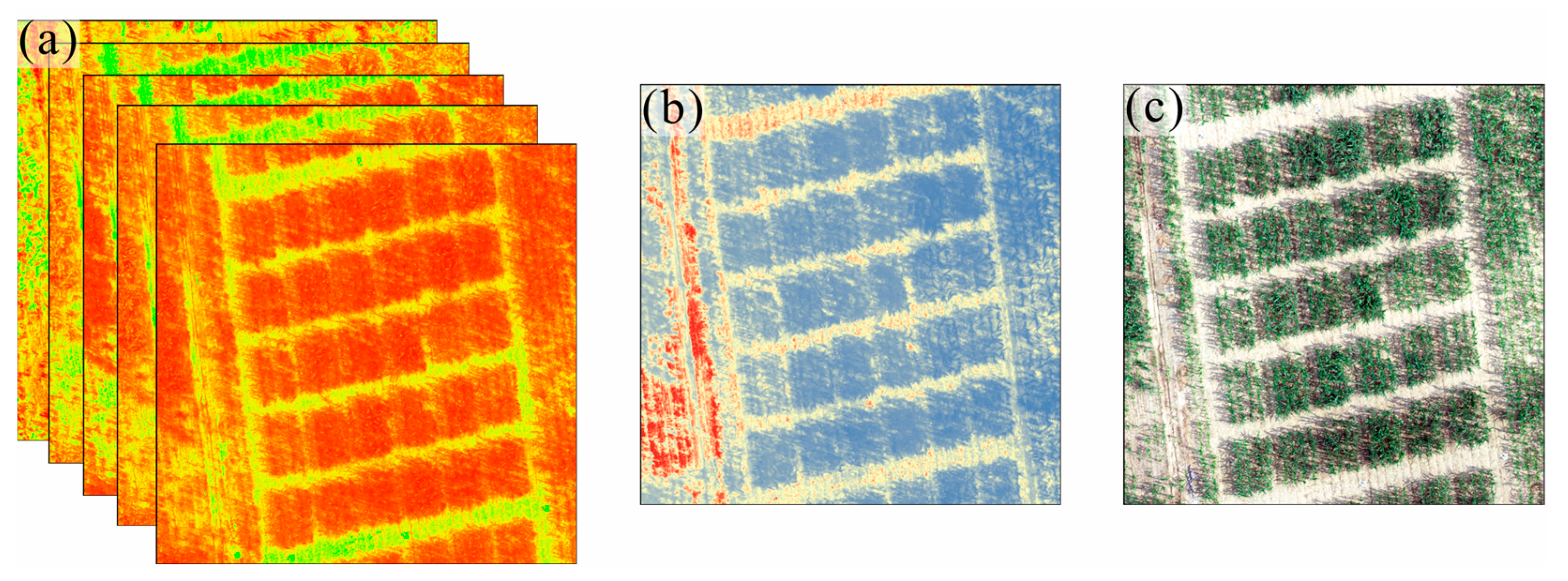

2.3.1. Canopy Spectral Features Extraction

2.3.2. Canopy Thermal Features Extraction

2.3.3. Canopy Structural Features Extraction

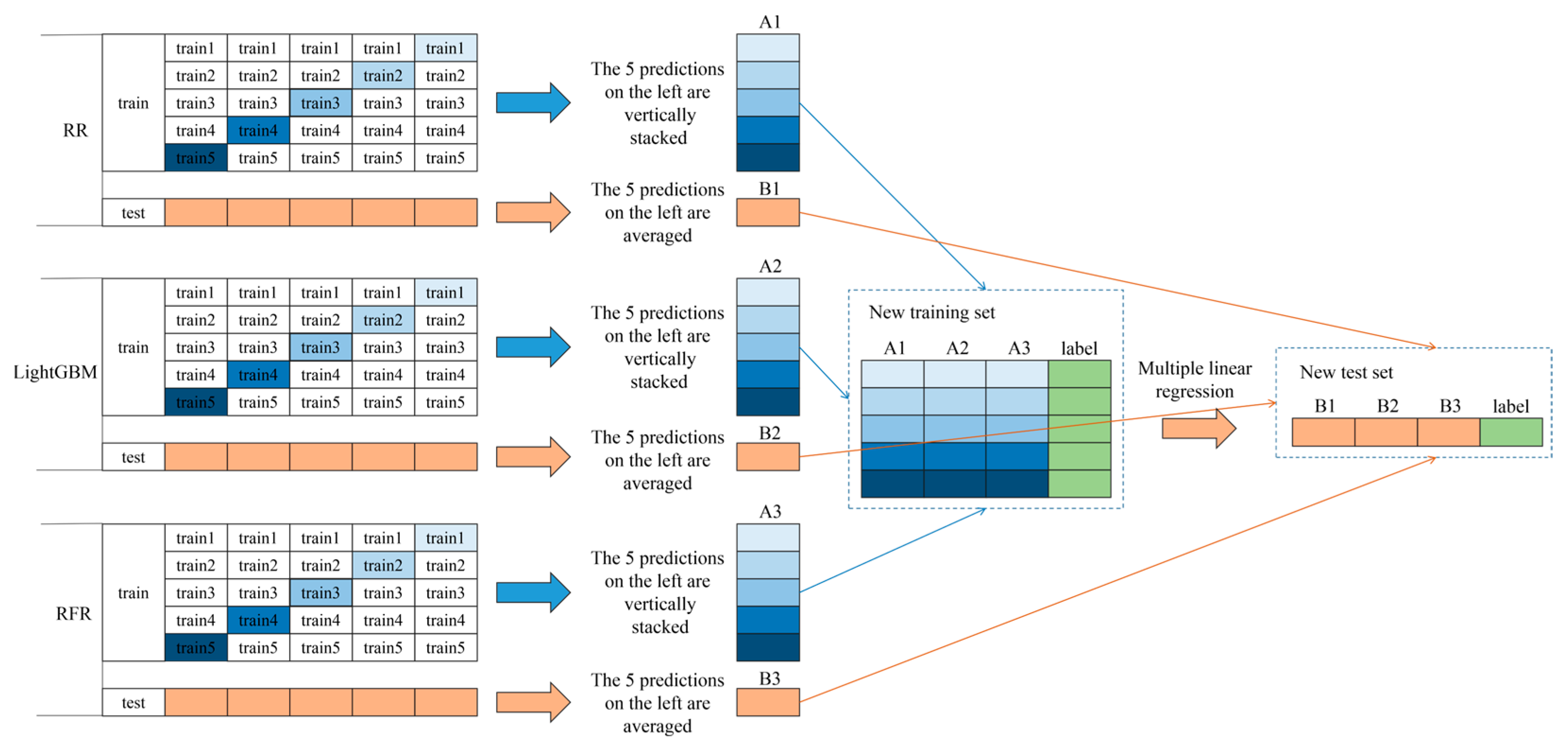

2.4. Model Building and Accuracy Assessment

2.4.1. Regression Techniques

2.4.2. Assessment Methods

3. Results

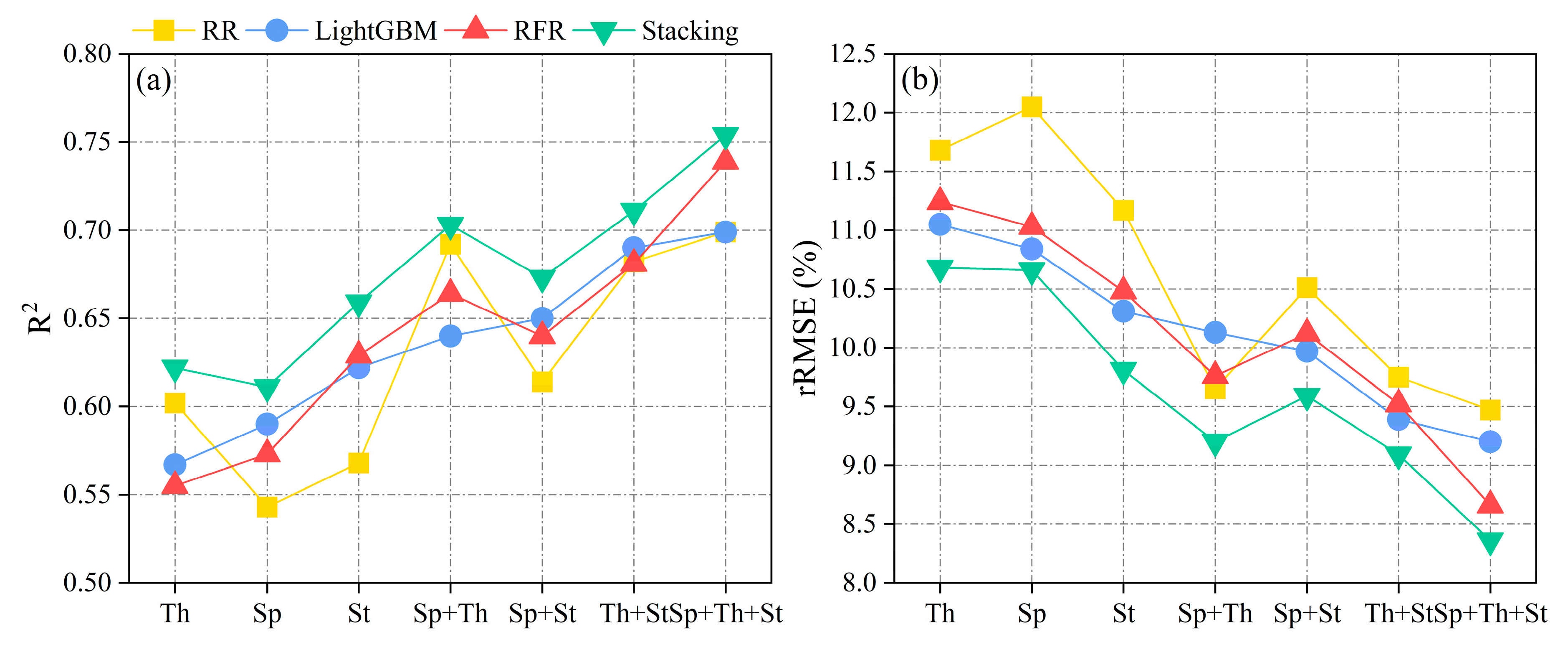

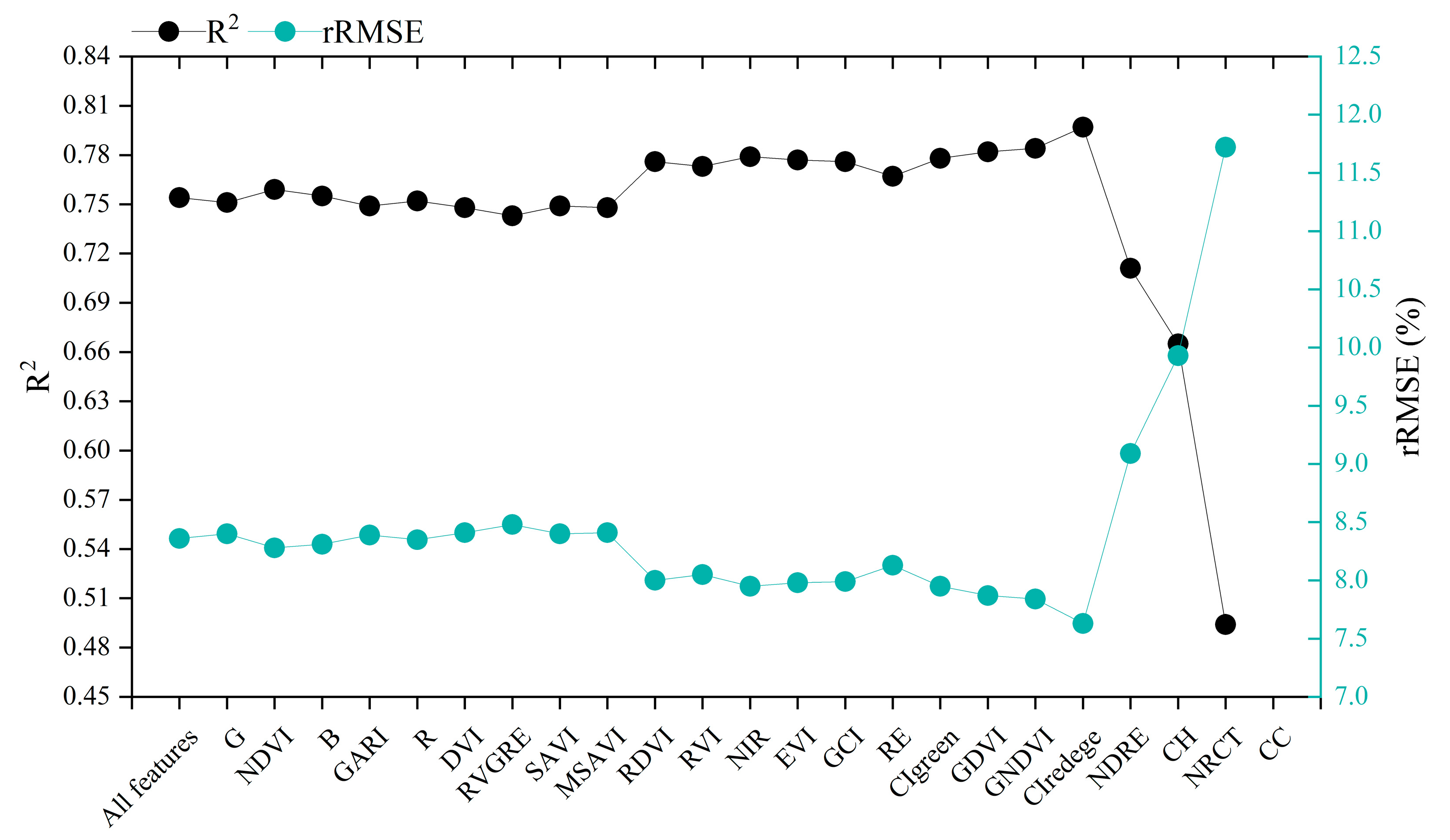

3.1. Multisource Features Fusion Chlorophyll Content Estimation

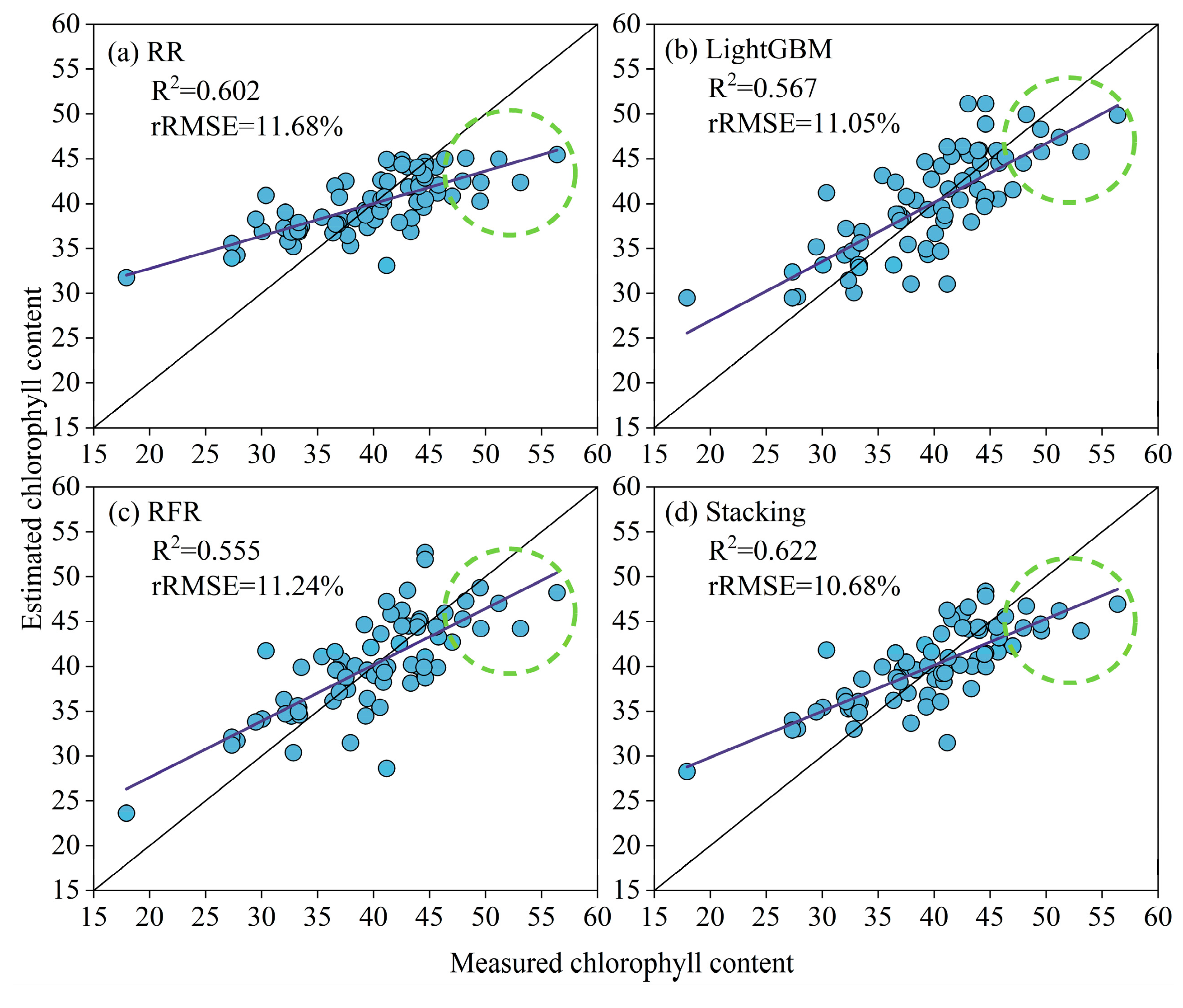

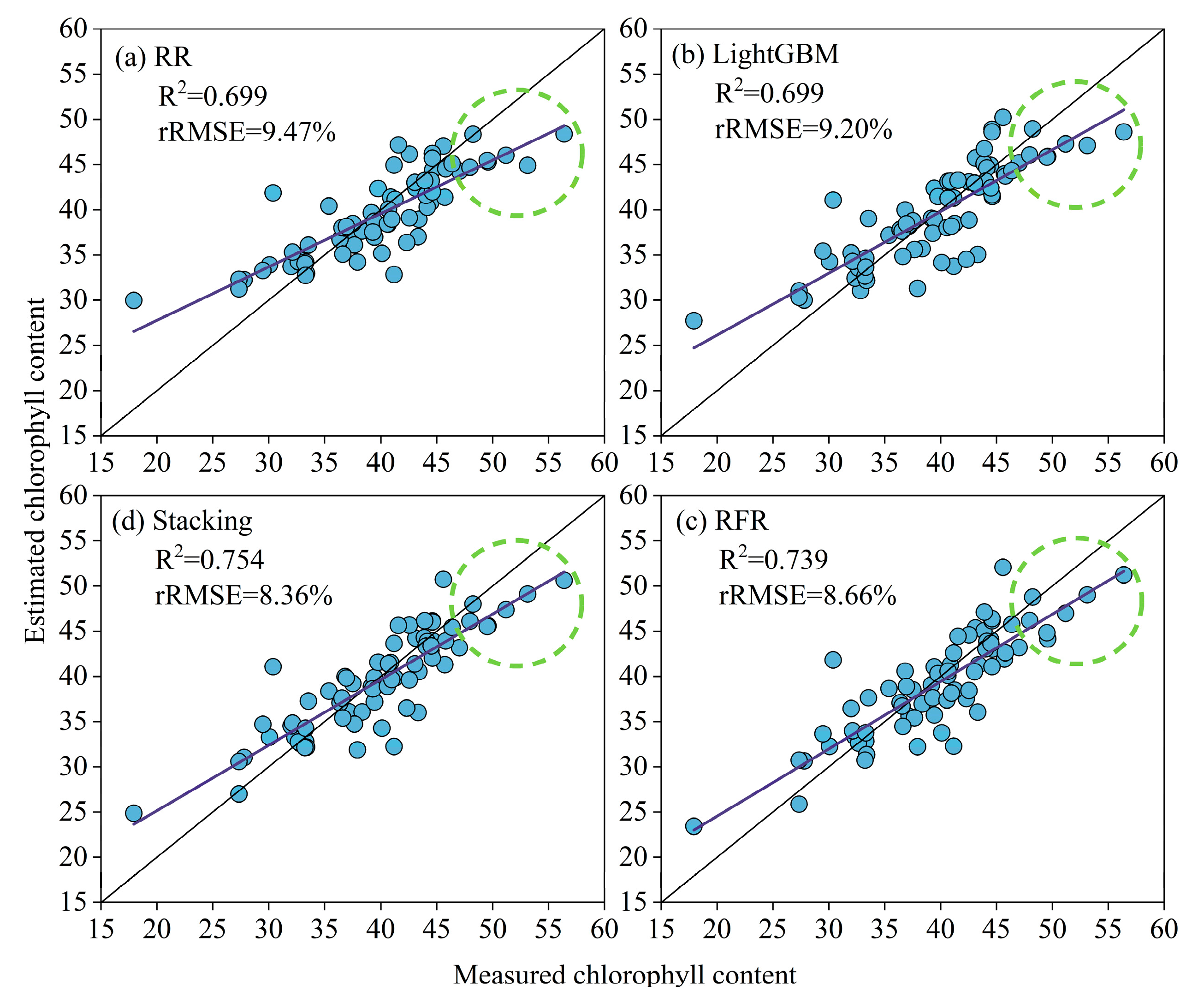

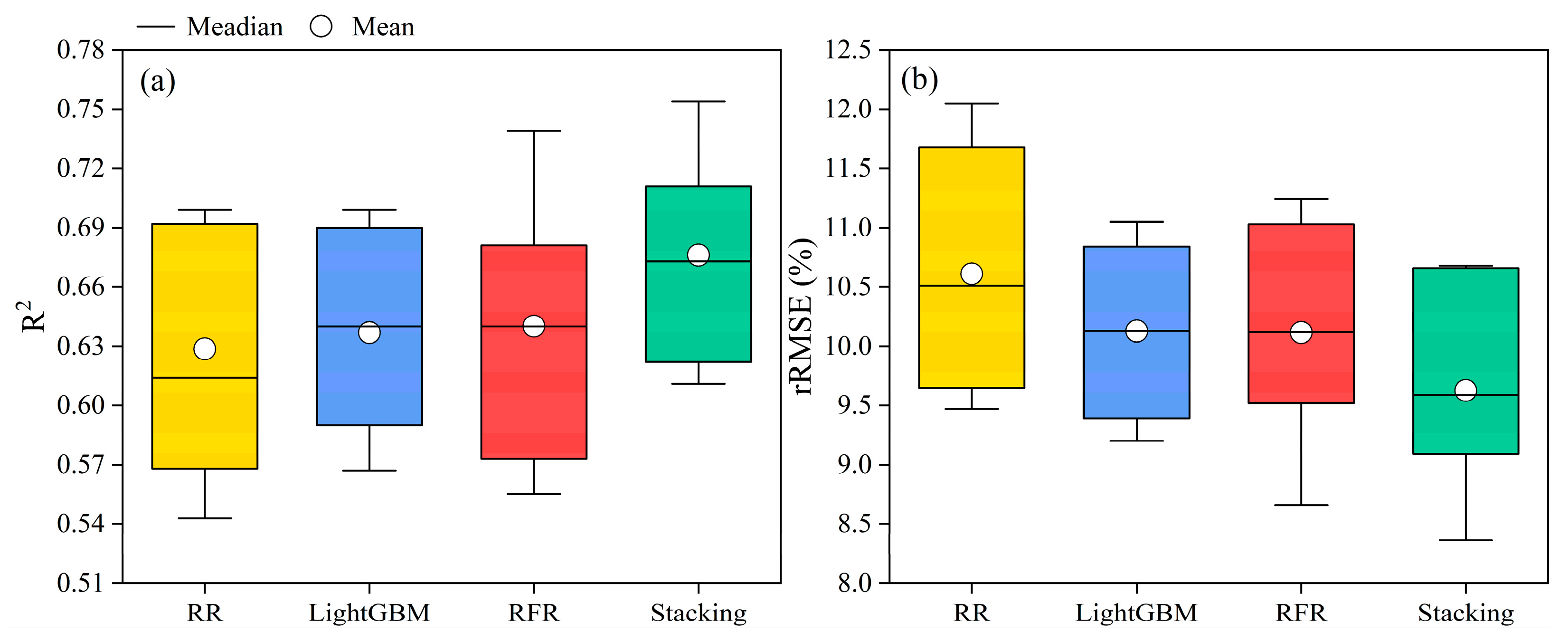

3.2. RR, LightGBM, RFR, and Stacking Chlorophyll Content Estimation

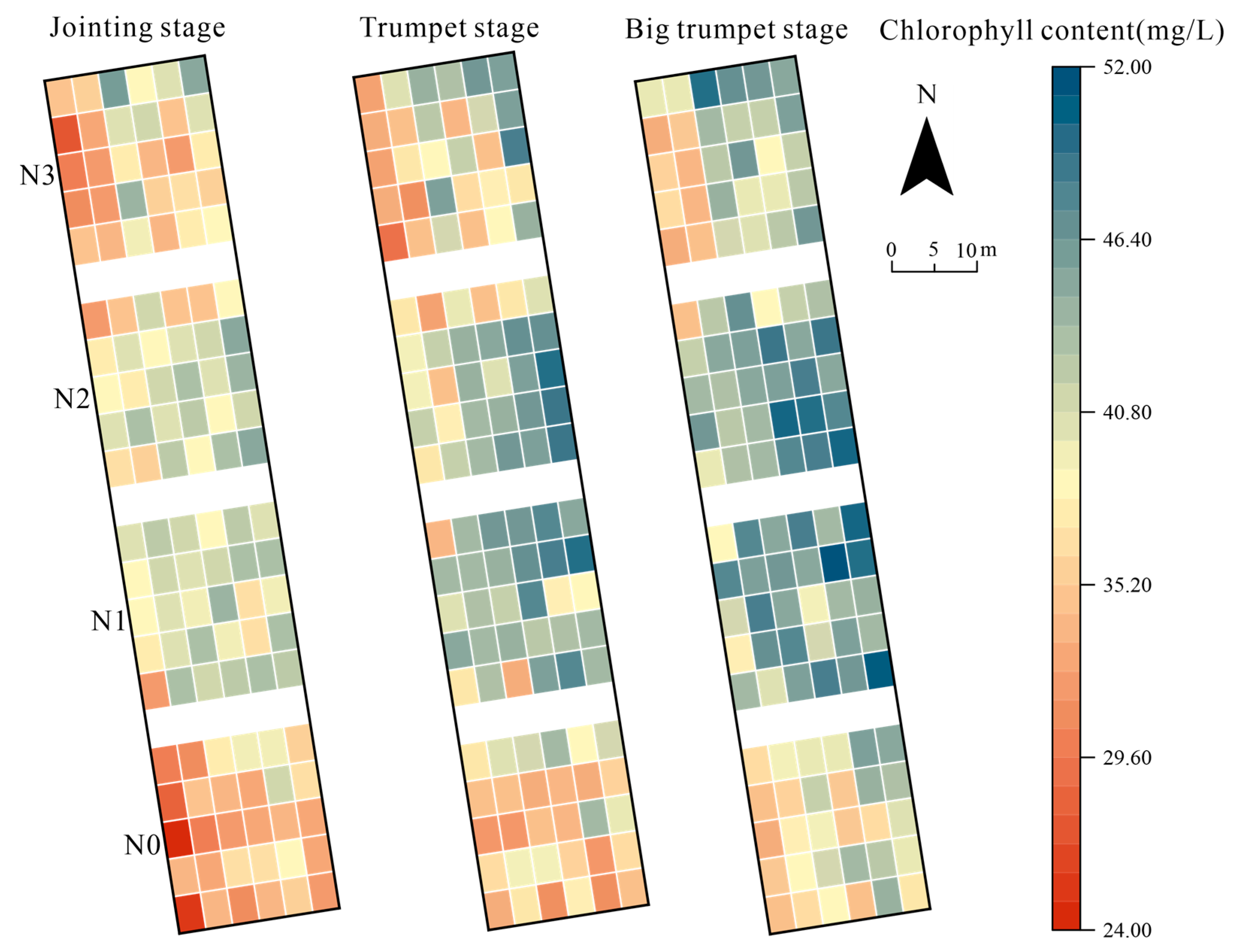

3.3. Temporal and Spatial Distribution of Chlorophyll Content

4. Discussion

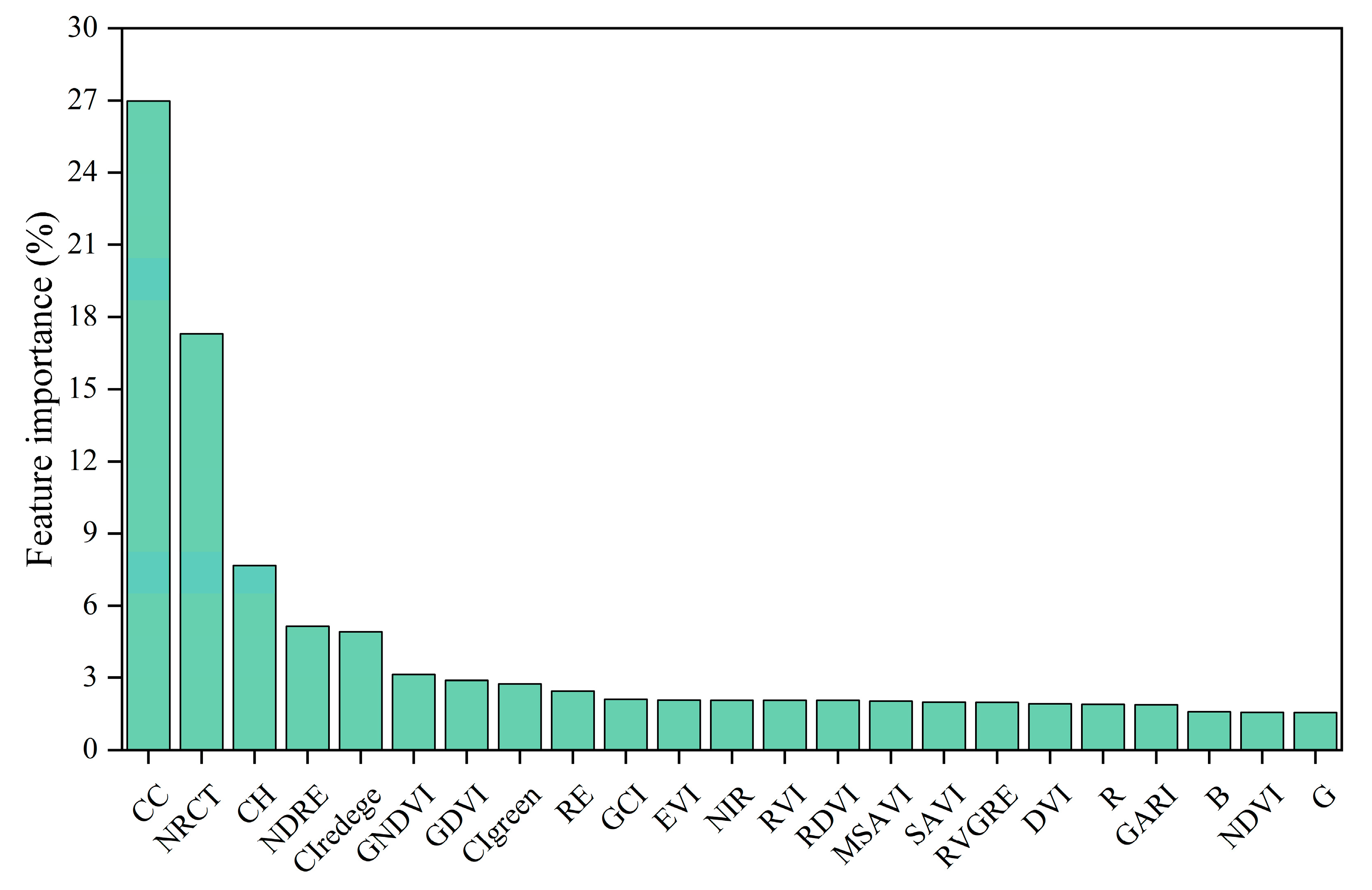

4.1. Analysis of the Potential for Multisource Feature Fusion

4.2. Analysis of the Potential for Stacking in Chlorophyll Content Estimation

4.3. Implications and Future Research

5. Conclusions

- (1) Fusing UAV multisource features yields higher estimation accuracy than any single feature type used alone. Fusion also mitigates the underestimation caused by spectral saturation in densely vegetated areas.

- (2) Stacking ensemble learning is better suited to chlorophyll content estimation than the individual machine learning algorithms tested (RR, LightGBM, and RFR). This highlights the substantial potential of stacking ensemble learning in precision agriculture management.
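
The stacking idea referred to in conclusion (2) can be illustrated with a minimal, dependency-free Python sketch. Everything in it is an assumption made for illustration: the two base learners are simple univariate least-squares lines (stand-ins, not the RR, LightGBM, and RFR models used in the study), the meta-learner is a linear combination without intercept, and the 5-fold split is arbitrary. Function names such as `stack_fit` are hypothetical. It shows only the general mechanism: base learners produce out-of-fold predictions, and a meta-learner is trained on those predictions.

```python
from statistics import mean


def fit_line(x, y):
    """Fit y = a + b*x by ordinary least squares and return a predictor."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    a = my - b * mx
    return lambda v: a + b * v


def fit_meta(p1, p2, y):
    """Least-squares weights for y ~ w1*p1 + w2*p2 (2x2 normal equations)."""
    s11 = sum(u * u for u in p1)
    s22 = sum(v * v for v in p2)
    s12 = sum(u * v for u, v in zip(p1, p2))
    s1y = sum(u * t for u, t in zip(p1, y))
    s2y = sum(v * t for v, t in zip(p2, y))
    det = s11 * s22 - s12 * s12
    w1 = (s1y * s22 - s2y * s12) / det
    w2 = (s2y * s11 - s1y * s12) / det
    return w1, w2


def stack_fit(f1, f2, y, k=5):
    """Train a two-level stacked model on two feature columns f1 and f2."""
    n = len(y)
    oof1, oof2 = [0.0] * n, [0.0] * n
    for fold in range(k):
        held_out = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        y_train = [y[i] for i in train]
        # Level 0: fit each base learner without the held-out fold.
        m1 = fit_line([f1[i] for i in train], y_train)
        m2 = fit_line([f2[i] for i in train], y_train)
        # Out-of-fold predictions become the meta-learner's inputs.
        for i in held_out:
            oof1[i], oof2[i] = m1(f1[i]), m2(f2[i])
    # Level 1: the meta-learner combines the out-of-fold predictions.
    w1, w2 = fit_meta(oof1, oof2, y)
    # Refit the base learners on all data for use at prediction time.
    base1, base2 = fit_line(f1, y), fit_line(f2, y)
    return lambda a, b: w1 * base1(a) + w2 * base2(b)
```

Training the meta-learner on out-of-fold predictions, rather than on in-sample predictions, keeps it from simply rewarding whichever base learner overfits the training data most.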

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Grinberg, N.F.; Orhobor, O.I.; King, R.D. An evaluation of machine-learning for predicting phenotype: Studies in yeast, rice, and wheat. Mach. Learn. 2020, 109, 251–277.

2. Ye, H.; Huang, W.; Huang, S.; Cui, B.; Dong, Y.; Guo, A.; Ren, Y.; Jin, Y. Recognition of banana fusarium wilt based on UAV remote sensing. Remote Sens. 2020, 12, 938.

3. Wang, J.; Zhou, Q.; Shang, J.; Liu, C.; Zhuang, T.; Ding, J.; Xian, Y.; Zhao, L.; Wang, W.; Zhou, G.; et al. UAV- and machine learning-based retrieval of wheat SPAD values at the overwintering stage for variety screening. Remote Sens. 2021, 13, 5166.

4. Qiao, L.; Gao, D.; Zhang, J.; Li, M.; Sun, H.; Ma, J. Dynamic influence elimination and chlorophyll content diagnosis of maize using UAV spectral imagery. Remote Sens. 2020, 12, 2650.

5. Xie, Q.; Dash, J.; Huete, A.; Jiang, A.; Yin, G.; Ding, Y.; Peng, D.; Hall, C.C.; Brown, L.; Shi, Y.; et al. Retrieval of crop biophysical parameters from Sentinel-2 remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 187–195.

6. Yang, X.; Yang, R.; Ye, Y.; Yuan, Z.; Wang, D.; Hua, K. Winter wheat SPAD estimation from UAV hyperspectral data using cluster-regression methods. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102618.

7. Sun, Q.; Gu, X.; Chen, L.; Xu, X.; Wei, Z.; Pan, Y.; Gao, Y. Monitoring maize canopy chlorophyll density under lodging stress based on UAV hyperspectral imagery. Comput. Electron. Agric. 2022, 193, 106671.

8. Cao, Y.; Li, G.L.; Luo, Y.K.; Pan, Q.; Zhang, S.Y. Monitoring of sugar beet growth indicators using wide-dynamic-range vegetation index (WDRVI) derived from UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105331.

9. Yang, H.; Hu, Y.; Zheng, Z.; Qiao, Y.; Zhang, K.; Guo, T.; Chen, J. Estimation of Potato Chlorophyll Content from UAV Multispectral Images with Stacking Ensemble Algorithm. Agronomy 2022, 12, 2318.

10. Qiao, L.; Tang, W.; Gao, D.; Zhao, R.; An, L.; Li, M.; Sun, H.; Song, D. UAV-based chlorophyll content estimation by evaluating vegetation index responses under different crop coverages. Comput. Electron. Agric. 2022, 196, 106775.

11. Liu, Y.; Feng, H.; Yue, J.; Li, Z.; Yang, G.; Song, X.; Yang, X.; Zhao, Y. Remote-sensing estimation of potato above-ground biomass based on spectral and spatial features extracted from high-definition digital camera images. Comput. Electron. Agric. 2022, 198, 107089.

12. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precis. Agric. 2022, 24, 187–212.

13. Shu, M.; Fei, S.; Zhang, B.; Yang, X.; Guo, Y.; Li, B.; Ma, Y. Application of UAV Multisensor Data and Ensemble Approach for High-Throughput Estimation of Maize Phenotyping Traits. Plant Phenomics 2022, 2022, 9802585.

14. Zhu, W.; Sun, Z.; Huang, Y.; Yang, T.; Li, J.; Zhu, K.; Zhang, J.; Yang, B.; Shao, C.; Peng, J.; et al. Optimization of multi-source UAV RS agro-monitoring schemes designed for field-scale crop phenotyping. Precis. Agric. 2021, 22, 1768–1802.

15. Lu, B.; He, Y. Evaluating empirical regression, machine learning, and radiative transfer modelling for estimating vegetation chlorophyll content using bi-seasonal hyperspectral images. Remote Sens. 2019, 11, 1979.

16. Guo, Y.; Yin, G.; Sun, H.; Wang, H.; Chen, S.; Senthilnath, J.; Wang, J.; Fu, Y. Scaling effects on chlorophyll content estimations with RGB camera mounted on a UAV platform using machine-learning methods. Sensors 2020, 20, 5130.

17. Singhal, G.; Bansod, B.; Mathew, L.; Goswami, J.; Choudhury, B.; Raju, P. Chlorophyll estimation using multi-spectral unmanned aerial system based on machine learning techniques. Remote Sens. Appl.-Soc. Environ. 2019, 15, 100235.

18. Han, D.; Wang, P.; Tansey, K.; Liu, J.; Zhang, Y.; Zhang, S.; Li, H. Combining Sentinel-1 and -3 Imagery for Retrievals of Regional Multitemporal Biophysical Parameters Under a Deep Learning Framework. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 15, 6985–6998.

19. Cao, X.; Fu, X.; Xu, C.; Meng, D. Deep spatial-spectral global reasoning network for hyperspectral image denoising. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14.

20. Cui, L.; Jing, X.; Wang, Y.; Huan, Y.; Xu, Y.; Zhang, Q. Improved Swin Transformer-Based Semantic Segmentation of Postearthquake Dense Buildings in Urban Areas Using Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2022, 16, 369–385.

21. Wu, X.; Hong, D.; Chanussot, J. Convolutional neural networks for multimodal remote sensing data classification. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–10.

22. Yao, J.; Zhang, B.; Li, C.; Hong, D.; Chanussot, J. Extended Vision Transformer (ExViT) for Land Use and Land Cover Classification: A Multimodal Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5514415.

23. Shao, J.; Tang, L.; Liu, M.; Shao, G.; Sun, L.; Qiu, Q. BDD-Net: A general protocol for mapping buildings damaged by a wide range of disasters based on satellite imagery. Remote Sens. 2020, 12, 1670.

24. Hong, D.; Hu, J.; Yao, J.; Chanussot, J.; Zhu, X.X. Multimodal remote sensing benchmark datasets for land cover classification with a shared and specific feature learning model. ISPRS-J. Photogramm. Remote Sens. 2021, 178, 68–80.

25. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

26. Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

27. Hatfield, J.L.; Prueger, J.H. Value of using different vegetative indices to quantify agricultural crop characteristics at different growth stages under varying management practices. Remote Sens. 2010, 2, 562–578.

28. Potgieter, A.B.; George-Jaeggli, B.; Chapman, S.C.; Laws, K.; Suárez Cadavid, L.A.; Wixted, J.; Watson, J.; Eldridge, M.; Jordan, D.R.; Hammer, G.L. Multi-spectral imaging from an unmanned aerial vehicle enables the assessment of seasonal leaf area dynamics of sorghum breeding lines. Front. Plant Sci. 2017, 8, 1532.

29. Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

30. Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crop. Res. 2014, 157, 111–123.

31. Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

32. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

33. Broge, N.H.; Mortensen, J.V. Deriving green crop area index and canopy chlorophyll density of winter wheat from spectral reflectance data. Remote Sens. Environ. 2002, 81, 45–57.

34. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

35. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

36. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

37. Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32.

38. Dash, J.; Jeganathan, C.; Atkinson, P. The use of MERIS Terrestrial Chlorophyll Index to study spatio-temporal variation in vegetation phenology over India. Remote Sens. Environ. 2010, 114, 1388–1402.

39. Elsayed, S.; Elhoweity, M.; Ibrahim, H.H.; Dewir, Y.H.; Migdadi, H.M.; Schmidhalter, U. Thermal imaging and passive reflectance sensing to estimate the water status and grain yield of wheat under different irrigation regimes. Agric. Water Manag. 2017, 189, 98–110.

40. Torres-Sánchez, J.; Peña, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

41. Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS-J. Photogramm. Remote Sens. 2020, 162, 161–172.

42. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3149–3157.

43. Shao, G.; Han, W.; Zhang, H.; Liu, S.; Wang, Y.; Zhang, L.; Cui, X. Mapping maize crop coefficient Kc using random forest algorithm based on leaf area index and UAV-based multispectral vegetation indices. Agric. Water Manag. 2021, 252, 106906.

44. Hoerl, A.E.; Kennard, R.W. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics 1970, 12, 55–67.

45. Cheng, M.; Jiao, X.; Liu, Y.; Shao, M.; Yu, X.; Bai, Y.; Wang, Z.; Wang, S.; Tuohuti, N.; Liu, S.; et al. Estimation of soil moisture content under high maize canopy coverage from UAV multimodal data and machine learning. Agric. Water Manag. 2022, 264, 107530.

46. Jin, X.; Li, Z.; Feng, H.; Ren, Z.; Li, S. Deep neural network algorithm for estimating maize biomass based on simulated Sentinel 2A vegetation indices and leaf area index. Crop J. 2020, 8, 87–97.

47. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

48. Liu, S.; Jin, X.; Nie, C.; Wang, S.; Yu, X.; Cheng, M.; Shao, M.; Wang, Z.; Tuohuti, N.; Bai, Y.; et al. Estimating leaf area index using unmanned aerial vehicle data: Shallow vs. deep machine learning algorithms. Plant Physiol. 2021, 187, 1551–1576.

49. Ding, F.; Li, C.; Zhai, W.; Fei, S.; Cheng, Q.; Chen, Z. Estimation of Nitrogen Content in Winter Wheat Based on Multi-Source Data Fusion and Machine Learning. Agriculture 2022, 12, 1752.

50. Jiang, J.; Johansen, K.; Stanschewski, C.S.; Wellman, G.; Mousa, M.A.; Fiene, G.M.; Asiry, K.A.; Tester, M.; McCabe, M.F. Phenotyping a diversity panel of quinoa using UAV-retrieved leaf area index, SPAD-based chlorophyll and a random forest approach. Precis. Agric. 2022, 23, 961–983.

51. Qiao, L.; Gao, D.; Zhao, R.; Tang, W.; An, L.; Li, M.; Sun, H. Improving estimation of LAI dynamic by fusion of morphological and vegetation indices based on UAV imagery. Comput. Electron. Agric. 2022, 192, 106603.

52. Wang, F.; Yi, Q.; Hu, J.; Xie, L.; Yao, X.; Xu, T.; Zheng, J. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102397.

53. Cheng, M.; Penuelas, J.; McCabe, M.F.; Atzberger, C.; Jiao, X.; Wu, W.; Jin, X. Combining multi-indicators with machine-learning algorithms for maize yield early prediction at the county-level in China. Agric. For. Meteorol. 2022, 323, 109057.

54. Shah, S.H.; Angel, Y.; Houborg, R.; Ali, S.; McCabe, M.F. A random forest machine learning approach for the retrieval of leaf chlorophyll content in wheat. Remote Sens. 2019, 11, 920.

55. Zhang, Y.; Yang, Y.; Zhang, Q.; Duan, R.; Liu, J.; Qin, Y.; Wang, X. Toward Multi-Stage Phenotyping of Soybean with Multimodal UAV Sensor Data: A Comparison of Machine Learning Approaches for Leaf Area Index Estimation. Remote Sens. 2022, 15, 7.

| Sensor Name | Sensor Type | Band | Wavelength | Bandwidth | Image Resolution |
|---|---|---|---|---|---|
| RedEdge MX | Multispectral | Red | 668 nm | 10 nm | 1280 × 960 |
| RedEdge MX | Multispectral | Green | 560 nm | 20 nm | 1280 × 960 |
| RedEdge MX | Multispectral | Blue | 475 nm | 20 nm | 1280 × 960 |
| RedEdge MX | Multispectral | Red edge | 717 nm | 10 nm | 1280 × 960 |
| RedEdge MX | Multispectral | Near infrared | 842 nm | 40 nm | 1280 × 960 |
| Zenmuse XT2 | Thermal | Thermal infrared | 10.5 μm | 6 μm | 640 × 512 |
| RGB | RGB | R, G, B | - | - | 5472 × 3468 |

| Sensor Type | Features | Formulation | Reference |
|---|---|---|---|
| MS | Normalized difference vegetation index (NDVI) | NDVI = (NIR − R)/(NIR + R) | [25] |
| MS | Green normalized difference vegetation index (GNDVI) | GNDVI = (NIR − G)/(NIR + G) | [26] |
| MS | Soil adjusted vegetation index (SAVI) | SAVI = (1 + L) × (NIR − R)/(NIR + R + L), L = 0.5 | [27] |
| MS | Enhanced vegetation index (EVI) | EVI = 2.5 × (NIR − R)/(NIR + 6 × R − 7.5 × B + 1) | [28] |
| MS | Difference vegetation index (DVI) | DVI = NIR − R | [29] |
| MS | Normalized difference red edge (NDRE) | NDRE = (NIR − REG)/(NIR + REG) | [30] |
| MS | Renormalized difference vegetation index (RDVI) | RDVI = (NIR − R)/(NIR + R)^0.5 | [31] |
| MS | Green difference vegetation index (GDVI) | GDVI = NIR − G | [32] |
| MS | Ratio vegetation index (RVI) | RVI = NIR/R | [33] |
| MS | Green chlorophyll index (GCI) | GCI = (NIR/G) − 1 | [34] |
| MS | Green atmospherically resistant index (GARI) | GARI = (NIR − G + 1.7(B − R))/(NIR + G − 1.7(B − R)) | [32] |
| MS | Modified soil adjusted vegetation index (MSAVI) | MSAVI = 1.5(NIR − R)/(NIR + R) + 0.5 | [35] |
| MS | Green ratio vegetation index (RVIGRE) | RVIGRE = NIR/G | [36] |
| MS | Chlorophyll index with red edge (CIrededge) | CIrededge = (NIR/REG) − 1 | [37] |
| MS | Chlorophyll index with green (CIgreen) | CIgreen = (NIR/G) − 1 | [38] |
| TIR | Normalized relative canopy temperature (NRCT) | NRCT = (Ti − Tmin)/(Tmax − Tmin) | [39] |
| RGB | Crop cover (CC) | CC = number of crop pixels in the plot/total number of plot pixels | [40] |
| RGB | Crop height (CH) | CH = DSM − DEM | [41] |
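
As a rough illustration of how the tabulated features could be computed, the following Python sketch evaluates a subset of the spectral indices together with NRCT, CC, and CH. It is a minimal sketch under stated assumptions: inputs are taken to be per-plot band reflectances, temperatures, and elevations, the crop mask is a hypothetical 0/1 pixel grid, and all function and argument names are illustrative rather than taken from the study's processing chain.

```python
def vegetation_indices(nir, r, g, b, reg, L=0.5):
    """Return a subset of the tabulated spectral indices.

    nir, r, g, b, reg are band reflectances (assumed per-plot means);
    L is the SAVI soil adjustment factor (0.5 in the table).
    """
    return {
        "NDVI": (nir - r) / (nir + r),
        "GNDVI": (nir - g) / (nir + g),
        "SAVI": (1 + L) * (nir - r) / (nir + r + L),
        "EVI": 2.5 * (nir - r) / (nir + 6 * r - 7.5 * b + 1),
        "DVI": nir - r,
        "NDRE": (nir - reg) / (nir + reg),
        "RDVI": (nir - r) / (nir + r) ** 0.5,
        "RVI": nir / r,
        "GCI": nir / g - 1,
    }


def nrct(t_i, t_min, t_max):
    """Normalized relative canopy temperature for one pixel or plot."""
    return (t_i - t_min) / (t_max - t_min)


def crop_cover(mask):
    """Fraction of crop pixels in a plot; mask is a 2D list of 0/1 values."""
    total = sum(len(row) for row in mask)
    crop = sum(sum(row) for row in mask)
    return crop / total


def crop_height(dsm, dem):
    """Crop height as surface model minus terrain model (same units)."""
    return dsm - dem


if __name__ == "__main__":
    # Hypothetical reflectance values, chosen only to exercise the functions.
    for name, value in vegetation_indices(0.5, 0.1, 0.1, 0.05, 0.3).items():
        print(f"{name}: {value:.3f}")
```

The ratio-type indices are undefined when a denominator is zero, so a production pipeline would need to mask such pixels before averaging.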

| Dataset | Sample Size | Min | Mean | Max | Standard Deviation | Coefficient of Variation (%) |
|---|---|---|---|---|---|---|
| Training set | 288 | 20.55 | 39.97 | 58.56 | 6.12 | 15.32 |
| Test set | 72 | 17.94 | 39.76 | 56.39 | 6.71 | 16.87 |
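
The coefficient of variation column can be cross-checked against the reported mean and standard deviation, since CV (%) = 100 × SD / mean. The short Python sketch below performs that check and also computes the training share of the 360 samples. The function name is hypothetical, and differences of about 0.01 from the tabulated CV are expected because the mean and standard deviation in the table are themselves rounded.

```python
def cv_percent(mean_value, sd):
    """Coefficient of variation in percent from a mean and standard deviation."""
    return 100.0 * sd / mean_value


# Summary statistics copied from the dataset table.
train = {"n": 288, "mean": 39.97, "sd": 6.12}
test = {"n": 72, "mean": 39.76, "sd": 6.71}

train_cv = cv_percent(train["mean"], train["sd"])
test_cv = cv_percent(test["mean"], test["sd"])

# Share of samples assigned to the training set.
train_share = train["n"] / (train["n"] + test["n"])

if __name__ == "__main__":
    print(f"training CV: {train_cv:.2f} %")
    print(f"test CV: {test_cv:.2f} %")
    print(f"training share: {train_share:.2f}")
```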

| Sensor Combination | Features Combination | RR R² | RR rRMSE (%) | LightGBM R² | LightGBM rRMSE (%) | RFR R² | RFR rRMSE (%) | Stacking R² | Stacking rRMSE (%) |
|---|---|---|---|---|---|---|---|---|---|
| TIR | Th | 0.602 | 11.68 | 0.567 | 11.05 | 0.555 | 11.24 | 0.622 | 10.68 |
| MS | Sp | 0.543 | 12.05 | 0.590 | 10.84 | 0.573 | 11.03 | 0.611 | 10.66 |
| RGB | St | 0.568 | 11.17 | 0.622 | 10.31 | 0.629 | 10.48 | 0.659 | 9.81 |
| MS + TIR | Sp + Th | 0.692 | 9.65 | 0.640 | 10.13 | 0.664 | 9.76 | 0.703 | 9.20 |
| MS + RGB | Sp + St | 0.614 | 10.51 | 0.650 | 9.97 | 0.640 | 10.12 | 0.673 | 9.59 |
| TIR + RGB | Th + St | 0.682 | 9.75 | 0.690 | 9.39 | 0.681 | 9.52 | 0.711 | 9.09 |
| MS + TIR + RGB | Sp + Th + St | 0.699 | 9.47 | 0.699 | 9.20 | 0.739 | 8.66 | 0.754 | 8.36 |
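
The two accuracy metrics reported in this table can be sketched in a few lines of Python. The definitions used here are assumptions, because this extract does not state them: R² is taken as the coefficient of determination, 1 − SS_res/SS_tot, and rRMSE as the root mean square error divided by the mean of the observed values, expressed in percent. Function names are hypothetical.

```python
from math import sqrt


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def rrmse_percent(observed, predicted):
    """RMSE relative to the observed mean, in percent (assumed definition)."""
    n = len(observed)
    rmse = sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    return 100.0 * rmse / (sum(observed) / n)


if __name__ == "__main__":
    # Hypothetical chlorophyll readings and model estimates.
    obs = [30.0, 40.0, 50.0]
    pred = [32.0, 40.0, 48.0]
    print(r_squared(obs, pred), rrmse_percent(obs, pred))
```

Under this definition of rRMSE, a lower value indicates a smaller error relative to the typical magnitude of the measurements, which is why the table reads as better when R² rises and rRMSE falls.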

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhai, W.; Li, C.; Cheng, Q.; Ding, F.; Chen, Z. Exploring Multisource Feature Fusion and Stacking Ensemble Learning for Accurate Estimation of Maize Chlorophyll Content Using Unmanned Aerial Vehicle Remote Sensing. Remote Sens. 2023, 15, 3454. https://doi.org/10.3390/rs15133454
