1. Introduction

In surveillance and monitoring systems, unmanned aerial vehicles (UAVs), such as drones, and other mobile vehicles offer advantages for exploring an environment, including extended range, maneuverability, and safety, owing to their omnidirectional displacement capacity. These tasks must be performed autonomously by capturing sensor information from the environment at scheduled or random points, at specific times and in specific areas. The collected data contain errors and uncertainties that make object recognition difficult, and detection and identification depend on the resolution of the sensors. Data acquisition resolution can be improved by integrating sensor data fusion systems, which measure the same physical phenomenon with two or more sensors simultaneously and apply filtering or pattern recognition techniques to obtain better results than those obtained with a single sensor.

Sensor data fusion comprises different techniques inspired by the human cognitive ability to extract information from the environment by integrating different stimuli. In sensor fusion, measurement variables are integrated through a set of sensors, often different from each other, to make inferences that would not be possible from a single sensor [1].

The fusion of Radar (Radio Detection and Ranging) and LiDAR (Light Detection and Ranging or Laser Imaging Detection and Ranging) sensor data presents a better response considering two key aspects: (i) the use of two coherent systems that allow an accurate phase capture and (ii) the improvement in the extraction of data from the environment through the combination of two or more sensors arranged on the mobile vehicle or UAV [1,2]. This integration decreases the error in the detection of objects in a juxtaposition relationship: distances are determined through the reflection of radio frequency signals in the Radar case and through the reflection of a light beam (photons) in the LiDAR case, generating a double observer facing the same event, in this case, the measurement of proximity and/or angular velocity [3,4]. Thus, the choice of Radar and LiDAR sensors requires special care, mainly regarding technical characteristics and compatibility [5,6], coherence in range, and data acquisition. This allows each sensor to complement its counterpart in the data fusion, facilitating a better understanding of the three-dimensional environment that feeds the data processing system integrated into the UAV [7] or at a remote site.

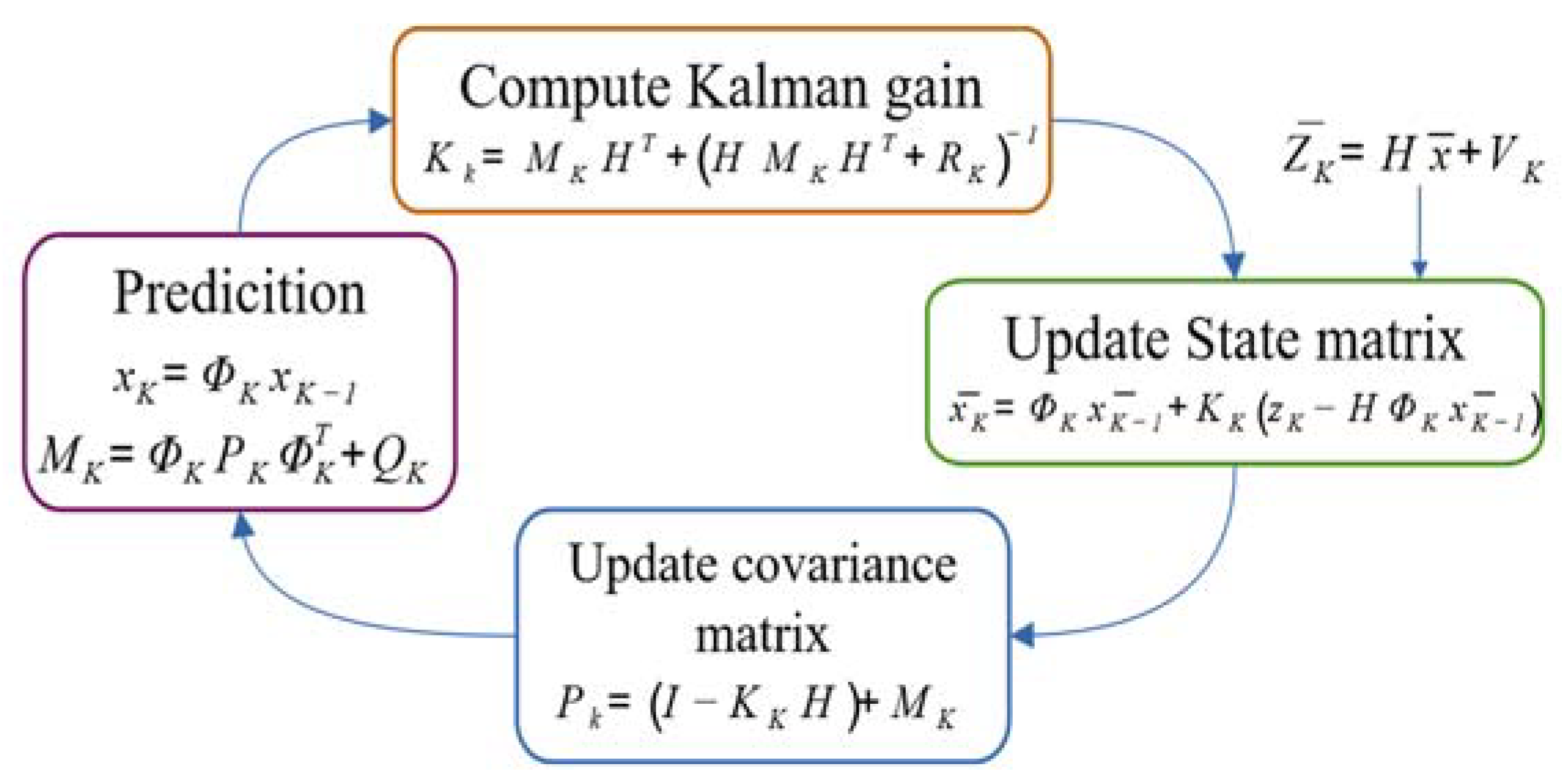

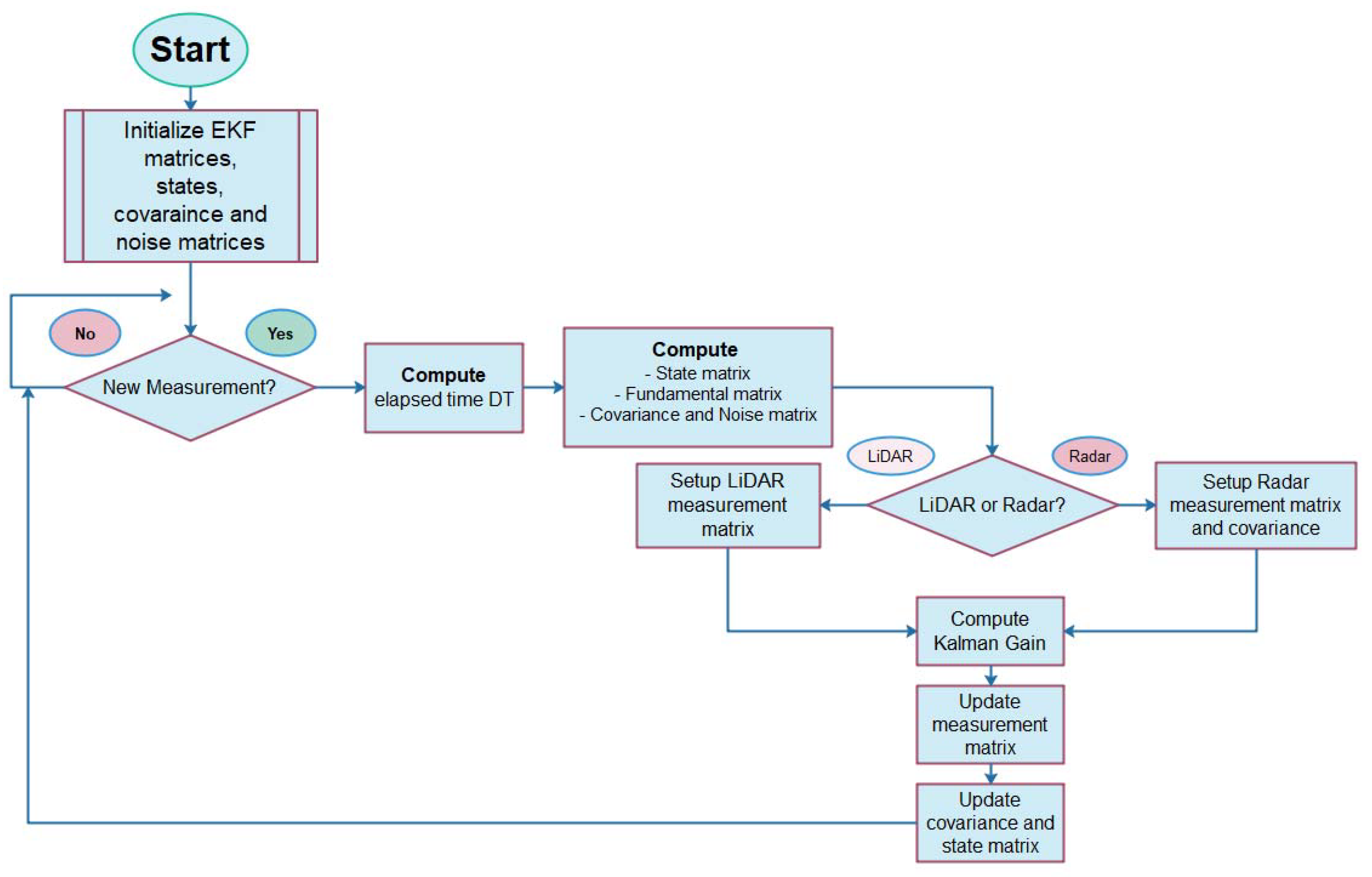

A proper sensor fusion of LiDAR and Radar data must rely on estimators to achieve higher consistency in the measurements and to mitigate the uncertainties, using three elements: Radar measurements, LiDAR measurements, and Kalman filtering. This improves the estimation of the measured variable. The Kalman filtering technique describes the real world using linear differential equations expressed as a function of state variables. In most real-world problems, however, the measurements may not be a linear function of the states of the system. The extended Kalman filter (EKF) addresses this situation by modeling the phenomenon with a set of nonlinear differential equations that describe the dynamics of the system. The EKF allows “projecting” in time the behavior of the system to be filtered, with variables that are not measurable but can be calculated from the measurable variables. Then, by predicting the future data and their deviation from the measured data, the Kalman gain is calculated, and it continuously adapts to the dynamics of the system. Finally, the state matrix and the covariance matrix associated with the filtered system are updated. This process is graphically described in Figure 1.

In this work, sensor data fusion was performed for target tracking from a UAV, using an EKF and taking into consideration the results from data fusions performed in autonomous driving. The Constant Turn Rate and Velocity (CTRV) kinematic model [8] was taken as a reference; this model includes in its description the angular velocity variable, provided by the Radar, a parameter that improves omnidirectional motion detection.

This paper presents the performance of a sensor data fusion implementation using LiDAR and Radar through an EKF for the tracking of moving targets, taking into account changes in their direction and trajectory, to generate a three-dimensional reconstruction when the information is captured from a UAV.

2. Dynamic Model of UAV

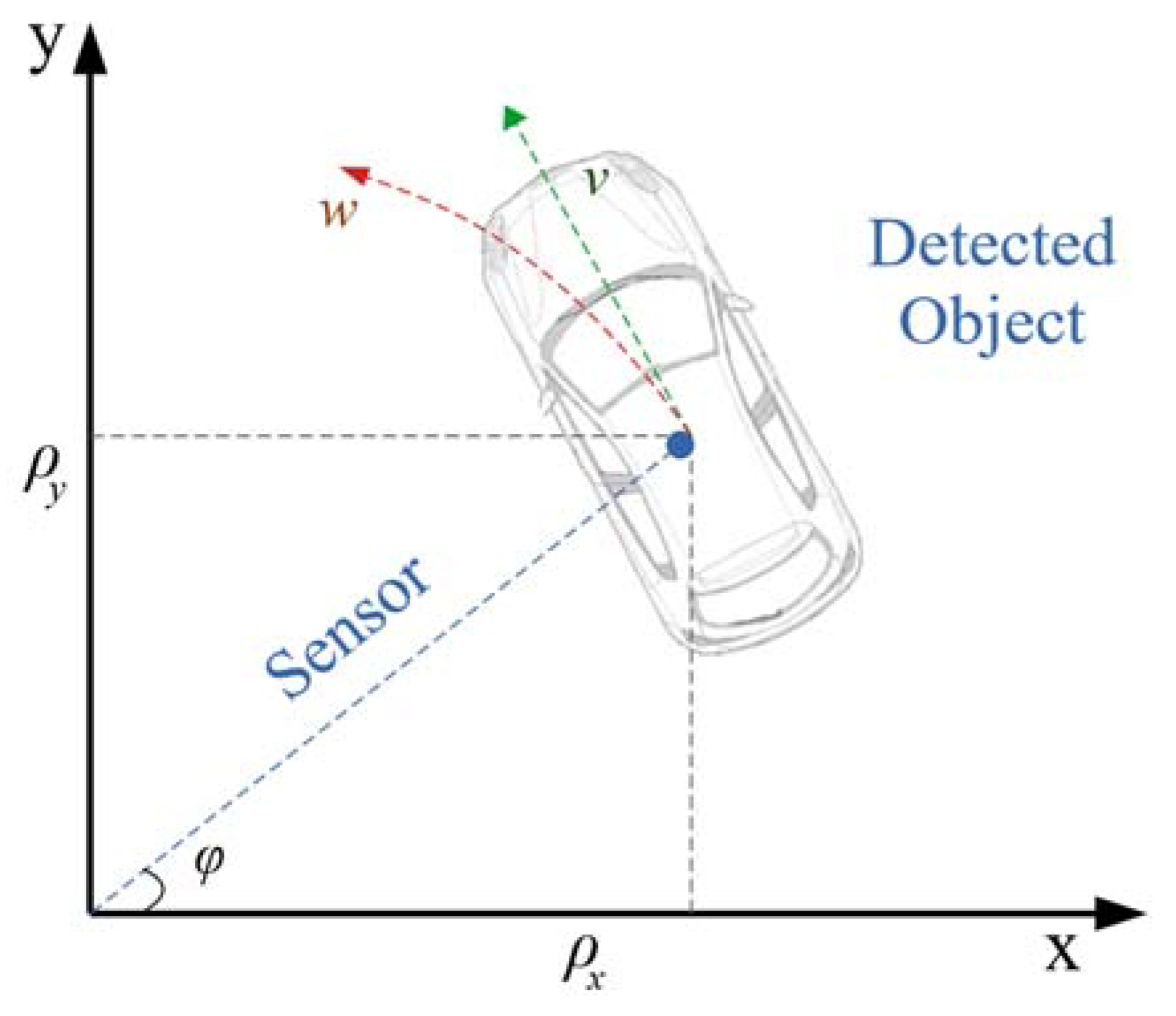

The UAV dynamics were obtained from the 2D CTRV model [8] for vehicle and pedestrian detection on highways. It is assumed that the possible movements of the elements around the UAV are not completely arbitrary and are non-holonomic, in which case the displacements occur in a bi-dimensional plane. The curvilinear model (CTRV) includes angular velocities and angular movements in its modeling, which allows a better description of the changes in the direction and velocity of an object than a linear model. The CTRV model is shown in Figure 2.

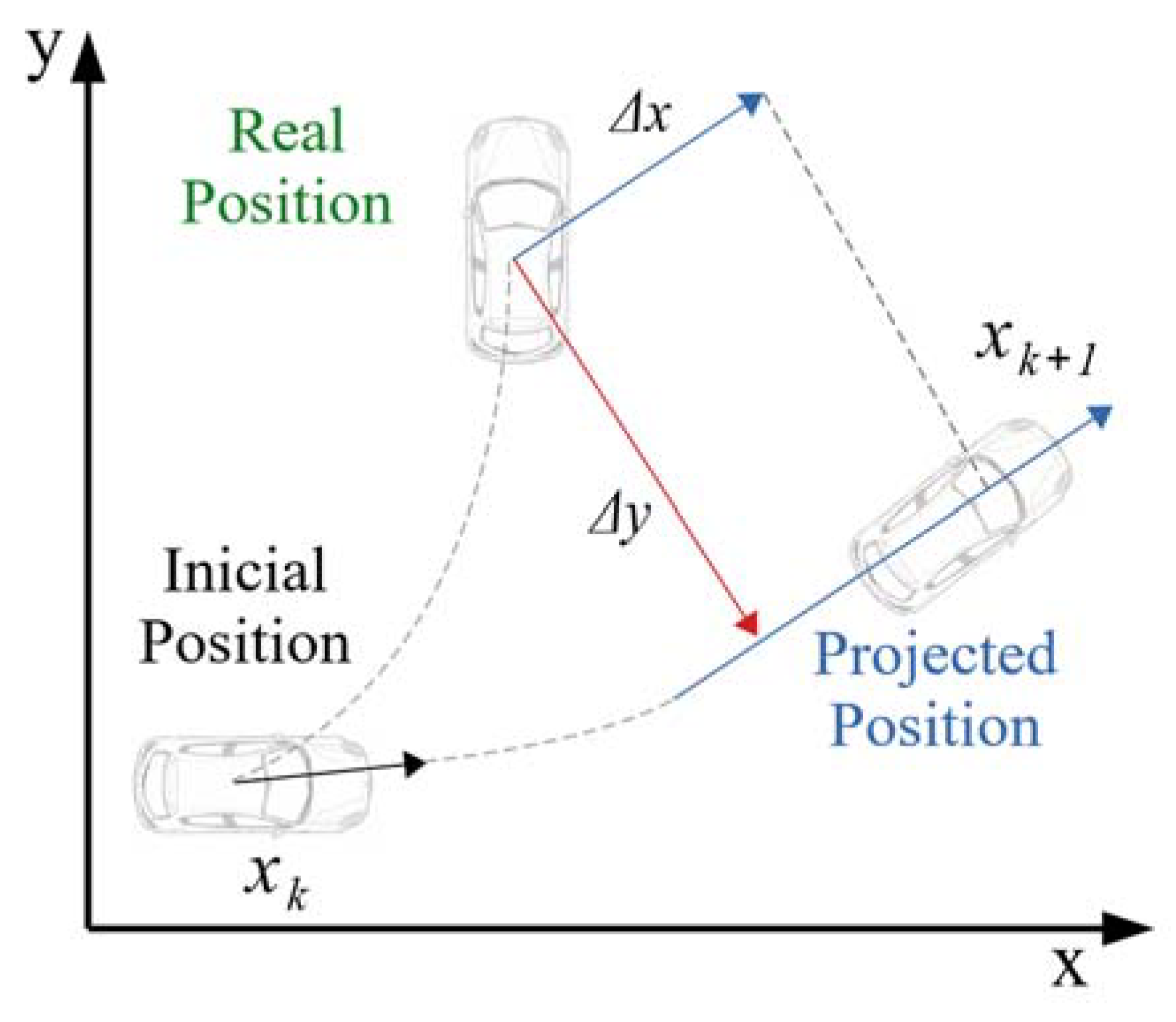

The velocity variable gives the system model the ability to calculate the target’s lateral position variations for a correct prediction of the future position of the target, starting from the initial positions x and y and projecting this location over time, defined as x + Δx and y + Δy for the target, as shown in Figure 3.

The CTRV model for the UAV system’s moving target in the three-dimensional case determines the projection of the target position $x_{i+1}$ on the axis, starting from the values of the angular frequency w and the angle θ [9,10,11] for $x_i$, and likewise for $y_i$ and its position projection. Therefore, the variables of interest in the system are the positions x and y; these are calculated by modeling their projection through the frontal velocity v, the angle θ formed between the Radar and the target, the angular frequency w of the target, and finally the angular frequency $w_d$ of the UAV. The set of state variables involved in the system is therefore x, y, v, θ, w, and $w_d$.

The kinematic equations describing the change from an initial position of the UAV to a future position are as follows:

The state variables are the frontal velocity, the theta angle, the target angular velocity, and the angular velocity of the UAV.
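For orientation, the textbook CTRV position update can be sketched in Python. This is the standard five-state form used in the autonomous driving literature, not the modified equations of this work: it omits the UAV angular velocity and the elevation projection, and the function name `ctrv_predict` and the threshold `eps` are illustrative assumptions.

```python
import math


def ctrv_predict(state, dt, eps=1e-6):
    """Propagate a textbook CTRV state (x, y, v, theta, w) over a time step dt.

    Assumption: v and w stay constant during dt. The UAV terms of the
    paper's six-state model are deliberately left out of this sketch.
    """
    x, y, v, theta, w = state
    if abs(w) < eps:
        # Straight-line limit: the heading does not change during dt.
        x_new = x + v * dt * math.cos(theta)
        y_new = y + v * dt * math.sin(theta)
    else:
        alpha = theta + w * dt  # heading at the end of the step
        x_new = x + (v / w) * (math.sin(alpha) - math.sin(theta))
        y_new = y + (v / w) * (-math.cos(alpha) + math.cos(theta))
    # Speed and turn rate are unchanged; only the heading advances.
    return (x_new, y_new, v, theta + w * dt, w)
```

The explicit branch for a turn rate near zero avoids the division by w and mirrors the separate w = 0 expressions that the text mentions.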

Because the sensor data fusion operation is bidimensional, the CTRV model does not include the position along the z-axis in its state variables. To maintain a bi-dimensional analysis, the UAV velocity [10] is projected as

Here, ϕ represents the elevation of the UAV with respect to the sensed target. This angle allows the velocities of the drone to be projected in the xz plane and x + Δx or y + Δy to be determined for the position prediction, as shown in Figure 3. To simplify the model and keep the LiDAR and Radar models congruent in the sensor data fusion, a data acquisition method is proposed in which the UAV only uses pitch (rotation about the lateral Y axis) and yaw (rotation about the vertical Z axis) movements, and their projection in a three-dimensional coordinate system. These motions are included in the CTRV model through the projection of the UAV velocity $v_d$ through the angles ϕ and θ, as shown below.

The difference between the estimated position and the actual position of the target is determined by the displacement generated by w and θ, i.e., (ΔT·w + θ) [8], so the space and velocity projections are also a function of these variations. The velocity equations are obtained as the first derivatives of $x_i$ and $y_i$, such that $v_{dx}$ and $v_{dy}$ are expressed as

When the target has an initial angular velocity w = 0, the expressions change to [8]

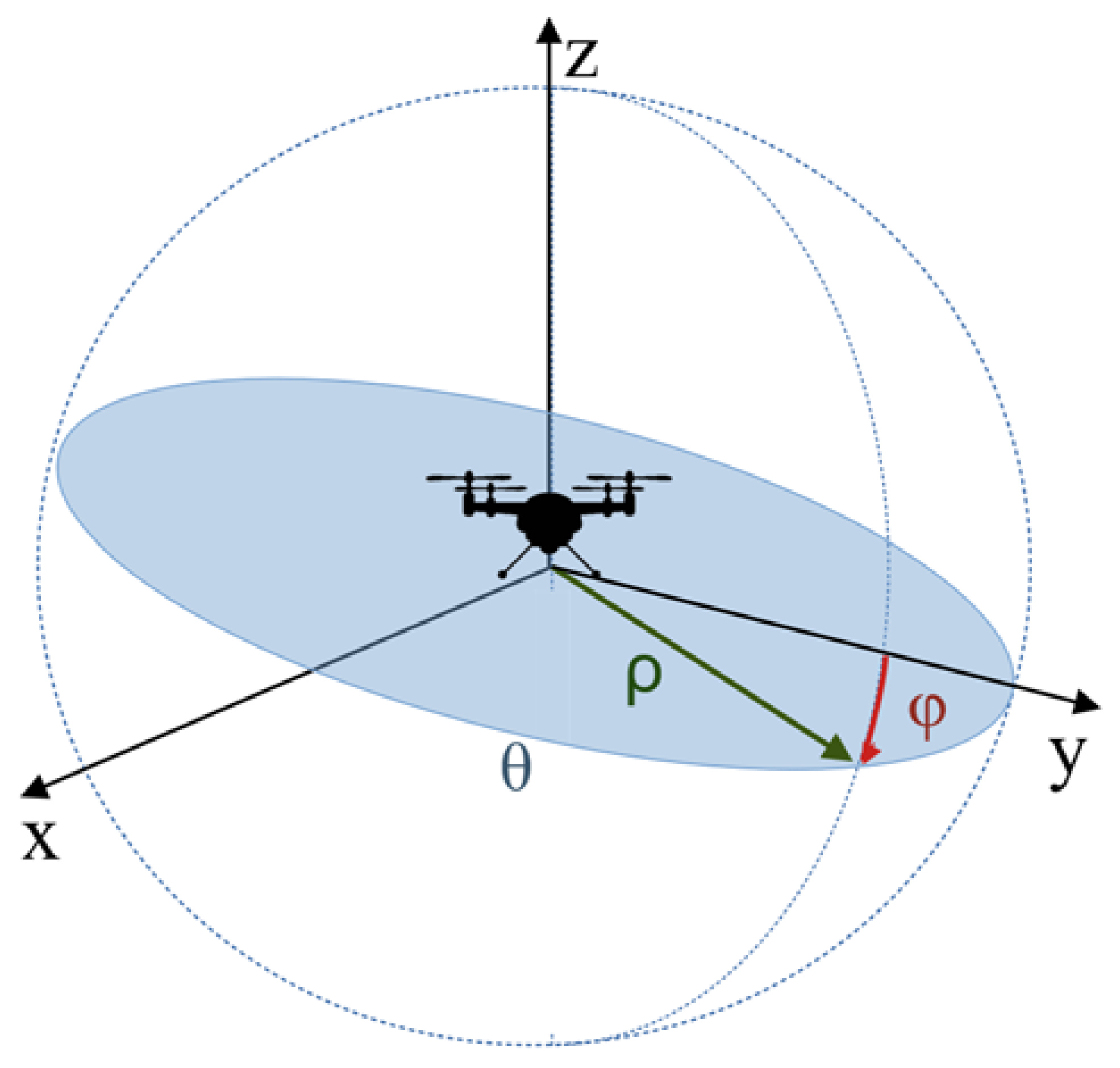

The EKF performs the filtering in a bi-dimensional plane formed by the intersection of the ranges of the LiDAR and Radar sensors. To achieve a three-dimensional reconstruction of the sensed target, a rotation is performed about the x-axis of the sensors, using cylindrical coordinates as orientation.

Figure 4 shows the dynamics between the UAV and the target in XYZ space for generating a three-dimensional reconstruction from the bi-dimensional data gathered by the sensors.

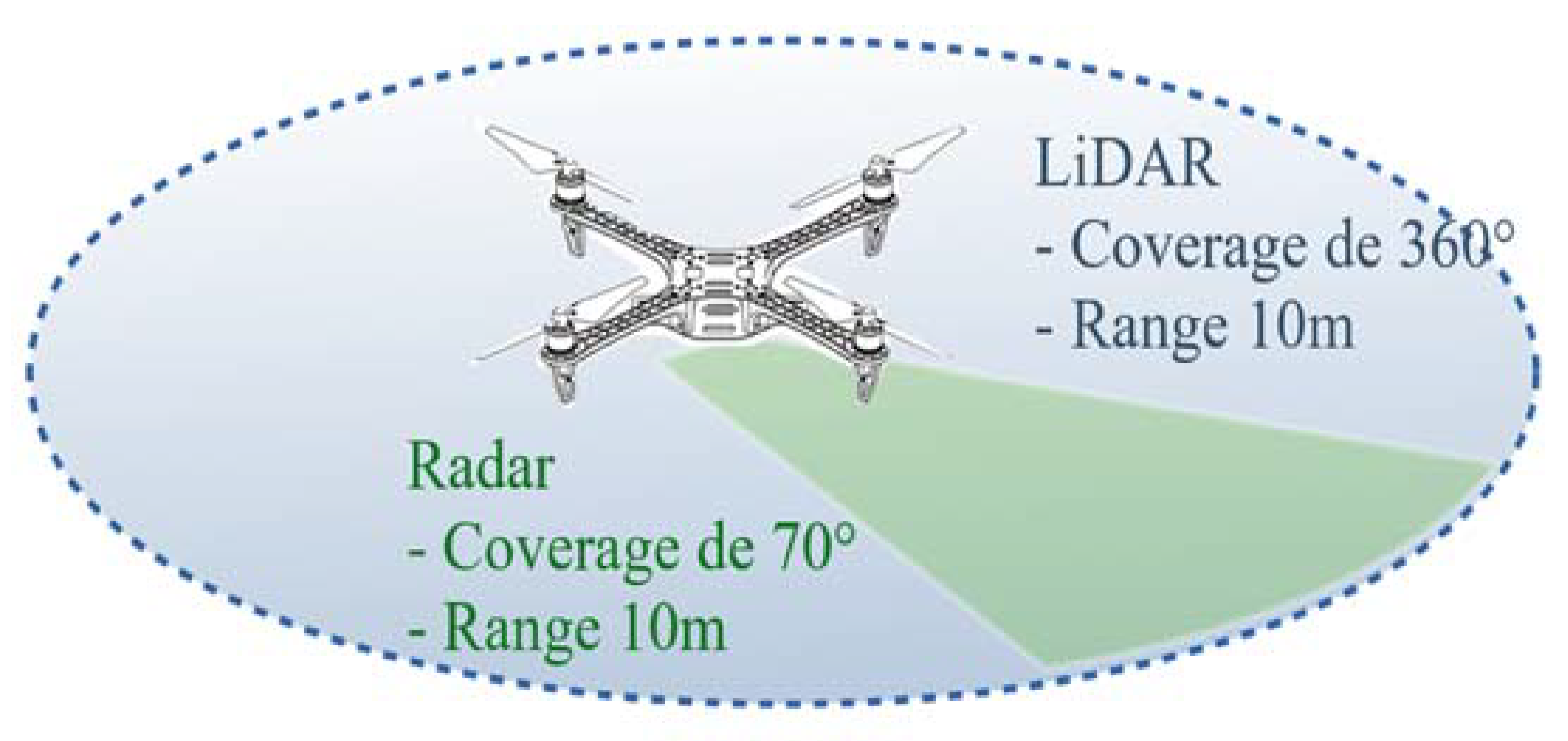

For the data fusion design, the RPLIDAR Slam S1 LiDAR sensor and the Position2Go BGT24MTR12 Radar were used as references. The LiDAR sensor operates in 2D with rotation capability, delivering data for a 360° scan, and the Radar achieves a range of 10 m. The range of the sensors according to the implementation of data fusion in the UAV is shown in Figure 5.

Now, to improve the estimation of the measured variable from the noisy sensors, the Kalman filter is implemented through sequential steps: (i) the estimation or prediction of the system behavior from the nonlinear equations; (ii) the calculation of the Kalman gain to reduce the error of the prediction of the current state versus the previous state, and (iii) the update of the measurement matrix, as well as the covariance associated with the uncertainty of the system. The representation from the selected state variables corresponds to the following equation:

where x is the vector of the system states and f(x) is a nonlinear function of the states. This state–space model of the system allows the future states to be determined, and the output is obtained by filtering the input signal. The Kalman filter performs estimations and corrections iteratively, and the possible errors of the system are reflected in the covariance values between the measured values and the values estimated by the filter. The forward projection of the error covariance has the following representation:

The system update is implemented according to the following equations:

Based on these state variables, the fundamental matrix for the extended Kalman filter is calculated and must satisfy the condition

Making α = ΔT·w + θ, β = −sin θ + sin α, and χ = −cos θ + cos α in Equation (18), the fundamental matrix is

When the angular velocity of the target is zero, the fundamental matrix reduces to the following matrix:

The matrix associated with the system noise was calculated from the discrete output matrix. The output matrix must consider the output variables on which the Kalman filter can act. For the proposed model, the angular acceleration of the target has been taken into account, as well as the angular velocity and acceleration of the UAV. The output matrix for the EKF is presented below.

The noise matrix from the output matrix is calculated through the following expression:

where

With γ = cos θ cos φ, ν = cos φ sin φ, η = sin θ sin φ, κ = sin θ cos θ, σ = cos θ sin φ, and λ = cos φ sin φ, the noise matrix is defined as

Regarding the variables obtained from the sensors, it should be taken into account that the LiDAR and Radar sensors provide their measurements in different formats. For the LiDAR case, position data are retrieved in rectangular coordinates x and y, which correspond to the first two variables of the state vector. Since the state vector has six state variables, the measurement matrix for LiDAR data processing should operate only on the x and y variables, making the product between the state vector and H conformable, i.e.,

For Radar, the measurement matrix changes to

The measurement error covariance matrix of the LiDAR sensor, obtained from the statistical analysis of the dataset obtained from this sensor, is as follows:

Its entries are obtained from the variance in the LiDAR dataset. Likewise, the measurement error covariance matrix obtained for the Radar sensor is

These covariance values are directly related to the resolution and reliability of both the LiDAR and the Radar sensors. In the LiDAR case, the uncertainty lies in the measurement of the target distance, represented as x and y coordinates, while for the Radar the uncertainty lies in the same measurement, represented as a vector of magnitude ρ and angle θ. In addition, the covariance matrix for the Radar includes the estimated velocity of the target.
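The two measurement formats described above can be illustrated with a short Python sketch. The state ordering (x, y, v, θ, w, w_d), the names `H_LIDAR`, `lidar_measurement`, and `radar_polar`, and the use of a plain Cartesian-to-polar conversion are assumptions made for illustration; the Radar measurement matrix of this work is not reproduced here.

```python
import math

# Assumed state ordering: (x, y, v, theta, w, w_d), six states as in the text.
# The LiDAR measurement matrix keeps only the first two states.
H_LIDAR = [
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # selects x
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],  # selects y
]


def lidar_measurement(state):
    """Apply H_LIDAR to the state and return the predicted [x, y]."""
    return [sum(h * s for h, s in zip(row, state)) for row in H_LIDAR]


def radar_polar(state):
    """Express the target position as a range rho and a bearing angle (rad)."""
    x, y = state[0], state[1]
    rho = math.hypot(x, y)      # distance from the sensor to the target
    bearing = math.atan2(y, x)  # angle measured from the sensor's x-axis
    return rho, bearing
```

A 2×6 matrix multiplied by the six-element state yields a two-element vector, which is what makes the product conformable with a LiDAR reading in x and y.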

3. Results and Discussion

The EKF was implemented and evaluated numerically using Matlab®. To determine its performance, a dataset combining position measurements of a pedestrian from a LiDAR and a Radar sensor with the real positions of the pedestrian was used, and from these results the performance was estimated using the RMSE [12]. To evaluate the robustness of the model, the dataset was contaminated with different levels of additive white Gaussian noise (AWGN).

The system was initialized by predefining values for the state matrix, as shown in Figure 6, as well as for the fundamental matrix, the system covariance matrix, and the noise matrix. Each new LiDAR or Radar sensor input triggers the filtering, which starts by determining the time step ΔT with respect to the previous measurement. Next, the state matrix is estimated using the set of Equations (8), or (9) when w = 0; the fundamental matrix is obtained according to Equation (18), or (19) if w = 0; the noise matrix is given by (24); and the system covariance matrix is given by Equation (11). Then, the measurement and uncertainty matrices are configured: (25) and (27) for the LiDAR case and (26) and (28) for the Radar case. The Kalman gain given by Equation (13) is determined, and, finally, the measurement matrix given by (15), as well as the system and state covariance matrix, is updated.
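The update stage of this loop can be illustrated for the LiDAR branch with a minimal Python sketch of the standard Kalman measurement update. It assumes that the measurement matrix simply selects the first two states, so the products with H reduce to slicing; the function name `lidar_update` is hypothetical, and the numbered equations of this work are not reproduced.

```python
def lidar_update(x, P, z, R):
    """Standard Kalman update for a measurement z = (x_meas, y_meas).

    x: state as a list of n floats, P: n x n covariance (list of lists),
    R: 2 x 2 measurement covariance. Assumes H selects states 0 and 1.
    """
    n = len(x)
    # Innovation: measured minus predicted position.
    y0, y1 = z[0] - x[0], z[1] - x[1]
    # S = H P H^T + R is the top-left 2 x 2 block of P plus R.
    s00, s01 = P[0][0] + R[0][0], P[0][1] + R[0][1]
    s10, s11 = P[1][0] + R[1][0], P[1][1] + R[1][1]
    det = s00 * s11 - s01 * s10
    if det == 0:
        raise ValueError("innovation covariance is singular")
    i00, i01, i10, i11 = s11 / det, -s01 / det, -s10 / det, s00 / det
    # Kalman gain K = P H^T S^-1; P H^T is the first two columns of P.
    K = [[P[r][0] * i00 + P[r][1] * i10,
          P[r][0] * i01 + P[r][1] * i11] for r in range(n)]
    # State update: x + K * innovation.
    x_new = [x[r] + K[r][0] * y0 + K[r][1] * y1 for r in range(n)]
    # Covariance update: (I - K H) P.
    P_new = [[P[r][c] - (K[r][0] * P[0][c] + K[r][1] * P[1][c])
              for c in range(n)] for r in range(n)]
    return x_new, P_new
```

With an identity prior covariance and an identity measurement covariance, the gain on the position states is 0.5, so the updated position lies halfway between the prediction and the measurement. The Radar branch would additionally require a linearized measurement model, which is outside the scope of this sketch.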

The CTRV model proposed in [8] in the context of autonomous driving was designed taking as reference highways and locations commonly used by automotive vehicles. In these scenarios, changes in tangential velocity relative to the sensors occur to a lesser extent than in the same scenario with measurements taken from a UAV. This behavior is accentuated when it is necessary to perform a three-dimensional reconstruction of the moving target. The CTRV model developed in this work includes the angular velocity of the drone, which modifies the fundamental matrix of the system as well as the noise matrix associated with the system, and a favorable response of the filter to these changes was observed.

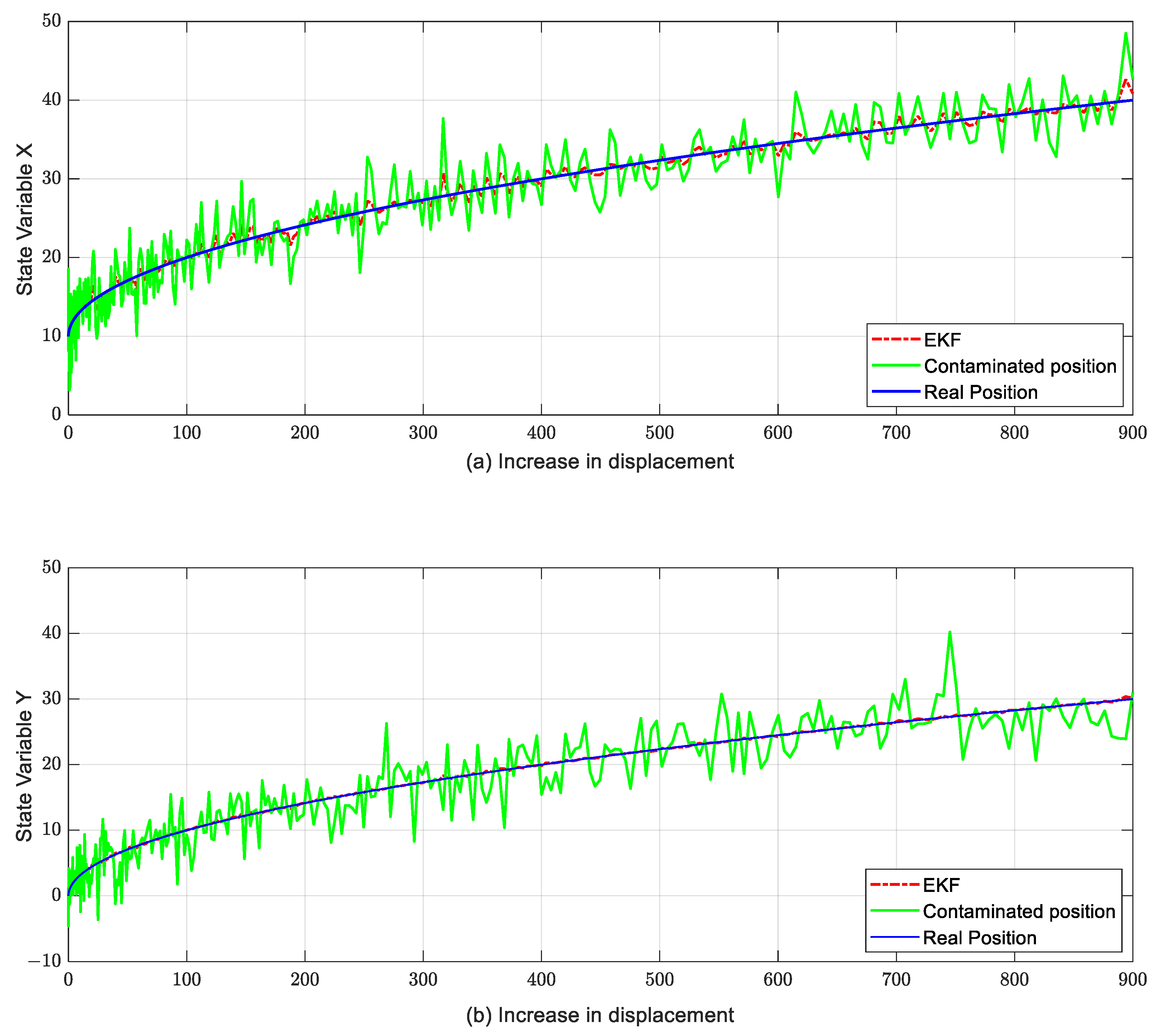

To evaluate the response of the

x and

y position variables to measurements contaminated with noise, the equations of the CTRV model were implemented in Matlab

®, and a sweep of the position variables contaminated with AWGN was performed. The response of the

x and

y state variables of the EKF to these contaminated measurements is shown in

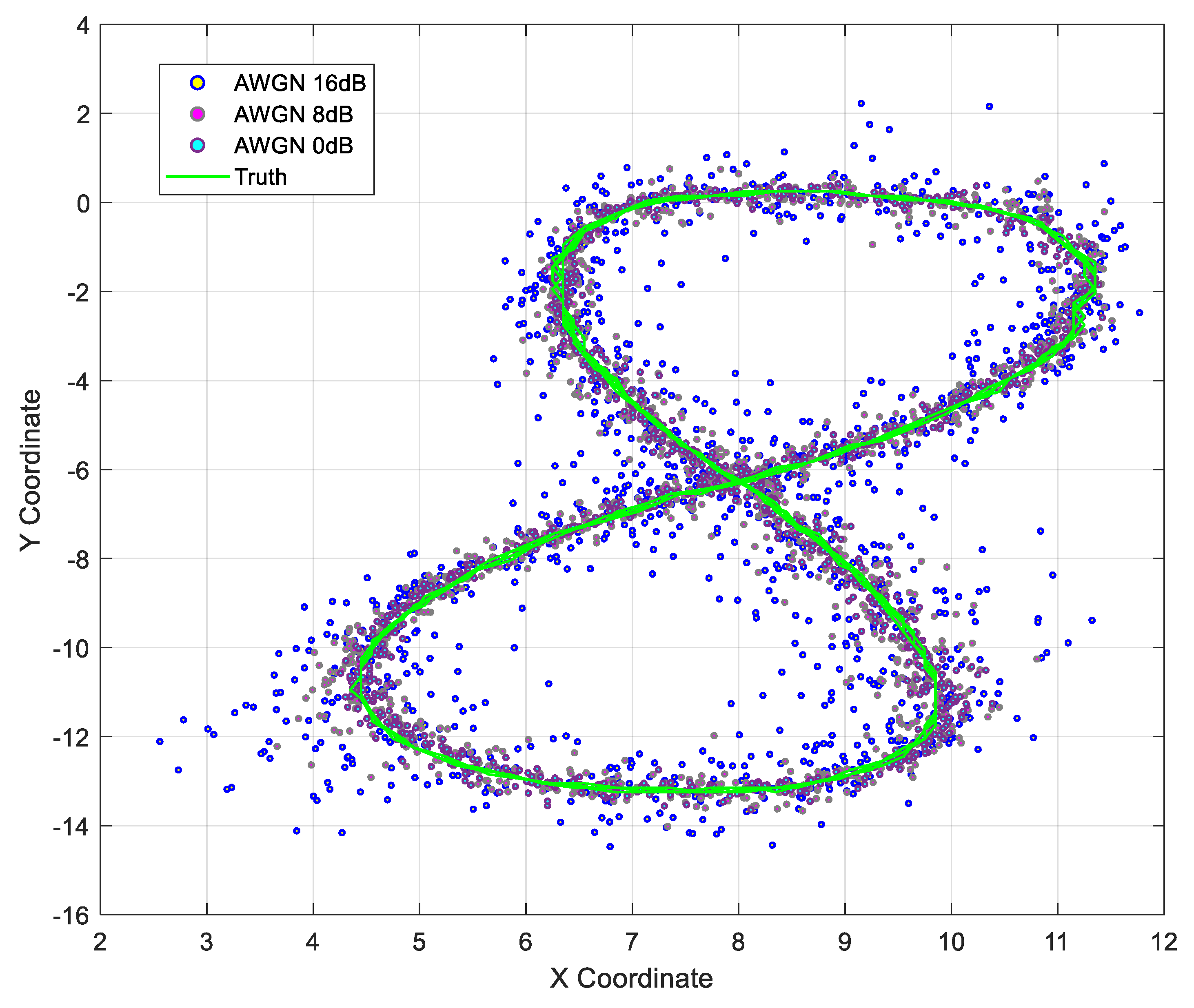

Figure 7.

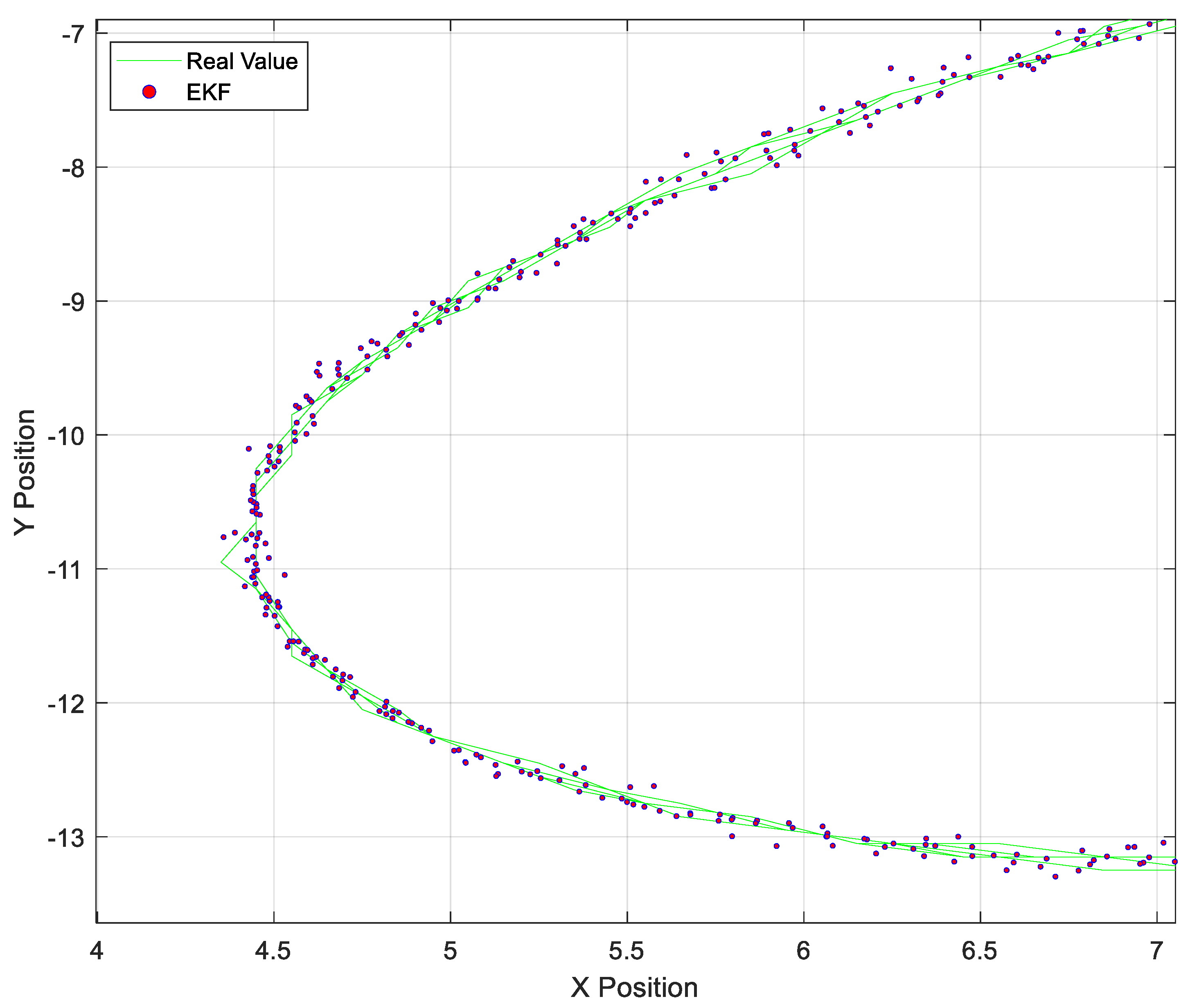

The response of the EKF to significant changes in the angular velocity of the target, represented as a change in the direction of the trajectory on the x-axis, is presented below. The EKF succeeds in predicting the target (green band), even at the point of greatest deflection. A zoom of the filter’s response to the change in trajectory is shown in Figure 8. The EKF was tested with a dataset that provides 1225 positions and angles from simultaneous Radar and LiDAR measurements: the Radar sensor provides the distance, the angle of displacement with respect to the horizontal of the Radar, and the angular velocity detected by the Radar, while the LiDAR sensor gives the position in x and y coordinates. This dataset was contaminated with AWGN by increasing the noise power progressively and testing the EKF.
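A progressive AWGN sweep of this kind can be sketched in Python as follows. The synthetic ramp `clean_track`, the noise levels, and the function names are placeholders chosen for illustration; they do not correspond to the dataset or to the noise powers used in this work.

```python
import math
import random


def add_awgn(samples, sigma, seed=0):
    """Return a copy of samples with zero-mean Gaussian noise of std sigma."""
    rng = random.Random(seed)  # fixed seed keeps the sweep reproducible
    return [s + rng.gauss(0.0, sigma) for s in samples]


def noise_rms(clean, noisy):
    """Root mean square deviation between the clean and noisy sequences."""
    return math.sqrt(sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean))


# Placeholder track with the same number of samples as the dataset (1225).
clean_track = [0.01 * k for k in range(1225)]

# Sweep over increasing noise levels (assumed values, in the track's units).
sweep = {sigma: noise_rms(clean_track, add_awgn(clean_track, sigma))
         for sigma in (0.05, 0.1, 0.2)}
```

Each entry of `sweep` maps a noise level to the measured deviation it produces, which is the quantity a filter under test is expected to reduce.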

The real data versus the measured data from the Radar and LiDAR sensors are shown in Figure 9.

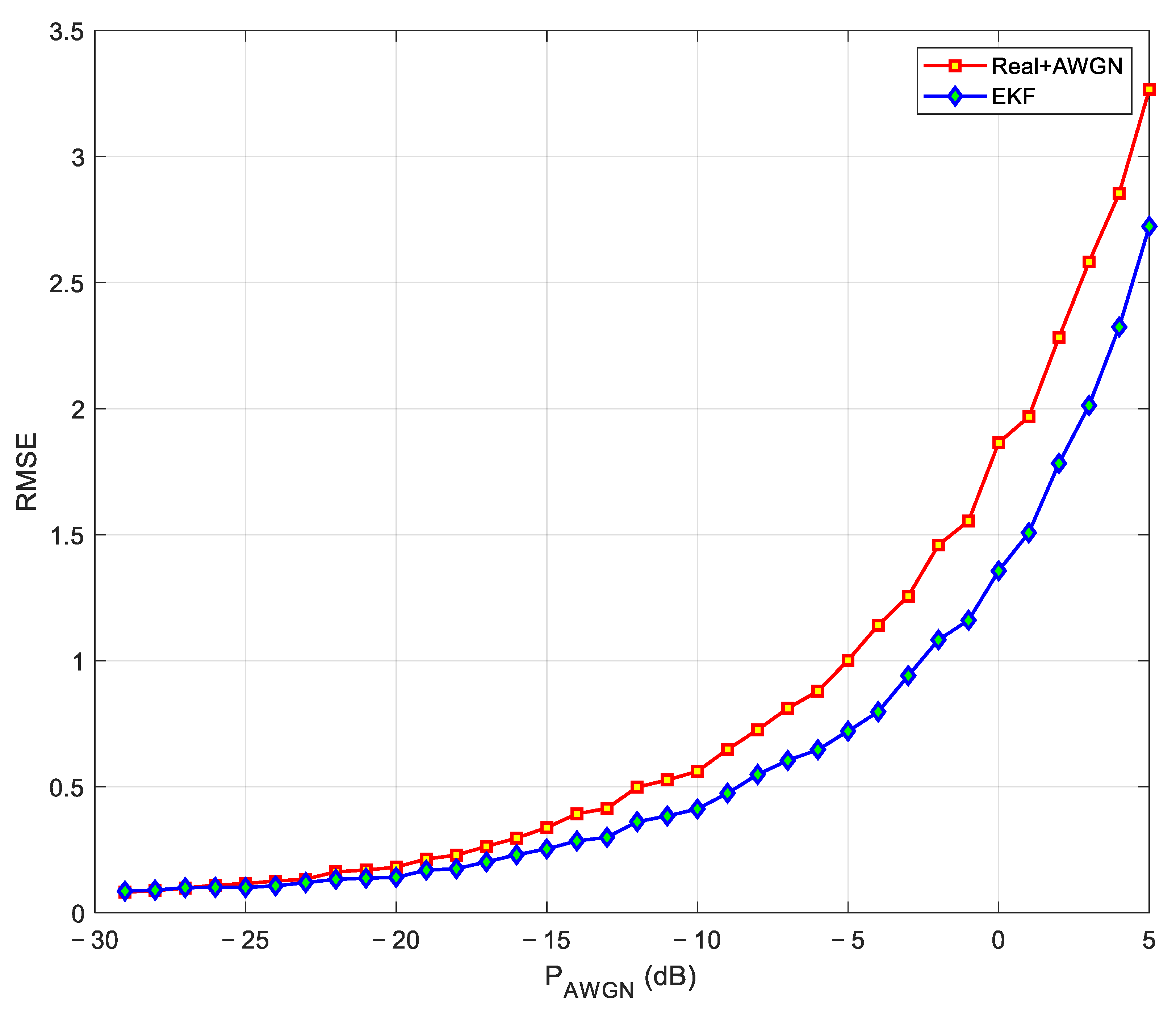

To draw a comparison with other fusion models using Radar data, the root mean square error (RMSE) was computed, which made it possible to validate the effectiveness of the proposed CTRV model against previously used sensor data fusions. The RMSE is reduced from 0.21 to 0.163 relative to the linear model; however, in contrast to the state of the art [13], the unscented Kalman filter (UKF) maintains a better response than the EKF based on the CTRV model. The response of the EKF to AWGN variations in the input data is presented below.

Figure 10 shows that the EKF acts by reducing the difference between the real values (red signal) and the values contaminated with Gaussian noise (blue signal). In [

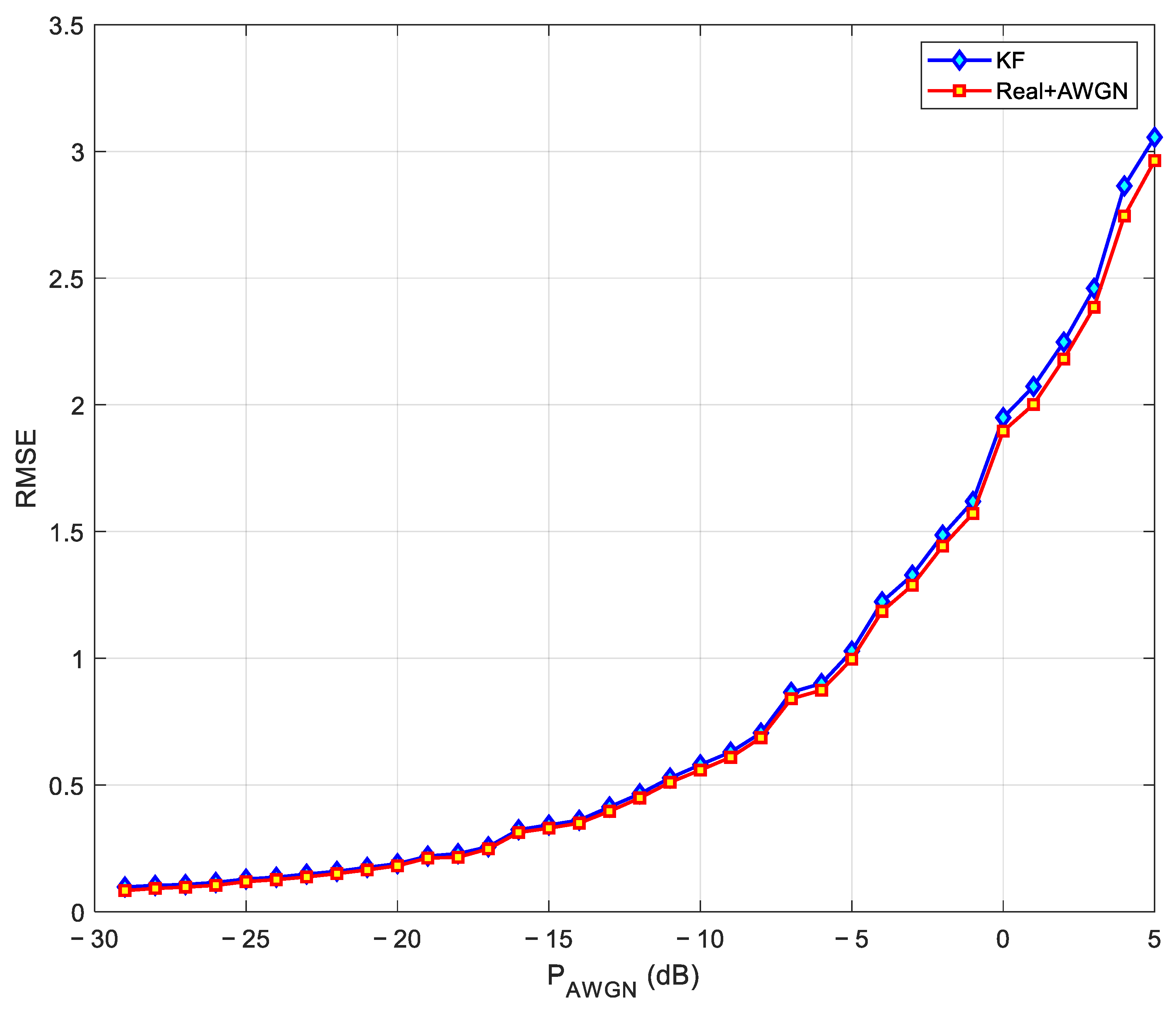

13], the authors state that the RMSE response can be improved with the unscented Kalman filter; however, it should be noted that this filter implies a higher computational complexity concerning the EKF. On the other hand, the response of the KF with a linear model and increasing AWGN is shown in

Figure 11.