Bubble Plume Target Detection Method of Multibeam Water Column Images Based on Bags of Visual Word Features

Abstract

1. Introduction

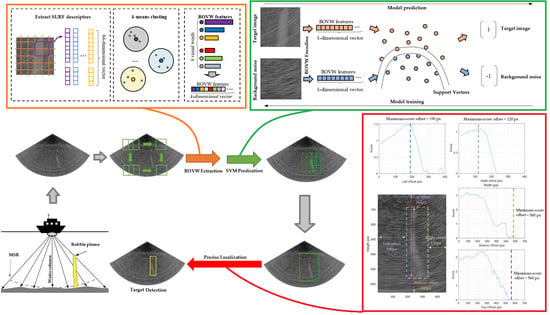

2. Theories and Methods

2.1. Basic Principle of Target Detection in Multibeam Water Column Images

- Bubble plume targets are much brighter than the background of the water column image, because the backscatter strength of gas bubbles is much higher than that of the surrounding water;

- Bubble plume targets have distinctive shapes. They are generally plume-like or ribbon-like, which clearly distinguishes them from other water column targets (such as fish and fish schools);

- Bubble plumes also have a distinctive orientation. They usually rise from the seabed to a certain height and are approximately perpendicular to the seabed, but may be bent and oblique because of ocean currents. Owing to side-lobe effects, bubble plumes within the minimum slant range are easier to detect;

- Distinctive texture features exist around bubble plume targets. Because gas bubbles and water are two different propagation media, the texture of a bubble plume differs markedly from that of the surrounding water.

2.2. BOVW Features of Multibeam Water Column Images

- Step 1. The SURF descriptors (64-dimensional eigenvectors) of the interest points in all sample images are extracted, with the interest points selected either by SURF detection or on a uniform grid;

- Step 2. Because the number of extracted SURF descriptors is very large, the descriptors of all sample images are clustered using k-means into k categories (visual words);

- Step 3. For any image, the SURF points are extracted using the interest point selection of Step 1 and described as 64-dimensional eigenvectors; each descriptor is then assigned to one of the k categories obtained in Step 2;

- Step 4. The occurrence frequencies of all the SURF clustering categories are calculated to form the k-dimensional BOVW feature vector.
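Steps 3 and 4 can be illustrated with a minimal sketch. It assumes that the visual vocabulary (the k cluster centroids of Step 2) is already available, that descriptors are assigned by Euclidean distance, and that the counts are normalised to relative frequencies; the function names and the 2-dimensional toy data are hypothetical stand-ins for real 64-dimensional SURF descriptors.

```python
from collections import Counter
import math


def nearest_word(descriptor, vocabulary):
    """Return the index of the visual word (centroid) closest to a descriptor."""
    distances = [math.dist(descriptor, word) for word in vocabulary]
    return distances.index(min(distances))


def bovw_histogram(descriptors, vocabulary):
    """Encode one image as a k-dimensional vector of visual-word frequencies."""
    total = len(descriptors)
    if total == 0:
        return [0.0] * len(vocabulary)
    counts = Counter(nearest_word(d, vocabulary) for d in descriptors)
    # Assumption: frequencies are counts divided by the number of descriptors.
    return [counts[j] / total for j in range(len(vocabulary))]


# Toy data: 2-D vectors stand in for 64-D SURF descriptors, and k = 3.
vocabulary = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
descriptors = [(0.1, 0.0), (0.9, 0.1), (1.1, -0.1), (0.0, 0.8)]
histogram = bovw_histogram(descriptors, vocabulary)
print(histogram)
```

The resulting vector has one entry per visual word, so every image is encoded with the same length k regardless of how many interest points it contains.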

2.2.1. SURF Descriptors Extraction

- Select the region around each key point and divide it into 4 × 4 small regions;

- Calculate four features (dx, dy, |dx| and |dy|) from the Haar responses of the sampling points in each small region;

- Construct and normalize the 64-dimensional (4 × 4 × 4) eigenvector of each key point.
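The construction above can be sketched as follows. This is a simplified illustration, not the full SURF algorithm: it assumes the Haar responses dx and dy have already been computed on a square grid of sampling points around the key point, sums them (and their absolute values) within each of the 4 × 4 small regions, and omits the Gaussian weighting and orientation alignment used in practice. The function name and the toy responses are hypothetical.

```python
import math


def surf_like_descriptor(dx, dy, cells=4):
    """Build a 4 x 4 x 4 = 64-D vector from square grids of Haar responses."""
    step = len(dx) // cells  # sampling points per small region, per axis
    vector = []
    for row in range(cells):
        for col in range(cells):
            sum_dx = sum_dy = sum_abs_dx = sum_abs_dy = 0.0
            for r in range(row * step, (row + 1) * step):
                for c in range(col * step, (col + 1) * step):
                    sum_dx += dx[r][c]
                    sum_dy += dy[r][c]
                    sum_abs_dx += abs(dx[r][c])
                    sum_abs_dy += abs(dy[r][c])
            vector.extend([sum_dx, sum_dy, sum_abs_dx, sum_abs_dy])
    # Normalise to unit length so the descriptor is invariant to contrast.
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm > 0 else vector


# Toy responses on an 8 x 8 grid: constant dx, sign-alternating dy.
dx = [[1.0 for _ in range(8)] for _ in range(8)]
dy = [[(-1.0) ** (r + c) for c in range(8)] for r in range(8)]
descriptor = surf_like_descriptor(dx, dy)
print(len(descriptor))
```

In the toy data, the alternating dy responses cancel within each small region while their absolute values do not, which is why both the signed and the absolute sums are kept.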

2.2.2. Clustering SURF Descriptors

1. The k-means++ algorithm is used to initialize the k centroids c(j) (j = 1, …, k);

2. The Euclidean distance Lj between each 64-dimensional SURF eigenvector v and each centroid c(j) is calculated as

$$L_j = \lVert v - c(j) \rVert_2 = \sqrt{\sum_{i=1}^{64} \left( v_i - c_i(j) \right)^2};$$

3. Each eigenvector is assigned to the nearest centroid and the k centroids are recalculated;

4. Steps 2 and 3 are repeated until all the cluster assignments are stable (Figure 4B).
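The clustering loop can be sketched in a few lines. This is a minimal pure-Python illustration under stated assumptions: the function names, the fixed random seed, the iteration cap and the 2-dimensional toy points are all hypothetical, and a production implementation would normally rely on an optimised library routine.

```python
import math
import random


def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: favour points far from the centroids chosen so far."""
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Squared distance from each point to its nearest chosen centroid.
        weights = [min(math.dist(p, c) ** 2 for c in centroids) for p in points]
        centroids.append(rng.choices(points, weights=weights, k=1)[0])
    return centroids


def assign(points, centroids):
    """Index of the nearest centroid (Euclidean distance) for every point."""
    return [min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points]


def kmeans(points, k, seed=0, max_iter=100):
    rng = random.Random(seed)
    centroids = kmeans_pp_init(points, k, rng)
    labels = None
    for _ in range(max_iter):
        new_labels = assign(points, centroids)
        if new_labels == labels:  # cluster assignments are stable: stop
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[j] = tuple(sum(x) / len(members) for x in zip(*members))
    return centroids, labels


# Toy data: two well-separated groups stand in for SURF descriptors.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

The k centroids returned by such a routine play the role of the visual vocabulary used for image coding.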

2.2.3. Image Coding Using BOVW Feature

2.3. Bubble Plume Recognition Using Support Vector Machine

2.3.1. Support Vector Machine

1. The quadratic polynomial kernel function is selected, so that the SVM problem can be converted to the convex quadratic programming problem as follows:

2. Based on the sequential minimal optimization (SMO) algorithm, the optimal solution is

3. A component αj* of α* satisfying 0 < αj* < C is selected (C is the hyperparameter, called the box constraint, used to avoid overfitting). Then, we calculate

4. The kernel function is used to replace the inner product, and the quadratic SVM becomes
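For orientation, the four steps above correspond to the standard textbook form of the soft-margin kernel SVM, written out below. This is a sketch of that standard formulation rather than a reproduction of the numbered equations of this paper: the training pairs (x_i, y_i) with y_i ∈ {−1, +1}, the sample count N, and the particular quadratic kernel K(x, z) = (x · z + 1)² are assumptions introduced here.

```latex
\begin{aligned}
&\min_{\alpha}\ \frac{1}{2}\sum_{i=1}^{N}\sum_{l=1}^{N}\alpha_i\alpha_l y_i y_l K(x_i,x_l)-\sum_{i=1}^{N}\alpha_i
\quad \text{s.t.}\ \sum_{i=1}^{N}\alpha_i y_i = 0,\ \ 0\le\alpha_i\le C \\
&\alpha^{*} = (\alpha_1^{*},\dots,\alpha_N^{*})^{T} \\
&b^{*} = y_j-\sum_{i=1}^{N}\alpha_i^{*}\,y_i\,K(x_i,x_j),\qquad 0<\alpha_j^{*}<C \\
&f(x) = \operatorname{sign}\Big(\sum_{i=1}^{N}\alpha_i^{*}\,y_i\,K(x_i,x)+b^{*}\Big),\qquad K(x,z) = (x\cdot z+1)^{2}
\end{aligned}
```

The first line is the dual problem solved by SMO, the second its solution, the third the bias computed from a support vector lying strictly inside the box constraint, and the fourth the resulting decision function.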

2.3.2. Recognition Procedure of Bubble Plume Targets

2.3.3. Recognition Accuracy Assessment

2.4. Target Detection Using BOVW Features

2.4.1. Precise Target Localization

- First, gradually shrink the left boundary to the right, calculate the prediction scores of the reduced images, and select the maximum-score position as the final left boundary;

- Second, gradually shrink the right boundary to the left, calculate the scores of the reduced images, and select the maximum-score position as the final right boundary (the red boundary in Figure 8e);

- Third, based on the left and right boundaries detected above, gradually shrink the top boundary towards the bottom, calculate the scores of the reduced images, and select the zero-score position as the final bottom boundary;

- Finally, gradually shrink the bottom boundary towards the top, calculate the scores of the reduced images, and select the zero-score position as the final top boundary (the yellow boundary in Figure 8f).
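The four shrinking passes can be sketched as below. The sketch assumes a hypothetical callable `score(left, right, top, bottom)` that returns the classifier score of a cropped window; here it is replaced by a toy score (the fraction of the window covered by a known target box), and the step size and the `<= 0` test for the zero-score position are likewise assumptions.

```python
def localize(score, width, height, step=1):
    """Refine a detection window by shrinking one boundary at a time."""
    # 1) Move the left boundary rightwards; keep the maximum-score position.
    left = max(range(0, width, step),
               key=lambda l: score(l, width, 0, height))
    # 2) Move the right boundary leftwards; keep the maximum-score position
    #    (max() returns the first maximum met when scanning from the right).
    right = max(range(width, left, -step),
                key=lambda r: score(left, r, 0, height))
    # 3) Move the top boundary downwards; the first zero-score position is
    #    where the target ends, i.e. the final bottom boundary.
    bottom = next((t for t in range(0, height, step)
                   if score(left, right, t, height) <= 0), height)
    # 4) Move the bottom boundary upwards; the first zero-score position is
    #    where the target starts, i.e. the final top boundary.
    top = next((b for b in range(height, 0, -step)
                if score(left, right, 0, b) <= 0), 0)
    return left, right, top, bottom


def make_toy_score(target):
    """Toy stand-in for the classifier: window fraction covered by the target."""
    t_left, t_right, t_top, t_bottom = target

    def score(left, right, top, bottom):
        overlap_w = max(0, min(right, t_right) - max(left, t_left))
        overlap_h = max(0, min(bottom, t_bottom) - max(top, t_top))
        area = (right - left) * (bottom - top)
        return overlap_w * overlap_h / area if area > 0 else 0.0

    return score


# Target occupies columns [12, 18) and rows [5, 30) of a 40 x 32 image.
box = localize(make_toy_score((12, 18, 5, 30)), width=40, height=32)
print(box)
```

With a real classifier the score is noisier than this toy function, so a coarser step or some smoothing of the score curve may be needed before picking the maximum-score and zero-score positions.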

2.4.2. Detection Accuracy

3. Experiments and Results

- BOVW feature extraction, and training and validation of the SVM classifier. During data preparation, the measured multibeam data were used to construct the water column images. Images containing bubble plume targets and images containing only background noise were extracted as positive and negative samples, respectively, and distributed between the training and validation sample sets. The BOVW features were then extracted from these images and used to train the SVM classifier;

- Bubble target detection in water column images. Based on the recognition model built from BOVW features and the SVM, the precise detection method was applied to detect all the bubble plume targets in the water column images of the EM 710 multibeam sonar, in order to demonstrate the validity and generality of our detection method.

3.1. BOVW Feature Extraction and Classification

3.1.1. Sampling from Multibeam Water Column Images

3.1.2. Visual Vocabulary Construction and BOVW Feature Encoding

3.1.3. SVM Classifier Training and Validation

3.2. Automatic Detection of Bubble Plume Target in Water Column Image

4. Discussion

4.1. Feature and Classifier Comparison

4.2. Detection Result Comparison

4.3. Advantage Compared with Deep Learning Methods

4.4. Other Important Issues

4.4.1. Using SURF to Detect the Target

4.4.2. Ghost Targets and Targets outside the Minimum Slant Range

4.4.3. Application on Other Multibeam Water Column Data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

1. Colbo, K.; Ross, T.; Brown, C.; Weber, T. A review of oceanographic applications of water column data from multibeam echosounders. Estuar. Coast. Shelf Sci. 2014, 145, 41–56.
2. Melvin, G.D.; Cochrane, N.A. Multibeam acoustic detection of fish and water column targets at high-flow sites. Estuaries Coasts 2015, 38, 227–240.
3. Buelens, B.; Pauly, T.; Williams, R.; Sale, A. Kernel methods for the detection and classification of fish schools in single-beam and multibeam acoustic data. ICES J. Mar. Sci. 2009, 66, 1130–1135.
4. Cox, M.J.; Warren, J.D.; Demer, D.A.; Cutter, G.R.; Brierley, A.S. Three-dimensional observations of swarms of Antarctic krill (Euphausia superba) made using a multi-beam echosounder. Deep Sea Res. Part II Top. Stud. Oceanogr. 2010, 57, 508–518.
5. Di Maida, G.; Tomasello, A.; Luzzu, F.; Scannavino, A.; Pirrotta, M.; Orestano, C.; Calvo, S. Discriminating between Posidonia oceanica meadows and sand substratum using multibeam sonar. ICES J. Mar. Sci. 2010, 68, 12–19.
6. De Falco, G.; Tonielli, R.; Di Martino, G.; Innangi, S.; Simeone, S.; Michael Parnum, I. Relationships between multibeam backscatter, sediment grain size and Posidonia oceanica seagrass distribution. Cont. Shelf Res. 2010, 30, 1941–1950.
7. Moum, J.N.; Farmer, D.M.; Smyth, W.D.; Armi, L.; Vagle, S. Structure and Generation of Turbulence at Interfaces Strained by Internal Solitary Waves Propagating Shoreward over the Continental Shelf. J. Phys. Oceanogr. 2003, 33, 2093–2112.
8. Leong, D.; Ross, T.; Lavery, A. Anisotropy in high-frequency broadband acoustic backscattering in the presence of turbulent microstructure and zooplankton. J. Acoust. Soc. Am. 2012, 132, 670–679.
9. Hughes Clarke, J.E.; Lamplugh, M.; Czotter, K. Multibeam water column imaging: Improved wreck least-depth determination. In Proceedings of the Canadian Hydrographic Conference, Halifax, NS, Canada, 6–9 June 2006.
10. Wyllie, K.; Weber, T.; Armstrong, A. Using Multibeam Echosounders for Hydrographic Surveying in the Water Column: Estimating Wreck Least Depths. In Proceedings of the US Hydrographic Conference, National Harbor, MD, USA, 16–19 March 2015.
11. Weber, T.C. Observations of clustering inside oceanic bubble clouds and the effect on short-range acoustic propagation. J. Acoust. Soc. Am. 2008, 124, 2783–2792.
12. Schneider von Deimling, J.; Brockhoff, J.; Greinert, J. Flare imaging with multibeam systems: Data processing for bubble detection at seeps. Geochem. Geophys. Geosyst. 2007, 8, Q06004.
13. Schneider von Deimling, J.; Papenberg, C. Detection of gas bubble leakage via correlation of water column multibeam images. Ocean Sci. 2012, 8, 175–181.
14. Weber, T.C.; Mayer, L.; Jerram, K.; Beaudoin, J.; Rzhanov, Y.; Lovalvo, D. Acoustic estimates of methane gas flux from the seabed in a 6000 km² region in the Northern Gulf of Mexico. Geochem. Geophys. Geosyst. 2014, 15, 1911–1925.
15. Dupre, S.; Scalabrin, C.; Grall, C.; Augustin, J.M.; Henry, P.; Şengör, A.; Görür, N.; Çağatay, M.N.; Géli, L. Tectonic and sedimentary controls on widespread gas emissions in the Sea of Marmara: Results from systematic, shipborne multibeam echo sounder water column imaging. J. Geophys. Res. Solid Earth 2015, 120, 2891–2912.
16. Innangi, S.; Bonanno, A.; Tonielli, R.; Gerlotto, F.; Innangi, M.; Mazzola, S. High resolution 3-D shapes of fish schools: A new method to use the water column backscatter from hydrographic MultiBeam Echo Sounders. Appl. Acoust. 2016, 111, 148–160.
17. Hughes Clarke, J.E. Applications of multibeam water column imaging for hydrographic survey. Hydrogr. J. 2006, 4, 3–14.
18. Hughes Clarke, J.E.; Brucker, S.; Czotter, K. Improved Definition of Wreck Superstructure using Multibeam Water Column. J. Can. Hydrogr. Assoc. 2006, 5, 1–2.
19. Marques, C.R.; Hughes Clarke, J.E. Automatic mid-water target tracking using multibeam water column. In Proceedings of the CHC 2012, The Arctic, Old Challenges New, Niagara Falls, ON, Canada, 15–17 May 2012.
20. Gardner, J.V.; Malik, M.; Walker, S. Plume 1400 meters high discovered at the seafloor off the northern California margin. Eos Trans. Am. Geophys. Union 2009, 90, 275.
21. Weber, T.C.; Mayer, L.A.; Beaudoin, J.; Jerram, K.W.; Malik, M.A.; Shedd, B.; Rice, G. Mapping Gas Seeps with the Deepwater Multibeam Echosounder on Okeanos Explorer. Oceanography 2012, 25, 55–56.
22. Sahling, H.; Römer, M.; Pape, T.; Bergès, B.; dos Santos Fereirra, C.; Boelmann, J.; Geprägs, P.; Tomczyk, M.; Nowald, N.; Dimmler, W. Gas emissions at the continental margin west of Svalbard: Mapping, sampling, and quantification. Biogeosciences 2014, 11, 6029–6046.
23. Skarke, A.; Ruppel, C.; Kodis, M.; Brothers, D.; Lobecker, E. Widespread methane leakage from the sea floor on the northern US Atlantic margin. Nat. Geosci. 2014, 7, 657–661.
24. Nakamura, K.; Kawagucci, S.; Kitada, K.; Kumagai, H.; Takai, K.; Okino, K. Water column imaging with multibeam echo-sounding in the mid-Okinawa Trough: Implications for distribution of deep-sea hydrothermal vent sites and the cause of acoustic water column anomaly. Geochem. J. 2015, 49, 579–596.
25. Philip, B.T.; Denny, A.R.; Solomon, E.A.; Kelley, D.S. Time-series measurements of bubble plume variability and water column methane distribution above Southern Hydrate Ridge, Oregon. Geochem. Geophys. Geosyst. 2016, 17, 1182–1196.
26. Nikolovska, A.; Sahling, H.; Bohrmann, G. Hydroacoustic methodology for detection, localization, and quantification of gas bubbles rising from the seafloor at gas seeps from the eastern Black Sea. Geochem. Geophys. Geosyst. 2008, 9, Q10010.
27. Dobeck, G.; Hyland, J.; Smedley, L.D. Automated detection and classification of sea mines in sonar imagery. In Proceedings of the AeroSense ’97, Orlando, FL, USA, 22 July 1997.
28. Tang, X.; Stewart, W.K. Optical and Sonar Image Classification: Wavelet Packet Transform vs Fourier Transform. Comput. Vis. Image Underst. 2000, 79, 25–46.
29. Reed, S.; Ruiz, I.T.; Capus, C.; Petillot, Y. The fusion of large scale classified side-scan sonar image mosaics. IEEE Trans. Image Process. 2006, 15, 2049–2060.
30. Rhinelander, J. Feature extraction and target classification of side-scan sonar images. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–6.
31. Song, Y.; He, B.; Zhao, Y.; Li, G.; Sha, Q.; Shen, Y.; Yan, T.; Nian, R.; Lendasse, A. Segmentation of Sidescan Sonar Imagery Using Markov Random Fields and Extreme Learning Machine. IEEE J. Ocean. Eng. 2019, 44, 502–513.
32. Wang, X.; Zhao, J.; Zhu, B.; Jiang, T.; Qin, T. A Side Scan Sonar Image Target Detection Algorithm Based on a Neutrosophic Set and Diffusion Maps. Remote Sens. 2018, 10, 295.
33. Zhao, J.; Mai, D.; Zhang, H.; Wang, S. Automatic Detection and Segmentation on Gas Plumes from Multibeam Water Column Images. Remote Sens. 2020, 12, 3085.
34. Williams, D.P.; Dugelay, S. Multi-view SAS image classification using deep learning. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–9.
35. Zhu, P.; Isaacs, J.; Fu, B.; Ferrari, S. Deep learning feature extraction for target recognition and classification in underwater sonar images. In Proceedings of the 2017 IEEE 56th Annual Conference on Decision and Control (CDC), Melbourne, VIC, Australia, 12–15 December 2017; pp. 2724–2731.
36. Lee, S. Deep learning of submerged body images from 2D sonar sensor based on convolutional neural network. In Proceedings of the 2017 IEEE Underwater Technology (UT), Busan, Korea, 21–24 February 2017; pp. 1–3.
37. Ribeiro, P.O.C.S.; dos Santos, M.M.; Drews, P.L.J.; Botelho, S.S.C. Forward Looking Sonar Scene Matching Using Deep Learning. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 574–579.
38. Valdenegro-Toro, M. Improving sonar image patch matching via deep learning. In Proceedings of the 2017 European Conference on Mobile Robots (ECMR), Paris, France, 6–8 September 2017; pp. 1–6.
39. Li, C.; Ye, X.; Cao, D.; Hou, J.; Yang, H. Zero shot objects classification method of side scan sonar image based on synthesis of pseudo samples. Appl. Acoust. 2021, 173, 107691.
40. Liu, D.; Wang, Y.; Ji, Y.; Tsuchiya, H.; Yamashita, A.; Asama, H. CycleGAN-based realistic image dataset generation for forward-looking sonar. Adv. Robot. 2021, 35, 242–254.
41. Huang, C.; Zhao, J.; Yu, Y.; Zhang, H. Comprehensive Sample Augmentation by Fully Considering SSS Imaging Mechanism and Environment for Shipwreck Detection Under Zero Real Samples. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.
42. Specht, M.; Stateczny, A.; Specht, C.; Widźgowski, S.; Lewicka, O.; Wiśniewska, M. Concept of an Innovative Autonomous Unmanned System for Bathymetric Monitoring of Shallow Waterbodies (INNOBAT System). Energies 2021, 14, 5370.
43. Lewicka, O.; Specht, M.; Stateczny, A.; Specht, C.; Brčić, D.; Jugović, A.; Widźgowski, S.; Wiśniewska, M. Analysis of GNSS, Hydroacoustic and Optoelectronic Data Integration Methods Used in Hydrography. Sensors 2021, 21, 7831.
44. Kesorn, K.; Poslad, S. An Enhanced Bag-of-Visual Word Vector Space Model to Represent Visual Content in Athletics Images. IEEE Trans. Multimed. 2012, 14, 211–222.
45. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.
46. Zhao, J.; Meng, J.; Zhang, H.; Yan, J. A New Method for Acquisition of High-Resolution Seabed Topography by Matching Seabed Classification Images. Remote Sens. 2017, 9, 1214.
47. NOAA. NOAA Office of Ocean Exploration and Research: Water Column Sonar Data Collection (EX1402L3, EM302); National Centers for Environmental Information, NOAA: Asheville, NC, USA, 2014.

| Predicting Results | Truth: True | Truth: False |
|---|---|---|
| Positive | True Positive (TP) | False Positive (FP) |
| Negative | True Negative (TN) | False Negative (FN) |

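| Accuracy Assessment | Computational Formula | Equation |
|---|---|---|
| Accuracy | $\mathrm{Accuracy} = \dfrac{TP + TN}{TP + TN + FP + FN}$ | (10) |
| Precision ratio | $\mathrm{Precision} = \dfrac{TP}{TP + FP}$ | (11) |
| Recall ratio | $\mathrm{Recall} = \dfrac{TP}{TP + FN}$ | (12) |
| Harmonic mean | $F_1 = \dfrac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | (13) |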
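The four measures in the table above follow the usual confusion-matrix definitions and can be computed with a short helper. This is a minimal sketch: the function names and the counts in the example are hypothetical and are not results from this study.

```python
def safe_div(numerator, denominator):
    """Return 0.0 instead of failing when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision ratio, recall ratio and harmonic mean (F1)."""
    accuracy = safe_div(tp + tn, tp + fp + tn + fn)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return accuracy, precision, recall, f1


# Hypothetical counts, for illustration only.
accuracy, precision, recall, f1 = classification_metrics(tp=90, fp=5, tn=95, fn=10)
print(round(accuracy, 4), round(precision, 4), round(recall, 4), round(f1, 4))
```

Because the harmonic mean is dominated by the smaller of precision and recall, a method with very high precision but low recall still receives a modest F1 value.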

| SURF Point Extraction Method | Word Number of Visual Vocabulary | SURF Point Number | Strongest Point Number | Point Number for Each Category | Training Accuracy | Validation Accuracy |
|---|---|---|---|---|---|---|
| Grid [8 × 8] | 200 | 4,014,080 | 3,211,060 | 1,605,530 | 0.62 | 0.60 |
| | 300 | | | | 0.84 | 0.83 |
| | 400 | | | | 0.86 | 0.83 |
| | 500 | | | | 0.71 | 0.69 |
| Grid [16 × 16] | 200 | 1,003,584 | 802,816 | 401,408 | 0.74 | 0.73 |
| | 300 | | | | 0.81 | 0.76 |
| | 400 | | | | 0.74 | 0.72 |
| | 500 | | | | 0.79 | 0.77 |
| Grid [32 × 32] | 100 | 250,880 | 200,704 | 100,352 | 1.00 | 0.90 |
| | 200 | | | | 1.00 | 0.93 |
| | 300 | | | | 1.00 | 0.95 |
| | 400 | | | | 1.00 | 0.94 |
| | 500 | | | | 1.00 | 0.94 |
| Detector | 200 | 419,928 | 331,000 | 165,500 | 0.86 | 0.79 |
| | 300 | | | | 0.47 | 0.46 |
| | 400 | | | | 0.96 | 0.89 |
| | 500 | | | | 0.92 | 0.80 |

| Classifier | Validation Accuracy (%) | Prediction Speed (observations/s) | Training Time (s) |
|---|---|---|---|
| Medium Tree | 90.3 | 750 | 8.15 |
| Linear Discriminant | 92.5 | 850 | 10.36 |
| Logistic Regression | 72.1 | 550 | 20.63 |
| Linear SVM | 98.3 | 860 | 10.16 |
| Quadratic SVM | 98.6 | 810 | 10.01 |
| Cubic SVM | 98.4 | 800 | 10.56 |
| Medium Gaussian SVM | 97.9 | 1200 | 11.07 |
| Medium KNN | 95.2 | 950 | 12.61 |
| Bagged Trees Ensemble | 96.9 | 600 | 19.65 |

| | Test Image | BOVW Feature | Prediction Score | Prediction Class | Manual Label |
|---|---|---|---|---|---|
| (a) | | | (0, −2.8254) | Bubble plume | Bubble plume |
| (b) | | | (0, −1.4815) | Bubble plume | Bubble plume |
| (c) | | | (0, −2.6271) | Bubble plume | Bubble plume |
| (d) | | | (0, −1.2373) | Bubble plume | Bubble plume |
| (e) | | | (−1.4502, 0) | Noise | Noise |
| (f) | | | (−1.3467, 0) | Noise | Noise |
| (g) | | | (−1.2134, −0) | Noise | Noise |
| (h) | | | (−0.9665, −0.0335) | Noise | Noise |
| Overall | Confusion Matrix | | Validation Accuracy | 0.98 | |

| Feature Extraction Method | Feature Number | Classifier | Accuracy (%) | Precision Ratio (%) | Recall Ratio (%) | F1 (%) |
|---|---|---|---|---|---|---|
| GLCM (d = 1) | 12 | Linear SVM | 77.59 | 75.32 | 82.07 | 78.55 |
| GLCM (d = 5) | 12 | Medium Tree | 71.03 | 67.84 | 80.00 | 73.42 |
| GLCM (d = 10) | 12 | Cosine KNN | 70.69 | 67.65 | 79.31 | 73.02 |
| GLCM (d = 1&5) | 24 | Logistic Regression | 82.41 | 81.76 | 83.45 | 82.59 |
| Tamura | 3 | Medium Tree | 79.31 | 81.48 | 75.86 | 78.57 |
| Tamura | 6 | Fine Tree | 84.48 | 84.25 | 84.83 | 84.54 |
| LBP (64 × 64) | 59 | Medium Gaussian SVM | 90.00 | 94.62 | 84.82 | 89.45 |
| LBP (64 × 64) | 10 | Medium Gaussian SVM | 79.31 | 78.15 | 81.38 | 79.73 |
| LBP (32 × 32) | 236 | Cubic SVM | 94.14 | 94.44 | 93.79 | 94.12 |
| HOG (32 × 32) | 36 | Weighted KNN | 82.76 | 82.31 | 83.45 | 82.88 |
| HOG (16 × 16) | 324 | Quadratic SVM | 89.66 | 89.66 | 89.65 | 89.65 |
| HOG (8 × 8) | 1764 | Linear SVM | 76.55 | 74.21 | 81.38 | 77.63 |
| GLCM + Tamura | 30 | Quadratic SVM | 91.72 | 90.60 | 93.10 | 91.84 |
| GLCM + LBP | 83 | Quadratic SVM | 91.38 | 90.54 | 92.41 | 91.47 |
| GLCM + HOG | 60 | Quadratic SVM | 90.34 | 87.74 | 93.79 | 90.67 |
| Tamura + LBP | 65 | Quadratic SVM | 93.10 | 92.52 | 93.79 | 93.15 |
| Tamura + HOG | 42 | Quadratic SVM | 90.34 | 90.91 | 89.66 | 90.28 |
| LBP + HOG | 95 | Quadratic SVM | 90.34 | 89.80 | 91.03 | 90.41 |
| GLCM + Tamura + LBP + HOG | 125 | Quadratic SVM | 95.17 | 95.80 | 94.48 | 95.14 |
| Haar-LBP [33] | - | AdaBoost | 95.80 | 99.35 | 82.70 | 90.26 |
| BOVW | 300 | Quadratic SVM | 98.62 | 99.30 | 97.93 | 98.61 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Meng, J.; Yan, J.; Zhao, J. Bubble Plume Target Detection Method of Multibeam Water Column Images Based on Bags of Visual Word Features. Remote Sens. 2022, 14, 3296. https://doi.org/10.3390/rs14143296
