1. Introduction

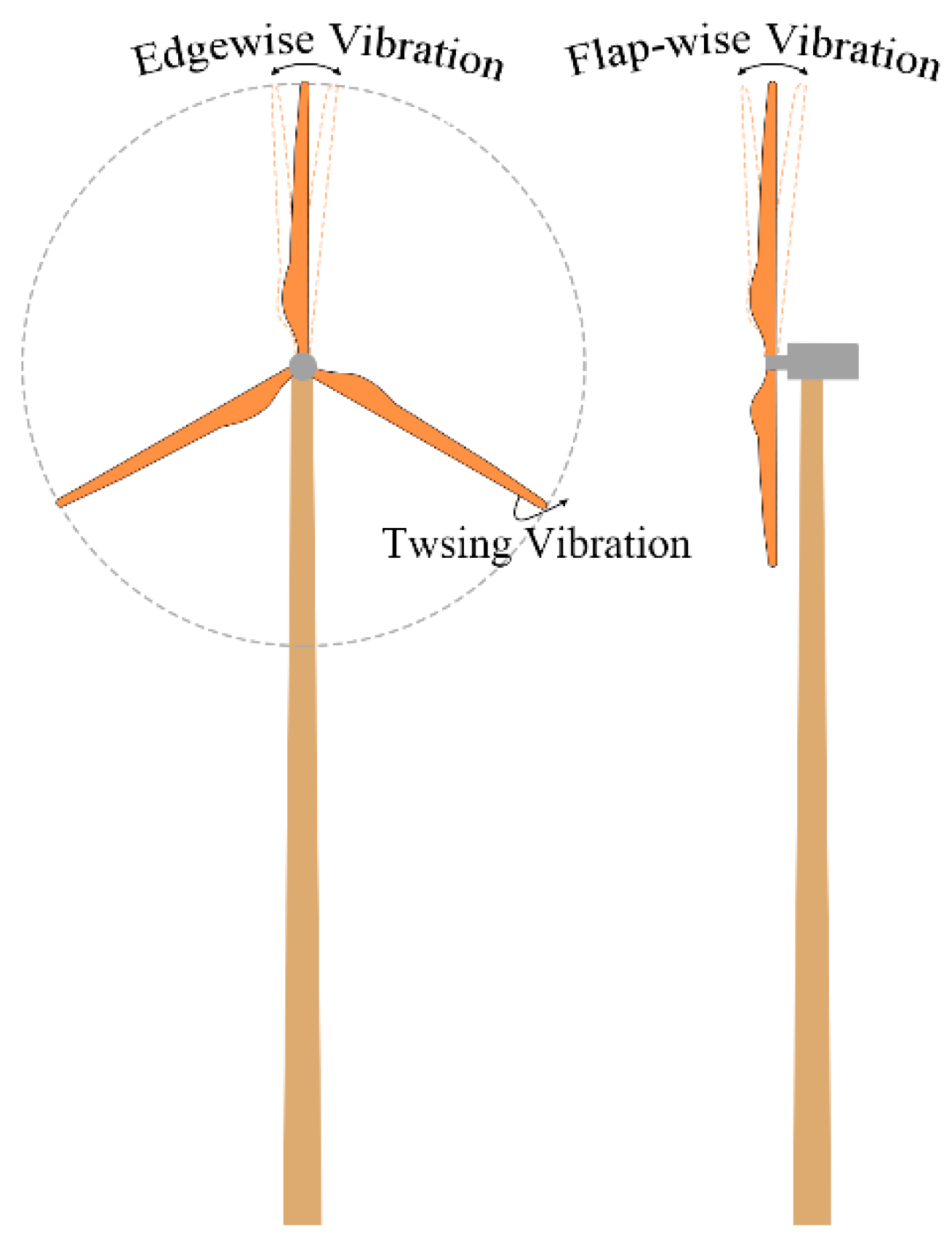

With the world’s increasing consumption and utilization of energy, the development of renewable energy has gradually gained attention as a way to lessen the adverse global impacts of non-renewable energy. Among the many renewable energy sources that meet current sustainable development goals and future international strategies, wind energy has spread widely because it is clean, low-cost and has little impact on the environment [1]. As one of the main ways of converting wind energy into electricity, wind power generation has been widely adopted. The blade is a key component in the power conversion of a wind turbine, and its condition directly affects the turbine’s performance and power quality. Once a blade is damaged, it can cause not only accidents but also serious economic losses [2,3,4]. Therefore, accurate monitoring of blades for health assessment is of great significance to the operation, maintenance and safety of wind turbine structures.

Traditional wind turbine blade monitoring uses high-power telescope inspection, acoustic emission monitoring [5], ultrasonic monitoring [6], thermal imaging monitoring [7], etc. However, all of these methods are limited by human experience, equipment accuracy and environmental influences, so their performance in actual blade monitoring is unsatisfactory. In civil engineering, contact sensors are usually used to monitor structures, but installing such sensors on structures, especially large ones, requires substantial logistical support: laying cables in advance, planning sensor locations, installing the sensors, maintaining data transmission and other tasks, all of which increase the monitoring cost. In addition, contact sensors installed on a structure inevitably alter its dynamic characteristics, and the monitoring data depend strictly on the number of sensors [8]. Contact sensing is therefore unsuitable for large-scale structural health monitoring that would require extensive wiring. With the development of science and technology, wireless sensors have emerged in structural health monitoring [9]. However, owing to drawbacks such as high cost, time-consuming installation and asynchronous data reception, they cannot be widely used for wind turbine blade monitoring. Most wind farms are located in remote areas such as coasts, mountains and deserts [2], and the blades are high above the ground. Under severe natural conditions such as thunderstorms, storms and salt fog, traditional monitoring equipment has a low survival rate and high maintenance cost. There is therefore an urgent need for a low-cost, easily installed and highly reliable method for monitoring large-scale wind turbine blades.

In recent years, with the rapid development of computer and image processing technology, computer vision has stood out in structural health monitoring because it is low-cost, non-contact and non-destructive. Many scholars have applied computer vision to structural health monitoring in civil engineering [10,11,12]. Yang et al. [13] proposed a video-based method to identify micro full-field deformation modes and dominant rigid-body motions, but it was affected by illumination and obstacles. Dong et al. [14] proposed a method for measuring velocity and displacement based on feature fixed matching and tested it on a footbridge, but its accuracy was strongly affected by illumination. Zhao et al. [15] developed a color-based bridge displacement monitoring app, which was sensitive to light and did not consider complex backgrounds, making it unsuitable for long-term monitoring. None of these methods can cope with illumination changes or accommodate complex backgrounds, both of which are very common in actual wind turbine blade monitoring. Therefore, these vision algorithms cannot solve the problem discussed above.

Although computer vision is booming in civil engineering structural health monitoring, most studies use only one or a few cameras at fixed positions [16,17]. Data obtained with a fixed camera depend heavily on image quality, and a fixed camera cannot capture a comprehensive view of a large structure. Image distortion caused by zooming and by atmospheric effects, together with the inability to place the camera at the most favorable position for the required monitoring points, makes it difficult to monitor the dynamic characteristics of large structures with cameras [18]. Owing to their flexibility and convenience, UAVs have become popular in vision-based structural health monitoring, and more and more researchers are studying UAV-based visual monitoring. Bai et al. [19] used normalized cross-correlation (NCC) template matching to monitor structural displacement, and used background points to obtain the UAV’s own vibration for displacement compensation. Zhao et al. [20] proposed a UAV-based dam health monitoring model in which damage is artificially created on the dam and then measured from the point cloud. Perry et al. [21] proposed a new remote sensing technique for the dynamic displacement of three-dimensional structures that integrates optical and infrared UAV platforms to measure the dynamic structural response. Wu et al. [22] extracted blurred images from videos of rotating wind turbine blades and used generative adversarial networks to augment the blade data for defect detection, but dynamic characteristics were not tested. The above methods rely on targets to enhance features so that structural displacement can be identified. Although many scholars use target-free methods for structural health monitoring [23,24], these still rely on inherent patterns or nuts on the structure’s surface for identification. Large-scale wind turbine blades have no obvious surface textures or patterns that distinguish them from the rest of the structure, and it is impossible to attach targets to the blades. A non-contact, target-free method is therefore needed for the visual monitoring of large-scale blades.

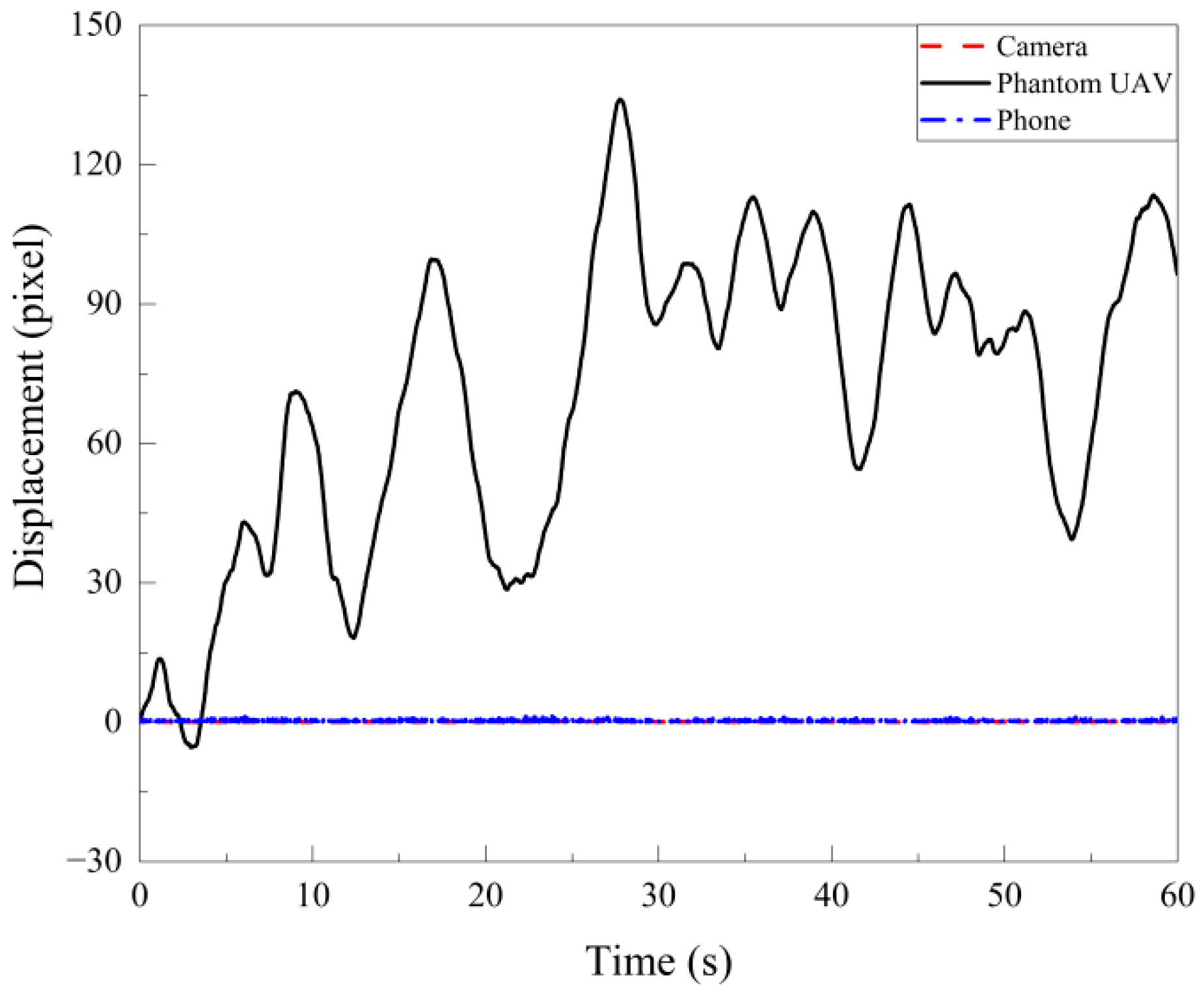

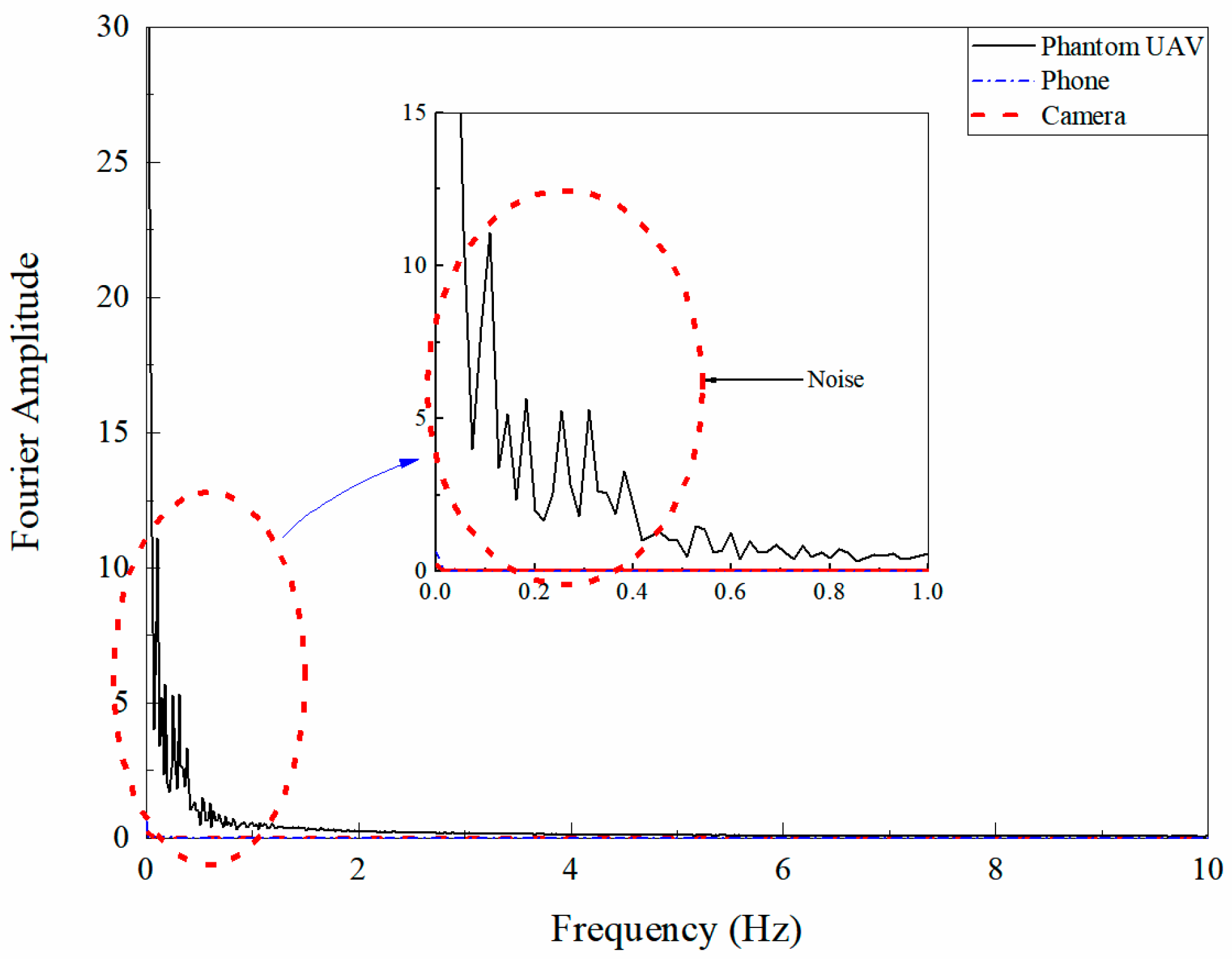

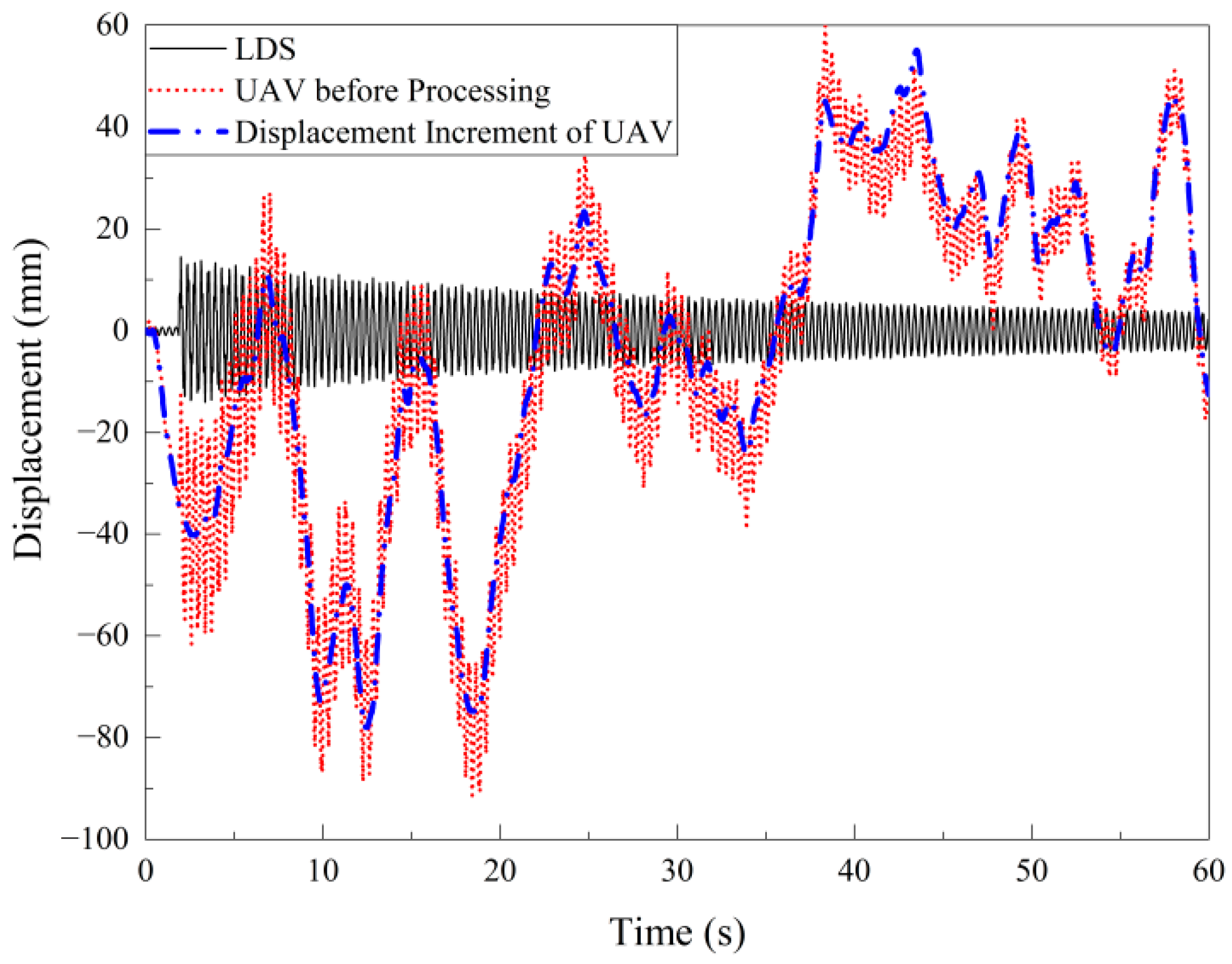

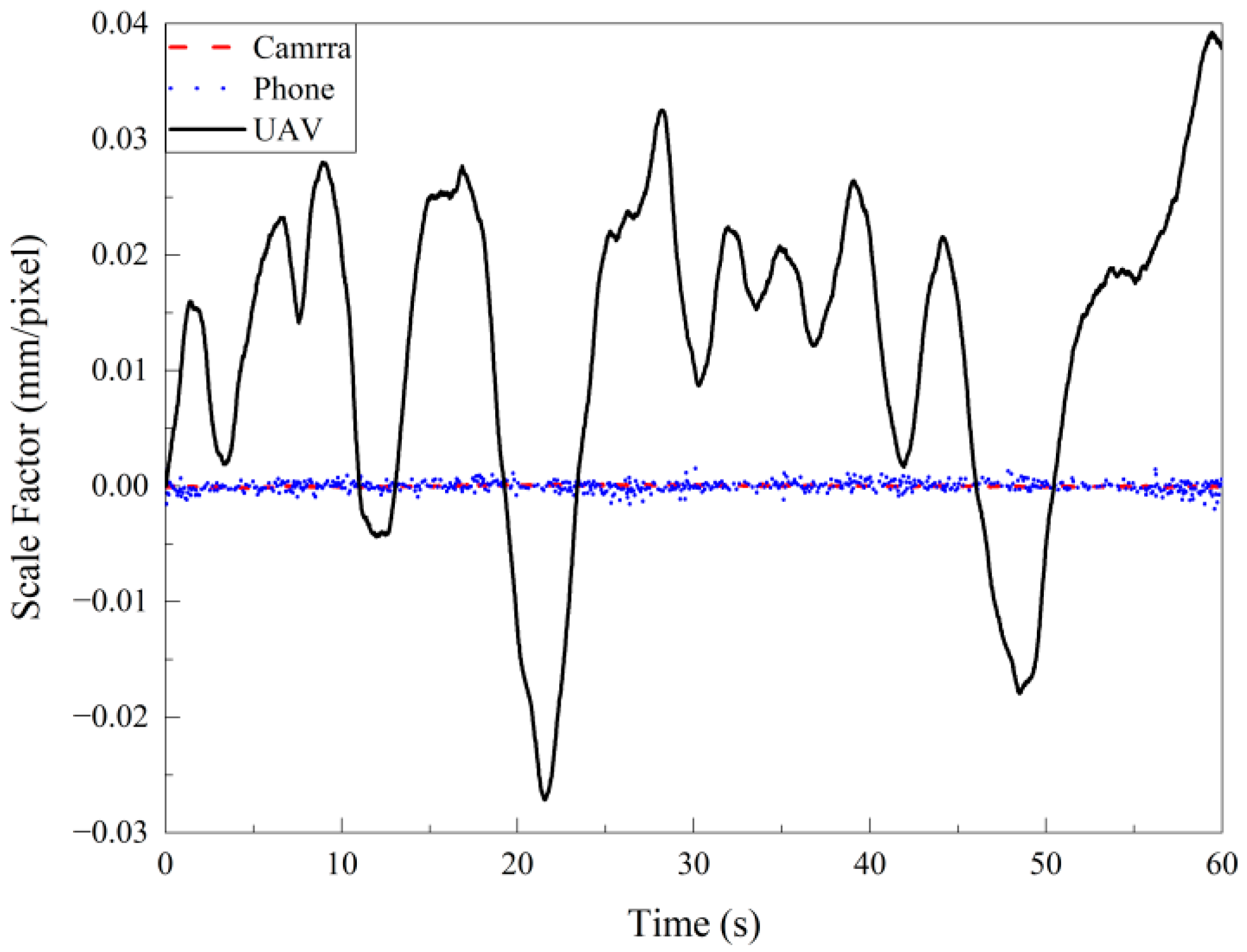

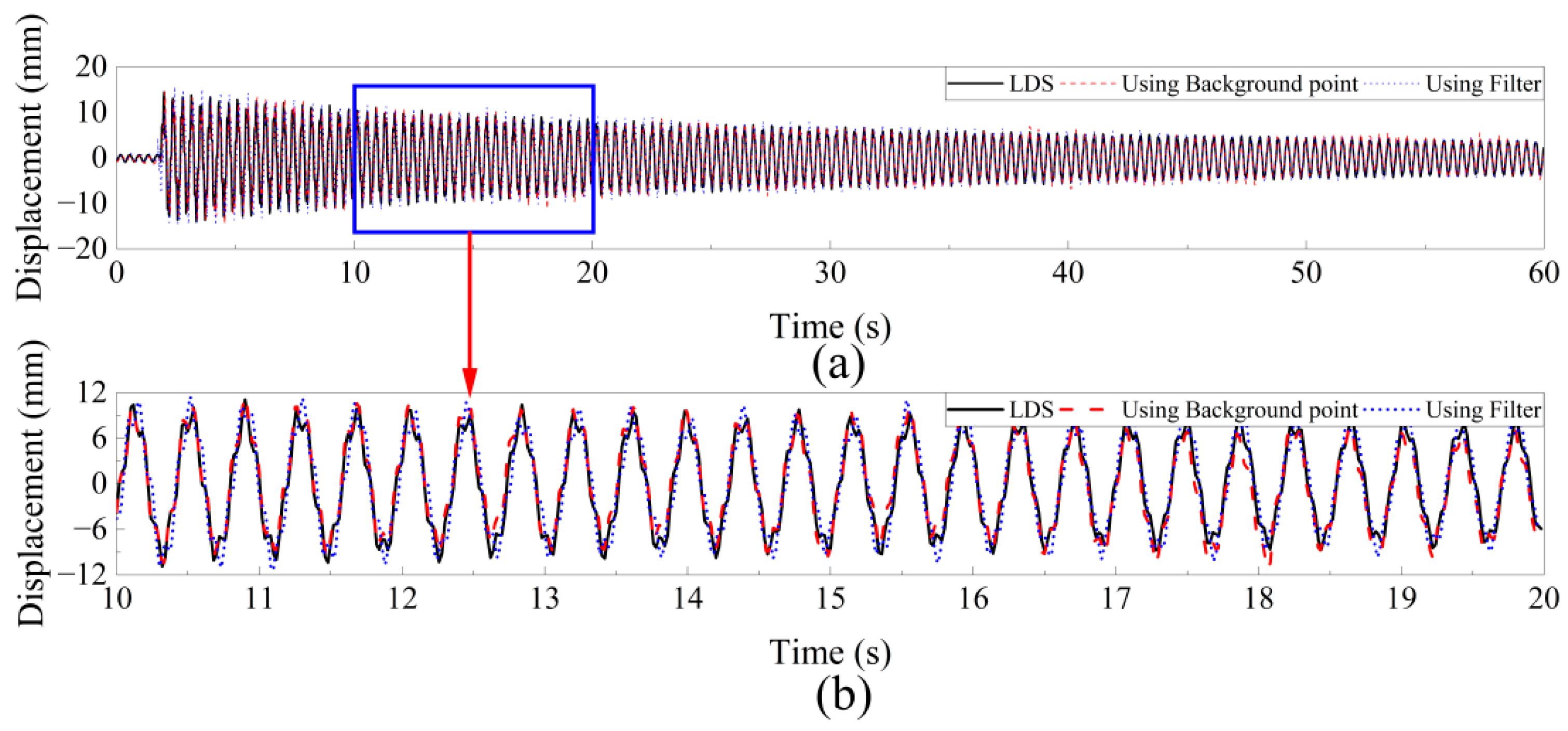

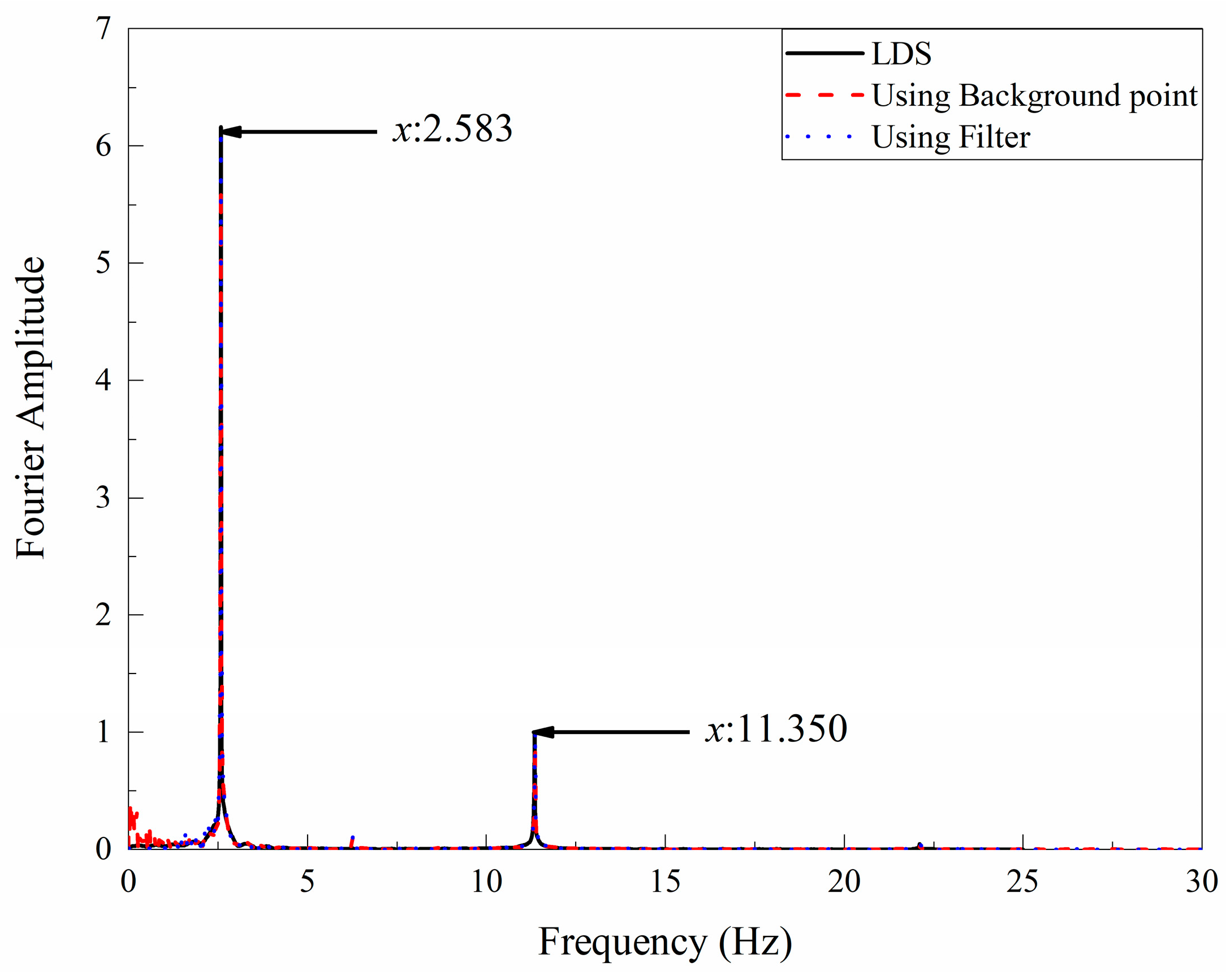

This paper focuses on monitoring the dynamic characteristics of large wind turbine blades using vision algorithms and a UAV. The first section summarizes the disadvantages of traditional monitoring methods and the limitations of existing vision-based methods for monitoring large wind turbine blades, and proposes a structural health monitoring method for large wind turbine blades based on a target-free DSST vision algorithm and a UAV. The second section studies the spatial displacement drift caused by UAV hovering during monitoring: a background fixed point combined with high-pass filtering is proposed to eliminate the in-plane drift, and an adaptive scale factor is used to eliminate the out-of-plane drift. The drift of the hovering UAV is thereby compensated, and the compensation is verified experimentally. In Section 3, machine learning is used to train the position filter and scale filter of the DSST algorithm, and a target-free DSST visual monitoring method is proposed. In Section 4, experiments that reproduce the illumination changes and complex backgrounds of actual engineering conditions verify the robustness of the target-free DSST algorithm. In Section 5, vibration videos of wind turbine blades, combined with tracking of the blade corners and edges, are used to identify the dynamic displacement of the blades without additional targets; the dynamic response of each monitoring point obtained by vision is evaluated and compared with the results of traditional monitoring methods. Section 6 summarizes the main conclusions of this work.

3. Monitoring Principle Based on Target-Free DSST Vision Algorithm

Images encode the information within the field of view remotely and without contact, providing data for structural health monitoring and potentially overcoming the problems of monitoring with contact sensors. A video is a sequence of images ordered in time; examining the image features frame by frame yields the data used for structural health monitoring.

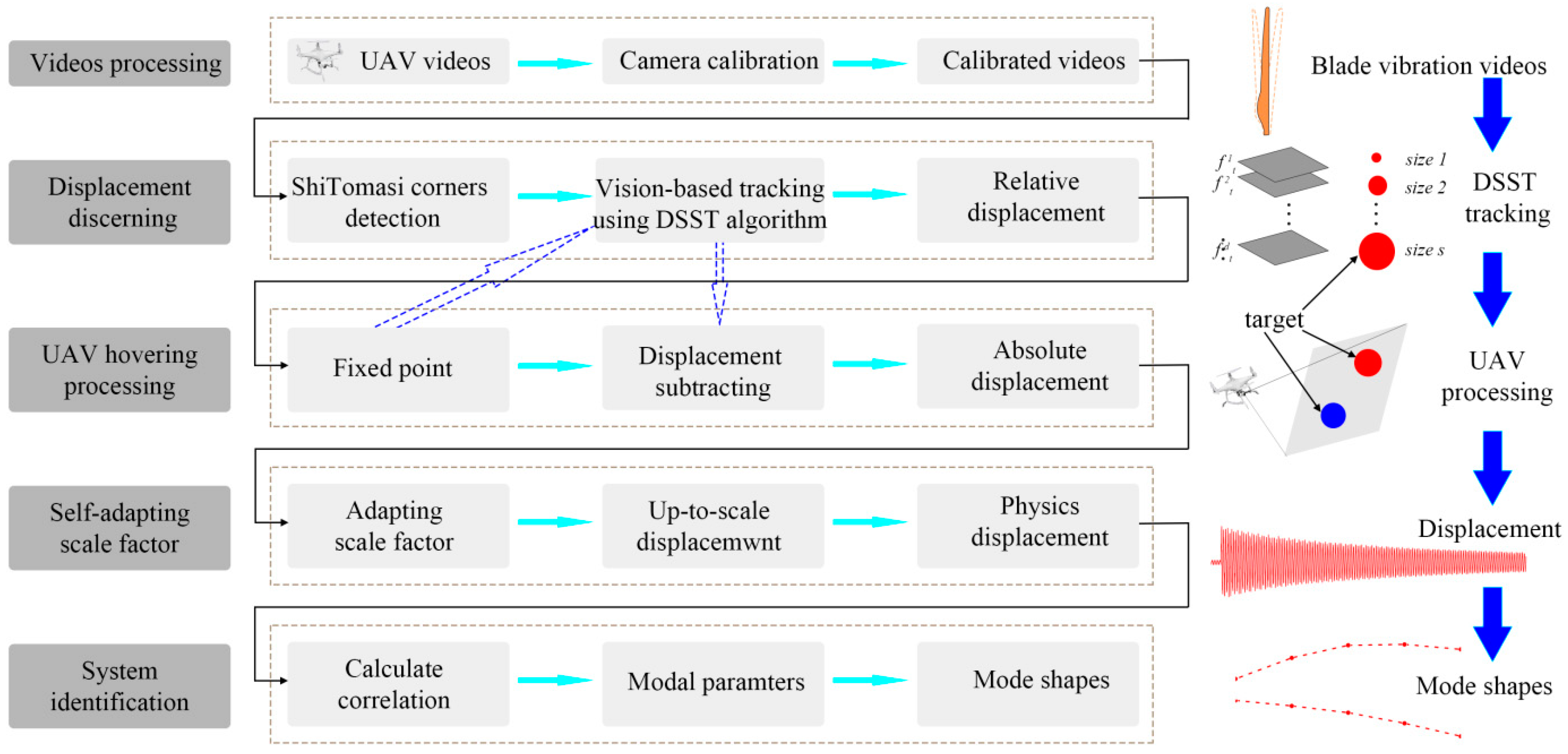

Large-scale structural health monitoring with a UAV carrying a vision algorithm can be divided into five steps: camera calibration, target tracking, UAV hover processing, adaptive scale factor acquisition and system identification, as shown in Figure 11. The displacement drift caused by UAV hovering was addressed in the previous section and is not repeated here.

3.1. Camera Calibration

Camera calibration photographs a calibration board with the camera, determines the camera’s intrinsic and extrinsic parameters from the known feature points of the board, and then converts image coordinates into physical coordinates through the scale factor. The general equation relating world coordinates and image coordinates is:

$$
s\begin{bmatrix}u\\ v\\ 1\end{bmatrix}=\begin{bmatrix}f_x & \gamma & c_x\\ 0 & f_y & c_y\\ 0 & 0 & 1\end{bmatrix}\begin{bmatrix}r_{11} & r_{12} & r_{13} & t_1\\ r_{21} & r_{22} & r_{23} & t_2\\ r_{31} & r_{32} & r_{33} & t_3\end{bmatrix}\begin{bmatrix}X\\ Y\\ Z\\ 1\end{bmatrix}
$$

The simplified expression is:

$$
s\,\mathbf{x}=K\left[R\mid t\right]\mathbf{X}
$$

where $s$ is the scale factor; $\mathbf{x}=[u,v,1]^{T}$ is the image coordinate; $K$ is the camera intrinsic matrix, representing the projection from the three-dimensional real world to the two-dimensional image; and $\mathbf{X}=[X,Y,Z,1]^{T}$ is the world coordinate. In the intrinsic parameters, $f_x$ and $f_y$ are the focal lengths of the camera in the horizontal and vertical directions; $c_x$ and $c_y$ are the offsets of the optical axis in the horizontal and vertical directions; and $\gamma$ is the tilt (skew) factor. $R$ and $t$ are the camera extrinsic parameters, representing the rigid rotation and translation from 3D real-world coordinates to 3D camera coordinates; $r_{ij}$ and $t_i$ are the elements of $R$ and $t$, respectively.
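A minimal numerical sketch of the projection above follows, assuming NumPy and made-up intrinsic and extrinsic values (they are not the UAV camera’s actual calibration). It also illustrates one common reading of the scale factor for displacement conversion: on a plane parallel to the sensor at depth $Z$, one pixel spans roughly $Z/f$ in physical units. All function names are hypothetical.

```python
import numpy as np


def intrinsic_matrix(fx, fy, cx, cy, gamma=0.0):
    """Intrinsic matrix K: focal lengths (px), principal point (px), skew factor."""
    return np.array([[fx, gamma, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])


def project(K, R, t, X_world):
    """Pinhole projection s*[u, v, 1]^T = K [R | t] [X, Y, Z, 1]^T.

    Returns the pixel coordinates (u, v) and the scale factor s.
    """
    X_cam = R @ np.asarray(X_world, dtype=float) + t  # world frame -> camera frame
    uvw = K @ X_cam                                   # homogeneous image point
    s = uvw[2]                                        # depth along the optical axis
    return uvw[:2] / s, s


def mm_per_pixel(depth_m, focal_px):
    """Physical size (mm) of one pixel on a plane parallel to the sensor."""
    return 1000.0 * depth_m / focal_px


# Hypothetical set-up: camera axis along world Z, target plane 10 m away.
K = intrinsic_matrix(fx=2000.0, fy=2000.0, cx=960.0, cy=540.0)
R = np.eye(3)
t = np.array([0.0, 0.0, 10.0])
uv, s = project(K, R, t, [0.05, 0.0, 0.0])  # 50 mm lateral offset
print(uv, s, mm_per_pixel(10.0, 2000.0))    # [970. 540.] 10.0 5.0
```

Here a 50 mm offset moves the image point by 10 px, consistent with 5 mm per pixel at this distance; in the UAV setting the depth, and hence this factor, changes as the drone drifts.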

In this paper, the calibration method of [26] is used to calibrate the UAV lens, and the video is corrected using the camera’s intrinsic parameters and its tangential and radial distortion coefficients. Correcting image distortion through camera calibration effectively eliminates distortion and yields more accurate displacement measurements.
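The radial and tangential corrections can be illustrated with the widely used radial-tangential (Brown–Conrady) model, which is, for example, the model used by OpenCV’s calibration routines. Whether [26] uses exactly this parameterization is an assumption here, and the coefficient values are made up. `distort` maps ideal normalized coordinates $x=(u-c_x)/f_x$, $y=(v-c_y)/f_y$ (skew ignored) to distorted ones, and `undistort` inverts the mapping by fixed-point iteration, which converges for mild distortion.

```python
def distort(x, y, k1, k2, p1, p2, k3=0.0):
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion to
    normalized image coordinates x = (u - cx) / fx, y = (v - cy) / fy."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort(xd, yd, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Invert distort() by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


# Hypothetical coefficients for a mildly barrel-distorted lens.
coeffs = dict(k1=-0.1, k2=0.01, p1=0.001, p2=-0.0005)
xd, yd = distort(0.3, -0.2, **coeffs)
x, y = undistort(xd, yd, **coeffs)  # recovers (0.3, -0.2) to high precision
```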

3.2. Target Tracking with Target-Free DSST Vision Algorithm

Vision-based structural health monitoring in civil engineering mostly uses digital image correlation (DIC) [27], template matching [28], color matching [29], the optical flow method [30] and other algorithms for target tracking. These algorithms are restricted to conditions with artificial markers attached to the structure, constant illumination and a simple background; these limitations must be overcome before actual engineering structures can be monitored accurately under real field conditions.

In 2014, Danelljan et al. [31] extended the MOSSE correlation filter tracker and proposed a robust scale estimation method within the tracking-by-detection framework, namely the DSST (Discriminative Scale Space Tracker) algorithm. DSST learns separate filters for translation and scale estimation: the position filter locates the target, and the scale filter estimates its size. Compared with exhaustive scale-space search, the algorithm improves accuracy, and its running speed can reach 25 times the frame rate, which makes it effective for fast structural health monitoring.

First, a discriminative correlation filter is trained to locate the target in a new frame. The filter is trained on several grayscale image patches of the target, $f_1,\dots,f_t$, each labelled with a desired correlation output $g_1,\dots,g_t$. The optimal correlation filter $h_t$ is obtained by minimizing the sum of squared errors:

$$
\varepsilon=\sum_{j=1}^{t}\left\|h_t\star f_j-g_j\right\|^2=\frac{1}{MN}\sum_{j=1}^{t}\left\|\bar{H}_tF_j-G_j\right\|^2
$$

where the functions $f_j$, $g_j$ and $h_t$ are of size $M\times N$. The star $\star$ denotes circular correlation, and the second equality follows from Parseval’s theorem. Capital letters denote the discrete Fourier transforms (DFTs) of the corresponding functions, and the overline in $\bar{H}_t$ denotes complex conjugation. Minimizing the above equation gives:

$$
H_t=\frac{\sum_{j=1}^{t}\bar{G}_jF_j}{\sum_{j=1}^{t}\bar{F}_jF_j}
$$

In practice, the numerator $A_t$ and denominator $B_t$ of $H_t$ are stored separately and updated iteratively.

For an image patch $z$ of size $M\times N$ in a new frame, the response score $y$ is calculated as:

$$
y=\mathcal{F}^{-1}\left\{\bar{H}_tZ\right\}
$$

where $\mathcal{F}^{-1}$ is the inverse DFT operator. The location of the largest value in $y$ is taken as the estimate of the new position of the target.
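The single-channel filter can be sketched with NumPy FFTs as below. This is an illustrative sketch, not the authors’ implementation: a random array stands in for a grayscale target patch, the desired output $g$ is assumed to be a centered Gaussian, and preprocessing used by practical trackers (such as windowing) is omitted. The small `eps` is an added guard against division by zero and is not part of the equation.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Desired correlation output g: a Gaussian peak at the patch center."""
    M, N = shape
    rows, cols = np.mgrid[0:M, 0:N]
    return np.exp(-((rows - M // 2) ** 2 + (cols - N // 2) ** 2) / (2.0 * sigma ** 2))


def train_filter(patches, label):
    """Numerator and denominator of H_t = sum_j conj(G_j) F_j / sum_j conj(F_j) F_j.

    The same label g is reused for every training patch f_j.
    """
    G = np.fft.fft2(label)
    num = np.zeros(label.shape, dtype=complex)
    den = np.zeros(label.shape)
    for f in patches:
        F = np.fft.fft2(f)
        num += np.conj(G) * F
        den += (np.conj(F) * F).real
    return num, den


def detect(num, den, z, eps=1e-5):
    """Response y = IDFT(conj(H_t) Z); returns the (row, col) of its maximum."""
    H = num / (den + eps)  # eps only guards against division by zero
    y = np.real(np.fft.ifft2(np.conj(H) * np.fft.fft2(z)))
    return np.unravel_index(np.argmax(y), y.shape), y


rng = np.random.default_rng(0)
f = rng.standard_normal((32, 32))           # stand-in for a grayscale target patch
num, den = train_filter([f], gaussian_label(f.shape))
z = np.roll(f, shift=(3, -2), axis=(0, 1))  # same patch, circularly shifted
peak, _ = detect(num, den, z)
# peak is (19, 14): the label center (16, 16) moved by the shift (3, -2)
```

Because the response reproduces the Gaussian label shifted by the target motion, the displacement between frames is read directly from the peak location.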

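Considering the multi-dimensional features of the image, let $f$ be a feature map with $d$ dimensions, where $f^l$ is its $l$-th dimension and $l$ ranges from 1 to $d$. The loss function to be minimized is:

$$
\varepsilon=\left\|\sum_{l=1}^{d}h^l\star f^l-g\right\|^2+\lambda\sum_{l=1}^{d}\left\|h^l\right\|^2
$$

where $\lambda\ge 0$ weights the regularization term. Considering only one training sample, the solution $H^l$ is obtained as:

$$
H^l=\frac{\bar{G}F^l}{\sum_{k=1}^{d}\bar{F}^kF^k+\lambda}
$$

Similarly, $H^l$ is split into a numerator $A_t^l$ and a denominator $B_t$, which are updated iteratively as follows:

$$
A_t^l=(1-\eta)A_{t-1}^l+\eta\,\bar{G}_tF_t^l
$$

$$
B_t=(1-\eta)B_{t-1}+\eta\sum_{k=1}^{d}\bar{F}_t^kF_t^k
$$

where $\eta$ is the learning rate. For a patch $Z$ in a new frame, the response score $y$ is calculated as:

$$
y=\mathcal{F}^{-1}\left\{\frac{\sum_{l=1}^{d}\bar{A}^lZ^l}{B+\lambda}\right\}
$$

where the location of the maximum of $y$ is taken as the estimate of the target’s new position.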
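The multi-channel update and detection can be sketched as a small class. This is a hedged illustration: random arrays stand in for the $d$ feature channels (the actual features of the proposed method are not specified here), and `lam=0.01` and `eta=0.025` are illustrative assumptions rather than the paper’s settings. The class name is hypothetical.

```python
import numpy as np


class MultiChannelFilter:
    """Sketch of a d-channel correlation filter with running-average updates."""

    def __init__(self, label, lam=0.01, eta=0.025):
        self.G = np.fft.fft2(label)  # DFT of the desired output g
        self.lam = lam               # regularization weight (lambda)
        self.eta = eta               # learning rate (eta)
        self.A = None                # numerators A^l, shape (d, M, N)
        self.B = None                # shared denominator B, shape (M, N)

    def update(self, features):
        """features: array of shape (d, M, N), one map f^l per channel."""
        F = np.fft.fft2(features, axes=(-2, -1))
        A_new = np.conj(self.G)[None, :, :] * F
        B_new = np.sum(np.conj(F) * F, axis=0).real
        if self.A is None:  # first frame: initialize directly
            self.A, self.B = A_new, B_new
        else:               # later frames: weighted average with rate eta
            self.A = (1.0 - self.eta) * self.A + self.eta * A_new
            self.B = (1.0 - self.eta) * self.B + self.eta * B_new

    def response(self, features):
        """y = IDFT( sum_l conj(A^l) Z^l / (B + lambda) )."""
        Z = np.fft.fft2(features, axes=(-2, -1))
        num = np.sum(np.conj(self.A) * Z, axis=0)
        return np.real(np.fft.ifft2(num / (self.B + self.lam)))


M = N = 32
rows, cols = np.mgrid[0:M, 0:N]
label = np.exp(-((rows - M // 2) ** 2 + (cols - N // 2) ** 2) / 8.0)
feats = np.random.default_rng(1).standard_normal((3, M, N))  # d = 3 toy channels
tracker = MultiChannelFilter(label)
tracker.update(feats)
y = tracker.response(np.roll(feats, shift=(-4, 5), axis=(1, 2)))
peak = np.unravel_index(np.argmax(y), y.shape)  # (12, 21)
```

Keeping $A^l$ and $B$ separate is what makes the running average cheap: each frame only adds one weighted term to each, instead of re-solving over all past samples.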

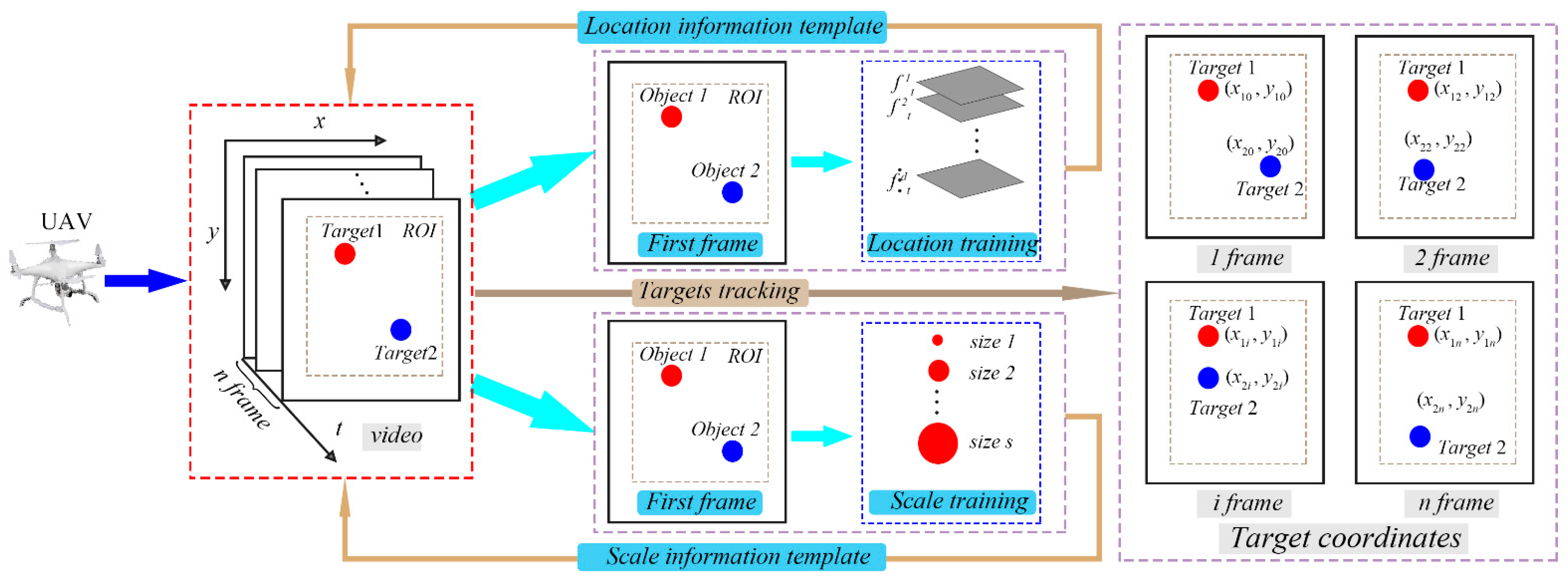

The traditional DSST algorithm performs position tracking and scale estimation by constructing a position filter and a scale filter, and it can only track targets with strong features. In this paper, machine learning is used to train the position filter and the scale filter so as to enhance the target image features, and a target-free DSST visual tracking algorithm is proposed. The tracking steps of the target-free DSST algorithm are shown in Figure 12.

As shown in the figure, the tracking steps are as follows:

Step 1: Create a region of interest (ROI). To reduce computation and exclude unnecessary information, an ROI is established in the image.

Step 2: Perform scale training. After the target is selected, a correlation operation over scale changes is performed, and the scale information is obtained by training on $S$ scales of the selected target sample.

Step 3: Perform position training simultaneously with scale training. The selected image is used as the sample for a $d$-layer feature pyramid; each feature sample is trained to obtain the maximum response output, from which the new target center, and hence the position information, is determined.

Step 4: Update the model. The parameters obtained from scale and position training update the position filter and scale-space filter of the original model in preparation for the next tracking step.

Step 5: Track the target. Tracking is performed on each frame with the updated model parameters. In each frame, the candidate with the largest correlation response gives the new target position and scale, and the coordinates are output through the tracking box.

In this paper, the selected target is used to train the scale and position filters, and the target features strengthened by this training enable the edges and corners of the structure to be tracked, so that even target-free monitoring can be achieved.
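The scale bookkeeping behind Steps 2 and 4 can be sketched as follows. In DSST, patches are sampled around the target at sizes scaled by $a^n$ for $S$ integer exponents $n$ centered on zero; the defaults $S=33$ and $a=1.02$ below are values commonly quoted for DSST and are treated here as assumptions. The 1-D scale-filter response is replaced by a placeholder list, and all function names are hypothetical.

```python
def scale_factors(num_scales=33, step=1.02):
    """Scale factors a**n for S integer exponents n centered on n = 0."""
    half = (num_scales - 1) // 2
    return [step ** n for n in range(-half, num_scales - half)]


def sample_sizes(target_size, factors):
    """Width and height of the patch sampled around the target at each scale."""
    w, h = target_size
    return [(w * a, h * a) for a in factors]


def update_target_size(target_size, factors, scale_response):
    """Rescale the target box by the factor with the largest scale-filter response."""
    best = max(range(len(factors)), key=lambda i: scale_response[i])
    w, h = target_size
    return w * factors[best], h * factors[best]


factors = scale_factors()                  # 33 factors, factors[16] == 1.0
patches = sample_sizes((100.0, 50.0), factors)
response = [0.0] * len(factors)            # placeholder for the 1-D scale response
response[18] = 1.0                         # pretend the peak is at n = +2
new_size = update_target_size((100.0, 50.0), factors, response)
# new_size is (100 * 1.02**2, 50 * 1.02**2), about (104.04, 52.02)
```

For a UAV approaching or receding from the blade, this scale update is what keeps the tracking box matched to the apparent size of the tracked corner or edge region.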

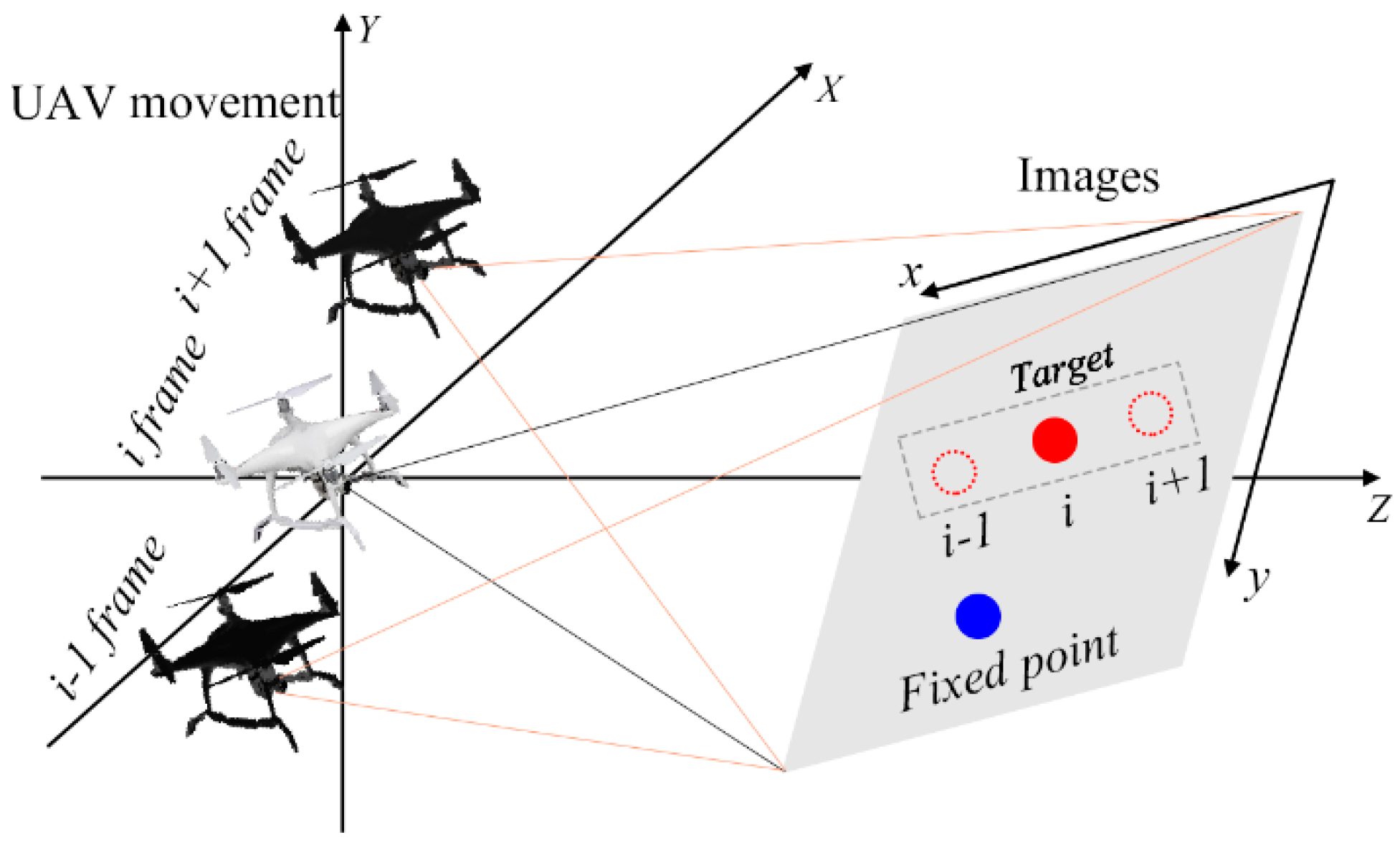

3.3. Relative Displacement Calculation

Because the UAV itself introduces displacement drift when hovering, relative coordinates and displacements are obtained by target tracking. For each frame $i$ of the time series, the relative displacement $(\Delta x_i,\Delta y_i)$ of the structure (unit: pixel) is calculated from the target coordinates $(x_i,y_i)$ in that frame and the target coordinates $(x_1,y_1)$ in the first frame:

$$
\Delta x_i=x_i-x_1,\qquad \Delta y_i=y_i-y_1
$$
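A minimal sketch of the relative-displacement step and its conversion to physical units follows. The tracker output and scale factors are hypothetical, and the optional per-frame factor list is only a stand-in for the adaptive scale factor discussed for UAV monitoring, not its actual computation.

```python
def relative_displacement(track):
    """Pixel displacement of each frame relative to the first frame.

    track: list of (x, y) target centers in pixels, track[0] = first frame.
    """
    x1, y1 = track[0]
    return [(x - x1, y - y1) for x, y in track]


def to_physical(disp_px, scale):
    """Convert pixel displacements to mm.

    scale: a single mm-per-pixel factor, or one factor per frame (for example
    an adaptive factor that follows the camera-to-structure distance).
    """
    if isinstance(scale, (int, float)):
        scale = [scale] * len(disp_px)
    return [(dx * s, dy * s) for (dx, dy), s in zip(disp_px, scale)]


track = [(100, 200), (102, 199), (97, 203)]  # hypothetical tracker output
d_px = relative_displacement(track)          # [(0, 0), (2, -1), (-3, 3)]
d_mm = to_physical(d_px, 0.5)                # [(0.0, 0.0), (1.0, -0.5), (-1.5, 1.5)]
```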

3.4. System Identification

Compared with a fixed camera, a UAV shooting structural vibration videos offers more than a favorable monitoring position and freedom from the image distortion that the atmosphere causes in long-range shooting. Its flexibility also allows it to approach the structure closely, maximizing the image resolution and the vibration amplitude in the image and thus achieving more accurate structural health monitoring. After the UAV displacement drift is compensated, the absolute displacement of the structure is calculated by Equation (4), and displacement time histories of multiple monitoring points are obtained. Finally, the global mode shapes of the structure are calculated from these responses.
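The system-identification method is not detailed here, so the following is only one simple possibility, frequency-domain peak picking, sketched with NumPy under stated assumptions: the dominant frequency is taken from the summed amplitude spectra of all monitoring points, and the mode shape from the real part of each point’s spectral value at that frequency relative to the first point. The signals are synthetic and the function name is hypothetical.

```python
import numpy as np


def peak_picking(signals, fs):
    """Dominant frequency and normalized mode shape from displacement histories.

    signals: array (n_points, n_samples), one row per monitoring point.
    fs: sampling rate in Hz.
    """
    X = np.asarray(signals, dtype=float)
    X = X - X.mean(axis=1, keepdims=True)                       # remove static offsets
    spectra = np.fft.rfft(X, axis=1)
    freqs = np.fft.rfftfreq(X.shape[1], d=1.0 / fs)
    k = 1 + int(np.argmax(np.abs(spectra[:, 1:]).sum(axis=0)))  # skip the DC bin
    ratios = spectra[:, k] / spectra[0, k]                      # complex ratio to point 0
    shape = ratios.real                                         # sign: in/out of phase
    return freqs[k], shape / np.max(np.abs(shape))


fs = 50.0                                   # 50 fps video
t = np.arange(1000) / fs                    # 20 s record
amps = np.array([0.2, 0.6, 1.0])            # hypothetical root-to-tip amplitudes
signals = amps[:, None] * np.sin(2 * np.pi * 2.5 * t)
f1, phi = peak_picking(signals, fs)         # 2.5 Hz, [0.2, 0.6, 1.0]
```

Peak picking assumes well-separated modes and light damping; more robust identification methods exist, and this sketch does not claim to be the one used in this paper.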

4. Experimental Verification Based on Target-Free DSST Vision Algorithm

Many vision algorithms are currently available for vision-based dynamic characteristic testing, but most require special conditions on the structure. To obtain fast, convenient and low-cost results, this paper tests the optical flow method [30] and the color matching method [29], both commonly used by researchers for visual health monitoring, alongside the target-free DSST algorithm.

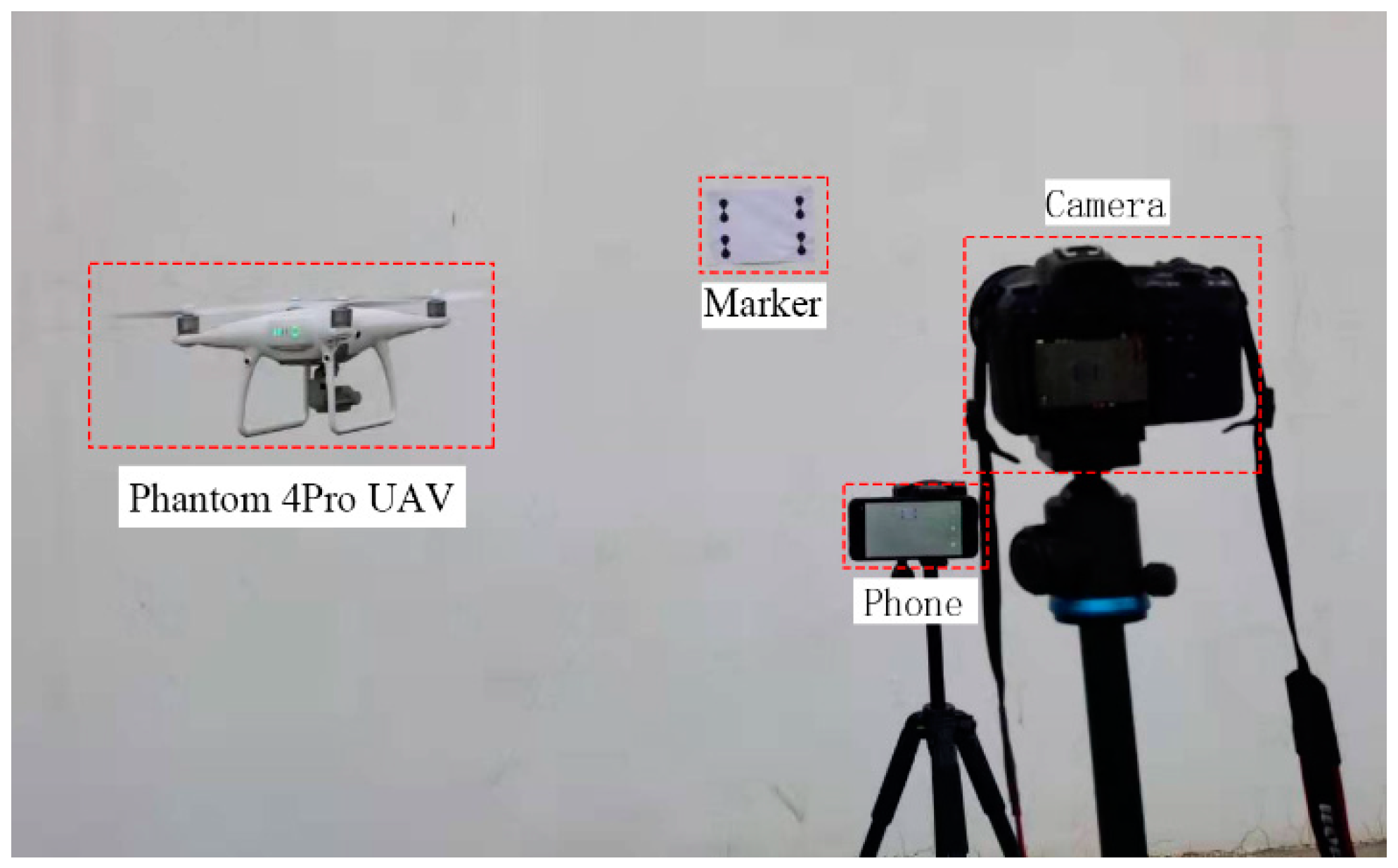

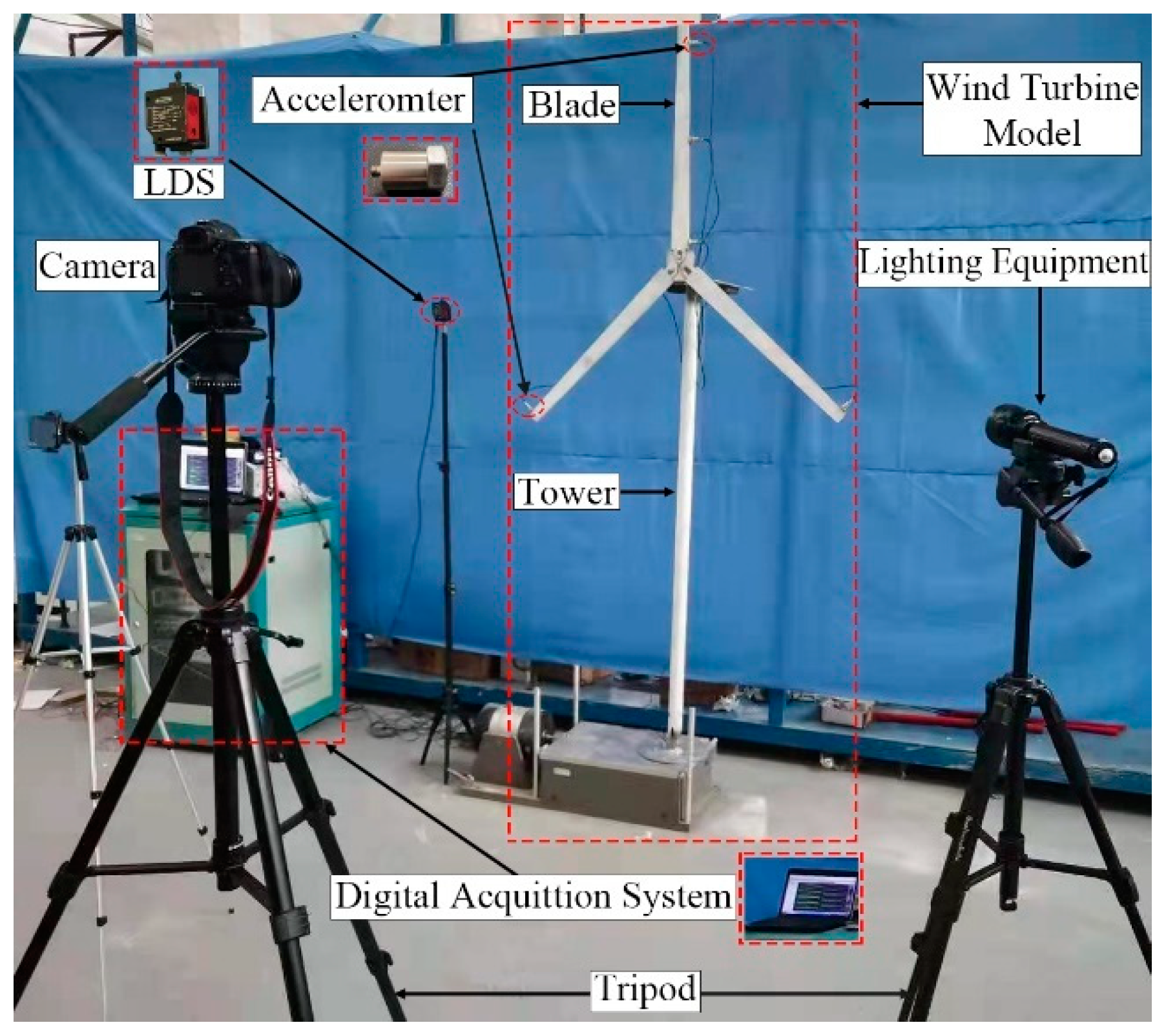

4.1. Test Equipment

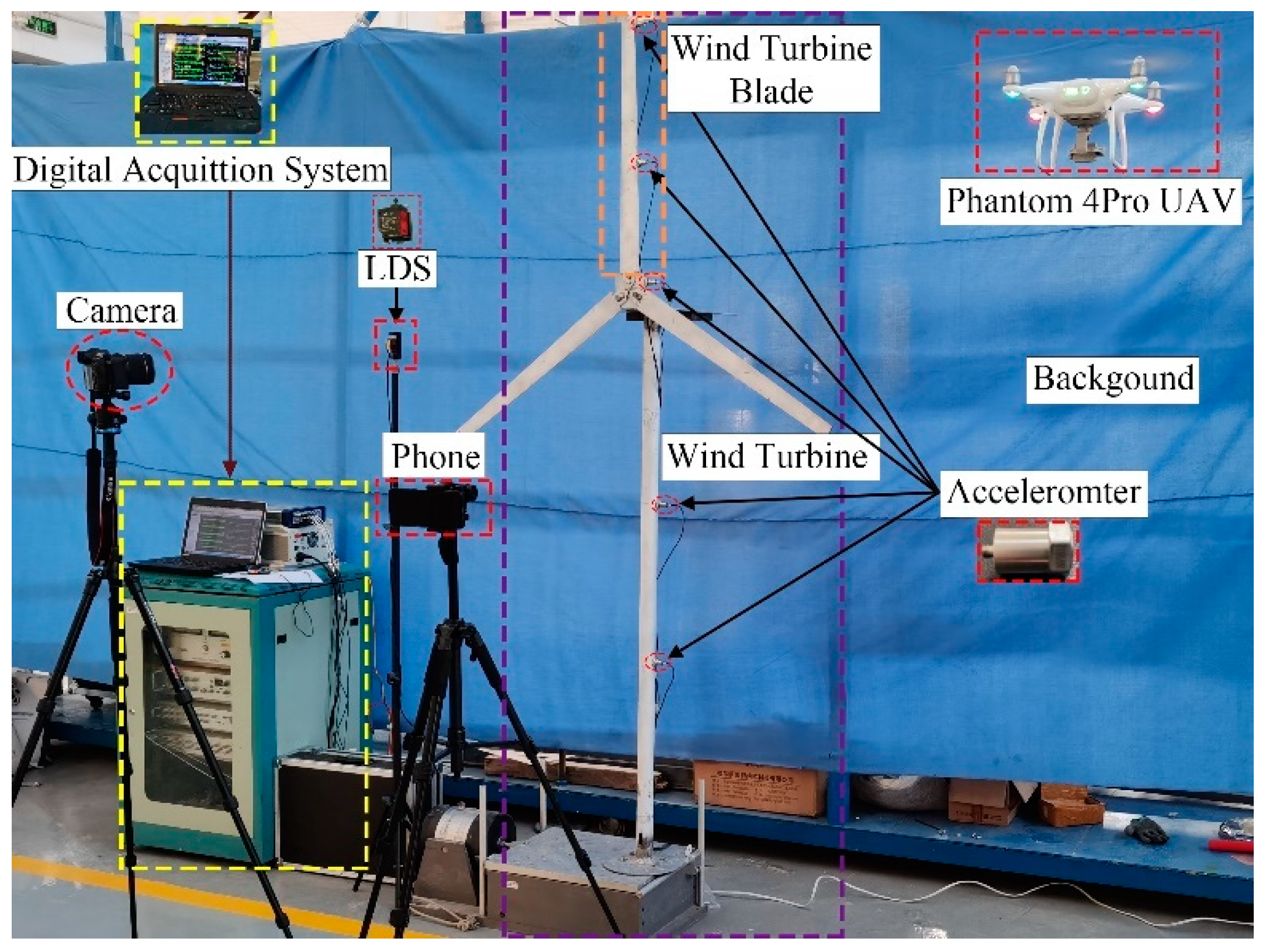

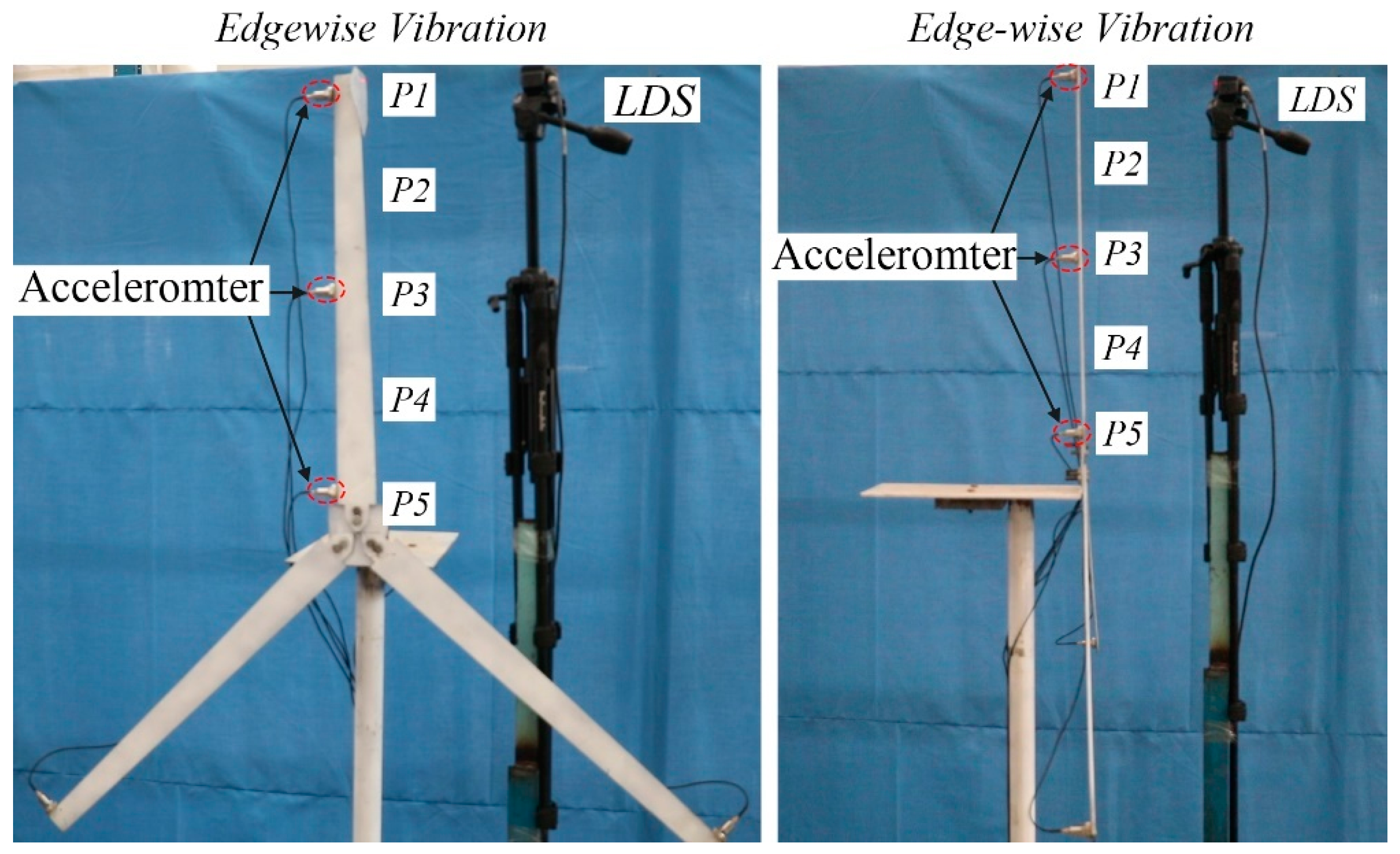

To quantify the accuracy of the algorithms, the experiment uses a Canon R6 camera with a 24–105 mm zoom lens (video resolution 720P/1080P/4K, frame rate 50 fps), a scaled wind turbine model simulating the shutdown state, a light source, a tripod, etc. To verify the accuracy of the vision-based displacement measurement, a Banner250U laser displacement sensor (LDS) with a sampling frequency of 50 Hz is used as the reference. The test equipment is shown in Figure 13.

4.2. Algorithm Testing under Simulated Engineering Conditions

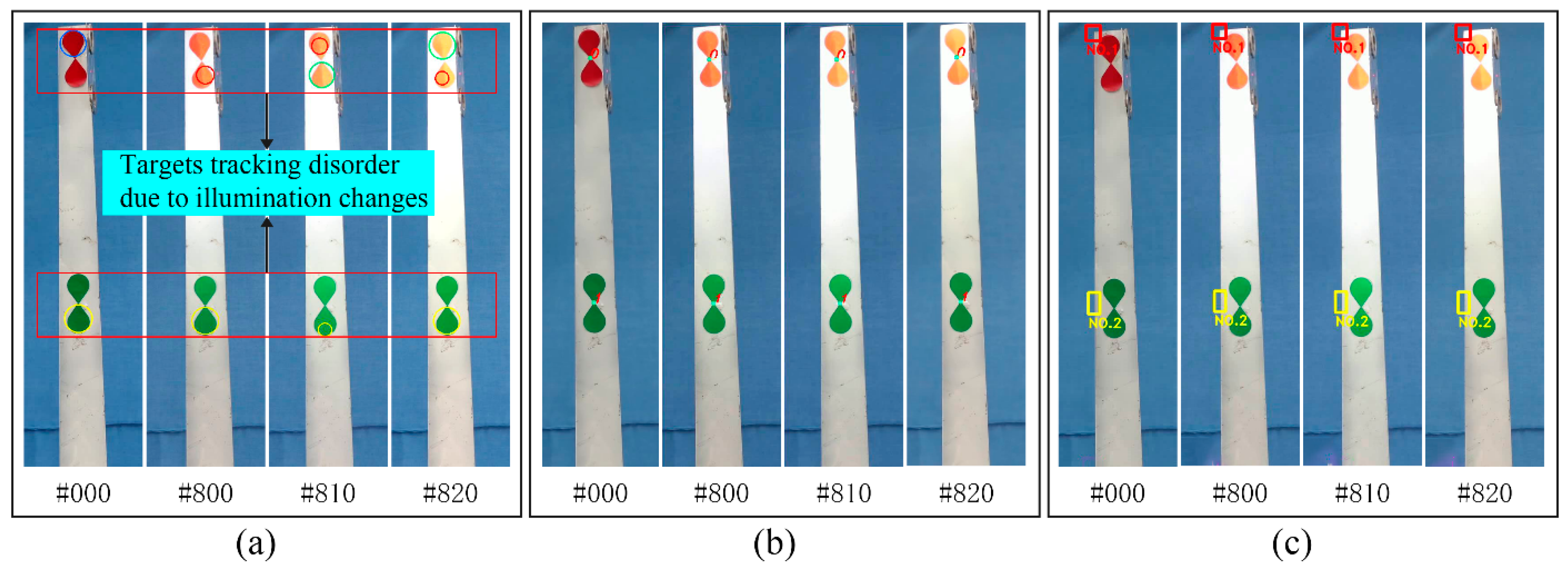

Existing tracking algorithms are usually tested under ideal conditions. Because of the harsh wind field environment and the height of the blades, artificial markers are difficult to install, so conventional tracking algorithms cannot track well. To verify the practicability of the target-free DSST algorithm in an actual wind farm environment, this experiment performs visual monitoring under three simulated conditions: an ideal condition, illumination change and a complex background. The optical flow method and the color matching method have the obvious limitation of requiring artificial markers as features during monitoring, whereas the target-free DSST algorithm uses the inherent corners or edges of the blades for tracking.

The camera monitors the model under the three simulated conditions for 90 s: a blue cloth serves as the background for the ideal condition; lighting equipment irradiates the blades from weak to strong during 16–17 s for the illumination-change condition; and the laboratory interior serves as the engineering background for identifying dynamic characteristics under the complex-background condition. Under ideal conditions, all three algorithms track well because the background is simple and free of external interference; those results are presented in the next section and are not repeated here. The algorithm performance test therefore focuses on illumination changes and complex backgrounds. The performance of the algorithms in the different simulated wind field environments is shown in Figure 14, Figure 15 and Figure 16.

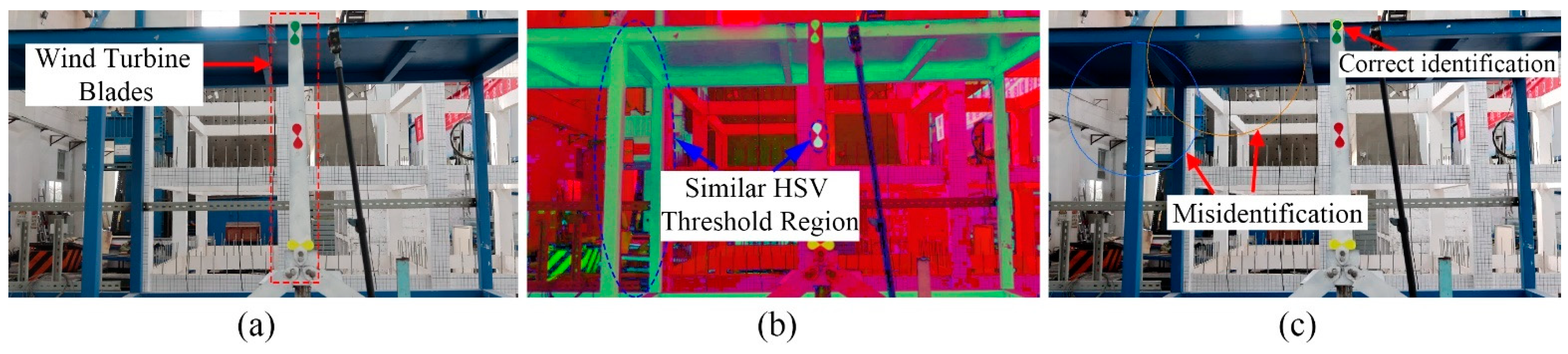

Figure 14 shows the performance of the three algorithms during tracking under illumination change. When the illumination changes, the colors of the monitoring points fall outside the predetermined threshold range, and the color matching algorithm cannot capture the target points.

Figure 14a shows the tracking results of the color matching algorithm at different frames. The color matching algorithm loses the monitoring target whenever the illumination changes, for example at the 800th, 810th and 820th frames, showing that it is particularly sensitive to illumination changes. The optical flow method and the target-free DSST algorithm are insensitive to illumination during tracking, so both accurately capture the target point.
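Why a fixed color threshold breaks under changing light can be shown with Python’s standard `colorsys` module. This is a toy illustration under stated assumptions: the marker color and HSV bounds are hypothetical, and illumination change is crudely modelled as scaling the RGB values, which mainly moves the V (value) channel out of its fixed range.

```python
import colorsys


def in_hsv_range(rgb, lower, upper):
    """True if an RGB color (components in 0..1) lies inside fixed HSV bounds."""
    hsv = colorsys.rgb_to_hsv(*rgb)
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))


# Hypothetical dark-red marker and thresholds tuned under normal lighting.
lower, upper = (0.0, 0.5, 0.3), (0.05, 1.0, 0.7)
marker = (0.6, 0.1, 0.1)                             # HSV about (0.0, 0.83, 0.6)
brighter = tuple(min(1.0, 1.6 * c) for c in marker)  # lamp switched on
darker = tuple(0.4 * c for c in marker)              # light reduced

print(in_hsv_range(marker, lower, upper))    # True
print(in_hsv_range(brighter, lower, upper))  # False: V = 0.96 > 0.7
print(in_hsv_range(darker, lower, upper))    # False: V = 0.24 < 0.3
```

Hue and saturation are unchanged in this toy model; the marker is lost purely because its brightness leaves the threshold window, matching the failure mode described for Figure 14a.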

Figure 15 and Figure 16 show the monitoring performance of the color matching algorithm and the optical flow method, respectively, with a complex background. Figure 15 shows that the color matching algorithm makes serious recognition errors when transforming the RGB color space into HSV space under the complex background condition. It can track only when the color difference between the monitoring point and the background is large, which is not applicable in practical engineering. Figure 16 shows that, with a complex background, most of the corners identified in the selected ROI are background points; 100 corners are set for matching in the figure. According to the matching results in Figure 16b, few strong Harris corners fall on the blades, which leads to inaccurate identification of the monitoring points, some of which are even identified as useless points. This results in heavy computation and excessive data redundancy in memory, so the method is not suitable for actual monitoring.
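The Harris measure behind this corner selection can be sketched in NumPy as $R=\det(M)-k\,\mathrm{tr}(M)^2$ of the local structure tensor $M$. Simplifications, all assumptions: a 3×3 box window replaces the usual Gaussian weighting, $k=0.04$ is a commonly used value, and the strongest pixels are taken without non-maximum suppression. Because selection simply ranks pixels by $R$, a textured background easily outranks a smooth blade; `fraction_on_mask` (a hypothetical helper) measures what share of the selected corners actually lands on the blade.

```python
import numpy as np


def box_sum(a, r=1):
    """Sum over a (2r+1) x (2r+1) window (edge padding); stands in for Gaussian smoothing."""
    p = np.pad(a, r, mode="edge")
    out = np.zeros(a.shape, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out


def harris_response(img, k=0.04):
    """Harris corner measure R = det(M) - k * trace(M)^2 of the structure tensor M."""
    Iy, Ix = np.gradient(img.astype(float))  # np.gradient returns d/drow, d/dcol
    Sxx, Syy, Sxy = box_sum(Ix * Ix), box_sum(Iy * Iy), box_sum(Ix * Iy)
    return Sxx * Syy - Sxy ** 2 - k * (Sxx + Syy) ** 2


def top_corners(R, n):
    """(row, col) of the n largest responses (no non-maximum suppression)."""
    idx = np.argsort(R, axis=None)[::-1][:n]
    return np.column_stack(np.unravel_index(idx, R.shape))


def fraction_on_mask(corners, mask):
    """Share of selected corners that fall on the structure (mask == True)."""
    return float(np.mean([mask[r, c] for r, c in corners]))


# Toy frame: a bright rectangular "blade" region on a dark, featureless background.
img = np.zeros((40, 40))
img[10:30, 25:35] = 1.0
R = harris_response(img)
corners = {(int(r), int(c)) for r, c in top_corners(R, 4)}
# corners == {(10, 25), (10, 34), (29, 25), (29, 34)}
```

On this clean frame the four strongest responses are exactly the rectangle’s corners; adding background texture would push many of the top-ranked pixels off the blade, lowering `fraction_on_mask`.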

The target-free DSST algorithm performs well in the different simulated monitoring environments owing to its machine-learning-based scale and position training, and it does not require the artificial markers seen in Figure 14.

4.3. Analysis of Monitoring Results

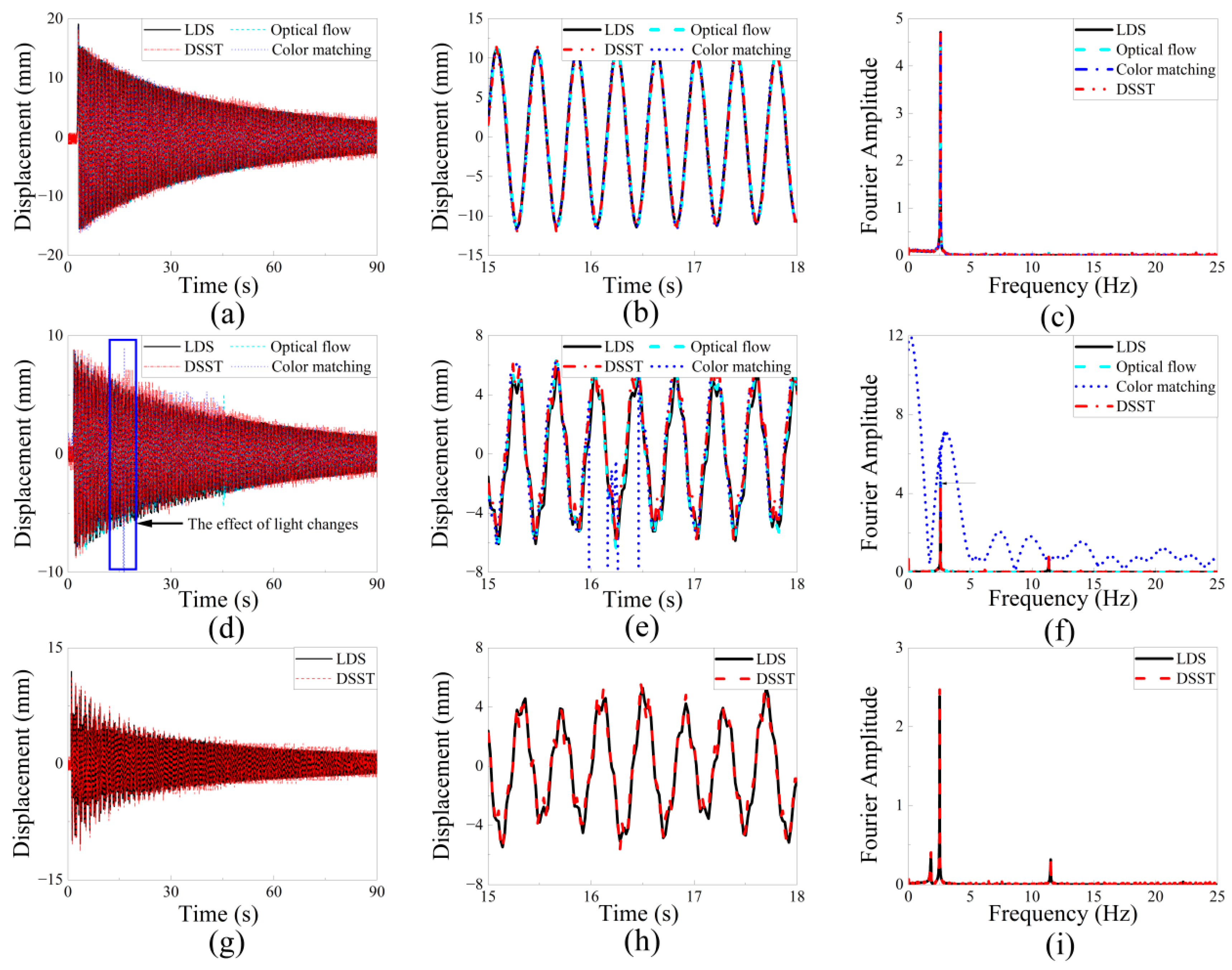

The wind turbine model vibrates freely after excitation, and the three algorithms are used to track it. The displacement time histories and frequency-domain information obtained by the three algorithms under the different conditions are compared with those of the LDS in Figure 17. Different devices introduce time offsets in monitoring; in this paper, the time of the first peak in the time domain is matched across records to remove the phase deviation of the displacement time histories caused by these offsets [28].
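First-peak alignment can be sketched as below. The exact peak criterion is not specified here, so the definition used (the first local maximum exceeding half of the record’s maximum) is a hypothetical choice. The sketch assumes both records share one sampling rate, which holds here since the camera runs at 50 fps and the LDS samples at 50 Hz, so a shift in samples equals a shift in time.

```python
def first_peak_index(x, rel_height=0.5):
    """Index of the first local maximum whose value exceeds rel_height * max(x)."""
    threshold = rel_height * max(x)
    for i in range(1, len(x) - 1):
        if x[i] >= threshold and x[i] >= x[i - 1] and x[i] > x[i + 1]:
            return i
    raise ValueError("no peak above the threshold")


def align_by_first_peak(vision, reference):
    """Drop leading samples so that the first peaks of both records coincide.

    Both records must share the same sampling rate. Returns the aligned,
    equal-length records and the lag (in samples) of vision behind reference.
    """
    lag = first_peak_index(vision) - first_peak_index(reference)
    if lag > 0:
        vision = vision[lag:]
    elif lag < 0:
        reference = reference[-lag:]
    n = min(len(vision), len(reference))
    return vision[:n], reference[:n], lag


lds = [0, 0, 1, 3, 1, 0, -1, 0, 2, 0]  # hypothetical LDS record
cam = [0, 0, 0] + lds                  # same motion, camera started 3 samples earlier
v, r, lag = align_by_first_peak(cam, lds)
# lag == 3 (0.06 s at 50 Hz) and v == r
```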

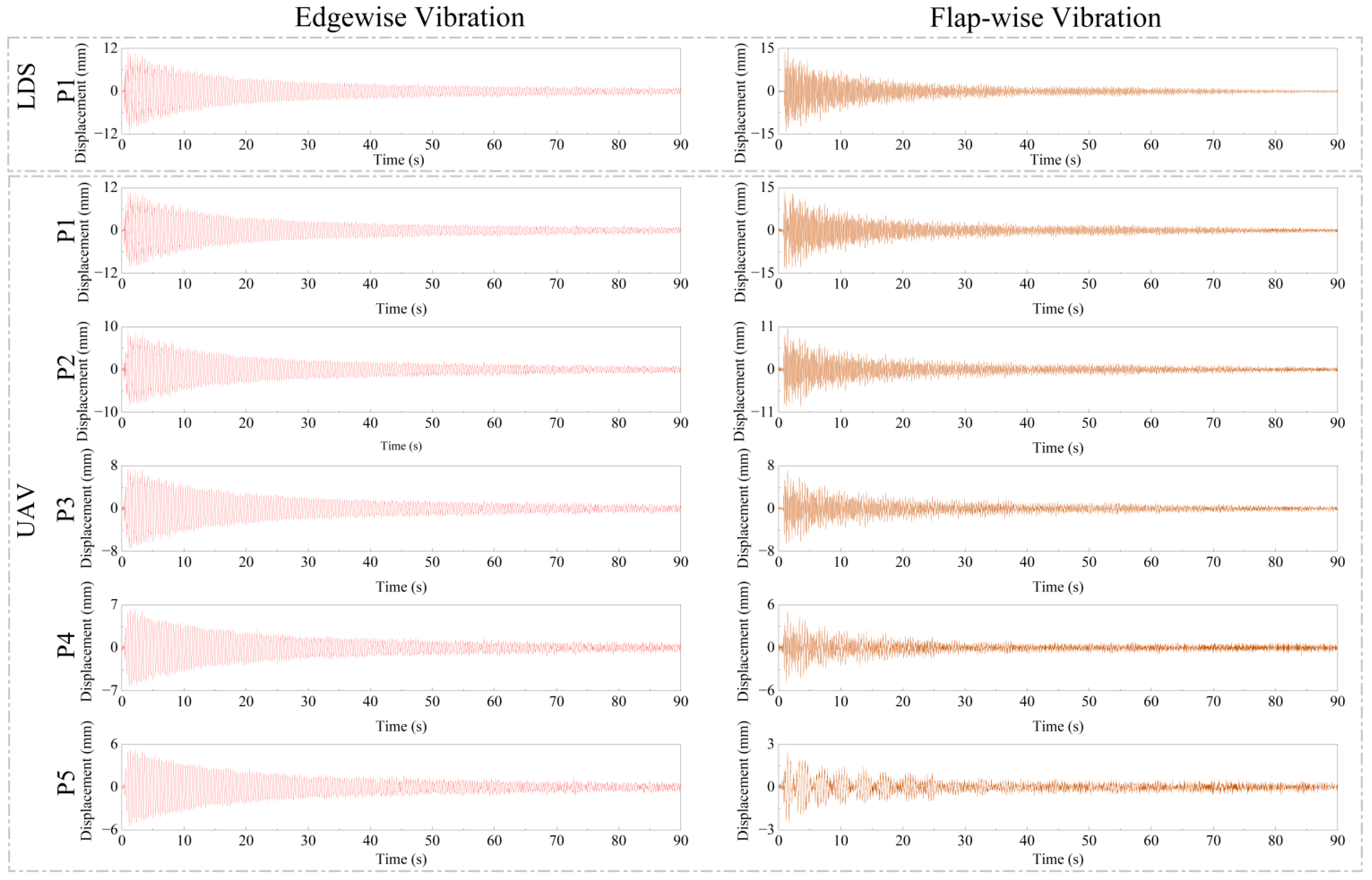

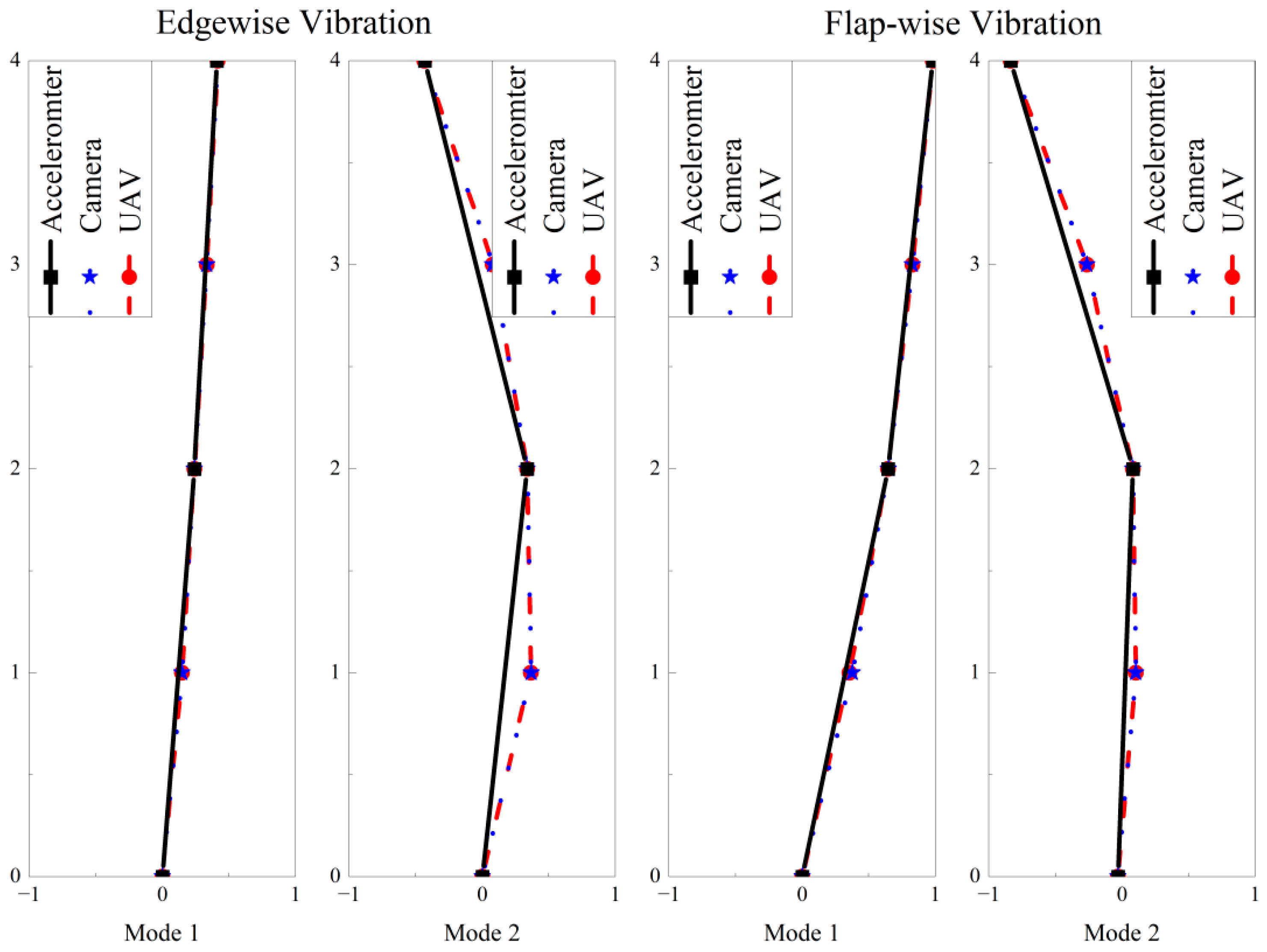

Figure 17 shows that all three algorithms perform well in both the time and frequency domains under ideal conditions, which means they can be used for health monitoring under such conditions. For the illumination-change condition, a light source irradiates the tip of the wind turbine blade, and excitation is applied to the tip to make it vibrate freely. During the 16–17 s period of artificial lighting shown in Figure 14, the displacement time history of the color matching algorithm under illumination change in Figure 17 is interrupted three times, and its frequency-domain result is disordered. The reasons the color matching algorithm and optical flow method are unsuitable under complex backgrounds are explained in Section 4.2. Therefore, only the target-free DSST algorithm can track the structure under complex background conditions, and its curve in Figure 17 remains robust.

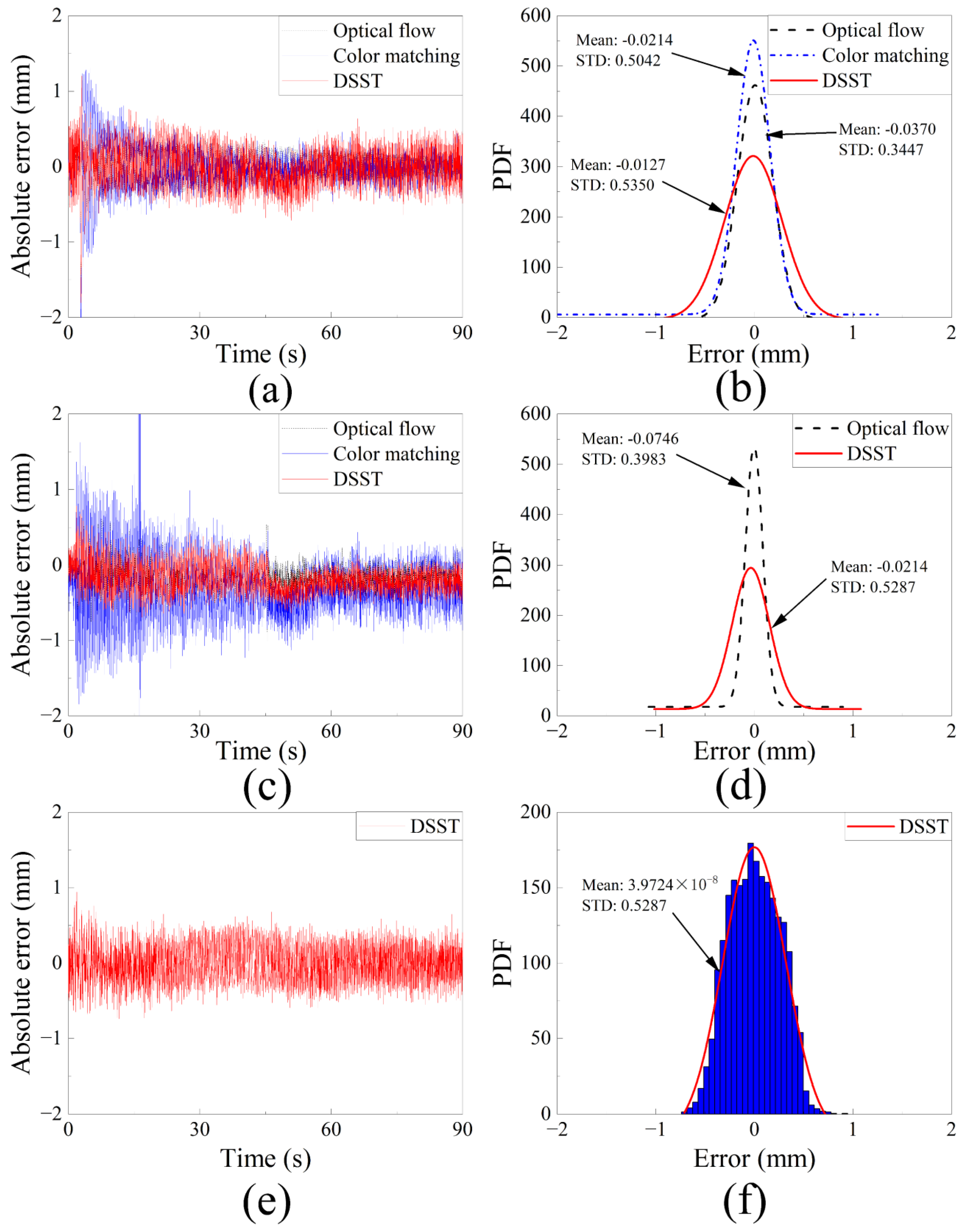

4.4. Monitoring Error Analysis

Taking the LDS data as the benchmark, the displacement data collected by the different algorithms are used for error analysis [32,33]. The absolute errors and error distributions of the three algorithms under different conditions are shown in Figure 18.

Figure 18 shows the absolute errors and the probability density function (PDF) values of the three algorithms under different conditions. The target-free DSST algorithm has smaller monitoring errors than the other two algorithms in all three simulated environments. Under varying lighting, the color matching algorithm has a large error because lighting changes disrupt its tracking. Under complex background conditions, neither the optical flow method nor the color matching algorithm can track successfully. In contrast, the target-free DSST algorithm keeps the error within 1 mm, which meets the monitoring needs.

To quantify the accuracy of the algorithms, this paper uses the root mean square error (RMSE), the correlation coefficient ($r$) and the coefficient of determination ($R^2$) for error analysis. The equations are:

$$
\mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i-y_i\right)^2}\tag{17}
$$

$$
r=\frac{\sum_{i=1}^{N}\left(x_i-\bar{x}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^{N}\left(x_i-\bar{x}\right)^2}\sqrt{\sum_{i=1}^{N}\left(y_i-\bar{y}\right)^2}}\tag{18}
$$

$$
R^2=1-\frac{\sum_{i=1}^{N}\left(y_i-x_i\right)^2}{\sum_{i=1}^{N}\left(y_i-\bar{y}\right)^2}\tag{19}
$$

RMSE is calculated using Equation (17), where $N$ is the total number of monitoring samples, and $x_i$ and $y_i$ are the displacement data from vision monitoring and the laser displacement sensor, respectively; it measures the deviation between the measured values and the reference values. $r$ is calculated using Equation (18), where $\bar{x}$ and $\bar{y}$ are the mean values of the two displacement records; $r$ lies between $-1$ and 1, $r=1$ represents complete positive correlation, and $r=0$ means that the two records are uncorrelated. $R^2$ is calculated using Equation (19) and measures how well the two records match; it is at most 1, and values close to 1 indicate that the two records are highly similar [32]. The comparison of vibration monitoring errors of the three algorithms under different conditions is shown in Table 1.
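Equations (17)–(19) translate directly into plain Python, sketched below with $x$ as the vision record and $y$ as the LDS reference, matching the definitions above. The sample records are hypothetical and do not come from Table 1; `max_abs_error` is an added helper for the absolute-error criterion.

```python
import math


def rmse(x, y):
    """Equation (17): root mean square error of vision record x against reference y."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


def pearson_r(x, y):
    """Equation (18): correlation coefficient r of the two records."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_squared(x, y):
    """Equation (19): coefficient of determination with y (the LDS record) as reference."""
    my = sum(y) / len(y)
    ss_res = sum((b - a) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return 1.0 - ss_res / ss_tot


def max_abs_error(x, y):
    """Largest absolute deviation between the two records."""
    return max(abs(a - b) for a, b in zip(x, y))


vision = [1.0, 2.0, 3.0, 4.0]  # hypothetical displacements (mm)
lds = [1.0, 2.0, 3.0, 5.0]
print(rmse(vision, lds), max_abs_error(vision, lds))  # 0.5 1.0
```

Note that $R^2$ computed this way penalizes amplitude errors that $r$ ignores: a vision record that is perfectly correlated with the LDS but scaled wrongly still has $r=1$ while $R^2$ drops below 1.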

Table 1 shows that under ideal conditions all three algorithms have high monitoring accuracy. Under illumination change, the optical flow method and the target-free DSST algorithm remain accurate, but the color matching algorithm has large errors and poor correlation, meaning that it cannot monitor under changing lighting conditions. Neither the optical flow method nor the color matching algorithm can monitor under complex background conditions, whereas the target-free DSST algorithm, owing to its robustness, achieves high accuracy and good correlation. Overall, the target-free DSST algorithm meets the monitoring requirements under all tested conditions, with accuracy that satisfies engineering requirements.

Vibration monitoring experiments with the optical flow method, the color matching algorithm and the target-free DSST algorithm were carried out in different simulated environments, and the robustness of the target-free DSST algorithm was verified. Compared with the other algorithms, the target-free DSST algorithm has the following advantages: (1) It is suitable for monitoring in actual engineering environments, including illumination changes and complex backgrounds. (2) It monitors accurately using the structure’s corner or edge features without additional artificial targets, which expands the application range of vision-based monitoring of large-scale structures, especially large wind turbines. (3) Its monitoring error meets the needs of structural health monitoring: under the different test conditions, the absolute error is within 1 mm, the root-mean-square error is below 0.5 and the correlation can exceed 0.97, which meets the requirements of engineering health monitoring. Given its good robustness, the target-free DSST algorithm was selected as the visual monitoring algorithm for the subsequent experiments.