Incident Angle Dependence of Sentinel-1 Texture Features for Sea Ice Classification

Abstract

1. Introduction

2. Data

2.1. Sentinel-1 Data

2.2. Training and Validation Data

3. Method

3.1. Domain-Dependent Texture Extraction and Calculation of Separability

3.2. Calculation of Texture Features

3.2.1. GLCM Texture Features

3.2.2. Simple Texture Features

4. Results

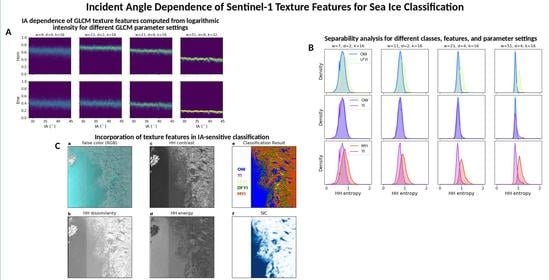

4.1. Texture and IA

4.2. Texture and Different Sea Surface States

4.3. Separability of Different Classes

4.4. Classification Result Examples

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CA | classification accuracy |
| dB | decibel |
| DFYI | deformed first-year ice |
| ESA | European Space Agency |
| EW | extra wide |
| GIA | Gaussian incident angle classifier |
| GLCM | grey level co-occurrence matrix |
| GRDM | ground range detected medium |
| IA | incident angle |
| JM | Jeffries–Matusita |
| LFYI | level first-year ice |
| MYI | multi-year ice |
| NFI | newly formed ice |
| OW | open water |
| PDF | probability density function |
| ROI | region of interest |
| S1 | Sentinel-1 |
| SAR | synthetic aperture radar |
| SIC | sea ice concentration |
| SNAP | Sentinel Application Platform |
| YI | young ice |

References

- Ramsay, B.; Manore, M.; Weir, L.; Wilson, K.; Bradley, D. Use of RADARSAT data in the Canadian Ice Service. Can. J. Remote Sens. 1998, 24, 36–42.

- Dierking, W. Mapping of Different Sea Ice Regimes Using Images from Sentinel-1 and ALOS Synthetic Aperture Radar. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1045–1058.

- Zakhvatkina, N.; Smirnov, V.; Bychkova, I. Satellite SAR Data-based Sea Ice Classification: An Overview. Geosciences 2019, 9, 152.

- Dierking, W. Sea Ice Monitoring by Synthetic Aperture Radar. Oceanography 2013, 26, 100–111.

- Moen, M.A.N.; Doulgeris, A.P.; Anfinsen, S.N.; Renner, A.H.H.; Hughes, N.; Gerland, S.; Eltoft, T. Comparison of feature based segmentation of full polarimetric SAR satellite sea ice images with manually drawn ice charts. Cryosphere 2013, 7, 1693–1705.

- Cheng, A.; Casati, B.; Tivy, A.; Zagon, T.; Lemieux, J.F.; Tremblay, L.B. Accuracy and inter-analyst agreement of visually estimated sea ice concentrations in Canadian Ice Service ice charts using single-polarization RADARSAT-2. Cryosphere 2020, 14, 1289–1310.

- Dierking, W. Sea Ice and Icebergs. In Maritime Surveillance with Synthetic Aperture Radar; Institution of Engineering and Technology: London, UK, 2020.

- Ulaby, F.T.; Moore, R.K.; Fung, A.K. Microwave Remote Sensing: Active and Passive. Volume 1-Microwave Remote Sensing Fundamentals and Radiometry; Addison-Wesley: Boston, MA, USA, 1981.

- Onstott, R.G.; Carsey, F.D. SAR and scatterometer signatures of sea ice. Microw. Remote Sens. Sea Ice 1992, 68, 73–104.

- Mäkynen, M.P.; Manninen, A.T.; Similä, M.H.; Karvonen, J.A.; Hallikainen, M.T. Incidence angle dependence of the statistical properties of C-band HH-polarization backscattering signatures of the Baltic Sea ice. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2593–2605.

- Gill, J.P.S.; Yackel, J.; Geldsetzer, T.; Fuller, M.C. Sensitivity of C-band synthetic aperture radar polarimetric parameters to snow thickness over landfast smooth first-year sea ice. Remote Sens. Environ. 2015, 166, 34–49.

- Mäkynen, M.; Karvonen, J. Incidence Angle Dependence of First-Year Sea Ice Backscattering Coefficient in Sentinel-1 SAR Imagery Over the Kara Sea. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6170–6181.

- Mahmud, M.S.; Geldsetzer, T.; Howell, S.E.L.; Yackel, J.J.; Nandan, V.; Scharien, R.K. Incidence Angle Dependence of HH-Polarized C- and L-Band Wintertime Backscatter Over Arctic Sea Ice. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6686–6698.

- Zakhvatkina, N.; Alexandrov, V.Y.; Johannessen, O.M.; Sandven, S.; Frolov, I.Y. Classification of Sea Ice Types in ENVISAT Synthetic Aperture Radar Images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2587–2600.

- Karvonen, J. A sea ice concentration estimation algorithm utilizing radiometer and SAR data. Cryosphere 2014, 8, 1639–1650.

- Liu, H.; Guo, H.; Zhang, L. SVM-Based Sea Ice Classification Using Textural Features and Concentration From RADARSAT-2 Dual-Pol ScanSAR Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1601–1613.

- Karvonen, J. Baltic Sea Ice Concentration Estimation Using SENTINEL-1 SAR and AMSR2 Microwave Radiometer Data. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2871–2883.

- Zakhvatkina, N.; Korosov, A.; Muckenhuber, S.; Sandven, S.; Babiker, M. Operational algorithm for ice–water classification on dual-polarized RADARSAT-2 images. Cryosphere 2017, 11, 33–46.

- Lohse, J.; Doulgeris, A.P.; Dierking, W. Mapping Sea Ice Types from Sentinel-1 Considering the Surface-Type Dependent Effect of Incidence Angle. Ann. Glaciol. 2020, 61, 1–14.

- Holmes, Q.A.; Nuesch, D.R.; Shuchman, R.A. Textural Analysis And Real-Time Classification of Sea-Ice Types Using Digital SAR Data. IEEE Trans. Geosci. Remote Sens. 1984, GE-22, 113–120.

- Barber, D.G.; LeDrew, E. SAR Sea Ice Discrimination Using Texture Statistics: A Multivariate Approach. Photogramm. Eng. Remote Sens. 1991, 57, 385–395.

- Shokr, M.E. Evaluation of second-order texture parameters for sea ice classification from radar images. J. Geophys. Res. Ocean. 1991, 96, 10625–10640. Available online: https://agupubs.onlinelibrary.wiley.com/doi/pdf/10.1029/91JC00693 (accessed on 1 November 2019).

- Soh, L.K.; Tsatsoulis, C. Texture Analysis of SAR Sea Ice Imagery Using Gray Level Co-Occurrence Matrices. IEEE Trans. Geosci. Remote Sens. 1999, 37, 780–795.

- Clausi, D.A. Comparison and fusion of co-occurrence, Gabor and MRF texture features for classification of SAR sea-ice imagery. Atmosphere-Ocean 2001, 39, 183–194.

- Clausi, D.A. An analysis of co-occurrence texture statistics as a function of grey level quantization. Can. J. Remote Sens. 2002, 28, 45–62.

- Deng, H.; Clausi, D.A. Unsupervised segmentation of synthetic aperture Radar sea ice imagery using a novel Markov random field model. IEEE Trans. Geosci. Remote Sens. 2005, 43, 528–538.

- Ressel, R.; Frost, A.; Lehner, S. A Neural Network-Based Classification for Sea Ice Types on X-Band SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3672–3680.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Clausi, D.A.; Deng, H. Operational segmentation and classification of SAR sea ice imagery. In Proceedings of the IEEE Workshop on Advances in Techniques for Analysis of Remotely Sensed Data, 2003, Greenbelt, MD, USA, 27–28 October 2003; pp. 268–275.

- Aulard-Macler, M. Sentinel-1 Product Definition S1-RS-MDA-52-7440; Technical Report; MacDonald, Dettwiler and Associates Ltd.: Westminster, CO, USA, 2011.

- Sen, R.; Goswami, S.; Chakraborty, B. Jeffries-Matusita distance as a tool for feature selection. In Proceedings of the 2019 International Conference on Data Science and Engineering (ICDSE), Patna, India, 26–28 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 15–20.

- Lohse, J.; Doulgeris, A.P.; Dierking, W. An Optimal Decision-Tree Design Strategy and Its Application to Sea Ice Classification from SAR Imagery. Remote Sens. 2019, 11, 1574.

- Isleifson, D.; Hwang, B.; Barber, D.; Scharien, R.; Shafai, L. C-band polarimetric backscattering signatures of newly formed sea ice during fall freeze-up. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3256–3267.

- Komarov, A.; Buehner, M. Detection of first-year and multi-year sea ice from dual-polarization SAR images under cold conditions. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9109–9123.

- Ivanova, N.; Johannessen, O.; Pedersen, L.; Tonboe, R. Retrieval of Arctic sea ice parameters by satellite passive microwave sensors: A comparison of eleven sea ice concentration algorithms. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7233–7246.

- Komarov, A.; Zabeline, V.; Barber, D. Ocean surface wind speed retrieval from C-band SAR images without wind direction input. IEEE Trans. Geosci. Remote Sens. 2013, 52, 980–990.

| Authors | dB | w | d | Direction | k | Features |
|---|---|---|---|---|---|---|
| Holmes et al. (1984) | ? | 5 | 2 | average | 8 | Con, Ent |
| Barber and LeDrew (1991) | ? | 25 | 1, 5, 10 | 0, 45, 90 | 16 | Con, Cor, Dis, Ent, Uni |
| Shokr (1991) | ? | 5, 7, 9 | 1, 2, 3 | average | 16, 32 | Con, Ent, Idm, Uni, Max |
| Soh and Tsatsoulis (1999) | ? | 64 | 1, 2, ..., 32 | average | 64 | Con, Cor, Ent, Idm, Uni, Aut |
| Leigh et al. (2014) | ? | 5, 11, 25, 51, 101 | 1, 5, 10, 20 | average | ? | ASM, Con, Cor, Dis, Ent, Hom, Inv, Mu, Std |
| Ressel et al. (2015) | no | 11, 31, 65 | 1 | average | 16, 32, 64 | Con, Dis, Ene, Ent, Hom |
| Karvonen (2017) | yes | 5 | 1 | average | 256 | Ent, Aut |
| Zakhvatkina et al. (2017) | yes | 32, 64, 128 | 4, 8, 16, 32, 64 | average | 16, 25, 32 | Ene, Ine, Clu, Ent, Cor, Hom |
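The dB column above appears to record whether each study computed the GLCM from dB-scaled backscatter, and k is the number of grey levels. Because a GLCM is built from integer grey levels, the backscatter has to be quantised first. The following is a minimal sketch of that step, assuming a simple linear mapping of a clipped dB range onto k levels; the function names and the clip limits `vmin`/`vmax` are hypothetical illustrations, not values or code from this paper.

```python
import numpy as np


def to_db(sigma0_linear: np.ndarray) -> np.ndarray:
    """Convert linear backscatter intensity to decibels: 10 * log10(sigma0)."""
    return 10.0 * np.log10(sigma0_linear)


def quantize(image: np.ndarray, k: int, vmin: float, vmax: float) -> np.ndarray:
    """Map values in [vmin, vmax] linearly onto integer grey levels 0..k-1.

    vmin and vmax are hypothetical user-chosen clip limits (an assumption,
    not taken from the paper). Values outside the range are clipped first.
    """
    clipped = np.clip(image, vmin, vmax)
    scaled = (clipped - vmin) / (vmax - vmin)        # now in [0, 1]
    levels = np.floor(scaled * k).astype(np.int64)   # 0..k, with k only at vmax
    return np.minimum(levels, k - 1)                 # fold vmax into the top bin
```

Quantising in dB rather than in linear intensity spreads the grey levels more evenly over the strongly skewed backscatter distribution; with a fixed `vmin`/`vmax`, the same grey level means the same backscatter in every scene.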

| w | d | k |
|---|---|---|
| 5 | 1/2 | 16/32/64 |
| 7 | 1/2/4 | 16/32/64 |
| 9 | 1/2/4/8 | 16/32/64 |
| 11 | 1/2/4/8 | 16/32/64 |
| 21 | 2/4/8 | 16/32/64 |
| 51 | 2/4/8 | 16/32/64 |
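The parameter table above lists window sizes w, pixel distances d and grey-level counts k. As a sketch of what one (w, d, k) combination produces, the code below builds a symmetric, normalised GLCM for a single quantised w × w window and derives the eight HH features named in the separability table (ASM, Con, Cor, Dis, Ene, Ent, Hom, Var) using common Haralick-style definitions. Averaging over the directions 0, 45, 90 and 135 degrees, natural-log entropy and GLCM variance are assumptions; energy = sqrt(ASM) and homogeneity = Σ P/(1 + (i − j)²) follow scikit-image's `graycoprops` conventions. None of this is confirmed as the paper's exact implementation, and the function names are hypothetical.

```python
import numpy as np


def glcm(q: np.ndarray, d: int, k: int) -> np.ndarray:
    """Symmetric, normalised GLCM of one quantised window q (levels 0..k-1).

    Averages over the directions 0, 45, 90 and 135 degrees at distance d
    (an assumption). Requires d to be smaller than the window size.
    """
    offsets = [(0, d), (-d, d), (-d, 0), (-d, -d)]  # (row, col) steps
    rows, cols = q.shape
    P = np.zeros((k, k), dtype=float)
    for dr, dc in offsets:
        # Keep only pixel pairs (r, c) -> (r + dr, c + dc) inside the window.
        r0, r1 = max(0, -dr), min(rows, rows - dr)
        c0, c1 = max(0, -dc), min(cols, cols - dc)
        a = q[r0:r1, c0:c1]
        b = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
        M = np.zeros((k, k), dtype=float)
        np.add.at(M, (a.ravel(), b.ravel()), 1.0)  # count co-occurrences
        M = M + M.T                                # symmetric: count both orders
        P += M / M.sum()                           # normalise per direction
    return P / len(offsets)


def glcm_features(P: np.ndarray) -> dict:
    """Haralick-style statistics of a normalised, symmetric GLCM P."""
    i, j = np.indices(P.shape)
    asm = float(np.sum(P ** 2))
    nz = P[P > 0]                                   # avoid log(0) in entropy
    mu = float(np.sum(i * P))                       # row mean = column mean (P symmetric)
    var = float(np.sum(P * (i - mu) ** 2))
    cov = float(np.sum(P * (i - mu) * (j - mu)))
    return {
        "ASM": asm,
        "Con": float(np.sum(P * (i - j) ** 2)),
        "Cor": cov / var if var > 0 else 1.0,       # constant window: report 1
        "Dis": float(np.sum(P * np.abs(i - j))),
        "Ene": float(np.sqrt(asm)),                 # energy as sqrt(ASM)
        "Ent": float(-np.sum(nz * np.log(nz))),     # natural log (assumption)
        "Hom": float(np.sum(P / (1.0 + (i - j) ** 2))),
        "Var": var,
    }
```

In use, one would quantise the scene, slide a w × w window over it (for example w = 11, d = 4, k = 32, one of the combinations in the table) and assign each window's feature vector to its centre pixel; that sliding loop is omitted here to keep the per-window logic visible.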

| Class pair | Set | HH ASM | HH Con | HH Cor | HH Dis | HH Ene | HH Ent | HH Hom | HH Var |
|---|---|---|---|---|---|---|---|---|---|
| OW vs. LFYI | Set 1 | 0.08 | 0.16 | 0.03 | 0.10 | 0.08 | 0.12 | 0.09 | 0.29 |
| | Set 2 | 0.20 | 0.29 | 0.11 | 0.22 | 0.21 | 0.29 | 0.19 | 0.49 |
| | Set 3 | 0.20 | 0.36 | 0.01 | 0.27 | 0.22 | 0.30 | 0.23 | 0.49 |
| | Set 4 | 0.22 | 0.39 | 0.01 | 0.30 | 0.24 | 0.30 | 0.22 | 0.49 |
| | Set 5 | 0.61 | 0.70 | 0.16 | 0.64 | 0.63 | 0.75 | 0.59 | 0.85 |
| | Set 6 | 0.66 | 0.73 | 0.14 | 0.68 | 0.68 | 0.77 | 0.61 | 0.85 |
| | Set 7 | 1.37 | 1.28 | 0.45 | 1.30 | 1.35 | 1.40 | 1.30 | 1.27 |
| | Set 8 | 1.44 | 1.29 | 0.42 | 1.33 | 1.41 | 1.43 | 1.35 | 1.27 |
| OW vs. MYI | Set 1 | 0.11 | 0.21 | 0.09 | 0.13 | 0.12 | 0.18 | 0.11 | 0.48 |
| | Set 2 | 0.28 | 0.36 | 0.26 | 0.27 | 0.31 | 0.41 | 0.24 | 0.75 |
| | Set 3 | 0.30 | 0.58 | 0.04 | 0.43 | 0.33 | 0.43 | 0.36 | 0.75 |
| | Set 4 | 0.33 | 0.61 | 0.03 | 0.48 | 0.36 | 0.44 | 0.35 | 0.75 |
| | Set 5 | 0.79 | 0.91 | 0.29 | 0.84 | 0.81 | 0.90 | 0.81 | 1.06 |
| | Set 6 | 0.86 | 0.94 | 0.26 | 0.89 | 0.87 | 0.93 | 0.84 | 1.06 |
| | Set 7 | 1.46 | 1.42 | 0.59 | 1.49 | 1.41 | 1.43 | 1.53 | 1.30 |
| | Set 8 | 1.56 | 1.43 | 0.54 | 1.51 | 1.49 | 1.45 | 1.59 | 1.30 |
| OW vs. YI | Set 1 | 0.00 | 0.01 | 0.00 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 |
| | Set 2 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 |
| | Set 3 | 0.01 | 0.01 | 0.00 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 |
| | Set 4 | 0.01 | 0.01 | 0.00 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 |
| | Set 5 | 0.03 | 0.02 | 0.05 | 0.02 | 0.03 | 0.04 | 0.02 | 0.12 |
| | Set 6 | 0.03 | 0.02 | 0.05 | 0.02 | 0.03 | 0.04 | 0.02 | 0.12 |
| | Set 7 | 0.16 | 0.18 | 0.54 | 0.11 | 0.20 | 0.36 | 0.08 | 0.95 |
| | Set 8 | 0.18 | 0.19 | 0.51 | 0.12 | 0.22 | 0.34 | 0.07 | 0.95 |
| LFYI vs. MYI | Set 1 | 0.00 | 0.01 | 0.01 | 0.00 | 0.00 | 0.01 | 0.00 | 0.07 |
| | Set 2 | 0.01 | 0.01 | 0.04 | 0.01 | 0.02 | 0.03 | 0.01 | 0.15 |
| | Set 3 | 0.01 | 0.08 | 0.01 | 0.05 | 0.02 | 0.03 | 0.03 | 0.15 |
| | Set 4 | 0.91 | 0.09 | 0.01 | 0.05 | 0.02 | 0.03 | 0.03 | 0.15 |
| | Set 5 | 0.04 | 0.13 | 0.04 | 0.09 | 0.05 | 0.08 | 0.07 | 0.27 |
| | Set 6 | 0.04 | 0.14 | 0.04 | 0.10 | 0.05 | 0.08 | 0.06 | 0.27 |
| | Set 7 | 0.08 | 0.21 | 0.12 | 0.18 | 0.10 | 0.16 | 0.16 | 0.38 |
| | Set 8 | 0.08 | 0.21 | 0.10 | 0.18 | 0.09 | 0.15 | 0.15 | 0.38 |
| MYI vs. YI | Set 1 | 0.15 | 0.27 | 0.08 | 0.18 | 0.15 | 0.22 | 0.15 | 0.51 |
| | Set 2 | 0.34 | 0.44 | 0.20 | 0.36 | 0.36 | 0.45 | 0.33 | 0.68 |
| | Set 3 | 0.35 | 0.58 | 0.02 | 0.46 | 0.37 | 0.45 | 0.40 | 0.68 |
| | Set 4 | 0.39 | 0.61 | 0.02 | 0.50 | 0.40 | 0.47 | 0.40 | 0.68 |
| | Set 5 | 0.76 | 0.88 | 0.12 | 0.86 | 0.75 | 0.80 | 0.85 | 0.81 |
| | Set 6 | 0.84 | 0.90 | 0.09 | 0.90 | 0.82 | 0.84 | 0.90 | 0.81 |
| | Set 7 | 0.92 | 1.16 | 0.03 | 1.32 | 0.86 | 0.78 | 1.40 | 0.23 |
| | Set 8 | 1.00 | 1.17 | 0.03 | 1.34 | 0.94 | 0.83 | 1.50 | 0.23 |
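The table above reports Jeffries–Matusita (JM) distances between class pairs for each HH texture feature. Below is a minimal sketch of how a JM distance between two classes can be computed for a single feature, assuming each class is Gaussian in that feature so that the Bhattacharyya distance B has a closed form and JM = 2(1 − e^(−B)). Whether the paper used exactly this univariate Gaussian form is an assumption, and the function names are hypothetical.

```python
import numpy as np


def bhattacharyya_1d(m1: float, v1: float, m2: float, v2: float) -> float:
    """Bhattacharyya distance between N(m1, v1) and N(m2, v2), v = variance."""
    v = v1 + v2
    return 0.25 * (m1 - m2) ** 2 / v + 0.5 * np.log(v / (2.0 * np.sqrt(v1 * v2)))


def jm_distance(x1: np.ndarray, x2: np.ndarray) -> float:
    """Jeffries-Matusita distance in [0, 2] between two classes' samples of
    one texture feature, assuming each class is Gaussian in that feature."""
    b = bhattacharyya_1d(np.mean(x1), np.var(x1, ddof=1),
                         np.mean(x2), np.var(x2, ddof=1))
    return float(2.0 * (1.0 - np.exp(-b)))
```

Because 2(1 − e^(−B)) saturates at 2 as B grows, JM stays bounded where B does not, which makes values comparable across features. Entries above √2 ≈ 1.41 in the table (for example 1.59) suggest this 0 to 2 form rather than the square-root variant, which is bounded by √2.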

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lohse, J.; Doulgeris, A.P.; Dierking, W. Incident Angle Dependence of Sentinel-1 Texture Features for Sea Ice Classification. Remote Sens. 2021, 13, 552. https://doi.org/10.3390/rs13040552

Lohse J, Doulgeris AP, Dierking W. Incident Angle Dependence of Sentinel-1 Texture Features for Sea Ice Classification. Remote Sensing. 2021; 13(4):552. https://doi.org/10.3390/rs13040552

Chicago/Turabian Style: Lohse, Johannes, Anthony P. Doulgeris, and Wolfgang Dierking. 2021. "Incident Angle Dependence of Sentinel-1 Texture Features for Sea Ice Classification" Remote Sensing 13, no. 4: 552. https://doi.org/10.3390/rs13040552

APA Style: Lohse, J., Doulgeris, A. P., & Dierking, W. (2021). Incident Angle Dependence of Sentinel-1 Texture Features for Sea Ice Classification. Remote Sensing, 13(4), 552. https://doi.org/10.3390/rs13040552